
Please note this is a comparison between Version 2 by Dean Liu and Version 1 by Huan Zhang.

Deep learning-based image quality enhancement models have been proposed to improve the perceptual quality of distorted synthesized views impaired by compression and the Depth Image-Based Rendering (DIBR) process in a multi-view video system. Because Multi-view Video plus Depth (MVD) data are scarce, a deep learning-based model using additional synthetic Synthesized View Images (SVIs) is proposed, in which a random irregular polygon-based SVI synthesis method simulates the DIBR distortion based on the large amount of existing RGB/RGBD data. In addition, a DIBR distortion mask prediction network is embedded to further enhance the performance.

- quality enhancement

- synthetic images

- data augmentation

- 3D video system

- DIBR

1. DIBR Distortion Simulation

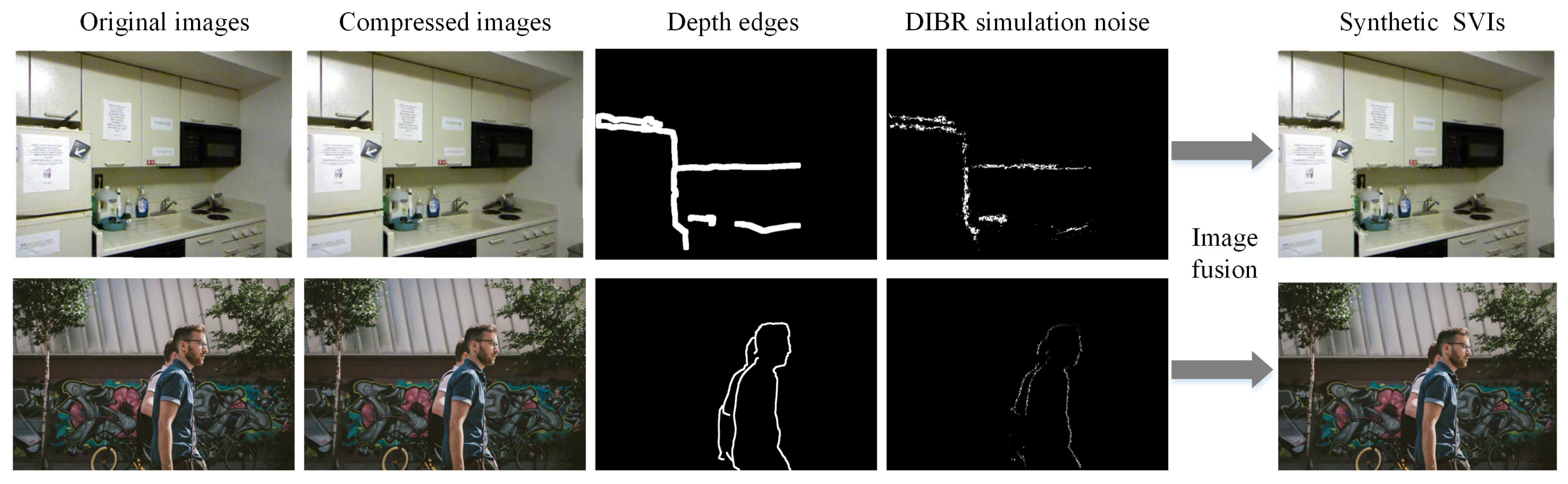

Figure 1 shows the pipeline of DIBR distortion simulation. Original images from the NYU [1] and DIV2K [2] databases (public RGB/RGBD databases) are first compressed by a codec with a given Quantization Parameter (QP). The associated depth images are available for RGBD images or can be generated by monocular depth estimation methods [3][4]. The DIBR distortion is then generated along the depth edges, because depth edges are assumed to be the areas where DIBR distortion is most likely to reside. Specifically, the proposed random irregular polygon-based DIBR distortion generation method is applied to the compressed RGB/RGBD data. In this way, the synthetic synthesized view database is constructed, which includes synthetic synthesized images and the corresponding DIBR distortion masks.

Figure 1. Overview of DIBR distortion simulation pipeline.
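The depth-edge step of the pipeline can be illustrated with a minimal sketch. It assumes a simple gradient-magnitude detector; the function name `depth_edge_mask` and the parameters `threshold` and `dilate` are hypothetical choices for illustration and are not taken from the source, which does not specify how depth edges are detected.

```python
import numpy as np

def depth_edge_mask(depth, threshold=0.1, dilate=1):
    """Return a binary mask (1 = strong depth edge) for a 2-D depth map.

    `threshold` and `dilate` are illustrative assumptions, not source values.
    """
    depth = np.asarray(depth, dtype=np.float64)
    # Normalise to [0, 1] so the threshold does not depend on the depth range.
    span = depth.max() - depth.min()
    depth = (depth - depth.min()) / span if span > 0 else np.zeros_like(depth)
    # Central-difference gradients along rows (gy) and columns (gx).
    gy, gx = np.gradient(depth)
    mask = np.hypot(gx, gy) > threshold
    # Grow the mask by `dilate` pixels (4-neighbourhood) to form a band around edges.
    for _ in range(dilate):
        grown = mask.copy()
        grown[1:, :] |= mask[:-1, :]
        grown[:-1, :] |= mask[1:, :]
        grown[:, 1:] |= mask[:, :-1]
        grown[:, :-1] |= mask[:, 1:]
        mask = grown
    return mask.astype(np.uint8)
```

The resulting mask marks the band around depth discontinuities in which the simulated distortion would be placed; any other edge detector could be substituted.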

2. Different Local Noise Comparison and Proposed Random Irregular Polygon-Based DIBR Distortion Generation

Figure 2a,b shows SVIs with DIBR distortion from the sequences Lovebird1 and Balloons, including cracks, fragments, and irregular geometric displacement along the edges of objects. To investigate which kind of distortion most resembles the DIBR distortion, three noise patterns, i.e., Gaussian noise, speckle noise, and patch shuffle-based noise, are compared. Gaussian noise is the well-known noise that follows a normal distribution. Speckle noise is a type of granular noise that often appears in medical ultrasound images and synthetic aperture radar (SAR) images. Patch shuffle [5] randomly shuffles the pixels within local patches of images or feature maps during training and is used to regularize the training of classification-related CNN models. Taking the DIBR distortion simulation for Lovebird1 as an example, as shown in Figure 2, different synthetic SVIs are obtained by adding Gaussian noise, speckle noise, and patch shuffle-based noise, respectively, to the compressed neighboring captured views along the areas with strongly discontinuous depth. The real SVI is listed as the anchor. Denoting the compressed captured view as I, the synthetic synthesized view produced by random noise can be written as

$$\hat{I} = I \odot (\mathbf{1} - M) + I_N \odot M,$$

where $\hat{I}$ denotes the synthetic SVI, $I$ denotes the compressed captured view image, $\mathbf{1}$ denotes the matrix with all elements equal to 1, $M$ denotes the mask corresponding to the detected strong depth edges, $\odot$ denotes the element-wise product, and $I_N$ denotes the image with random noise added, i.e., Gaussian noise, speckle noise, or the patch-shuffled version of $I$. It can be observed that $\hat{I}$ synthesized with Gaussian noise or speckle noise does not visually resemble synthesis distortion, whereas $\hat{I}$ synthesized with patch shuffle-based noise behaves somewhat similarly, in that the pixels in a local patch appear disordered and irregular.

Figure 2. Comparison of DIBR distortion simulation effects by local random noise. (a,b) are SVIs from sequences Lovebird1 and Balloons, respectively, and the enlarged areas are the representative areas with both compression and DIBR distortion. (c–j) represent the DIBR distortion simulation effects of rectangle areas in (a,b) by Gaussian, speckle, patch shuffle-based, and the proposed random irregular polygon-based noise on compressed captured views of Lovebird1 and Balloons, respectively.
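The mask-based compositing of the three noise patterns compared above can be sketched as follows. This is a minimal illustration, assuming images scaled to [0, 1]; the function names, the noise strength `sigma`, and the patch size `patch` are hypothetical, and the patch shuffle here shuffles every non-overlapping patch, which simplifies the method in [5].

```python
import numpy as np

def gaussian_noise(img, rng, sigma=0.1):
    # Additive zero-mean Gaussian noise.
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)

def speckle_noise(img, rng, sigma=0.1):
    # Multiplicative (granular) noise: each pixel is scaled by 1 + n.
    return np.clip(img * (1.0 + rng.normal(0.0, sigma, img.shape)), 0.0, 1.0)

def patch_shuffle(img, rng, patch=4):
    # Randomly permute the pixels inside each non-overlapping patch.
    out = img.copy()
    h, w = img.shape[:2]
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            block = out[y:y + patch, x:x + patch]
            flat = block.reshape(patch * patch, -1)
            shuffled = flat[rng.permutation(patch * patch)]
            out[y:y + patch, x:x + patch] = shuffled.reshape(block.shape)
    return out

def composite(img, noisy, mask):
    # Keep the original outside the mask and the noisy image inside it.
    mask = mask.astype(img.dtype)
    if img.ndim == 3:
        mask = mask[..., None]  # broadcast over colour channels
    return img * (1.0 - mask) + noisy * mask

# Usage: noise is confined to a two-pixel-wide band standing in for a depth edge.
rng = np.random.default_rng(0)
image = rng.random((8, 8))
edge_band = np.zeros((8, 8))
edge_band[:, 3:5] = 1
synthetic = composite(image, patch_shuffle(image, rng), edge_band)
```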

SVI with DIBR distortion can be viewed as a tiny movement of textures within random polygon areas along the depth transition area. To better simulate the irregular geometric distortion, a simple random polygon generation method that can control irregularity and spikiness is introduced in this section. A random polygon generation method can be found in [6]. Following the method in [7], a random polygon is generated by first producing a set of points sorted by angle and then connecting the vertices in that order. Given a center point P, a group of points is sampled on a circle around P. Random noise is added by varying the angular spacing between sequential points and the radial distance of each point from the center. The process can be formulated as

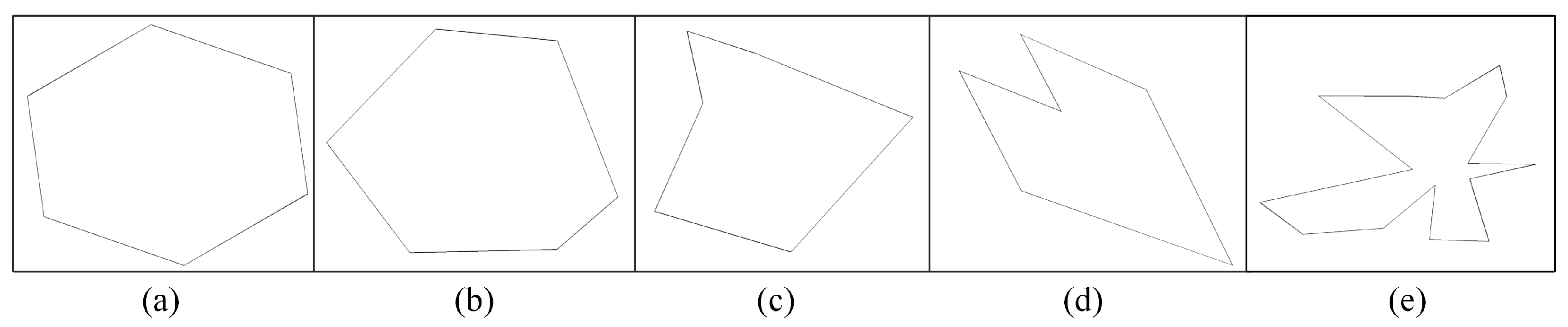

where $\theta_i$ and $r_i$ represent the angle and radius of the i-th point with respect to the assumed center point, respectively. $\Delta\theta_i$ denotes the random variable controlling the angular spacing between sequential points, which follows a uniform distribution $U$ with smallest value $2\pi/n - \epsilon$ and largest value $2\pi/n + \epsilon$, where n denotes the number of vertices. Moreover, $r_i$ follows a Gaussian distribution with a given radius R as the mean and $\sigma$ as the variance. R can be used to adjust the size of the generated polygon. $\epsilon$ can be used to adjust the irregularity of the generated polygon by controlling the angular variation through the interval size of $U$. $\sigma$ can be used to adjust the spikiness of the generated polygon by controlling the radius variation through the normal distribution. Large $\epsilon$ and $\sigma$ indicate strong irregularity and spikiness, and vice versa, as shown in Figure 3.

Figure 3. Examples of generated random polygons. n denotes the number of vertices, $\epsilon$ denotes irregularity, and $\sigma$ denotes spikiness. (a) n = 6, $\epsilon$ = 0, $\sigma$ = 0. (b) n = 6, $\epsilon$ = 0.5, $\sigma$ = 0. (c) n = 6, $\epsilon$ = 0, $\sigma$ = 0.5. (d) n = 6, $\epsilon$ = 0.7, $\sigma$ = 0.7. (e) n = 15, $\epsilon$ = 0.7, $\sigma$ = 0.7.
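The polygon sampling described above can be sketched with the Python standard library. This is one possible reading of the procedure, not the authors' implementation: it assumes that irregularity and spikiness are given in [0, 1] and scaled by the mean angular step 2π/n and by the radius R, respectively, as in the approach referenced in [7]. The function name `random_polygon` and the clipping of radii to [0, 2R] are illustrative assumptions.

```python
import math
import random

def random_polygon(center, radius, n, irregularity, spikiness, rng=None):
    """Return n (x, y) vertices of a random polygon around `center`.

    Assumption: `irregularity` and `spikiness` lie in [0, 1] and are scaled
    by 2*pi/n and by `radius`, respectively.
    """
    if rng is None:
        rng = random.Random()
    mean_step = 2.0 * math.pi / n
    eps = irregularity * mean_step
    # Angular steps drawn uniformly from [2*pi/n - eps, 2*pi/n + eps].
    steps = [rng.uniform(mean_step - eps, mean_step + eps) for _ in range(n)]
    # Rescale so that the steps cover exactly one full turn.
    scale = 2.0 * math.pi / sum(steps)
    steps = [s * scale for s in steps]
    angle = rng.uniform(0.0, 2.0 * math.pi)  # random starting direction
    vertices = []
    for step in steps:
        # Radius drawn from a Gaussian around `radius`, clipped to [0, 2*radius].
        r = min(max(rng.gauss(radius, spikiness * radius), 0.0), 2.0 * radius)
        vertices.append((center[0] + r * math.cos(angle),
                         center[1] + r * math.sin(angle)))
        angle += step
    return vertices

# Usage: a 6-vertex polygon with moderate irregularity and spikiness.
polygon = random_polygon((50.0, 50.0), 10.0, 6, 0.5, 0.5, random.Random(0))
```

With both parameters set to 0 the function returns a regular n-gon, which corresponds to case (a) of Figure 3. Because the vertices are emitted in angular order, connecting them sequentially yields a simple (non-self-intersecting) polygon.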

Thus, the synthetic SVI composed by the proposed random polygon noise can be obtained as

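$$I_P(\mathbf{p} + \mathbf{v}) = I(\mathbf{p}),\ \mathbf{p} \in \Omega; \qquad \hat{I} = I \odot (\mathbf{1} - M) + I_P \odot M,$$

where $\hat{I}$ denotes the synthetic SVI, $\Omega$ denotes the set of points located in a local region generated by the random polygon method, and $\mathbf{v}$ denotes a random vector by which all points of $\Omega$ are bodily shifted in $I_P$. $I_P$ is fused with $I$ in the strong depth edge regions given by the mask $M$. In Figure 2f,j, it can be observed that the DIBR distortion generated by moving textures within random polygon areas along the edges visually resembles the real distortion.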
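The texture shift within a polygon and the subsequent fusion can be sketched as below. This is an illustrative sketch under stated assumptions: the polygon is rasterized with an even-odd ray-casting test on pixel coordinates, the shift is an integer pixel offset `(dx, dy)`, and the helper names `polygon_mask`, `shift_polygon_texture`, and `fuse` are hypothetical.

```python
import numpy as np

def polygon_mask(vertices, shape):
    """Even-odd rasterization: True for pixels inside the polygon (x, y vertices)."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    inside = np.zeros((h, w), dtype=bool)
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]
        # Does this edge cross the horizontal ray through each pixel?
        crosses = (y0 > ys) != (y1 > ys)
        with np.errstate(divide="ignore", invalid="ignore"):
            x_cross = x0 + (ys - y0) * (x1 - x0) / (y1 - y0)
        # Toggle pixels lying to the left of the crossing point.
        inside ^= crosses & (xs < x_cross)
    return inside

def shift_polygon_texture(img, vertices, shift):
    """Copy the texture inside the polygon to a position displaced by (dx, dy)."""
    h, w = img.shape[:2]
    region = polygon_mask(vertices, (h, w))
    ys, xs = np.nonzero(region)
    dx, dy = int(round(shift[0])), int(round(shift[1]))
    ty, tx = ys + dy, xs + dx
    keep = (ty >= 0) & (ty < h) & (tx >= 0) & (tx < w)  # stay inside the image
    out = img.copy()
    out[ty[keep], tx[keep]] = img[ys[keep], xs[keep]]
    return out, region

def fuse(img, distorted, edge_mask):
    """Use the distorted image only where the depth-edge mask is set."""
    m = edge_mask.astype(bool)
    if img.ndim == 3:
        m = m[..., None]
    return np.where(m, distorted, img)
```

In a full simulation, many small polygons would be placed at random positions along the depth edges, each with its own random shift; the union of the affected regions can then serve as the DIBR distortion mask paired with the synthetic image.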

3. DIBR Distortion Mask Prediction Network Embedding

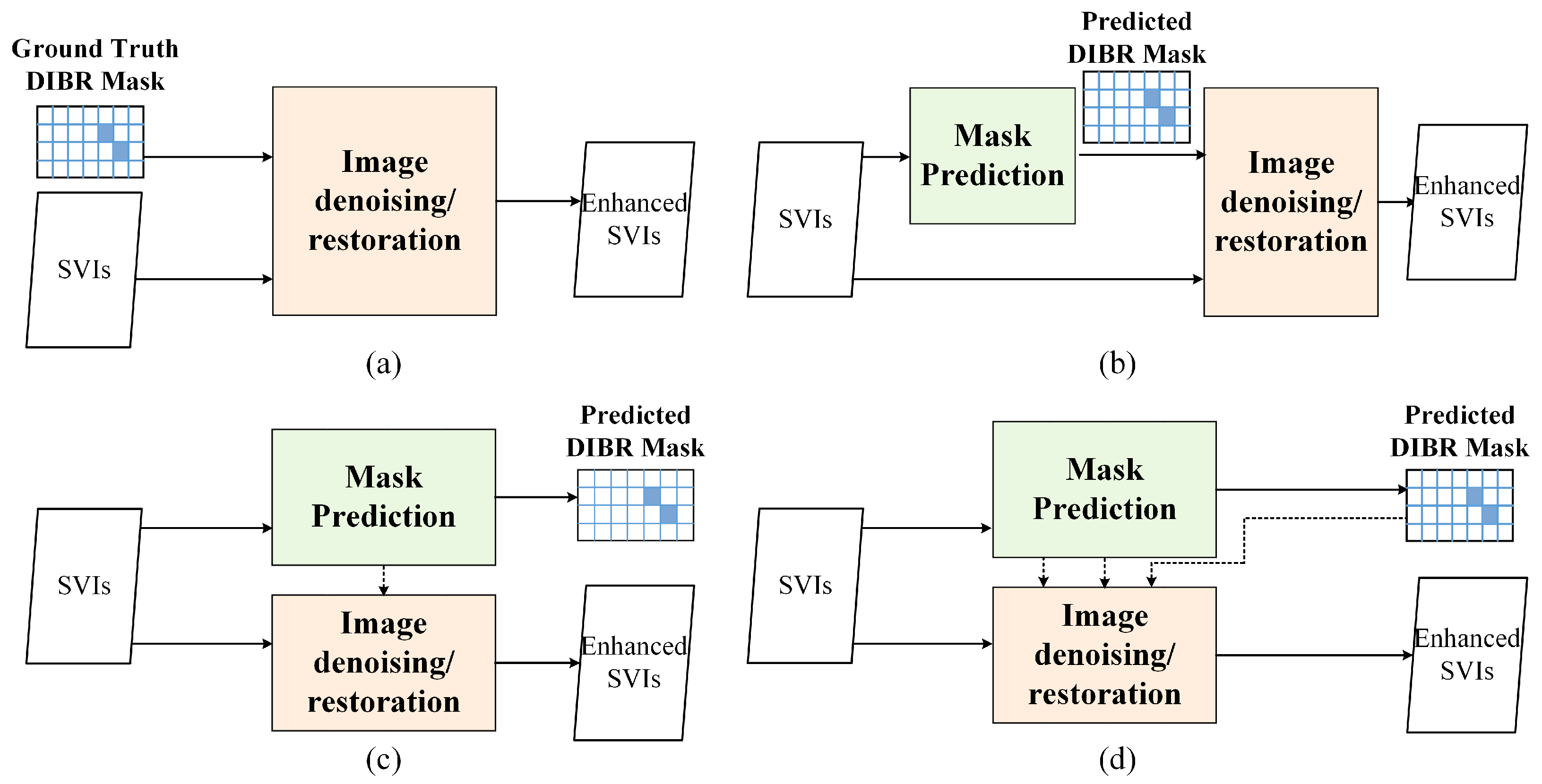

Existing IQA models for SVI demonstrate that determining the DIBR distortion position is the key procedure for quality assessment [8][9], which hints that knowing and paying more attention to the DIBR distortion position may help synthesized view quality enhancement (SVQE) models improve SVI quality. Therefore, how to incorporate the DIBR distortion position into SVQE models becomes a new issue. The intuitive way is to directly combine the DIBR distortion position with the distorted image as a single input. Figure 4a sketches this approach. It could be validated by experiment in Section 4 that knowing the DIBR distortion position is helpful for synthesized image quality enhancement. However, the ground truth DIBR distortion position is often unknown, so the position has to be detected or estimated. Inspired by de-raining [10] and shadow removal [11][12], SVI quality enhancement can be regarded as two tasks, i.e., DIBR distortion mask estimation and image restoration/denoising. Reviewing these works, there are three main ways to combine the mask estimation and image restoration networks, i.e., a successive (series) network, a parallel (multi-task) network, and a parallel interactive network. These are sketched in Figure 4b–d. In addition to different organizations or designs of networks, attention mechanisms, such as spatial attention [13], self-attention [14], or non-local attention [15], are also considered in existing denoising or restoration networks. This work mainly focuses on networks that explicitly combine DIBR mask prediction and DIBR distortion elimination, and mainly tests the successive (series) network shown in Figure 4b.

Figure 4. Four possible ways of image denoising/restoration networks integrating with DIBR distortion position. (a) Intuitive way of integrating ground truth DIBR distortion position. (b) Successive networks with DIBR distortion prediction. (c) Parallel networks with DIBR distortion prediction. (d) Parallel interactive network with DIBR distortion prediction.
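The difference in data flow between options (a) and (b) can be sketched with simple stand-in functions. The code below contains no neural networks: `predict_mask` and `restore` are hypothetical placeholders (a gradient threshold and a masked 3-tap blur) that only mark where a mask prediction network and a restoration network would sit, and the threshold 0.2 is an arbitrary illustrative value.

```python
import numpy as np

def predict_mask(svi):
    """Placeholder for a mask prediction network: H x W x C -> H x W mask."""
    gray = svi.mean(axis=-1)
    diff = np.abs(np.diff(gray, axis=1, prepend=gray[:, :1]))
    return (diff > 0.2).astype(svi.dtype)

def restore(svi_and_mask):
    """Placeholder for a restoration network: H x W x (C+1) -> H x W x C."""
    svi, mask = svi_and_mask[..., :-1], svi_and_mask[..., -1:]
    padded = np.pad(svi, ((0, 0), (1, 1), (0, 0)), mode="edge")
    blurred = (padded[:, :-2] + padded[:, 1:-1] + padded[:, 2:]) / 3.0
    # Only the masked pixels are modified.
    return svi * (1.0 - mask) + blurred * mask

def enhance_with_gt_mask(svi, gt_mask):
    # Option (a): the ground truth mask is concatenated as an extra input channel.
    return restore(np.concatenate([svi, gt_mask[..., None]], axis=-1))

def enhance_series(svi):
    # Option (b): the mask is predicted first, then fed to the restoration stage.
    mask = predict_mask(svi)
    return restore(np.concatenate([svi, mask[..., None]], axis=-1)), mask
```

In the series design the restoration stage depends on the quality of the predicted mask, which is why option (a) with the ground truth mask can serve as a reference for how much the position information helps.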

References

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from RGBD images. In Proceedings of the 12th European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012; pp. 746–760.

- Timofte, R.; Gu, S.; Wu, J.; Van Gool, L.; Zhang, L.; Yang, M.H.; Haris, M.; Shakhnarovich, G.; Ukita, N.; Hu, S.; et al. NTIRE 2018 challenge on single image super-resolution: Methods and results. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 852–863.

- Ranftl, R.; Lasinger, K.; Hafner, D.; Schindler, K.; Koltun, V. Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1623–1637.

- Shih, M.L.; Su, S.Y.; Kopf, J.; Huang, J.B. 3D photography using context-aware layered depth inpainting. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 8025–8035.

- Kang, G.; Dong, X.; Zheng, L.; Yang, Y. Patchshuffle regularization. arXiv 2017, arXiv:1707.07103.

- Hada, P.S. Approaches for Generating 2D Shapes. Master’s Dissertation, Department of Computer Science, University of Nevada, Las Vegas, NV, USA, 2014.

- Random Polygon Generation. Available online: https://stackoverflow.com/questions/8997099/algorithm-to-generate-random-2d-polygon (accessed on 19 October 2022).

- Li, L.; Zhou, Y.; Gu, K.; Lin, W.; Wang, S. Quality assessment of DIBR-synthesized images by measuring local geometric distortions and global sharpness. IEEE Trans. Multimed. 2018, 20, 914–926.

- Wang, G.; Wang, Z.; Gu, K.; Xia, Z. Blind quality assessment for 3D-synthesized images by measuring geometric distortions and image complexity. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 4040–4044.

- Zhang, H.; Patel, V.M. Density-aware single image de-raining using a multi-stream dense network. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 695–704.

- Cun, X.; Pun, C.; Shi, C. Towards ghost-free shadow removal via dual hierarchical aggregation network and shadow matting GAN. In Proceedings of the 34th AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020; pp. 10680–10687.

- Purohit, K.; Suin, M.; Rajagopalan, A.N.; Boddeti, V.N. Spatially-adaptive image restoration using distortion-guided networks. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 2289–2299.

- Pan, Z.; Yu, W.; Lei, J.; Ling, N.; Kwong, S. TSAN: Synthesized view quality enhancement via two-stream attention network for 3D-HEVC. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 345–358.

- Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple baselines for image restoration. arXiv 2022, arXiv:2204.04676.

- Wan, Z.; Zhang, B.; Chen, D.; Zhang, P.; Chen, D.; Liao, J.; Wen, F. Bringing old photos back to life. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2744–2754.
