Please note this is a comparison between Version 2 by Amina Yu and Version 3 by Amina Yu.

Deep vision multimodal learning aims at combining deep visual representation learning with other modalities, such as text, sound, and data collected from other sensors. With the fast development of deep learning, vision multimodal learning has gained much interest from the community. The construction of a learning architecture and framework is the core technology of deep multimodal learning. The design of feature extraction, modality aggregation, and multimodal loss function will be discussed.

- computer vision

- Learning Architectures

1. Feature Extraction

Feature extraction is the first step after the multimodal input signal enters the network. Essentially, the input signal is mapped to the corresponding feature space to form a feature vector. A good representation tends to yield better performance on downstream tasks. Zhang et al. [1][8] show that improving visual features alone significantly improves performance across all vision–language tasks. For feature extraction, the discussion here mainly covers transformer-based network architectures.

Transformers [2][9] are a family of sequence-to-sequence deep neural networks. Although they were originally designed for natural language processing (NLP), they have recently been widely used on other modalities such as images [3][10], video [4][11], and audio [5][12]. Transformers use the self-attention mechanism to embed the input signal and then map it into the feature space for representation. An attention mechanism can be described as mapping a query and a set of key–value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is given by a similarity function between the query and the corresponding key. This approach [2][9] handles feature extraction from long sequences effectively: because it has a larger receptive field, it can better capture the auto-correlation information in the input sequence. Transformer-based models have two useful properties: unity and translation ability. Unity means that feature extraction for different modalities can all use the same transformer-based architecture. Translation ability means that the feature hierarchies of different modalities can be easily aligned or transferred because they share the same feature extractor architecture. These two properties are discussed in detail in the remainder of this section.
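The weighted-sum description of attention can be illustrated with a minimal sketch. It assumes the scaled dot product as the similarity function and a softmax to normalize the weights, which is the common choice in transformers; the function names `softmax` and `attention` and the toy vectors are illustrative only.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Return (output, weights) for a single query vector.

    Assumption: similarity is the scaled dot product between the query
    and each key; the output is the softmax-weighted sum of the values.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Toy example: the query is closer to the first key than to the second,
# so the first value receives the larger weight.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
output, weights = attention(query, keys, values)
```

In self-attention, the queries, keys, and values are all derived from the same input sequence, so every position can attend to every other position.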

For multimodal feature extraction, transformers have demonstrated unity. In a typical multimodal learning network, text features are extracted using BERT [6][13], and image features are extracted using ResNet [7][14]. With this approach, the granularity on the two sides is not aligned: the text side works with word tokens, whereas the image side produces a global feature. A better modeling method should also convert the local features of the image into “visual words” so that the text words can be aligned with the “visual words”. Since transformers have achieved state-of-the-art results on unimodal tasks, using them for multimodal learning is a natural next step. Furthermore, because the feature extraction of each individual modality can be realized with a transformer, a unified framework is formed, and the interaction and fusion of information from different modalities become more accessible. Singh et al. [8][15] use a single holistic universal model for all language and visual alignment tasks. They use an image encoder transformer adopted from ViT [3][10] and a text encoder transformer to process unimodal information, together with a multimodal encoder transformer that takes the encoded unimodal image and text as input and integrates their representations for multimodal reasoning. A decoder is applied to the output of the appropriate encoder for each downstream task to obtain the result.
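One common way to obtain “visual words” is to cut the image into fixed-size patches and flatten each patch into a token, as ViT-style encoders do. The sketch below shows only this splitting step on a toy single-channel image; the function name `patchify` is hypothetical, and the linear projection and position embeddings that a real encoder applies afterwards are omitted.

```python
def patchify(image, patch):
    """Split an H x W grid (list of rows) into flattened patch x patch tokens."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Read the patch row by row and flatten it into one token.
            token = [image[r][c]
                     for r in range(top, top + patch)
                     for c in range(left, left + patch)]
            tokens.append(token)
    return tokens

# A 4 x 4 toy image whose pixel values are 0..15, split into 2 x 2 patches.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
visual_words = patchify(image, 2)
```

Each resulting token plays the role of a word token on the text side, so both modalities can be fed to the same kind of encoder.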

Transformers have also demonstrated translation ability. Likhosherstov et al. [9][16] propose co-training transformers. By training on multiple tasks within a single modality and co-training across modalities, the model’s performance is greatly improved. Akbari et al. [10][17] present a framework for learning multimodal representations from unlabeled data using convolution-free transformer architectures. These works show the important role of transformers in multimodal feature extraction and suggest that they are likely to remain a major trend.

However, because transformers require large amounts of memory and a large number of parameters, existing work typically freezes or fine-tunes the language model and trains only the vision module, which limits the ability to learn cross-modal information end to end. Lee et al. [11][18] decompose the transformer into modality-specific and modality-shared parts so that the model learns the dynamics of each modality both individually and jointly. This dramatically reduces the number of parameters, making the model easier to train and more flexible.

Another mechanism used for multimodal feature extraction is the memory network [12][13][19,20]. It is often used in conjunction with transformers, since the two focus on different aspects. Most neural network models cannot read from and write to a long-term memory component, nor can they couple such memory tightly with inference. Memory-network-based methods store the extracted features in external memory and design a pairing and reading algorithm. This improves inference over sequential modal information and is effective on video captioning [14][21], vision-and-language navigation [15][16][22,23], and visual-and-textual question answering [17][24] tasks.

In conclusion, recent works reveal the potential of transformer-based networks as multimodal feature extractors. Transformer-based networks can be applied to vision, language, and audio modalities. The multimodal feature extractor can therefore take a unified architectural form, which is well suited to aligning features and transferring knowledge between modalities.

2. Modality Aggregation

After multimodal features are extracted, they must be aggregated. Typical fusion methods [18][19][25,26] are divided into early fusion and late fusion. Early fusion refers to directly concatenating the multimodal input signals and then sending them into a unified network structure for training. Late fusion [20][21][27,28] refers to fusing the features of each modality after feature extraction; the network structure at the end is then designed according to the downstream task. Because early and late fusion inhibit intra- and intermodal interactions, current research focuses on intermediate fusion methods, which allow fusion operations to be placed in multiple layers of a deep learning model. Different modalities can be fused simultaneously into a shared representation layer or fused incrementally, using one or more modalities at a time. Depending on the specific task and network architecture, the multimodal fusion method can be very flexible. Karpathy et al. [22][29] use a “slow-fusion” network in which extracted video stream features are gradually fused across multiple fusion layers, which performs better on a large-scale video classification task. Neverova et al. [23][30] consider the difference in information level between modalities and fuse the modalities step by step: first the visual input modalities, then the motion input modalities, and then the audio input modalities. Ma et al. [24][31] propose a method to automatically search for an optimal fusion strategy for the input data. In terms of specific fusion architecture, existing fusion methods can be divided into matrix-based, MLP-based, attention-based, and other specific methods.
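The difference between early and late fusion can be sketched with toy feature vectors. In this sketch a single dot product stands in for a network, and late fusion simply averages the per-modality outputs; both are simplifying assumptions, and all names and weights are hypothetical.

```python
def linear(weights, features):
    """A stand-in for a network: a single dot product."""
    return sum(w * x for w, x in zip(weights, features))

def early_fusion(image_feat, audio_feat, joint_weights):
    """Concatenate the modality inputs, then apply one shared model."""
    joint_input = image_feat + audio_feat  # list concatenation
    return linear(joint_weights, joint_input)

def late_fusion(image_feat, audio_feat, image_weights, audio_weights):
    """Process each modality separately, then average the two outputs."""
    image_score = linear(image_weights, image_feat)
    audio_score = linear(audio_weights, audio_feat)
    return 0.5 * (image_score + audio_score)

image_feat = [1.0, 2.0]
audio_feat = [3.0]

early = early_fusion(image_feat, audio_feat, joint_weights=[0.5, 0.5, 1.0])
late = late_fusion(image_feat, audio_feat,
                   image_weights=[1.0, 1.0], audio_weights=[1.0])
```

Intermediate fusion sits between these two extremes: the modalities are combined at one or more hidden layers rather than only at the input or only at the output.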

Matrix-based fusion. Zadeh et al. [25][32] use “tensor fusion” to obtain richer fused features from three types of tensors: unimodal, bimodal, and trimodal. Liu et al. propose the low-rank multimodal fusion method, which improves the efficiency of “tensor fusion”. Hou et al. [26][33] integrate multimodal features by considering higher-order matrices.
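A minimal sketch of the idea behind tensor fusion follows, assuming the usual construction in which each modality embedding is extended with a constant 1 before a three-way outer product is taken. The function name `tensor_fusion` and the one-dimensional toy embeddings are illustrative only.

```python
def tensor_fusion(a, b, c):
    """Three-way outer product of the embeddings, each extended with a constant 1.

    The appended 1 keeps the unimodal and bimodal terms in the result
    alongside the trimodal terms.
    """
    a1, b1, c1 = [1.0] + a, [1.0] + b, [1.0] + c
    return [[[x * y * z for z in c1] for y in b1] for x in a1]

# One-dimensional toy embeddings for three modalities.
language, visual, acoustic = [2.0], [3.0], [5.0]
fused = tensor_fusion(language, visual, acoustic)

unimodal = fused[1][0][0]   # language only: 2
bimodal = fused[1][1][0]    # language x visual: 2 * 3
trimodal = fused[1][1][1]   # language x visual x acoustic: 2 * 3 * 5
```

The fused tensor has one axis per modality, so its size is the product of the (extended) embedding sizes. This is the growth that low-rank variants try to avoid.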

MLP-based fusion. Xu et al. [27][34] propose a framework consisting of three parts: a compositional semantics language model, a deep video model, and a joint embedding model. In the joint embedding model, they minimize the distance of the outputs of the deep video model and compositional language model in the joint space and update these two models jointly. Sahu et al. [28][35] first encode all modalities, then use decoding to restore features, and finally calculate the loss between features.

Attention-based fusion. Zadeh et al. [29][36] use “delta-memory attention” and “multiview gated memory” to simultaneously capture temporal and intermodal interactions for better multiview fusion. The purpose of the memory network is to store the multimodal interaction information from the previous time step. To capture the interactions both between and within modalities, a multi-interactive attention mechanism [30][37] is further used; that is, textual and visual features exchange information through attention as they pass through multiple layers. Nagrani et al. [31][38] use shared tokens between two transformers so that these tokens become the communication bottleneck between modalities, which reduces the computational cost of attention.

In addition to these specific fusion methods, there is some auxiliary work. Perez et al. [32][39] help find the best fusion architecture for the target task and dataset. However, these methods often suffer from an exponential increase in dimensionality as the number of modalities grows. The low-rank multimodal fusion method [33][40] performs multimodal fusion using low-rank tensors to improve efficiency. Gat et al. [34][41] propose a novel regularization term based on functional entropy. Intuitively, this term encourages the model to balance the contribution of each modality to the classification result.

In conclusion, direct concatenation of modality inputs has largely been abandoned, and more work fuses the modalities gradually, either during feature extraction or after it. The fusion method should be chosen according to the properties of the modalities and the task.

3. Multimodal Loss Function

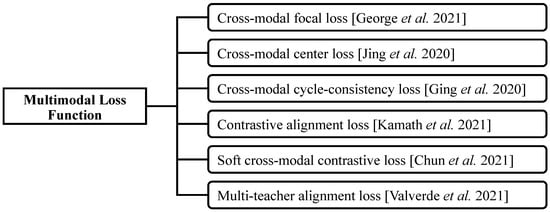

A well-designed loss function can effectively help the network to train, but there is currently no comprehensive summary of the special loss functions used in multimodal training. This section lists some loss functions specifically designed for multimodal learning, as shown in Figure 12.

Figure 12. Multimodal loss function summary.

Cross-modal focal loss [35][42]. This is an application of focal loss [36][43] in the multimodal field. Its core idea is that when one channel correctly classifies a sample with high confidence, the loss contribution of that sample to the other branch is reduced; if one channel classifies a sample with complete confidence, the other branch no longer penalizes the model for it. In other words, the loss value of the current modality is modulated mainly by the other modality.
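The modulation described above can be sketched as follows. This is a deliberately simplified form that follows the verbal description only: the modulating factor of the focal loss is computed from the other branch's true-class probability `q` instead of the current branch's own probability `p`. The weighting function in the cited work may differ, and the function names and the default `gamma` are assumptions.

```python
import math

def focal_loss(p, gamma=2.0):
    """Standard focal loss for the true-class probability p of one branch."""
    return -((1.0 - p) ** gamma) * math.log(p)

def cross_modal_focal_loss(p, q, gamma=2.0):
    """Loss for the branch whose true-class probability is p.

    Simplifying assumption: the modulating factor depends on q, the
    true-class probability of the other branch, so a confident other
    branch shrinks this branch's loss.
    """
    return -((1.0 - q) ** gamma) * math.log(p)

# The current branch is unsure (p = 0.3) in both cases.
confident_other = cross_modal_focal_loss(0.3, 0.9)  # other branch is confident
unsure_other = cross_modal_focal_loss(0.3, 0.2)     # other branch is unsure
```

With `q = 1.0` the loss for the current branch vanishes, which matches the statement that a branch is no longer penalized once the other channel classifies the sample with complete confidence.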

Cross-modal center loss [37][44]. This is an application of center loss [38][45] in the multimodal field, which minimizes the distances of features from objects belonging to the same class across all modalities.
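A minimal sketch of this idea, assuming one shared center per class and the squared Euclidean distance with the conventional factor of one half from center loss. The modality names, the data layout, and the function name are hypothetical.

```python
def cross_modal_center_loss(features, labels, centers):
    """Half the mean squared distance between each feature and its class center.

    `features` maps a modality name to a list of feature vectors, one per
    object; `labels[i]` is the class of object i. The same `centers`
    (one vector per class) are shared by every modality.
    """
    total, count = 0.0, 0
    for modality_feats in features.values():
        for feat, label in zip(modality_feats, labels):
            center = centers[label]
            total += sum((f - c) ** 2 for f, c in zip(feat, center))
            count += 1
    return 0.5 * total / count

features = {
    "image": [[1.0, 0.0], [0.0, 1.0]],
    "point_cloud": [[1.0, 0.0], [0.0, 3.0]],
}
labels = [0, 1]
centers = {0: [1.0, 0.0], 1: [0.0, 1.0]}
loss = cross_modal_center_loss(features, labels, centers)
```

Because the centers are shared, minimizing this loss pulls the features of the same class together regardless of which modality produced them. In practice the centers are usually learned jointly with the network rather than fixed as in this toy example.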

Cross-modal cycle-consistency loss [39][46]. This is used to enforce semantic alignment between clips and sentences. It replaces the cross-modal attention units used in [40][41][47,48]. The basic idea is to map the original modality to the target modality during cross-modal matching: for a given item in the original modality, the item in the target modality with the highest similarity is found. The matched target item is then mapped back to the original modality. Finally, the distance between the mapped-back value and the original value is calculated.
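The forward-and-back matching can be sketched with a soft nearest-neighbor lookup. This sketch assumes that similarity is the negative squared distance, that the match is a softmax-weighted average so the operation stays differentiable, and that the loss is measured in embedding space; the cited work may instead compare positions or indices. All names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def soft_nearest(query, candidates):
    """Similarity-weighted average of the candidates.

    Assumption: similarity is the negative squared Euclidean distance.
    """
    scores = [-sum((q - c) ** 2 for q, c in zip(query, cand))
              for cand in candidates]
    weights = softmax(scores)
    return [sum(w * cand[i] for w, cand in zip(weights, candidates))
            for i in range(len(query))]

def cycle_consistency_loss(source, target):
    """Mean squared distance between each source item and its cycled-back match."""
    total = 0.0
    for item in source:
        forward = soft_nearest(item, target)      # source -> target
        backward = soft_nearest(forward, source)  # target -> source
        total += sum((a - b) ** 2 for a, b in zip(item, backward))
    return total / len(source)

clips = [[0.0, 0.0], [10.0, 10.0]]
aligned_sentences = [[0.0, 0.0], [10.0, 10.0]]
collapsed_sentences = [[5.0, 5.0], [5.0, 5.0]]

aligned_loss = cycle_consistency_loss(clips, aligned_sentences)
collapsed_loss = cycle_consistency_loss(clips, collapsed_sentences)
```

When the two modalities are well aligned, each clip cycles back to itself and the loss is close to zero; when the sentence embeddings carry no information about the clips, the cycle cannot recover the starting point and the loss is large.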

Contrastive alignment loss [42][49]. This loss function adopts InfoNCE [43][50] from contrastive learning. It enhances the alignment of the visual and textual embedded feature representations, ensuring that aligned visual and linguistic feature representations are relatively close in feature space. This loss function does not act on the position but directly on the feature level to improve the similarity between corresponding samples.
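A minimal sketch of an InfoNCE-style alignment loss follows. It assumes that `visual[i]` and `textual[i]` form the positive pair, that similarity is a dot product divided by a temperature, and that only the visual-to-text direction is computed; symmetric variants add the text-to-visual term. The function names and the temperature value are assumptions.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def info_nce(visual, textual, temperature=0.1):
    """Average InfoNCE loss in the visual-to-text direction.

    visual[i] and textual[i] are treated as the positive pair; every
    other textual embedding in the batch serves as a negative.
    """
    total = 0.0
    for i, v in enumerate(visual):
        logits = [dot(v, t) / temperature for t in textual]
        m = max(logits)
        # log-sum-exp computed stably
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[i]
    return total / len(visual)

visual = [[1.0, 0.0], [0.0, 1.0]]
matched_text = [[1.0, 0.0], [0.0, 1.0]]
shuffled_text = [[0.0, 1.0], [1.0, 0.0]]

matched_loss = info_nce(visual, matched_text)
shuffled_loss = info_nce(visual, shuffled_text)
```

The loss is small when each visual embedding is most similar to its own text embedding and large when the pairs are mismatched, which is the alignment behavior described above.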

Soft cross-modal contrastive loss [44][51]. To capture pairwise similarities, HIB (hedged instance embedding) formulates a soft version of the contrastive loss [45][52] that is widely used for training deep metric embeddings. Soft cross-modal contrastive loss adapts this probabilistic embedding loss so that the match probabilities are based on cross-modal pairs.

Multiteacher alignment loss [46][53]. This is a loss function used in multimodal knowledge distillation [47][54] that facilitates the distillation of information from multimodal teachers in a self-supervised manner. It measures the distance of the feature distribution of each modality after the feature extraction stage.

In conclusion, most loss functions for multimodal learning are extensions of earlier unimodal loss functions, augmented with cycle-consistency or alignment constraints between modalities.