Caching has attracted much attention recently because it holds the promise of scaling the service capability of radio access networks (RANs). To realize caching, the physical layer and higher layers have to function together, with the aid of prediction and memory units, which substantially broadens the concept of cross-layer design to a multi-unit collaboration methodology.

- caching

- radio access networks

- virtual real-time services

- AI-empowered networks

- cross-layer design

- pricing

- recommendation

- edge compression

- proactive computation

1. Introduction

Modern radio access networks can achieve Gbps-level data rates, yet they may still fail to meet the predicted bandwidth requirements of future networks. A recent report from Cisco [1] forecasts that mobile data traffic will grow to 77.49 EB per month in 2022. In theory, a human brain may process up to 100T bits per second [2]. As a result, a huge gap may exist between the future bandwidth demand and provision in next generation radio access networks (RANs). Unfortunately, on-demand transmission, which dominates current RAN architectures, has almost reached the performance limits revealed by Shannon in 1948, given the extensive development of physical layer techniques in the past decades. On the other hand, the radio spectrum has been over-allocated, while the overall energy consumption is growing explosively. Since the potential of on-demand transmission has been fully exploited, it is time to conceive novel transmission architectures for sixth generation (6G) networks [3] so as to scale their service capability. The cache-empowered RAN is one of the potential solutions that hold the promise of scaling service capability [4].

Caching techniques were originally developed for computer systems in the 1960s. Web caching was conceived for the Internet due to the explosively increasing number of websites in the 2000s. In contrast to on-demand transmission, caching allows content to be placed proactively before it is requested, which has motivated some novel infrastructures such as information-centric networks (ICNs) and content delivery networks (CDNs).

More recently, caching has been found to substantially benefit data transmissions over harsh wireless channels and meet growing demands with restrained radio resources in various ways [5][6][7][8].

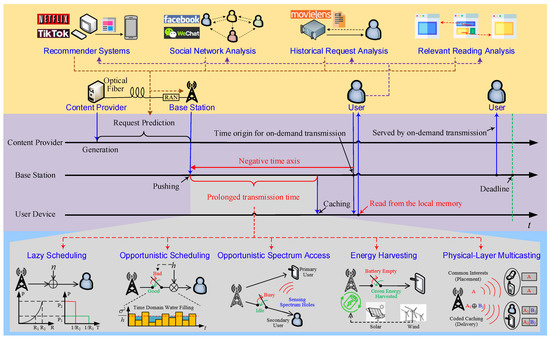

Though considerable literature on the subject of wireless caching exists, there is a need to revisit it from a cross-layer perspective, as shown in Figure 1.

| Transmission Techniques | Application Scenarios | How Is SE or EE Gain Attained? | Why Is Delay Increased? |
|---|---|---|---|
| Lazy Scheduling | Additive White Gaussian Noise Channels | Due to the convexity of Shannon capacity, EE is a decreasing function of the transmission power/rate. | Low data rate |
| Opportunistic Scheduling | Fading Channels | EE/SE is increased by time domain water-filling, or simply accessing good channels only. | Channel states remaining poor |
| Opportunistic Spectrum Access | Secondary Users | SE is increased by sensing and accessing idle timeslots or spectrum holes. | Spectrum remaining busy |
| Energy Harvesting | Renewable Energy Powered BSs/UEs | The renewable energy harvested from solar panels, wind turbines, or even the RF environment helps to save grid power. | No or little energy harvested |
| Physical-Layer Multicasting | Users with Common Interests | Multiple users located in the same cell are served by broadcasting a common signal to them. | Waiting for common requests |

2. Proactive Service: Gains, Costs, and Needs

Without waiting for users’ orders, a cache-empowered RAN provides proactive services.

2.1. Caching Gains: A Time-Domain Perspective

- Caching enables physical layer multicasting [9][12]. In theory, caching is capable of serving infinitely many users with a common request, thereby making RANs scalable. Classic on-demand transmission can seldom benefit from the multicasting gain because users seldom ask for a common message simultaneously. Aligning common requests in the time domain may, however, cause severe delay and damage Quality-of-Service (QoS). Proactive caching brings a solution to attain multicasting gain without inducing delay in data services. Even when users have different requests, judiciously designed coded caching strategies [10][11][13,14] allow RANs to enjoy the multicasting gain.

- Caching extends the tolerable transmission time, thereby bringing spectral efficiency (SE) or energy efficiency (EE) gains. Lazy scheduling [12][15], opportunistic scheduling [13][14][16,17], opportunistic spectrum access (OSA) [15][18], and energy harvesting (EH) [16][19] may increase the SE and EE. However, their applications are usually prohibited or limited due to their random transmission delay. Caching enables content transmission before user requests and hence substantially prolongs the delay tolerance.

- Caching enables low-complexity interference mitigation or alignment [17][10]. It is well known that a user can cancel a signal’s interference based on prior knowledge about the message that the signal bears. Classic successive interference cancellation (SIC) decodes the interference first by treating the desired signal as noise. However, SIC can suffer from high complexity and error propagation. By contrast, caching provides reliable prior knowledge on the interfering signal, which significantly reduces the complexity of interference cancellation.
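The coded-caching idea mentioned in the first item can be illustrated with the smallest textbook case: two users, two files, and caches that each hold half of every file. The following is a minimal sketch under those assumptions; the file contents, the split, and the helper name `xor_bytes` are illustrative and not taken from the cited works.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise XOR of two equal-length byte strings."""
    assert len(a) == len(b)
    return bytes(x ^ y for x, y in zip(a, b))

# Two hypothetical files, each split into two equal halves.
file_a = b"AAAAaaaa"
file_b = b"BBBBbbbb"
a1, a2 = file_a[:4], file_a[4:]
b1, b2 = file_b[:4], file_b[4:]

# Placement phase (before any request): each user caches half of every file.
cache_user1 = {"a1": a1, "b1": b1}
cache_user2 = {"a2": a2, "b2": b2}

# Delivery phase: user 1 requests file A, user 2 requests file B.
# User 1 misses a2 and user 2 misses b1; one coded multicast serves both.
multicast = xor_bytes(a2, b1)

# Each user cancels the part it already holds in its cache.
recovered_a2 = xor_bytes(multicast, cache_user1["b1"])
recovered_b1 = xor_bytes(multicast, cache_user2["a2"])

decoded_a = cache_user1["a1"] + recovered_a2
decoded_b = recovered_b1 + cache_user2["b2"]

uncoded_bytes = len(a2) + len(b1)  # two separate unicast transmissions
coded_bytes = len(multicast)       # one multicast transmission
```

In this toy case a single transmission of half a file replaces two, even though the two users ask for different files.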

2.2. Memory Cost to Be Paid for Caching

A cost to be paid arises from the memories at edge nodes and end devices, which are inexpensive but not free [4]. The memory cost is determined by not only how many bits are cached, but also how long they are cached [18][19][21,22]. The average memory size of user devices continues to grow. However, no matter how large a memory is, overflow occurs if the cached contents are never evicted. As a result, a content item should be evicted from the edge node if it becomes unpopular or outdated, or from a user’s device if it has already been read by this user. After its eviction, the occupied memory space is released, which enables memory sharing in the time domain. Choosing the eviction time is challenging. Evicting too early may cause possible future requests to be missed, whereas evicting too late wastes the storage resource. Likewise, if a file is cached much earlier than its request time, the storage resource is wasted, and if it is cached too late, it may miss user requests, thereby losing the caching gain. In addition, it is inefficient to update memory when the channel remains poor or the spectrum is busy. Hence there is a need to jointly optimize pushing and memory updating, which generalizes the concept of cross-layer design as both wireless links and memories are involved. Such communication-storage coordination becomes very challenging with preference skewness, radio environment dynamics, and coded caching/prefetching.

3. Request Time Prediction: Beyond Content Popularity

Request time prediction is potentially highly beneficial in proactive caching. Unfortunately, conventional popularity-based models, either static or time-varying, are content-specific. They mainly focus on the content popularity distribution among users.

3.1. Characterization of Random Request Time

Request time prediction relies on the fact, also observed in [4], that a content item is usually requested by a user at most once. We set a content item’s generation time to be the time origin. The item can be requested by a user at a random time after its generation, denoted by $X$, also referred to as the request delay. If it is never requested by the user, we regard the request delay to be $X = 0^-$. Otherwise, the user will ask for it at $X \ge 0$. The accurate request delay $X$ can hardly be predicted, but its probability density function (p.d.f.), denoted by $p(x)$, is predictable. We shall refer to $p(x)$ as the statistical request delay information (RDI), which characterizes our prediction about the request time [11].

RDI provides more knowledge than demand probability and popularity, because we can obtain a user’s demand probability $\alpha$ for a content item from its RDI, i.e., $\alpha = \int_0^{\infty} p(x)\,dx$. Further, if we assign lower indices $i$ and $k$ to indicate users and content items respectively, the popularity of item $k$ can be characterized by $N_k / \sum_{k'} N_{k'}$, in which $N_k = \sum_i \alpha_{ik}$ represents the expected total number of requests for item $k$.
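The relations above can be checked numerically. The sketch below assumes a purely hypothetical RDI of the form p(x) = α·λ·exp(−λx) for x ≥ 0 (the remaining probability 1 − α corresponds to the item never being requested) and reads the popularity of item k as N_k normalized by the total over all items. The density shape, the parameter values, and all function names are assumptions made for illustration.

```python
import math

def rdi_density(x: float, alpha: float, rate: float) -> float:
    """Hypothetical RDI: p(x) = alpha * rate * exp(-rate * x) for x >= 0."""
    return alpha * rate * math.exp(-rate * x) if x >= 0 else 0.0

def demand_probability(alpha: float, rate: float,
                       horizon: float = 200.0, steps: int = 200_000) -> float:
    """Approximate the integral of p(x) over [0, inf) by the trapezoidal rule.

    The upper limit is truncated at `horizon`, where the density is negligible.
    """
    dx = horizon / steps
    total = 0.5 * (rdi_density(0.0, alpha, rate)
                   + rdi_density(horizon, alpha, rate))
    for n in range(1, steps):
        total += rdi_density(n * dx, alpha, rate)
    return total * dx

def expected_requests(alpha_matrix):
    """N_k = sum over users i of alpha_ik."""
    num_items = len(alpha_matrix[0])
    return [sum(row[k] for row in alpha_matrix) for k in range(num_items)]

def popularity(alpha_matrix):
    """Normalized popularity N_k / sum over all items of N_k'."""
    n = expected_requests(alpha_matrix)
    total = sum(n)
    return [n_k / total for n_k in n]

# alpha_matrix[i][k]: demand probability of user i for item k (made-up values).
alpha_matrix = [
    [0.9, 0.2, 0.1],
    [0.6, 0.4, 0.0],
]

alpha_recovered = demand_probability(alpha=0.3, rate=0.5)
n_k = expected_requests(alpha_matrix)
pop = popularity(alpha_matrix)
```

Integrating the assumed density recovers the demand probability it was built from, and the popularity vector sums to one by construction.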

3.2. RDI Estimation Methods

Artificial Intelligence (AI) and big data technologies provide powerful tools for understanding user behaviors in the time domain [21][22][23]. A time-varying popularity prediction for video clips can be found in [24][25], in which real data from YouTube and Facebook are used. In practice, the request time is also affected by one’s environments, activities, social connections, etc. For instance, one tends to watch video clips to kill time in the subway or during leisure time, but internet surfing is strictly prohibited while driving. Consequently, user-specific prediction brings together human behavior analysis, natural language processing (NLP), social networks, etc., leading to many cross-disciplinary research opportunities that include but are not limited to:

- Learning a user’s historical requests and data rating [26][27],

- Exploiting the impact of social networks, recommendation systems, and search engines,

- Discovering relevant content using NLP,

- Analyzing a user’s behaviors, e.g., activities, mobility, and location.
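As one simple illustration of learning from a user's historical requests, an RDI could be estimated from logged request delays with a histogram. This is only a sketch under assumed data: the log format, the bin width, and the function name `estimate_rdi` are hypothetical, and a practical estimator would likely exploit the richer signals listed above.

```python
from collections import Counter

def estimate_rdi(request_delays, num_offered, bin_width=1.0):
    """Histogram estimate of the RDI p(x) for one user.

    request_delays: delays (e.g., in hours) of the items the user requested.
    num_offered: number of items generated in total, requested or not.
    Returns (alpha_hat, density), where density maps each bin start to the
    estimated value of p(x) on that bin.
    """
    if num_offered <= 0:
        raise ValueError("num_offered must be positive")
    counts = Counter(int(d // bin_width) for d in request_delays)
    density = {
        b * bin_width: c / (num_offered * bin_width)
        for b, c in sorted(counts.items())
    }
    # Fraction of offered items that were requested at all.
    alpha_hat = len(request_delays) / num_offered
    return alpha_hat, density

# Made-up log: 5 of 20 offered items were requested, with these delays.
delays = [0.2, 0.7, 1.5, 0.4, 3.1]
alpha_hat, density = estimate_rdi(delays, num_offered=20)
```

Because unrequested items are counted in `num_offered`, the estimated density integrates to the estimated demand probability rather than to one, matching the definition of RDI.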

4. Fundamental Limits of Caching: A Cross-Layer Perspective

4.1. Communication Gains

Proactive caching prolongs the transmission time, which enables many possible energy- and/or spectral-efficient physical layer techniques. We are interested in how a content item is pushed given its RDI and what its EE/SE limit is. Quantitative case studies on the EE of pushing over additive white Gaussian noise (AWGN), multiple-input single-output (MISO), and fading channels are presented in [12][13][14][15,16,17], respectively. A user that tolerates a maximal delay of T seconds may request a content item having B bits. The AWGN channel has a normalized bandwidth and power spectral density of noise.
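For the AWGN case, the benefit of a longer transmission time can be seen from the Shannon capacity formula alone. With unit bandwidth and a noise power spectral density written here as N0 (a symbol assumed for this sketch), sending B bits at a constant rate within T seconds needs power N0·(2^(B/T) − 1), hence energy E(T) = T·N0·(2^(B/T) − 1), which decreases as T grows. The code below evaluates this textbook expression; the numeric values are illustrative, and the cited case studies may rely on more refined models.

```python
def min_energy_awgn(bits: float, duration: float, noise_psd: float = 1.0) -> float:
    """Energy needed to deliver `bits` in `duration` seconds at a constant rate.

    Assumes unit bandwidth and rate = log2(1 + P / noise_psd) bits per second,
    so P = noise_psd * (2 ** (bits / duration) - 1) and E = P * duration.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    power = noise_psd * (2.0 ** (bits / duration) - 1.0)
    return power * duration

B = 8.0  # bits, illustrative
energies = {T: min_energy_awgn(B, T) for T in (1.0, 2.0, 4.0, 8.0)}
# energies == {1.0: 255.0, 2.0: 30.0, 4.0: 12.0, 8.0: 8.0}

# For very large T the energy approaches B * noise_psd * ln(2), about 5.545.
energy_large_t = min_energy_awgn(B, 1e6)
```

Doubling the tolerable delay from 1 s to 2 s cuts the energy from 255 to 30 in these normalized units, which is why prolonging the delay tolerance through caching matters for EE.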

4.2. Memory Costs

As noted previously, caching has to pay a memory cost, which can be reduced by efficiently reusing memory in the time domain. The memory cost is determined by not only how many bits are cached, but also how long they are cached. Memory is wasted if a content item is cached much earlier than it is requested or evicted too late after becoming unpopular. Unfortunately, due to the lack of request time prediction, how to reuse memory efficiently in the time domain has long been ignored.
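One simple way to quantify a memory cost that depends on both size and holding time is to measure it in bit-seconds. The sketch below adopts that measure as an assumption (the function names and numbers are hypothetical) and splits the cost into the part spent waiting for the request and the part spent after it.

```python
def memory_cost(bits: float, cached_at: float, evicted_at: float) -> float:
    """Memory cost in bit-seconds: item size multiplied by holding time."""
    if evicted_at < cached_at:
        raise ValueError("eviction cannot precede caching")
    return bits * (evicted_at - cached_at)

def holding_breakdown(bits, cached_at, requested_at, evicted_at):
    """Split the cost into the part before and the part after the request."""
    before = memory_cost(bits, cached_at, requested_at)
    after = memory_cost(bits, requested_at, evicted_at)
    return before, after

bits = 1_000_000  # a 1 Mbit item, illustrative

# Cached 100 s before the request and evicted 30 s after it.
early = holding_breakdown(bits, cached_at=0, requested_at=100, evicted_at=130)

# Cached 10 s before the request and evicted 1 s after it.
timely = holding_breakdown(bits, cached_at=90, requested_at=100, evicted_at=101)
```

Both schedules serve the same request from the cache, but the second occupies the memory for far fewer bit-seconds, which is the kind of saving that request time prediction is meant to enable.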

Memory scheduling becomes more challenging in the following three scenarios. First, memory-efficient scheduling with coded caching remains open because the hit ratio of coded caching is still unknown. Second, the hit ratio can be increased by dropping less popular items when the memory is full. This makes the eviction policy more complicated [28][24]. Finally, the joint scheduling of memories and wireless links generalizes the concept of cross-layer design by involving both the communication and memory units. Deep learning and deep reinforcement learning are expected to play key roles in dealing with the dynamic nature of user requests and radio environments [29][30][31][33,34,35].
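The second scenario, dropping less popular items when the memory is full, can be sketched with a small fixed-capacity cache. The class below is a hypothetical illustration that assumes a popularity score is available for every item; it is not the eviction policy studied in the cited work.

```python
class PopularityCache:
    """Fixed-capacity cache that drops the least popular item when full."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.items = {}  # item id -> popularity score

    def push(self, item_id: str, popularity: float) -> bool:
        """Try to cache an item; return True if it ends up in the cache."""
        if item_id in self.items or len(self.items) < self.capacity:
            self.items[item_id] = popularity
            return True
        victim = min(self.items, key=self.items.get)
        if self.items[victim] >= popularity:
            return False  # the newcomer is the least popular, so skip it
        del self.items[victim]
        self.items[item_id] = popularity
        return True

    def hit(self, item_id: str) -> bool:
        """Return True if the item is currently cached."""
        return item_id in self.items

cache = PopularityCache(capacity=2)
cache.push("a", 0.5)
cache.push("b", 0.3)
pushed_c = cache.push("c", 0.4)  # evicts "b", the least popular item
pushed_d = cache.push("d", 0.1)  # rejected, less popular than all cached items
```

Even this toy policy couples eviction to the arrival of new items, which hints at why joint scheduling of pushing and eviction is harder than evicting at a fixed time.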

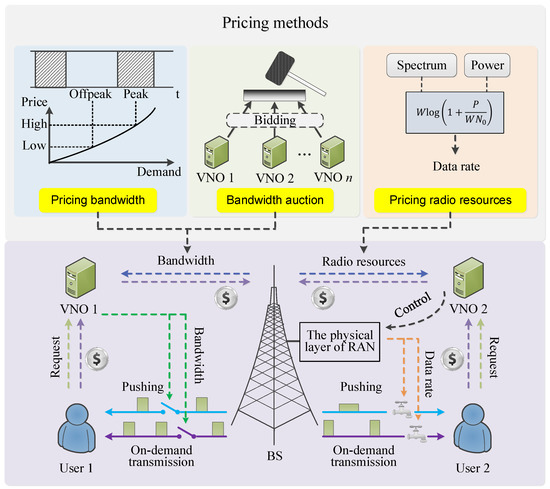

5. Pricing: Creating Incentive for Caching

5.1. Pricing Caching Service Using a Hierarchical Architecture

We conceive a hierarchical architecture with virtual network operators (VNOs) [32][37], as shown in Figure 2. A RAN sells its bandwidth to VNOs, which buy bandwidth to serve their associated users, either by on-demand transmission or caching. If a user cannot find the requested file from the local memory, her or his VNO has to buy bandwidth to serve it. A VNO charges its user for the data volume that the user requests, no matter how a requested file is served.
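A toy calculation may help to see why a VNO has an incentive to cache under this charging rule. The model below is an assumption made for illustration: prices are per bit, the VNO pays one price for bandwidth used on cache misses and a possibly lower price for bandwidth used to push content in advance, and revenue depends only on the requested volume. The parameter names and values are hypothetical.

```python
def vno_profit(requested_bits: float, hit_ratio: float, pushed_bits: float,
               user_price: float, on_demand_price: float,
               push_price: float) -> float:
    """Toy per-user profit of a VNO; all prices are per bit."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must lie in [0, 1]")
    # The user pays for every requested bit, however it is served.
    revenue = user_price * requested_bits
    # Bandwidth bought from the RAN to serve cache misses on demand.
    miss_cost = on_demand_price * (1.0 - hit_ratio) * requested_bits
    # Bandwidth bought from the RAN to push content before it is requested.
    push_cost = push_price * pushed_bits
    return revenue - miss_cost - push_cost

no_caching = vno_profit(requested_bits=100, hit_ratio=0.0, pushed_bits=0,
                        user_price=3.0, on_demand_price=2.0, push_price=0.5)
with_caching = vno_profit(requested_bits=100, hit_ratio=0.5, pushed_bits=120,
                          user_price=3.0, on_demand_price=2.0, push_price=0.5)
# no_caching == 100.0, with_caching == 140.0
```

In this example the VNO pushes more bits than are eventually requested, yet still earns more, because pushed bandwidth is assumed to be cheaper than on-demand bandwidth.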

5.2. Pricing User Cooperation

Though user cooperation plays a central role in caching, selfish users may be unwilling to cooperate. Pricing is an effective tool to motivate user cooperation in various layers.

Caching-oriented pricing should reward users who contribute more memory for caching or private data for request prediction. A user’s hit ratio is increased with more memory used for caching. However, more memory means higher device cost for a user. To reward users contributing more memory for caching, they should enjoy a discount on the telecommunication service fee. On the other hand, the accuracy of request prediction increases with more historical request data or more knowledge about social connections. Sharing these data means more risk in leaking a user’s privacy, with which some users are seriously concerned. To gather more data for request prediction, a lower price should be charged for cooperative users.

5.3. Competition and Evolution

5.4. Pricing Radio Resources, Memory, and Privacy

To fully unlock caching gains, VNOs should be allowed to control the physical layer directly. In particular, a RAN sells its radio resources to VNOs and lets a VNO decide how to use its bought power and spectrum, etc. In this case, VNOs have more freedom and incentive to optimize the SE or EE.

The memory cost should be considered in pricing. Intuitively, the hit ratio is increased if a user allocates more memory for caching, but more memory means a higher device cost paid by this user. Accordingly, users who are willing to contribute more memory to cache more data should be rewarded, e.g., by offering them a discount, as noted previously.

Sharing the infrastructure, each VNO announces its own pricing and reward policy. Each user then chooses her or his favorite VNO based on the willingness to share private data, memory allocation, and her or his own preferences. In this context, mechanism design needs more quantitative study from a game-theoretic perspective.

6. Recommendation: Making RANs More Proactive

6.1. Joint Caching and Recommendation

6.2. After-Request Recommendation and Soft Hit