Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 1 by Giulia Sancesario and Version 2 by Vivi Li.

The recent growth of open data-sharing initiatives collecting lifestyle, clinical, and biological data from Alzheimer's disease (AD) patients has provided a potentially unlimited amount of information about the disease, far exceeding the human ability to make sense of it. Integrating Big Data from multi-omics studies provides the potential to explore the pathophysiological mechanisms of the entire biological continuum of AD. In this context, Artificial Intelligence (AI) offers a wide variety of methods to analyze large and complex data in order to improve knowledge in the AD field.

- Alzheimer’s disease

- diagnosis

- machine learning

- artificial intelligence

1. Introduction

Alzheimer’s disease (AD) is an irreversible neurodegenerative disease that progressively destroys cognitive skills, up to the development of dementia. The clinical diagnosis of AD is based on the presence of objective cognitive deficits (which are, typically, prominent memory impairments). In some cases, AD may show atypical presentations, with impairments in non-amnesic domains (i.e., attention, executive functions, visuo-constructive practice and language) [1]. However, AD shares many common clinical features with other neurodegenerative dementia, including Lewy body dementia [2], frontotemporal disorders [3], and vascular dementia, making early and differential diagnosis difficult, especially in the first stage of the disease [4][5][4,5]. Finally, the occurrence of co-existing pathologies is a common feature in those cases of neurodegenerative diseases that share a common pathogenic mechanism, consisting of extracellular and/or intracellular insoluble fibril aggregates of abnormal misfolded proteins (e.g., the formation of amyloid plaques, tau tangles, or α-synuclein inclusions). In this context, the system biology approach, which aims at the integration of clinical and multi-omics data, can help to detect and recognize the pathophysiological and molecular changes characteristic of AD or other pathologies, as well as the associated clinical manifestations occurring, in particular, in the pre-clinical stages [6][8].

Amyloid plaques and neurofibrillary tangles are the neuropathological hallmarks of the disease [7][8][9,10], which can be evaluated in vivo by neuroimaging investigation and cerebrospinal fluid (CSF) biomarker assessment; namely, considering amyloidβ1-42 (Aβ42), its ratio with amyloid β1-40 (Aβ42/Aβ40), total tau protein (t-tau), and hyperphosphorylated tau (p-tau181).

As AD is multi-factorial, many conditions can influence the individual risk and age of onset, particularly metabolic impairments (diabetes mellitus, hypertension, obesity, and low HDL cholesterol), depression, hearing loss, traumatic brain injury, and alcohol abuse [9][11]. Lifestyle factors such as smoking, low physical activity, and social isolation are potentially modifiable, while several of these may have bi-directional relationships and may be early manifestations, other than risk factors, in the prodromal phase of dementia [10][12]. All of these clinical, biological, socio-demographic, and lifestyle factors contribute to defining the development of the disease and, therefore, are useful in trying to understand the still-misunderstood etiology of AD.

Decades of experimental and clinical research have contributed to identify many mechanisms in the pathogenesis of the disease, such as the β-amyloid hypothesis. Clinical and biological data from electronic health records and multi-omics sciences represent a potentially unlimited amount of information about biological processes, such as genomes, transcriptomes, and proteomes, which can be explored through Big Data exploitation [6][11][8,13]. The rapid collection of data from tens of thousands of AD patients far exceeds the human ability to make sense of the disease.

Complex AI-based models can be successful in extracting meaningful information from Big Data; however, as their complexity increases, it becomes more and more difficult to interpret how they produce their output. Thus, they have been called black-box models. Making AI explainable is a key problem of AI technological development in recent years, and is of pivotal importance in healthcare applications, where both patients and clinicians need to trust research methods to make decisions about people’s health [12][14]. The AD pathology is characterized by high complexity and heterogeneity, and many authors have demonstrated the absence of etiological uniformity and diverse treatment suitability for each patient, supporting the need for an accurate individual diagnosis [13][14][15,16]. In order to make the most of biological experiments and refine their findings, they have to be supported and followed by complex biological modeling, based on mathematical and statistical tools such as Artificial Neural Networks [15][17]. Formalized domain expertise from psychology, neuroscience, neurology, psychiatry, geriatric medicine, biology, and genetics can be integrated with novel analytic approaches from bioinformatics and statistics to be applied on Big Data in AD research projects, with the aim of answering detailed questions through the use of predictive models. These can succeed in answering key questions about promising biomarker combinations, patient sub-groups, and disease progression, finally leading to the development of effective treatment strategies, helping patients with tailored medical approaches [16][18].

In this context, AI technology represents a promising approach to investigate the pathological mechanisms of AD by analyzing such complex data. In this review, we focus on recent findings using AI for AD research and future challenges awaiting its application: Will it be possible to make an early diagnosis of AD with AI? Will AI be able to predict conversion from Mild Cognitive Impairment (MCI) to AD dementia, stratify patients, and identify “malignant” forms with worse disease progression? Finally, will AI be able to predict the course and progression of the disease and help in finding a cure for AD?

1.1. AI and the Biomedical Research

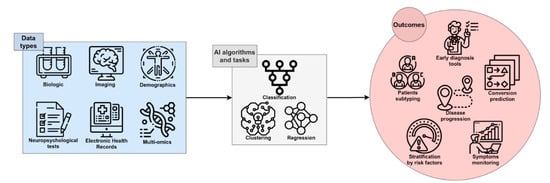

AI has recently significantly revolutionized the way that digital data is analyzed and used. Currently, in some applications, AI is used to perform simple tasks, such as face or speech recognition, and often outperforms human abilities in those tasks [17][19]. This is definitely a great opportunity to be transferred to medical care, due to the potential for fast, low-cost, and accurate automation; for example, in the processing of digital images by AI algorithms [18][20]. Several studies have been performed with the attempt to ameliorate the knowledge of complex multifactorial diseases such as AD: AI exploits the features of Machine Learning (ML) and Deep Learning (DL) to develop algorithms that can be used in the clinical and biomedical fields for the classification and stratification of patients, based on the integration and processing of a large variety of available data sources, including neuroimaging, biochemical markers, clinical, and neuropsychological (NPS) data from patients and controls. An extensive application of AI in the biomedical field is for Computer-Aided Diagnosis (CAD). This kind of application aims to automate the diagnostic process upon data analysis, potentially contributing to reaching an early and differential diagnosis of AD or dementia of different etiology (Figure 1).

Figure 1. The framework of AI in AD research. Single-modality or integrated data can be processed by several algorithms and tasks, leading to useful outcomes for early and accurate diagnosis, prediction of the course and progression of disease, patient stratification, and discovery of novel therapeutic targets and disease-modifying therapies.

ML is a fundamental branch of AI, consisting of a collection of data analysis techniques that aim to generate predictive models by learning from data, progressively improving the ability to make predictions through experience (see glossary; Table 1) [19][21]. DL is a sub-field of ML and uses methods that are able to learn relationships between inputs and outputs by modeling highly non-linear interactions into higher representations, at a more abstract level (see glossary) [20][22]. Moreover, there are two main categories of predictive models based on ML or DL techniques: Supervised and unsupervised. In supervised learning, the algorithms learn from labeled data to associate an input (e.g., cortical thicknesses data) with a specific output (e.g., presence/absence of a disease or neuropsychological test performance), leading to models that are able to predict the output variable. In unsupervised learning, the algorithms learn from unlabeled data with the purpose of identifying clusters among the observations, based on similar features (see glossary); unlike in supervised learning, there are no correct answers and the aim of the algorithm is to discover structures within variables.

Table 1. Glossary.

| Method | Definition | Details |

|---|---|---|

| Machine Learning | A collection of data analysis techniques that aim to generate predictive models by learning from data and improving their ability to make predictions through experience. | ML models are considered shallow learners, working on data with hand-crafted features defined through expert-based knowledge. Raw data must be pre-processed before constructing a ML system, requiring domain expertise to proceed with feature extraction and engineering, in order to train the algorithm appropriately. As an example of a ML algorithm, a Support Vector Machine (SVM) accomplishes the classification task by finding the hyperplane that, in the multi-dimensional feature space, optimally separates the data into two (or more) classes. |

| Deep Learning | A sub-field of ML that uses methods that are able to learn relationships between inputs and outputs by modeling highly non-linear interactions. | DL models are different from shallow learners and can elaborate raw data, thus requiring little or no feature engineering, thanks to their ability to model complex functions and identify relevant aspects in the data distribution. DL algorithms are based on Artificial Neural Networks (ANNs), which are inspired by the human brain and can model very complex functions, identifying important aspects in the features and suppressing irrelevant ones. As an example of a DL algorithm, a Convolutional Neural Network (CNN) is composed of nodes organized into layers. It can take an image as input, elaborate the features of the image through its layers, and assign a class attribution as output, thus differentiating between two or more groups. |

| Supervised learning | A ML task defined through the use of labeled data sets for algorithm training. | The algorithms learn to give the right answer, as defined by the ground truth set, which has labels assigned to the data.An SVM performing the classification task is an example of an algorithm trained by supervised learning. |

| Unsupervised learning | A set of algorithms aimed to discover hidden patterns or data groupings without the need for human intervention. | In unsupervised learning, unlike supervised learning, there are no correct answers and the algorithm’s aim is to discover structures within variables. The algorithms work with unlabeled data.Common unsupervised methods include clustering algorithms and Principal Component Analysis (PCA). |

| Classification task | The algorithm is trained to predict a class label. | A classical example is the classification of patients affected by a disease vs. normal controls. The algorithm learns to associate input data with an output label in a supervised manner, and its results can be evaluated by metrics such as accuracy score. |

| Regression task | The algorithm is trained to predict the value of a continuous variable. | An example is the prediction of hippocampus volume as a numerical quantity. The algorithm learns to associate input data with an output value in a supervised manner, and its results can be evaluated by metrics such as the Root Mean Squared Error (RMSE). |

| Clustering | Clustering consists of partitioning a data set, in order to find a grouping of the data points. | Clustering is one of the most important unsupervised learning techniques. Its main goal is to reveal sub-groups within heterogeneous data, in such a way that greater homogeneity is shown within clusters (rather than between clusters). Clustering algorithms can lead to the identification of patterns across subjects or patients that are difficult to find even for an expert clinician. |

| Overfitting | Overfitting occurs when the model is too dependent on training data to make accurate predictions on test data. | When a model is overfitted, its learned ability to separate between two classes does not generalize well to data it has never seen before, therefore limiting its usability for real-world applications. |

| Ensemble learning | Model ensembling consists of combining multiple ML models, in order to obtain better predictive performance than any of the constituents alone. | A single model alone can be weak in generating predictions. Combining multiple models can compensate for their individual weaknesses. |

| Transfer learning | A supervised learning technique in which the knowledge previously acquired from the model in one task is used to solve related ones. | In a transfer learning approach, the model is first pre-trained on a source task, then re-trained and tested on a target task. The source task should be related to the target task, with similar relations between the input and output data. In fact, in the pre-training phase, the model gains helpful knowledge for the target task. |

| Cox regression | The Cox proportional hazards model is a regression technique for investigating the association between the time of an event occurring and one or more predictor variables. | Cox regression gives hazard rates as measures of how factors influence the risk for an event occurrence (outcome), be it death or infection. |

AI techniques have found a wide range of applications in the clinical and biomedical fields, in an effort to automate, standardize, and improve the accuracy of early prediction (regression task), classification of patients (classification task), or the stratification of subjects, based on the processing of specific data (see glossary). In a classification task, the algorithm is trained to associate a label (e.g., “AD diagnosis”) to a given set of features (e.g., clinical parameters, cognitive status, genotypes, biochemical markers, imaging, and so on), in order to be able to generate predictions. Once the model is ready, it is able to predict a class, defined by a label (e.g., “AD” or “control”), by analyzing the set of features of a new given example. The regression task instead involves predicting the value of a variable (e.g., “hippocampus volume” or “biomarker level”) measured on a continuous scale.

Despite ample research effort, we still do not have a cure capable of modifying and/or halting the course of the disease. Some clinical trials are ongoing, especially with the use of monoclonal antibodies targeting Aβ peptides, modified Aβ species, and monomeric as well as aggregated oligomers, which have shown to be safe and have clinical efficacy in AD patients [21][23]. However, AI pipelines can be applied in automatic compound synthesis in order to analyze the literature and high-throughput compound screening data, to perform an initial molecular screening and automated chemical synthesis [22][24]. By updating the AI model after cell- or organoid-based experiments, AI can be used to propose a new molecular optimization plan and new bioassays can be conducted to evaluate the biological effects of the compound, thus enabling an automated drug development cycle based on AI design and high-throughput bioassay, greatly accelerating the development of new drugs [23][25]. AI technology can be used to repurpose known drugs for treatment of Alzheimer’s disease [22][24][25][26][24,26,27,28]. This is a fast, low-cost drug development pathway, in which AI is used to predict drug repurposing by analyzing large-scale transcriptomics, molecular structure data, and clinical databases. Finally, AI can be exploited to simplify clinical trials too, both in the design and implementation phase [22][24]. Participant selection can be optimized by using AI algorithms on genetic and clinical data, thus predicting which subset of the population may be sensitive to new drugs [27][29]. Notably, coupling AI with data from wearables enables almost real-time non-invasive diagnostics, potentially preventing drop-out at subject level [28][30]. Although promising and rapidly growing, only few of these AI applications have made it to the clinical application stage; nonetheless, AI represents a promising technology to support research and, finally, to develop novel effective therapies [22][29][24,31].

1.2. Public Databases and Biobanks

The application of AI-based techniques for AD and other diseases research requires extensive data sets, composed of hundreds to thousands of entries describing subjects over many clinical and biological variables, which can be employed to develop novel algorithms by analyzing the features of the disease. In the last 20 years, many open data-sharing initiatives have grown in the field of neurodegenerative disease research [30][32]; and, in particular, in AD research. Some important data-sharing resources are the Alzheimer’s Disease Genetics Consortium (ADGC, www.adgenetics.org, accessed on 30 May 2021 Date Month Year), Alzheimer’s Disease Sequencing Project (ADSP, www.niagads.org/adsp/content/home accessed on 30 May 2021), Alzheimer’s Disease Neuroimaging Initiative (ADNI, http://adni.loni.usc.edu/ accessed on 30 May 2021), AlzGene (www.alzgene.org accessed on 30 May 2021), Dementias Platform UK (DPUK, https://portal.dementiasplatform.uk/ accessed on 30 May 2021), Genetics of Alzheimer’s Disease Data Storage Site (NIAGADS, www.niagads.org/ accessed on 30 May 2021), Global Alzheimer’s Association Interactive Network (GAAIN, www.gaain.org/ accessed on 30 May 2021), and National Centralized Repository for Alzheimer’s Disease and Related Dementias (NCRAD, https://ncrad.iu.edu accessed on 30 May 2021) [31][32][33,34]. Such public databases and repositories collect biological specimens and data from clinical and cognitive tests; lifestyle, neuroimages; genetics; and CSF and blood biomarkers from normal, cognitively impaired, or demented individuals, which can be combined to apply cutting-edge ML algorithms. Moreover, the National Alzheimer’s Coordinating Center (NACC) has constructed a large relational database for both exploratory and explanatory AD research, by use of standardized clinical and neuropathological research data [33][35]; DementiaBank, the component of TalkBank dedicated to data on language in dementia, provides data sets from verbal tasks such as the Pitt corpus, which contain audio files and text transcriptions from AD subjects and controls [34][35][36,37].

In the so-called “Omics era”, several databases of omics data—not limited to or specific for neurodegenerative diseases—have been established, such as the Gene Expression Omnibus (GEO), which collects functional genomics data of array- and sequence-based data regarding many physiological and pathological conditions, including AD, among others [36][38]. Finally, UK Biobank collects and stores healthcare databases and associated biological specimens for a wide range of health-related outcomes from a large prospective study including over 500,000 participants [37][39].

In the AD field, public and private databases represent the substrate and the source for AI to facilitate a more comprehensive understanding of disease heterogeneity, as well as personalized medicine and drug development.

So far, the first CAD tools for AD were constructed through the use of AI methods for the analysis of brain imaging [45][46][46,47]. Analyzing MRI data from the OASIS database [47][48], a feature extraction and selection method called “eigenbrain”, which is carried out using PCA (see glossary), was used to capture the characteristic changes of anatomical structures between AD and NC; namely, severe atrophy of the cerebral cortex, enlargement of the ventricles, and shrinkage of the hippocampus. The applied SVM algorithm achieved a mean accuracy of 92.36% for an automated classification system of AD diagnosis, based on MRI data [48][49]. For instance, by using FDG-PET of the brain, a DL algorithm for the early prediction of AD was developed, achieving 82% specificity and 100% sensitivity at an average of 75.8 months prior to the final diagnosis [49][50].

The majority of ML models for classifying AD from NC are trained with neuroimaging data, which have the advantage of high accuracy [50][51], but limitations associated to their high cost and lack of diffusion in non-specialized centers. A large group of studies have focused on the identification of fluid marker panels as potential screening tests. In addition to Aβ- and tau-related biomarkers, novel candidate markers according to other mechanisms of AD pathology have been investigated in experimental and meta-analysis studies, in order to optimize the predictive modeling.

A recent study has applied ML algorithms to evaluate data on novel biomarkers that were available on PubMed, Cochrane Systematic Reviews, and Cochrane Collaboration Central Register of Controlled Clinical Trials databases. Experimental or review studies have investigated biomarkers for dementia or AD using ML algorithms, including SVM, logistic regression, random forest, and naïve Bayes. The panel included indices of synaptic dysfunction and loss, neuroinflammation, and neuronal injury (e.g., neurofilament light; NFL). An algorithm, developed by integrating all the data from such fluid biomarkers, has been shown to be capable of accurately predicting AD, thus achieving state-of-the-art results [51][52].

To the end of designing a blood-based test for identifying AD, the European Medical Information Framework for Alzheimer’s disease biomarker discovery cohort conducted a study using both ML and DL models. Data used for modeling included 883 plasma metabolites assessed in 242 cognitively normal individuals and 115 patients with AD-type dementia, and demonstrated that the panel of plasma markers had good discriminatory power and have the potential to match the AUC of well-established AD CSF biomarkers. Finally, the authors concluded that it can be commonly included in clinical research as part of the diagnostic work-up, with an AUC in the range of 0.85–0.88 [52][53].

Moreover, AI has the potential to integrate data obtained through the use of new technologies, such as devices designed for the evaluation of language and verbal fluency or executive functions in healthy or mildly impaired individuals. A system for acoustic feature extraction over speech segments in AD patients was developed, by analyzing data from DementiaBank’s Pitt corpus data set [34][35][36,37]. The acoustic features of patient speeches have the advantage of being cost-effective and non-invasive, compared to imaging or blood biomarkers, and can be integrated to develop screening tools for MCI and AD. Moreover, another system has exploited a digital pen to record drawing dynamics, which can detect slight signs of mild impairment in asymptomatic individuals [53][54]. The ability of this pen was evaluated in patients performing the Clock Drawing Test (CDT), which allowed for the identification of subtle to mild cognitive impairment, with an inexpensive and efficient tool having promising clinical and pre-clinical applications.

So far, the first CAD tools for AD were constructed through the use of AI methods for the analysis of brain imaging [45][46][46,47]. Analyzing MRI data from the OASIS database [47][48], a feature extraction and selection method called “eigenbrain”, which is carried out using PCA (see glossary), was used to capture the characteristic changes of anatomical structures between AD and NC; namely, severe atrophy of the cerebral cortex, enlargement of the ventricles, and shrinkage of the hippocampus. The applied SVM algorithm achieved a mean accuracy of 92.36% for an automated classification system of AD diagnosis, based on MRI data [48][49]. For instance, by using FDG-PET of the brain, a DL algorithm for the early prediction of AD was developed, achieving 82% specificity and 100% sensitivity at an average of 75.8 months prior to the final diagnosis [49][50].

The majority of ML models for classifying AD from NC are trained with neuroimaging data, which have the advantage of high accuracy [50][51], but limitations associated to their high cost and lack of diffusion in non-specialized centers. A large group of studies have focused on the identification of fluid marker panels as potential screening tests. In addition to Aβ- and tau-related biomarkers, novel candidate markers according to other mechanisms of AD pathology have been investigated in experimental and meta-analysis studies, in order to optimize the predictive modeling.

A recent study has applied ML algorithms to evaluate data on novel biomarkers that were available on PubMed, Cochrane Systematic Reviews, and Cochrane Collaboration Central Register of Controlled Clinical Trials databases. Experimental or review studies have investigated biomarkers for dementia or AD using ML algorithms, including SVM, logistic regression, random forest, and naïve Bayes. The panel included indices of synaptic dysfunction and loss, neuroinflammation, and neuronal injury (e.g., neurofilament light; NFL). An algorithm, developed by integrating all the data from such fluid biomarkers, has been shown to be capable of accurately predicting AD, thus achieving state-of-the-art results [51][52].

To the end of designing a blood-based test for identifying AD, the European Medical Information Framework for Alzheimer’s disease biomarker discovery cohort conducted a study using both ML and DL models. Data used for modeling included 883 plasma metabolites assessed in 242 cognitively normal individuals and 115 patients with AD-type dementia, and demonstrated that the panel of plasma markers had good discriminatory power and have the potential to match the AUC of well-established AD CSF biomarkers. Finally, the authors concluded that it can be commonly included in clinical research as part of the diagnostic work-up, with an AUC in the range of 0.85–0.88 [52][53].

Moreover, AI has the potential to integrate data obtained through the use of new technologies, such as devices designed for the evaluation of language and verbal fluency or executive functions in healthy or mildly impaired individuals. A system for acoustic feature extraction over speech segments in AD patients was developed, by analyzing data from DementiaBank’s Pitt corpus data set [34][35][36,37]. The acoustic features of patient speeches have the advantage of being cost-effective and non-invasive, compared to imaging or blood biomarkers, and can be integrated to develop screening tools for MCI and AD. Moreover, another system has exploited a digital pen to record drawing dynamics, which can detect slight signs of mild impairment in asymptomatic individuals [53][54]. The ability of this pen was evaluated in patients performing the Clock Drawing Test (CDT), which allowed for the identification of subtle to mild cognitive impairment, with an inexpensive and efficient tool having promising clinical and pre-clinical applications.

Different data modalities reflect the AD-related pathological markers that are complementary to each other, which can be concatenated as multi-modal features as input to an ML model for classification [74][75][76][75,76,77]. Notwithstanding, the modality with the larger number of features may weigh more than the others when training the algorithm, inducing bias in the interpretation. In order to overcome this limitation and extract multi-modal feature representations, DL architectures can be used, which do not need feature engineering, due to their ability to non-linearly transform input variables [77][78].

A DL model for both MCI-to-AD prediction and AD vs. NC classification was trained on data from the ADNI database, including demographic, NPS and genetic data, APOE polymorphism, and MRI. The model processed all the data in a multi-modal feature extraction phase, aiming to combine all data together and obtain a classification output. The AD vs. NC classification task achieved by this model reached performances close to 100%, as expected; whereas, for the MCI-to-AD prediction task, the AUC and accuracy were 0.925 and 86%, respectively [54][55].

Some models transferred the knowledge in performing AD vs. NC classification to a prediction task—that is, MCI-to-AD conversion—using transfer learning methodology (see glossary). A system with the highest capacity of discriminating AD from NC by analyzing three-dimensional MRI data was recently tested for MCI-to-AD prediction, reaching a high accuracy (82.4%) and AUC (0.83). This finding demonstrated that information from related domains can help AI to solve tasks targeted at the identification of patients at risk of developing AD-related dementia [55][56].

An interpretation system was embedded with a classification model for both early diagnosis of AD and MCI-to-AD prediction, in order to increase the impact in clinical practice. This model integrates 11 data modalities from ADNI, including NPS tests, neuroimaging, demographics, and electronic health records data (e.g., laboratory blood test, neurological exam, and clinical symptom data). The model outputs a sentence in natural language, explaining the involvement of attributes in the model’s classification output. The model achieved a good performance while balancing the accuracy–interpretability trade-off in both AD classification and MCI-to-AD prediction tasks, allowing for actionable decisions that can enhance physician confidence, contributing to the realization of explainable AI (XAI) in healthcare [78][79].

Most of the AI models for AD are mainly built on biomarkers such as brain imaging, often with the use of Aβ and tau ligands, Aβ- or tau-PET, as well as biomarkers in CSF, which have high accuracy and predictive value; however, their invasive nature, high cost, and limited availability restrict their use to highly specialized centers [79][80][81][82][80,81,82,83]. A possible turning point has emerged with the recent development of ultra-sensitive methods for the detection of brain-derived proteins in blood, making it possible to measure NFL [83][84], Aβ42, and Aβ40 [84][85][85,86], and tau and P-tau in plasma [86][87][87,88]. The accuracy of plasma P-tau combined with other non-invasive biomarkers for predicting future AD dementia was recently evaluated in patients with mild cognitive symptoms from ADNI and the Swedish BioFINDER cohort, including patients with repeated examinations and clinical assessments over a period of 4 years to ensure a clinical diagnosis (https://biofinder.se/ accessed on 30 May 2021). The prediction included not only the discrimination between progression to AD dementia and stable cognitive symptoms, but also versus progression to other forms of dementia. The accuracy of plasma biomarkers was compared with corresponding markers in CSF, and with the diagnostic prediction of expert physicians in memory clinics, based on the assessment at baseline of extensive clinical assessments, cognitive testing, and structural brain imaging [87][88]. Plasma P-tau in combination with the other non-invasive markers showed a higher value in predicting AD dementia within 4 years, with respect to clinical-based prediction (AUC of 0.89–0.92 and 0.72, respectively). In addition, the biomarker combination showed similarly high predictive accuracy in both plasma and CSF, making plasma an effective alternative to CSF, thus providing a tool to improve the diagnostic potential in clinical practice [87][88].

Different data modalities reflect the AD-related pathological markers that are complementary to each other, which can be concatenated as multi-modal features as input to an ML model for classification [74][75][76][75,76,77]. Notwithstanding, the modality with the larger number of features may weigh more than the others when training the algorithm, inducing bias in the interpretation. In order to overcome this limitation and extract multi-modal feature representations, DL architectures can be used, which do not need feature engineering, due to their ability to non-linearly transform input variables [77][78].

A DL model for both MCI-to-AD prediction and AD vs. NC classification was trained on data from the ADNI database, including demographic, NPS and genetic data, APOE polymorphism, and MRI. The model processed all the data in a multi-modal feature extraction phase, aiming to combine all data together and obtain a classification output. The AD vs. NC classification task achieved by this model reached performances close to 100%, as expected; whereas, for the MCI-to-AD prediction task, the AUC and accuracy were 0.925 and 86%, respectively [54][55].

Some models transferred the knowledge in performing AD vs. NC classification to a prediction task—that is, MCI-to-AD conversion—using transfer learning methodology (see glossary). A system with the highest capacity of discriminating AD from NC by analyzing three-dimensional MRI data was recently tested for MCI-to-AD prediction, reaching a high accuracy (82.4%) and AUC (0.83). This finding demonstrated that information from related domains can help AI to solve tasks targeted at the identification of patients at risk of developing AD-related dementia [55][56].

An interpretation system was embedded with a classification model for both early diagnosis of AD and MCI-to-AD prediction, in order to increase the impact in clinical practice. This model integrates 11 data modalities from ADNI, including NPS tests, neuroimaging, demographics, and electronic health records data (e.g., laboratory blood test, neurological exam, and clinical symptom data). The model outputs a sentence in natural language, explaining the involvement of attributes in the model’s classification output. The model achieved a good performance while balancing the accuracy–interpretability trade-off in both AD classification and MCI-to-AD prediction tasks, allowing for actionable decisions that can enhance physician confidence, contributing to the realization of explainable AI (XAI) in healthcare [78][79].

Most of the AI models for AD are mainly built on biomarkers such as brain imaging, often with the use of Aβ and tau ligands, Aβ- or tau-PET, as well as biomarkers in CSF, which have high accuracy and predictive value; however, their invasive nature, high cost, and limited availability restrict their use to highly specialized centers [79][80][81][82][80,81,82,83]. A possible turning point has emerged with the recent development of ultra-sensitive methods for the detection of brain-derived proteins in blood, making it possible to measure NFL [83][84], Aβ42, and Aβ40 [84][85][85,86], and tau and P-tau in plasma [86][87][87,88]. The accuracy of plasma P-tau combined with other non-invasive biomarkers for predicting future AD dementia was recently evaluated in patients with mild cognitive symptoms from ADNI and the Swedish BioFINDER cohort, including patients with repeated examinations and clinical assessments over a period of 4 years to ensure a clinical diagnosis (https://biofinder.se/ accessed on 30 May 2021). The prediction included not only the discrimination between progression to AD dementia and stable cognitive symptoms, but also versus progression to other forms of dementia. The accuracy of plasma biomarkers was compared with corresponding markers in CSF, and with the diagnostic prediction of expert physicians in memory clinics, based on the assessment at baseline of extensive clinical assessments, cognitive testing, and structural brain imaging [87][88]. Plasma P-tau in combination with the other non-invasive markers showed a higher value in predicting AD dementia within 4 years, with respect to clinical-based prediction (AUC of 0.89–0.92 and 0.72, respectively). In addition, the biomarker combination showed similarly high predictive accuracy in both plasma and CSF, making plasma an effective alternative to CSF, thus providing a tool to improve the diagnostic potential in clinical practice [87][88].

2. AI for AD Diagnosis

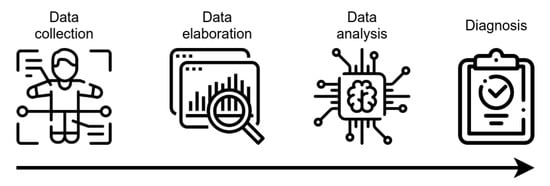

AI technology, mainly ML algorithms, can handle high-dimensional complex systems that exceed the human capacity of data analysis. ML has been used in the CAD of many pathologies, including AD, by combining electronic medical records, NPS tests, brain imaging, and biological markers, together with data obtained by novel developed tools (e.g., wearable sensors) for the assessment of executive functions (Figure 2). Magnetic Resonance Imaging (MRI), Positron Emission Tomography (PET), 18F-fluorodeoxyglucose-Positron Emission Tomography (FDG-PET), and Diffusion Tensor Imaging (DTI) provide detailed information about the brain structure and functionality, allowing for the identification of features supporting the diagnosis, such as atrophy, amyloid deposition, or microstructural damages [38][39][40,41]. Moreover, neuroimages can discriminate pathological processes not due to AD that can lead to cognitive decline (e.g., brain tumors or cerebrovascular disease). Several studies have demonstrated that markers of primary AD pathology (CSF Aβ1-42, total tau and p-tau181, amyloid-PET), neurodegeneration (structural MRI, FDG-PET), or biomarker combinations can be integrated into complex tools for diagnostic or predictive purposes [40][41][42][43][6,42,43,44]. Of interest, polymorphism in the apolipoprotein E (APOE) gene is the strongest genetic risk factor in the sporadic form of AD, which has an added predictive value, with the APOEε4 allele conferring an increased risk of early age of onset, while the APOEε2 allele confers a decreased risk, relative to the common APOEε3 allele [44][45].

Figure 2. Schematic representation of CAD tools functioning. After collection, data are elaborated, in order to be made ready for the analysis using AI-based techniques. The outcoming result is the assignment of a class, with potential value for diagnostic evaluation.

3. Prediction of MCI-to-AD Conversion

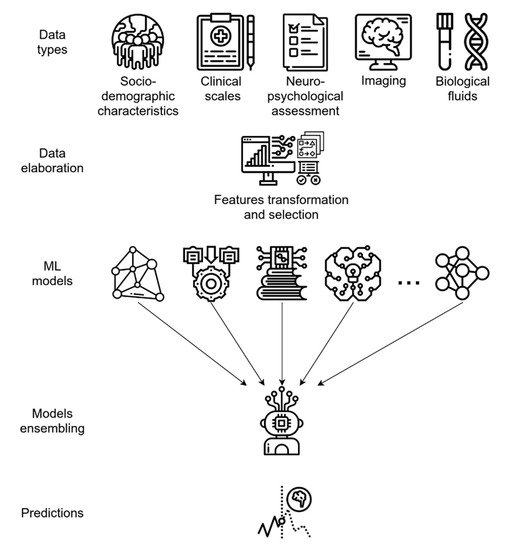

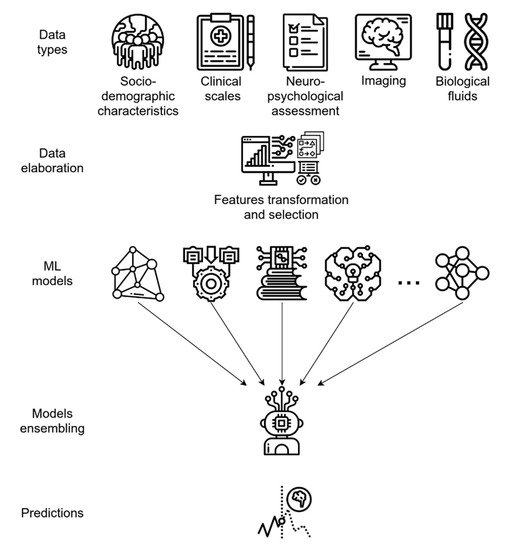

Diagnosing probable AD in a subject with moderate–severe cognitive decline or evidence of cortex atrophy is usually not difficult for a skilled neurologist when appropriate data are available. Therefore, it is not surprising for an AI model to solve the task of AD vs. NC subject classification with high accuracy, when taking into account NPS test results or neuroimaging data [54][55]. To date, several predictive models have been developed [54][55][56][57][58][59][60][61][62][63][64][65][66][67][68][69][70][71][55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72], yielding peak accuracy values of 100% in AD vs. NC classification [54][55]. In contrast, a much more challenging task for AI is to identify individuals with subjective or mild impairment who will develop AD dementia, with respect to stable MCI or MCI not due to AD, given the shaded differences and the overlapping symptoms in the clinical or biological variables defining these groups in the early phases [72][73]. Algorithms designed to predict MCI-to-AD conversion aim to classify MCI patients into two groups: those who will convert to AD (MCI-c) within a certain time frame (usually 3 years) and those who will not convert (MCI-nc). Yearly, about 15% of MCI patients convert to AD [59][73][60,74] and, thus, early and timely identification is crucial, in order to ameliorate the outcome and slow the progression of the disease. Several AI-based models test the accuracy of combinations of non-invasive predictors, as well as socio-demographic and clinical data, in order to develop effective screening or predictive tools. By using the ADNI data set, socio-demographic characteristics, clinical scale ratings, and NPS test scores have been used to train different supervised ML algorithms and, finally, develop an ensemble model utilizing them (see glossary). This ensemble learning application demonstrated a high predictive performance, with an AUC of 0.88 in predicting MCI-to-AD conversion [61][62], and has the advantage of using only non-invasive and easily collectable predictors, rather than neuroimaging or CSF biomarkers, thus enhancing its potential use and diffusion in clinical practice. As for CAD systems, both MRI and PET data can be independently modeled by ML algorithms, yielding good predictive accuracy; however, integrating neuroimaging data with other variables, such as cognitive measures, genetic factors, or biochemical changes, can significatively enhance the model performance, as is generally expected when integrating multi-modal data (Figure 3) [30][63][65][70][71][32,64,66,71,72]. For example, the integration of MRI with multiple modality data, such as PET, CSF biomarkers, and genomic data, reached 84.7% accuracy in an MCI-c vs. MCI-nc classification task. When only single-modality data was used, the accuracy of the model was lower than the all-modalities implementation [63][64].

Figure 3. Predictive ML ensemble method for the conversion of MCI to AD based on multi-modal data (i.e., socio-demographics, clinical, NPS, biological fluids, and imaging data). The system uses a feature transformation and selection phase followed by data integration, allowing for more efficient use of variables. The final ensemble of several different ML models provides accurate final predictions of AD or AD conversion.