| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Madan Mohan Gupta | + 2612 word(s) | 2612 | 2021-05-08 11:00:56 |
| 2 | Karina Chen | Meta information modification | 2612 | 2021-05-13 03:57:53 |
| 3 | Karina Chen | Meta information modification | 2612 | 2021-07-01 04:22:00 |
| 4 | Catherine Yang | Meta information modification | 2612 | 2021-09-30 03:43:19 |


The emergence and global spread of COVID-19 have disrupted the traditional mechanisms of education throughout the world. Institutions of learning were caught unprepared, and this jeopardised the face-to-face method of curriculum delivery and assessment. Teaching institutions have shifted to an asynchronous mode whilst attempting to preserve the principles of integrity, equity, inclusiveness, fairness, ethics, and safety. A framework of assessment that enables educators to utilise appropriate methods in measuring a student’s progress is crucial for the success of teaching and learning, especially in health education that demands high standards and comprises consistent scientific content.

1. Assessment in a Synchronous and Asynchronous Environment

Assessment can be conducted in a synchronous or an asynchronous environment. Assessment in a synchronous environment is conducted in real time and can be face-to-face or online, whereas in an asynchronous environment the interaction does not take place in real time and can be virtual or via any other mode. The differences between synchronous and asynchronous environment assessment are given in Table 1.

Table 1. Difference between synchronous and asynchronous environment assessment.

| Synchronous | Asynchronous |
|---|---|
| Real-time, time-bound | Anytime, with flexible timing |
| Less time available for the student to respond to the question | More time available for the student to respond to the question |
| Exam at one location | Exam at multiple locations, which is more convenient for the student |

During COVID-19, social distancing has become the “new normal” and it is difficult to conduct synchronous examinations face-to-face because they are complex and require significant infrastructural development. Although this is challenging in the immediate pandemic period, many modifications are being tested. This has led to innovations in teaching, learning, and assessment such that asynchronous learning, interactive visuals, or graphics can play a significant role in stimulating the learning process to reach higher-order thinking.

The asynchronous method allows more flexibility regarding time and space. Several online assessment methods are flexible, where the interaction of participants may not occur at the same time. The asynchronous online examination can offer a practicable solution for a fair assessment of the students by providing complex problems where the application of theoretical knowledge is required. The structure allows adequate time for research and response and is a suitable method of assessment in the present situation. In light of COVID-19, the conventional assessment appears far from feasible and we are left with little choice but to implement the online asynchronous assessment methods [1][2][3].

2. Development of Assessment for an Asynchronous Environment

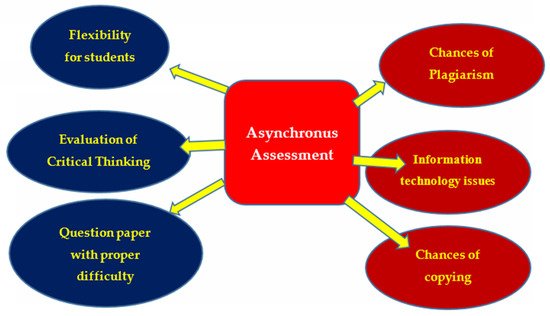

Assessment in an asynchronous environment can be given to the students by posting the material online and allowing students the freedom to research and complete the assignment within the allotted period. It broadens the assessment possibilities and offers the teacher an opportunity to explore innovative tools because it resembles an open-book examination; however, it suffers from the drawbacks of plagiarism and copying, especially in mathematical subjects. There are also information technology issues, such as software availability and internet connectivity (Figure 1) [2][4][5].

Figure 1. Asynchronous assessment method.

In medical education, asynchronous assessment modalities should require the application of theoretical knowledge as well as critical thinking while interpreting clinical data. These could be realised by case studies and problem-based questions.

A valid, fair, and reliable asynchronous assessment method can only be designed and developed by considering the level of target students, curricular difficulty, and the pattern of knowledge, skill, and competence levels to be assessed. The level of target students refers to the target group, i.e., whether the examination paper is intended for undergraduate or postgraduate students. For undergraduate students, the knowledge tested in an exam paper will depend on their year of study. Students in the first year of study will be assessed on theoretical knowledge, while final year students will be faced with questions that require more clinical-based knowledge.

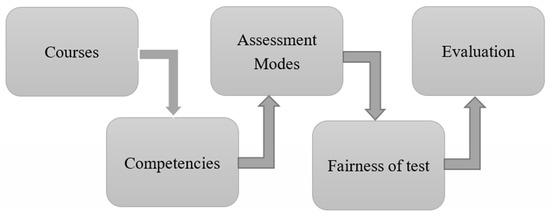

The validation of the assessment method should be done on a trial basis before it is approved for implementation. Evaluation should be conducted based on item analysis of student performance and the difficulty index of questions to differentiate excellent, good, and poor students [6][3][5]. For asynchronous assessment, the teacher utilises various tools to diagnose the knowledge, skill, and competence of students; some of these modalities include open-ended questions, problem-based questions, virtual OSCE (Objective Structured Clinical Examination), and an oral examination. A well-designed course with competencies and measurable learning outcomes helps determine the modes of assessment. Upon choosing a mode of assessment, the questions are developed considering the criteria of reliability, validity, and accuracy. After the questions are prepared and administered to the students, the final step is to evaluate their performance (Figure 2) [4][5][7].

Figure 2. Online assessment and its parameters.
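The item analysis mentioned above can be illustrated with a short sketch. The text does not state which formulas were used, so the code below assumes the conventional definitions: the difficulty index is the proportion of students answering an item correctly, and the discrimination index is the difference in that proportion between the highest-scoring and lowest-scoring groups (27% of the cohort each, a common but not universal choice). The function names and the sample scores are hypothetical and serve only as an illustration.

```python
from typing import Sequence


def difficulty_index(item_scores: Sequence[int]) -> float:
    """Proportion of students who answered the item correctly (1 = correct, 0 = incorrect)."""
    if not item_scores:
        raise ValueError("item_scores must not be empty")
    return sum(item_scores) / len(item_scores)


def discrimination_index(
    item_scores: Sequence[int],
    total_scores: Sequence[float],
    fraction: float = 0.27,  # assumed size of the upper and lower groups
) -> float:
    """Proportion correct in the upper group minus proportion correct in the lower group."""
    if len(item_scores) != len(total_scores):
        raise ValueError("item_scores and total_scores must have the same length")
    n = len(item_scores)
    if n == 0:
        raise ValueError("scores must not be empty")
    group_size = max(1, round(n * fraction))
    # Rank students by their total exam score, lowest first.
    order = sorted(range(n), key=lambda i: total_scores[i])
    lower = order[:group_size]
    upper = order[-group_size:]
    p_upper = sum(item_scores[i] for i in upper) / group_size
    p_lower = sum(item_scores[i] for i in lower) / group_size
    return p_upper - p_lower


# Hypothetical data: one item, ten students.
item = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
totals = [95, 88, 82, 75, 70, 64, 58, 51, 45, 30]

print("difficulty index:", difficulty_index(item))
print("discrimination index:", round(discrimination_index(item, totals), 3))
```

An item answered correctly by nearly all or nearly no students, or one with a discrimination index close to zero, does little to separate excellent, good, and poor students and may be a candidate for revision during the trial phase.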

2.1. Open-Ended Questions

Open-ended questions are useful when a teacher wants insight into the learner’s view and to gather a more elaborate response about the problem (instead of a “yes” or “no” answer). The response to open-ended questions is dynamic and allows the student to express their answer with more information or new solutions to the problem. An open-ended question allows the teacher to check the critical thinking power of the students by applying why, where, how, and when type questions that encourage critical thinking. The teacher is open to and expects different possible solutions to a single problem with justifiable reasoning. The advantage of open-ended questions over multiple-choice questions (MCQs) is that they are suitable for testing deep learning. The MCQ format is limited to assessing facts only, whereas open-ended questions evaluate the students’ understanding of a concept. Open-ended questions help students to build confidence by solving the problem naturally. They allow teachers to evaluate students’ abilities to apply information to clinical and scientific problems, and also reveal their misunderstandings about essential content. If open-ended questions are properly structured as per the rubrics, they can allow students to include their feelings, attitudes, and understanding about the problem statement, which requires ample research and justification. This may not be applicable in all cases, e.g., ‘What are the excipients required to prepare a pharmaceutical tablet?’; in this case, only a student’s knowledge can be evaluated. Marking open-ended questions is also a strenuous and time-consuming job for teachers because it increases their workload. Open-ended questions also have lower reliability than MCQs.

The teacher has to consider all these points carefully and develop well-structured open-ended questions to assess the higher-order thinking of the students. A teacher can assess student knowledge and the ability to critically evaluate a given situation through such questions, e.g., ‘What is the solution to convert a poorly flowable drug powder into a good flowable crystal for pharmaceutical tablet formulation?’ [7][8][9][10][11][12][13].

2.2. Modified Essay Questions

A widely used format in medical education is the modified essay question (MEQ), where a clinical scenario is followed by a series of sequential questions requiring short answers. This is a compromise approach between multiple-choice and short answer questions (SAQ) because it tests higher-order cognitive skills when compared to MCQs, while allowing for more standardised marking than the conventional open-ended question [10][11][12].

Example 1: A 66-year-old Indian male presents to the emergency department with a complaint of worsening shortness of breath and cough for one week. He smokes two packs of cigarettes per month. His past medical history includes hypertension, diabetes, chronic obstructive pulmonary disease (COPD), and obstructive sleep apnoea. What are the laboratory tests needed to confirm the diagnosis, and what should be the best initial treatment plan? After four days the patient’s condition is stable; what is the best discharge treatment plan for this patient?

Example 2: You are working as a research scientist in a pharmaceutical company and your team is involved in the formulation development of controlled-release tablets for hypertension. After an initial trial, you found that almost 90% of the drug is released within 6 h. How can this problem be overcome, and what type of formulation change would you suggest so that drug release will occur over 24 h instead of 6 h?

2.3. “Key Featured” Questions

In such a question, a description of a realistic case is followed by a small number of questions that require only essential decisions. These questions may be either multiple-choice or open-ended depending on the content of the question. Key feature questions (KFQs) measure problem-solving and clinical decision-making ability validly and reliably. The questions in KFQs mainly focus on critical areas such as the diagnosis and management of clinical problems. The construction of the questions is time-consuming, with inexperienced teachers needing up to three hours to produce a single key feature case with questions, while experienced ones may produce up to four an hour. Key feature questions are best used for testing the application of knowledge and problem solving in “high stake” examinations [13][14][15].

2.4. Script Concordance Test

Script concordance test (SCT) is a case-based assessment format of clinical reasoning in which questions are nested into several cases and intended to reflect the students’ competence in interpreting clinical data under circumstances of uncertainty [15][16]. A case with its related questions constitutes an item. Scenarios are followed by a series of questions, presented in three parts. The first part (“if you were thinking of”) contains a relevant diagnostic or management option. The second part (“and then you were to find”) presents a new clinical finding, such as a physical sign, a pre-existing condition, an imaging study, or a laboratory test result. The third part (“this option would become”) is a five-point Likert scale that captures examinees’ decisions. The task for examinees is to decide what effect the new finding will have on the status of the option in direction (positive, negative, or neutral) and intensity. This effect is captured with a Likert scale because script theory assumes that clinical reasoning is composed of a series of qualitative judgments. This is an appropriate approach for asynchronous environment assessment because it demands both critical and clinical thinking [17][18][19].
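The three-part structure of an SCT question described above can be represented as a small data structure. The sketch below is illustrative only: the text specifies a five-point Likert scale but not its coding, so the code assumes the ratings are coded from -2 to +2. The class name, function name, and the sample question are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SCTQuestion:
    """One SCT question nested in a clinical case."""
    option: str       # part 1: "if you were thinking of"
    new_finding: str  # part 2: "and then you were to find"


def describe_response(rating: int) -> Tuple[str, int]:
    """Split a Likert rating (part 3: "this option would become") into direction and intensity.

    Assumes the five points are coded -2, -1, 0, +1, +2.
    """
    if rating not in (-2, -1, 0, 1, 2):
        raise ValueError("rating must be an integer from -2 to +2")
    if rating > 0:
        direction = "positive"
    elif rating < 0:
        direction = "negative"
    else:
        direction = "neutral"
    return direction, abs(rating)


# Hypothetical question, for illustration only.
question = SCTQuestion(
    option="an acute exacerbation of COPD",
    new_finding="a chest X-ray showing lobar consolidation",
)
examinee_rating = -1

direction, intensity = describe_response(examinee_rating)
print(f"If you were thinking of {question.option}")
print(f"and then you were to find {question.new_finding},")
print(f"this option would become: {direction} (intensity {intensity})")
```

Keeping the option, the new finding, and the rating as separate fields mirrors the way an item is presented to the examinee and makes the direction and intensity of each judgment explicit.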

2.5. Problem-Based Questions

Problem-based learning is an increasingly integral part of higher education across the world, especially in healthcare training programs. It is a widely popular and effective small group learning approach that enhances the application of knowledge, higher-order thinking, and self-directed learning skills. It is a student-centred teaching approach that exposes students to real-world scenarios that need to be solved using reasoning skills and existing theoretical knowledge. Students are encouraged to utilise their higher thinking faculty, according to Bloom’s classification, to prove their understanding and appreciation of a given subject area. Where the physical presence of students in the laboratory is not feasible, assessment can be conducted by providing challenging problem-based case studies (as per the level of the student). The problem-based questions can be an individual/group assignment, and the teacher can use a discussion forum on the learning management system (LMS) online platform, allowing students to post their views and possible solutions to the problem. The asynchronous communication environment is suitable for problems based on case studies because it provides sufficient time for the learner to gather resources in the search for solutions [14][15][18].

Teachers can engage online learners for their weekly assessments on discussion boards using their LMS. A subject-related issue based on lessons of the previous week can be created to allow student interaction and enhance problem-solving skills, e.g., pharmaceutical formulation problems with pre-formulation study data, or clinical cases with disease symptoms, diagnostic and therapeutic data, and patient medication history. Another method is to divide the problem into various facets and assign each part to a separate group of students. At the end of the individual session, all groups are asked to interact to solve the main issue by putting their pieces together in an amicable way. Students must be given clear timelines for responses and a well-structured question which is substantial, concise, provocative, timely, logical, grammatically sound, and clear [16][17][20]. The structure should afford a stimulus to initiate the thinking process and offer possible options or methods that can be justified. It should allow students to achieve the goal depending on their interpretation of the data provided and the imagination of each responder to predict different possible solutions. The participants need to complement and challenge each other to think deeper by asking for explanations and examples, checking facts, considering extreme conditions, and extrapolating conclusions. The moderator should post the questions promptly and allow sufficient time for responders to post their responses. The moderator then facilitates the conversations, and intervenes only if required to obtain greater insight, stimulate, or guide further responses.

The discussion board should be managed and monitored so that only valid users can access it, in a closed forum, from a registered device, and through an official IP address. Even so, maintaining integrity remains difficult, and the examiner has to rely on the ethical commitment of the examinee.
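The three access conditions named above (a valid user, a registered device, and an official IP address) could be checked along the following lines. This is a minimal sketch rather than a description of any particular LMS: the user identifiers, device identifiers, and address range are hypothetical, and the addresses come from ranges reserved for documentation.

```python
import ipaddress
from typing import Dict, Iterable, Set

# Hypothetical registration data for a closed forum.
REGISTERED_USERS: Set[str] = {"s001", "s002"}
REGISTERED_DEVICES: Dict[str, str] = {"s001": "laptop-A", "s002": "tablet-B"}
OFFICIAL_NETWORKS = ["192.0.2.0/24"]  # assumed institutional address range


def is_allowed(
    user_id: str,
    device_id: str,
    ip: str,
    users: Set[str] = REGISTERED_USERS,
    devices: Dict[str, str] = REGISTERED_DEVICES,
    networks: Iterable[str] = tuple(OFFICIAL_NETWORKS),
) -> bool:
    """Return True only if all three conditions hold for this request."""
    address = ipaddress.ip_address(ip)
    from_official_ip = any(
        address in ipaddress.ip_network(network) for network in networks
    )
    valid_user = user_id in users
    registered_device = devices.get(user_id) == device_id
    return valid_user and registered_device and from_official_ip


print(is_allowed("s001", "laptop-A", "192.0.2.15"))    # all conditions met
print(is_allowed("s001", "laptop-A", "198.51.100.7"))  # outside the official range
print(is_allowed("s002", "laptop-A", "192.0.2.15"))    # device not registered to this user
```

Such checks only confirm where and from which device a post originates; they cannot confirm who is typing, which is why the ethical commitment of the examinee still matters.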

2.6. Virtual OSCE

Over recent years, we have seen an increasing use of Objective Structured Clinical Examinations (OSCEs) in health professional training to ensure that students achieve minimum clinical standards. In an OSCE, simulated patients are useful assessment tools that evaluate student–patient interactions related to clinical and medical issues. In the current COVID-19 crisis, students will not be able to appear for the traditional physical OSCE, and a more practicable approach is based on their interaction with a virtual patient. The use of a high-fidelity virtual patient-based learning tool in an OSCE is useful for the clinical training assessment of medical and healthcare students [20][21][22].

High-fidelity patients use simulators with programmable physiologic responses to disease states, interventions, and medications. Some examples of situations where faculty members can provide a standardised experience with simulation include cardiac arrest, respiratory arrest, surgeries, allergic reactions, cardiopulmonary resuscitation, basic first aid, myocardial infarction, stroke procedures, renal failure, bleeding, and trauma. Although simulation should not replace students spending time with real patients, it provides an opportunity to prepare students, complements classroom learning, fulfils curricular goals, standardises experiences, and enhances assessment opportunities in times when physical face-to-face interaction is not possible. Virtual simulation tools are also available for various pharmaceutical, analytical, synthetic, and clinical experimental environments, and for industry operations [21][22][23].

2.7. Oral Examination

The oral exam is a commonly used mode of evaluation to assess competencies, including knowledge, communication skills, and critical thinking ability. It is a significant evaluation tool for a comprehensive assessment of the clinical competence of a student in the health profession. The oral assessment involves a student’s verbal response to the questions asked, and its dimensions include primary content type (object of assessment), interaction (between the examiner and student), authenticity (validity), structure (organised questions), examiners (evaluators), and orality (oral format). All six dimensions are equally important in an oral examination where the mode of communication between examiners and students is purely online instead of physical face-to-face interaction. Clear instructions regarding the purpose and time limit are important to make the online oral examination relevant and effective [22][23][24][25].

Short questions, open-ended questions, and problem-based questions are relevant and effective asynchronous means to assess the knowledge, skill, and attitude of the students because these types of questions require critical thinking and can act as a catalyst for the students to provide new ideas in problem-solving. Although MCQs, extended matching questions (EMQs), and true/false questions possess all the psychometric properties of a good assessment, these modalities may not be recommended for online asynchronous assessment because they are more susceptible to cheating, which can have serious implications for the validity of examinations. However, they can be appropriately adapted for time-bound assessments for continuous evaluation. Mini-CEX, DOPS, and OSCE assessments are not feasible during a pandemic because face-to-face interaction is required at the site, which is not permissible due to gathering restrictions and social distancing. When the physical presence of the student is not feasible at the hospital or laboratory site, the virtual oral examination and virtual OSCE become more relevant because the examiner can interact with the students via a suitable platform and ask questions relevant to the experiment/topic. In a virtual OSCE, students will be evaluated based on their interactions with virtual patients [22][23][26][27][28][29][30][31][32][33].

References

- Bartlett, M.; Crossley, J.; McKinley, R.K. Improving the quality of written feedback using written feedback. Educ. Prim. Care 2016, 28, 16–22.

- Bordage, G.; Page, G. An Alternative Approach to PMPs: The “Key Features” Concept. In Further Developments in Assessing Clinical Competence; Hart, I., Harden, R., Eds.; Can-Heal Publications: Montreal, QC, Canada, 1987; pp. 57–75.

- Boushehri, E.; Monajemi, A.; Arabshahi, K.S. Key feature, clinical reasoning problem. Puzzle and scenario writing: Are there any differences between them in evaluating clinical reasoning? Trends Med. 2019, 19, 1–7.

- Braun, U.K.; Gill, A.C.; Teal, C.R.; Morrison, L.J. The Utility of Reflective Writing after a Palliative Care Experience: Can We Assess Medical Students’ Professionalism? J. Palliat. Med. 2013, 16, 1342–1349.

- Car, L.T.; Kyaw, B.M.; Dunleavy, G.; Smart, N.A.; Semwal, M.; Rotgans, J.I.; Low-Beer, N.; Campbell, J. Digital Problem-Based Learning in Health Professions: Systematic Review and Meta-Analysis by the Digital Health Education Collaboration. J. Med. Internet Res. 2019, 21, e12945.

- Boud, D.; Falchikov, N. Aligning assessment with long-term learning. Assess. Eval. High. Educ. 2006, 31, 399–413.

- Cockett, A.; Jackson, C. The use of assessment rubrics to enhance feedback in higher education: An integrative literature review. Nurse Educ. Today 2018, 69, 8–13.

- Courteille, O.; Bergin, R.; Stockeld, D.; Ponzer, S.; Fors, U. The use of a virtual patient case in an OSCE-based exam—A pilot study. Med. Teach. 2008, 30, e66–e76.

- Craddock, D.; Mathias, H. Assessment options in higher education. Assess. Eval. High. Educ. 2009, 34, 127–140.

- Epstein, R.M. Assessment in Medical Education. N. Engl. J. Med. 2007, 356, 387–396.

- Farmer, E.A.; Page, G. A practical guide to assessing clinical decision-making skills using the key features approach. Med. Educ. 2005, 39, 1188–1194.

- Feletti, G.I.; Smith, E.K.M. Modified Essay Questions: Are they worth the effort? Med. Educ. 1986, 20, 126–132.

- Husain, H.; Bais, B.; Hussain, A.; Samad, S.A. How to Construct Open Ended Questions. Procedia Soc. Behav. Sci. 2012, 60, 456–462.

- Fournier, J.P.; Demeester, A.; Charlin, B. Script Concordance Tests: Guidelines for Construction. BMC Med. Inform. Decis. Mak. 2008, 8, 18.

- Gagnon, R.; Van der Vleuten, C. Script concordance testing: More cases or more questions? Adv. Health Sci. Educ. Theory Pract. 2009, 14, 367–375.

- Garrison, G.D.; Baia, P.; Canning, J.E.; Strang, A.F. An Asynchronous Learning Approach for the Instructional Component of a Dual-Campus Pharmacy Resident Teaching Program. Am. J. Pharm. Educ. 2015, 79, 29.

- Hift, R.J. Should essays and other “open-ended”-type questions retain a place in written summative assessment in clinical Medicine? BMC Med. Educ. 2014, 14, 249.

- Johnson, N.; Khachadoorian-Elia, H.; Royce, C.; York-Best, C.; Atkins, K.; Chen, X.P.; Pelletier, A. Faculty perspectives on the use of standardized versus non-standardized oral examinations to assess medical students. Int. J. Med. Educ. 2018, 9, 255–261.

- Joughin, G. Dimensions of Oral Assessment. Assess. Eval. High. Educ. 1998, 23, 367–378.

- Al-Kadri, H.M.; Al-Moamary, M.S.; Al-Takroni, H.; Roberts, C.; Van Der Vleuten, C.P. Self-assessment and students’ study strategies in a community of clinical practice: A qualitative study. Med. Educ. Online 2012, 17, 11204.

- Keppell, M.; Carless, D. Learning-oriented assessment: A technology-based case study. Assess. Educ. Princ. Policy Pr. 2006, 13, 179–191.

- Koole, S.; Dornan, T.; Aper, D.L.; Wever, B.D.; Scherpbier, A.; Valcke, M.; Cohen-Schotanus, J.; Derese, A. Using video-cases to assess student reflection: Development and validation of an instrument. BMC Med. Educ. 2012, 12, 22.

- Lin, C.W.; Tsai, T.C.; Sun, C.K.; Chen, D.F.; Liu, K.M. Power of the policy: How the announcement of high-stakes clinical examination altered OSCE implementation at institutional level. BMC Med. Educ. 2013, 24, 8.

- Lubarsky, S.; Charlin, B.; Cook, D.A.; Chalk, C.; Van Der Vleuten, C.P. Script concordance testing: A review of published validity evidence. Med. Educ. 2011, 45, 329–338.

- Lynam, S.; Cachia, M. Students’ perceptions of the role of assessments at higher education. Assess. Eval. High. Educ. 2017, 43, 223–234.

- Moniz, T.; Arntfield, S.; Miller, K.; Lingard, L.; Watling, C.; Regehr, G. Considerations in the use of reflective writing for student assessment: Issues of reliability and validity. Med. Educ. 2015, 49, 901–908.

- Norcini, J.; Anderson, M.B.; Bollela, V.; Burch, V.; Costa, M.J.; Duvivier, R.; Hays, R.; Mackay, M.F.P.; Roberts, T.; Swanson, D. 2018 Consensus framework for good assessment. Med. Teach. 2018, 40, 1102–1109.

- Norcini, J.; Anderson, B.; Bollela, V.; Burch, V.; Costa, M.J.; Duvivier, R.; Galbraith, R.; Hays, R.; Kent, A.; Perrott, V.; et al. Criteria for good assessment: Consensus statement and recommendations from the Ottawa 2010 Conference. Med. Teach. 2011, 33, 206–214.

- Okada, A.; Scott, P.; Mendonça, M. Effective web videoconferencing for proctoring online oral exams: A case study at scale in Brazil. Open Prax. 2015, 7, 227–242.

- Sullivan, D.P. An Integrated Approach to Preempt Cheating on Asynchronous, Objective, Online Assessments in Graduate Business Classes. Online Learn. 2016, 20, 195–209.

- Palmer, E.J.; Devitt, P.G. Assessment of higher order cognitive skills in undergraduate education: Modified essay or multiple choice questions? Research paper. BMC Med. Educ. 2007, 7, 49.

- Pangaro, L.N.; Cate, O.T. Frameworks for learner assessment in medicine: AMEE Guide No. 78. Med. Teach. 2013, 35, e1197–e1210.

- Pearce, J.; Edwards, D.; Fraillon, J.; Coates, H.; Canny, B.J.; Wilkinson, D. The rationale for and use of assessment frameworks: Improving assessment and reporting quality in medical education. Perspect. Med. Educ. 2015, 4, 110–118.