
Unmanned ground vehicles (UGVs) have great potential in both civilian and military applications and have become a research focus in many countries. Environmental perception is the foundation of UGVs and is essential for safer and more efficient operation.

1. Introduction

The unmanned ground vehicle (UGV) is a comprehensive intelligent system that integrates environmental perception, localization, navigation, path planning, decision-making and motion control [1]. It combines technologies such as computer science, data fusion, machine vision and deep learning to meet practical requirements and achieve predetermined goals [2].

In civil applications, UGVs are mainly embodied in autonomous driving. Highly intelligent driver models can completely or partially replace the driver's active control [3][4][5]. Moreover, UGVs equipped with sensors can act as "probe vehicles" and perform traffic sensing, sharing information with other agents in intelligent transport systems [6]. UGVs therefore have great potential for reducing traffic accidents and alleviating traffic congestion. In military applications, UGVs are suited to tasks such as intelligence acquisition, monitoring and reconnaissance, transportation and logistics, demining and placement of improvised explosive devices, fire support, communication relay, and medical transfer on the battlefield [7], and can effectively assist troops in combat operations.

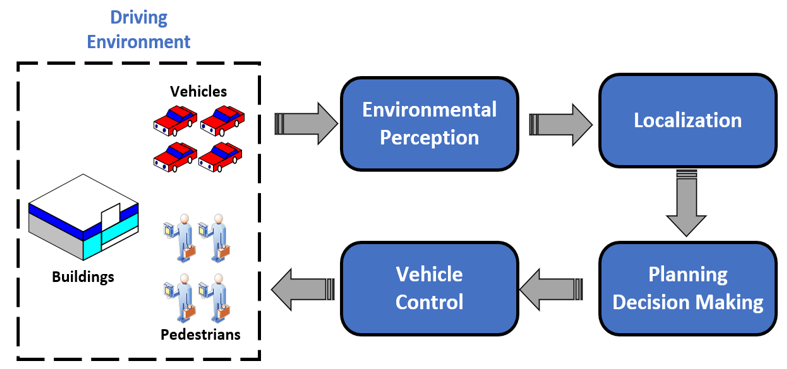

The overall technical framework for UGVs is shown in Figure 1. Environmental perception is an extremely important technology for UGVs; it covers both the perception of the external environment and the state estimation of the vehicle itself. A high-precision environmental perception system is the basis for UGVs to drive safely and perform their tasks efficiently. Environmental perception for UGVs requires sensors such as Lidar, monocular cameras and millimeter-wave radar to collect environmental information as input for the planning, decision-making and motion control systems.

Figure 1. Technical framework for UGVs.

Environmental perception technology includes simultaneous localization and mapping (SLAM), semantic segmentation, vehicle detection, pedestrian detection, road detection and many other tasks. Because vehicles are the most numerous and diverse targets in the driving environment, correctly identifying vehicles has become a research hotspot for UGVs [8]. In the civil field, correct detection of road vehicles can reduce traffic accidents, support more complete advanced driver assistance systems (ADAS) [9][10] and enable better integration with driver models [11][12]. In the military field, correct detection of military vehicle targets is of great significance for battlefield reconnaissance, threat assessment and accurate attack in modern warfare [13].

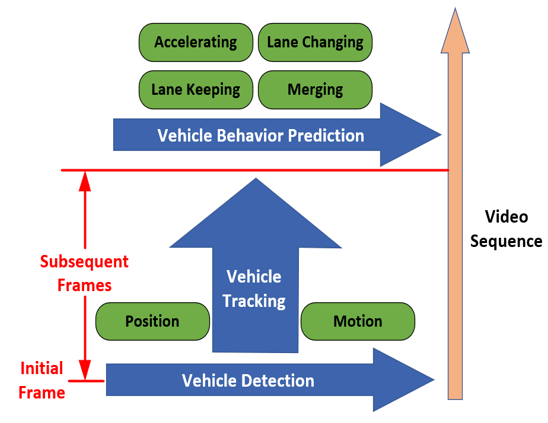

The complete framework of vehicle recognition in a UGV autonomous driving system is shown in Figure 2. Generally, vehicle detection extracts vehicle targets from a single image frame, vehicle tracking re-identifies the positions of those vehicles in subsequent frames, and vehicle behavior prediction characterizes vehicles' behavior based on detection and tracking so that the ego vehicle can make better decisions [14]. For tracking technology, readers can refer to [15][16]; for vehicle behavior prediction, [17] presents a brief review of deep-learning-based methods. This review focuses on the vehicle detection component of the complete vehicle recognition process, and summarizes and discusses related research on vehicle detection technology, organized by sensor type.

Figure 2. The overall framework of vehicle recognition technology for unmanned ground vehicles (UGVs).

2. Sensors for Vehicle Detection

The operation of UGVs requires continuous collection of environmental information, and efficient collection relies on high-precision, high-reliability sensors. Sensors are therefore crucial to the efficient operation of UGVs. According to the source of the collected information, they can be divided into two categories: Exteroceptive Sensors (ESs) and Proprioceptive Sensors (PSs).

ESs are mainly used to collect external environmental information for tasks such as vehicle detection, pedestrian detection, road detection and semantic segmentation. Commonly used ESs include Lidar, millimeter-wave radar, cameras and ultrasonic sensors. PSs are mainly used to collect real-time information about the platform itself, such as vehicle speed, acceleration, attitude angle, wheel speed and position, to ensure real-time state estimation of the UGV. Common PSs include GNSS and IMU.

Readers can refer to [18] for detailed information on different sensors. This section mainly introduces ESs that have potential for vehicle detection. ESs can be further divided into two types: active sensors and passive sensors. The active sensors discussed in this section are Lidar, radar and ultrasonic sensors, while the passive sensors are monocular cameras, stereo cameras, omni-direction cameras, event cameras and infrared cameras. Table 1 compares the different sensors.

Table 1. Information for Different Exteroceptive Sensors.

| Sensors | Affected by Illumination | Affected by Weather | Color Texture | Depth | Disguised | Range | Accuracy (Resolution) | Size | Cost |
|---|---|---|---|---|---|---|---|---|---|
| Lidar | - | √ | - | √ | Active | <200 m | Distance accuracy: <0.03 m; Angular resolution: <1.5° | Large | High |
| Radar (Long Range) | - | - | - | √ | Active | <250 m | Distance accuracy: 0.1 m~0.3 m; Angular resolution: 2°~5° | Small | Medium |
| Radar (FMCW 77 GHz) | - | - | - | √ | Active | <200 m | Distance accuracy: 0.05 m~0.15 m; Angular resolution: about 1° | Small | Very Low |
| Ultrasonic | - | - | - | √ | Active | <5 m | Distance accuracy: 0.2 m~1.0 m | Small | Low |
| Monocular Camera | √ | √ | √ | - | Passive | - | 0.3 mm~3 mm (different fields of view and resolutions give different accuracy) | Small | Low |
| Stereo Camera | √ | √ | √ | √ | Passive | <100 m | Depth accuracy: 0.05 m~0.1 m; Attitude resolution: <0.2° | Medium | Low |
| Omni-direction Camera | √ | √ | √ | - | Passive | - | Resolution (pixels): can reach 6000 × 3000 | Small | Low |
| Infrared Camera | - | √ | - | - | Passive | - | Resolution (pixels): 320 × 256~1280 × 1024 | Small | Low |
| Event Camera | √ | √ | - | - | Passive | - | Resolution (pixels): 128 × 128~768 × 640 | Small | Low |

* The range of the cameras, except for the depth range of the stereo camera, depends on the operating environment, so there is no fixed detection distance.
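As a rough illustration of how Table 1 might be used when shortlisting sensors, the sketch below encodes its rows as records and filters them by requirement. The class name `Sensor`, the field names and the helper `candidates` are hypothetical, and the reading of the four check-mark columns (illumination, weather, color texture, depth) is an assumption about the table layout rather than something the source states explicitly.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Sensor:
    name: str
    illumination_sensitive: bool  # assumed meaning of the "Illumination" column
    weather_sensitive: bool       # assumed meaning of the "Weather" column
    color_texture: bool
    depth: bool
    active: bool                  # True = active sensor, False = passive
    max_range_m: Optional[float]  # None where Table 1 lists no fixed range


TABLE_1 = [
    Sensor("Lidar", False, True, False, True, True, 200),
    Sensor("Radar (Long Range)", False, False, False, True, True, 250),
    Sensor("Radar (FMCW 77 GHz)", False, False, False, True, True, 200),
    Sensor("Ultrasonic", False, False, False, True, True, 5),
    Sensor("Monocular Camera", True, True, True, False, False, None),
    Sensor("Stereo Camera", True, True, True, True, False, 100),
    Sensor("Omni-direction Camera", True, True, True, False, False, None),
    Sensor("Infrared Camera", False, True, False, False, False, None),
    Sensor("Event Camera", True, True, False, False, False, None),
]


def candidates(sensors: List[Sensor], *, need_depth: bool = False,
               need_color: bool = False, passive_only: bool = False) -> List[str]:
    """Return the names of sensors that satisfy every requested property."""
    return [
        s.name for s in sensors
        if (s.depth or not need_depth)
        and (s.color_texture or not need_color)
        and (not s.active or not passive_only)
    ]


# Passive sensors that still provide depth: only the stereo camera qualifies.
print(candidates(TABLE_1, need_depth=True, passive_only=True))
```

A filter like this only narrows the field; the final choice would still depend on the range, accuracy, size and cost columns.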

2.1. Lidar

Lidar obtains object position, orientation and velocity information by transmitting and receiving laser beams and calculating the time difference. The collected data are a series of 3D points called a point cloud; each point consists of coordinates relative to the origin of the Lidar coordinate system and an echo intensity. Lidar can realize omni-directional detection and can be divided into single-line Lidar and multi-line Lidar according to the number of laser beams. Single-line Lidar can only obtain two-dimensional information about the target, while multi-line Lidar can obtain three-dimensional information.

Lidar is mainly used in SLAM [19], point cloud matching and localization [20], object detection, and trajectory prediction and tracking [21]. Lidar has a long detection distance and a wide field of view, offers high data acquisition accuracy, can obtain target depth information, and is not affected by light conditions. However, Lidar is large and extremely expensive, and it cannot collect the color and texture information of the target. Its angular resolution is low, and point clouds at long distances are sparse, which easily causes false detections and missed detections. It is also easily affected by precipitation and airborne particles (rain, snow, fog, sandstorms, etc.) [22]. In addition, Lidar is an active sensor: in the military field, the emitted laser can reveal the position of the sensor, so its concealment is poor.
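The time-of-flight principle and the point format described above can be sketched in a few lines. This is a minimal illustration, not a vendor driver: the function names `tof_range` and `to_point`, the angle convention (azimuth in the horizontal plane, elevation above it) and the sample values are assumptions made for the example.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_range(round_trip_time_s: float) -> float:
    """Range from round-trip time; the pulse travels out and back, hence the 1/2."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float,
             intensity: float):
    """Convert one return to (x, y, z, intensity) in the sensor frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z, intensity)


# A return received 400 ns after emission lies roughly 60 m away.
r = tof_range(400e-9)
point = to_point(r, azimuth_deg=30.0, elevation_deg=-2.0, intensity=0.42)
print(f"range = {r:.2f} m, point = {point}")
```

A single-line Lidar corresponds to a fixed elevation angle, which is why it yields only a planar slice of the scene.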

2.2. Radar

Radar is widely used in military and civilian fields and has important strategic significance. The working principle of a radar sensor is similar to that of Lidar, but the emitted signal is a radio wave, which is used to detect the position and distance of the target.

Radars can be classified according to their transmission bands. The radars used by UGVs are mostly millimeter-wave radars, which are mainly used for object detection and tracking, blind-spot detection, lane change assistance, collision warning and other ADAS-related functions [18]. Millimeter-wave radars on UGVs can be further divided into "FMCW radar 24-GHz" and "FMCW radar 77-GHz" according to their frequency range. Compared with long-range radar, "FMCW radar 77-GHz" has a shorter range but relatively high accuracy at very low cost; therefore, almost every new car is equipped with one or several "FMCW radar 77-GHz" units for their high cost-performance. For more detailed information about radar data processing, readers can refer to [23].

Compared with Lidar, radar has a longer detection range, smaller size and lower price, and is not easily affected by light and weather conditions. However, radar cannot collect information such as color and texture, its data acquisition accuracy is moderate, and its data are noisy, so a filtering algorithm is often needed for preprocessing. In addition, radar is an active sensor, which has poor concealment and can easily interfere with other equipment [24].
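For readers unfamiliar with FMCW ranging, the standard relation between beat frequency and range for a linear chirp can be sketched as follows. The chirp bandwidth, chirp duration and beat frequency below are illustrative assumptions, not parameters taken from any radar discussed here, and the sketch ignores Doppler shift (a stationary target is assumed).

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fmcw_range(beat_freq_hz: float, bandwidth_hz: float, chirp_time_s: float) -> float:
    """Range of a stationary target from the beat frequency of a linear chirp.

    The chirp slope is S = B / T_c; the echo is delayed by 2R/c, which shows
    up as a beat frequency f_b = S * 2R / c, so R = c * f_b / (2 * S).
    """
    slope = bandwidth_hz / chirp_time_s
    return SPEED_OF_LIGHT * beat_freq_hz / (2.0 * slope)


def range_resolution(bandwidth_hz: float) -> float:
    """Smallest separable range difference: c / (2 * B)."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


# Hypothetical chirp: 1 GHz bandwidth swept in 50 microseconds.
B, T_C = 1e9, 50e-6
print(f"range      = {fmcw_range(10e6, B, T_C):.2f} m")   # about 75 m
print(f"resolution = {range_resolution(B):.3f} m")        # about 0.15 m
```

The resolution depends only on the swept bandwidth, which is one reason wider-band millimeter-wave radars can resolve closely spaced targets.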

2.3. Ultrasonic

Ultrasonic sensors detect objects by emitting sound waves and are mainly used in the marine field. For UGVs, ultrasonic sensors are mainly used for the detection of close targets [25] and for ADAS-related functions such as automatic parking [26] and collision warning [27].

Ultrasonic sensors are small, low in cost, and not affected by weather and light conditions. However, their detection distance is short, their accuracy is low, they are prone to noise, and they can easily interfere with other equipment [28].

2.4. Monocular Camera

Monocular cameras store environmental information in the form of pixels by converting optical signals into electrical signals. The image collected by a monocular camera is basically the same as the environment perceived by the human eye. The monocular camera is one of the most popular sensors in the UGV field and is well suited to many environmental perception tasks.

Monocular cameras are mainly used in semantic segmentation [29], vehicle detection [30][31], pedestrian detection [32], road detection [33], traffic signal detection [34], traffic sign detection [35], etc. Compared with Lidar, radar and ultrasonic sensors, the most prominent advantage of monocular cameras is that they generate high-resolution images containing environmental color and texture information; as passive sensors, they also have good concealment. Moreover, the monocular camera is small and low in cost. Nevertheless, the monocular camera cannot obtain depth information and is highly susceptible to illumination and weather conditions. In addition, the high-resolution images it collects require longer processing time, which challenges the real-time performance of the algorithm.
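The loss of depth in a single image follows directly from perspective projection. The sketch below uses the ideal pinhole model (no lens distortion) with hypothetical intrinsics (`f_px`, `cx`, `cy`) to show that two points on the same viewing ray, one twice as far away as the other, land on the same pixel.

```python
def project(point_xyz, f_px=800.0, cx=640.0, cy=360.0):
    """Project a 3D point in the camera frame (Z forward) to pixel coordinates.

    Ideal pinhole model: u = f * X / Z + cx, v = f * Y / Z + cy.
    The intrinsics are illustrative assumptions.
    """
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return (f_px * x / z + cx, f_px * y / z + cy)


near = (1.0, 0.5, 10.0)   # 10 m ahead
far = (2.0, 1.0, 20.0)    # same viewing ray, 20 m ahead

print(project(near))
print(project(far))
# Both points map to the same pixel, so the image alone cannot tell them apart.
```

Recovering distance therefore needs extra information, such as a second viewpoint, known object size, or a learned prior.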

2.5. Stereo Camera

The stereo camera works on the same principle as the monocular camera. Compared with the monocular camera, the stereo camera is equipped with an additional lens at a symmetrical position, and the depth and motion of the environment can be obtained by capturing two images at the same time from different viewing angles. A stereo vision system can also be formed by installing two or more monocular cameras at different positions on the UGV, but this makes camera calibration more difficult.

In the field of UGVs, stereo cameras are mainly used for SLAM [36], vehicle detection [37], road detection [38], traffic sign detection [39], ADAS [40], etc. Compared with Lidar, stereo cameras can collect denser point cloud information [41]; compared with monocular cameras, stereo cameras can obtain additional target depth information. However, stereo cameras are also susceptible to weather and illumination conditions. In addition, their field of view is narrow, and additional computation is required to process depth information [41].
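For a rectified stereo pair, depth follows from the disparity between the two images by triangulation. The sketch below is a minimal illustration under that assumption; the focal length, baseline and disparity values are hypothetical.

```python
def depth_from_disparity(f_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return f_px * baseline_m / disparity_px


def depth_error(f_px: float, baseline_m: float, depth_m: float,
                disparity_error_px: float) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (f_px * baseline_m) * disparity_error_px


# Hypothetical rig: 800 px focal length, 12 cm baseline.
F_PX, BASELINE_M = 800.0, 0.12
z = depth_from_disparity(F_PX, BASELINE_M, disparity_px=8.0)
print(f"depth = {z:.2f} m")
print(f"error = {depth_error(F_PX, BASELINE_M, z, 0.25):.3f} m for 0.25 px matching error")
```

Because the error grows with the square of the depth, stereo accuracy degrades quickly for distant targets, which is consistent with the limited depth range listed for stereo cameras in Table 1.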

2.6. Omni-Direction Camera

Compared with a monocular camera, an omni-direction camera has a field of view wide enough to collect a circular panoramic image centered on the camera. As hardware has improved, omni-direction cameras are gradually being applied in the field of UGVs. Current research mainly covers integrated navigation combined with SLAM [42] and semantic segmentation [43].

The advantages of the omni-direction camera are its omni-directional field of view and its ability to collect color and texture information. However, its computational cost is high because more image data are collected.

2.7. Event Camera

An overview of event camera technology can be found in [44]. The working principle of event cameras differs substantially from that of traditional cameras, which capture images at a fixed frame rate. An event camera measures the brightness change of each pixel at the microsecond level and outputs a series of asynchronous signals. Each signal includes the pixel position, a timestamp and the brightness change.

Event cameras have great application potential in highly dynamic scenarios for UGVs, such as SLAM [45], state estimation [46] and target tracking [47]. The advantages of the event camera are its high dynamic range, sparse spatio-temporal data flow, and short information transmission and processing time [48]. However, its image size in pixels is small and its image resolution is low.
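The event output described above can be approximated with a simple contrast-threshold model: a pixel emits an event when its log-brightness changes by more than a threshold. The sketch below simulates this between two frames; it is a simplification (a real sensor fires per pixel asynchronously, not frame by frame), and the `Event` type, threshold value and sample frames are assumptions made for illustration.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    x: int
    y: int
    t_us: int       # timestamp in microseconds
    polarity: int   # +1 = brighter, -1 = darker


def events_between(prev: List[List[float]], curr: List[List[float]],
                   t_us: int, threshold: float = 0.2,
                   eps: float = 1e-6) -> List[Event]:
    """Emit one event per pixel whose log-intensity change reaches the threshold."""
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            delta = math.log(c + eps) - math.log(p + eps)
            if abs(delta) >= threshold:
                events.append(Event(x, y, t_us, 1 if delta > 0 else -1))
    return events


prev = [[100, 100, 100],
        [100, 100, 100]]
curr = [[100, 150, 100],
        [60, 100, 100]]

# Only the two changed pixels produce events; static pixels stay silent.
for e in events_between(prev, curr, t_us=1500):
    print(e)
```

The silence of unchanged pixels is what makes the data stream sparse.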

2.8. Infrared Camera

Infrared cameras collect environmental information by receiving the infrared radiation emitted by objects. Infrared cameras complement traditional cameras well and are usually used in environments with peak illumination, such as vehicles driving out of a tunnel and facing the sun, or for the detection of hot bodies (mostly at night) [18]. Infrared cameras can be divided into near-infrared (NIR) cameras, which emit infrared light to increase the brightness of objects for detection, and far-infrared (FIR) cameras, which detect objects based on their own infrared characteristics. The near-infrared camera is sensitive to wavelengths of 0.75–1.4 μm, while the far-infrared camera is sensitive to wavelengths of 6–15 μm. In practical applications, the infrared camera needs to be selected according to the wavelength of the detection target.

In the field of UGVs, infrared cameras are mainly used for pedestrian detection at night [49][50] and vehicle detection [51]. The most prominent advantage of an infrared camera is its good performance at night. Moreover, it is small, low in cost, and not easily affected by illumination conditions. However, the collected images do not contain color, texture or depth information, and the resolution is relatively low.
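One way to reason about which band suits a given target is Wien's displacement law, which gives the wavelength at which an ideal blackbody at temperature T radiates most strongly. The sketch below applies it to two illustrative targets; the temperatures are rough assumptions, and real surfaces are not perfect blackbodies, so the result is only a first estimate.

```python
WIEN_B_UM_K = 2897.771955  # Wien displacement constant, in micrometre-kelvin

FIR_BAND_UM = (6.0, 15.0)  # far-infrared sensitivity range quoted in the text


def peak_wavelength_um(temperature_k: float) -> float:
    """Peak emission wavelength of an ideal blackbody: lambda_max = b / T."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B_UM_K / temperature_k


def in_band(wavelength_um: float, band) -> bool:
    """True if the wavelength falls inside the closed interval `band`."""
    return band[0] <= wavelength_um <= band[1]


# Assumed target temperatures, for illustration only.
targets = {"pedestrian skin (~305 K)": 305.0, "warm engine hood (~400 K)": 400.0}
for name, temp_k in targets.items():
    lam = peak_wavelength_um(temp_k)
    print(f"{name}: peak at {lam:.2f} um, in FIR band: {in_band(lam, FIR_BAND_UM)}")
```

Both example targets peak inside the 6–15 μm range, which is consistent with far-infrared cameras being used for pedestrian and vehicle detection at night.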

References

- Bishop. A survey of intelligent vehicle applications worldwide. In Proceedings of the IEEE Intelligent Vehicles Symposium 2000 (Cat. No. 00TH8511); Dearborn, MI, USA, 5-5 October 2000; IEEE: Piscataway, NJ, USA, 2000; pp. 25–30.

- Li, ; Gong, J.; Lu, C.; Xi, J. Importance Weighted Gaussian Process Regression for Transferable Driver Behaviour Learning in the Lane Change Scenario. IEEE Trans. Veh. Technol. 2020, 69, 12497–12509.

- Li, ; Wang, B.; Gong, J.; Gao, T.; Lu, C.; Wang, G. Development and Evaluation of Two Learning-Based Personalized Driver Models for Pure Pursuit Path-Tracking Behaviors. In 2018 IEEE Intelligent Vehicles Symposium (IV); Changshu, China, 26-30 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 79–84.

- Li, ; Gong, C.; Lu, C.; Gong, J.; Lu, J.; Xu, Y.; Hu, F. Transferable Driver Behavior Learning via Distribution Adaption in the Lane Change Scenario. In 2019 IEEE Intelligent Vehicles Symposium (IV); Paris, France, 9-12 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 193–200.

- Lu, ; Hu, F.; Cao, D.; Gong, J.; Xing, Y.; Li, Z. Transfer Learning for Driver Model Adaptation in Lane-Changing Scenarios Using Manifold Alignment. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3281–3293.

- Ma, ; Qian, S. High-Resolution Traffic Sensing with Probe Autonomous Vehicles: A Data-Driven Approach. Sensors 2021, 21, 464.

- Chen, ; Zhang, Y. An overview of research on military unmanned ground vehicles. Binggong Xuebao/Acta Armamentarii 2014, 35, 1696–1706.

- Sivaraman, ; Trivedi, M.M. Looking at vehicles on the road: A survey of vision-based vehicle detection, tracking, and behavior analysis. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1773–1795.

- Xia, L.; Tang, T.B. Vehicle detection techniques for collision avoidance systems: A review. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2318–2338.

- Li, Z.; Lu, C.; Gong, J.; Hu, F. A Comparative Study on Transferable Driver Behavior Learning Methods in the Lane-Changing Scenario. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 3999–4005.

- Hu, F.; Cao, D.; Gong, J.; Xing, Y.; Li, Z. Virtual-to-Real Knowledge Transfer for Driving Behavior Recognition: Framework and a Case Study. IEEE Trans. Veh. Technol. 2019, 68, 6391–6402.

- Li, ; Zhan, W.; Hu, Y.; Tomizuka, M. Generic tracking and probabilistic prediction framework and its application in autonomous driving. IEEE Trans. Intell. Transp. Syst. 2019, 21, 3634–3649.

- Li, M.; Zhang, Q.; Luo, Y.; Liang, B.S.; Yang, X.Y. Review of ground vehicles recognition. Tien Tzu Hsueh Pao/Acta Electron. Sin. 2014, 42, 538–546.

- Li, ; Yang, F.; Tomizuka, M.; Choi, C. Evolvegraph: Multi-agent trajectory prediction with dynamic relational reasoning. In Proceedings of the Neural Information Processing Systems (NeurIPS), virtual, 6–12 December 2020.

- Ciaparrone, ; Sánchez, F.L.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep learning in video multi-object tracking: A survey. Neurocomputing 2020, 381, 61–88.

- Luo, ; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Kim, T.-K. Multiple object tracking: A literature review. Artif. Intell. 2020, 293, 103448.

- Mozaffari, ; Al-Jarrah, O.Y.; Dianati, M.; Jennings, P.; Mouzakitis, A. Deep Learning-Based Vehicle Behavior Prediction for Autonomous Driving Applications: A Review. IEEE Trans. Intell. Transp. Syst. 2020, 1–15, doi:10.1109/TITS.2020.3012034.

- Rosique, ; Lorente, P.N.; Fernandez, C.; Padilla, A. A Systematic Review of Perception System and Simulators for Autonomous Vehicles Research. Sensors 2019, 19, 648.

- Munir, F.; Rafique, A.; Ko, Y.; Sheri, A.M.; Jeon, M. Object Modeling from 3D Point Cloud Data for Self-Driving Vehicles. In 2018 IEEE Intelligent Vehicles Symposium (IV); Changshu, China, 26-30 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 409–414.

- Javanmardi, ; Gu, Y.; Kamijo, S. Adaptive Resolution Refinement of NDT Map Based on Localization Error Modeled by Map Factors. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2237–2243.

- Kraemer, ; Bouzouraa, M.E.; Stiller, C. Utilizing LiDAR Intensity in Object Tracking. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1543–1548.

- Chen, T.; Wang, R.; Dai, B.; Liu, D.; Song, J. Likelihood-Field-Model-Based Dynamic Vehicle Detection and Tracking for Self-Driving. IEEE Trans. Intell. Transp. Syst. 2016, 11, 3142–3158.

- Patole, M.; Torlak, M.; Wang, D.; Ali, M. Automotive radars: A review of signal processing techniques. IEEE Signal Process. Mag. 2017, 34, 22–35.

- Zhou, Z.; Zhao, C.; Zou, W. A compressed sensing radar detection scheme for closing vehicle detection. In Proceedings of the 2012 IEEE International Conference on Communications (ICC), Ottawa, Canada, 10–15 June 2012; pp. 6371–6375.

- Pech, ; Nauth, P.M.; Michalik, R. A new Approach for Pedestrian Detection in Vehicles by Ultrasonic Signal Analysis. In Proceedings of the IEEE EUROCON 2019-18th International Conference on Smart Technologies, Novi Sad, Serbia, 1–4 July 2019; pp. 1–5.

- Wu, ; Tsai, P.; Hu, N.; Chen, J. Research and implementation of auto parking system based on ultrasonic sensors. In Proceedings of the 2016 International Conference on Advanced Materials for Science and Engineering (ICAMSE), Tainan, China, 12–13 November 2016; pp. 643–645.

- Krishnan, Design of Collision Detection System for Smart Car Using Li-Fi and Ultrasonic Sensor. IEEE Trans. Veh. Technol. 2018, 67, 11420–11426.

- Mizumachi, ; Kaminuma, A.; Ono, N.; Ando, S. Robust Sensing of Approaching Vehicles Relying on Acoustic Cue. In Proceedings of the 2014 International Symposium on Computer, Consumer and Control, Taichung, Taiwan, 10-12 June 2014; pp. 533–536.

- Syed, ; Morris, B.T. SSeg-LSTM: Semantic Scene Segmentation for Trajectory Prediction. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9-12 June 2019; pp. 2504–2509.

- Weber, M.; Fürst, M.; Zöllner, J.M. Direct 3D Detection of Vehicles in Monocular Images with a CNN based 3D Decoder. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 417–423.

- Dai, ; Liu, D.; Yang, L.; Liu, Y. Research on Headlight Technology of Night Vehicle Intelligent Detection Based on Hough Transform. In Proceedings of the 2019 International Conference on Intelligent Transportation, Big Data & Smart City (ICITBS), Changsha, China, 12-13 January 2019; pp. 49–52.

- Han, ; Wang, Y.; Yang, Z.; Gao, X. Small-Scale Pedestrian Detection Based on Deep Neural Network. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1–10.

- Wang, ; Gao, J.; Yuan, Y. Embedding Structured Contour and Location Prior in Siamesed Fully Convolutional Networks for Road Detection. IEEE Trans. Intell. Transp. Syst. 2018, 19, 230–241.

- Wang, ; Zhou, L. Traffic Light Recognition with High Dynamic Range Imaging and Deep Learning. IEEE Trans. Intell. Transp. Syst. 2019, 20, 1341–1352.

- Tian, ; Gelernter, J.; Wang, X.; Li, J.; Yu, Y. Traffic Sign Detection Using a Multi-Scale Recurrent Attention Network. IEEE Trans. Intell. Transp. Syst. 2019, 20, 1–10.

- Li, ; Liu, Z.; Ü, Ö.; Lian, J.; Zhou, Y.; Zhao, Y. Dense 3D Semantic SLAM of traffic environment based on stereo vision. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26-30 June 2018; pp. 965–970.

- Zhu, ; Li, J.; Zhang, H. Stereo vision based road scene segment and vehicle detection. In Proceedings of 2nd International Conference on Information Technology and Electronic Commerce, Dalian, China, 20-21 December 2014; pp. 152–156.

- Yang, ; Fang, B.; Tang, Y.Y. Fast and Accurate Vanishing Point Detection and Its Application in Inverse Perspective Mapping of Structured Road. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 755–766.

- Doval, N.; Al-Kaff, A.; Beltrán, J.; Fernández, F.G.; López, G.F. Traffic Sign Detection and 3D Localization via Deep Convolutional Neural Networks and Stereo Vision. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27-30 October 2019; pp. 1411–1416.

- Donguk, ; Hansung, P.; Kanghyun, J.; Kangik, E.; Sungmin, Y.; Taeho, K. Omnidirectional stereo vision based vehicle detection and distance measurement for driver assistance system. In Proceedings of the IECON 2013—39th Annual Conference of the IEEE Industrial Electronics Society, Vienna, Austria, 10-13 November 2013; pp. 5507–5511.

- Arnold, ; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A Survey on 3D Object Detection Methods for Autonomous Driving Applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795.

- Wang, ; Yue, J.; Dong, Y.; Shen, R.; Zhang, X. Real-time Omnidirectional Visual SLAM with Semi-Dense Mapping. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26-30 June 2018; pp. 695–700.

- Yang, ; Hu, X.; Bergasa, L.M.; Romera, E.; Huang, X.; Sun, D.; Wang, K. Can we PASS beyond the Field of View? Panoramic Annular Semantic Segmentation for Real-World Surrounding Perception. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9-12 June 2019; pp. 446–453.

- Gallego, ; Delbruck, T.; Orchard, G.M.; Bartolozzi, C.; Scaramuzza, D. Event-based Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020; pp. 1–1, doi:10.1109/TPAMI.2020.3008413.

- Rebecq, ; Horstschaefer, T.; Gallego, G.; Scaramuzza, D. EVO: A Geometric Approach to Event-Based 6-DOF Parallel Tracking and Mapping in Real Time. IEEE Robot. Autom. Lett. 2017, 2, 593–600.

- Maqueda, ; Loquercio, A.; Gallego, G.; García, N.; Scaramuzza, D. Event-Based Vision Meets Deep Learning on Steering Prediction for Self-Driving Cars. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18-23 June 2018; pp. 5419–5427.

- Lagorce, ; Meyer, C.; Ieng, S.; Filliat, D.; Benosman, R. Asynchronous Event-Based Multikernel Algorithm for High-Speed Visual Features Tracking. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 1710–1720.

- Janai, ; Güney, F.; Behl, A.; Geiger, A. Computer vision for autonomous vehicles: Problems, datasets and state of the art. Found. Trends® Comput. Graph. Vis. 2020, 12, 1–308.

- Wang, ; Lin, L.; Li, Y. Multi-feature fusion based region of interest generation method for far-infrared pedestrian detection system. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26-30 June 2018; pp. 1257–1264.

- Lee, ; Chan, Y.; Fu, L.; Hsiao, P. Near-Infrared-Based Nighttime Pedestrian Detection Using Grouped Part Models. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1929–1940.

- Mita, S.; Yuquan, X.; Ishimaru, K.; Nishino, S. Robust 3D Perception for any Environment and any Weather Condition using Thermal Stereo. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2569–2574.