| Version | Summary | Created by | Modification | Content Size | Created at | Operation |

|---|---|---|---|---|---|---|

| 1 | Samuel Bruno Berenguel Baeta | + 484 word(s) | 484 | 2020-04-24 10:46:27 | | | |

| 2 | Camila Xu | -39 word(s) | 445 | 2020-10-30 10:46:43 | | | | |

| 3 | Camila Xu | -39 word(s) | 445 | 2020-10-30 10:47:11 | | |

Video Upload Options

OmniSCV is a tool for generating datasets of omnidirectional images with semantic and depth information.

1. Introduction

Omnidirectional or 360º images are becoming widespread in industry and in consumer society, causing omnidirectional computer vision to gain attention. Their wide field of view allows the gathering of a great amount of information about the environment from only an image. However, the distortion of these images requires the development of specific algorithms for their treatment and interpretation. Moreover, a high number of images is essential for the correct training of computer vision algorithms based on learning.

|

|

|

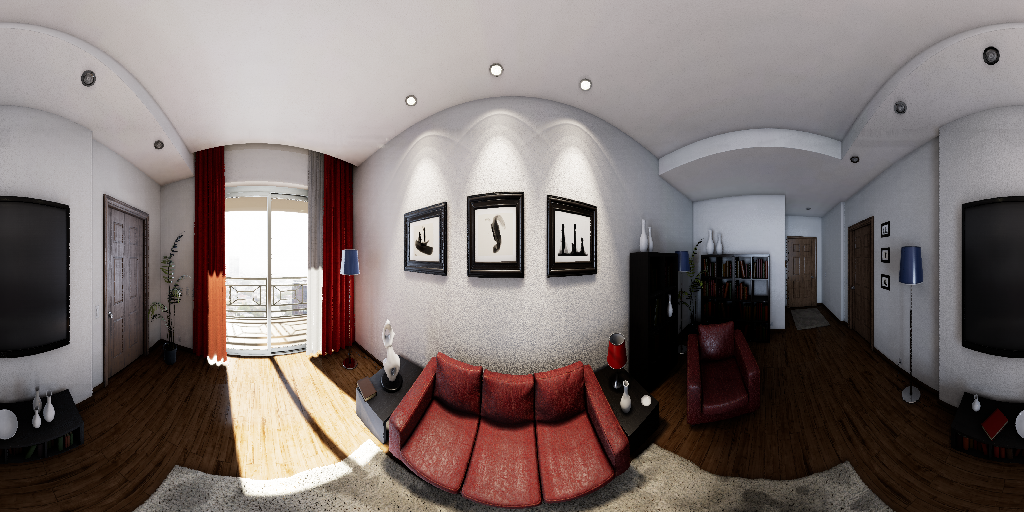

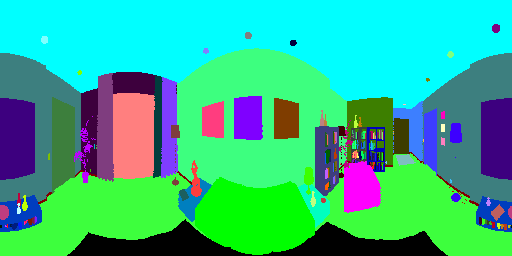

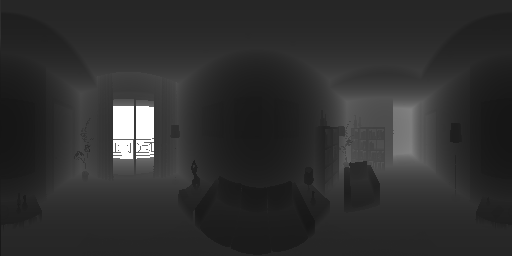

| Equirectangular panorama RGB | Equirectangular panorama Semantic | Equirectangular panorama Depth |

|

|

|

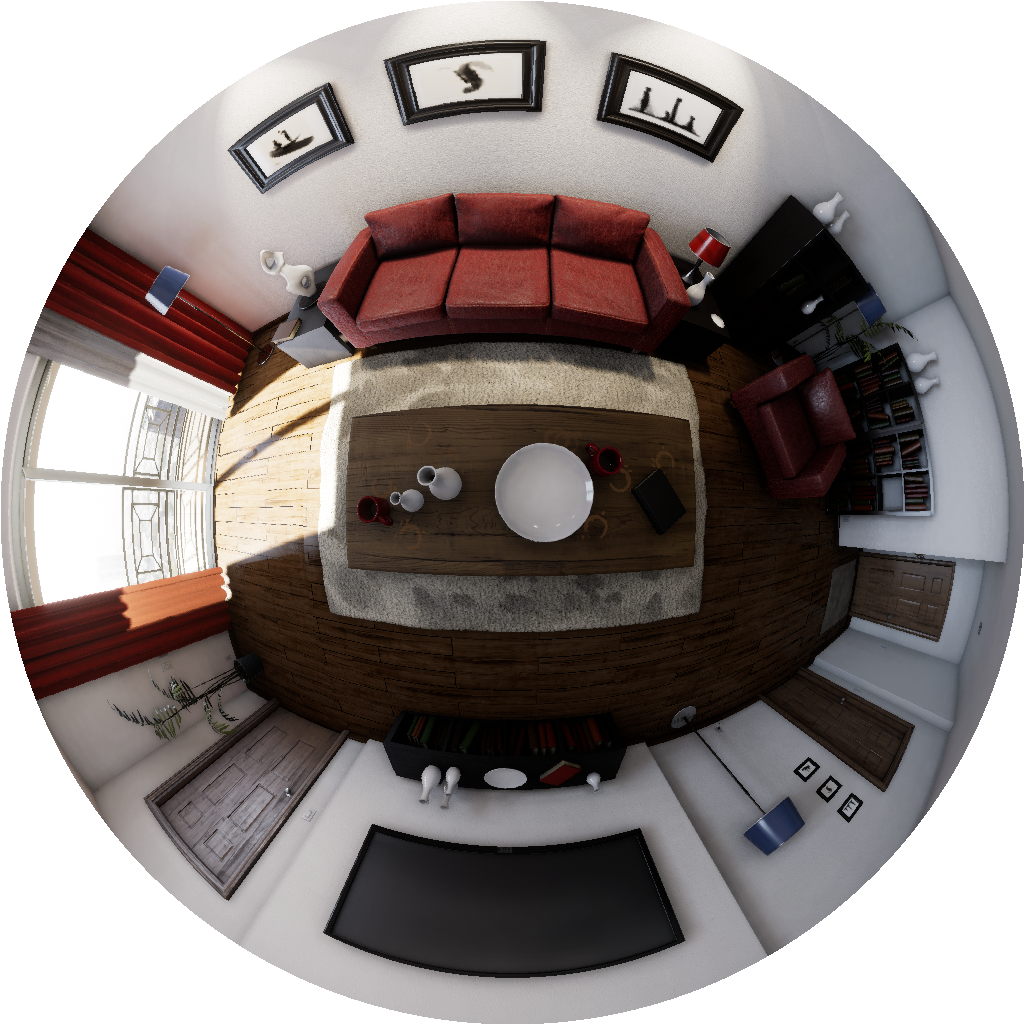

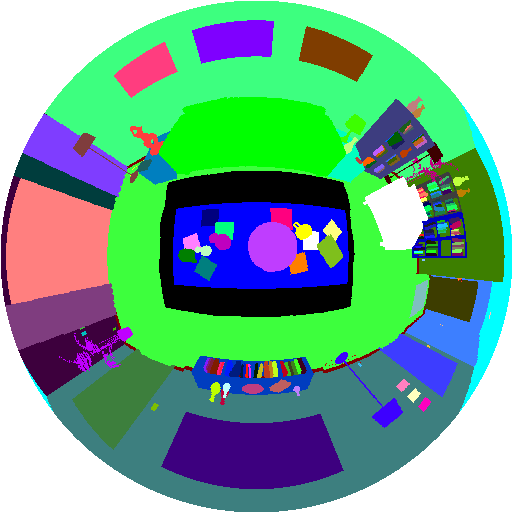

| Fish-eye RGB | Fish-eye Semantic | Fish-eye Depth |

|

|

|

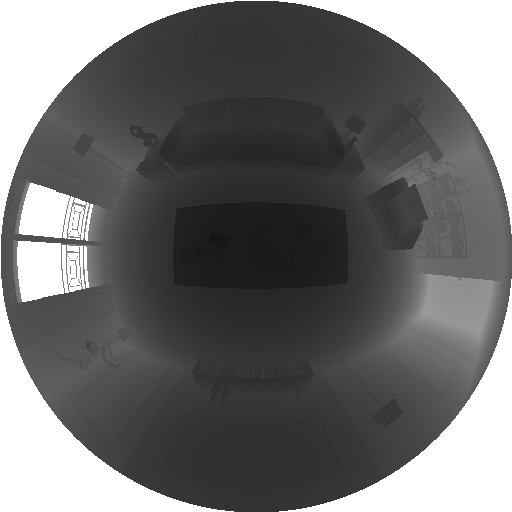

| Non-central panorama RGB | Non-central panorama Semantic | Non-central panorama Depth |

On the other hand, due to the fast development of graphic engines such as Unreal Engine 4 [12], current works take advantage of virtual environments with realistic quality to generate perspective imagery. Simulators such as CARLA [13] and SYNTHIA [14] recreate outdoor scenarios. Works like [15] use the video game Grand Theft Auto V (GTA V) to obtain images with total knowledge of the camera pose, while [16] also obtaining semantic information and object detection for tracking applications. In the same vein, [18,19] obtain video sequences with semantic and depth information for the generation of autonomous driving datasets in different weather conditions and through different scenarios, from rural roads to city streets. These virtual realistic environments have helped to create large datasets of images and videos, mainly from outdoor scenarios, dedicated to autonomous driving. New approaches such as the OmniScape dataset [20] uses virtual environments to obtain omnidirectional images with semantic and depth information in order to create datasets for autonomous driving. However, most of these existing datasets only have outdoors images. There are very few synthetic indoor datasets [21] and most of them only have perspective images or equirectangular panoramas.

OmniSCV is a tool for generating datasets of omnidirectional images with semantic and depth information. The images are synthesized from a set of captures acquired in virtual realistic environments from Unreal Engine 4 through an interface plugin, UnrealCV [32]. Working on virtual environments allows to provide pixel-wise information about semantics and depth as well as perfect knowledge of the calibration parameters of the cameras. This allows the creation of ground-truth information with pixel precision for training learning algorithms and testing 3D vision approaches.

2. Models

A great variety of well-know omnidirectional models are implemented. Among the central projection models:

-

Equirectangular panorama

-

Cylindrical panorama

-

Fish-eye lenses:

-

Equiangular

-

Stereographic

-

Orthogonal

-

Equi-solid angle

-

-

Catadioptric systems:

-

Parabolic mirror

-

Hyperbolic mirror

-

Planar mirror

-

-

Scaramuzza's model

-

Kannala-Brandt's model

Among the non-central projection models:

-

Non-central panorama

-

Non-central catadioptric systems:

-

Conical mirror

-

Spherical mirror

-