| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Edom Moges | + 4255 word(s) | 4255 | 2021-01-05 05:17:47 |
| 2 | Camila Xu | Meta information modification | 4255 | 2021-01-08 09:30:44 |


Hydrological models are simplified representations of natural hydrological processes. These models are developed to understand processes, test hypotheses, and support water resources decision-making. However, because they simplify natural processes, they are inherently uncertain. Their uncertainty stems primarily from four sources: model structure, parameters, input data, and calibration data (observations). While parameter and structural uncertainties are related to both data information content and process conceptualization, input and calibration data uncertainties are a result of data information content. To enable improved process understanding and better decision-making, a systematic uncertainty analysis of all four sources is critical.

1. Introduction

Hydrological models are developed to understand processes, test hypotheses, and support decision-making. These models solve empirical and governing equations at different levels of complexity. Their complexity varies depending on how the governing equations are solved over different spatial configurations, such as lumped [1], semi-distributed [2], or fully distributed areas [3], as well as on the extent to which variables and processes are coupled [4][5]. Although model development has progressed in accounting for different processes and complexities, models remain simplifications of the actual hydrological processes.

Process simplification is a consequence of limited knowledge and data, imprecise measurements, and involvement of multiple scales and interactive processes [6][7][8][9]. These simplifications introduce uncertainty and make it an intrinsic property of any model [10][11][12]. Analyzing this uncertainty requires separate methodological treatments beyond model development. However, uncertainty analysis (UA) benefits hydrological modeling in (i) identifying model limitations and improvement strategies [13][14], (ii) guiding further data collection [15][16], and (iii) quantifying the uncertainty associated with model predictions.

Various UA techniques have been developed. These include generalized likelihood uncertainty estimation (GLUE) [17], differential evolution adaptive Metropolis (DREAM) [18], parameter estimation code (PEST) [19], Bayesian total error analysis (BATEA) [20], and multi-objective analysis (Borg) [21]. These techniques have been applied to water resources decisions such as water supply design [22], flood mapping [23][24], and hydropower plant evaluation [25]. However, a systematic review and categorization of these techniques, which would provide a much-needed guideline for selecting a suitable UA method, is lacking.

2. Sources of Hydrological Model Uncertainties

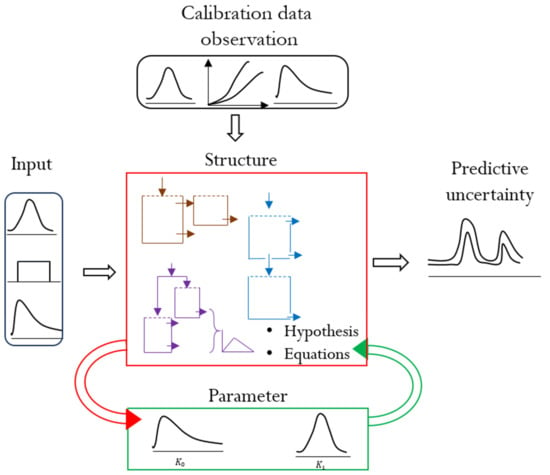

Hydrological model uncertainties stem from parameters, model structure, calibration (observation) and input data (Figure 1). In addition to these sources, uncertainties can stem from model initial and boundary conditions; however, these sources are not considered in this review. Hydrological models often contain parameterizations that are a result of conceptual simplifications, known as effective parameters [26]. Parameter uncertainty can be a result of the inability to estimate or measure these effective parameters that integrate and conceptualize processes [27]. In addition, parameter uncertainty can be a result of the natural process variability and observation errors. Although some model parameters, such as hydraulic conductivity, are measurable at the point scale, their values at the catchment scale vary significantly. Hence, the practical difficulty in measuring this variability can also lead to parameter uncertainty. On the other hand, due to errors in the calibration data, parameter uncertainty can be encountered even if a model is an exact representation of the hydrologic system. Therefore, inability to accurately estimate effective parameters, the challenge of measuring natural variability, and the existence of observation errors lead to parameter uncertainty. This uncertainty often manifests itself in model calibration through the lack of a single optimal set of parameters [28].

Figure 1. Different sources of hydrological model uncertainty. The arrows indicate the direction of interaction of the uncertainty sources. The center rectangle demonstrates different structures, hypotheses, and equations as examples of structural uncertainty. The top and left rectangles indicate input data distributions as sources of input forcing and calibration data uncertainties. The bottom rectangle shows parameter uncertainty using parameter distributions. The right-side sketch shows the resultant predictive uncertainty in a hydrograph as upper and lower predictive bands.

An exact representation of a hydrological system is challenging due to the absence of a unifying theory, limited knowledge and numerical and process simplifications. Such limitations constitute model structural uncertainty. Model structural uncertainty can also refer to alternative conceptualizations, such as the hydro-stratigraphy of the subsurface or the discretization of surface and process features [29][30][31]. Model performance is strongly dependent on model structures [32]. As a result, structural uncertainty is critical as it can render the model and quantification of other uncertainties useless [32][33][34]. Comparing structural and parameter uncertainty, Højberg et al. [35] showed that structural uncertainty is dominant, particularly when the model is used beyond its calibration sphere. Moreover, Rojas et al. [36] concluded that structural uncertainty may contribute up to 30% of the predictive uncertainty. Using the variance decomposition of streamflow estimates, Troin et al. [37] showed that model structure is the highest predictive uncertainty contributor.

Input forcing to hydrological models includes different hydro-meteorological, catchment, and subsurface data. Although data acquisition and processing have improved, the data used for model forcing are sparse and subject to gaps, imprecision, and uncertainty [38]. In most cases, input data involve interpolation, scaling, and derivation from other measurements, which result in an uncertainty range of 10–40% [38]. These inaccuracies in input data constitute input uncertainty. Failure to account for input uncertainty can lead to biased parameter estimation [20][39] and misleading water balance calculations [40]. Consequently, input uncertainty can interfere with the quantification of predictive uncertainty. Comparing input and model parameter uncertainty in a data-sparse region, Bárdossy et al. [41] showed that input uncertainty can be more severe than parameter uncertainty and suggested analyzing both uncertainties simultaneously to obtain a meaningful result.

Hydrological modeling often involves calibrating the model parameters and evaluating model predictions using observed state and/or flux variables, such as streamflow and groundwater levels. These observations are subject to measurement uncertainties similar to those of input data. Streamflow observations, for instance, are derived from rating curves that translate river stage measurements into discharge estimates. This translation not only propagates the random and systematic stage measurement errors but also passes on the structural (rating curve equation) and parameter uncertainties involved in calibrating the rating curve. In addition, discharge estimates suffer from interpolation and extrapolation errors of the rating curve, hysteresis, changes in site conditions (e.g., bed movement), and seasonal variations in measurement and flow conditions [42][43][44][45]. Extrapolation contributes the highest uncertainty [46][47][48]. Overall, discharge observation uncertainty ranges from 5% [49][50] to 25% when extrapolation is involved [51].

Predictive uncertainty is the combined effect of the above uncertainties on the model output. This uncertainty can be bias- or variance-dominated depending on the level of model complexity and the information content of the data, with bias dominant in simple models and variance dominant in complex models. Predictive uncertainty is usually heteroscedastic, that is, the magnitude of uncertainty varies with the magnitude of the model output (non-Gaussian residuals). In streamflow modeling, this is partly due to the limited number of high-flow observations (low-frequency events) available to constrain model calibration. Different data transformation schemes are used to address heteroscedasticity, including the Box–Cox transformation and the simple log transformation [47][48].
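As a minimal sketch of how such transformations act on residuals, the Python snippet below applies a Box-Cox and a log transformation to a small set of flows. The flow values, the 10% relative errors, and the exponent `lam = 0.3` are illustrative assumptions, not values taken from the reviewed studies.

```python
import numpy as np

def box_cox(q, lam):
    """Box-Cox transform of positive flows; lam = 0 reduces to the log transform."""
    q = np.asarray(q, dtype=float)
    if lam == 0:
        return np.log(q)
    return (q**lam - 1.0) / lam

# Hypothetical observed flows and simulations whose errors grow with flow magnitude
obs = np.array([1.0, 2.0, 5.0, 20.0, 100.0])
sim = obs * np.array([1.1, 0.9, 1.1, 0.9, 1.1])

raw_res = sim - obs                                # residuals in flow space
bc_res = box_cox(sim, 0.3) - box_cox(obs, 0.3)     # lam = 0.3 is an assumed value
log_res = box_cox(sim, 0.0) - box_cox(obs, 0.0)    # simple log transformation

def spread(res):
    """Ratio of largest to smallest absolute residual; 1 means constant magnitude."""
    return np.abs(res).max() / np.abs(res).min()

print(spread(raw_res), spread(bc_res), spread(log_res))
```

In this toy case the residual magnitude depends strongly on flow in the original space and much less after either transformation, which is the property a likelihood function assuming homoscedastic residuals relies on.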

3. Hydrological Model Uncertainty Analysis

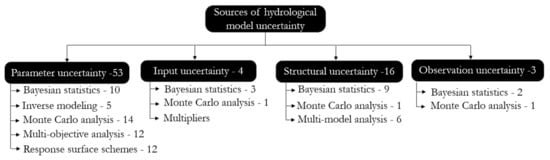

We identified six broad classes of UA methods: (i) Monte Carlo sampling, (ii) response surface-based schemes including polynomial chaos expansion and machine learning, (iii) multi-modeling approaches, (iv) Bayesian statistics, (v) multi-objective analysis, and (vi) least squares-based inverse modeling (Figure 2). Approximations and analytical solutions based on Taylor series are also used in UA; however, since such approaches are not widely applied, they are not included in this review.

Figure 2. Summary of sources of hydrological model uncertainty and broad techniques used to deal with them.

The numbers of papers reviewed in this section across the different sources and methods are shown in Figure 2. These numbers count pioneering articles, articles that discuss limitations and advantages of the methods, and articles that introduce method improvements; they do not count application-oriented articles. Because rainfall multipliers are embedded as part of model parameters, they are not reviewed as a unique method. In general, although the sample may be limited, the numbers approximate how different methods are being developed for each source and how these methods are being critiqued and advanced across studies.

The six broad classes below summarize the different UA methods (Table 1). The common thread across these methods is that they require many executions of the actual or surrogate model. This can translate into high computational time depending on (1) the model’s execution time, (2) whether the surrogate training requires multiple runs, and (3) whether the model execution is parallel.

Table 1. Summary of uncertainty analysis methods, sources of uncertainty, and color coded broader methodological categories.

| No. | UA Methods Reviewed | Applicable in Uncertainty Source | Method Classes | Web Page to Download the Software Tool | Reference Application |
|---|---|---|---|---|---|
| 1 | Monte Carlo | Parameter/input/structural uncertainty | Monte Carlo | http://www.uncertain-future.org.uk/?page_id=131 | [52] |
| 2 | GLUE | Parameter/structural uncertainty | Monte Carlo and behavioral threshold | http://www.uncertain-future.org.uk/?page_id=131 | [53] |
| 3 | FUSE | Structural uncertainty | Monte Carlo | https://github.com/cvitolo/fuse | [54] |
| 4 | Multi-objective | Parameter uncertainty | Multi-objective optimization | http://borgmoea.org/; https://faculty.sites.uci.edu/jasper/software/; http://moeaframework.org/index.html | [55][56][57] |
| 5 | Machine Learning | Predictive uncertainty | Machine Learning | https://scikit-learn.org/stable/ | [58] |
| 6 | PEST/UCODE | Parameter/predictive uncertainty | Least squares analysis | http://www.pesthomepage.org/; https://igwmc.mines.edu/ucode-2/ | [59][60][61] |
| 7 | Polynomial chaos expansion | Predictive/parameter uncertainty | Polynomial chaos expansion | https://www.uqlab.com/features; http://muq.mit.edu/; https://pypi.org/project/UQToolbox/; https://github.com/jonathf/chaospy | [62][63][64] |
| 8 | Ensemble averaging | Structural uncertainty | Multi-models | | [65] |
| 9 | BMA | Structural uncertainty | Multi-models plus Bayesian statistics | https://faculty.sites.uci.edu/jasper/software | [66] |
| 10 | HME | Structural uncertainty | Multi-models plus Bayesian statistics | https://faculty.sites.uci.edu/jasper/software | [67] |
| 11 | DREAM | Parameter/input uncertainty | Bayesian statistics | https://faculty.sites.uci.edu/jasper/software/ | [18] |
| 12 | BATEA and IBUNE | Input/structure/parameter uncertainty | Bayesian statistics | https://faculty.sites.uci.edu/jasper/software/ | [68][69] |

3.1. Parameter Uncertainty

Compared to the other sources of uncertainty, a wider range of techniques is employed to address parameter uncertainty (Figure 2). One of the most widely used parameter UA methods is generalized likelihood uncertainty estimation (GLUE) [17], which accounts for the equifinality hypothesis [28]. The equifinality hypothesis holds that multiple parameter sets can describe hydrological processes equally well, that is, produce the same final result. The approach augments Monte Carlo simulations with a behavioral threshold measure that distinguishes hydrologically tenable from untenable parameters (and structures). Although GLUE’s straightforward conceptualization and implementation have led to its widespread use, GLUE has been criticized for its subjectivity in choosing a behavioral threshold and its lack of a formal statistical foundation [70][71][72]. Extending GLUE, Beven [28] suggested the limits of acceptability approach, in which pre-defined uncertainty limits that objectively reflect both input and output observation uncertainties are used as the measure of acceptability rather than subjective behavioral thresholds. Although it is not as widely used as GLUE, this approach has been applied in, e.g., [73][74]. Its limited application is partly due to the lack of measurements that reflect the level of input and output uncertainties and their interactions [75][76][77].
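The GLUE workflow described above can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the two-parameter `toy_model`, the uniform priors, the Nash-Sutcliffe efficiency as the informal likelihood, and the behavioral threshold of 0.8. For brevity the bounds are plain percentiles of the behavioral simulations, whereas GLUE applications typically weight simulations by their likelihood.

```python
import numpy as np

rng = np.random.default_rng(0)

def toy_model(rain, k, c):
    """Hypothetical linear store: c scales rainfall, k is the drainage fraction."""
    s, q = 0.0, []
    for p in rain:
        s += c * p
        out = k * s
        s -= out
        q.append(out)
    return np.array(q)

def nse(sim, obs):
    """Nash-Sutcliffe efficiency, used here as the informal likelihood measure."""
    return 1.0 - np.sum((sim - obs) ** 2) / np.sum((obs - obs.mean()) ** 2)

# Synthetic "observations" generated with known parameters plus noise
rain = rng.gamma(0.5, 8.0, size=200)
obs = toy_model(rain, 0.3, 0.8) + rng.normal(0.0, 0.2, size=200)

# Step 1: Monte Carlo sampling from (assumed) uniform priors
n = 2000
k = rng.uniform(0.05, 0.95, n)
c = rng.uniform(0.2, 1.2, n)
sims = np.array([toy_model(rain, ki, ci) for ki, ci in zip(k, c)])
scores = np.array([nse(s, obs) for s in sims])

# Step 2: subjective behavioral threshold separates tenable parameter sets
threshold = 0.8
behavioral = scores > threshold

# Step 3: predictive bounds from the behavioral simulations (unweighted here)
lower, upper = np.percentile(sims[behavioral], [5, 95], axis=0)
print(behavioral.sum(), "behavioral sets; k range:",
      k[behavioral].min(), k[behavioral].max())
```

Changing `threshold` changes both the number of behavioral sets and the width of the bounds, which is the subjectivity that the criticisms cited above refer to.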

Following GLUE, numerous contributions have been made to formally quantify parameter uncertainty. These approaches primarily rely on Bayesian statistics [78][79][80][81]. The main advantage of the formal Bayesian statistics is that parameter uncertainty is not only quantified, but can also be reduced through the inclusion of prior knowledge. Although GLUE’s behavioral thresholds can achieve this goal, their formulation is subjective. The latest addition to the formal approach is DREAM [18], which has been applied widely. DREAM’s widespread application is derived from its unique capability of merging the differential evolution algorithm [82] with the adaptive Markov Chain Monte Carlo (MCMC) approach [83][84]. The differential evolution allows DREAM to efficiently explore non-linear and discontinuous parameter spaces, while the adaptive MCMC component keeps it within the parameter posterior space. Contrary to other MCMC schemes [85], DREAM uses multiple chains to exchange sampling space information rather than confirming the attainment of the stationary state. The primary challenge of DREAM and other Bayesian methods is identifying a likelihood function that results in homoscedastic residuals, justifying the use of a strong assumption for a formal likelihood function. This challenge is particularly difficult in disproportionately low flow dominated observations of streamflow coupled with a few high flows [28][47][86][87][88].
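To make the idea of a differential evolution proposal inside a multi-chain MCMC sampler concrete, the sketch below implements a basic differential evolution Markov chain update on a toy target. It is not DREAM itself: DREAM's additional adaptive components are omitted, and the Gaussian `log_post`, the number of chains, and the jump scale `gamma` are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

def log_post(theta):
    """Hypothetical log-posterior: independent unit Gaussians centred at (1, -2)."""
    return -0.5 * np.sum((theta - np.array([1.0, -2.0])) ** 2)

n_chains, n_dim, n_iter = 8, 2, 3000
gamma = 2.38 / np.sqrt(2 * n_dim)          # commonly used jump scale (assumed here)
chains = rng.uniform(-5, 5, size=(n_chains, n_dim))
logp = np.array([log_post(x) for x in chains])
samples = []

for it in range(n_iter):
    for i in range(n_chains):
        # Pick two other chains; their difference sets the jump direction and size
        others = [j for j in range(n_chains) if j != i]
        a, b = rng.choice(others, size=2, replace=False)
        proposal = (chains[i] + gamma * (chains[a] - chains[b])
                    + rng.normal(0.0, 1e-4, n_dim))
        lp = log_post(proposal)
        # Metropolis acceptance keeps the chains sampling the posterior
        if np.log(rng.uniform()) < lp - logp[i]:
            chains[i], logp[i] = proposal, lp
    samples.append(chains.copy())

# Discard the first half as burn-in and pool the chains
posterior = np.concatenate(samples[n_iter // 2:])
print(posterior.mean(axis=0), posterior.std(axis=0))
```

Because the jump is built from the current positions of other chains, the proposal scale and orientation adapt to the shape of the posterior without manual tuning, which is the information exchange between chains mentioned above.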

Least squares-based parameter inversion, as implemented in PEST [19] and the US Geological Survey computer program UCODE [61], is also used to quantify parameter uncertainty. These approaches rely on a linear approximation of the model and on Gaussian residuals. Besides estimating parameter uncertainty, these methods are also useful in determining the number of model parameters that can be estimated using the available data [89][90]. This is valuable in highly parameterized models for prioritizing data collection for model improvement. Furthermore, PEST expedites Monte Carlo-based UA through its null space Monte Carlo analysis and the use of singular value decomposition (SVD). This further allows PEST to be used in its SVD-assist mode with Tikhonov regularization. The SVD-assist approach coupled with parallelized Jacobian matrix calculation keeps parameter estimation close to observations (because of Tikhonov regularization) and makes it faster (due to parallelization plus SVD reducing the parameter space) when calibrating highly parameterized and ill-posed problems. The main limitations of PEST and other least squares-based UA methods are their assumptions of model linearity and Gaussian residuals. These assumptions have limited their use in surface water models riddled with threshold-based processes, discontinuities, and integer variables.
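The linear approximation behind least squares-based UA can be illustrated with a short, generic Python sketch. It is not the PEST or UCODE implementation: the exponential-decay `model`, the parameter estimate `theta_hat` (assumed to come from a previous calibration), and the noise level are hypothetical. The parameter covariance is approximated as the residual variance times the inverse of JᵀJ, where J is the Jacobian of model outputs with respect to parameters.

```python
import numpy as np

def model(theta, t):
    """Hypothetical two-parameter recession model, a * exp(-b * t)."""
    a, b = theta
    return a * np.exp(-b * t)

def jacobian(theta, t, eps=1e-6):
    """Forward finite-difference Jacobian of model outputs w.r.t. parameters."""
    base = model(theta, t)
    cols = []
    for j in range(len(theta)):
        step = np.array(theta, dtype=float)
        step[j] += eps
        cols.append((model(step, t) - base) / eps)
    return np.column_stack(cols)

rng = np.random.default_rng(2)
t = np.linspace(0.0, 5.0, 50)
theta_hat = np.array([10.0, 0.7])      # assumed result of a prior calibration
obs = model(theta_hat, t) + rng.normal(0.0, 0.1, t.size)

J = jacobian(theta_hat, t)
res = obs - model(theta_hat, t)
s2 = res @ res / (t.size - theta_hat.size)   # residual variance estimate
cov = s2 * np.linalg.inv(J.T @ J)            # linearized parameter covariance
std = np.sqrt(np.diag(cov))
ci95 = np.column_stack([theta_hat - 1.96 * std, theta_hat + 1.96 * std])

# Singular values of J indicate how many parameter combinations the data constrain
sing = np.linalg.svd(J, compute_uv=False)
print(ci95, sing)
```

Very small singular values relative to the largest one would flag parameter combinations that the data cannot inform. The confidence intervals are only as good as the linearity and Gaussian residual assumptions noted above.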

Multi-objective optimization (MOO) is another UA approach for parameter uncertainty. MOO-based UA has received substantial interest because equifinality precludes a single global optimal solution and because of the need for improved constraint of flux and store outputs in hydrological models [55][91][92]. MOO allows retrieving information from the increasingly available data against which model predictions can be compared [56][93]. It handles parameter uncertainty by conditioning model calibration on multiple complementary and competing objective functions. The functions can be defined using (1) multiple responses, such as metrics that measure the match to different segments of a hydrograph, (2) the same output variable at multiple sites, and (3) multi-variable outputs such as streamflow, soil moisture, and evapotranspiration [56][94][95]. The result of MOO is a set of trade-off solutions, referred to as non-dominated (Pareto) solutions, in which no member is outperformed by another member on all objectives simultaneously; improving one objective requires degrading at least one other.
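The notion of non-dominated solutions can be made concrete with a small Python sketch. The objective values below are hypothetical (for example, errors on high flows and low flows, both to be minimized) and the brute-force filter is only meant for small candidate sets.

```python
import numpy as np

def pareto_mask(costs):
    """Return a boolean mask of non-dominated rows, assuming all objectives are minimized."""
    costs = np.asarray(costs, dtype=float)
    mask = np.ones(costs.shape[0], dtype=bool)
    for i in range(costs.shape[0]):
        # A row dominates i if it is no worse in every objective and better in at least one
        dominates_i = (np.all(costs <= costs[i], axis=1)
                       & np.any(costs < costs[i], axis=1))
        mask[i] = not dominates_i.any()
    return mask

# Hypothetical objective values for five candidate parameter sets
costs = np.array([
    [0.2, 0.9],
    [0.4, 0.4],
    [0.9, 0.1],
    [0.5, 0.5],   # dominated by [0.4, 0.4]
    [0.9, 0.9],   # dominated by several candidates
])
front = pareto_mask(costs)
print(costs[front])
```

The first three candidates form the Pareto set: each trades a better value on one objective for a worse value on the other, so none can be discarded without a preference between objectives.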

Parameters are considered well identified when the optimized parameter uncertainty decreases substantially from the prior uncertainty, leading to reduced model prediction uncertainty. In MOO, the uncertainty range can be narrowed further by rejecting solutions that fail the model validation test. MOO also provides unique parameter uncertainty information through the shape of the Pareto front. For instance, a Pareto front that extends widely in each objective indicates high uncertainty in reproducing the parameterization of the processes [56][95][96]. Furthermore, MOO provides functionality beyond parameter UA, as the shape of the trade-off helps reveal model structural limitations [97]. However, this advantage might not be straightforward to realize because meaningful multi-objective trade-offs in hydrological modeling are infrequent [97].

One of the challenges in MOO is the lack of consistent criteria for choosing the number and type of objective functions and the inability to formally emphasize one criterion over another. Like GLUE, MOO is statistically informal, as it does not use Bayesian probability theory. Theoretically, the Pareto solutions and GLUE’s behavioral solutions may overlap; however, they are not necessarily equivalent. GLUE’s equifinality set consists of both dominated and non-dominated parameter sets, whereas the Pareto set contains only non-dominated sets [98]. Bayesian UA differs from multi-objective analysis in that Bayesian schemes usually have a single objective (a likelihood function) or an aggregated multi-objective function. Where a multi-objective configuration is employed, the Bayesian scheme results in a compromise solution [99], while MOO results in a full Pareto solution [98]. A detailed review of MOO can be found in [95].

Response surface methods are used to approximate predictive uncertainty that is primarily caused by parameter uncertainty. They employ a proxy function that emulates the original model’s parameter-output relationship, making the method blind to the model’s internal workings and to any outputs that are not emulated. The common proxy functions are truncated polynomial chaos expansion (PCE) [2][100][101] and machine learning schemes [102][103][104][105]. Since these proxies are computationally inexpensive, the approximation is suited to UA of complex models that demand long run times or are applied in real-time forecasting. Response surface methods train the approximating function on a subset of the original model’s parameter-output pairs. Consequently, one of the major challenges of these schemes is ensuring the accuracy of the approximating function. If the subset is not representative, the function can lead to a biased approximation. Identifying the right size of a representative subset is a topic of ongoing research. Furthermore, the accuracy of response surface methods depends on the smoothness of the original model’s parameter-output surface. Thus, the approximation is better for problems that are not highly non-linear and for response surfaces that are not riddled with thresholds and multiple local optima. Commonly, a validation test on a split sample is used to confirm the approximating function’s accuracy [2][105].

The typical approach to uncertainty quantification using response surfaces is to conduct Monte Carlo simulations with the approximating function. The resulting Monte Carlo-based distributions represent the predictive uncertainty. Unlike the machine learning alternative, PCE offers an analytical approximation of the uncertainty through an estimate of the variance, without the need for simulations [106]. Further reviews of PCE can be found in [107][108], while a review of machine learning approaches is provided in [109].
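The following Python sketch illustrates both routes on a one-parameter toy problem: Monte Carlo sampling of a trained surrogate, and the analytical mean and variance available from a polynomial expansion. The `expensive_model`, the uniform parameter range, the training size, and the polynomial degree are all assumptions. The surrogate is a least squares Legendre fit with NumPy, which for a uniformly distributed parameter plays the role of a one-dimensional truncated PCE; dedicated PCE libraries handle the general multi-parameter case.

```python
import numpy as np

rng = np.random.default_rng(3)

def expensive_model(theta):
    """Hypothetical stand-in for a slow simulator with a smooth response."""
    return np.exp(0.5 * theta) + 0.1 * theta**2

lo, hi = 0.0, 2.0                       # assumed uniform parameter range
train = rng.uniform(lo, hi, 30)         # small training subset of model runs
valid = rng.uniform(lo, hi, 30)         # split sample for validation

# Fit a degree-4 Legendre series; the domain is mapped internally to [-1, 1]
surrogate = np.polynomial.Legendre.fit(
    train, expensive_model(train), deg=4, domain=[lo, hi])
valid_rmse = np.sqrt(np.mean((surrogate(valid) - expensive_model(valid)) ** 2))

# Route 1: Monte Carlo on the cheap surrogate
draws = rng.uniform(lo, hi, 100_000)
mc_mean, mc_var = surrogate(draws).mean(), surrogate(draws).var()

# Route 2: analytical moments from the coefficients, using the fact that
# E[P_k^2] = 1 / (2k + 1) for Legendre polynomials under a uniform density on [-1, 1]
c = surrogate.coef
pce_mean = c[0]
pce_var = np.sum(c[1:] ** 2 / (2 * np.arange(1, c.size) + 1))

print(valid_rmse, (mc_mean, pce_mean), (mc_var, pce_var))
```

The two routes should agree up to Monte Carlo sampling error, and the split-sample error indicates whether the surrogate is accurate enough to trust either of them.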

Dotto et al. [110] compared Monte Carlo (GLUE), multi-objective (A Multi-Algorithm, Genetically Adaptive Multiobjective method, AMALGAM [57]), and Bayesian (Model-Independent Markov Chain Monte Carlo Analysis, MICA [111]) techniques for estimating parameter uncertainty. They found that all the techniques performed similarly, although GLUE was slow but the easiest to implement. Although the Bayesian technique was less suitable because it required homoscedastic residuals, it was the only one that avoided subjectivity. Finally, they indicated that method choice depends on the modeler’s experience, priorities, and problem complexity. Further, Keating et al. [112] found equivalent performance between the Bayesian DREAM and the least squares null-space Monte Carlo. Comparing GLUE and formal Bayesian techniques, Thi et al. [113] indicated that the formal Bayesian method is more efficient in yielding better identified parameters. However, Jin et al. [114] indicated that a stricter behavioral threshold can achieve similar identifiability at an increased computational cost [115]. A detailed comparison of formal Bayesian techniques against GLUE and its behavioral threshold choices can be found in [115].

3.2. Input Uncertainty

Hydrological models require different hydroclimatic input data. This review focuses on precipitation and its uncertainty. Traditionally, input uncertainty is addressed using a factor that multiplies input precipitation to correct for measurement uncertainty. This approach is relatively fast and easy, as the multiplication factor is either based on expert judgement or estimated along with the model parameters. However, the method is ad hoc and has no formal procedure for determining the multiplying factor. Beyond this approach, precipitation uncertainty can be studied using Monte Carlo (GLUE) and Bayesian statistics. The Monte Carlo approach estimates the rainfall multiplier along with the model parameters. The Bayesian techniques fall into two classes, model dependent and model independent, depending on whether the input uncertainty is estimated jointly with or independently of the model parameters. Balin et al. [116] demonstrated how input uncertainty can be estimated as a standalone quantity, separate from model parameters. They decoupled input uncertainty from the model by assuming a distribution that represents the “true” precipitation. This approach is advantageous because it isolates input uncertainty from the remaining uncertainty sources. However, it is limited by the difficulty of determining the “true” input distribution.

On the other hand, Bayesian total error analysis (BATEA [69]) and integrated Bayesian uncertainty estimator (IBUNE [68]) estimate input uncertainty along with model parameters. Hence, these approaches recover input uncertainty with parameter and structural uncertainties. Thereby, the estimated input uncertainty is dependent on the specific model structure and can vary with changes in model structures. Consequently, the input uncertainty might not only be a result of input measurements, but also structural and parameter uncertainties. These approaches can also be computationally expensive in distributed models, which require spatially and temporally varying inputs.

3.3. Structural Uncertainty

Multi-model averaging and ensemble techniques, which pool together alternative model structures, have been applied to quantify and reduce structural uncertainty. Hagedorn et al. [117] noted that the success of multi-model averaging is a result of error compensation, consistency, and reliability. Consistency refers to the lack of a single model that performs best regardless of circumstances, and reliability refers to averages performing better than the worst component of an ensemble. Moreover, Moges et al. [118] showed why a multi-model average performs better than a single model using statistical proof and empirical large-sample data.

Predictions from ensembles are combined using either equal or performance-based weights. Velázquez et al. [65] presented a study that benefits from equal weighting, while performance-based weighted multi-model predictions are discussed in [32][66][119]. A comparison of alternative model averaging techniques was presented in [120]. Equal weighting is advantageous in the absence of model weighting or discrimination criteria and when component models perform similarly. However, when the models’ performances differ significantly and each model specializes in simulating certain components of the hydrological processes, using variable weights provides better predictions.
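The contrast between equal and performance-based weighting can be sketched as follows. The three synthetic simulations and the inverse mean squared error weighting rule are assumptions made for illustration; this is one simple performance-based scheme and not the method of any particular cited study.

```python
import numpy as np

rng = np.random.default_rng(4)
obs = 5.0 + np.sin(np.linspace(0.0, 6.0, 100))

# Hypothetical simulations from three alternative model structures
sims = np.vstack([
    obs + rng.normal(0.0, 0.2, obs.size),         # accurate structure
    obs + rng.normal(0.0, 0.5, obs.size),         # noisier structure
    obs + 1.0 + rng.normal(0.0, 0.5, obs.size),   # biased structure
])

mse = np.mean((sims - obs) ** 2, axis=1)

# Equal weights versus weights proportional to inverse MSE (assumed rule)
equal_w = np.full(len(sims), 1.0 / len(sims))
perf_w = (1.0 / mse) / np.sum(1.0 / mse)

equal_avg = equal_w @ sims
perf_avg = perf_w @ sims

def rmse(sim):
    return np.sqrt(np.mean((sim - obs) ** 2))

print(perf_w, rmse(equal_avg), rmse(perf_avg))
```

Here the component models differ strongly in skill, so the performance-based average is closer to the observations. In practice the weights would be estimated on a calibration period and evaluated on independent data, and with similarly performing models the two averages would be nearly identical.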

One of the most widely used weighted multi-model averaging techniques is Bayesian model averaging (BMA) [121][122][123]. BMA and similar Bayesian averaging techniques are advantageous because their weights are interpretable and derived from model posterior performance, which combines the model’s ability to fit observations with experts’ prior knowledge. Despite its widespread application, BMA has limitations in addressing structural uncertainty. For instance, even though model performance varies across hydrograph segments [124], runoff seasons [14], and catchment circumstances [67], BMA assigns constant weights to component models. Indeed, BMA’s performance improved when component models were weighted differently for different quantiles of a hydrograph [124]. In contrast, the hierarchical mixture of experts (HME) [67], an extension of BMA, can assign different weights to different models depending on the dominant processes governing different segments of a hydrograph. This flexibility makes HME useful in dealing with structural uncertainty when model performances vary.

Hydrology involves governing physical principles and established process signatures that need to be respected. As a result, any statistically based multi-model averaging techniques need to be critically streamlined so that they respect hydrological principles. In this regard, Gupta et al. [125] stressed the application of diagnostic modeling. Similarly, Sivapalan et al. [126] argued the importance of parsimony and incorporation of dominant processes in model structural development. To this end, Moges et al. [127] streamlined HME by coupling it with hydrological signatures that diagnose model inadequacy. Such approaches can be explored to characterize catchment hydrology while avoiding disparities between statistical success and process description.

Structural uncertainty has also been addressed in the framework for understanding structural errors (FUSE [54]), which explores structural uncertainty through shuffling and combination of parts of alternative hydrological models. The parts constitute alternative formulations of processes. FUSE has similarity with Monte Carlo (or Bootstrap) methods. However, the samples in FUSE are components of a model representing the different alternative process conceptualizations. Further, given the ease of parallelization, FUSE is practical to address structural uncertainty.

The chief criticism of all multi-modeling UA is the limitation of identifying and incorporating the entire span of feasible model structures. Although the above methods can handle any number of structures, the practical inability to identify and explore the entire structural space constrains the scope of the investigation. As a result, full examination of structural uncertainty is limited.

3.4. Calibration Data Uncertainty

Although different calibration data are used to constrain hydrological models, streamflow observation uncertainty is the one commonly addressed in the literature. Hence, this subsection focuses on streamflow observation uncertainty. The methodologies used to address observation uncertainty include Monte Carlo simulations to estimate the rating curve uncertainty, use of the upper and lower bounds of the rating curve estimates, and Bayesian techniques. The first method propagates observation uncertainty to parameter and/or predictive uncertainty estimates through repeated calibration of the hydrologic model [40], making the method computationally intensive. In the second approach, only the upper and lower rating curve uncertainty bounds are used to derive the corresponding upper and lower bounds of predictive uncertainty [128]. This approach is computationally efficient, but it does not exploit the full information contained in the rating curve uncertainty. To make better use of the rating curve uncertainty, McMillan et al. [129] suggested deriving an informal likelihood function that accounts for the entire rating curve uncertainty. This technique allowed them to apply Bayesian statistics and use the rating curve information to analyze observation uncertainty. In contrast, Sikorska and Renard [130] used a formal Bayesian technique in which the rating curve parameters and the hydrological model parameters are inferred simultaneously by directly using the stage measurements instead of the streamflow estimates.
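The first approach, Monte Carlo sampling of the rating curve, can be sketched as below. The power-law form Q = a(h − h0)^b is a commonly used rating curve equation, but the parameter distributions, the fixed cease-to-flow stage `h0`, and the stage values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)

def rating_curve(stage, a, b, h0):
    """Power-law rating curve Q = a * (h - h0)^b, with zero flow below h0."""
    return a * np.clip(stage - h0, 0.0, None) ** b

stage = np.array([0.5, 1.0, 2.0, 4.0])   # hypothetical stage readings (m)
n = 5000
a = rng.normal(10.0, 0.5, n)             # assumed uncertainty in the coefficient
b = rng.normal(1.8, 0.1, n)              # assumed uncertainty in the exponent
h0 = 0.1                                 # assumed known cease-to-flow stage

# One discharge realization per sampled parameter pair: shape (n, number of stages)
q = rating_curve(stage[None, :], a[:, None], b[:, None], h0)
q_lo, q_med, q_hi = np.percentile(q, [2.5, 50, 97.5], axis=0)
rel_width = (q_hi - q_lo) / q_med

print(np.round(q_med, 1), np.round(rel_width, 2))
```

In the full procedure each discharge realization would be used to recalibrate the hydrological model, which is what makes the method computationally intensive. The second approach would instead keep only `q_lo` and `q_hi`. With these assumed distributions the relative width of the band is larger at the highest stage than near the middle of the range, consistent with the dominant role of extrapolation noted earlier.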

Observation uncertainty influences predictive uncertainty. McMillan et al. [129] showed that accounting for discharge observation uncertainty improves model predictive uncertainty compared to a model that ignores it, while Beven and Smith [77] demonstrated the disinformation emanating from observation uncertainties. Aronica et al. [128] showed how different rating curve realizations lead to different predictive uncertainties, whereas Engeland et al. [40] concluded that considering or not considering observation uncertainty leads to different parameter uncertainties and different interactions among structure, parameter, and predictive uncertainties. These studies indicate the significance of observation uncertainty and the need to account for it.

3.5. Predictive Uncertainty

The resultant uncertainty estimate of a model output is predictive uncertainty. Predictive uncertainty can be quantified by propagating parameter, structure and input uncertainties to the model output. This propagation is mostly performed by Monte Carlo simulation of parameter, structure, and input uncertainties. Predictive uncertainty can be reduced using different machine learning schemes [58][131]. Although their physical process realism needs validation, these schemes reduce predictive uncertainty as they can learn and identify patterns in the model residuals. Besides, to reduce predictive uncertainty, it is important to disentangle and understand the propagation of the different sources of uncertainties.

References

- Bergström, S. Development and Application of a Conceptual Runoff Model for Scandinavian Catchments; SMHI Norrköping: Norrköping, Sweden, 1976. [Google Scholar]

- Hossain, F.; Anagnostou, E.N. Assessment of a stochastic interpolation based parameter sampling scheme for efficient uncertainty analyses of hydrologic models. Comput. Geosci. 2005, 31, 497–512. [Google Scholar] [CrossRef]

- Abbott, M.B.; Bathurst, J.C.; Cunge, J.A.; O’Connell, P.E.; Rasmussen, J. An introduction to the European Hydrological System—Systeme Hydrologique Europeen, “SHE”, 1: History and philosophy of a physically-based, distributed modelling system. J. Hydrol. 1986, 87, 45–59. [Google Scholar] [CrossRef]

- Markstrom, S.L.; Niswonger, R.G.; Regan, R.S.; Prudic, D.E.; Barlow, P.M. GSFLOW—Coupled Ground-Water and Surface-Water Flow Model Based on the Integration of the Precipitation-Runoff Modeling System (PRMS) and the Modular Ground-Water Flow Model (MODFLOW-2005); U.S. Department of the Interior: Washington, DC, USA; U.S. Geological Survey: Washington, DC, USA, 2008. [Google Scholar]

- Kollet, S.; Maxwell, R.M. Integrated surface–groundwater flow modeling: A free-surface overland flow boundary condition in a parallel groundwater flow model. Adv. Water Resour. 2006, 29, 945–958. [Google Scholar] [CrossRef]

- Gupta, H.V.; Beven, K.J.; Wagener, T. Model Calibration and Uncertainty Estimation. In Encyclopedia of Hydrological Sciences; John Wiley & Sons, Ltd.: Chichester, UK, 2005. [Google Scholar]

- Kirchner, J.W. Getting the right answers for the right reasons: Linking measurements, analyses, and models to advance the science of hydrology. Water Resour. Res. 2006, 42. [Google Scholar] [CrossRef]

- Kirchner, J.W. Catchments as simple dynamical systems: Catchment characterization, rainfall-runoff modeling, and doing hydrology backward. Water Resour. Res. 2009, 45. [Google Scholar] [CrossRef]

- Blöschl, G.; Sivapalan, M. Scale issues in hydrological modelling: A review. Hydrol. Process. 1995, 9, 251–290. [Google Scholar] [CrossRef]

- Gan, T.Y.; Biftu, G.F. Effects of model complexity and structure, parameter interactions and data on watershed modeling. Water Sci. Appl. 2003, 6, 317–329. [Google Scholar] [CrossRef]

- Orth, R.; Staudinger, M.; Seneviratne, S.I.; Seibert, J.; Zappa, M. Does model performance improve with complexity? A case study with three hydrological models. J. Hydrol. 2015, 523, 147–159. [Google Scholar] [CrossRef]

- Li, H.; Xu, C.-Y.; Beldring, S. How much can we gain with increasing model complexity with the same model concepts? J. Hydrol. 2015, 527, 858–871. [Google Scholar] [CrossRef]

- Bulygina, N.; Gupta, H.V. Estimating the uncertain mathematical structure of a water balance model via Bayesian data assimilation. Water Resour. Res. 2009, 45. [Google Scholar] [CrossRef]

- Son, K.; Sivapalan, M. Improving model structure and reducing parameter uncertainty in conceptual water balance models through the use of auxiliary data. Water Resour. Res. 2007, 43. [Google Scholar] [CrossRef]

- Lu, D.; Ricciuto, D.; Evans, K. An efficient Bayesian data-worth analysis using a multilevel Monte Carlo method. Adv. Water Resour. 2018, 113, 223–235. [Google Scholar] [CrossRef]

- Neuman, S.P.; Xue, L.; Ye, M.; Lu, D. Bayesian analysis of data-worth considering model and parameter uncertainties. Adv. Water Resour. 2012, 36, 75–85. [Google Scholar] [CrossRef]

- Beven, K.J.; Binley, A.M. The future of distributed models: Model calibration and uncertainty prediction. Hydrol. Process. 1992, 6, 279–298. [Google Scholar] [CrossRef]

- Vrugt, J.A. Markov chain Monte Carlo simulation using the DREAM software package: Theory, concepts, and MATLAB implementation. Environ. Model. Softw. 2016, 75, 273–316. [Google Scholar] [CrossRef]

- Doherty, J. PEST, Model-Independent Parameter Estimation—User Manual, 5th ed.; with slight additions; Watermark Numerical Computing: Brisbane, Australia, 2010. [Google Scholar]

- Kavetski, D.; Franks, S.W.; Kuczera, G. Confronting input uncertainty in environmental modelling. Water Sci. Appl. 2003, 6, 49–68. [Google Scholar] [CrossRef]

- Hadka, D.; Reed, P. Borg: An Auto-Adaptive Many-Objective Evolutionary Computing Framework. Evol. Comput. 2013, 21, 231–259. [Google Scholar] [CrossRef]

- Muleta, M.K.; McMillan, J.; Amenu, G.G.; Burian, S. Bayesian Approach for Uncertainty Analysis of an Urban Storm Water Model and Its Application to a Heavily Urbanized Watershed. J. Hydrol. Eng. 2013, 18, 1360–1371. [Google Scholar] [CrossRef]

- Jung, Y.; Merwade, V. Uncertainty Quantification in Flood Inundation Mapping Using Generalized Likelihood Uncertainty Estimate and Sensitivity Analysis. J. Hydrol. Eng. 2012, 17, 507–520. [Google Scholar] [CrossRef]

- Rampinelli, C.G.; Knack, I.M.; Smith, T. Flood Mapping Uncertainty from a Restoration Perspective: A Practical Case Study. Water 2020, 12, 1948. [Google Scholar] [CrossRef]

- McMillan, H.K.; Seibert, J.; Petersen-Øverleir, A.; Lang, M.; White, P.; Snelder, T.; Rutherford, K.; Krueger, T.; Mason, R.; Kiang, J. How uncertainty analysis of streamflow data can reduce costs and promote robust decisions in water management applications. Water Resour. Res. 2017, 53, 5220–5228. [Google Scholar] [CrossRef]

- Beven, K. Changing ideas in hydrology—The case of physically-based models. J. Hydrol. 1989, 105, 157–172. [Google Scholar] [CrossRef]

- Wagener, T.; Gupta, H.V. Model identification for hydrological forecasting under uncertainty. Stoch. Environ. Res. Risk Assess. 2005, 19, 378–387. [Google Scholar] [CrossRef]

- Beven, K. A manifesto for the equifinality thesis. J. Hydrol. 2006, 320, 18–36. [Google Scholar] [CrossRef]

- Refsgaard, J.C.; Christensen, S.; Sonnenborg, T.O.; Seifert, D.; Højberg, A.L.; Troldborg, L. Review of strategies for handling geological uncertainty in groundwater flow and transport modeling. Adv. Water Resour. 2012, 36, 36–50. [Google Scholar] [CrossRef]

- Rojas, R.; Kahunde, S.; Peeters, L.; Batelaan, O.; Feyen, L.; Dassargues, A. Application of a multimodel approach to account for conceptual model and scenario uncertainties in groundwater modelling. J. Hydrol. 2010, 394, 416–435. [Google Scholar] [CrossRef]

- Zeng, X.; Wang, D.; Wu, J.; Zhu, X.; Wang, L.; Zou, X. Uncertainty Evaluation of a Groundwater Conceptual Model by Using a Multimodel Averaging Method. Hum. Ecol. Risk Assessment: Int. J. 2015, 21, 1246–1258. [Google Scholar] [CrossRef]

- Butts, M.B.; Payne, J.T.; Kristensen, M.; Madsen, H. An evaluation of the impact of model structure on hydrological modelling uncertainty for streamflow simulation. J. Hydrol. 2004, 298, 242–266. [Google Scholar] [CrossRef]

- Doherty, J.; Welter, D. A short exploration of structural noise. Water Resour. Res. 2010, 46. [Google Scholar] [CrossRef]

- Refsgaard, J.C.; Van Der Sluijs, J.P.; Brown, J.; Van Der Keur, P. A framework for dealing with uncertainty due to model structure error. Adv. Water Resour. 2006, 29, 1586–1597. [Google Scholar] [CrossRef]

- Højberg, A.L.; Refsgaard, J.C. Model uncertainty-parameter uncertainty versus conceptual models. Water Sci. Technol. 2005, 52, 177–186. [Google Scholar] [CrossRef] [PubMed]

- Rojas, R.; Feyen, L.; Dassargues, A. Conceptual model uncertainty in groundwater modeling: Combining generalized likelihood uncertainty estimation and Bayesian model averaging. Water Resour. Res. 2008, 44. [Google Scholar] [CrossRef]

- Troin, M.; Arsenault, R.; Martel, J.-L.; Brissette, F. Uncertainty of Hydrological Model Components in Climate Change Studies over Two Nordic Quebec Catchments. J. Hydrometeorol. 2018, 19, 27–46. [Google Scholar] [CrossRef]

- McMillan, H.; Westerberg, I.K.; Krueger, T. Hydrological data uncertainty and its implications. Wiley Interdiscip. Rev. Water 2018, 5, 1319. [Google Scholar] [CrossRef]

- Renard, B.; Kavetski, D.; Kuczera, G.; Thyer, M.; Franks, S.W. Understanding predictive uncertainty in hydrologic modeling: The challenge of identifying input and structural errors. Water Resour. Res. 2010, 46. [Google Scholar] [CrossRef]

- Engeland, K.; Steinsland, I.; Johansen, S.S.; Petersen-Øverleir, A.; Kolberg, S. Effects of uncertainties in hydrological modelling. A case study of a mountainous catchment in Southern Norway. J. Hydrol. 2016, 536, 147–160. [Google Scholar] [CrossRef]

- Bárdossy, A.; Anwar, F.; Seidel, J. Hydrological Modelling in Data Sparse Environment: Inverse Modelling of a Historical Flood Event. Water 2020, 12, 3242. [Google Scholar] [CrossRef]

- Kiang, J.E.; Cohn, T.A.; Mason, R.R., Jr. Quantifying Uncertainty in Discharge Measurements: A New Approach. In Proceedings of the World Environmental and Water Resources Congress 2009, Kansas City, MO, USA, 17–21 May 2009; American Society of Civil Engineers: Reston, VA, USA, 2009; pp. 1–8. [Google Scholar]

- McMillan, H.; Krueger, T.; Freer, J. Benchmarking observational uncertainties for hydrology: Rainfall, river discharge and water quality. Hydrol. Process. 2012, 26, 4078–4111. [Google Scholar] [CrossRef]

- Sevrez, D.; Renard, B.; Reitan, T.; Mason, R.; Sikorska, A.E.; McMillan, H.; Le Coz, J.; Belleville, A.; Coxon, G.; Freer, J.; et al. A Comparison of Methods for Streamflow Uncertainty Estimation. Water Resour. Res. 2018, 54, 7149–7176. [Google Scholar] [CrossRef]

- Sikorska, A.E.; Scheidegger, A.; Banasik, K.; Rieckermann, J. Considering rating curve uncertainty in water level predictions. Hydrol. Earth Syst. Sci. 2013, 17, 4415–4427. [Google Scholar] [CrossRef]

- Domeneghetti, A.; Castellarin, A.; Brath, A. Assessing rating-curve uncertainty and its effects on hydraulic model calibration. Hydrol. Earth Syst. Sci. 2012, 16, 1191–1202. [Google Scholar] [CrossRef]

- Evin, G.; Kavetski, D.; Thyer, M.; Kuczera, G. Pitfalls and improvements in the joint inference of heteroscedasticity and autocorrelation in hydrological model calibration. Water Resour. Res. 2013, 49, 4518–4524. [Google Scholar] [CrossRef]

- Martinez, G.F.; Gupta, H.V. Hydrologic consistency as a basis for assessing complexity of monthly water balance models for the continental United States. Water Resour. Res. 2011, 47. [Google Scholar] [CrossRef]

- Cong, S.; Xu, Y. The effect of discharge measurement error in flood frequency analysis. J. Hydrol. 1987, 96, 237–254. [Google Scholar] [CrossRef]

- WMO. Guide to Hydrological Practices Volume I Hydrology-from Measurement to Hydrological Information; WMO: Geneva, Switzerland, 2008. [Google Scholar]

- Di Baldassarre, G.; Montanari, A. Uncertainty in river discharge observations: A quantitative analysis. Hydrol. Earth Syst. Sci. 2009, 13, 913–921. [Google Scholar] [CrossRef]

- Breuer, L.; Huisman, J.A.; Frede, H.-G. Monte Carlo assessment of uncertainty in the simulated hydrological response to land use change. Environ. Model. Assess. 2006, 11, 209–218. [Google Scholar] [CrossRef]

- Beven, K.J. Environmental Modelling: An Uncertain Future: An Introduction to Techniques for Uncertainty Estimation in Environmental Prediction; Routledge: Abingdon, UK, 2009; ISBN 9780415457590. [Google Scholar]

- Clark, M.P.; Slater, A.G.; Rupp, D.E.; Woods, R.A.; Vrugt, J.A.; Gupta, H.V.; Wagener, T.; Hay, L.E. Framework for Understanding Structural Errors (FUSE): A modular framework to diagnose differences between hydrological models. Water Resour. Res. 2008, 44. [Google Scholar] [CrossRef]

- Yapo, P.O.; Gupta, H.V.; Sorooshian, S. Multi-objective global optimization for hydrologic models. J. Hydrol. 1998, 204, 83–97. [Google Scholar] [CrossRef]

- Gupta, H.V.; Sorooshian, S.; Yapo, P.O. Toward improved calibration of hydrologic models: Multiple and noncommensurable measures of information. Water Resour. Res. 1998, 34, 751–763. [Google Scholar] [CrossRef]

- Vrugt, J.A.; Robinson, B.A. Improved evolutionary optimization from genetically adaptive multimethod search. Proc. Natl. Acad. Sci. USA 2007, 104, 708–711. [Google Scholar] [CrossRef] [PubMed]

- Demissie, Y.; Valocchi, A.; Minsker, B.S.; Bailey, B.A. Integrating a calibrated groundwater flow model with error-correcting data-driven models to improve predictions. J. Hydrol. 2009, 364, 257–271. [Google Scholar] [CrossRef]

- Doherty, J. Ground Water Model Calibration Using Pilot Points and Regularization. Ground Water 2003, 41, 170–177. [Google Scholar] [CrossRef]

- Doherty, J.; Johnston, J.M. Methodologies for calibration and predictive analysis of a watershed model. JAWRA J. Am. Water Resour. Assoc. 2003, 39, 251–265. [Google Scholar] [CrossRef]

- Poeter, E.P.; Hill, M.C. UCODE, a computer code for universal inverse modeling. Comput. Geosci. 1999, 25, 457–462. [Google Scholar] [CrossRef]

- Sochala, P.; Le Maître, O. Polynomial Chaos expansion for subsurface flows with uncertain soil parameters. Adv. Water Resour. 2013, 62, 139–154. [Google Scholar] [CrossRef]

- Wu, B.; Zheng, Y.; Tian, Y.; Wu, X.; Yao, Y.; Han, F.; Liu, J.; Zheng, C. Systematic assessment of the uncertainty in integrated surface water-groundwater modeling based on the probabilistic collocation method. Water Resour. Res. 2014, 50, 5848–5865. [Google Scholar] [CrossRef]

- Feinberg, J.; Langtangen, H.P. Chaospy: An open source tool for designing methods of uncertainty quantification. J. Comput. Sci. 2015, 11, 46–57. [Google Scholar] [CrossRef]

- Velázquez, J.A.; Anctil, F.; Perrin, C. Performance and reliability of multimodel hydrological ensemble simulations based on seventeen lumped models and a thousand catchments. Hydrol. Earth Syst. Sci. 2010, 14, 2303–2317. [Google Scholar] [CrossRef]

- Vrugt, J.A.; Diks, C.G.H.; Clark, M.P. Ensemble Bayesian model averaging using Markov Chain Monte Carlo sampling. Environ. Fluid Mech. 2008, 8, 579–595. [Google Scholar] [CrossRef]

- Marshall, L.; Nott, D.; Sharma, A. Towards dynamic catchment modelling: A Bayesian hierarchical mixtures of experts framework. Hydrol. Process. 2007, 21, 847–861. [Google Scholar] [CrossRef]

- Ajami, N.K.; Duan, Q.; Sorooshian, S. An integrated hydrologic Bayesian multimodel combination framework: Confronting input, parameter, and model structural uncertainty in hydrologic prediction. Water Resour. Res. 2007, 43. [Google Scholar] [CrossRef]

- Kavetski, D.; Kuczera, G.; Franks, S.W. Bayesian analysis of input uncertainty in hydrological modeling: 2. Application. Water Resour. Res. 2006, 42. [Google Scholar] [CrossRef]

- Beven, K.; Smith, P.; Freer, J. Comment on “Hydrological forecasting uncertainty assessment: Incoherence of the GLUE methodology” by Pietro Mantovan and Ezio Todini. J. Hydrol. 2007, 338, 315–318. [Google Scholar] [CrossRef]

- Beven, K.; Smith, P.J.; Freer, J. So just why would a modeller choose to be incoherent? J. Hydrol. 2008, 354, 15–32. [Google Scholar] [CrossRef]

- Mantovan, P.; Todini, E. Hydrological forecasting uncertainty assessment: Incoherence of the GLUE methodology. J. Hydrol. 2006, 330, 368–381. [Google Scholar] [CrossRef]

- Liu, Y.; Freer, J.; Beven, K.J.; Matgen, P. Towards a limits of acceptability approach to the calibration of hydrological models: Extending observation error. J. Hydrol. 2009, 367, 93–103. [Google Scholar] [CrossRef]

- Blazkova, S.; Beven, K. A limits of acceptability approach to model evaluation and uncertainty estimation in flood frequency estimation by continuous simulation: Skalka catchment, Czech Republic. Water Resour. Res. 2009, 45. [Google Scholar] [CrossRef]

- Teweldebrhan, A.T.; Burkhart, J.F.; Schuler, T.V. Parameter uncertainty analysis for an operational hydrological model using residual-based and limits of acceptability approaches. Hydrol. Earth Syst. Sci. 2018, 22, 5021–5039. [Google Scholar] [CrossRef]

- Vrugt, J.A.; Beven, K.J. Embracing equifinality with efficiency: Limits of Acceptability sampling using the DREAM(LOA) algorithm. J. Hydrol. 2018, 559, 954–971. [Google Scholar] [CrossRef]

- Beven, K.J.; Smith, P.J. Concepts of Information Content and Likelihood in Parameter Calibration for Hydrological Simulation Models. J. Hydrol. Eng. 2015, 20, A4014010. [Google Scholar] [CrossRef]

- Bates, B.C.; Campbell, E.P. A Markov Chain Monte Carlo Scheme for parameter estimation and inference in conceptual rainfall-runoff modeling. Water Resour. Res. 2001, 37, 937–947. [Google Scholar] [CrossRef]

- Kuczera, G.; Parent, E. Monte Carlo assessment of parameter uncertainty in conceptual catchment models: The Metropolis algorithm. J. Hydrol. 1998, 211, 69–85. [Google Scholar] [CrossRef]

- Thiemann, M.; Trosset, M.; Gupta, H.; Sorooshian, S. Bayesian recursive parameter estimation for hydrologic models. Water Resour. Res. 2001, 37, 2521–2535. [Google Scholar] [CrossRef]

- Vrugt, J.A.; Ter Braak, C.J.F.; Gupta, H.V.; Robinson, B.A. Equifinality of formal (DREAM) and informal (GLUE) Bayesian approaches in hydrologic modeling? Stoch. Environ. Res. Risk Assess. 2008, 23, 1011–1026. [Google Scholar] [CrossRef]

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359. [Google Scholar] [CrossRef]

- Haario, H.; Saksman, E.; Tamminen, J. Adaptive proposal distribution for random walk Metropolis algorithm. Comput. Stat. 1999, 14, 375–395. [Google Scholar] [CrossRef]

- Hastings, W.K. Monte Carlo Sampling Methods Using Markov Chains and Their Applications. Biometrika 1970, 57, 97–109. [Google Scholar] [CrossRef]

- Viglione, A.; Hosking, J.R.M.; Francesco, L.; Alan, M.; Eric, G.; Olivier, P.; Jose, S.; Chi, N.; Karine, H. Package ‘nsRFA’. Available online: https://cran.r-project.org/web/packages/nsRFA/nsRFA.pdf (accessed on 13 July 2017).

- Evin, G.; Thyer, M.; Kavetski, D.; McInerney, D.; Kuczera, G. Comparison of joint versus postprocessor approaches for hydrological uncertainty estimation accounting for error autocorrelation and heteroscedasticity. Water Resour. Res. 2014, 50, 2350–2375. [Google Scholar] [CrossRef]

- Smith, T.; Sharma, A.; Marshall, L.; Mehrotra, R.; Sisson, S. Development of a formal likelihood function for improved Bayesian inference of ephemeral catchments. Water Resour. Res. 2010, 46. [Google Scholar] [CrossRef]

- Smith, T.; Marshall, L.; Sharma, A. Modeling residual hydrologic errors with Bayesian inference. J. Hydrol. 2015, 528, 29–37. [Google Scholar] [CrossRef]

- Doherty, J.; Hunt, R.J. Two statistics for evaluating parameter identifiability and error reduction. J. Hydrol. 2009, 366, 119–127. [Google Scholar] [CrossRef]

- Stigter, J.D.; Beck, M.; Molenaar, J. Assessing local structural identifiability for environmental models. Environ. Model. Softw. 2017, 93, 398–408. [Google Scholar] [CrossRef]

- Reed, P.; Minsker, B.S.; Goldberg, D.E. Simplifying multiobjective optimization: An automated design methodology for the nondominated sorted genetic algorithm-II. Water Resour. Res. 2003, 39. [Google Scholar] [CrossRef]

- Vrugt, J.A.; Gupta, H.V.; Bastidas, L.A.; Bouten, W.; Sorooshian, S. Effective and efficient algorithm for multiobjective optimization of hydrologic models. Water Resour. Res. 2003, 39. [Google Scholar] [CrossRef]

- Yassin, F.; Razavi, S.; Wheater, H.; Sapriza-Azuri, G.; Davison, B.; Pietroniro, A. Enhanced identification of a hydrologic model using streamflow and satellite water storage data: A multicriteria sensitivity analysis and optimization approach. Hydrol. Process. 2017, 31, 3320–3333. [Google Scholar] [CrossRef]

- Madsen, H. Parameter estimation in distributed hydrological catchment modelling using automatic calibration with multiple objectives. Adv. Water Resour. 2003, 26, 205–216. [Google Scholar] [CrossRef]

- Efstratiadis, A.; Koutsoyiannis, D. One decade of multi-objective calibration approaches in hydrological modelling: A review. Hydrol. Sci. J. 2010, 55, 58–78. [Google Scholar] [CrossRef]

- Yassin, F.; Razavi, S.; ElShamy, M.; Davison, B.; Sapriza-Azuri, G.; Wheater, H. Representation and improved parameterization of reservoir operation in hydrological and land-surface models. Hydrol. Earth Syst. Sci. 2019, 23, 3735–3764. [Google Scholar] [CrossRef]

- Kollat, J.B.; Reed, P.M.; Wagener, T. When are multiobjective calibration trade-offs in hydrologic models meaningful? Water Resour. Res. 2012, 48. [Google Scholar] [CrossRef]

- Engeland, K.; Braud, I.; Gottschalk, L.; Leblois, E. Multi-objective regional modelling. J. Hydrol. 2006, 327, 339–351. [Google Scholar] [CrossRef]

- Tang, Y.; Marshall, L.; Sharma, A.; Ajami, H. A Bayesian alternative for multi-objective ecohydrological model specification. J. Hydrol. 2018, 556, 25–38. [Google Scholar] [CrossRef]

- Isukapalli, S.S.; Roy, A.; Georgopoulos, P.G. Stochastic Response Surface Methods (SRSMs) for Uncertainty Propagation: Application to Environmental and Biological Systems. Risk Anal. 1998, 18, 351–363. [Google Scholar] [CrossRef]

- Tran, V.N.; Kim, J. Quantification of predictive uncertainty with a metamodel: Toward more efficient hydrologic simulations. Stoch. Environ. Res. Risk Assess. 2019, 33, 1453–1476. [Google Scholar] [CrossRef]

- Shrestha, D.L.; Kayastha, N.; Solomatine, D.P.; Price, R. Encapsulation of parametric uncertainty statistics by various predictive machine learning models: MLUE method. J. Hydroinform. 2014, 16, 95–113. [Google Scholar] [CrossRef]

- Solomatine, D.P.; Shrestha, D.L. A novel method to estimate model uncertainty using machine learning techniques. Water Resour. Res. 2009, 45. [Google Scholar] [CrossRef]

- Khu, S.-T.; Werner, M.G.F. Reduction of Monte-Carlo simulation runs for uncertainty estimation in hydrological modelling. Hydrol. Earth Syst. Sci. 2003, 7, 680–692. [Google Scholar] [CrossRef]

- Zhang, X.; Srinivasan, R.; Van Liew, M. Approximating SWAT Model Using Artificial Neural Network and Support Vector Machine. JAWRA J. Am. Water Resour. Assoc. 2009, 45, 460–474. [Google Scholar] [CrossRef]

- Shi, L.; Yang, J.; Zhang, D.; Li, H. Probabilistic collocation method for unconfined flow in heterogeneous media. J. Hydrol. 2009, 365, 4–10. [Google Scholar] [CrossRef]

- Kaintura, A.; Dhaene, T.; Spina, D. Review of Polynomial Chaos-Based Methods for Uncertainty Quantification in Modern Integrated Circuits. Electronics 2018, 7, 30. [Google Scholar] [CrossRef]

- Smith, R.C. Uncertainty Quantification: Theory, Implementation, and Applications; SIAM: Philadelphia, PA, USA, 2013; ISBN 9781611973211. [Google Scholar]

- Razavi, S.; Tolson, B.A.; Burn, D.H. Review of surrogate modeling in water resources. Water Resour. Res. 2012, 48. [Google Scholar] [CrossRef]

- Dotto, C.B.S.; Mannina, G.; Kleidorfer, M.; Vezzaro, L.; Henrichs, M.; McCarthy, D.T.; Freni, G.; Rauch, W.; Deletic, A. Comparison of different uncertainty techniques in urban stormwater quantity and quality modelling. Water Res. 2012, 46, 2545–2558. [Google Scholar] [CrossRef]

- Doherty, J. MICA: Model Independent Markov Chain Monte Carlo Analysis; Watermark Numerical Computing: Brisbane, Australia, 2003. [Google Scholar]

- Keating, E.H.; Doherty, J.; Vrugt, J.A.; Kang, Q. Optimization and uncertainty assessment of strongly nonlinear groundwater models with high parameter dimensionality. Water Resour. Res. 2010, 46. [Google Scholar] [CrossRef]

- Thi, P.C.; Ball, J.; Dao, N.H. Uncertainty Estimation Using the Glue and Bayesian Approaches in Flood Estimation: A case Study—Ba River, Vietnam. Water 2018, 10, 1641. [Google Scholar] [CrossRef]

- Jin, X.; Xu, C.-Y.; Zhang, Q.; Singh, V.P. Parameter and modeling uncertainty simulated by GLUE and a formal Bayesian method for a conceptual hydrological model. J. Hydrol. 2010, 383, 147–155. [Google Scholar] [CrossRef]

- Li, L.; Xia, J.; Xu, C.-Y.; Singh, V.P. Evaluation of the subjective factors of the GLUE method and comparison with the formal Bayesian method in uncertainty assessment of hydrological models. J. Hydrol. 2010, 390, 210–221. [Google Scholar] [CrossRef]

- Balin, D.; Lee, H.; Rode, M. Is point uncertain rainfall likely to have a great impact on distributed complex hydrological modeling? Water Resour. Res. 2010, 46. [Google Scholar] [CrossRef]

- Hagedorn, R.; Doblas-Reyes, F.J.; Palmer, T.N. The rationale behind the success of multi-model ensembles in seasonal forecasting—I. Basic concept. Tellus A Dyn. Meteorol. Oceanogr. 2005, 57, 219–233. [Google Scholar] [CrossRef]

- Moges, E.; Jared, A.; Demissie, Y.; Yan, E.; Mortuza, R.; Mahat, V. Bayesian Augmented L-Moment Approach for Regional Frequency Analysis. In Proceedings of the World Environmental and Water Resources Congress 2018, Minneapolis, MN, USA, 3–7 June 2018; pp. 165–180. [Google Scholar]

- Georgakakos, K.; Seo, D.-J.; Gupta, H.; Schaake, J.; Butts, M.B. Towards the characterization of streamflow simulation uncertainty through multimodel ensembles. J. Hydrol. 2004, 298, 222–241. [Google Scholar] [CrossRef]

- Arsenault, R.; Gatien, P.; Renaud, B.; Brissette, F.; Martel, J.-L. A comparative analysis of 9 multi-model averaging approaches in hydrological continuous streamflow simulation. J. Hydrol. 2015, 529, 754–767. [Google Scholar] [CrossRef]

- Raftery, A.E.; Madigan, D.; Hoeting, J.A. Bayesian Model Averaging for Linear Regression Models. J. Am. Stat. Assoc. 1997, 92, 179–191. [Google Scholar] [CrossRef]

- Hoeting, J.A.; Madigan, D.; Raftery, A.E.; Volinsky, C.T. Bayesian model averaging: A tutorial (with comments by M. Clyde, David Draper and E. I. George, and a rejoinder by the authors). Stat. Sci. 1999, 14, 382–417. [Google Scholar]

- Raftery, A.E.; Gneiting, T.; Balabdaoui, F.; Polakowski, M. Using Bayesian Model Averaging to Calibrate Forecast Ensembles. Mon. Weather Rev. 2005, 133, 1155–1174. [Google Scholar] [CrossRef]

- Duan, Q.; Ajami, N.K.; Gao, X.; Sorooshian, S. Multi-model ensemble hydrologic prediction using Bayesian model averaging. Adv. Water Resour. 2007, 30, 1371–1386. [Google Scholar] [CrossRef]

- Gupta, H.V.; Wagener, T.; Liu, Y. Reconciling theory with observations: Elements of a diagnostic approach to model evaluation. Hydrol. Process. 2008, 22, 3802–3813. [Google Scholar] [CrossRef]

- Sivapalan, M.; Blöschl, G.; Zhang, L.; Vertessy, R. Downward approach to hydrological prediction. Hydrol. Process. 2003, 17, 2101–2111. [Google Scholar] [CrossRef]

- Moges, E.; Demissie, Y.; Li, H. Hierarchical mixture of experts and diagnostic modeling approach to reduce hydrologic model structural uncertainty. Water Resour. Res. 2016, 52, 2551–2570. [Google Scholar] [CrossRef]

- Aronica, G.T.; Candela, A.; Viola, F.; Cannarozzo, M. Influence of Rating Curve Uncertainty on Daily Rainfall-Runoff Model Predictions; IAHS Publ.: Wallingford, UK, 2005. [Google Scholar]

- McMillan, H.; Freer, J.; Pappenberger, F.; Krueger, T.; Clark, M. Impacts of uncertain river flow data on rainfall-runoff model calibration and discharge predictions. Hydrol. Process. 2010, 24, 1270–1284. [Google Scholar] [CrossRef]

- Sikorska, A.E.; Renard, B. Calibrating a hydrological model in stage space to account for rating curve uncertainties: General framework and key challenges. Adv. Water Resour. 2017, 105, 51–66. [Google Scholar] [CrossRef]

- Xu, T.; Valocchi, A.J. A Bayesian approach to improved calibration and prediction of groundwater models with structural error. Water Resour. Res. 2015, 51, 9290–9311. [Google Scholar] [CrossRef]