

Cite

Verykokou, S.; Ioannidis, C. Image Matching: A Comprehensive Overview of Conventional and Learning-Based Methods. Encyclopedia, 06 January 2025. Available online: https://encyclopedia.pub/entry/57570 (accessed on 07 February 2026).

This entry provides a comprehensive overview of methods used in image matching. It starts by introducing area-based matching, outlining well-established techniques for determining correspondences. Then, it presents the concept of feature-based image matching, covering feature point detection and description issues, including both handcrafted and learning-based operators. Brief presentations of frequently used detectors and descriptors are included, followed by a presentation of descriptor matching and outlier rejection techniques. Finally, the entry provides a brief overview of relational matching.

Keywords: photogrammetry; computer vision; image matching; feature-based matching; area-based matching; relational matching; handcrafted operators; learning-based operators; outlier rejection

The term “image matching” refers to the automatic establishment of correspondences between points, gray values, features, relations, or other entities in overlapping images. It is a critical step in many photogrammetric and computer vision applications, such as the automatic detection of tie points for aerial triangulation, photo-triangulation, or Structure from Motion (SfM) [1], relative orientation [2], the automatic generation of digital terrain/surface models (DTMs/DSMs) [3], and augmented reality [4].
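As a minimal illustration of how correspondences between gray values can be found, the sketch below compares a small template window against every window of an image using zero-mean normalized cross-correlation (NCC), one common similarity measure in area-based matching; the entry itself does not prescribe this particular measure. The function names (`ncc`, `match_template`), the toy 5x5 image, and the brute-force search are assumptions chosen for clarity, not an efficient or authoritative implementation.

```python
import math


def ncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation of two equal-size patches (lists of rows)."""
    a = [v for row in patch_a for v in row]
    b = [v for row in patch_b for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    # A perfectly flat (textureless) window has no defined correlation.
    return numerator / denominator if denominator > 0 else 0.0


def match_template(image, template):
    """Exhaustively slide the template over the image; return (best (row, col), best score)."""
    th, tw = len(template), len(template[0])
    best_pos, best_score = None, -math.inf
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            window = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(window, template)
            if score > best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score


# Toy image and a 3x3 template taken from it at (1, 1), then radiometrically
# altered (gain 2, offset 10) as if observed under a different exposure.
image = [
    [10, 12, 11, 13, 10],
    [11, 50, 80, 30, 12],
    [12, 70, 20, 90, 11],
    [10, 40, 60, 25, 13],
    [11, 12, 10, 11, 12],
]
template = [[2 * v + 10 for v in row[1:4]] for row in image[1:4]]

pos, score = match_template(image, template)
print(pos, round(score, 6))  # (1, 1) 1.0
```

Because NCC subtracts the window means and normalizes by their spread, the template still scores 1.0 at its true position even though its brightness and contrast were changed, which is one reason such normalized measures are favored over raw intensity differences.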

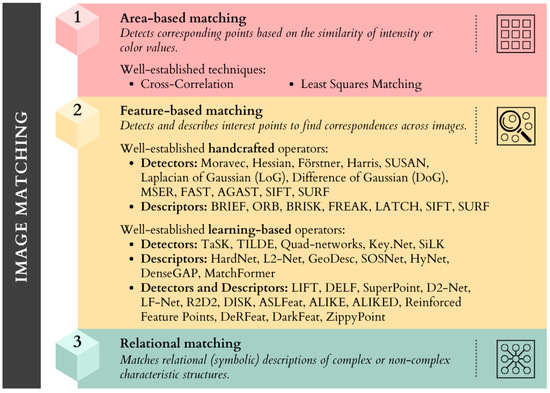

Three main categories of image matching can be distinguished, depending on the entity being matched: (i) area-based matching, (ii) feature-based matching, and (iii) relational matching [5]. Area-based matching identifies correspondences based on the similarity of intensity or color values within image windows. Feature-based matching focuses on detecting and describing interest points that are robust to changes in scale, rotation, and illumination. Lastly, relational matching exploits symbolic relationships between image structures, which makes it well suited to template-based applications.

To provide a clear overview of the main techniques used in image matching, Figure 1 illustrates the three primary approaches, highlighting their fundamental principles, well-established techniques, and the representative examples addressed in this entry. Conventional methods, which rely on heuristic, manually designed procedures, are used in all three categories, whereas learning-based methods, which rely on machine learning, are mainly limited to feature-based matching.

Within feature-based matching, two subcategories can be distinguished: conventional approaches, which use handcrafted detectors and descriptors, and learning-based approaches, which train models to detect and describe features and therefore depend on the availability of high-quality training data. Handcrafted operators are manually designed algorithms that detect and describe distinctive features using predefined rules and mathematical models (e.g., corner detection, gradient analysis). Learning-based operators, by contrast, are trained on large datasets to learn feature representations and correspondences, which they then use to detect and describe features.

Figure 1. Overview of image matching methods, outlining well-established techniques and representative examples.
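Corner detection, cited above as a typical handcrafted technique, can be illustrated with a simplified Harris-style detector, which scores each pixel by R = det(M) - k·trace(M)², where M is the structure tensor of image gradients accumulated over a small window. The sketch below is a toy under stated assumptions, not a reference implementation: it uses central-difference gradients, an unweighted 3x3 window instead of the usual Gaussian weighting, the commonly quoted constant k = 0.04, and hypothetical helper names (`harris_response`, `detect_corners`).

```python
def harris_response(img, k=0.04, half_win=1):
    """Harris corner response R = det(M) - k * trace(M)^2 for every pixel.

    Simplifications (assumptions): central-difference gradients, zero gradient on
    the image border, and an unweighted (2*half_win+1)^2 window instead of a Gaussian.
    """
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            ix[r][c] = (img[r][c + 1] - img[r][c - 1]) / 2.0
            iy[r][c] = (img[r + 1][c] - img[r - 1][c]) / 2.0

    response = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            # Accumulate the structure tensor M = [[sxx, sxy], [sxy, syy]] over the window.
            sxx = syy = sxy = 0.0
            for rr in range(max(0, r - half_win), min(h, r + half_win + 1)):
                for cc in range(max(0, c - half_win), min(w, c + half_win + 1)):
                    gx, gy = ix[rr][cc], iy[rr][cc]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            response[r][c] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return response


def detect_corners(response, threshold):
    """Keep pixels whose response exceeds the threshold and is a 3x3 local maximum."""
    h, w = len(response), len(response[0])
    corners = []
    for r in range(h):
        for c in range(w):
            value = response[r][c]
            if value <= threshold:
                continue
            neighborhood = [response[rr][cc]
                            for rr in range(max(0, r - 1), min(h, r + 2))
                            for cc in range(max(0, c - 1), min(w, c + 2))]
            if value >= max(neighborhood):
                corners.append((r, c))
    return corners


# Toy image: a bright quadrant on a dark background whose single corner lies at (4, 4).
img = [[100 if r >= 4 and c >= 4 else 0 for c in range(10)] for r in range(10)]
R = harris_response(img)
print(detect_corners(R, threshold=1e6))  # [(4, 4)]
```

On this toy image the response is positive only near the quadrant's corner, negative along its straight edges (where only one gradient direction is present), and zero in flat areas; non-maximum suppression then keeps a single corner.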

This entry focuses on feature-based matching, the most commonly used approach, and also outlines the fundamentals of the other two approaches (area-based and relational matching). For feature-based matching, basic operators for detecting and describing interest points are presented, including both handcrafted and learning-based operators. In addition, the main methods for rejecting invalid correspondences (outliers) are presented, which help ensure an acceptable final matching result.
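RANSAC (random sample consensus) is one widely used technique for rejecting invalid correspondences. The following sketch is a simplified illustration under stated assumptions: the geometric model is a 2D similarity transform (rotation, uniform scale, translation), chosen because it can be solved exactly from two correspondences by writing points as complex numbers (q = a·p + b); practical pipelines usually estimate a homography or a fundamental/essential matrix instead. The threshold, iteration count, seed, and function names (`fit_similarity`, `ransac_similarity`) are hypothetical.

```python
import random


def fit_similarity(p1, p2, q1, q2):
    """Exact 2D similarity q = a*p + b from two correspondences (points as complex numbers).

    The complex factor a encodes rotation and uniform scale; b is the translation.
    """
    if p1 == p2:
        return None  # degenerate sample
    a = (q1 - q2) / (p1 - p2)
    b = q1 - a * p1
    return a, b


def ransac_similarity(src, dst, threshold=1.0, iterations=200, seed=0):
    """Return (best model, inlier indices) for matched point lists src[i] <-> dst[i]."""
    rng = random.Random(seed)
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    best_model, best_inliers = None, []
    for _ in range(iterations):
        i, j = rng.sample(range(len(p)), 2)  # minimal sample: two correspondences
        model = fit_similarity(p[i], p[j], q[i], q[j])
        if model is None:
            continue
        a, b = model
        # Consensus set: correspondences whose transfer error is within the threshold.
        inliers = [k for k in range(len(p)) if abs(a * p[k] + b - q[k]) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# Eight correct matches generated with a = 2j (rotation by 90 degrees, scale 2)
# and b = 10 + 5j, followed by two deliberately wrong matches (outliers).
src = [(0, 0), (1, 0), (0, 1), (2, 3), (4, 1), (3, 5), (6, 2), (5, 5), (1, 4), (7, 7)]
dst = [(10, 5), (10, 7), (8, 5), (4, 9), (8, 13), (0, 11), (6, 17), (0, 15), (40, -20), (-30, 25)]

model, inliers = ransac_similarity(src, dst)
print(inliers)  # [0, 1, 2, 3, 4, 5, 6, 7]
```

Each iteration fits the model to a random minimal sample and counts the correspondences that agree with it within the threshold; the model with the largest consensus set is kept, and the remaining matches are treated as outliers. In practice the model is usually re-estimated from all inliers (e.g., by least squares), a step omitted here for brevity.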

References

1. Verykokou, S.; Ioannidis, C. An Overview on Image-Based and Scanner-Based 3D Modeling Technologies. Sensors 2023, 23, 596.

2. Tang, L.; Heipke, C. Automatic Relative Orientation of Aerial Images. Photogramm. Eng. Remote Sens. 1996, 62, 47–55.

3. Rahmayudi, A.; Rizaldy, A. Comparison of Semi-Automatic DTM from Image Matching with DTM from LiDAR. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2016, 41, 373–380.

4. Verykokou, S.; Boutsi, A.M.; Ioannidis, C. Mobile Augmented Reality for Low-End Devices Based on Planar Surface Recognition and Optimized Vertex Data Rendering. Appl. Sci. 2021, 11, 8750.

5. Gruen, A. Development and Status of Image Matching in Photogrammetry. Photogramm. Rec. 2012, 27, 36–57.
