Shigaki, S.; Ando, N. Biological Experimental Setup Using Engineering Tools. Encyclopedia. Available online: https://encyclopedia.pub/entry/54960 (accessed on 13 January 2026).

Shigaki, S., & Ando, N. (2024, February 09). Biological Experimental Setup Using Engineering Tools. In Encyclopedia. https://encyclopedia.pub/entry/54960

Despite their diminutive neural systems, insects exhibit sophisticated adaptive behaviors in diverse environments. An insect receives various environmental stimuli through its sensory organs and selectively and rapidly integrates them to produce an adaptive motor output. Living organisms commonly have this sensory-motor integration, and attempts have been made to elucidate this mechanism biologically and reconstruct it through engineering.

adaptive behavior

insect

sensory-motor integration

insect on-board system

virtual reality system for an insect

1. Introduction

The recent shortage of workers caused by the declining birthrate and aging population has created demand for task automation and for systems that can replace human workers. According to a survey by the International Federation of Robotics, the industrial robotics market is expected to return to growth and reach record highs from 2021 onward, despite a period of stagnation caused by the spread of the novel coronavirus [1]. Industrial robots on factory production lines and transport robots in distribution warehouses already contribute to work efficiency and automation [2][3]; however, challenges such as working alongside humans remain unresolved [4]. Although artificial systems play an active role in limited spaces and tasks, several challenges must be overcome before they can be integrated into human society. While integrating intelligent robots into human society is expected to bring many benefits, the social and ethical issues that will emerge must also be considered. Indeed, it has been pointed out that socially assistive robots that interact with people raise many ethical issues [5]. More generally, the problems of people losing their jobs to automation and of who is responsible when a robot makes a mistake have not been solved. In addition, the data used to train artificial intelligence may contain private information, which could lead to more serious privacy violations in the future. The most worrying scenario is that artificial intelligence learns from incorrect data and, based on faulty inferences, makes decisions that are unacceptable to humans, thereby harming human society. The potential ethical, privacy, and security issues associated with replacing everything with robots or artificial intelligence cannot be avoided. 
Therefore, it is important that we firmly promote the development of laws such as the “Robot Law” [6] at a time when robots have not yet penetrated society. It is desirable to build a society in which robots coexist with humans by drawing a line between humans and robots (or artificial intelligence).

However, robots have not yet blended into human society. What elements are important for robots to permeate society? Among the elements that make up a robot, “adaptability”, i.e., the ability to perform a wide range of tasks in complex and unknown environments, is an important and highly anticipated capability. Some researchers have tried to obtain this adaptability by fusing AI and robotics, which have been studied independently [7]. This research motivation has been supported worldwide, and competitions such as the RoboCup Challenge [8] and the DARPA Challenge [9][10] have been held. Thanks to advances in computing, artificial systems have acquired a certain degree of adaptability [11], but only to a limited extent. One reason is that, as AI moves to more natural environments, it is difficult to capture a formal, symbolic problem from unreliable sensor data [7]. Another is that, although artificial intelligence has made remarkable progress in areas such as natural language processing and image recognition, where huge datasets are available for learning, it is difficult in the real world, where tasks depend on physical or chemical characteristics, to acquire datasets large enough to cover all situations. Moreover, wear and deterioration of the sensors and actuators installed on a robot change the amount of information it can obtain and its mobility, and perceiving and relearning these changes in real time while working is impractical. Therefore, for the further development of artificial intelligence, it is important to let it experience the real world and continue to collect data. In other words, further development of artificial intelligence requires a deeper connection between the robotic body and AI. 
In addition, although the computational power of computers has increased dramatically with the development of integrated circuits, further improvements are difficult to expect because the end of Moore’s law, which predicts the number of transistors implemented in an integrated circuit [12], is in sight. Furthermore, the scale of the microcomputers that can be installed in a robot moving around in an unknown environment is limited, and global communication is not always possible.

As an alternative, methods have been considered to reproduce the body structure and intelligence of living things through engineering [13] and to incorporate biological materials into artificial systems [14]. In addition, research has been proposed that investigates the brain functions of organisms using robots as analysis tools [15]. Of course, an engineered imitation of an organism cannot perfectly reproduce all the flexibility and agility of its sensors and actuators. Systems have been proposed that incorporate animal-like gait patterns [16] or localize an odor source in an outdoor environment [17], but because these are only partial imitations, they have not yet achieved performance comparable to that of organisms. To address this problem, cyborg research [18], which uses actual insects as robots, is gaining attention. Current cyborg research focuses on using insects as moving vehicles, with an operator controlling the insect’s direction of movement. Insects are equipped with many sensory organs that artificial sensors cannot imitate, but current insect cyborgs do not take advantage of these superior sensory organs. In addition, insects age and vary from one individual to another, making uniform performance difficult to achieve. Nevertheless, more than one million species of insects, which have small nervous systems [19] yet behave adaptively, have been identified worldwide, and the number of undiscovered insect species is thought to be up to several dozen times larger [20]. This suggests that insects have ways of adapting to diverse environments. In addition, an insect does not carry out all operations in its brain but uses its body as part of its computing machinery, thereby reducing the load on the brain [21][22]. This is called embodied intelligence. 
In other words, an organism, including an insect, strikes a very good balance between the body (hardware) and the brain (intelligence or software), and is a good model for the future relationship between robots and AI. An insect can accomplish a variety of tasks despite its small brain by making good use of embodied intelligence. For example, insects have a variety of locomotion strategies, such as walking, flying, and swimming, which are essential for survival [23][24][25]; navigation strategies that skillfully use multiple senses [26]; and swarming behavior that creates an insect society [27]. Insect brain sizes cannot be compared directly because insect species vary in body size. However, most insect brains are extremely small, only a few millimeters in size, despite their multifunctionality. Considering that insects accomplish these tasks in real time, their processing power is very high, and there is likely still much to be learned from them for designing economical and efficient autonomous robots.

2. Overview of the Biological Experimental Setup Using Engineering Tools

Insects receive various stimuli from the external world, process them appropriately, and convert them into behavior, which enables them to adapt. This behavior is generated through information acquisition by superior sensory organs and information processing by the microbrain [28], which is only a few millimeters in size. Therefore, various experiments have been conducted in the fields of neuroethology, animal behavior, physiology, genetics, and molecular biology to investigate sensory organs and the cranial nervous system.

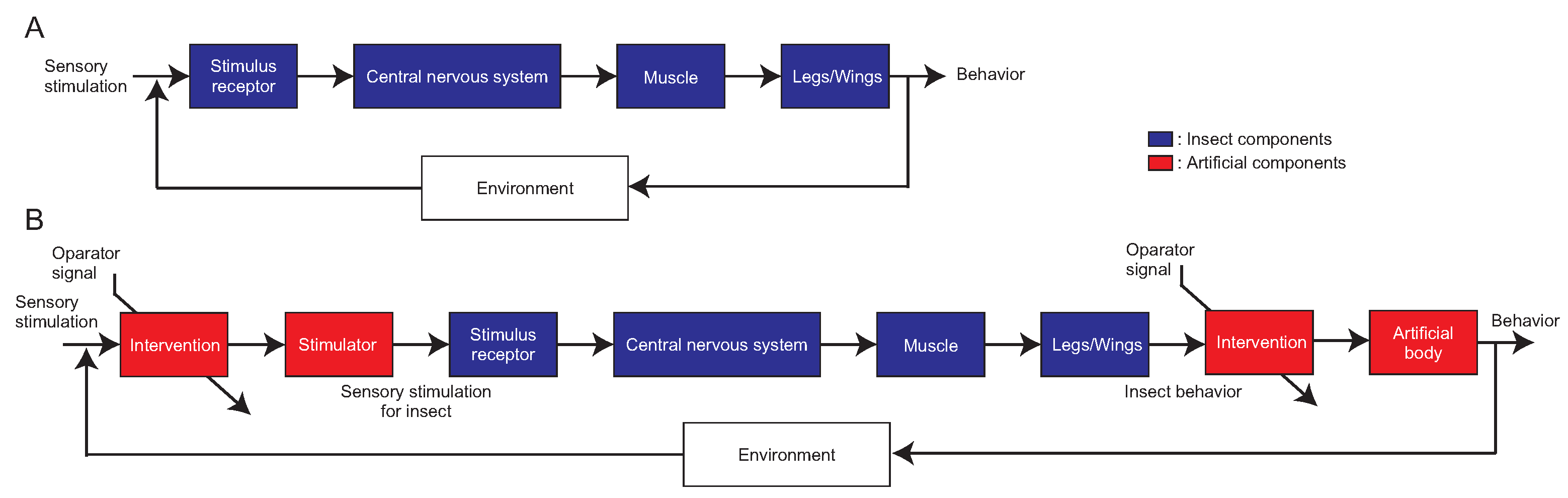

As shown in Figure 1A, when an insect detects a key stimulus among sensory stimuli, a signal is transmitted to the central nervous system and converted into an appropriate motor command. This command controls the muscles, and the result emerges as behavior. The important point in Figure 1 is that insects constantly receive new sensory stimuli from the surrounding environment and generate different behaviors each time. Adaptive behaviors are unlikely to be elicited by the insect alone and seem to emerge through interactions with the environment [29]. In other words, adaptive behaviors are difficult to generate in open-loop experiments that are disconnected from the natural environment, and the research must be advanced from other angles for a true understanding of behavior. However, in free-walking or free-flight experiments, it is impossible to know when and to what extent insects receive sensory stimuli. To solve this problem, the insect–machine hybrid system [18][30] and the virtual reality system for insects [31] have recently appeared, incorporating state-of-the-art engineering technology into biological experiments. These systems allow experiments to be conducted while maintaining the relationship between the insect and its environment, so that adaptive behavior can be measured. In addition, by intervening in the sensory input to the insect and in the output of the robot after the insect has acted, as shown in Figure 1B, it is possible to highlight the conditions under which adaptive behavior occurs.

Figure 1. A block diagram from sensory stimuli to behavioral output. (A) Block diagram of an insect. The central nervous system generates motor commands. (B) Block diagram of intervention system for an insect. Intervention methods vary depending on the type of experimental setup, such as cyborg or VR for insects.
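The closed loop of Figure 1B can be illustrated with a toy simulation. The following is a minimal sketch under stated assumptions, not the experimental system described in the cited studies: a hypothetical point agent steers toward a stimulus source, and two invented parameters, `sensory_gain` and `motor_gain`, stand in for interventions on the sensory input and on the motor output. All names and numerical values are assumptions chosen for illustration.

```python
import math

def run_closed_loop(sensory_gain=1.0, motor_gain=1.0, steps=200, dt=0.1):
    """Toy 2-D agent steering toward a source at the origin.

    sensory_gain scales what the agent senses (input intervention);
    motor_gain scales the turn it actually executes (output intervention).
    Both are hypothetical knobs, not parameters from the source.
    Returns the final distance to the source.
    """
    x, y, heading = 5.0, 0.0, math.pi / 2  # start 5 units away, facing +y
    speed, turn_rate = 0.5, 2.0
    for _ in range(steps):
        # Sensory stimulus: bearing error to the source, wrapped to [-pi, pi]
        bearing = math.atan2(-y, -x)
        error = math.atan2(math.sin(bearing - heading),
                           math.cos(bearing - heading))
        sensed = sensory_gain * error         # intervention on the input
        command = turn_rate * sensed          # stand-in for the nervous system
        heading += motor_gain * command * dt  # intervention on the output
        # The movement changes the next stimulus, which closes the loop
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        if math.hypot(x, y) < 0.2:            # source reached
            break
    return math.hypot(x, y)
```

In this sketch, the default parameters let the agent reach the source, `sensory_gain=0.0` removes the feedback so the agent never turns toward it, and `motor_gain=-1.0` inverts the turn and drives the agent away. Comparing such conditions is the kind of manipulation that the intervention blocks in Figure 1B make possible.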

A necessary element of an experimental system that incorporates engineering technology into biological experiments is an interface between the organism and the artificial system, such as electrophysiology, behavior measurement, or electrical stimulation. Once the interface is established, robots can be manipulated using signals from insect physiological responses or behavior, and insects can be controlled by electrical stimulation. This research deals with the control of robots by insects. Previous studies have attempted to control robots using the insect itself or parts of the insect. By taking the part or element of the insect to be investigated and using it to control a robot, the functional contribution of that part in the real world can be clarified. To investigate the characteristics of sensory organs, the sensory organs alone can be connected to the robot; to investigate brain functions, the robot can be operated by electrical signals from the brain. For example, behavioral experiments have been reported that used insect antennae as sensors for a robot [32][33]. Another study verified the validity of an antennal lobe model by using antennae as robot sensors, reading the insect’s electroantennogram, and controlling the robot through a neuron model representing the antennal lobe in the brain [34]. Another study controlled a robot with the insect brain to investigate how visual information is processed. Many insects exhibit a variety of visually induced behaviors, and motion-sensitive visual interneurons are a type of neuron responsible for these behaviors [35][36]. N. Ejaz et al. found that H1 neurons, which respond to horizontal optic flow, have adaptive gain control for dynamic image motion [37]. 
The research team also showed that robots can be controlled to perform collision avoidance tasks using techniques that measure the activity of H1 neurons [38]. In addition to studies that control robots based on physiological responses, other studies have investigated adaptive functions by changing physical characteristics. A well-known example is a study that physically changed the leg length of freely moving ants [39]. Changing leg length forces ants to change their stride length, which is expected to change the frequency of their gait. This change in gait was found to cause ants to stray when searching for their nests. This indicates that ants rely on an internal pedometer to navigate their desert habitat.
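The reasoning behind the leg-length manipulation can be made concrete with a little arithmetic. The sketch below assumes a pure stride-counting odometer and assumes that leg length is changed between the outbound and homebound trips. This is a simplification: the title of reference [39] describes a stride integrator that also accounts for stride length and walking speed. The function name and all numerical values are hypothetical.

```python
def homing_distance(outbound_m, stride_out_m, stride_home_m):
    """Distance walked home by an ant that replays its outbound stride count.

    Assumes a pure stride counter: the ant stores how many strides it took
    on the way out and walks the same number on the way back.
    """
    strides_counted = outbound_m / stride_out_m  # strides stored on the way out
    return strides_counted * stride_home_m       # same count, new stride length

# Hypothetical values: 10 m outbound trip, 10 mm normal stride
normal = homing_distance(10.0, 0.010, 0.010)    # legs unchanged
lengthened = homing_distance(10.0, 0.010, 0.013)  # longer legs, longer stride
shortened = homing_distance(10.0, 0.010, 0.007)   # shorter legs, shorter stride
```

Under these assumptions, an ant with lengthened legs walks farther than the true distance before it starts searching for the nest, and an ant with shortened legs stops short, which is the kind of straying that a pedometer-like mechanism predicts.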

Most of the studies described above are invasive experiments. In the case of non-model organisms, the number of organisms that can be experimented on is limited unless a maintenance strain has been established in a laboratory. For that reason, it is ideal to be able to conduct experiments under various conditions in an intact state. To solve this problem, an insect on-board system and VR for insects can be used to measure behavioral changes when various sensory and motor operations are applied under intact conditions. The obstacle avoidance behavior of a cockroach [40], sound-source localization behavior of a cricket [41], and odor-source localization behavior of a silk moth [42][43] have been investigated using the insect on-board system. Insects that have boarded the robot, even though they are from different species, have successfully controlled the robot as their own body and performed the given task appropriately. Recently, a study has been conducted to investigate the phototaxis of a free-walking pill bug by developing a robot equipped with a light stimulator that tracks the pill bug [44]. The advantages of this system are that it can measure the relationship between behavior and sensory stimuli in a more natural walking state, and it can be used for organisms such as centipedes, for which tethered measurement systems are difficult to use.
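One way to picture an insect on-board system is as a mapping from the insect's measured locomotion to robot wheel commands. The sketch below is an illustration under assumptions, not the implementation used in [40][41][42][43]: it supposes that the insect's forward and angular velocities have already been measured, maps them onto a differential-drive robot with standard kinematics, and exposes a hypothetical `turn_bias` term as a motor-side intervention. The function name and the wheel-base value are invented for the example.

```python
def insect_to_wheels(v_forward, omega, wheel_base=0.1, turn_bias=0.0):
    """Convert insect velocity (m/s, rad/s) into left/right wheel speeds (m/s).

    Uses standard differential-drive kinematics. turn_bias (rad/s) is a
    hypothetical intervention added to the insect's own turning command.
    """
    omega_cmd = omega + turn_bias
    v_left = v_forward - omega_cmd * wheel_base / 2.0
    v_right = v_forward + omega_cmd * wheel_base / 2.0
    return v_left, v_right

# Straight walking: both wheels turn at the insect's forward speed
straight = insect_to_wheels(0.02, 0.0)
# Same walking, but the experimenter adds a counterclockwise turning bias
biased = insect_to_wheels(0.02, 0.0, turn_bias=0.5)
```

With `turn_bias=0.0` the robot simply reproduces the insect's movement. A nonzero bias makes the robot turn even when the insect walks straight, so the experimenter can observe whether the insect compensates for the disturbance.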

In an experiment on VR for insects, Kaushik et al. found that dipterans use airflow and odor information for visual navigation [45]. Ando et al. also reported an auditory VR system that can be used to quantitatively analyze the sound-source localization behavior of crickets [46]. Although their subjects were not insects, Radvansky and Dombeck successfully used a VR system to measure olfactory navigation behavior in mammals (mice) [47]. All motile organisms use spatially distributed chemical features in their surroundings to guide behavior, but the principles behind this have been difficult to investigate because of the technical challenges of controlling chemical concentrations in space and time during behavioral experiments. Their research demonstrated that introducing VR into olfactory navigation experiments can solve this problem. As described above, much remains unknown about the olfactory behavior of organisms because the spatial and temporal nature of odor as a sensory stimulus is complex and difficult to quantify, and the same is true for the olfactory behavior of insects. Therefore, the authors have utilized an insect on-board system and VR for insects to investigate the female localization behavior of an adult male silkmoth.

References

- IFR Presents World Robotics 2021 Reports. Available online: https://ifr.org/ifr-press-releases/news/robot-sales-rise-again (accessed on 16 December 2023).

- Ermolov, I. Industrial Robotics Review. In Robotics: Industry 4.0 Issues & New Intelligent Control Paradigms. Studies in Systems, Decision and Control; Kravets, A., Ed.; Springer Nature: Cham, Switzerland, 2020; Volume 272.

- Liu, Z.; Quan, L.; Wenjun, X.; Lihui, W.; Zude, Z. Robot learning towards smart robotic manufacturing: A review. Robot. Comput.-Integr. Manuf. 2022, 77, 102360.

- Robla-Gómez, S.; Becerra, V.M.; Llata, J.R.; Gonzalez-Sarabia, E.; Torre-Ferrero, C.; Perez-Oria, J. Working together: A review on safe human–robot collaboration in industrial environments. IEEE Access 2017, 5, 26754–26773.

- Boada, J.P.; Maestre, B.R.; Genís, C.T. The ethical issues of social assistive robotics: A critical literature review. Technol. Soc. 2021, 67, 101726.

- Calo, M.R.; Froomkin, M.; Kerr, I.R. Robot Law; Edward Elgar Publishing: Northampton, MA, USA, 2016.

- Rajan, K.; Saffiotti, A. Towards a science of integrated AI and Robotics. Artif. Intell. 2017, 247, 1–9.

- Kitano, H.; Tambe, M.; Stone, P.; Veloso, M.; Coradeschi, S.; Osawa, E.; Hitoshi, M.; Itsuki, N.; Asada, M. The RoboCup synthetic agent challenge 97. In RoboCup-97: Robot Soccer World Cup I; Springer: Berlin/Heidelberg, Germany, 1998; Volume 1, pp. 62–73.

- Buehler, M.; Iagnemma, K.; Singh, S. The DARPA Urban Challenge: Autonomous Vehicles in City Traffic; Springer: Berlin/Heidelberg, Germany, 2009; Volume 56.

- Krotkov, E.; Hackett, D.; Jackel, L.; Perschbacher, M.; Pippine, J.; Strauss, J.; Pratt, G.; Orlowski, C. The DARPA robotics challenge finals: Results and perspectives. In The DARPA Robotics Challenge Finals: Humanoid Robots to the Rescue; Springer Nature: Cham, Switzerland, 2018; pp. 1–26.

- Rubio, F.; Valero, F.; Llopis-Albert, C. A review of mobile robots: Concepts, methods, theoretical framework, and applications. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419839596.

- Theis, T.N.; Wong, H.S.P. The end of Moore’s law: A new beginning for information technology. Comput. Sci. Eng. 2017, 19, 41–50.

- Ahmed, F.; Waqas, M.; Jawed, B.; Soomro, A.M.; Kumar, S.; Hina, A.; Umair, K.; Kim, K.H.; Choi, K.H. Decade of bio-inspired soft robots: A review. Smart Mater. Struct. 2022, 31, 073002.

- Webster-Wood, V.A.; Akkus, O.; Gurkan, U.A.; Chiel, H.J.; Quinn, R.D. Organismal engineering: Toward a robotic taxonomic key for devices using organic materials. Sci. Robot. 2017, 2, eaap9281.

- Prescott, T.J.; Wilson, S.P. Understanding brain functional architecture through robotics. Sci. Robot. 2023, 8, eadg6014.

- Owaki, D.; Ishiguro, A. A quadruped robot exhibiting spontaneous gait transitions from walking to trotting to galloping. Sci. Rep. 2017, 7, 277.

- Shigaki, S.; Yamada, M.; Kurabayashi, D.; Hosoda, K. Robust moth-inspired algorithm for odor source localization using multimodal information. Sensors 2023, 23, 1475.

- Siljak, H.; Nardelli, P.H.; Moioli, R.C. Cyborg Insects: Bug or a Feature? IEEE Access 2022, 10, 49398–49411.

- Alivisatos, A.P.; Chun, M.; Church, G.M.; Greenspan, R.J.; Roukes, M.L.; Yuste, R. The brain activity map project and the challenge of functional connectomics. Neuron 2012, 74, 970–974.

- Stork, N.E. How many species of insects and other terrestrial arthropods are there on Earth? Annu. Rev. Entomol. 2018, 63, 31–45.

- Pfeifer, R.; Scheier, C. Understanding Intelligence; MIT Press: Cambridge, MA, USA, 2001.

- Wystrach, A. Movements, embodiment and the emergence of decisions. Insights from insect navigation. Biochem. Biophys. Res. Commun. 2021, 564, 70–77.

- David, I.; Ayali, A. From Motor-Output to Connectivity: An In-Depth Study of in vitro Rhythmic Patterns in the Cockroach Periplaneta americana. Front. Insect Sci. 2021, 1, 655933.

- Roth, E.; Hall, R.W.; Daniel, T.L.; Sponberg, S. Integration of parallel mechanosensory and visual pathways resolved through sensory conflict. Proc. Natl. Acad. Sci. USA 2016, 113, 12832–12837.

- Hughes, G.M. The co-ordination of insect movements: III. Swimming in Dytiscus, Hydrophilus and a dragonfly nymph. J. Exp. Biol. 1958, 35, 567–583.

- Heinze, S. Unraveling the neural basis of insect navigation. Curr. Opin. Insect Sci. 2017, 24, 58–67.

- Weitekamp, C.A.; Libbrecht, R.; Keller, L. Genetics and evolution of social behavior in insects. Annu. Rev. Genet. 2017, 51, 219–239.

- Mizunami, M.; Yokohari, F.; Takahata, M. Exploration into the adaptive design of the arthropod “microbrain”. Zool. Sci. 1999, 16, 703–709.

- Asama, H. Mobiligence: Emergence of adaptive motor function through interaction among the body, brain and environment. Environment 2007, 1, 3.

- Ando, N.; Kanzaki, R. Insect-machine hybrid robot. Curr. Opin. Insect Sci. 2020, 42, 61–69.

- Naik, H.; Bastien, R.; Navab, N.; Couzin, I.D. Animals in Virtual Environments. IEEE Trans. Vis. Comput. Graph. 2020, 26, 2073–2083.

- Kuwana, Y.; Nagasawa, S.; Shimoyama, I.; Kanzaki, R. Synthesis of the pheromone-oriented behaviour of silkworm moths by a mobile robot with moth antennae as pheromone sensors. Biosens. Bioelectron. 1999, 14, 195–202.

- Yamada, N.; Ohashi, H.; Umedachi, T.; Shimizu, M.; Hosoda, K.; Shigaki, S. Dynamic Model Identification for Insect Electroantennogram with Printed Electrode. Sens. Mater. 2021, 33, 4173–4184.

- Martinez, D.; Chaffiol, A.; Voges, N.; Gu, Y.; Anton, S.; Rospars, J.P.; Lucas, P. Multiphasic on/off pheromone signalling in moths as neural correlates of a search strategy. PLoS ONE 2013, 8, e61220.

- Borst, A. Fly visual course control: Behaviour, algorithms and circuits. Nat. Rev. Neurosci. 2014, 15, 590–599.

- Egelhaaf, M.; Kern, R.; Lindemann, J.P. Motion as a source of environmental information: A fresh view on biological motion computation by insect brains. Front. Neural Circuits 2014, 8, 127.

- Ejaz, N.; Krapp, H.G.; Tanaka, R.J. Closed-loop response properties of a visual interneuron involved in fly optomotor control. Front. Neural Circuits 2013, 7, 50.

- Huang, J.V.; Wei, Y.; Krapp, H.G. A biohybrid fly-robot interface system that performs active collision avoidance. Bioinspiration Biomim. 2019, 14, 065001.

- Wittlinger, M.; Wehner, R.; Wolf, H. The desert ant odometer: A stride integrator that accounts for stride length and walking speed. J. Exp. Biol. 2007, 210, 198–207.

- Hertz, G. Cockroach Controlled Robot, Version 1. New York Times, 7 June 2005.

- Wessnitzer, J.; Asthenidis, A.; Petrou, G.; Webb, B. A cricket controlled robot orienting towards a sound source. In Proceedings of the Conference towards Autonomous Robotic Systems, Sheffield, UK, 31 August–2 September 2011; pp. 1–12.

- Emoto, S.; Ando, N.; Takahashi, H.; Kanzaki, R. Insect-controlled robot–evaluation of adaptation ability–. J. Robot. Mechatron. 2007, 19, 436–443.

- Ando, N.; Emoto, S.; Kanzaki, R. Odour-tracking capability of a silkmoth driving a mobile robot with turning bias and time delay. Bioinspir. Biomim. 2013, 8, 016008.

- Shirai, K.; Shimamura, K.; Koubara, A.; Shigaki, S.; Fujisawa, R. Development of a behavioral trajectory measurement system (Bucket-ANTAM) for organisms moving in a two-dimensional plane. Artif. Life Robot. 2022, 27, 698–705.

- Kaushik, P.K.; Renz, M.; Olsson, S.B. Characterizing long-range search behavior in Diptera using complex 3D virtual environments. Proc. Natl. Acad. Sci. USA 2020, 117, 12201–12207.

- Ando, N.; Shidara, H.; Hommaru, N.; Ogawa, H. Auditory Virtual Reality for Insect Phonotaxis. J. Robot. Mechatron. 2021, 33, 494–504.

- Radvansky, B.A.; Dombeck, D.A. An olfactory virtual reality system for mice. Nat. Commun. 2018, 9, 839.

Subjects: Entomology

Update Date: 17 Feb 2024
