Zhu, Y.; Salowe, R.; Chow, C.; Li, S.; Bastani, O.; O’Brien, J.M. Advancing Glaucoma Care. Encyclopedia. Available online: https://encyclopedia.pub/entry/54495 (accessed on 07 February 2026).

Zhu, Y., Salowe, R., Chow, C., Li, S., Bastani, O., & O’Brien, J.M. (2024, January 29). Advancing Glaucoma Care. In Encyclopedia. https://encyclopedia.pub/entry/54495

Glaucoma, the leading cause of irreversible blindness worldwide, comprises a group of progressive optic neuropathies requiring early detection and lifelong treatment to preserve vision. Artificial intelligence (AI) technologies are now demonstrating transformative potential across the spectrum of clinical glaucoma care.

artificial intelligence

glaucoma

computer-aided diagnosis

1. Introduction

Glaucoma, often referred to as the “silent thief of sight”, is the leading cause of irreversible blindness worldwide [1]. Its insidious nature, characterized by a gradual loss of peripheral vision often unappreciated by the patient, underscores the critical importance of early detection and continuous monitoring [2]. In the clinic, glaucoma is typically diagnosed and monitored using a multimodal approach, including tonometry to measure intraocular pressure (IOP), visual field tests, optical coherence tomography (OCT), and fundoscopic examinations [3]. These methods, while foundational, have their limitations: tonometry can be influenced by corneal thickness, visual field tests depend on patient responsiveness, and OCT and fundoscopic exams require expert interpretation, often with some degree of subjectivity [4]. Given these constraints, as the emphasis on early detection and intervention grows, there is an unmet need for more consistent, objective, and precise monitoring techniques [5]. Artificial intelligence (AI) has emerged as a solution to harness this extensive data, aiming to offer automated, consistent, and predictive insights into all areas of glaucoma care [6].

AI’s ability to analyze vast amounts of data, detect intricate patterns, and predict disease trajectories offers a paradigm shift in how researchers approach glaucoma diagnosis and monitoring [7]. Historically, the integration of AI into glaucoma care reflects the continuous evolution of medical technology. The late 20th and early 21st centuries saw a surge in ophthalmic imaging techniques, notably OCT, providing high-resolution views of the optic nerve and retinal layers [8]. With this influx of data came increased challenges in interpretation. It was during this phase, particularly in the 2010s, that AI began making its mark. Leveraging machine learning algorithms, early applications of AI sought to automate the analysis of visual fields and OCT scans, aiming to identify subtle patterns indicative of glaucoma progression [9]. Over the following years, as datasets grew and algorithms became more sophisticated, AI’s role expanded from simple analysis to prediction, including forecasting disease trajectories and potential treatment outcomes.

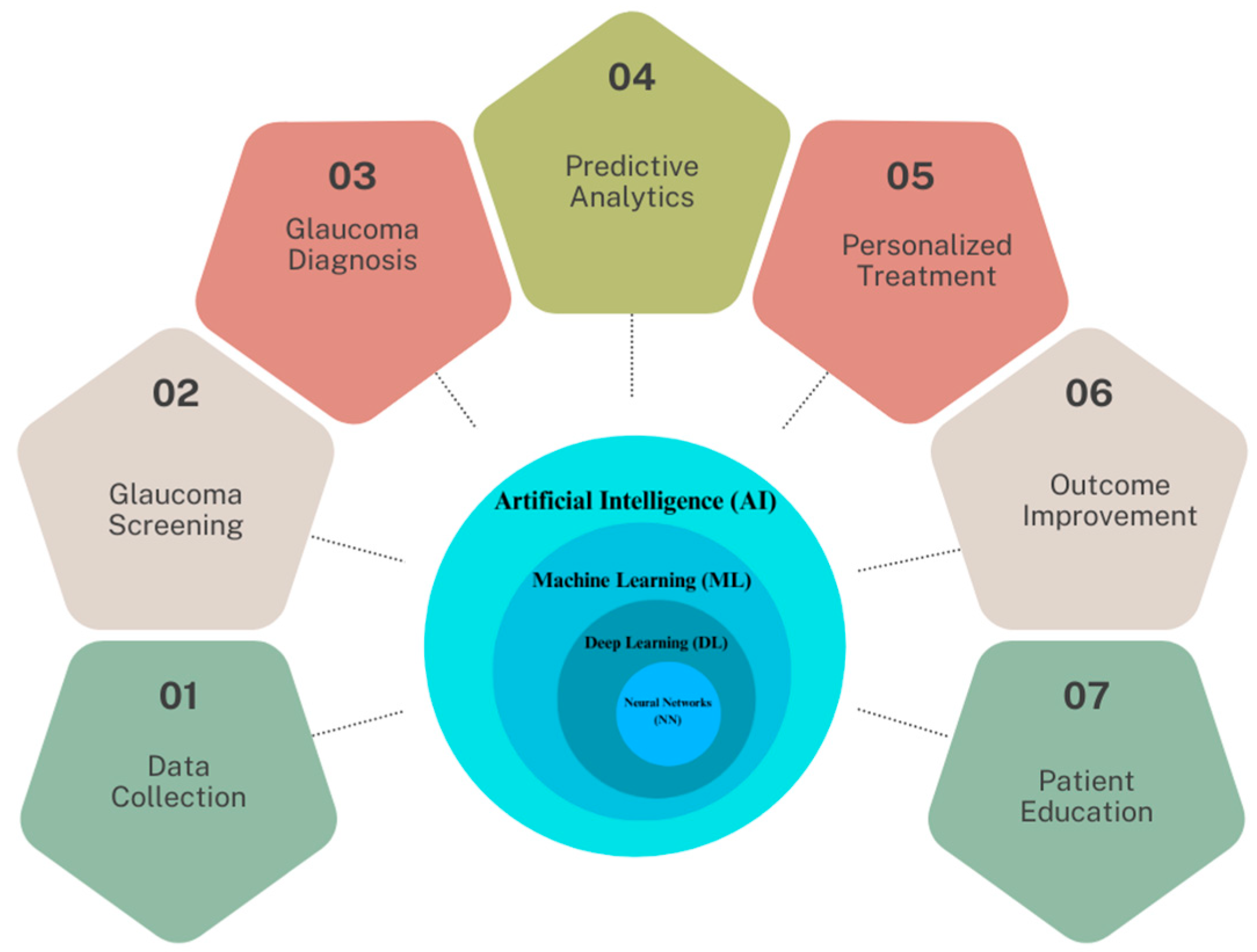

In light of these developments, this research focuses on the multifaceted applications and implications of AI in glaucoma care, which are illustrated in Figure 1. At the core of this schematic is the AI continuum, representing various AI methodologies such as Machine Learning (ML), Neural Networks (NN), and Deep Learning (DL), which form the foundation for advanced data analysis in glaucoma research.

Figure 1. The spectrum of AI applications in glaucoma healthcare.

2. From Traditional to AI-Enhanced Data Collection

Data collection for glaucoma diagnosis has traditionally revolved around a combination of clinical assessments and specialized imaging techniques. Key metrics such as IOP are gathered using tonometry, while the structure of the optic nerve head and retinal nerve fiber layer (RNFL) are visualized through imaging modalities such as OCT and fundus photography. Additionally, visual field tests map out the patient’s field of vision, identifying any deficits or abnormalities characteristic of glaucoma. Recognizing the limitations of traditional methods, as the volume of diagnostic data grew, so did the need for more efficient and accurate data processing.

The integration of AI is beginning to transform the data collection process in glaucoma care, enhancing both efficiency and accuracy. For example, AI-powered imaging devices are now being developed to auto-calibrate based on patient specifics, which could potentially improve image quality [10]. Additionally, emerging AI algorithms in these devices process data in real time, providing immediate insights and the ability to predict trends based on historical data [11]. The Retinal Fundus Glaucoma Challenge (REFUGE) represents a pivotal step in AI-driven ophthalmology [12]. Held in conjunction with MICCAI 2018, it addressed the constraints of conventional glaucoma assessment using color fundus photography. REFUGE introduced a groundbreaking dataset of 1200 fundus images with detailed ground truth segmentations and clinical labels, the largest of its kind. This initiative was crucial for standardizing AI model evaluations in glaucoma diagnosis, allowing for consistent and fair comparisons. Notably, some AI models in the challenge surpassed human experts in glaucoma classification, demonstrating AI’s potential in enhancing diagnostic precision through an advanced, large-scale dataset [12]. Although this marks a significant advancement, the field is still in the early stages of transitioning into the AI era, with traditional methods and AI-based approaches coexisting.

This evolution is further augmented by the advent of wearable technology and smart devices, which make continuous monitoring and real-time data collection feasible [13]. Among the most useful wearable technologies for glaucoma detection are contact lenses. The smart soft contact lenses (SSCLs) introduced by Zhang et al. [14] allow continuous 24 h monitoring of IOP through an embedded wireless sensor built upon commercial soft contact lenses. In vivo testing in a dog model demonstrated the ability to wirelessly track circadian IOP fluctuations with a sensitivity of 662 ppm/mmHg (R² = 0.88) using a portable vector network analyzer coupled to a contact-lens reader coil. Measurements in human subjects exhibited an even higher sensitivity of 1121 ppm/mmHg (R² = 0.91), attributed to the superior fit enabled by the soft hydrogel lens base. This sensitivity is more than twice that of previous wearable sensors. The seamless interface of the SSCLs with the cornea was confirmed through anterior segment OCT imaging in human eyes. The wireless, 24 h IOP data obtained by these soft hydrogel-based sensors can aid in glaucoma detection and management through continuous monitoring of ocular hypertensive events and linking IOP trends to disease progression. Thus, from ensuring quality data collection to real-time processing, AI is beginning to embed itself deeply into the data collection process for glaucoma diagnosis and management, making these more robust and insightful.
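To make the ppm/mmHg sensitivity figures concrete, the following is a minimal sketch of how a relative readout shift could be converted into an IOP change. It assumes the reader reports a resonant frequency that shifts linearly with pressure, which the text above does not state explicitly; the function names and the example frequencies are hypothetical, and the sign convention (a positive shift meaning a pressure increase) is also an assumption.

```python
def relative_shift_ppm(f_baseline_hz: float, f_measured_hz: float) -> float:
    """Relative change of the readout, in parts per million of the baseline."""
    return (f_measured_hz - f_baseline_hz) / f_baseline_hz * 1e6


def iop_change_mmhg(shift_ppm: float, sensitivity_ppm_per_mmhg: float) -> float:
    """IOP change implied by a relative shift, assuming a linear sensor response."""
    return shift_ppm / sensitivity_ppm_per_mmhg


# Hypothetical readings: a 100 MHz baseline and a slightly shifted measurement.
shift = relative_shift_ppm(100e6, 100.1121e6)

# The same shift implies a larger pressure change on a less sensitive sensor.
change_human_fit = iop_change_mmhg(shift, 1121.0)  # sensitivity reported in humans
change_dog_model = iop_change_mmhg(shift, 662.0)   # sensitivity reported in the dog model
```

A higher sensitivity means a given pressure change produces a larger, more easily resolved shift in the readout.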

Recent studies in AI-assisted glaucoma diagnosis also underscore the importance of training data diversity. For example, the REFUGE challenge, a significant initiative in AI-driven ophthalmology, utilized a dataset of 1200 fundus images, aiming for broad demographic representation. Even so, as highlighted in the literature, there is a recognized need for more inclusive data encompassing a wider range of ethnicities and age groups. This inclusivity is critical, given the variability in glaucoma presentation across different populations. Studies emphasize the potential risk of biased AI models due to non-diverse training datasets, which may not effectively represent glaucoma manifestations in underrepresented groups. Thus, the current shift towards more ethnically and demographically inclusive datasets is a vital step in developing universally applicable and unbiased AI models for glaucoma detection.

3. AI’s Role in Glaucoma Screening

3.1. Early Detection and Challenges

Early detection and treatment of glaucoma are essential, as vision loss from the disease is currently irreversible. However, the disease is difficult to detect in the early stages, as it is asymptomatic and typically begins with peripheral rather than central vision loss. As a result, almost 50% of glaucoma patients are undiagnosed, delaying treatment until irreversible vision loss has already occurred [15]. Screening for glaucoma is therefore an important mechanism to detect signs of disease in undiagnosed individuals, allowing intervention while there is still vision left to preserve [16]. Currently, the impact and reach of glaucoma screenings are limited by a reliance on individual examinations by glaucoma specialists, ophthalmologists, or optometrists. Screenings can be lengthy, labor-intensive, and challenging to implement in practice, ultimately limiting the number of screened individuals. This is especially true in developing countries, where there is a high burden of glaucoma and a limited number of trained eye professionals [17]. As the prevalence of glaucoma continues to rise in an aging population, there is a growing mismatch between the need for glaucoma screenings and the supply of available resources [18].

3.2. AI’s Potential to Transform Glaucoma Screening

In response to these challenges, AI-enabled screening for glaucoma could help fill this unmet need, increasing access to care and lessening the burden on healthcare systems [19]. A system that accurately flags possible glaucoma on images in real time could allow for large-scale screenings to be conducted without the presence of a vision specialist. Patients with signs of glaucoma could then be referred to an ophthalmologist or optometrist for a comprehensive examination, diagnosis, and treatment. Ideally, glaucoma screenings utilizing AI would be low-cost, accurate, and easily translated to low-resource settings. These screenings could then take place in remote rural areas, underserved urban areas, or countries with a scarcity of ophthalmic specialists providing frontline eye care.

Fundus photography, a low-cost option that fits these criteria, has already been successfully incorporated into AI-enabled screening programs to detect diabetic retinopathy [20]. Fundus images provide visualization of anatomic changes to the optic nerve head, such as optic disc cupping and thinning of the neuroretinal rim. These structural abnormalities often precede loss of visual fields [21]. Among its benefits for screening, fundus photography is low-cost, non-invasive, quick, and portable, allowing application to low-resource settings [22]. Starting around 2018, many studies have developed convolutional neural networks (CNNs) trained on thousands of labeled fundus photos to distinguish glaucomatous from healthy eyes [23]. A range of CNN architectures have been applied including ResNet, InceptionNet, and VGGNet, often utilizing transfer learning and reporting high performance [24].

Recent advancements in glaucoma detection have incorporated Vision Transformers like the data-efficient image transformer (DeiT), showing notable efficacy in analyzing fundus photography. These models utilize self-attention mechanisms, effectively capturing the global characteristics of fundus images and thereby enhancing classification accuracy. For instance, studies such as those by Wassel et al. [25] and Fan et al. [26] have demonstrated the competitive performance of Vision Transformer models, particularly in terms of generalizability across diverse datasets. Notably, the attention maps from DeiT models tend to concentrate on clinically relevant areas, like the neuroretinal rim, aligning with regions commonly assessed in manual image review. This alignment suggests that DeiT models can complement traditional diagnostic approaches by focusing on key areas used in glaucoma assessment. The emerging use of Vision Transformers, including DeiT, in glaucoma detection highlights their potential in contributing to the evolving landscape of AI applications in ophthalmology.
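The self-attention mechanism mentioned above can be illustrated with a minimal sketch. This is not the DeiT architecture: it omits the learned query, key, and value projections, multiple heads, and positional embeddings, and simply lets every patch vector attend to every other patch with scaled dot-product weights. The function names and toy patch vectors are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention without learned projections.

    tokens: list of equal-length float vectors, one per image patch.
    Returns (outputs, weights); weights[i][j] is how much patch i attends to patch j.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Similarity of this patch to every patch, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        # Each output is a weighted mix of all patch vectors (global context).
        mixed = [
            sum(row[j] * tokens[j][i] for j in range(len(tokens)))
            for i in range(dim)
        ]
        weights.append(row)
        outputs.append(mixed)
    return outputs, weights


# Three toy "patch embeddings"; real models use hundreds of patches and dimensions.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attention = self_attention(patches)
```

Because each row of the weight matrix spans all patches, a single layer can relate distant image regions, which is the "global characteristics" property described above. Attention maps of the kind discussed for DeiT are visualizations of such weights.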

3.3. AI Outperforming Human Experts and Challenges

Several studies have shown that deep learning models can achieve equal or better accuracy in differentiating normal from glaucomatous eyes when compared with expert glaucoma specialists [27][28][29][30]. Ting et al. [31] developed a deep learning model using 494,661 retinal images to detect diabetic retinopathy and achieved an AUC of 0.942 in detecting “referable” glaucoma. Similarly, Li et al. [24] created a deep learning algorithm using 48,116 fundus images to detect glaucomatous optic neuropathy. The model achieved an AUC of 0.986, with a sensitivity of 95.6% and specificity of 92.0%. The Pegasus system (version v1.0) [32], a cloud-based AI from Visulytix Ltd. (London, UK), evaluates fundus photos using specialized CNNs to extract and classify the optic nerve. Compared to medical professionals, it achieved an accuracy of 83.4% in identifying glaucomatous damage. Orbis International provides free access to an AI tool called Cybersight AI to eye care professionals in low- and middle-income countries [33]. This open access tool can detect diabetic retinopathy, glaucoma, and macular disease on fundus images. At clinics in Rwanda, screening with this device led to accurate referrals for diabetic retinopathy and high rates of patient satisfaction, though more research is needed on diagnostic accuracy for glaucoma [33].
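The metrics quoted in this section (AUC, sensitivity, specificity) can be computed from a model's scores and reference labels as sketched below. The data are made up for illustration and the function names are hypothetical. AUC is computed here as the fraction of (glaucoma, healthy) pairs in which the glaucomatous eye receives the higher score, which is equivalent to the area under the ROC curve.

```python
def sensitivity_specificity(labels, scores, threshold):
    """Sensitivity and specificity when scores >= threshold are called positive.

    labels: 1 for glaucoma, 0 for healthy. scores: model outputs, higher = more suspicious.
    """
    tp = fn = tn = fp = 0
    for label, score in zip(labels, scores):
        predicted_positive = score >= threshold
        if label == 1:
            if predicted_positive:
                tp += 1
            else:
                fn += 1
        else:
            if predicted_positive:
                fp += 1
            else:
                tn += 1
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison (ties count as half)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Made-up example: three glaucomatous and three healthy eyes.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
sens, spec = sensitivity_specificity(labels, scores, threshold=0.5)
area = auc(labels, scores)
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarizes performance across all thresholds, which is why studies often report both.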

Several challenges must be addressed in order to successfully integrate AI-enabled glaucoma screening into real-world settings. First, researchers must ensure that deep learning models maintain their accuracy when applied to images from different cameras with varying photographic quality. Studies show that these models currently underperform when images are captured on different cameras compared with those used in training datasets [34]. Second, more research is needed on how co-morbid pathologies can impact the performance of such algorithms. Anatomic variability and pathologic conditions can affect the appearance of the optic nerve head, so many training datasets eliminate images with ocular pathologies; however, real-world screenings will be filled with individuals with a variety of ocular conditions. Finally, more studies must integrate testing of deep learning models in the actual settings where they will be implemented and ensure generalizability to diverse racial and ethnic groups. Many models with high accuracies upon testing do not demonstrate similar accuracy in the real world [34].

The interpretability of AI models in clinical settings is a crucial aspect that warrants detailed discussion. AI models, particularly those based on deep learning, often function as ‘black boxes’, providing limited insight into how they derive their conclusions. This lack of transparency can be a significant barrier to the adoption of AI in clinical practice, where understanding the reasoning behind a diagnosis is fundamental for clinician trust and decision making.

Recent advancements in AI have seen the development of techniques aimed at unraveling these black boxes, thus enhancing the interpretability of AI systems. Methods such as Layer-wise Relevance Propagation (LRP) and Class Activation Mapping (CAM) are being explored to provide visual explanations of AI decisions. For instance, in glaucoma detection, these methods can highlight areas in fundus images or OCT scans that the AI model deems significant for its diagnosis. This not only aids clinicians in understanding AI decisions but also serves as a tool for validating the accuracy of the AI model. The integration of such interpretability frameworks into AI systems for glaucoma detection is a promising step towards their acceptance and effective utilization in clinical environments.
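As a rough illustration of Class Activation Mapping, the sketch below combines the feature maps of a final convolutional layer using the weights that connect each map to the target class. It assumes the original CAM setting, in which global average pooling is followed by a single linear classification layer. The feature maps and weights here are toy values, and the function names are hypothetical.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps for one class.

    feature_maps: K maps, each an H x W nested list of floats.
    class_weights: K weights linking each map to the target class (e.g., "glaucoma").
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * fmap[i][j]
    return cam


def normalize(cam):
    """Rescale a map to [0, 1] so it can be overlaid on the image as a heatmap."""
    low = min(min(row) for row in cam)
    high = max(max(row) for row in cam)
    span = high - low
    if span == 0:
        return [[0.0 for _ in row] for row in cam]
    return [[(value - low) / span for value in row] for row in cam]


# Toy example: two 2 x 2 feature maps and their class weights.
maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
heatmap = normalize(class_activation_map(maps, [0.5, 1.0]))
```

In practice the resulting low-resolution map is upsampled to the size of the fundus image or OCT scan, so a clinician can check whether the highlighted regions correspond to anatomically plausible areas such as the optic nerve head.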

4. AI’s Role in Glaucoma Diagnosis

Unlike screening, where the primary aim of AI is to flag potential glaucoma cases for further examination, AI in glaucoma diagnosis tackles a more nuanced challenge. Here, AI is tasked with confirming the presence of glaucoma in individuals who have been flagged during screening or who present with symptoms. This involves a detailed analysis of clinical data, requiring algorithms to be highly accurate and reliable in differentiating glaucoma from other conditions that may present similarly. Determining an official diagnosis of glaucoma is a more difficult application for AI than screening for suspected disease, and this is not yet established or accepted in many clinical practices. Despite these challenges, there has been exponential growth in research in AI applications for glaucoma diagnosis in the past decade. Most applications focus on OCT, visual fields, or hybrid models that combine structural and functional data.

4.1. Leveraging OCT for Glaucoma Diagnosis

OCT, which provides a three-dimensional view of the retina and optic nerve head, is the most widespread tool used to measure structural damage from glaucoma. Across studies applying AI to OCT, reported AUC scores range from 0.78 to 0.99, underscoring the effectiveness of these models in differentiating glaucomatous from normal eyes and in predicting RNFL thickness and glaucoma stage. In the clinic, structures of interest are automatically segmented by the machine’s software to generate relevant quantitative measures, such as RNFL thickness. Early studies in the 2000s applied machine learning classifiers to time-domain OCT (TD-OCT), showing comparable or better glaucoma detection accuracy than standard OCT parameters alone [35]. With the advent of spectral-domain OCT (SD-OCT) in the 2010s, newer parameters like RNFL thickness enabled sensitivity of 50–80% and specificity of 80–95% for glaucoma diagnosis when analyzed by classifiers [36]. Recently, swept-source OCT (SS-OCT) with scanning speeds of 100,000 A-scans/second has shown potential for earlier glaucoma detection, with algorithms applied to SS-OCT achieving an AUC of 0.95 [37].

Different types of OCT images have been used to develop deep learning algorithms for glaucoma diagnosis, including the OCT conventional report, 2D B scans, 3D volumetric scans, anterior segment OCTs, and OCT-angiography (OCT-A) images. Deep learning models trained with images extracted from the OCT single report can achieve high accuracy in detection of glaucoma [38][39][40][41]. Other models rely on raw OCT scans for model training, rather than previously defined features from automated segmentation software. The usage of raw scans can help to reduce the effects of segmentation error, which can be present in 19.9% to 46.3% of SD-OCT scans [42]. Mariottoni et al. [43] trained a deep learning algorithm to predict RNFL thickness from raw OCT B-scans. These segmentation-free predictions were highly correlated with the actual RNFL thickness (r = 0.983, p < 0.001), with a mean absolute error of 2 μm in images of good quality. Thompson et al. [44] also used OCT B-scans to develop a deep learning algorithm that discriminated glaucomatous from healthy eyes. The diagnostic performance of this algorithm was better than using conventional RNFL thickness (AUROC 0.96 vs. 0.87 for the global peripapillary RNFL thickness, p < 0.001). OCT volumetric scans of the optic nerve head can provide more comprehensive features and aid in glaucoma detection. Maetschke et al. [45] developed a 3D deep learning model using volumetric OCT scans of the optic nerve head, which achieved a higher AUROC compared to a classic machine learning method using segmentation-based features (AUROC 0.94 vs. 0.89, p < 0.05).
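Agreement between predicted and measured RNFL thickness, as reported above with a correlation coefficient and a mean absolute error, can be computed as in the following sketch. The thickness values are invented for illustration and are not taken from any cited study; the function names are hypothetical.

```python
import math


def mean_absolute_error(predicted, actual):
    """Average absolute difference, in the same units as the inputs (here micrometers)."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Invented RNFL thickness values in micrometers (segmentation-based vs. model-predicted).
measured = [95.0, 82.0, 70.0, 101.0, 64.0]
predicted = [93.0, 84.0, 71.0, 99.0, 66.0]
error_um = mean_absolute_error(predicted, measured)
correlation = pearson_r(predicted, measured)
```

The two metrics answer different questions: correlation captures whether predictions rise and fall with the measurements, while mean absolute error captures how far off they are in micrometers, so a model can score well on one and poorly on the other.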

AI-based image analysis of anterior segment OCTs and OCT-A has not yet been explored in depth but does hold potential [46]. Anterior segment OCTs, used to diagnose narrow angles or angle closures, have difficulties related to subjective interpretation. Fu et al. [47] developed a deep learning system trained to detect angle closure from Visante OCT images, which achieved an AUROC of 0.96, sensitivity of 0.90 ± 0.02, and specificity of 0.92 ± 0.008, compared to clinician gradings of the same images. Xu et al. [48] developed a model that could detect gonioscopic angle closure, with an AUROC of 0.928 in the test dataset of Chinese-American eyes and an AUC of 0.933 on the cross-validation dataset, also with AUCs of 0.964 and 0.952 for detecting primary angle closure disease (PACD) based on 2- and 3-quadrant definitions, respectively. OCT-A provides dynamic imaging to map the red blood cell movement over time at a given cross-section. Bowd et al. [49] trained a deep learning model on en face 4.5 × 4.5 mm radial peripapillary capillary OCT-A optic nerve head vessel density images. The model showed improvement compared to the gradient boosting classifier analysis of the built-in software in the OCT-A device.

In addition, Machine-to-Machine (M2M) approaches that predict RNFL thickness from fundus photographs are also a growing area of research. OCT has become the standard of care to objectively quantify structural damage in glaucoma [50], but it is expensive and not easily portable. M2M approaches can be used to quantify (not just qualify) glaucomatous damage, especially in low-resource settings without OCT access. Medeiros et al. [51] developed a machine learning classifier for glaucomatous damage in fundus photos, using OCT-derived RNFL thickness as a reference. The model showed a strong correlation (r = 0.832) with actual RNFL values and identified glaucomatous damage with an AUC of 0.944, though 30% of OCT variance was unaccounted for. Thompson et al. [52] employed a similar approach but used a different reference standard from OCT: the Bruch’s membrane opening-minimum rim width (BMO-MRW) parameter. Again, predictions from the deep learning model were well correlated with the actual BMO-MRW values (Pearson’s r = 0.88, p < 0.001), with an AUC of 0.933 for distinguishing glaucomatous from healthy eyes using the deep learning predictions.
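The correlation and the unexplained variance quoted for the Medeiros et al. model are linked through the coefficient of determination. Assuming the "30%" figure refers to the share of variance in OCT-derived RNFL thickness not explained by the predictions (an interpretation, not stated explicitly above), the arithmetic is:

$$
r = 0.832 \;\Rightarrow\; r^2 = 0.832^2 \approx 0.692, \qquad 1 - r^2 \approx 0.308
$$

So roughly 69% of the variance is explained and about 31% is not, consistent with the approximate 30% figure.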

As researchers venture into the realm of AI’s practical applications in glaucoma diagnosis, it is crucial to shift the focus from controlled research environments to real-world clinical settings. The efficacy and reliability of AI technologies must be critically evaluated in diverse clinical environments to understand their performance and applicability in routine clinical practice. The intricacies of real-world application, such as varied patient demographics, differing equipment, and non-standardized operating procedures, present unique challenges that are not typically encountered in controlled research settings.

Recent studies have begun to address this gap by conducting field trials and observational studies in various clinical settings. For example, the use of AI in community eye clinics and in regions with limited access to specialized care provides valuable insights into the performance of these technologies outside traditional research environments. These studies often highlight the need for robust AI models that can adapt to varying image qualities and different patient populations. Additionally, the integration of AI into existing healthcare workflows and its impact on clinical decision-making processes are being actively explored. These real-world evaluations are critical in ensuring that AI technologies not only meet the stringent requirements of clinical validation but also demonstrate practical utility and scalability in diverse healthcare settings.

4.2. Visual Fields and the Power of Hybrid Models

In addition to OCT scans, visual fields have been explored for AI-enabled diagnosis of glaucoma. Standard automated perimetry (SAP) using the Humphrey Field Analyzer has been the main method for assessing visual field defects in glaucoma. SAP provides numerical data on light sensitivity at different visual field locations, as well as summary indices like mean deviation. Beginning in the 1990s, machine learning techniques like artificial neural networks were applied to analyze and interpret SAP visual fields for glaucoma diagnosis. More recently, CNNs have also been trained using raw visual field data or probability maps to classify fields as normal versus glaucomatous [53][54].

Li et al. [54] trained a deep learning algorithm with the probability map of the pattern deviation image, showing that it distinguished normal from glaucomatous visual fields more accurately (accuracy 87.6%) than human graders (62.6%), the Glaucoma Staging System 2 (52.3%), or the Advanced Glaucoma Intervention Study criteria (45.9%). Although it is considered one of the most robust algorithms using visual fields, one limitation is that the input pattern deviation images may preclude early glaucoma from being identified. Elze et al. [55] used “archetypal analysis” to classify patterns of visual field loss, such as arcuate defects, finding good correspondence to human classifications from the Ocular Hypertension Treatment Study (OHTS). In a follow-up study also using archetypal analysis, Wang et al. [56] classified central visual field patterns in glaucoma, showing that specific subtypes with nasal defects were associated with more severe total central loss in the future. Brusini et al. [57] developed a model that could identify local patterns of visual field loss and classify and quantify the degree of severity based on subjective assessments. Li et al. [58] developed iGlaucoma, a smartphone application-based deep learning algorithm that extracts data points in the visual field using optical character recognition techniques. This software outperformed ophthalmologist readers and has undergone real-world prospective external validation testing.

Early studies suggest that hybrid deep learning models that combine structural and functional tests have increased performance over models trained with either test alone [59]. Such models better mimic a clinical diagnosis from eye specialists, which is typically multimodal and does not rely on a single imaging modality as input. Xiong et al. [60] showed that a multimodal algorithm using both visual fields and OCT scans to detect glaucomatous optic neuropathy had superior performance compared with models that relied on each modality alone. Other groups have focused on prediction models, such as prediction of visual field sensitivities from RNFL thickness from OCT [61][62][63][64]. Using fundus photographs to predict RNFL thickness has been shown to predict future development of field defects in eyes of glaucoma suspects [65]. Lee et al. [66] trained a deep learning algorithm to predict mean deviation from optic disc photographs, which could be useful when SAPs are not available. Sedai et al. [67] combined multimodal information into a model, using clinical data (age, IOP, inter-visit interval), circumpapillary (cp) RNFL thickness from OCT, and visual field sensitivities to predict cpRNFL thickness at the subsequent visit. This model showed consistent performance among suspects and cases and could potentially be used to personalize the frequency of follow-up visits for patients.

4.3. Challenges and Future Prospects for AI in Glaucoma Diagnosis

One major barrier shared by all algorithms trained to diagnose glaucoma is the lack of a gold standard definition for the presence and progression of this disease. Numerous studies cite high interprovider variability in glaucoma diagnosis [68][69], which serves as the reference standard for evaluating algorithm outputs. A clear, concrete definition of glaucoma could help set the bar for model accuracy [34]. For example, diabetic retinopathy has an agreed upon classification system, allowing a more straightforward approach to developing AI for diagnostic applications with proven success; in 2018, a deep learning system for diagnosis of this disease in diabetic patients received FDA approval for use in primary care clinics [20]. This system documents the appearance of the optic nerve but is not approved to diagnose glaucoma at this time.

Another key challenge in diagnosis is how to design the interface between the clinician and the AI model. For instance, recent work has demonstrated that professional radiologists selectively comply with AI recommendations in a suboptimal way, which can lead to worse performance than desired [70]. Relatedly, selective compliance has also led to issues with racial bias in other domains [71]. Techniques such as explainability have been proposed to help bridge this gap, though significant challenges remain to ensuring reliability of explainability techniques [72]. As an alternative, high-quality uncertainty quantification has been shown to help improve end-user trust in other domains [73] and may be valuable for clinical decision support systems as well.
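One way to make uncertainty quantification concrete is split conformal prediction, sketched below. This technique is chosen here purely for illustration; the text above does not specify which uncertainty method the cited work uses. Instead of a single label, the model returns a set of labels that, under the usual exchangeability assumption, contains the true label with probability of at least 1 − alpha. All names and numbers are hypothetical.

```python
import math


def conformal_threshold(cal_probs, cal_labels, alpha):
    """Score threshold from a held-out calibration set.

    cal_probs: per-case class probabilities, e.g. [p_healthy, p_glaucoma].
    cal_labels: index of the true class for each calibration case.
    alpha: tolerated miscoverage rate (0.1 targets 90% coverage).
    """
    # Nonconformity score: one minus the probability assigned to the true class.
    scores = sorted(1.0 - probs[label] for probs, label in zip(cal_probs, cal_labels))
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        # Too few calibration cases for this alpha: include every class.
        return float("inf")
    return scores[rank - 1]


def prediction_set(probs, threshold):
    """All classes whose nonconformity score is within the calibrated threshold."""
    return [cls for cls, p in enumerate(probs) if 1.0 - p <= threshold]


# Tiny made-up calibration set (class 0 = healthy, class 1 = glaucoma).
calibration_probs = [[0.9, 0.1], [0.55, 0.45], [0.2, 0.8]]
calibration_labels = [0, 1, 1]
threshold = conformal_threshold(calibration_probs, calibration_labels, alpha=0.25)

confident_case = prediction_set([0.95, 0.05], threshold)  # single label
ambiguous_case = prediction_set([0.55, 0.45], threshold)  # both labels
```

A set containing more than one label is an explicit signal that the model is unsure, which could be used to route that case to a clinician rather than presenting an overconfident recommendation.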

Moreover, each imaging modality also poses its own set of challenges to implementation in real-world settings. OCT machines are expensive and therefore not as applicable for low-resource areas. Additionally, as with fundus images, anatomic abnormalities can influence results, and there is a lack of interchangeability across OCT devices [46]. Visual field testing is subjective and can be affected by patient factors such as attention and fatigue [74]. Additionally, most models are trained using visual field tests labelled as reliable and may not be able to identify unreliable exams, which are very common in clinical settings. Finally, because structural changes are known to precede functional damage in glaucoma, it can be difficult to provide an early diagnosis using visual fields. As a result, many AI applications using visual fields are better suited to assessing disease progression than to diagnosis. There are also several barriers to the development and implementation of hybrid models. Such models require paired data from imaging modalities in training and testing datasets, which imposes limits on the availability and feasibility of data collection. When using multiple input types, there is also a need to add more training data to avoid overfitting [75].

References

- Quigley, H.A. Number of people with glaucoma worldwide. Br. J. Ophthalmol. 1996, 80, 389–393.

- Yuksel Elgin, C.; Chen, D.; Al-Aswad, L.A. Ophthalmic imaging for the diagnosis and monitoring of glaucoma: A review. Clin. Exp. Ophthalmol. 2022, 50, 183–197.

- Weinreb, R.N.; Leung, C.K.; Crowston, J.G.; Medeiros, F.A.; Friedman, D.S.; Wiggs, J.L.; Martin, K.R. Primary open-angle glaucoma. Nat. Rev. Dis. Primers 2016, 2, 16067.

- Yarmohammadi, A.; Zangwill, L.M.; Diniz-Filho, A.; Suh, M.H.; Yousefi, S.; Saunders, L.J.; Belghith, A.; Manalastas, P.I.C.; Medeiros, F.A.; Weinreb, R.N. Relationship between optical coherence tomography angiography vessel density and severity of visual field loss in glaucoma. Ophthalmology 2016, 123, 2498–2508.

- Sharma, P.; Sample, P.A.; Zangwill, L.M.; Schuman, J.S. Diagnostic Tools for Glaucoma Detection and Management. Surv. Ophthalmol. 2008, 53, S17–S32.

- Devalla, S.K.; Liang, Z.; Pham, T.H.; Boote, C.; Strouthidis, N.G.; Thiery, A.H.; Girard, M.J.A. Glaucoma management in the era of artificial intelligence. Br. J. Ophthalmol. 2019, 104, 301–311.

- Zhang, L.; Tang, L.; Xia, M.; Cao, G. The application of artificial intelligence in glaucoma diagnosis and prediction. Front. Cell Dev. Biol. 2023, 11, 1173094.

- Asrani, S.; Essaid, L.; Alder, B.D.; Santiago-Turla, C. Artifacts in Spectral-Domain Optical Coherence Tomography Measurements in Glaucoma. JAMA Ophthalmol. 2014, 132, 396–402.

- Komura, D.; Ishikawa, S. Machine Learning Methods for Histopathological Image Analysis. Comput. Struct. Biotechnol. J. 2018, 16, 34–42.

- Coan, L.J.; Williams, B.M.; Adithya, V.K.; Upadhyaya, S.; Alkafri, A.; Czanner, S.; Venkatesh, R.; Willoughby, C.E.; Kavitha, S.; Czanner, G. Automatic detection of glaucoma via fundus imaging and artificial intelligence: A review. Surv. Ophthalmol. 2022, 68, 17–41.

- Song, C.; Ben-Shlomo, G.; Que, L. A Multifunctional Smart Soft Contact Lens Device Enabled by Nanopore Thin Film for Glaucoma Diagnostics and In Situ Drug Delivery. J. Microelectromech. Syst. 2019, 28, 810–816.

- Orlando, J.I.; Fu, H.; Breda, J.B.; van Keer, K.; Bathula, D.R.; Diaz-Pinto, A.; Fang, R.; Heng, P.-A.; Kim, J.; Lee, J.; et al. REFUGE Challenge: A unified framework for evaluating automated methods for glaucoma assessment from fundus photographs. Med. Image Anal. 2020, 59, 101570.

- Gambhir, S.S.; Ge, T.J.; Vermesh, O.; Spitler, R.; Gold, G.E. Continuous health monitoring: An opportunity for precision health. Sci. Transl. Med. 2021, 13, eabe5383.

- Zhang, J.; Kim, K.; Kim, H.J.; Meyer, D.; Park, W.; Lee, S.A.; Dai, Y.; Kim, B.; Moon, H.; Shah, J.V.; et al. Smart soft contact lenses for continuous 24-hour monitoring of intraocular pressure in glaucoma care. Nat. Commun. 2022, 13, 5518.

- Susanna, R., Jr.; De Moraes, C.G.; Cioffi, G.A.; Ritch, R. Why Do People (Still) Go Blind from Glaucoma? Transl. Vis. Sci. Technol. 2015, 4, 1.

- Thompson, A.C.; Jammal, A.A.; Medeiros, F.A. A review of deep learning for screening, diagnosis, and detection of glaucoma progression. Transl. Vis. Sci. Technol. 2020, 9, 42.

- Delgado, M.F.; Abdelrahman, A.M.; Terahi, M.; Woll, J.J.M.Q.; Gil-Carrasco, F.; Cook, C.; Benharbit, M.; Boisseau, S.; Chung, E.; Hadjiat, Y.; et al. Management of Glaucoma in Developing Countries: Challenges and Opportunities for Improvement. Clin. Outcomes Res. 2019, 11, 591–604.

- Myers, J.S.; Fudemberg, S.J.; Lee, D. Evolution of optic nerve photography for glaucoma screening: A review. Clin. Exp. Ophthalmol. 2017, 46, 169–176.

- Ittoop, S.M.; Jaccard, N.; Lanouette, G.; Kahook, M.Y. The Role of Artificial Intelligence in the Diagnosis and Management of Glaucoma. J. Glaucoma 2021, 31, 137–146.

- Abràmoff, M.D.; Lavin, P.T.; Birch, M.; Shah, N.; Folk, J.C. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit. Med. 2018, 1, 39.

- Swaminathan, S.S.; Jammal, A.A.; Berchuck, S.I.; Medeiros, F.A. Rapid initial OCT RNFL thinning is predictive of faster visual field loss during extended follow-up in glaucoma. Am. J. Ophthalmol. 2021, 229, 100–107.

- Miller, S.E.; Thapa, S.; Robin, A.L.; Niziol, L.M.; Ramulu, P.Y.; Woodward, M.A.; Paudyal, I.; Pitha, I.; Kim, T.N.; Newman-Casey, P.A. Glaucoma Screening in Nepal: Cup-to-Disc Estimate With Standard Mydriatic Fundus Camera Compared to Portable Nonmydriatic Camera. Am. J. Ophthalmol. 2017, 182, 99–106.

- Mirzania, D.; Thompson, A.C.; Muir, K.W. Applications of deep learning in detection of glaucoma: A systematic review. Eur. J. Ophthalmol. 2020, 31, 1618–1642.

- Li, Z.; He, Y.; Keel, S.; Meng, W.; Chang, R.T.; He, M. Efficacy of a Deep Learning System for Detecting Glaucomatous Optic Neuropathy Based on Color Fundus Photographs. Ophthalmology 2018, 125, 1199–1206.

- Wassel, M.; Hamdi, A.M.; Adly, N.; Torki, M. Vision Transformers Based Classification for Glaucomatous Eye Condition. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 5082–5088.

- Fan, R.; Alipour, K.; Bowd, C.; Christopher, M.; Brye, N.; Proudfoot, J.A.; Goldbaum, M.H.; Belghith, A.; Girkin, C.A.; Fazio, M.A.; et al. Detecting glaucoma from fundus photographs using deep learning without convolutions: Transformer for improved generalization. Ophthalmol. Sci. 2023, 3, 100233.

- Al-Aswad, L.A.; Kapoor, R.; Chu, C.K.; Walters, S.; Gong, D.; Garg, A.; Gopal, K.; Patel, V.; Sameer, T.; Rogers, T.W.; et al. Evaluation of a Deep Learning System For Identifying Glaucomatous Optic Neuropathy Based on Color Fundus Photographs. J. Glaucoma 2019, 28, 1029–1034.

- Ahn, J.M.; Kim, S.; Ahn, K.-S.; Cho, S.-H.; Lee, K.B.; Kim, U.S. A deep learning model for the detection of both advanced and early glaucoma using fundus photography. PLoS ONE 2018, 13, e0207982.

- Liu, H.; Li, L.; Wormstone, I.M.; Qiao, C.; Zhang, C.; Liu, P.; Li, S.; Wang, H.; Mou, D.; Pang, R.; et al. Development and Validation of a Deep Learning System to Detect Glaucomatous Optic Neuropathy Using Fundus Photographs. JAMA Ophthalmol. 2019, 137, 1353–1360.

- Hemelings, R.; Elen, B.; Barbosa-Breda, J.; Blaschko, M.B.; De Boever, P.; Stalmans, I. Deep learning on fundus images detects glaucoma beyond the optic disc. Sci. Rep. 2021, 11, 20313.

- Ting, D.S.W.; Cheung, C.Y.-L.; Lim, G.; Tan, G.S.W.; Quang, N.D.; Gan, A.; Hamzah, H.; Garcia-Franco, R.; Yeo, I.Y.S.; Lee, S.Y.; et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA 2017, 318, 2211–2223.

- Rogers, T.W.; Jaccard, N.; Carbonaro, F.; Lemij, H.G.; Vermeer, K.A.; Reus, N.J.; Trikha, S. Evaluation of an AI system for the automated detection of glaucoma from stereoscopic optic disc photographs: The European Optic Disc Assessment Study. Eye 2019, 33, 1791–1797.

- Whitestone, N.; Nkurikiye, J.; Patnaik, J.L.; Jaccard, N.; Lanouette, G.; Cherwek, D.H.; Congdon, N.; Mathenge, W. Feasibility and acceptance of artificial intelligence-based diabetic retinopathy screening in Rwanda. Br. J. Ophthalmol. 2023.

- AlRyalat, S.A.; Singh, P.; Kalpathy-Cramer, J.; Kahook, M.Y. Artificial Intelligence and Glaucoma: Going Back to Basics. Clin. Ophthalmol. 2023, 17, 1525–1530.

- Burgansky-Eliash, Z.; Wollstein, G.; Chu, T.; Ramsey, J.D.; Glymour, C.; Noecker, R.J.; Ishikawa, H.; Schuman, J.S. Optical Coherence Tomography Machine Learning Classifiers for Glaucoma Detection: A Preliminary Study. Investig. Ophthalmol. Vis. Sci. 2005, 46, 4147–4152.

- Barella, K.A.; Costa, V.P.; Vidotti, V.G.; Silva, F.R.; Dias, M.; Gomi, E.S. Glaucoma Diagnostic Accuracy of Machine Learning Classifiers Using Retinal Nerve Fiber Layer and Optic Nerve Data from SD-OCT. J. Ophthalmol. 2013, 2013, 789129.

- Christopher, M.; Belghith, A.; Weinreb, R.N.; Bowd, C.; Goldbaum, M.H.; Saunders, L.J.; Medeiros, F.A.; Zangwill, L.M. Retinal Nerve Fiber Layer Features Identified by Unsupervised Machine Learning on Optical Coherence Tomography Scans Predict Glaucoma Progression. Investig. Ophthalmol. Vis. Sci. 2018, 59, 2748–2756.

- Shin, Y.; Cho, H.; Jeong, H.C.; Seong, M.; Choi, J.-W.; Lee, W.J. Deep Learning-based Diagnosis of Glaucoma Using Wide-field Optical Coherence Tomography Images. J. Glaucoma 2021, 30, 803–812.

- Hood, D.C.; La Bruna, S.; Tsamis, E.; Thakoor, K.A.; Rai, A.; Leshno, A.; de Moraes, C.G.; Cioffi, G.A.; Liebmann, J.M. Detecting glaucoma with only OCT: Implications for the clinic, research, screening, and AI development. Prog. Retin. Eye Res. 2022, 90, 101052.

- Thakoor, K.A.; Koorathota, S.C.; Hood, D.C.; Sajda, P. Robust and Interpretable Convolutional Neural Networks to Detect Glaucoma in Optical Coherence Tomography Images. IEEE Trans. Biomed. Eng. 2021, 68, 2456–2466.

- Muhammad, H.B.; Fuchs, T.J.; De Cuir, N.; De Moraes, C.G.; Blumberg, D.M.; Liebmann, J.M.; Ritch, R.; Hood, D.C. Hybrid Deep Learning on Single Wide-field Optical Coherence Tomography Scans Accurately Classifies Glaucoma Suspects. J. Glaucoma 2017, 26, 1086–1094.

- Miki, A.; Kumoi, M.; Usui, S.; Endo, T.; Kawashima, R.; Morimoto, T.; Matsushita, K.; Fujikado, T.; Nishida, K. Prevalence and Associated Factors of Segmentation Errors in the Peripapillary Retinal Nerve Fiber Layer and Macular Ganglion Cell Complex in Spectral-domain Optical Coherence Tomography Images. J. Glaucoma 2017, 26, 995–1000.

- Mariottoni, E.B.; Jammal, A.A.; Urata, C.N.; Berchuck, S.I.; Thompson, A.C.; Estrela, T.; Medeiros, F.A. Quantification of Retinal Nerve Fibre Layer Thickness on Optical Coherence Tomography with a Deep Learning Segmentation-Free Approach. Sci. Rep. 2020, 10, 402.

- Thompson, A.C.; Jammal, A.A.; Berchuck, S.I.; Mariottoni, E.B.; Medeiros, F.A. Assessment of a Segmentation-Free Deep Learning Algorithm for Diagnosing Glaucoma From Optical Coherence Tomography Scans. JAMA Ophthalmol. 2020, 138, 333–339.

- Maetschke, S.; Antony, B.; Ishikawa, H.; Wollstein, G.; Schuman, J.; Garnavi, R. A feature agnostic approach for glaucoma detection in OCT volumes. PLoS ONE 2019, 14, e0219126.

- Chen, D.; Ran, E.A.; Tan, T.F.; Ramachandran, R.; Li, F.; Cheung, C.; Yousefi, S.; Tham, C.C.F.; Ting, D.S.; Zhang, X.; et al. Applications of Artificial Intelligence and Deep Learning in Glaucoma. Asia-Pacific J. Ophthalmol. 2023, 12, 80–93.

- Fu, H.; Baskaran, M.; Xu, Y.; Lin, S.; Wong, D.W.K.; Liu, J.; Tun, T.A.; Mahesh, M.; Perera, S.A.; Aung, T. A Deep Learning System for Automated Angle-Closure Detection in Anterior Segment Optical Coherence Tomography Images. Am. J. Ophthalmol. 2019, 203, 37–45.

- Xu, B.Y.; Chiang, M.; Chaudhary, S.; Kulkarni, S.; Pardeshi, A.A.; Varma, R. Deep Learning Classifiers for Automated Detection of Gonioscopic Angle Closure Based on Anterior Segment OCT Images. Am. J. Ophthalmol. 2019, 208, 273–280.

- Bowd, C.; Belghith, A.; Zangwill, L.M.; Christopher, M.; Goldbaum, M.H.; Fan, R.; Rezapour, J.; Moghimi, S.; Kamalipour, A.; Hou, H.; et al. Deep Learning Image Analysis of Optical Coherence Tomography Angiography Measured Vessel Density Improves Classification of Healthy and Glaucoma Eyes. Am. J. Ophthalmol. 2021, 236, 298–308.

- Tatham, A.J.; Medeiros, F.A. Detecting Structural Progression in Glaucoma with Optical Coherence Tomography. Ophthalmology 2017, 124, S57–S65.

- Medeiros, F.A.; Jammal, A.A.; Thompson, A.C. From machine to machine: An OCT-trained deep learning algorithm for objective quantification of glaucomatous damage in fundus photographs. Ophthalmology 2019, 126, 513–521.

- Thompson, A.C.; Jammal, A.A.; Medeiros, F.A. A Deep Learning Algorithm to Quantify Neuroretinal Rim Loss From Optic Disc Photographs. Am. J. Ophthalmol. 2019, 201, 9–18.

- Asaoka, R.; Murata, H.; Hirasawa, K.; Fujino, Y.; Matsuura, M.; Miki, A.; Kanamoto, T.; Ikeda, Y.; Mori, K.; Iwase, A.; et al. Using Deep Learning and Transfer Learning to Accurately Diagnose Early-Onset Glaucoma From Macular Optical Coherence Tomography Images. Am. J. Ophthalmol. 2018, 198, 136–145.

- Li, F.; Wang, Z.; Qu, G.; Song, D.; Yuan, Y.; Xu, Y.; Gao, K.; Luo, G.; Xiao, Z.; Lam, D.S.C.; et al. Automatic differentiation of Glaucoma visual field from non-glaucoma visual filed using deep convolutional neural network. BMC Med. Imaging 2018, 18, 35.

- Elze, T.; Pasquale, L.R.; Shen, L.Q.; Chen, T.C.; Wiggs, J.L.; Bex, P.J. Patterns of functional vision loss in glaucoma determined with archetypal analysis. J. R. Soc. Interface 2015, 12, 20141118.

- Wang, M.; Tichelaar, J.; Pasquale, L.R.; Shen, L.Q.; Boland, M.V.; Wellik, S.R.; De Moraes, C.G.; Myers, J.S.; Ramulu, P.; Kwon, M.; et al. Characterization of Central Visual Field Loss in End-stage Glaucoma by Unsupervised Artificial Intelligence. JAMA Ophthalmol. 2020, 138, 190–198.

- Brusini, P. Clinical use of a New Method for Visual Field Damage Classification in Glaucoma. Eur. J. Ophthalmol. 1996, 6, 402–407.

- Li, F.; Song, D.; Chen, H.; Xiong, J.; Li, X.; Zhong, H.; Tang, G.; Fan, S.; Lam, D.S.C.; Pan, W.; et al. Development and clinical deployment of a smartphone-based visual field deep learning system for glaucoma detection. NPJ Digit. Med. 2020, 3, 123.

- Yang, H.; Liu, J.; Sui, J.; Pearlson, G.; Calhoun, V.D. A Hybrid Machine Learning Method for Fusing fMRI and Genetic Data: Combining both Improves Classification of Schizophrenia. Front. Hum. Neurosci. 2010, 4, 192.

- Xiong, J.; Li, F.; Song, D.; Tang, G.; He, J.; Gao, K.; Zhang, H.; Cheng, W.; Song, Y.; Lin, F.; et al. Multimodal Machine Learning Using Visual Fields and Peripapillary Circular OCT Scans in Detection of Glaucomatous Optic Neuropathy. Ophthalmology 2021, 129, 171–180.

- Christopher, M.; Bowd, C.; Belghith, A.; Goldbaum, M.H.; Weinreb, R.N.; Fazio, M.A.; Girkin, C.A.; Liebmann, J.M.; Zangwill, L.M. Deep Learning Approaches Predict Glaucomatous Visual Field Damage from OCT Optic Nerve Head En Face Images and Retinal Nerve Fiber Layer Thickness Maps. Ophthalmology 2019, 127, 346–356.

- Hashimoto, Y.; Asaoka, R.; Kiwaki, T.; Sugiura, H.; Asano, S.; Murata, H.; Fujino, Y.; Matsuura, M.; Miki, A.; Mori, K.; et al. Deep learning model to predict visual field in central 10° from optical coherence tomography measurement in glaucoma. Br. J. Ophthalmol. 2020, 105, 507–513.

- Mariottoni, E.B.; Datta, S.; Dov, D.; Jammal, A.A.; Berchuck, S.I.; Tavares, I.M.; Carin, L.; Medeiros, F.A. Artificial Intelligence Mapping of Structure to Function in Glaucoma. Transl. Vis. Sci. Technol. 2020, 9, 19.

- Park, K.; Kim, J.; Lee, J. A deep learning approach to predict visual field using optical coherence tomography. PLoS ONE 2020, 15, e0234902.

- Lee, T.; Jammal, A.A.; Mariottoni, E.B.; Medeiros, F.A. Predicting Glaucoma Development With Longitudinal Deep Learning Predictions From Fundus Photographs. Am. J. Ophthalmol. 2021, 225, 86–94.

- Lee, J.; Kim, Y.W.; Ha, A.; Kim, Y.K.; Park, K.H.; Choi, H.J.; Jeoung, J.W. Estimating visual field loss from monoscopic optic disc photography using deep learning model. Sci. Rep. 2020, 10, 21052.

- Sedai, S.; Antony, B.; Ishikawa, H.; Wollstein, G.; Schuman, J.S.; Garnavi, R. Forecasting Retinal Nerve Fiber Layer Thickness from Multimodal Temporal Data Incorporating OCT Volumes. Ophthalmol. Glaucoma 2019, 3, 14–24.

- Abrams, L.S.; Scott, I.U.; Spaeth, G.L.; Quigley, H.A.; Varma, R. Agreement among optometrists, ophthalmologists, and residents in evaluating the optic disc for glaucoma. Ophthalmology 1994, 101, 1662–1667.

- Varma, R.; Steinmann, W.C.; Scott, I.U. Expert Agreement in Evaluating the Optic Disc for Glaucoma. Ophthalmology 1992, 99, 215–221.

- Agarwal, N.; Moehring, A.; Rajpurkar, P.; Salz, T. Combining Human Expertise with Artificial Intelligence: Experimental Evidence from Radiology (No. w31422); National Bureau of Economic Research: Cambridge, MA, USA, 2023.

- Stevenson, M.T.; Doleac, J.L. Algorithmic Risk Assessment in the Hands of Humans. Available at SSRN 3489440. 2022. Available online: https://scholar.google.com.tw/scholar?hl=zh-TW&as_sdt=0%2C5&q=Stevenson%2C+M.+T.%2C+%26+Doleac%2C+J.+L.+%282022%29.+Algorithmic+risk+assessment+in+the+hands+of+humans.+Available+at+SSRN+3489440.&btnG= (accessed on 3 December 2023).

- Ghassemi, M.; Oakden-Rayner, L.; Beam, A.L. The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit. Health 2021, 3, e745–e750.

- McGrath, S.; Mehta, P.; Zytek, A.; Lage, I.; Lakkaraju, H. When does uncertainty matter?: Understanding the impact of predictive uncertainty in ML assisted decision making. arXiv 2020, arXiv:2011.06167.

- Mendieta, N.; Suárez, J.; Barriga, N.; Herrero, R.; Barrios, B.; Guarro, M. How Do Patients Feel About Visual Field Testing? Analysis of Subjective Perception of Standard Automated Perimetry. Semin. Ophthalmol. 2021, 36, 35–40.

- Zheng, C.; Johnson, T.V.; Garg, A.; Boland, M.V. Artificial intelligence in glaucoma. Curr. Opin. Ophthalmol. 2019, 30, 97–103.


Update Date:

30 Jan 2024
