
Hasanujjaman, M., Chowdhury, M.Z., & Jang, Y.M. (2024, January 17). Sensor Fusion Technology in Autonomous Vehicles. In Encyclopedia. https://encyclopedia.pub/entry/53929


Complete autonomous systems such as self-driving cars need the most efficient combination of four-dimensional (4D) detection, exact localization, and artificial intelligence (AI) networking to ensure high reliability and human safety and to establish a fully automated smart transportation system. Sensor fusion, also called multisensory data fusion or sensor data fusion, is used to improve specific detection tasks. In autonomous vehicles (AVs), the primary sensors, namely cameras, radio detection and ranging (RADAR), and light detection and ranging (LiDAR), are used for object detection, localization, and classification.

Keywords: autonomous vehicle; AI networking; sensor fusion; traffic surveillance camera

1. Introduction

Major autonomous-driving technology companies and investors such as Tesla, Waymo, Apple, Kia–Hyundai, Ford, Audi, and Huawei are competing to develop more reliable, efficient, safe, and user-friendly autonomous vehicle (AV) smart transportation systems, driven not only by competitive technological demand but also by the broader safety imperative of protecting life and property. According to the World Health Organization (WHO), approximately 1.35 million people are killed around the world each year in crashes involving cars, buses, trucks, motorcycles, bicycles, or pedestrians [1], and road injuries are estimated to cost the world economy USD 1.8 trillion over 2015–2030 [2]. The National Highway Traffic Safety Administration (NHTSA) found that between 94% and 96% of all motor vehicle accidents are caused by various types of human error [3]. To ensure human safety and comfort, researchers are working toward a fully automated transportation system in which such errors and faults are eliminated.

In January 2009, Google started developing self-driving car technology at its Google X lab. After a long period of research on improving sensor efficiency, Google completed the world's first fully driverless ride on public roads in 2015, carrying a blind passenger under Project Chauffeur, which was renamed Waymo in December 2016 [4]. Tesla began its Autopilot project in 2013 and, after several modifications, in September 2020 reintroduced an enhanced Autopilot capable of highway travel, parking, and summoning, including navigation on city roads [5]. The other companies mentioned above are also steadily improving their technology to achieve a competitive full-automation system that provides the greatest benefit for human safety, security, comfort, and smart transportation. Although the reported success rate of autonomous rides during public-road testing is now higher than that of human-driven cars, it is not yet sufficient for full automation, and several errors, faults, and accidents have been recorded [6][7][8]. A highly reliable, error-free self-driving car is essential to build public trust in AVs. Light detection and ranging (LiDAR), radio detection and ranging (RADAR), and car cameras are the most widely used sensors in AV technologies for the detection, localization, and ranging of objects [9][10][11][12][13][14].

AV perception systems [15] depend on the onboard sensing and processing of vehicle sensors such as cameras, RADAR, LiDAR, and ultrasonic sensors. In intelligent transportation systems (ITS), this type of AV sensing is called single vehicle intelligence (SVI). In an SVI system, the AV captures visual data about objects with cameras, measures the relative velocity of objects or obstacles with RADAR sensors, maps the environment with the LiDAR sensor, and uses ultrasonic sensors for accurate very-short-range distance detection during parking. AVs with SVI can drive autonomously using their sensor detections, but they cannot form a node-to-node network, because SVI is a unidirectional system: each vehicle only senses its driving environment in order to drive on its own.

Connected and autonomous vehicles (CAVs), on the other hand, are operated by the connected vehicle intelligence (CVI) system in ITS [16]. CVI uses vehicle-to-everything (V2X) communication to assist CAV driving. V2X combines vehicle-to-vehicle (V2V), vehicle-to-pedestrian (V2P), vehicle-to-infrastructure (V2I), and vehicle-to-network (V2N) communication. In general, a CVI system for CAVs can build a node-to-node wireless network in which the central node (the vehicle, for V2I and V2P) or the principal nodes (for V2V) within the system's communication range can receive and (for V2N) exchange data packets. Dedicated short-range communication (DSRC), with a communication range of about 300 m, was initially used for vehicular communication. To develop advanced and secure CAV systems, further protocols were developed, such as IEEE 802.11p in March 2012. For more effective and reliable V2X communication, long-term evolution V2X (LTE-V2X) and new radio V2X (NR-V2X) were developed in Releases 14 to 17 of the 3rd Generation Partnership Project (3GPP) between 2017 and 2021 [17]. The features of AVs and CAVs are summarized in Table 1.

Table 1. Summarized features of AVs and CAVs.

| Feature | AVs | CAVs |
|---|---|---|
| Intelligence | SVI | CVI |
| Networking | Sensor network | Wireless communication network |
| Range | Approximately 250 m | 300 m (DSRC) to 600 m (NR-V2X) |
| Communication | Object sensing by onboard sensors | Node-to-node communication |
| Reliability | Reliable (not exactly defined) | 95% (LTE-V2X), 99.999% (NR-V2X) |
| Latency | No deterministic delay | Less than 3 ms (LTE-V2X) |
| Direction | Unidirectional | Multidirectional |
| Data rate | N/A | >30 Mbps |

2. Sensor Fusion Technology in Autonomous Vehicles

Sensors in AV for Object Detection

Self-driving AVs depend entirely on their sensing systems of car cameras, LiDAR, and RADAR. By combining the output data from all of these sensors, a process called sensor fusion, the AV decides whether to keep driving, brake, turn left or right, and so on. Sensing accuracy is therefore crucial for self-driving cars to make error-free driving decisions. Figure 1 shows the areas around an AV that are monitored by its onboard sensors during autonomous driving.
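To make the fusion-to-decision step concrete, the following Python sketch maps one fused obstacle estimate to a coarse action using time to collision (TTC), i.e., the gap divided by the closing speed. TTC, the `FusedObject` record, and the 2 s and 4 s thresholds are illustrative assumptions, not the decision logic of any particular AV.

```python
from dataclasses import dataclass


@dataclass
class FusedObject:
    """One obstacle as reported by the fused perception output (hypothetical format)."""
    distance_m: float         # longitudinal gap to the obstacle
    closing_speed_mps: float  # positive when the gap is shrinking


def time_to_collision(obj: FusedObject) -> float:
    """Seconds until contact at constant closing speed; infinity if the gap is not closing."""
    if obj.closing_speed_mps <= 0.0:
        return float("inf")
    return obj.distance_m / obj.closing_speed_mps


def decide(obj: FusedObject, brake_ttc_s: float = 2.0, caution_ttc_s: float = 4.0) -> str:
    """Map TTC to a coarse action; both thresholds are illustrative assumptions."""
    ttc = time_to_collision(obj)
    if ttc < brake_ttc_s:
        return "brake"
    if ttc < caution_ttc_s:
        return "slow_down"
    return "keep_driving"


print(decide(FusedObject(distance_m=30.0, closing_speed_mps=20.0)))  # TTC 1.5 s
print(decide(FusedObject(distance_m=60.0, closing_speed_mps=20.0)))  # TTC 3.0 s
print(decide(FusedObject(distance_m=60.0, closing_speed_mps=-5.0)))  # gap opening
```

The decision quality of such a rule depends directly on how accurate the fused distance and closing speed are, which is why the sensing accuracy discussed above matters.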

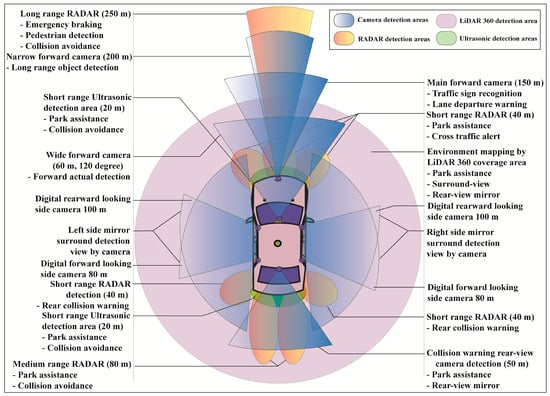

Figure 1. Surround sensing of an autonomous vehicle with different types of cameras, RADARs, LiDAR, and ultrasonic sensors.

Generally, eight camera sets are used in AVs for different detection tasks: one narrow forward camera, one main forward camera, one wide forward camera, four side mirror cameras, and one rear-view camera. The narrow forward camera, with a 200 m capture range, is used for long-range detection of objects ahead. The main forward camera, with a 150 m capture range, is used for traffic sign recognition and lane departure warning. The 120° wide forward camera, with a 60 m detection range, is used for wide-angle forward detection. Of the four side mirror cameras, two with a 100 m capture range each look rearward, and the other two with an 80 m capture range each monitor the forward side area. The rear-view camera, with a 50 m detection range, is mounted at the back of the AV for collision warning, parking assistance, and rear-view mirroring.
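The camera layout described above can be held as a small configuration table and queried, for example to see which cameras nominally reach a given distance. This is a minimal Python sketch: the `CameraSet` record and the helper names are hypothetical, and the ranges are the nominal values quoted in the text, not measured coverage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraSet:
    name: str
    count: int       # number of cameras in this set
    range_m: float   # nominal capture/detection range from the text
    purpose: str


# Camera layout as described in the text; the grouping into records is illustrative.
CAMERA_SUITE = [
    CameraSet("narrow forward", 1, 200, "long-range front object detection"),
    CameraSet("main forward", 1, 150, "traffic sign recognition, lane departure warning"),
    CameraSet("wide forward (120 deg)", 1, 60, "wide-angle forward detection"),
    CameraSet("side mirror, rearward-looking", 2, 100, "rearward side detection"),
    CameraSet("side mirror, forward-looking", 2, 80, "forward side monitoring"),
    CameraSet("rear-view", 1, 50, "collision warning, parking assistance"),
]


def total_cameras(suite):
    """Total number of individual cameras across all sets."""
    return sum(c.count for c in suite)


def cameras_reaching(suite, distance_m):
    """Names of camera sets whose nominal range covers distance_m."""
    return [c.name for c in suite if c.range_m >= distance_m]


print(total_cameras(CAMERA_SUITE))
print(cameras_reaching(CAMERA_SUITE, 120))
```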

Three types of RADAR with different ranges (short-, medium-, and long-range) are used to determine the actual location, position, and relative velocity of objects or obstacles. A long-range RADAR with a 250 m detection range is used for emergency braking, pedestrian detection, and collision avoidance. Three short-range RADARs, each with a 40 m front-side detection range, are used for cross-traffic alerts and parking assistance. Two medium-range RADARs, each with an 80 m detection range at the back of the vehicle, are used for collision avoidance and parking assistance. Another two medium-range RADARs, each with a 40 m rear detection range, are used for rear collision warning. Several short-range ultrasonic sensors with a detection range of about 20 m are also used for collision avoidance and parking assistance.
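RADAR range and relative-velocity measurements rest on two textbook relations: the range is half the round-trip distance travelled by the echo, R = c·Δt/2, and the radial velocity follows from the Doppler shift, v = f_D·c/(2·f_c). The Python sketch below assumes a 77 GHz carrier (automotive radars typically operate in the 76 to 81 GHz band) and ignores the modulation details, such as FMCW beat-frequency processing, that real sensors use to obtain Δt and f_D.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s
CARRIER_HZ = 77e9               # assumed automotive radar carrier frequency


def range_from_round_trip(delay_s: float) -> float:
    """Target range from echo round-trip delay: R = c * t / 2."""
    return SPEED_OF_LIGHT * delay_s / 2.0


def radial_velocity(doppler_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Relative (radial) velocity from Doppler shift: v = f_D * c / (2 * f_c).
    A positive value means the target is approaching."""
    return doppler_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


def doppler_shift(velocity_mps: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Inverse relation: Doppler shift produced by a given radial velocity."""
    return 2.0 * velocity_mps * carrier_hz / SPEED_OF_LIGHT


print(round(range_from_round_trip(1e-6), 3))  # echo after 1 microsecond
print(round(doppler_shift(20.0)))             # target closing at 20 m/s
```

At 77 GHz, a closing speed of 20 m/s produces a Doppler shift of roughly 10 kHz, which is why RADAR can measure relative velocity directly rather than differentiating successive range readings.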

A 360° LiDAR maps the AV's surroundings and is used for surround view, parking assistance, and rear-view mirroring. The proposed sensor fusion algorithm considers the three main types of AV sensors: cameras, RADAR, and LiDAR. RADAR performs far better than ultrasonic sensors for distance and relative velocity measurement. The AV has several cameras for surround view, traffic sign recognition, lane departure warning, side mirroring, and parking assistance. LiDAR is mainly used for environment monitoring and mapping. RADAR provides cross-traffic alerts, pedestrian detection, and collision warnings to avoid accidents. In AVs, short-, medium-, and long-range RADARs are generally used for sensor measurement, and ultrasonic sensors for short-range collision avoidance and parking assistance.
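A LiDAR scan arrives as ranges and beam angles, so building a 3D map starts by converting each return into Cartesian coordinates. A minimal Python sketch, assuming a sensor frame with x forward, y left, z up and azimuth measured counter-clockwise from x (conventions vary between sensors); the function names are hypothetical:

```python
import math


def lidar_return_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one spherical LiDAR return to sensor-frame Cartesian coordinates.
    Assumed frame: x forward, y left, z up; azimuth counter-clockwise from x."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def scan_to_point_cloud(returns):
    """Turn an iterable of (range, azimuth, elevation) tuples into a list of xyz points."""
    return [lidar_return_to_xyz(r, az, el) for r, az, el in returns]


# Toy 360-degree sweep: four returns at 10 m, 90 degrees apart, in the horizontal plane.
sweep = [(10.0, az, 0.0) for az in (0, 90, 180, 270)]
for x, y, z in scan_to_point_cloud(sweep):
    print(f"{x:6.2f} {y:6.2f} {z:6.2f}")
```

Accumulating such points over a full rotation, after correcting for the vehicle's own motion, yields the point cloud used for environment mapping.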

Sensor Fusion Technology in AV

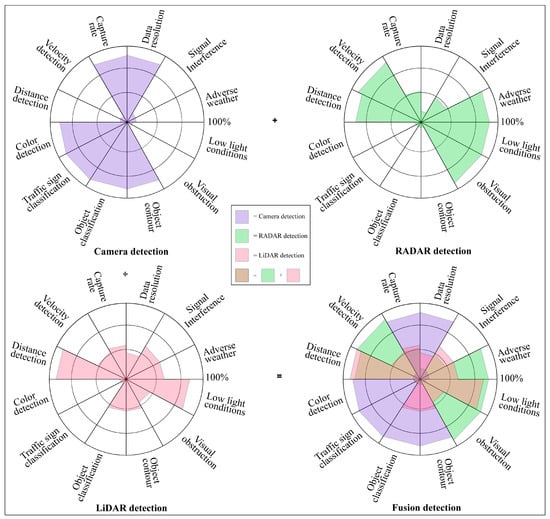

RADAR performs reliably in adverse weather and low-light conditions and has strong sensing capabilities, such as relative velocity detection, identification of visual obstructions, and obstacle distance measurement, but its performance can be degraded by signal interference. RADAR performs poorly at color detection, traffic sign and object classification, object contouring, capture rate, and data resolution. LiDAR is also affected by signal interference, but it excels at 3D mapping, point cloud construction, object distance detection, and operation in low light. Camera-based optical sensing is free of signal interference and performs well in color detection, traffic sign classification, object classification, object contouring, data resolution, and capture rate. Cameras, LiDAR, RADAR, and ultrasonic sensors each have their own detection advantages and limitations [18][19][20][21], which are listed in Table 2. Figure 2 summarizes these detection capabilities graphically [22][23][24].

Figure 2. Graphical view of the detection capabilities of AV camera, RADAR, and LiDAR sensors, individually and with fusion.

Table 2. Pros and cons of camera, LiDAR, RADAR, and ultrasonic detection technologies in AV systems.

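| Sensors | Pros | Cons |
|---|---|---|
| Camera | Free of signal interference; color detection; traffic sign and object classification; object contouring; good data resolution and capture rate | |
| LiDAR | 3D mapping; point cloud construction; object distance detection; works in low light | Affected by signal interference |
| RADAR | Reliable in adverse weather and low light; relative velocity detection; visual obstruction identification; obstacle distance measurement | Affected by signal interference; poor at color detection, traffic sign and object classification, object contouring, capture rate, and data resolution |
| Ultrasonic Sensor | Accurate very-short-range distance detection (about 20 m); collision avoidance and parking assistance | Very short range; distance and relative velocity measurement far inferior to RADAR |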
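One simple way to read Table 2 and Figure 2 together is as a coverage question: which capabilities does the fused sensor suite offer that a single sensor lacks? The Python sketch below encodes the strengths listed in the text as sets and takes their union. The capability labels are hypothetical shorthand, and a real fusion system weighs measurement confidence rather than simple set membership.

```python
# Capability sets paraphrased from the text; labels are hypothetical shorthand.
CAPABILITIES = {
    "camera": {"interference_free", "color", "traffic_sign_classification",
               "object_classification", "object_contour", "high_resolution",
               "high_capture_rate"},
    "lidar": {"3d_mapping", "point_cloud", "distance", "low_light"},
    "radar": {"adverse_weather", "low_light", "relative_velocity",
              "visual_obstruction", "distance"},
}


def fused_capabilities(sensors):
    """Union of what the selected sensors can each detect (a coverage view of fusion)."""
    covered = set()
    for sensor in sensors:
        covered |= CAPABILITIES[sensor]
    return covered


def gaps(sensor, suite):
    """Capabilities the fused suite covers that the single sensor lacks on its own."""
    return fused_capabilities(suite) - CAPABILITIES[sensor]


suite = ["camera", "lidar", "radar"]
print(sorted(gaps("radar", suite)))
print(sorted(gaps("camera", suite)))
```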

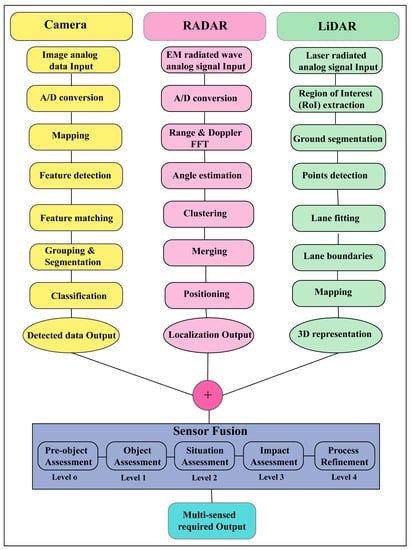

Sensor fusion, also called multisensory data fusion or sensor data fusion, is used to improve specific detection tasks. In AVs, the primary sensors (cameras, RADAR, and LiDAR) are used for object detection, localization, and classification. The proposed system uses the distributed data fusion architecture shown in Figure 3. Data fusion is organized into five levels. In the first level (level 0), pre-object assessment, wide-band and narrow-band digital signal processing and automated feature extraction are performed. The second level (level 1), object assessment, covers image and non-image fusion, hybrid target identification, unification, and variable levels of fidelity. The third and fourth levels (levels 2 and 3), situation assessment and impact assessment, cover a unified theory of uncertainty, automated selection of knowledge representation, and cognitive-based modulation. The fifth level (level 4), process refinement, covers the optimization of non-commensurate sensors, the end-to-end link between inference needs and sensor control parameters, and robust measures of effectiveness (MOE) or measures of performance (MOP). A summarized flow chart of sensor fusion technologies [25][26][27][28] in AVs is shown in Figure 4.
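A common way to make fusion improve a specific detection task quantitatively is inverse-variance weighting of independent estimates of the same quantity: each sensor's reading is weighted by 1/σ², and the fused variance 1/Σ(1/σᵢ²) is never larger than that of the best single sensor. The Python sketch below assumes unbiased, independent errors; the distance readings and variances are made-up values for illustration, not sensor specifications.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent scalar estimates given as [(value, variance), ...].
    Weights are 1/variance; fused variance = 1 / sum(1/variance)."""
    if not estimates:
        raise ValueError("need at least one estimate")
    info = sum(1.0 / var for _, var in estimates)             # total information
    value = sum(val / var for val, var in estimates) / info  # weighted mean
    return value, 1.0 / info


# Hypothetical distance readings (m) and variances (m^2) for one obstacle.
readings = [
    (41.0, 4.0),    # camera: monocular depth, assumed comparatively noisy
    (40.2, 0.25),   # RADAR
    (40.0, 0.01),   # LiDAR
]
d, var = fuse_inverse_variance(readings)
print(f"fused distance {d:.2f} m, variance {var:.4f} m^2")
```

The fused estimate leans toward the most precise sensor while still using the others, and its variance is slightly below the LiDAR variance alone.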

Figure 3. Centralized, decentralized, and distributed fusion architectures used in autonomous system design. The proposed system uses distributed fusion.
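The distributed architecture in Figure 3 can be sketched as local processing followed by track-level fusion: each sensor node runs its own filter and sends only its estimate and variance to a fusion node. In this hypothetical Python sketch, each node runs a scalar Kalman filter with an assumed random-walk model, and the fusion node combines the tracks by inverse-variance weighting. Treating the local tracks as independent is a simplification; methods such as covariance intersection exist for when their correlation is unknown.

```python
from dataclasses import dataclass


@dataclass
class LocalNode:
    """One sensor node that filters its own measurements before sharing a track."""
    name: str
    meas_var: float            # measurement noise variance (assumed known), m^2
    x: float = 0.0             # local estimate, e.g. obstacle distance in m
    p: float = 1e6             # local estimate variance; large value = uninformative prior
    process_var: float = 0.01  # assumed random-walk process noise added each step

    def update(self, z):
        """One scalar Kalman predict/correct step; returns the local track (x, p)."""
        self.p += self.process_var             # predict: static model plus process noise
        k = self.p / (self.p + self.meas_var)  # Kalman gain
        self.x += k * (z - self.x)             # correct toward the new measurement
        self.p *= 1.0 - k                      # shrink uncertainty accordingly
        return self.x, self.p


def fusion_center(tracks):
    """Combine local (x, p) tracks with inverse-variance weights.
    Treats the tracks as independent, ignoring shared process noise (simplification)."""
    info = sum(1.0 / p for _, p in tracks)
    return sum(x / p for x, p in tracks) / info, 1.0 / info


# Hypothetical measurements of one obstacle's distance (m) over three time steps.
nodes = [LocalNode("radar", meas_var=0.25), LocalNode("lidar", meas_var=0.01)]
measurements = {"radar": [40.3, 40.1, 40.2], "lidar": [40.02, 39.98, 40.01]}
for step in range(3):
    tracks = [node.update(measurements[node.name][step]) for node in nodes]
    fused_x, fused_p = fusion_center(tracks)
    print(f"step {step}: fused distance {fused_x:.3f} m, variance {fused_p:.5f} m^2")
```

Only two numbers per node cross the network at each step, which is the bandwidth advantage usually cited for distributed over centralized fusion, where raw measurements are sent to one processor.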

Figure 4. The summarized flow chart of sensor fusion technologies in AVs.

References

1. World Health Organization. Global Status Report on Road Safety. 2018. Available online: https://www.who.int/publications/i/item/9789241565684 (accessed on 5 January 2023).
2. Chen, S.; Kuhn, M.; Prettner, K.; Bloom, D.E. The global macroeconomic burden of road injuries: Estimates and projections for 166 countries. Lancet Planet. Health 2019, 3, e390–e398.
3. National Highway Traffic Safety Administration. 2016. Available online: https://crashstats.nhtsa.dot.gov/Api/Public/Publication/812318 (accessed on 5 January 2023).
4. Waymo. Available online: https://waymo.com/company/ (accessed on 5 January 2023).
5. Tesla. Available online: https://www.tesla.com/autopilot (accessed on 6 January 2023).
6. Teoh, E.R.; Kidd, D.G. Rage against the machine? Google’s self-driving cars versus human drivers. J. Saf. Res. 2017, 63, 57–60.
7. Waymo Safety Report. 2020. Available online: https://storage.googleapis.com/sdc-prod/v1/safety-report/2020-09-waymo-safety-report.pdf (accessed on 6 January 2023).
8. Favarò, F.M.; Nader, N.; Eurich, S.O.; Tripp, M.; Varadaraju, N. Examining accident reports involving autonomous vehicles in California. PLoS ONE 2017, 12, e0184952.
9. Crouch, S. Velocity Measurement in Automotive Sensing: How FMCW Radar and Lidar Can Work Together. IEEE Potentials 2019, 39, 15–18.
10. Hecht, J. LIDAR for Self-Driving Cars. Opt. Photonics News 2018, 29, 26–33.
11. Domhof, J.; Kooij, J.F.P.; Gavrila, D.M. A Joint Extrinsic Calibration Tool for Radar, Camera and Lidar. IEEE Trans. Intell. Veh. 2021, 6, 571–582.
12. Chen, C.; Fragonara, L.Z.; Tsourdos, A. RoIFusion: 3D Object Detection From LiDAR and Vision. IEEE Access 2021, 9, 51710–51721.
13. Hu, W.-C.; Chen, C.-H.; Chen, T.-Y.; Huang, D.-Y.; Wu, Z.-C. Moving object detection and tracking from video captured by moving camera. J. Vis. Commun. Image Represent. 2015, 30, 164–180.
14. Manjunath, A.; Liu, Y.; Henriques, B.; Engstle, A. Radar Based Object Detection and Tracking for Autonomous Driving. In Proceedings of the 2018 IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Munich, Germany, 16–18 April 2018; pp. 1–4.
15. Rosique, F.; Navarro, P.J.; Fernández, C.; Padilla, A. A Systematic Review of Perception System and Simulators for Autonomous Vehicles Research. Sensors 2019, 19, 648.
16. Campisi, T.; Severino, A.; Al-Rashid, M.A.; Pau, G. The Development of the Smart Cities in the Connected and Autonomous Vehicles (CAVs) Era: From Mobility Patterns to Scaling in Cities. Infrastructures 2021, 6, 100.
17. Harounabadi, M.; Soleymani, D.M.; Bhadauria, S.; Leyh, M.; Roth-Mandutz, E. V2X in 3GPP Standardization: NR Sidelink in Release-16 and Beyond. IEEE Commun. Stand. Mag. 2021, 5, 12–21.
18. Stettner, R. Compact 3D Flash Lidar Video Cameras and Applications. Laser Radar Technol. Appl. XV 2010, 7684, 39–46.
19. Laganiere, R. Solving Computer Vision Problems Using Traditional and Neural Networks Approaches. In Proceedings of the Synopsys Seminar, Embedded Vision Summit, Santa Clara, USA, 20–23 May 2019; Available online: https://www.synopsys.com/designware-ip/processor-solutions/ev-processors/embedded-vision-summit-2019.html#k (accessed on 7 January 2023).
20. Soderman, U.; Ahlberg, S.; Elmqvist, M.; Persson, A. Three-dimensional environment models from airborne laser radar data. Laser Radar Technol. Appl. IX 2004, 5412, 333–334.
21. Patole, S.M.; Torlak, M.; Wang, D.; Ali, M. Automotive radars: A review of signal processing techniques. IEEE Signal Process. Mag. 2017, 34, 22–35.
22. Ye, Y.; Chen, H.; Zhang, C.; Hao, X.; Zhang, Z. Sarpnet: Shape Attention Regional Proposal Network for Lidar-Based 3d Object Detection. Neurocomputing 2020, 379, 53–63.
23. Rovero, F.; Zimmermann, F.; Berzi, D.; Meek, P. Which camera trap type and how many do I need? A review of camera features and study designs for a range of wildlife research applications. Hystrix 2013, 24, 148–156.
24. Nabati, R.; Qi, H. Radar-Camera Sensor Fusion for Joint Object Detection and Distance Estimation in Autonomous Vehicles. arXiv 2020, arXiv:2009.08428.
25. Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Access 2019, 8, 2847–2868.
26. Heng, L.; Choi, B.; Cui, Z.; Geppert, M.; Hu, S.; Kuan, B.; Liu, P.; Nguyen, R.; Yeo, Y.C.; Geiger, A.; et al. Project AutoVision: Localization and 3D Scene Perception for an Autonomous Vehicle with a Multi-Camera System. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4695–4702.
27. Li, Y.; Ibanez-Guzman, J. Lidar for Autonomous Driving: The Principles, Challenges, and Trends for Automotive Lidar and Perception Systems. IEEE Signal Process. Mag. 2020, 37, 50–61.
28. Royo, S.; Ballesta-Garcia, M. An Overview of Lidar Imaging Systems for Autonomous Vehicles. Appl. Sci. 2019, 9, 4093.

Subjects: Transportation Science & Technology


Update Date: 17 Jan 2024
