Telemedicine has the potential to improve access to and delivery of healthcare for diverse and aging populations. Recent advances in technology allow for remote monitoring of physiological measures such as heart rate, oxygen saturation, blood glucose, and blood pressure. However, accurately detecting falls and monitoring physical activity remotely, without invading privacy or requiring patients to remember to wear a costly device, remains an ongoing concern. Human activity involves a series of actions carried out by one or more individuals to perform an action or task, such as sitting, lying, walking, standing, and falling. The field of human activity recognition (HAR) has made remarkable advancements. The primary objective of HAR is to discern a user’s behavior, enabling computing systems to accurately classify and measure human activity.

1. Introduction

Demands on the healthcare system associated with an aging population pose a significant challenge to nations across the world. Addressing these issues will require the ongoing adaptation of healthcare and social systems [1]. According to the United States Department of Health and Human Services, those aged 65 and older comprised 17% of the population in 2020, and this proportion is projected to rise to 22% by 2040 [2]. Further, the population of those aged 85 and above is projected to double. Older adults are more prone to chronic and degenerative diseases such as Alzheimer’s, respiratory diseases, diabetes, cardiovascular disease, osteoarthritis, and stroke [3], which require frequent medical care, monitoring, and follow-up. Further, many seniors choose to live independently and are often alone for extended periods of time. For example, in 2021, over 27% (15.2 million) of older adults residing in the community lived alone [2]. One major problem for older adults who choose to live alone is their vulnerability to accidental falls, which are experienced by over a quarter of those aged 65 and older annually, leading to three million emergency visits [4]. Recent studies confirm that preventive measures through active monitoring could help curtail these incidents [5].

Clinicians who treat patients with chronic neurological conditions such as stroke, Parkinson’s disease, and multiple sclerosis also encounter challenges in providing effective care. This can be due to difficulty in monitoring and measuring changes in function and activity levels over time and assessing patient compliance with treatment outside of scheduled office visits [6]. Therefore, it would be beneficial if there were accurate and effective ways to continuously monitor patient activity over extended periods of time without infringing on patient privacy. For these reasons, telemedicine and continuous human activity monitoring have become increasingly important components of today’s healthcare system because they can allow clinicians to engage remotely using objective data [7][8].

Telemedicine systems allow for the transmission of patient data from home to healthcare providers, enabling data analysis, diagnosis, and treatment planning [9][10]. Given that many older people prefer to live independently in their homes, incorporating and improving telemedicine services has become crucial for many healthcare organizations [11], a trend reflected in the 37% of the population that utilized telemedicine services in 2021 [12]. Telemedicine monitoring facilitates the collection of long-term data, provides analysis reports to healthcare professionals, and enables them to discern both positive and negative trends and patterns in patient behavior. These data are also essential for real-time patient safety monitoring, alerting caregivers and emergency services during incidents such as a fall [13]. This capability is valuable for assessing patient adherence and responses to medical and rehabilitation interventions [14]. Various technologies have been developed for human activity recognition (HAR) and fall detection [15]. Among these, non-contact mmwave-based radar technology has garnered considerable attention in recent years due to its numerous advantages [16], such as its portability, low cost, and ability to operate in different ambient and temperature conditions. Furthermore, it provides more privacy than traditional cameras and is more convenient than wearable devices [17][18].

The integration of mmwave-based radar systems into healthcare can improve the availability of high-quality medical care for patients in remote areas, thus narrowing the disparity between healthcare services in rural and urban regions. It allows healthcare facilities to allocate resources more efficiently to higher-priority cases and reduces the burden of repeated hospital visits for patients with chronic illnesses. These systems can also enhance in-home nursing services for elderly and disabled people, encourage compliance with therapeutic treatments, and improve the distribution of healthcare resources. In addition to enhancing the quality and effectiveness of treatment, such monitoring can lead to significant cost reductions, helping healthcare systems address the changing requirements of an aging population.

While mmwave-based radar technology offers significant advantages for HAR and fall detection, the complexity of the data it generates presents a formidable challenge [19]. Typically, radar signals are composed of high-dimensional point cloud data that is inherently information-rich, requiring advanced processing techniques to extract meaningful insights. Charles et al. [20] proposed PointNet, a deep learning architecture that classifies point cloud data directly and can therefore be applied to point clouds derived from mmwave-based radar signals. Their model preserves spatial information by processing point clouds in their original form. The combination of mmwave radar and PointNet can help HAR applications by improving their performance in terms of precision, responsiveness, and versatility across a wide range of scenarios [21].

2. Human Activity Recognition Approaches

Human activity involves a series of actions carried out by one or more individuals to perform an action or task, such as sitting, lying, walking, standing, and falling [22]. The field of HAR has made remarkable advancements over the past decade. The primary objective of HAR is to discern a user’s behavior, enabling computing systems to accurately classify and measure human activity [23].

Today, smart homes are being constructed with HAR to aid the health of the elderly, disabled, and children by continuously monitoring their daily behavior [24]. HAR may be useful for observing daily routines, evaluating health conditions, and assisting elderly or disabled individuals. HAR plays a role in automatic health tracking and in enhancing diagnostic methods and care, and it enables remote monitoring in home and institutional settings, thereby improving safety and well-being [25].

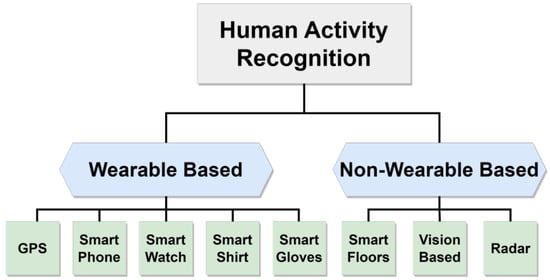

Existing literature in this area often categorizes research based on the features of the devices used, distinguishing between wearable and non-wearable devices, as depicted in Figure 1. Wearable devices encompass smartphones, smartwatches, and smart gloves [26], all capable of tracking human movements. In contrast, non-wearable devices comprise tools such as visual-based systems, intelligent flooring, and radar systems. This section summarizes these methodologies, offering a snapshot of the investigations undertaken and a brief overview of diverse applications utilizing these techniques.

Figure 1. Classification of human activity recognition approaches.

Wearable technology has become increasingly useful in capturing detailed data on an individual’s movements and activity patterns through sensors placed on the body [15]. This technology includes various devices such as Global Positioning System (GPS) devices, smartwatches, smartphones, smart shirts, and smart gloves. Its application has made notable contributions to the domains of HAR and human–computer interfaces (HCIs) [26]. Nevertheless, every type of device presents its own set of advantages and disadvantages. For instance, GPS-based systems face obstacles when it comes to accurately identifying specific human poses, and experience signal loss in indoor environments [27]. Smartwatches and smartphones can provide real-time tracking to monitor physical activity and location. They feature monitoring applications that can identify health fluctuations and possibly life-threatening occurrences [28][29]. However, smartwatches have disadvantages such as limited battery life, and users must remember to wear them continuously [30]. Further, smartphones encounter issues with sensor inaccuracy when they are kept in pockets or purses [31], and they have difficulty monitoring functions that require direct contact with the body. Other wearable devices, such as the Hexoskin smart shirt [32] and smart textile gloves developed by Itex [33], present alternative options for HAR. However, the persistent need to wear these devices limits their use in situations such as showering or sleeping [34]. As mentioned before, failure to constantly wear monitoring devices can lead to missing unexpected events such as falls, especially when monitoring older adults [35].

Non-wearable approaches for HAR utilize ambient sensors like camera-based devices, smart floors, and radar systems. Vision-based systems have shown promise in classifying human poses and detecting falls, leveraging advanced computer vision algorithms and high-quality optical sensors [36]. However, challenges like data storage needs, processing complexity, ambient light sensitivity, and privacy concerns hinder their general acceptance [37]. Intelligent floor systems such as carpets and floor tiles provide alternative means for monitoring human movement and posture [38]. A study on a carpet system displayed its ability to use surface force information for 3D human pose analysis but revealed limitations in detecting certain body positions and differentiating similar movements [39]. Recently, radar-based HAR has gained interest due to its ease of deployment in diverse environments, insensitivity to ambient lighting conditions, and preservation of user privacy [18][40].

Mmwave is a subset of radar technology [41] that is relatively low cost, has a compact form factor, and has high-resolution detection capabilities [42]. Further, it can penetrate thin layers of some materials such as fabrics, allowing seamless indoor placement in complex living environments [43]. Commercially available mmwave devices have the capability to create detailed 3D point cloud models of objects. The collected data can be effectively analyzed using edge Artificial Intelligence (AI) algorithms to accurately recreate human movements for HAR applications [44].

The mmwave radar generates point clouds by emitting electromagnetic waves and capturing their reflections as they interact with the object or person. These point clouds represent the spatial distribution of objects and movements, which are then processed to decipher human activities. However, the number of points in each frame from mmwave radar fluctuates, which makes it difficult to build precise activity classifiers, as these typically require inputs of fixed dimensions and order [35]. To address this, researchers commonly standardize the data into forms like micro-Doppler signatures [45][46], image sequences [47][48][49], or 3D voxel grids [19][50] before employing machine learning. This standardization often results in the loss of spatial features [51] and can cause data bloat and related challenges [20].
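The trade-off described above can be illustrated with a minimal Python sketch of voxelization. It is not taken from any of the cited studies: the sensing volume `BOUNDS`, the grid resolution `SHAPE`, the helper names `voxelize` and `occupancy`, and the sample points are all hypothetical. The sketch shows how frames with different point counts are mapped to one fixed-length input, and how sparse that input becomes.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical sensing volume in metres (x, y, z) and grid resolution.
BOUNDS = ((-2.0, 2.0), (0.0, 4.0), (0.0, 2.0))
SHAPE = (10, 10, 10)


def voxelize(points: Sequence[Point], bounds=BOUNDS, shape=SHAPE) -> List[int]:
    """Count points per voxel and return a flat grid of fixed length."""
    nx, ny, nz = shape
    grid = [0] * (nx * ny * nz)
    for point in points:
        index = []
        for value, (low, high), cells in zip(point, bounds, shape):
            if not low <= value < high:
                break  # point lies outside the sensing volume; drop it
            cell = int((value - low) / (high - low) * cells)
            index.append(min(cell, cells - 1))  # guard against float rounding
        else:
            # Reached only when all three coordinates were inside the volume.
            ix, iy, iz = index
            grid[(ix * ny + iy) * nz + iz] += 1
    return grid


def occupancy(grid: Sequence[int]) -> float:
    """Fraction of voxels that contain at least one point."""
    return sum(1 for count in grid if count) / len(grid)


# Two frames with different point counts map to the same input size.
frame_a = [(0.1, 1.0, 0.9), (0.2, 1.1, 1.1), (-0.3, 1.3, 0.5)]
frame_b = frame_a + [(0.6, 2.1, 1.5), (9.0, 9.0, 9.0)]  # last point is out of range
grid_a, grid_b = voxelize(frame_a), voxelize(frame_b)
print(len(grid_a), len(grid_b))              # both grids have 1000 cells
print(occupancy(grid_a), occupancy(grid_b))  # only a small fraction is non-empty
```

In this toy setting a handful of radar points is stored in 1000 cells, almost all of them empty, and points that share a voxel lose their exact coordinates. Both effects grow with finer grids and larger volumes.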

The proposed approach uses the PointNet network to overcome these constraints by directly processing raw point cloud data, thereby retaining the fine-grained spatial relationships essential for object tracking [52]. As shown in Table 1, the proposed system achieved higher accuracy than prior studies and extracted accurate tracking maps using spatial features. PointNet’s architecture, which leverages shared Multi-Layer Perceptrons (MLPs), is computationally efficient and lightweight, making it well suited for real-time HAR applications [20].
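The core idea behind a shared MLP can be sketched in a few lines of Python. This is a simplified illustration, not the proposed system or the full PointNet architecture: it omits the input and feature transform networks and the classification head, the weights are random and untrained, and the names `make_layer`, `shared_mlp`, and `global_feature` are hypothetical. The same small network is applied to every point, and the per-point features are max-pooled into a single vector whose length does not depend on the number or order of points.

```python
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]


def make_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Random weights and zero biases for one dense layer (untrained, illustrative)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def shared_mlp(point: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Apply the same dense + ReLU stack to a single point."""
    x = list(point)
    for weights, biases in layers:
        x = [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
             for row, b in zip(weights, biases)]
    return x


def global_feature(points: Sequence[Sequence[float]],
                   layers: Sequence[Layer]) -> List[float]:
    """Max-pool per-point features into one fixed-length vector."""
    per_point = [shared_mlp(p, layers) for p in points]
    return [max(channel) for channel in zip(*per_point)]


rng = random.Random(0)
layers = [make_layer(3, 8, rng), make_layer(8, 16, rng)]

# Hypothetical radar frame: four (x, y, z) points in metres.
cloud = [(0.1, 1.0, 0.9), (0.2, 1.1, 1.0), (-0.3, 1.2, 0.5), (0.0, 0.9, 1.4)]

feature = global_feature(cloud, layers)
shuffled = global_feature(list(reversed(cloud)), layers)  # same points, new order
smaller = global_feature(cloud[:2], layers)               # fewer points
print(len(feature), len(smaller))  # feature length is fixed by the last layer
print(feature == shuffled)         # point order does not change the result
```

Because the maximum is taken over the set of per-point features, frames with different point counts need no padding or voxelization before classification, and the weights are reused across points, which keeps the parameter count small.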

Table 1. Overview of studies using mmwave radar with machine learning for simple HAR.

| Ref. | Preprocessing Methods | Sensor Type | Model | Activity Detection | Overall Accuracy |
|---|---|---|---|---|---|
| [45] | Micro-Doppler Signatures | TI AWR1642 | CNN ¹ | Walking, swinging hands, sitting, and shifting. | 95.19% |
| [46] | Micro-Doppler Signatures | TI AWR1642 | CNN | Standing, walking, falling, swing, seizure, restless. | 98.7% |
| [53] | Micro-Doppler Signatures | TI IWR6843 | DNN ² | Standing, running, jumping jacks, jumping, jogging, squats. | 95% |
| [54] | Micro-Doppler Signatures | TI IWR6843ISK | CNN | Stand, sit, move toward, away, pick up something from ground, left, right, and stay still. | 91% |
| [55] | Micro-Doppler Signatures | TI xWR14xx, TI xWR68xx | RNN ³ | Stand up, sit down, walk, fall, get in, lie down, roll in, sit in, and get out of bed. | NA ⁴ |
| [56] | Dual-Micro Motion Signatures | TI AWR1642 | CNN | Standing, sitting, walking, running, jumping, punching, bending, and climbing. | 98% |
| [57] | Reflection Heatmap | Two TI IWR1642 | LSTM ⁵ | Falling, walking, pickup, stand up, boxing, sitting, and Jogging. | 80% |
| [58] | Doppler Maps | TI AWR1642 | PCA ⁶ | Fast walking, slow walking (with swinging hands, or without swinging hands), and limping. | 96.1% |
| [59] | Spatial-Temporal Heatmaps | TI AWR1642 | CNN | 14 Common in-home full-body workout. | 97% |
| [47] | Heatmap Images | TI IWR1443 | CNN | Standing, walking, and sitting. | 71% |
| [48] | Doppler Images | TI AWR1642 | SVM ⁷ | Stand up, pick up, drink while standing, walk, sit down. | 95% |
| [49] | Doppler Images | TI AWR1642 | SVM | Shoulder press, lateral raise, dumbbell, squat, boxing, right and left triceps. | NA |
| [19] | Voxelization | TI IWR1443 | T-D ⁸ CNN + B-D ⁹ LSTM | Walking, jumping, jumping jacks, squats and boxing. | 90.47% |
| [50] | Voxelization | TI IWR1443 | CNN | Sitting posture with various directions. | 99% |
| [21] | Raw Points Cloud | TI IWR1843 | PointNet | Walking, rotating, waving, stooping, and falling. | 95.40% |
| This Work | Raw Points Cloud | TI IWR6843 | PointNet | Standing, walking, sitting, lying, falling. | 99.5% |