1. Introduction

Weather and climate prediction play an important role in human history. Weather forecasting serves as a critical tool that underpins various facets of human life and social operations, permeating everything from individual decision-making to large-scale industrial planning. Its significance at the individual level is manifested in its capacity to guide personal safety measures, from avoiding hazardous outdoor activities during inclement weather to taking health precautions in extreme temperatures. This decision-making extends into the agricultural realm, where forecasts inform the timing for planting, harvesting, and irrigation, ultimately contributing to maximized crop yields and stable food supply chains [1]. The ripple effect of accurate forecasting also reaches the energy sector, where it aids in efficiently managing demand fluctuations, allowing for optimized power generation and distribution. This efficiency is echoed in the transportation industry, where the planning and scheduling of flights, train routes, and maritime activities hinge on weather conditions. Precise weather predictions are key to mitigating delays and enhancing safety protocols [2]. Beyond these sectors, weather forecasting plays an integral role in the realm of construction and infrastructure development. Adverse conditions can cause project delays and degrade quality, making accurate forecasts a cornerstone of effective project management. Moreover, the capacity to forecast extreme weather events like hurricanes and typhoons is instrumental in disaster management, offering the possibility of early warnings and thereby mitigating loss of life and property [3].

Although climate prediction receives less attention than weather forecasting in everyday life, it is closely tied to the future of life on Earth. Global warming and the subsequent rise in sea levels constitute critical challenges with far-reaching implications for the future of our planet. Through sophisticated climate modeling and forecasting techniques, scholars stand to gain valuable insights into the potential ramifications of these phenomena, thereby enabling the development of targeted mitigation strategies. For instance, precise estimations of sea-level changes in future decades could inform rational urban planning and disaster prevention measures in coastal cities. On an extended temporal scale, climate change is poised to instigate considerable shifts in the geographical distribution of numerous species, thereby jeopardizing biodiversity. State-of-the-art climate models integrate an array of variables, encompassing atmospheric conditions, oceanic currents, terrestrial ecosystems, and biospheric interactions, to furnish a nuanced comprehension of environmental transformations [4]. This integrative approach is indispensable for the formulation of effective global and regional policies aimed at preserving ecological diversity. Economic sectors such as agriculture, fisheries, and tourism are highly susceptible to the vagaries of climate change. Elevated temperatures may precipitate a decline in crop yields, while an upsurge in extreme weather events stands to impact tourism adversely. Long-term climate forecasts are instrumental in guiding governmental and business strategies to adapt to these inevitable changes. Furthermore, sustainable resource management, encompassing water, land, and forests, benefits significantly from long-term climate projections. Accurate predictive models can forecast potential water scarcity in specific regions, thereby allowing for the preemptive implementation of judicious water management policies. Climate change is also implicated in a gamut of public health crises, ranging from the proliferation of infectious diseases to an uptick in heatwave incidents. Comprehensive long-term climate models can equip public health agencies with the data necessary to allocate resources and devise effective response strategies.

In the short-term context, weather forecasts are instrumental for agricultural activities such as determining the optimal timing for sowing and harvesting crops, as well as formulating irrigation and fertilization plans. In the energy sector, short-term forecasts facilitate accurate predictions of output levels for wind and solar energy production. For transportation, which encompasses road, rail, aviation, and maritime industries, real-time weather information is vital for operational decisions affecting safety and efficiency. Similarly, construction projects rely on short-term forecasts for planning and ensuring safe operations. In the retail and sales domains, weather forecasts enable businesses to make timely inventory adjustments. For tourism and entertainment, particularly those involving outdoor activities and attractions, short-term forecasts provide essential guidance for day-to-day operations. Furthermore, short-term weather forecasts play a pivotal role in environmental and disaster management by providing early warnings for floods, fires, and other natural calamities.

In the medium-to-long-term scenario, weather forecasts have broader implications for strategic planning and risk assessment. In agriculture, these forecasts are used for long-term land management and planning. The insurance industry utilizes medium-to-long-term forecasts to prepare for prospective increases in specific types of natural disasters, such as floods and droughts. Real estate sectors also employ these forecasts for evaluating the long-term impact of climate-related factors like sea level rise. Urban planning initiatives benefit from these forecasts for effective water resource management. For the tourism industry, medium-to-long-term weather forecasts are integral for long-term investments and for identifying regions that may become popular tourist destinations in the future. Additionally, in the realm of public health, long-term climate changes projected through these forecasts can inform strategies for controlling the spread of diseases. In summary, weather forecasts serve as a vital tool for both immediate and long-term decision-making across a diverse range of sectors.

Short-term weather prediction. Short-term weather forecasting primarily targets weather conditions that span from a few hours up to seven days, aiming to deliver highly accurate and actionable information that empowers individuals to make timely decisions like carrying an umbrella or postponing outdoor activities. These forecasts typically decrease in reliability as they stretch further into the future. Essential elements of these forecasts include maximum and minimum temperatures, the likelihood and intensity of various forms of precipitation like rain, snow, or hail, wind speed and direction, levels of relative humidity or dew point temperature, and types of cloud cover such as sunny, cloudy, or overcast conditions [5]. Visibility distance in foggy or smoky conditions and warnings about extreme weather events like hurricanes or heavy rainfall are also often included. The methodologies for generating these forecasts comprise numerical simulations run on high-performance computers, the integration of observational data from multiple sources like satellites and ground-based stations, and statistical techniques that involve pattern recognition and probability calculations based on historical weather data. While generally more accurate than long-term forecasts, short-term predictions are not without their limitations, often influenced by the quality of the input data, the resolution of the numerical models, and the sensitivity to initial atmospheric conditions. These forecasts play a crucial role in various sectors, including decision-making processes, transportation safety, and agriculture, despite the inherent complexities and uncertainties tied to predicting atmospheric behavior.

Medium-to-long-term climate prediction. Medium-to-long-term climate forecasting (MLTF) concentrates on projecting climate conditions over periods extending from several months to multiple years, in contrast to short-term weather forecasts, which focus more on immediate atmospheric conditions. The time frame of these climate forecasts can be segmented into medium-term, which generally ranges from a single season up to a year, and long-term, which could span years to decades or even beyond [6]. Unlike weather forecasts, which may provide information on imminent rainfall or snowfall, MLTF centers on the average states or trends of climate variables, such as average temperature and precipitation, ocean-atmosphere interactions like El Niño or La Niña conditions, and the likelihood of extreme weather events like droughts or floods, as well as anticipated hurricane activities [7]. The projection also encompasses broader climate trends, such as global warming or localized climatic shifts. These forecasts employ a variety of methods, including statistical models based on historical data and seasonal patterns, dynamical models that operate on complex mathematical equations rooted in physics, and integrated models that amalgamate multiple data sources and methodologies. However, the accuracy of medium-to-long-term climate forecasting often falls short when compared with short-term weather predictions due to the intricate, multi-scale, and multi-process interactions that constitute the climate system, not to mention the lack of exhaustive long-term data. The forecasts’ reliability can also be influenced by socio-economic variables, human activities, and shifts in policy. Despite these complexities, medium-to-long-term climate projections serve pivotal roles in areas such as resource management, agricultural planning, disaster mitigation, and energy policy formulation, making them not only a multi-faceted, multi-disciplinary challenge but also a crucial frontier in both climate science and applied research.

2. Machine Learning Methods in Weather and Climate Applications

2.1. Climate Prediction Milestones Based on Machine Learning

Climate prediction methods before 2010. The earliest model in this context is the Precipitation Neural Network Prediction Model, published in 1998. This model serves as an archetype of Basic DNN Models, leveraging Artificial Neural Networks to offer short-term forecasts specifically for precipitation in the Middle Atlantic Region. Advancing to the mid-2000s, the realm of medium-to-long-term predictions saw the introduction of ML-Enhanced Non-Deep-Learning Models, exemplified by KNN-Down-scaling in 2005 and SVM-Down-scaling in 2006. These models employed machine learning techniques like K-Nearest Neighbors and Support Vector Machines, targeting localized precipitation forecasts in the United States and India, respectively. In 2009, the field welcomed another medium-to-long-term model, CRF-Down-scaling, which used Conditional Random Fields to predict precipitation in the Mahanadi Basin.

Climate prediction methods from 2010–2019. During the period from 2010 to 2019, the field of weather prediction witnessed significant technological advancements and diversification in modeling approaches. Around 2015, a notable shift back to short-term predictions was observed with the introduction of Hybrid DNN Models, exemplified by ConvLSTM. This model integrated Long Short-Term Memory networks with Convolutional Neural Networks to provide precipitation forecasts specifically for Hong Kong. As the decade progressed, models became increasingly specialized.

Climate prediction methods from 2020. In 2020, the CapsNet model, a Specific Model, leveraged a novel architecture known as Capsule Networks to predict extreme weather events in North America. By 2021, the scope extended to models like RF-bias-correction and the sea-ice prediction model, focusing on medium-to-long-term predictions. The former employed Random Forests for precipitation forecasts in Iran, while the latter utilized probabilistic deep learning techniques for forecasts in the Arctic region. Recent advances in 2022 and 2023 incorporate more complex architectures. CycleGAN, a 2022 model, utilized Generative Adversarial Networks for global precipitation prediction. PanGu, released in 2023, employed 3D neural networks for predicting extreme weather events globally.

2.2. Classification of Climate Prediction Methods

Time Scale. Models in weather and climate prediction are initially divided based on their temporal range into 'Short-term' and 'Medium-to-long-term'. Short-term weather prediction focuses on the state of the atmosphere in the near future, usually the weather conditions in the next few hours to days. Medium-to-long-term climate prediction focuses on longer time scales, usually the average weather trends over months, years, or decades. Weather forecasts focus on specific weather conditions in the near term, such as temperature, precipitation, humidity, wind speed, and direction. Climate prediction focuses on long-term weather patterns and trends, such as seasonal or inter-annual variations in temperature and precipitation. In the traditional approach, weather forecasting usually relies on numerical weather prediction models that predict short-term weather changes by numerically solving the equations of atmospheric dynamics; climate prediction usually relies on climate models that incorporate more complex interacting feedback mechanisms and longer-term external drivers, such as greenhouse gas emissions and changes in solar radiation.

Spatial Scale. Regional meteorology concerns a specified geographic area, such as a country or a continent, and aims to provide detailed insights into the weather and climate phenomena within that domain. The finer spatial resolution of regional models allows for a more nuanced understanding of local geographical and topographical influences on weather patterns, which in turn can lead to more accurate forecasts within that particular area. On the other hand, global meteorology encompasses the entire planet’s atmospheric conditions, providing a broader yet less detailed view of weather and climate phenomena. The spatial resolution of global models is generally coarser compared with regional models.

ML and ML-Enhanced Types. Scholars categorize models into ML and ML-Enhanced types. In the ML type, algorithms are directly applied to climate data for pattern recognition or predictive tasks. These algorithms typically operate independently of traditional physical models, relying instead on data-driven insights garnered from extensive climate datasets. In contrast, ML-Enhanced models integrate machine learning techniques into conventional physical models to optimize or enhance their performance. Fundamentally, these approaches still rely on physical models for prediction. However, machine learning algorithms serve as auxiliary tools for parameter tuning, feature engineering, or addressing specific limitations in the physical models, thereby improving their overall predictive accuracy and reliability. In this survey, ML-enhanced models are divided into three categories: bias correction, down-scaling, and emulation [8].

Model. Within each time scale, models are further categorized by their type. These models include:

Specific DNN Models: These are unique or specialized neural network architectures developed for particular applications.

Hybrid DNN Models: These models use a combination of different neural network architectures, such as LSTM + CNN.

Single DNN Models: These models employ foundational Deep Neural Network architectures like ANNs (Artificial Neural Networks), CNNs (Convolutional Neural Networks), and LSTMs (Long Short-Term Memory networks).

Non-Deep-Learning Models: These models incorporate machine learning techniques that do not rely on deep learning, such as Random Forests and Support Vector Machines.

Technique. This category specifies the underlying machine learning or deep learning technique used in a particular model, for example, CNN, LSTM, Random Forest, Probabilistic Deep Learning, and GAN.

CNN. A specific type of ANN is the Convolutional Neural Network (CNN), designed to automatically and adaptively learn spatial hierarchies from data [9]. CNNs comprise three main types of layers: convolutional, pooling, and fully connected [10]. The convolutional layer applies various filters to the input data to create feature maps, identifying spatial hierarchies and patterns. Pooling layers reduce dimensionality, summarizing features in the previous layer [11]. Fully connected layers then perform classification or regression based on the high-level features identified [12]. CNNs are particularly relevant in meteorology for tasks like satellite image analysis, with their ability to recognize and extract spatial patterns [13]. Because their filters act on local neighborhoods and are shared across the whole input, CNNs capture local dependencies in the data and are relatively robust to small shifts and distortions [14].
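The three layer types can be illustrated with a minimal NumPy sketch. The 8×8 input field, the hand-set edge filter, and the random fully connected weights are hypothetical stand-ins; in a trained CNN, all filter and dense weights are learned. As in most deep-learning libraries, the "convolution" is implemented as cross-correlation.

```python
import numpy as np

def conv2d_valid(x, kernel):
    """Slide a 2D kernel over x without padding ('valid' mode), as a convolutional layer does."""
    kh, kw = kernel.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(x, size=2):
    """Non-overlapping max pooling; rows/cols that do not fill a full window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

def relu(x):
    return np.maximum(x, 0.0)

# Hypothetical 8x8 "satellite" field with a sharp east-west gradient (e.g. a front).
field = np.zeros((8, 8))
field[:, 4:] = 1.0

# Hand-set vertical-edge filter; a trained CNN would learn such weights.
edge_kernel = np.array([[-1.0, 1.0],
                        [-1.0, 1.0]])

feature_map = relu(conv2d_valid(field, edge_kernel))   # 7x7 map, non-zero only at the front
pooled = max_pool2d(feature_map)                       # 3x3 summary of the feature map

# Fully connected layer: flatten and apply (hypothetical, untrained) weights, e.g. for 2 classes.
rng = np.random.default_rng(0)
w_fc = rng.normal(size=(pooled.size, 2))
logits = pooled.ravel() @ w_fc
```

The filter responds only where the field changes (column 3 of the feature map). Pooling keeps that response while shrinking the map from 7×7 to 3×3.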

LSTM. Long Short-Term Memory (LSTM) units are a specialized form of recurrent neural network architecture [15]. Purposefully designed to mitigate the vanishing gradient problem inherent in traditional RNNs, LSTM units manage the information flow through a series of gates, namely the input, forget, and output gates. These gates govern the retention, forgetting, and output of information, allowing LSTMs to effectively capture long-range dependencies and temporal dynamics in sequential data [15].
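One LSTM time step can be sketched in NumPy to show how the gates interact. This uses the standard gate equations. The sizes, the random weights, and the gate ordering inside the stacked weight matrices are illustrative assumptions, and the 24-step input sequence is synthetic.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step. W: (4H, D), U: (4H, H), b: (4H,); gates stacked as i, f, o, g."""
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much of the old cell state to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much of the cell state to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate values to write into the cell
    c_t = f * c_prev + i * g     # additive cell update helps gradients flow over long sequences
    h_t = o * np.tanh(c_t)
    return h_t, c_t

# Hypothetical sizes: 3 input variables (e.g. temperature, humidity, wind speed), 4 hidden units.
D, H, T = 3, 4, 24
rng = np.random.default_rng(42)
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

sequence = rng.normal(size=(T, D))   # e.g. 24 hourly observations (synthetic)
h, c = np.zeros(H), np.zeros(H)
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, W, U, b)
```

After the loop, `h` summarizes the whole 24-step sequence and could feed a forecasting head.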

Random forest. Random Forest (RF) is an ensemble machine learning algorithm that combines many decision trees, each trained on a bootstrap sample of the data with random subsets of features, and it is used for various types of classification and regression tasks. In weather forecasting and climate modeling, RF is frequently used for bias correction, that is, adjusting predictive models for systematic errors. In this context, the Random Forest algorithm is trained to identify and correct systematic errors or biases in the predictions made by a primary forecasting model.
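The bias-correction idea can be sketched with scikit-learn's `RandomForestRegressor`. All data here are synthetic and hypothetical. A raw forecast carries a systematic warm bias that grows with humidity. The forest is trained on past forecast/observation pairs to predict the observed value from the raw forecast plus an auxiliary predictor. An equally common variant trains the forest to predict the forecast error instead.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
n = 2000

# Synthetic "truth" and a raw model forecast with a systematic, state-dependent bias:
# the hypothetical model runs ~1.5 °C too warm and its error grows with humidity.
truth = rng.normal(15.0, 8.0, n)
humidity = rng.uniform(0.2, 1.0, n)
raw_forecast = truth + 1.5 + 2.0 * humidity + rng.normal(0.0, 0.5, n)

# Predictors: the raw forecast plus an auxiliary variable; target: the observed value.
X = np.column_stack([raw_forecast, humidity])
train, test = slice(0, 1500), slice(1500, n)

rf = RandomForestRegressor(n_estimators=200, min_samples_leaf=5, random_state=0)
rf.fit(X[train], truth[train])
corrected = rf.predict(X[test])

rmse_raw = np.sqrt(np.mean((raw_forecast[test] - truth[test]) ** 2))
rmse_rf = np.sqrt(np.mean((corrected - truth[test]) ** 2))
```

On held-out cases, `rmse_rf` should fall well below `rmse_raw`, because the forest learns the state-dependent bias rather than a single constant offset.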

Probabilistic deep learning. Probabilistic deep learning models in weather forecasting aim to provide not just point estimates of meteorological variables but also a measure of uncertainty associated with the predictions. By leveraging complex neural networks, these models capture intricate relationships between various features like temperature, humidity, and wind speed. The probabilistic aspect helps in quantifying the confidence in predictions, which is crucial for risk assessment and decision-making in weather-sensitive industries.
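One common way to obtain such uncertainty estimates is to have the network output a mean and a log-variance for each target and train it with the Gaussian negative log-likelihood (NLL). The sketch below is hypothetical: it replaces the network with fixed outputs to show how the NLL rewards calibrated spread. Overconfident (σ = 0.5) and underconfident (σ = 8) predictions both score worse than the calibrated one (σ = 2). It also shows that a calibrated σ yields a usable prediction interval.

```python
import numpy as np

def gaussian_nll(y, mu, log_var):
    """Mean negative log-likelihood of y under N(mu, exp(log_var)).
    Predicting the log-variance keeps the variance positive without constraints."""
    var = np.exp(log_var)
    return np.mean(0.5 * (np.log(2 * np.pi) + log_var + (y - mu) ** 2 / var))

rng = np.random.default_rng(1)
y = rng.normal(20.0, 2.0, 10_000)   # synthetic observed temperatures (°C) with spread 2 °C

mu = np.full_like(y, 20.0)          # stand-in for a network's predicted mean
# Three hypothetical uncertainty estimates: overconfident, calibrated, underconfident.
nll = {s: gaussian_nll(y, mu, np.full_like(y, np.log(s ** 2))) for s in (0.5, 2.0, 8.0)}

# A calibrated predictive distribution also yields a usable interval (~95% coverage).
sigma = 2.0
coverage = np.mean(np.abs(y - mu) <= 1.96 * sigma)
```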

Generative adversarial networks. Generative Adversarial Networks (GANs) are a class of deep learning models composed of two neural networks: a Generator and a Discriminator. The Generator aims to produce data that closely resembles a genuine data distribution, while the Discriminator's role is to distinguish between real and generated data. During training, these networks engage in a kind of “cat-and-mouse” game, continually adapting and improving, ultimately with the goal of creating generated data so convincing that the Discriminator can no longer tell it apart from real data.
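The two competing objectives can be written as binary cross-entropy losses on the Discriminator's outputs. The sketch below shows the losses only, not a training loop. It uses the widely used "non-saturating" generator loss rather than the original minimax form, and the discriminator outputs are hypothetical. At the theoretical equilibrium, the Discriminator outputs 0.5 for everything, and its loss is 2 ln 2.

```python
import numpy as np

def bce(p, target):
    """Binary cross-entropy, clipped for numerical safety."""
    p = np.clip(p, 1e-7, 1 - 1e-7)
    return -np.mean(target * np.log(p) + (1 - target) * np.log(1 - p))

def discriminator_loss(d_real, d_fake):
    # The Discriminator is rewarded for outputting 1 on real data and 0 on generated data.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    # Non-saturating loss: the Generator is rewarded when its samples are labelled "real".
    return bce(d_fake, 1.0)

# Hypothetical discriminator outputs (probability of "real") for a batch of 4 samples.
early = {"d_real": np.array([0.9, 0.95, 0.85, 0.9]), "d_fake": np.array([0.1, 0.05, 0.2, 0.1])}
# At equilibrium the Discriminator cannot tell real from generated data.
equilibrium = {"d_real": np.full(4, 0.5), "d_fake": np.full(4, 0.5)}

for name, out in (("early", early), ("equilibrium", equilibrium)):
    print(name, discriminator_loss(**out), generator_loss(out["d_fake"]))
```

Early in training, the Discriminator's loss is low and the Generator's loss is high. Training pushes both toward the equilibrium values.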

Graph Neural Network. Graph Neural Networks (GNNs) are designed to work with graph-structured data, capturing the relationships between connected nodes effectively. They operate by passing messages, or aggregating information, from neighbors and then updating each node’s representation accordingly. This makes GNNs well suited to problems like social network analysis, molecular structure analysis, and recommendation systems.
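The message-passing-and-update step can be sketched in NumPy with mean aggregation. The four-station chain graph, the feature values, and the random weights are hypothetical. The example shows a key property: after k rounds, a node's representation depends only on nodes at most k hops away.

```python
import numpy as np

def message_passing_layer(h, edges, W_self, W_neigh):
    """One round of message passing with mean aggregation.
    h: (N, F) node features; edges: (src, dst) pairs, messages flow src -> dst."""
    n = h.shape[0]
    agg = np.zeros_like(h)
    deg = np.zeros(n)
    for src, dst in edges:
        agg[dst] += h[src]                     # collect messages from neighbors
        deg[dst] += 1
    agg /= np.maximum(deg, 1)[:, None]         # mean over neighbors (isolated nodes keep zeros)
    return np.tanh(h @ W_self + agg @ W_neigh) # update each node from itself and its neighborhood

# Hypothetical 4 weather stations in a chain (0-1-2-3), 2 features each (e.g. T and p anomalies).
h0 = np.array([[1.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]])
undirected = [(0, 1), (1, 2), (2, 3)]
edges = undirected + [(d, s) for s, d in undirected]

rng = np.random.default_rng(0)
W_self, W_neigh = rng.normal(size=(2, 2)), rng.normal(size=(2, 2))

h1 = message_passing_layer(h0, edges, W_self, W_neigh)   # station 0's signal reaches station 1
h2 = message_passing_layer(h1, edges, W_self, W_neigh)   # ...and now station 2
```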

Transformer. A transformer consists of an encoder and a decoder, but its most distinctive feature is the attention mechanism. This allows the model to weigh the importance of different parts of the input data, making it very effective for tasks like text summarization, question answering, and language generation.
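The core of the attention mechanism is scaled dot-product attention, softmax(QKᵀ/√d_k)V. Each query takes a weighted average of the values, weighted by its similarity to every key. The minimal NumPy sketch below treats 5 patches of a weather field as tokens. The sizes and the random projection matrices are hypothetical, and the multi-head projections and masking used in full transformers are omitted.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)    # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, returning the output and the attention weights."""
    d_k = Q.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 5, 8          # e.g. 5 patches of a weather field (hypothetical sizes)
x = rng.normal(size=(n_tokens, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

out, attn = scaled_dot_product_attention(x @ W_q, x @ W_k, x @ W_v)
```

Each row of `attn` is a probability distribution over all tokens. This is how every output token can draw on any part of the input, regardless of distance.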

Name. Some models are commonly cited or recognized under a specific name, such as PanGu or FourCastNet. Some models are named after their technical features.

Event. The type of weather or climatic events that the model aims to forecast is specified under this category. This could range from generalized weather conditions like temperature and precipitation to more extreme weather events.

3. Short-Term Weather Forecast

Weather forecasting aims to predict atmospheric phenomena within a short time-frame, generally ranging from one to three days. This information is crucial for a multitude of sectors, including agriculture, transportation, and emergency management. Factors such as precipitation, temperature, and extreme weather events are of particular interest. Forecasting methods have evolved over the years, transitioning from traditional numerical methods to more advanced hybrid and machine-learning models.

3.1. Model Design

Numerical Weather Model. Numerical Weather Prediction (NWP) stands as a cornerstone methodology in the realm of meteorological forecasting, fundamentally rooted in the simulation of atmospheric dynamics through intricate physical models. At the core of NWP lies a set of governing physical equations that encapsulate the holistic behavior of the atmosphere:

The Navier-Stokes Equations [16]: Serving as the quintessential descriptors of fluid motion, these equations delineate the fundamental mechanics underlying atmospheric flow.

The Thermodynamic Equations [17]: These equations intricately interrelate the temperature, pressure, and humidity within the atmospheric matrix, offering insights into the state and transitions of atmospheric energy.
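For concreteness, one common textbook form of this system is given below as a reference sketch, written for a frame rotating with the Earth. The notation is introduced here and is not taken from the cited works. The symbols are:

- $\mathbf{u}$: wind vector
- $\rho$: density
- $p$: pressure
- $\boldsymbol{\Omega}$: Earth's angular velocity
- $\mathbf{g}$: effective gravity
- $\mathbf{F}$: frictional and viscous forces
- $T$: temperature
- $c_p$: specific heat at constant pressure
- $Q$: diabatic heating rate
- $q$: specific humidity, with sources and sinks $S_q$
- $R_d$: gas constant of dry air (the moisture correction to it is neglected here)
- $D/Dt = \partial/\partial t + \mathbf{u}\cdot\nabla$: material derivative

Operational models use variants of this system, for example hydrostatic forms or other vertical coordinates.

$$
\begin{aligned}
\frac{D\mathbf{u}}{Dt} &= -\frac{1}{\rho}\nabla p - 2\boldsymbol{\Omega}\times\mathbf{u} + \mathbf{g} + \mathbf{F} && \text{(momentum)}\\
\frac{\partial \rho}{\partial t} + \nabla\cdot(\rho\,\mathbf{u}) &= 0 && \text{(mass continuity)}\\
c_p\frac{DT}{Dt} - \frac{1}{\rho}\frac{Dp}{Dt} &= Q && \text{(thermodynamic energy)}\\
\frac{Dq}{Dt} &= S_q && \text{(water vapour)}\\
p &= \rho R_d T && \text{(equation of state)}
\end{aligned}
$$

The source terms $\mathbf{F}$, $Q$, and $S_q$ are where parameterized processes such as clouds, radiation, convection, boundary-layer turbulence, and surface exchange enter the equations.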

The model is fundamentally based on a set of time-dependent partial differential equations, which require sophisticated numerical techniques to solve. Solving these equations enables the simulation of the inherently dynamic atmosphere and underpins accurate meteorological prediction. Within this overarching framework, a suite of integral components is embedded to represent specific physical processes, such as cloud formation, radiation, convection, boundary layers, and surface interactions, many of which occur at scales finer than the model grid can resolve. Each of these components serves a pivotal role:

- The Cloud Microphysics Parameterization Scheme is instrumental for simulating the life cycles of cloud droplets and ice crystals, thereby affecting precipitation [18][19] and atmospheric energy balance.

- Shortwave and Longwave Radiation Transfer Equations describe the absorption, scattering, and emission of both solar and terrestrial radiation, which in turn influence atmospheric temperature and dynamics.

- Empirical or Semi-Empirical Convection Parameterization Schemes simulate vertical atmospheric motions initiated by local instabilities, facilitating the capture of weather phenomena like thunderstorms.

- Boundary-Layer Dynamics concentrates on the exchanges of momentum, energy, and matter between the Earth’s surface and the atmosphere, which are crucial for the accurate representation of surface conditions in the model.

- Land Surface and Soil/Ocean Interaction Modules simulate the exchange of energy, moisture, and momentum between the surface and the atmosphere, while also accounting for terrestrial and aquatic influences on atmospheric conditions.

These components are tightly coupled with the core atmospheric dynamics equations, collectively constituting a comprehensive, multi-scale framework. This intricate integration allows for the simulation of the complex dynamical evolution inherent in the atmosphere, contributing to more reliable and precise weather forecasting.

In Numerical Weather Prediction (NWP), a critical tool for atmospheric dynamics forecasting, the process begins with data assimilation, where observational data is integrated into the model to reflect current conditions. This is followed by numerical integration, where the governing equations are solved numerically to simulate atmospheric changes over time. However, certain phenomena, like the microphysics of clouds, cannot be directly resolved and are accounted for through parameterization to approximate their aggregate effects. Finally, post-processing methods are used to reconcile potential discrepancies between model predictions and real-world observations, ensuring accurate and reliable forecasts. This comprehensive process captures the complexity of weather systems and serves as a robust method for weather prediction [20].
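The four stages can be illustrated with a deliberately tiny, hypothetical NumPy example. It is not an actual NWP system. A 1D periodic temperature anomaly is first corrected by optimal interpolation (the best linear unbiased estimate, using an assumed Gaussian background-error covariance). It is then integrated forward with a first-order upwind scheme for the advection equation. Parameterized tendencies are only marked as a placeholder, and a fixed, hypothetical mean bias is subtracted as post-processing. All grid sizes, error variances, and the bias value are assumptions.

```python
import numpy as np

# Toy 1D periodic domain: a temperature anomaly carried by a constant wind (values hypothetical).
nx, dx, u, dt, L = 100, 1.0, 1.0, 0.5, 3.0
assert u * dt / dx <= 1.0                    # CFL condition for the explicit upwind scheme
x = np.arange(nx) * dx
truth0 = np.exp(-((x - 30.0) / 5.0) ** 2)   # "true" initial state
background = np.roll(truth0, 5)              # prior model state with a position error

# 1) Data assimilation: optimal interpolation (BLUE) with observations at every 5th point.
rng = np.random.default_rng(0)
obs_idx = np.arange(0, nx, 5)
sigma_b, sigma_o = 0.2, 0.02
obs = truth0[obs_idx] + rng.normal(0.0, sigma_o, obs_idx.size)
d = np.abs(x[:, None] - x[None, :])
d = np.minimum(d, nx * dx - d)                       # periodic distance between grid points
B = sigma_b**2 * np.exp(-0.5 * (d / L) ** 2)         # background-error covariance
H = np.eye(nx)[obs_idx]                              # observation operator: picks observed points
R = sigma_o**2 * np.eye(obs_idx.size)                # observation-error covariance
K = B @ H.T @ np.linalg.inv(H @ B @ H.T + R)         # gain matrix
analysis = background + K @ (obs - H @ background)

# 2) Numerical integration of dT/dt + u dT/dx = 0 (first-order upwind, forward Euler).
def step(T):
    return T - u * dt / dx * (T - np.roll(T, 1))

# 3) Parameterizations would add sub-grid tendencies inside step(); omitted in this toy.
forecast = analysis.copy()
for _ in range(40):                                  # 40 steps * 0.5 = 20 time units
    forecast = step(forecast)

# 4) Post-processing: subtract a mean bias estimated from past errors (hypothetical value).
past_mean_bias = 0.01
final = forecast - past_mean_bias
```

Assimilation moves the misplaced background toward the observations. The advection step then carries the corrected anomaly 20 grid units downstream while conserving its total amount.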

MetNet. MetNet [21] is a state-of-the-art weather forecasting model that integrates the functionality of CNN, LSTM, and autoencoder units. The CNN component conducts a multi-scale spatial analysis, extracting and abstracting meteorological patterns across various spatial resolutions. In parallel, the LSTM component captures temporal dependencies within the meteorological data, providing an in-depth understanding of weather transitions over time [15]. Autoencoders are mainly used in weather prediction for data preprocessing, feature engineering, and dimensionality reduction to assist more complex prediction models in making more accurate and efficient predictions. This combined architecture permits a dynamic and robust framework that can adaptively focus on key features in both spatial and temporal dimensions, guided by an embedded attention mechanism [22][23].
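The convolutional and recurrent components are commonly fused into a ConvLSTM cell, which MetNet uses for temporal encoding. A ConvLSTM keeps the LSTM gate equations but replaces the matrix products with convolutions, so the hidden and cell states remain spatial maps. The NumPy sketch below is a minimal illustration under assumed sizes, random weights, and a synthetic sequence of precipitation frames. MetNet's spatial downsampler and attention-based aggregator are not shown.

```python
import numpy as np

def conv_same(x, w):
    """'Same'-padded 2D convolution (cross-correlation). x: (C_in, H, W); w: (C_out, C_in, k, k)."""
    c_out, c_in, k, _ = w.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    H, W = x.shape[1:]
    out = np.zeros((c_out, H, W))
    for i in range(H):
        for j in range(W):
            patch = xp[:, i:i + k, j:j + k]                      # (C_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def convlstm_step(x, h, c, w_x, w_h, b):
    """LSTM gates computed by convolutions; h and c keep shape (C_hid, H, W)."""
    z = conv_same(x, w_x) + conv_same(h, w_h) + b[:, None, None]
    i, f, o, g = np.split(z, 4, axis=0)                # input, forget, output gates; candidate
    c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
    h = sigmoid(o) * np.tanh(c)
    return h, c

# Hypothetical sizes: 1 input channel (e.g. radar precipitation), 2 hidden channels, 16x16 grid.
C_in, C_hid, k, S, T = 1, 2, 3, 16, 6
rng = np.random.default_rng(0)
w_x = rng.normal(scale=0.1, size=(4 * C_hid, C_in, k, k))
w_h = rng.normal(scale=0.1, size=(4 * C_hid, C_hid, k, k))
b = np.zeros(4 * C_hid)

frames = rng.random((T, C_in, S, S))       # synthetic sequence of precipitation maps
h = np.zeros((C_hid, S, S))
c = np.zeros((C_hid, S, S))
for x_t in frames:
    h, c = convlstm_step(x_t, h, c, w_x, w_h, b)
```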

FourCastNet. In response to the escalating challenges posed by global climate change and the increasing frequency of extreme weather phenomena, the demand for precise and prompt weather forecasting has surged. High-resolution weather models serve as pivotal instruments in addressing this exigency, offering the ability to capture finer meteorological features, thereby rendering more accurate predictions [24][25]. Against this backdrop, FourCastNet [26] has been conceived, employing ERA5, an atmospheric reanalysis dataset. This dataset is the outcome of a Bayesian estimation process known as data assimilation, fusing observational results with numerical models’ output [27]. FourCastNet leverages the Adaptive Fourier Neural Operator (AFNO), uniquely crafted for high-resolution inputs, incorporating several significant strides within the domain of deep learning.

The essence of AFNO resides in its symbiotic fusion of the Fourier Neural Operator (FNO) learning strategy with the self-attention mechanism intrinsic to Vision Transformers (ViT)

[28]. While FNO, through Fourier transforms, adeptly processes periodic data and has proven efficacy in modeling complex systems of partial differential equations, the computational complexity for high-resolution inputs is prohibitive. Consequently, AFNO deploys the Fast Fourier Transform (FFT) in the Fourier domain, facilitating continuous global convolution. This innovation reduces the complexity of spatial mixing to

𝑂(𝑁log𝑁), thus rendering it suitable for high-resolution data

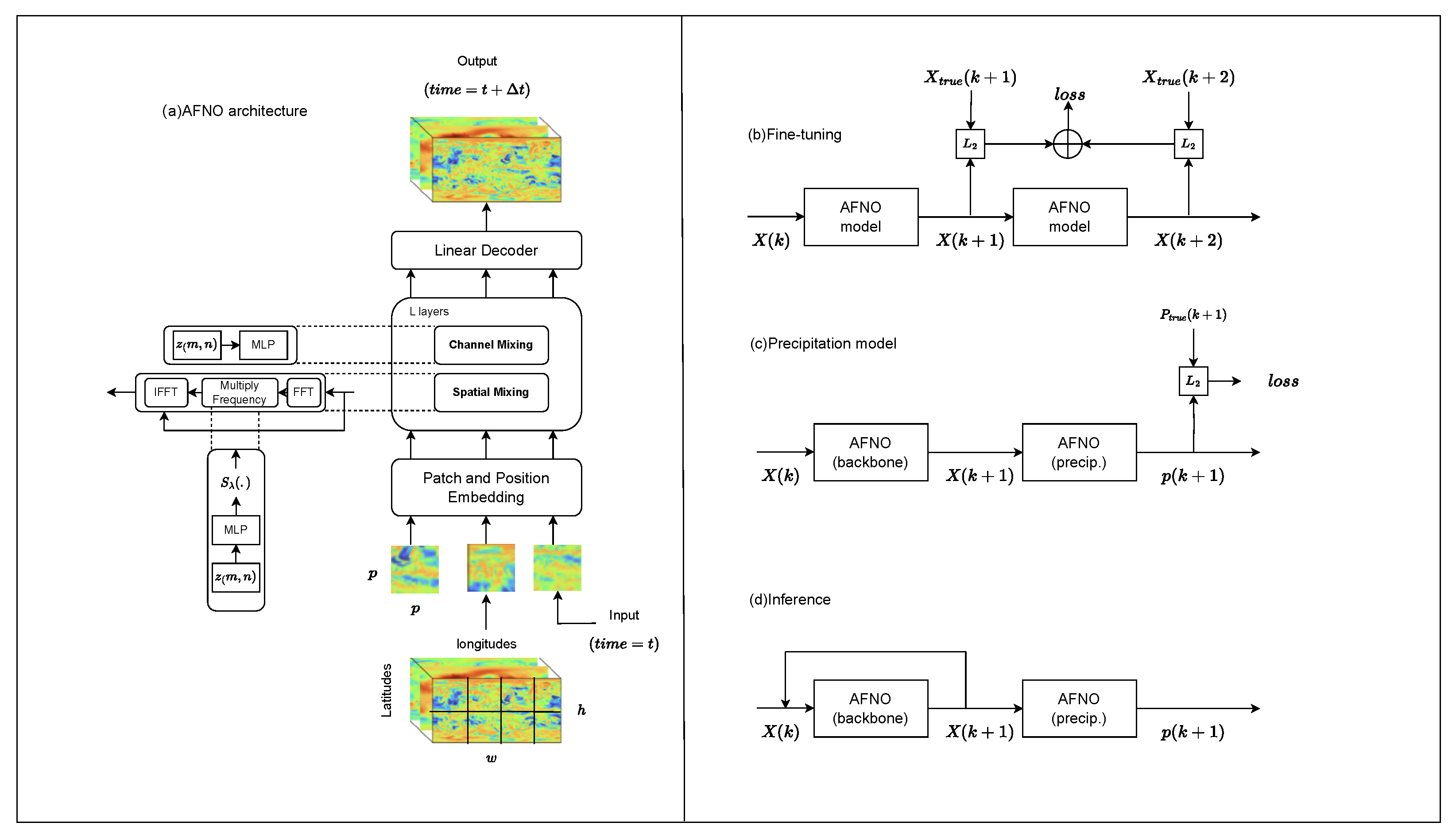

[29]. The workflow of AFNO shown in

Figure 1 encompasses data preprocessing, feature extraction with FNO, feature processing with ViT, spatial mixing for feature fusion, culminating in prediction output, representing future meteorological conditions such as temperature, pressure, and humidity.
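The reason FFT-based mixing is cheap, yet still global, is the convolution theorem. A circular convolution over the whole grid becomes a per-frequency multiplication after an FFT. The NumPy sketch below checks this against a direct O(N²) computation. The per-frequency multipliers here come from a fixed random kernel rather than being learned. AFNO additionally applies block-diagonal channel mixing and soft-thresholding in the frequency domain, which this sketch omits.

```python
import numpy as np

def fourier_global_conv(x, kernel):
    """Global circular convolution via the FFT: O(N log N) for N = H * W grid points.
    In FNO/AFNO the per-frequency multipliers are learned; here they come from a fixed kernel."""
    weights = np.fft.rfft2(kernel)                        # one complex multiplier per frequency
    return np.fft.irfft2(np.fft.rfft2(x) * weights, s=x.shape)

def circular_conv_direct(x, kernel):
    """The same operation computed directly in physical space: O(N^2)."""
    H, W = x.shape
    out = np.zeros_like(x)
    for i in range(H):
        for j in range(W):
            out += x[i, j] * np.roll(kernel, (i, j), axis=(0, 1))
    return out

rng = np.random.default_rng(0)
field = rng.normal(size=(32, 64))    # hypothetical lat-lon field (32 x 64 grid points)
kernel = rng.normal(size=(32, 64))   # full-size kernel: every point can influence every other

fast = fourier_global_conv(field, kernel)
slow = circular_conv_direct(field, kernel)
```

Both routes give the same result. Only the FFT route scales to grids of the size FourCastNet operates on.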

Figure 1. (a) The multi-layer transformer architecture; (b) two-step fine-tuning; (c) backbone model; (d) forecast model in free-running autoregressive inference mode.

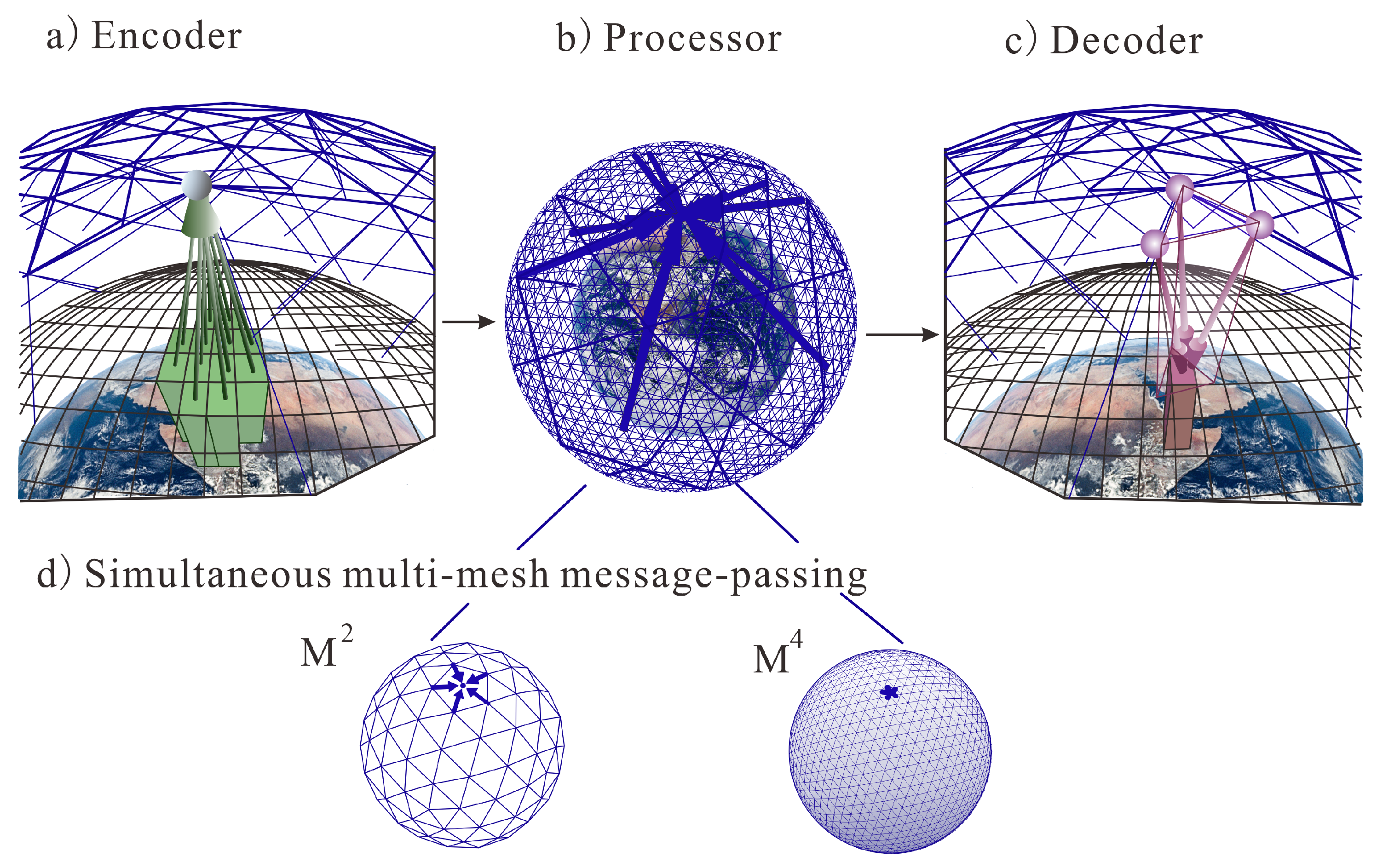

GraphCast. GraphCast represents a notable advance in weather forecasting, using machine learning to model complex dynamical systems and thereby showcasing the potential of machine learning in this domain. It is an autoregressive model built upon graph neural networks (GNNs) and a novel multi-scale mesh representation, trained on historical weather data from the European Centre for Medium-Range Weather Forecasts (ECMWF)’s ERA5 reanalysis archive.

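The structure of GraphCast, shown in Figure 2, employs an “encode-process-decode” configuration that uses GNNs to generate forecast trajectories autoregressively. The encoder maps the input data on the latitude-longitude grid to the nodes of a multi-scale mesh graph. The processor then updates each mesh node through learned message passing. Finally, the decoder maps the processed mesh features back to the grid representation.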
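The encode-process-decode loop and the autoregressive rollout can be sketched as a toy NumPy example. It is hypothetical and untrained, and it simplifies GraphCast in several ways:

- A ring of 36 grid points along one latitude circle and a coarser ring of 12 mesh nodes replace the global grid and the multi-scale icosahedral mesh.
- Nearest-node averaging replaces learned grid-to-mesh message passing.
- Single dense layers stand in for the learned MLPs.
- Only one input state is used, whereas GraphCast conditions on the two most recent states.

```python
import numpy as np

rng = np.random.default_rng(0)
n_grid, n_mesh, F, Dh = 36, 12, 3, 8                    # F input variables, Dh latent size
grid_to_mesh = np.arange(n_grid) * n_mesh // n_grid     # nearest mesh node for each grid point
mesh_edges = [(m, (m + s) % n_mesh) for m in range(n_mesh) for s in (-1, 1)]   # (src, dst)

def mlp(x, w):               # one dense layer + tanh stands in for a learned MLP
    return np.tanh(x @ w)

W_enc = rng.normal(scale=0.3, size=(F, Dh))
W_msg = rng.normal(scale=0.3, size=(2 * Dh, Dh))
W_dec = rng.normal(scale=0.3, size=(Dh, F))

def encode(x_grid):
    """Grid -> mesh: average the embedded features of the grid points assigned to each node."""
    h = np.zeros((n_mesh, Dh))
    np.add.at(h, grid_to_mesh, mlp(x_grid, W_enc))
    return h / np.bincount(grid_to_mesh, minlength=n_mesh)[:, None]

def process(h, n_steps=4):
    """Message passing on the mesh with residual node updates."""
    for _ in range(n_steps):
        msgs = np.zeros_like(h)
        for src, dst in mesh_edges:
            msgs[dst] += mlp(np.concatenate([h[dst], h[src]]), W_msg)
        h = h + msgs
    return h

def decode(h):
    """Mesh -> grid: each grid point reads its mesh node and outputs a state change."""
    return h[grid_to_mesh] @ W_dec

def forecast_step(x_grid):
    return x_grid + decode(process(encode(x_grid)))      # predict a change, add it to the state

x = rng.normal(size=(n_grid, F))
trajectory = [x]
for _ in range(3):                                       # autoregressive rollout
    trajectory.append(forecast_step(trajectory[-1]))
```

Each prediction is fed back in as the next input. This is why small per-step errors can accumulate over long rollouts.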

Figure 2. (a) The encoder component of the GraphCast architecture maps the input local regions (green boxes) to the nodes of the multigrid graph. (b) The processor component uses learned message passing to update each multigrid node. (c) The decoder component maps the processed multigrid features (purple nodes) to the grid representation. (d) A multi-scale grid set.

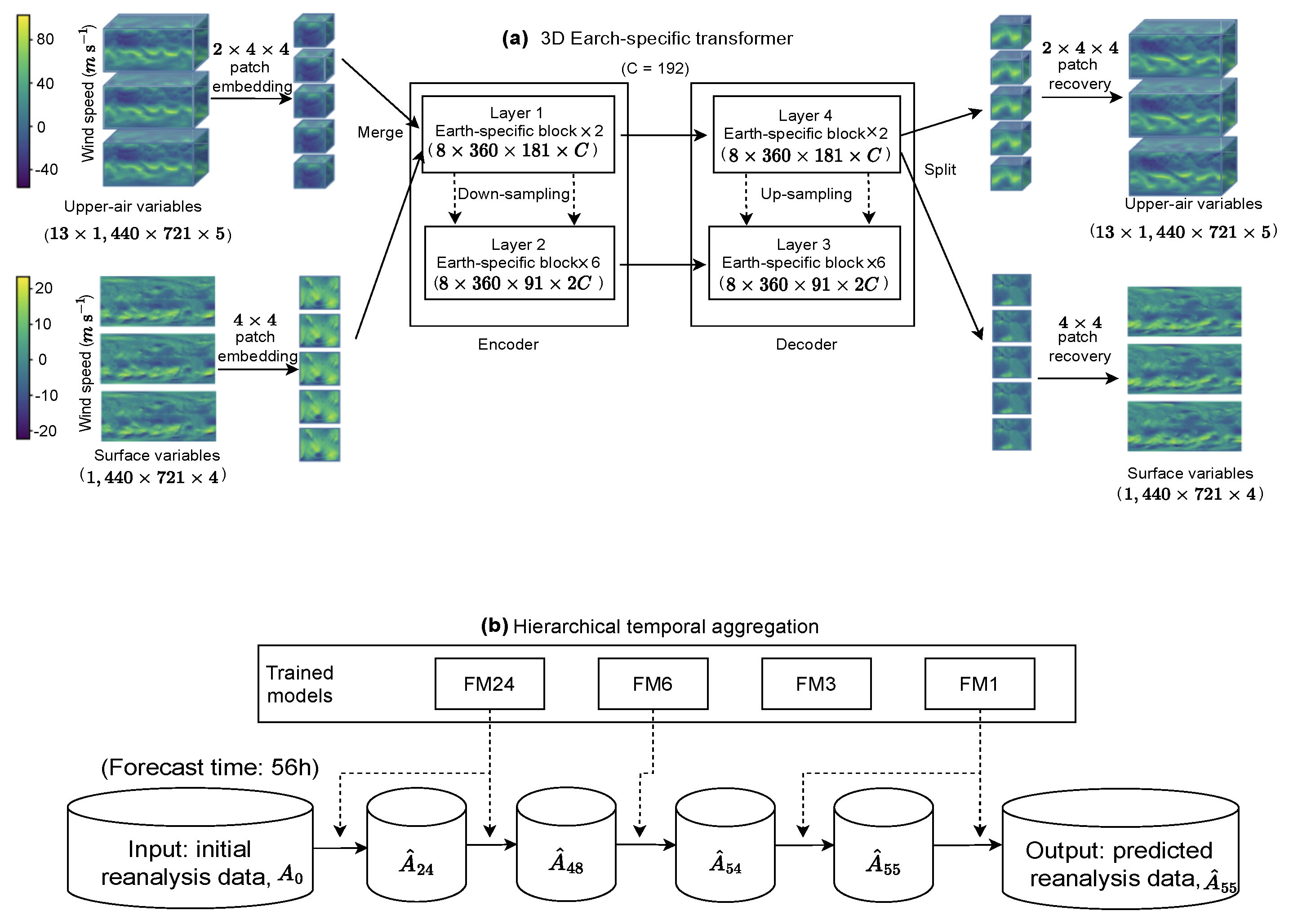

PanGu. In the rapidly evolving field of meteorological forecasting, PanGu, shown in Figure 3, is a pioneering model built on a three-dimensional neural network that goes beyond the two horizontal dimensions of latitude and longitude. Because meteorological data are organized on atmospheric pressure levels, PanGu uses a neural network structure that accounts for altitude in addition to latitude and longitude. The PanGu pipeline begins with Block Embedding, where the dataset is partitioned into smaller subsets, or blocks. This operation reduces the spatial resolution and complexity of the data and also facilitates subsequent data management within the network.

Figure 3. Network training and inference strategies. (a) 3DEST architecture. (b) Hierarchical temporal aggregation.

PanGu’s [30] innovative 3D neural network architecture [31] offers a new perspective for integrating meteorological data and is particularly well suited to three-dimensional data. Moreover, PanGu introduces a hierarchical temporal aggregation strategy. Models with different lead times are trained, and at inference the model with the largest lead time is invoked as often as possible. This minimizes the number of autoregressive steps and thereby curtails accumulated error. Compared with running a fixed-step model like FourCastNet [26] many times, which may accumulate errors, this approach is superior in both speed and precision. Collectively, these novel attributes and methodological advancements position PanGu as a cutting-edge tool in the domain of high-resolution weather modeling, promising transformative potential in weather analysis and forecasting.
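The hierarchical temporal aggregation schedule amounts to a greedy decomposition of the requested lead time. The sketch below assumes four models with lead times of 24, 6, 3, and 1 hours, as described in the PanGu-Weather publication. The function name `plan_rollout` is hypothetical.

```python
def plan_rollout(lead_time_h, model_steps_h=(24, 6, 3, 1)):
    """Greedy schedule: call the longest-step model as often as possible, then fill the
    remainder with progressively shorter-step models (all values in hours)."""
    plan, remaining = [], lead_time_h
    for step in sorted(model_steps_h, reverse=True):
        n_calls, remaining = divmod(remaining, step)
        plan.extend([step] * n_calls)
    if remaining:
        raise ValueError(f"{lead_time_h} h cannot be reached with steps {model_steps_h}")
    return plan

for lead in (56, 168):
    plan = plan_rollout(lead)
    print(f"{lead} h: {len(plan)} model calls {plan} vs {lead} calls of a 1 h model")
```

For a 56 h forecast, this gives [24, 24, 6, 1, 1]: five model calls instead of 56 calls of a 1 h model. Fewer autoregressive steps leave fewer opportunities for error to accumulate.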

MetNet, FourCastNet, GraphCast, and PanGu are state-of-the-art methods in the field of weather prediction, and they share some architectural similarities that can indicate converging trends in this field. All four models initiate the process by embedding or downsampling the input data: FourCastNet uses AFNO, MetNet employs a Spatial Downsampler, and PanGu uses Block Embedding to manage the spatial resolution and complexity of the datasets, while GraphCast maps the input data from the original latitude-longitude grid into a multi-scale internal mesh representation. Spatio-temporal coding is an integral part of all networks: FourCastNet uses pre-training and fine-tuning phases to deal with temporal dependencies; MetNet uses ConvLSTM; PanGu introduces a hierarchical temporal aggregation strategy to manage temporal correlations in the data; and GraphCast employs GNNs to capture and address spatio-temporal dependencies in weather data. Each model employs a specialized approach to understand the spatial relationships within the data. FourCastNet uses AFNO along with Vision Transformers, MetNet utilizes Spatial Aggregator blocks, and PanGu integrates data into a 3D cube via 3D Cube Fusion, while GraphCast translates data into a multi-scale internal mesh. Both FourCastNet and PanGu employ self-attention mechanisms derived from the Transformer architecture to better capture long-range dependencies in the data: FourCastNet combines FNO with ViT, and PanGu uses Swin Encoding.

3.2. Result Analysis

MetNet: According to the MetNet experiment, at a precipitation-rate threshold of 1 mm/h, both MetNet and NWP predictions closely match observed conditions. MetNet thus achieves forecasting skill commensurate with NWP while generally running considerably faster.

FourCastNet: According to the FourCastNet experiment, FourCastNet can predict wind speed 96 h in advance with extremely high fidelity and accurate fine-scale features. In the experiment, the FourCastNet forecast accurately captured the formation and path of the super typhoon Shanzhu, as well as its intensity and trajectory over four days. It also has a high resolution and demonstrates excellent skill in capturing small-scale features. Particularly noteworthy is the performance of FourCastNet in forecasting meteorological phenomena within a 48 h horizon, where its accuracy surpasses that of conventional numerical weather forecasting methods. This constitutes a significant step toward more accurate and responsive short-term meteorological projections.

GraphCast: In the GraphCast experiments, GraphCast tracked weather patterns better than NWP, substantially outperforming it across a range of forecast horizons, notably from 18 h to 4.75 days, as depicted in Figure 1b. It performs well at predicting atmospheric rivers and extreme weather events, with significant improvements in longer-range forecasts at 5 and 10 days. It also captures extreme heat and cold anomalies accurately, indicating that the model represents meteorological dynamics well and holds promise for more precise weather prediction from contemporary data.

PanGu: In the PanGu experiments, PanGu predicted the trajectories of the strong tropical cyclones Kong-rey and Yutu with high accuracy and identified the correct tracks about 48 h earlier than NWP. Its 3D network (3D Net) marks a further advance in weather prediction technology: it outperforms numerical weather prediction models by a substantial margin and reproduces observed weather with high fidelity, making it a close reflection of meteorological dynamics rather than merely a forecasting tool.
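Cyclone track accuracy is commonly summarized as the great-circle distance between predicted and observed storm centres at each forecast time. A minimal sketch using the haversine formula follows; the coordinates are made-up illustrative values, not data from the PanGu study.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def mean_track_error(predicted, observed):
    """Mean position error (km) over matched (lat, lon) storm-centre pairs."""
    errors = [haversine_km(*p, *o) for p, o in zip(predicted, observed)]
    return sum(errors) / len(errors)

# Illustrative storm-centre positions at successive forecast times (degrees)
observed = [(15.0, 140.0), (16.0, 138.5), (17.2, 137.0)]
predicted = [(15.1, 140.2), (16.3, 138.9), (17.8, 137.6)]
print(round(mean_track_error(predicted, observed), 1))
```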

4. Medium-to-Long-Term Climate Prediction

4.1. Model Design

Climate Model. Climate models, built on the fundamental equations of atmospheric dynamics and thermodynamics, simulate Earth's long-term climate system [32]. Unlike NWP, which targets short-term weather patterns, climate models address broader climatic trends. They include Global Climate Models (GCMs), which provide a global perspective but usually at lower resolution, and Regional Climate Models (RCMs), which are designed for detailed regional analysis [33]. The emphasis is on the mean state and its variations rather than on transient weather events. The climate-modeling workflow begins with initialization, in which boundary conditions are set, possibly drawing on centuries of historical data. Numerical integration of the governing equations then models the long-term evolution of the climate system [34]. Parameterization schemes represent sub-grid-scale processes such as cloud formation and vegetation feedback. Finally, the model's performance and uncertainties are analyzed and validated by comparing its output with observational data or with the results of other models [35].
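The workflow above (boundary conditions, numerical integration, parameterization, validation) can be illustrated with the simplest possible climate model, a zero-dimensional energy balance model, C dT/dt = S0 (1 - albedo) / 4 - emissivity * sigma * T^4. This toy is not a GCM: the planetary albedo and the effective emissivity stand in for parameterized processes such as clouds and the greenhouse effect, and the parameter values are illustrative assumptions.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def integrate_ebm(t0, years, s0=1361.0, albedo=0.3, emissivity=0.61,
                  heat_capacity=4.0e8, dt_days=1.0):
    """Forward-Euler integration of C dT/dt = S0 (1 - albedo) / 4 - emissivity * sigma * T^4.

    heat_capacity (J m^-2 K^-1) roughly corresponds to a ~100 m ocean mixed layer.
    Returns the global-mean surface temperature (K) after `years`.
    """
    dt = dt_days * 86400.0
    absorbed = s0 * (1.0 - albedo) / 4.0       # boundary condition: incoming solar radiation
    t = t0                                      # initial condition
    for _ in range(int(years * 365 / dt_days)):
        outgoing = emissivity * SIGMA * t ** 4  # parameterized longwave emission
        t += dt * (absorbed - outgoing) / heat_capacity
    return t

def equilibrium_temperature(s0=1361.0, albedo=0.3, emissivity=0.61):
    """Analytic steady state where absorbed and emitted energy balance."""
    return (s0 * (1.0 - albedo) / (4.0 * emissivity * SIGMA)) ** 0.25

# "Validation" step of the toy: compare the integrated state with the analytic solution
print(round(integrate_ebm(t0=273.0, years=100), 2), round(equilibrium_temperature(), 2))
```

Lowering `emissivity` mimics a stronger greenhouse effect and raises the equilibrium temperature, the same kind of sensitivity experiment that full climate models run with far more physics.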

Conditional Generative Forecasting [36]. In medium-to-long-term seasonal climate prediction, the limited amount of data available since 1979 makes it difficult to train complex models such as CNNs rigorously, which limits their predictive skill. To address this, a transfer-learning approach uses simulated climate data from CMIP5 (Coupled Model Intercomparison Project Phase 5) [37] to improve modeling efficiency and accuracy. In the pre-training phase, the CNN is trained on CMIP5 data to learn essential climatic patterns and relationships. The learned weights are then carried over to training on observational data without resetting the model parameters, so that the model builds on what it learned from simulations when it encounters real climate dynamics. In the final fine-tuning phase, the model is refined to align more closely with the real-world behavior relevant to medium-to-long-term ENSO forecasting [5]. This strategy shows how transfer learning can mitigate the limited sample sizes that constrain medium-to-long-term climate science.
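The pre-train then fine-tune strategy can be sketched with a deliberately tiny model. Below, a linear regressor stands in for the CNN: it is first fitted on a large synthetic "simulation" dataset whose input-output relationship is slightly biased, then fine-tuned on a small synthetic "observation" dataset starting from the pre-trained weights rather than from scratch. All data, sizes, and learning rates are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features = 8
true_w = rng.standard_normal(n_features)                  # "real-world" relationship
sim_w = true_w + 0.3 * rng.standard_normal(n_features)    # simulations are slightly biased

def make_data(w, n, noise=0.1):
    """Synthetic predictors x and targets y = x @ w + noise."""
    x = rng.standard_normal((n, n_features))
    return x, x @ w + noise * rng.standard_normal(n)

def train(w, x, y, lr, epochs):
    """Full-batch gradient descent on mean squared error, starting from w."""
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * x.T @ (x @ w - y) / len(y)
        w -= lr * grad
    return w

def mse(w, x, y):
    return float(np.mean((x @ w - y) ** 2))

x_sim, y_sim = make_data(sim_w, 20000)     # abundant simulated samples (CMIP-like)
x_obs, y_obs = make_data(true_w, 30)       # scarce observations
x_test, y_test = make_data(true_w, 1000)   # held-out observations

w_pre = train(np.zeros(n_features), x_sim, y_sim, lr=0.05, epochs=200)  # pre-training
w_ft = train(w_pre, x_obs, y_obs, lr=0.01, epochs=200)                  # fine-tune from w_pre

print("pre-trained only:", mse(w_pre, x_test, y_test))
print("after fine-tuning:", mse(w_ft, x_test, y_test))
```

The key design choice mirrored here is that fine-tuning starts from `w_pre`, so the scarce observations only need to correct the simulation bias rather than learn the whole relationship.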

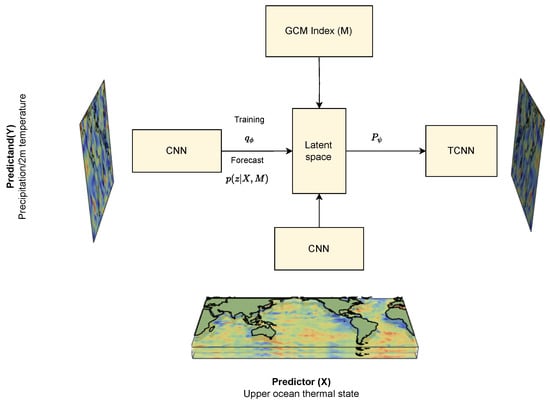

Leveraging 52,201 years of climate simulation data from CMIP5/CMIP6 to increase the sample size, the method for medium-term forecasting employs CNNs and Temporal Convolutional Neural Networks (TCNNs) to extract essential features from high-dimensional geospatial data. These features form the basis for probabilistic deep learning, which estimates an approximate distribution of the target variables and thereby captures the data's structure and uncertainty [38]. The model's parameters are optimized by maximizing the Evidence Lower Bound (ELBO) within the variational inference framework. The structure is shown in Figure 4. Combining deep learning with probabilistic modeling yields accurate forecasts, robustness to sparse data, and flexible modeling assumptions; beyond improving forecast precision, it provides estimates of forecast confidence and a way to incorporate expert knowledge.

Figure 4. Conditional Generative Forecasting (CGF) model.
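For a conditional latent-variable model of the kind described above, the ELBO for a target y given predictors x is E_q[log p(y | z, x)] - KL(q(z | x, y) || p(z | x)). The sketch below evaluates this objective for diagonal-Gaussian distributions: the KL term in closed form and the reconstruction term by Monte Carlo with the reparameterization trick. The encoder, prior, and decoder networks (built on the CNN/TCNN features in the text) are replaced by fixed arrays and a hypothetical linear `decode`, and the Gaussian choices are assumptions rather than details confirmed by the source.

```python
import numpy as np

def gaussian_kl(mu_q, logvar_q, mu_p, logvar_p):
    """KL(q || p) between diagonal Gaussians, summed over latent dimensions."""
    var_q, var_p = np.exp(logvar_q), np.exp(logvar_p)
    return 0.5 * np.sum(logvar_p - logvar_q + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0)

def gaussian_log_likelihood(y, mean, logvar):
    """log N(y; mean, diag(exp(logvar))), summed over target dimensions."""
    return -0.5 * np.sum(np.log(2.0 * np.pi) + logvar + (y - mean) ** 2 / np.exp(logvar))

def elbo(y, mu_q, logvar_q, mu_p, logvar_p, decode, n_samples=64, rng=None):
    """Monte Carlo estimate of E_q[log p(y | z, x)] - KL(q(z | x, y) || p(z | x))."""
    if rng is None:
        rng = np.random.default_rng(0)
    recon = 0.0
    for _ in range(n_samples):
        eps = rng.standard_normal(mu_q.shape)
        z = mu_q + np.exp(0.5 * logvar_q) * eps   # reparameterization trick
        mean, logvar = decode(z)
        recon += gaussian_log_likelihood(y, mean, logvar)
    return recon / n_samples - gaussian_kl(mu_q, logvar_q, mu_p, logvar_p)

# Hypothetical decoder: linear map from a 2-D latent to a 3-D target with fixed noise.
W_DEC = np.array([[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]])

def decode(z):
    return W_DEC @ z, np.full(3, np.log(0.1))

y = np.array([0.8, 0.4, 0.1])                                          # synthetic target
mu_q, logvar_q = np.array([0.7, 0.2]), np.log(np.array([0.05, 0.05]))  # posterior q(z | x, y)
mu_p, logvar_p = np.zeros(2), np.zeros(2)                              # prior p(z | x), fixed here
print(elbo(y, mu_q, logvar_q, mu_p, logvar_p, decode))
```

In training, the network parameters that produce these means and variances are adjusted to maximize the ELBO (equivalently, to minimize its negative).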

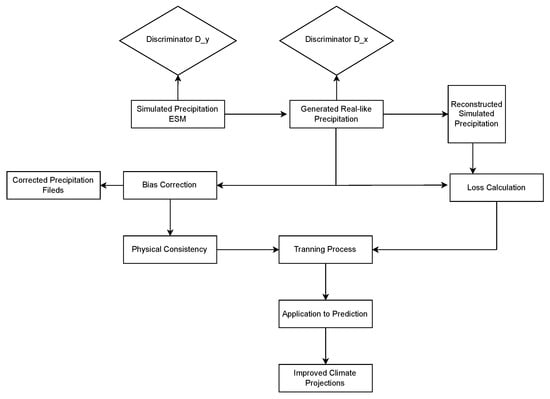

Cycle-Consistent Generative Adversarial Networks. Cycle-Consistent Generative Adversarial Networks (CycleGANs) have been applied to the bias correction of precipitation fields from high-resolution Earth System Models (ESMs) such as GFDL-ESM4 [39]. The model includes two generators that translate between the simulated and observed domains and two discriminators that distinguish generated fields from real observations. A key component of this approach is the cycle-consistency loss, which requires that a field translated to the other domain and back recovers the original, making the translation between domains reliable; it is combined with a constraint that preserves global precipitation values for physical consistency. By framing bias correction as an image-to-image translation task, CycleGANs have substantially improved spatial patterns and distributions in climate projections. The use of spatial spectral densities and fractal dimension measurements further underlines the method's awareness of spatial context, making it a notable technique in climate science.

The bidirectional mapping strategy of CycleGANs makes it possible to learn complex transformations between two domains without paired training samples. This is especially valuable when only unpaired data are available for training, as with simulated and observed precipitation, which do not correspond sample by sample. In this setting, CycleGAN can capture and model the subtle relationships between real and simulated precipitation data. Through the bidirectional mapping shown in Figure 5, the model improves both the understanding of climatic phenomena and the predictive accuracy of future precipitation trends. This provides a novel, data-driven methodology for climate prediction and analysis within computational climate science.

Figure 5. CycleGAN flow chart.
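The two ingredients highlighted above, the cycle-consistency loss and the constraint on global precipitation, can be written compactly. In the sketch below, `g_sim_to_obs` and `f_obs_to_sim` are hypothetical placeholders for the two generator networks (simple invertible elementwise maps here); the adversarial terms from the two discriminators are omitted, and the rescaling form of the constraint is one plausible choice rather than the exact formulation of the cited work.

```python
import numpy as np

def cycle_consistency_loss(x_sim, y_obs, g_sim_to_obs, f_obs_to_sim):
    """L1 cycle loss: sim -> obs -> sim and obs -> sim -> obs should return the input."""
    forward_cycle = np.mean(np.abs(f_obs_to_sim(g_sim_to_obs(x_sim)) - x_sim))
    backward_cycle = np.mean(np.abs(g_sim_to_obs(f_obs_to_sim(y_obs)) - y_obs))
    return forward_cycle + backward_cycle

def preserve_global_total(corrected, original, weights):
    """Rescale a corrected precipitation field so its area-weighted global total
    matches the original ESM field (a simple physical-consistency constraint)."""
    scale = np.sum(weights * original) / np.sum(weights * corrected)
    return corrected * scale

def g_sim_to_obs(x):
    """Hypothetical generator: simulated -> observed-like precipitation."""
    return 1.2 * x ** 1.1

def f_obs_to_sim(y):
    """Hypothetical generator: exact inverse of g_sim_to_obs."""
    return (y / 1.2) ** (1 / 1.1)

rng = np.random.default_rng(0)
lat = np.linspace(-87.5, 87.5, 36)
weights = np.cos(np.deg2rad(lat))[:, None] * np.ones((36, 72))   # area weights, 5-degree grid
x_sim = rng.gamma(shape=0.8, scale=2.0, size=(36, 72))           # synthetic precipitation (mm/day)
y_obs = rng.gamma(shape=0.7, scale=2.5, size=(36, 72))

print(cycle_consistency_loss(x_sim, y_obs, g_sim_to_obs, f_obs_to_sim))  # ~0: exact inverses
corrected = preserve_global_total(g_sim_to_obs(x_sim), x_sim, weights)
print(np.sum(weights * corrected), np.sum(weights * x_sim))  # matching global totals
```

With trained generators the cycle loss is not zero; it is one term of the training objective, added to the adversarial losses so that the corrected fields stay tied to their inputs.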

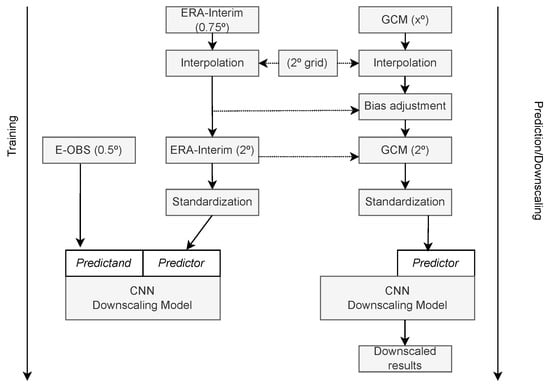

DeepESD. Traditional GCMs, while proficient at simulating large-scale global climate dynamics [40][41], have intrinsic limitations in representing finer spatial scales and specific regional characteristics. This shortcoming produces a pronounced resolution gap at local scales, restricting the applicability of GCMs in detailed regional climate studies [42][43].

CNNs offer a significant advance in closing this gap [44]. Built from hierarchical convolutional layers, CNNs can represent complex spatial features across multiple scales, starting from coarse-grained global characteristics and progressively refining them to capture intricate regional details. An example of this approach was presented by Baño-Medina et al. [43], whose CNN comprised three convolutional layers with 50, 25, and 10 kernels (filters), respectively. ERA-Interim reanalysis data were first regridded to a 2° regular grid, and the network mapped these coarse predictors to a 0.5° resolution [45][46][47]. This configuration allowed the CNN to translate global atmospheric patterns into high-resolution regional detail [48][49].

This translation from global to regional scales through sequential convolutional layers not only increases spatial resolution but also retains the contextual relevance of climatic variables [50][51]. The first convolutional layer captured coarse-grained global features, and subsequent layers incrementally refined these into detailed regional characteristics, so that by the final layer the CNN had distilled complex atmospheric dynamics into a precise, high-resolution grid [52][53].
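The architecture described above can be sketched as a forward pass: three convolutional layers with 50, 25, and 10 filters turn coarse (2°) predictor fields into feature maps, and a dense layer projects them onto a 4x finer (0.5°) target grid. The 3x3 kernels, ReLU activations, fully connected output layer, number of predictor fields, and grid sizes are assumptions made for illustration; the weights are random rather than trained, and this NumPy sketch is not the authors' implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv2d_same(x, w, b):
    """'Same'-padded 2-D cross-correlation. x: (C_in, H, W), w: (C_out, C_in, k, k)."""
    k = w.shape[-1]
    p = k // 2
    h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(k):
        for dx in range(k):
            out += np.einsum("oi,ihw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out + b[:, None, None]

def he_init(c_out, c_in, k=3):
    """Random conv weights (He initialization) and zero biases."""
    return rng.normal(0.0, np.sqrt(2.0 / (c_in * k * k)), (c_out, c_in, k, k)), np.zeros(c_out)

def relu(x):
    return np.maximum(x, 0.0)

N_PREDICTORS = 20      # hypothetical number of predictor fields
COARSE = (8, 10)       # hypothetical 2-degree domain (lat, lon)
FINE = (32, 40)        # same domain at 0.5 degrees (4x finer)

layers = [he_init(50, N_PREDICTORS), he_init(25, 50), he_init(10, 25)]
n_flat = 10 * COARSE[0] * COARSE[1]
w_out = rng.normal(0.0, np.sqrt(1.0 / n_flat), (FINE[0] * FINE[1], n_flat))
b_out = np.zeros(FINE[0] * FINE[1])

def downscale(predictors):
    """Map (N_PREDICTORS, 8, 10) coarse fields to one (32, 40) high-resolution field."""
    h = predictors
    for w, b in layers:
        h = relu(conv2d_same(h, w, b))                 # successive feature refinement
    return (w_out @ h.ravel() + b_out).reshape(FINE)  # dense projection to the fine grid

x = rng.standard_normal((N_PREDICTORS, *COARSE))
print(downscale(x).shape)  # (32, 40)
```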

This enhancement supports a more robust understanding of regional climatic processes and brings greater precision and flexibility to climate modeling. It is a pivotal advance that enables more fine-grained, precise, and comprehensive examination of climatic processes and future scenarios [54][55][56]. The CNN-based approach shown in Figure 6 thus helps bridge the resolution gap inherent to traditional GCMs, with substantial implications for future climate analysis and scenario planning.

Figure 6. DeepESD structure.

NNCAM. The Neural Network Community Atmosphere Model (NNCAM) is designed to leverage advances in machine learning for improved atmospheric simulation. Its architecture combines a traditional General Circulation Model (GCM), specifically the Super-Parameterized Community Atmosphere Model (SPCAM), with machine learning techniques such as Residual Deep Neural Networks (ResDNNs).

In NNCAM, the core workflow is divided into several key steps. First, the dynamical core, the base component of the model, solves the underlying hydrodynamic equations and computes the current climate state (e.g., temperature, pressure, and humidity) as well as the environmental forcings (e.g., wind and solar radiation). These quantities are transmitted to the NN-GCM coupler, which passes them on to the neural-network parameterization module. This module uses pre-trained neural networks, specifically ResDNNs, to parameterize unresolved physical processes faster and more accurately. The predictions are then returned to the host GCM, i.e., NNCAM, which uses them to update the model's climate state and, based on these updates, advances the simulation to the next time step.

Overall, the host GCM is the core of the whole simulation: it performs the basic climate simulation and interacts efficiently with the dynamical core and the neural-network parameterization module to achieve higher simulation accuracy and computational efficiency. This hierarchical architecture allows model states and predictions to be integrated and synchronized seamlessly, enabling continuous and efficient operation of NNCAM. The framework is a significant step in atmospheric science, integrating machine learning with physical simulation to improve both accuracy and computational efficiency.
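The coupling cycle described above (dynamical core, coupler, neural-network parameterization, state update) can be summarized in a short loop. In this sketch every component is a hypothetical placeholder: `dynamical_core` is a trivial relaxation step, `ResidualMLP` is an untrained two-layer residual network standing in for the ResDNN, and the state-vector layout is invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N_LEVELS = 4
STATE_DIM = 3 * N_LEVELS   # hypothetical column state: temperature, humidity, pressure per level

class ResidualMLP:
    """Tiny residual network, out = x + W2 relu(W1 x); placeholder for a trained ResDNN."""
    def __init__(self, dim, hidden=32, scale=0.01):
        self.w1 = rng.normal(0.0, scale, (hidden, dim))
        self.w2 = rng.normal(0.0, scale, (dim, hidden))

    def __call__(self, x):
        return x + self.w2 @ np.maximum(self.w1 @ x, 0.0)

def dynamical_core(state, forcing, dt):
    """Placeholder for the resolved dynamics: relax the state toward the forcing."""
    return state + dt * 0.1 * (forcing - state)

def coupler(state, forcing):
    """Pack the resolved state and the forcings into one NN input vector."""
    return np.concatenate([state, forcing])

nn_param = ResidualMLP(2 * STATE_DIM)

def step(state, forcing, dt=1.0):
    """One NNCAM-style time step (all components are illustrative placeholders)."""
    state = dynamical_core(state, forcing, dt)   # 1. dynamical core: resolved dynamics
    features = coupler(state, forcing)           # 2. coupler passes state and forcings on
    updated = nn_param(features)[:STATE_DIM]     # 3. NN adds a residual increment to the state
    return updated                               # 4. host GCM adopts the updated state

state = rng.standard_normal(STATE_DIM)
forcing = np.ones(STATE_DIM)
for _ in range(10):
    state = step(state, forcing)
print(state.shape, bool(np.all(np.isfinite(state))))
```

The residual form means the network only has to learn an increment on top of its input, which is one reason residual architectures are attractive for emulating physical tendencies.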

4.2. Result Analysis

CGF: Using deep probabilistic machine learning, the figure compares the performance of the CGF model, which uses both simulated samples and actual data, with that of the traditional climate model CanCM4.

CycleGANs: For long-term climate estimation, using deep learning for model correction has yielded promising results. The accompanying figure shows the mean absolute errors of different models relative to the W5E5v2 reference data. Among them, error correction using Generative Adversarial Networks (GANs) combined with the ISIMIP3BASD bias-adjustment method shows the lowest discrepancy. This indicates that deep-learning methods can improve the precision of long-term climate estimates, supporting their use in climatological research and forecasting applications.

DeepESD: In this study, deep learning is used to increase resolution, in a model referred to as DeepESD. The following figure shows the probability density functions (PDFs) of precipitation and temperature for the historical period from 1979 to 2005 as simulated by the General Circulation Model (GCM, red), the Regional Climate Model (RCM, blue), and DeepESD (green), for PRUDENCE regions such as the Alps, the Iberian Peninsula, and Eastern Europe. Solid lines represent the overall mean, the shaded region spans two standard deviations, and dashed lines mark the mean of each distribution. The graph shows that DeepESD is more consistent with observed data than the other models.

NNCAM: NNCAM accurately simulates the strong precipitation centers over the tropical Maritime Continent, the Asian monsoon regions, South America, and the Caribbean. The model maintains the spatial pattern and global average of precipitation over the subsequent 5 years of simulation, demonstrating long-term stability. In terms of the spatial distribution of multi-annual summer precipitation, NNCAM results are closer to the reference values than those of CAM5, with smaller root mean square errors and global average deviations. In addition, NNCAM runs 30 times faster than traditional models, a significant gain in computational efficiency.
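Comparisons of RMSE and global-average deviation on a regular latitude-longitude grid are normally area-weighted, because grid cells shrink toward the poles. A minimal sketch with cos(latitude) weights follows; the fields and the two generic "models" are synthetic, and the exact weighting used in the original NNCAM evaluation is not stated in the source.

```python
import numpy as np

def area_weights(lat_deg, n_lon):
    """Normalized cos(latitude) weights for a regular lat-lon grid, shape (n_lat, n_lon)."""
    w = np.cos(np.deg2rad(lat_deg))[:, None] * np.ones((1, n_lon))
    return w / w.sum()

def weighted_rmse(model, reference, w):
    """Area-weighted root mean square error."""
    return float(np.sqrt(np.sum(w * (model - reference) ** 2)))

def global_mean_bias(model, reference, w):
    """Difference between area-weighted global means (model minus reference)."""
    return float(np.sum(w * model) - np.sum(w * reference))

lat = np.linspace(-88.75, 88.75, 72)     # hypothetical 2.5-degree grid
w = area_weights(lat, 144)
rng = np.random.default_rng(0)
reference = rng.gamma(2.0, 1.5, size=(72, 144))               # synthetic precipitation (mm/day)
model_a = reference + rng.normal(0.3, 0.8, size=reference.shape)
model_b = reference + rng.normal(0.1, 0.5, size=reference.shape)
for name, field in [("model A", model_a), ("model B", model_b)]:
    print(name, round(weighted_rmse(field, reference, w), 3),
          round(global_mean_bias(field, reference, w), 3))
```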