
Ekerete, I.; Garcia-Constantino, M.; Nugent, C.; Mccullagh, P.; Mclaughlin, J. Sensor Data Fusion Algorithms. Encyclopedia. Available online: https://encyclopedia.pub/entry/52598 (accessed on 28 February 2026).


Ekerete, I., Garcia-Constantino, M., Nugent, C., Mccullagh, P., & Mclaughlin, J. (2023, December 12). Sensor Data Fusion Algorithms. In Encyclopedia. https://encyclopedia.pub/entry/52598


Sensor Data Fusion (SDT) algorithms and methods have been used in many applications, ranging from automobiles to healthcare systems. They can be used to design redundant, reliable, and complementary systems with the aim of enhancing system performance. SDT can be multifaceted, operating on several representations of the data, such as pixels, features, signals, and symbols.

Keywords: sensing solution; thermal sensor; Radar sensor; sensor fusion; data mining; in-home

1. Introduction

Sensor Data Fusion (SDT) is the combination of datasets from homogeneous or heterogeneous sensors in order to produce a complementary, cooperative, or competitive outcome [1]. Data from multiple sensors can also be fused to improve accuracy and reliability [2]. The processes involved in SDT depend primarily on the type of data and the algorithms used; they typically include data integration, aggregation, filtering, estimation, and time synchronisation [1].
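To make the accuracy argument concrete, the minimal sketch below fuses redundant readings of the same quantity by inverse-variance weighting, one common form of competitive fusion. It is an illustration, not a method prescribed by [1] or [2]; the function name, the example readings and their variances are hypothetical, and the weighting assumes independent, unbiased sensor errors with known variances.

```python
def fuse_inverse_variance(readings, variances):
    """Fuse independent, unbiased readings of one quantity.

    Each reading is weighted by 1 / variance; returns (fused_value, fused_variance).
    """
    if not readings or len(readings) != len(variances):
        raise ValueError("expected one variance per reading")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * x for w, x in zip(weights, readings)) / total
    return fused, 1.0 / total


# Hypothetical example: two range sensors observing the same target (metres).
value, var = fuse_inverse_variance([2.10, 2.00], [0.04, 0.01])
print(round(value, 3), round(var, 4))  # prints: 2.02 0.008
```

The fused variance (0.008) is smaller than that of the better sensor alone (0.01), which is the sense in which fusing redundant sensors can improve accuracy.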

1.1. Sensor Data Fusion Architectures

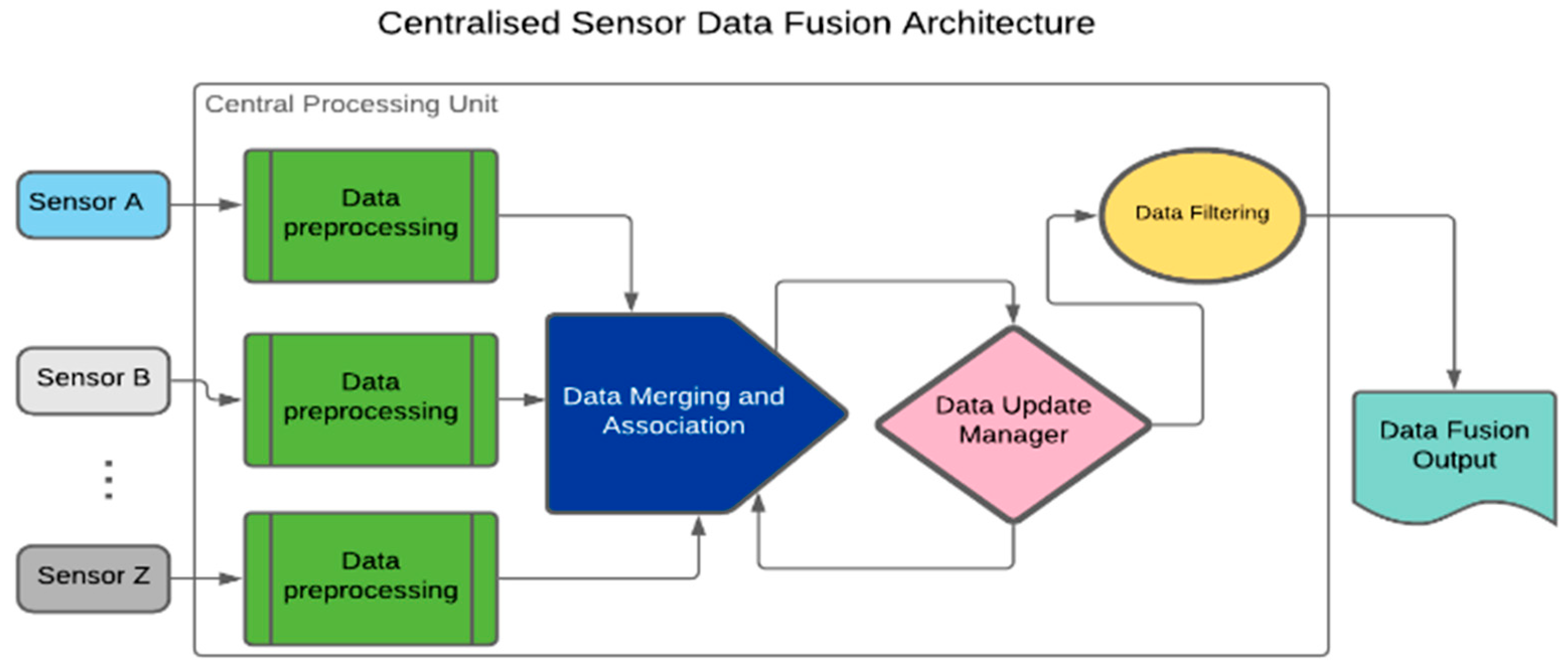

SDT architectures can be categorised into three broad groups, namely centralised, distributed, and hybrid architectures. The centralised architecture is often applied when dealing with homogeneous sensing solutions (SSs) [3]. It involves time-based synchronisation, correction, and transformation of all raw sensing data for central processing. Other steps include data merging and association, updating, and filtering, as presented in Figure 1 [4].

Figure 1. Centralised Sensor Data Fusion architecture outlining the arrangement of processes.

In Figure 1, sensor data are pre-processed in the Central Processing Unit (CPU). The pre-processing procedures entail data cleaning and alignment. The data merging algorithm then requires sub-processes such as data integration, aggregation, and association. In addition, a Data Update Manager (DUM) algorithm keeps track of changes in the output’s status. A DUM is easily implemented in a centralised architecture because all raw data are available in the CPU. Filtering and output prediction follow data merging and association.
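The centralised flow in Figure 1 (time synchronisation, then merging, then filtering, all performed on raw data held in one place) can be sketched as follows. This is a simplified illustration under stated assumptions: the sensor names, linear interpolation for alignment, averaging as the merge step, and a moving average in place of a proper filter are all hypothetical choices, and the DUM is omitted.

```python
from bisect import bisect_left


def interpolate(times, values, t):
    """Linearly interpolate a sampled signal at time t (held constant at the ends)."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)  # first index with times[i] >= t
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def centralised_fusion(streams, common_times, window=3):
    """Hypothetical centralised pipeline: synchronise, merge, then filter.

    streams maps a sensor name to (timestamps, values), both sorted by time.
    """
    # 1. Time synchronisation: resample every raw stream onto one time base.
    aligned = [
        [interpolate(ts, vs, t) for t in common_times]
        for ts, vs in streams.values()
    ]
    # 2. Merging: average the sensors at each instant (a simplifying assumption).
    merged = [sum(column) / len(column) for column in zip(*aligned)]
    # 3. Filtering: trailing moving average as a stand-in for a real filter.
    filtered = []
    for k in range(len(merged)):
        chunk = merged[max(0, k - window + 1): k + 1]
        filtered.append(sum(chunk) / len(chunk))
    return filtered


streams = {
    "thermal": ([0.0, 1.0, 2.0], [20.0, 22.0, 24.0]),
    "radar": ([0.5, 1.5], [21.0, 23.0]),
}
result = centralised_fusion(streams, [0.5, 1.0, 1.5])
print(result)  # prints: [21.0, 21.5, 22.0]
```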

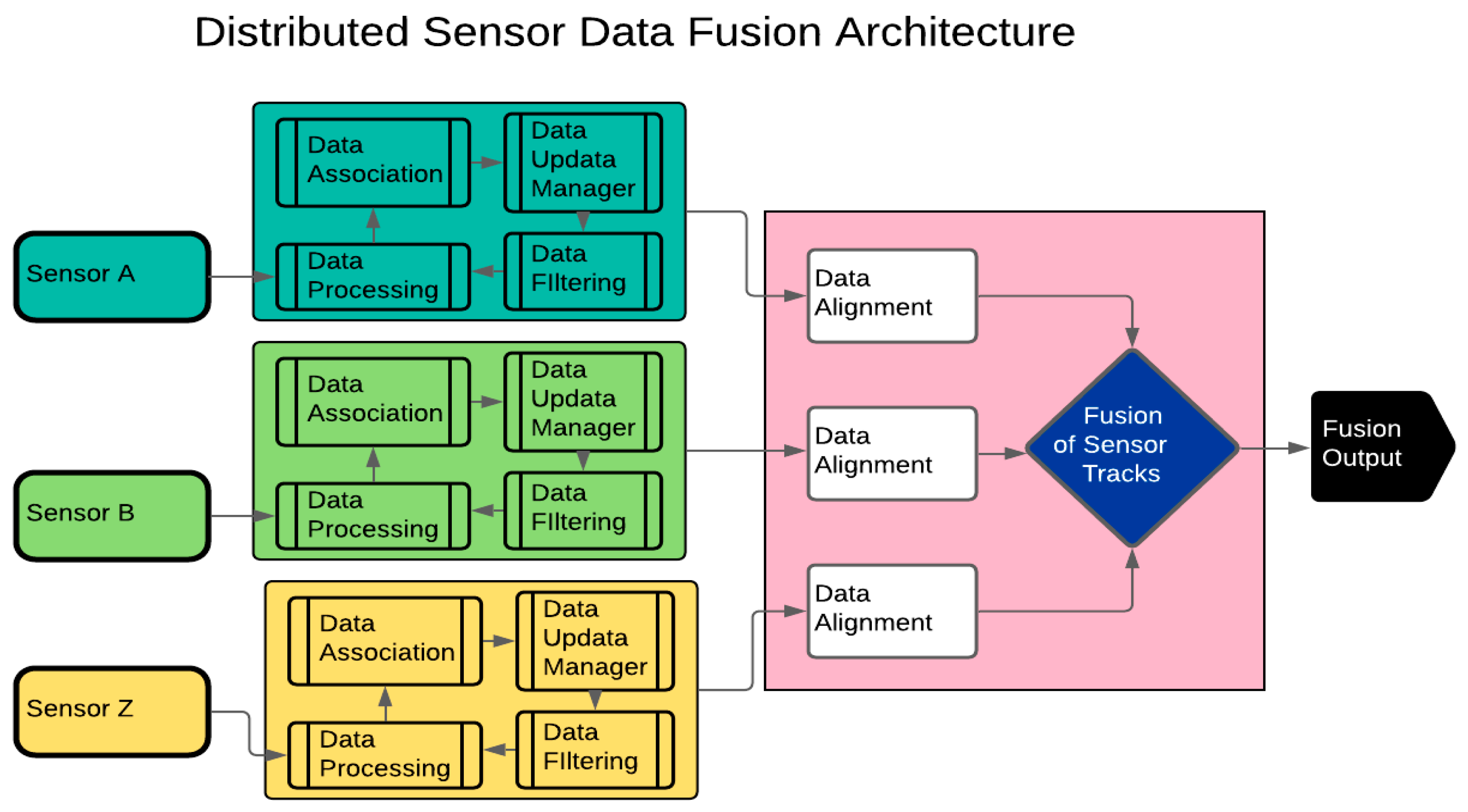

In a distributed SDT architecture, the data from each sensor are pre-processed separately before the actual fusion process, as presented in Figure 2. Unlike in the centralised architecture, gating, association, local track management, filtering, and prediction are performed locally for each sensor before the local tracks are fused (Figure 2) [5]. This architecture is best suited to heterogeneous sensors with dissimilar data frames, such as datasets from infrared and Radar sensors [6]. Data filtering for each sensor in the distributed SDT architecture can be performed by a Kalman Filter (KF) or an extended KF (EKF) [7].

Figure 2. Distributed Sensor Data Fusion architecture showing pre-processing of sensors’ data before filtering and fusion of sensors’ tracks.
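The distributed flow in Figure 2 can be sketched with one scalar Kalman filter per sensor, producing local tracks that are then fused. This is a minimal sketch, assuming a one-dimensional, slowly varying state (e.g., range to a single target), hypothetical measurement and process variances, and independent local estimation errors; practical track-to-track fusion also has to account for cross-correlation between tracks, which is ignored here.

```python
def kalman_1d(measurements, meas_var, process_var=1e-4):
    """Scalar Kalman filter for a slowly varying state; returns (estimate, variance)."""
    x, p = measurements[0], meas_var  # initialise from the first measurement
    for z in measurements[1:]:
        p += process_var              # predict: uncertainty grows between samples
        k = p / (p + meas_var)        # Kalman gain
        x += k * (z - x)              # update: correct towards the measurement
        p *= 1.0 - k                  # updated (reduced) variance
    return x, p


def fuse_tracks(tracks):
    """Combine local (estimate, variance) tracks, assuming independent errors."""
    information = sum(1.0 / p for _, p in tracks)
    estimate = sum(x / p for x, p in tracks) / information
    return estimate, 1.0 / information


# Hypothetical local trackers: range to one target (m) from two sensors.
ir_track = kalman_1d([5.2, 4.9, 5.1, 5.0], meas_var=0.09)
radar_track = kalman_1d([5.02, 4.98, 5.01, 4.99], meas_var=0.01)
fused = fuse_tracks([ir_track, radar_track])
print(ir_track, radar_track, fused)
```

The fused estimate leans towards the Radar track because its local variance is smaller.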

The hybrid SDT architecture unifies the attributes of the centralised and distributed architectures. Its capabilities depend on the computational workload, communication, and accuracy requirements. The hybrid SDT retains centralised-architecture characteristics such as accurate data association, data tracking, and direct logic implementation. Nevertheless, it is more complex and requires more data transfer between the central and local trackers than either the centralised or the distributed architecture. SDT architectures can be implemented using machine learning (ML) and data mining (DM) algorithms.

1.2. Data Mining Concepts

DM is an iterative process for the exploratory analysis of unstructured, multi-feature, and varied datasets. It uses machine learning, deep learning, and statistical algorithms to determine patterns, clusters, and classes in a dataset [8]. The two standard types of analysis performed with DM tools are descriptive and predictive [9]. Whilst descriptive analysis seeks to identify patterns in a dataset, predictive analysis uses some variables in a dataset to predict unknown or future values of other variables [10].

DM can also be categorised into tasks, models, and methods. Task-based DM seeks to discover rules, perform predictive and descriptive modelling, and retrieve content of interest. DM methods include clustering, classification, association, and time-series analysis [11][12]. Clustering is often used in descriptive analysis, whereas classification is typically associated with predictive analysis [10].

In DM, classification and clustering are closely related but distinct. Classification is a supervised machine learning approach that assigns instances to predefined classes or labels. Clustering, on the other hand, groups unlabelled data based on the similarity of instances, that is, on the inherent characteristics of the dataset [10]. Table 1 presents an overview of classification and clustering techniques.

Table 1. Classification and clustering techniques in data mining.

| Classification Techniques | Application of Classification Techniques | Clustering Techniques | Application of Clustering Techniques |
|---|---|---|---|
| Neural Network | E.g., stock market prediction [13] | Partition-based | E.g., medical dataset analysis [14] |
| Decision Tree | E.g., banking and finance [15] | Model-based | E.g., multivariate Gaussian mixture model [16] |
| Support Vector Machine | E.g., big data analysis [17] | Grid-based | E.g., large-scale computation [18] |
| Association-based | E.g., high-dimensional problems [19] | Density-based | Applications with noise, e.g., DBSCAN [20] |
| Bayesian | E.g., retrosynthesis [21] | Hierarchy-based | E.g., mood and abnormal activity prediction [22][23] |
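To illustrate classification into predefined classes, the sketch below implements a Gaussian naive Bayes classifier, one simple member of the Bayesian family listed in Table 1. The feature names, labels and data are hypothetical. A clustering algorithm, by contrast, would receive `X` without the labels `y`.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Estimate class priors and per-feature Gaussian means/variances."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    model = {}
    for label, rows in groups.items():
        columns = list(zip(*rows))
        means = [sum(col) / len(rows) for col in columns]
        variances = [
            sum((v - m) ** 2 for v in col) / len(rows) + 1e-9  # floor avoids /0
            for col, m in zip(columns, means)
        ]
        model[label] = (len(rows) / len(X), means, variances)
    return model


def predict_gaussian_nb(model, row):
    """Return the predefined class with the highest log posterior."""
    def log_posterior(label):
        prior, means, variances = model[label]
        score = math.log(prior)
        for x, m, v in zip(row, means, variances):
            score -= 0.5 * math.log(2 * math.pi * v) + (x - m) ** 2 / (2 * v)
        return score
    return max(model, key=log_posterior)


# Hypothetical labelled data: [motion_level, temperature_change] per time window.
X = [[0.9, 1.2], [0.8, 1.0], [1.0, 1.4], [0.1, 0.2], [0.2, 0.1], [0.15, 0.3]]
y = ["active", "active", "active", "resting", "resting", "resting"]
model = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(model, [0.85, 1.1]), predict_gaussian_nb(model, [0.1, 0.25]))
# prints: active resting
```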

Data clustering techniques such as partition-based, model-based, grid-based, density-based, and hierarchical clustering can be used for data grouping [8]. Whilst the density-based approach is centred on discovering arbitrarily shaped (non-linear) cluster structures in datasets, model-based methods fit a statistical or neural-network model (e.g., a Gaussian mixture model) to the data, and grid-based methods quantise the data space into a grid of cells. The Hierarchical Clustering Technique (HCT) represents datasets as binary trees (dendrograms) based on the similarity of instances, and it accommodates sub-clusters in nested arrangements. The two main approaches in the HCT are divisive and agglomerative [24].
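As a sketch of the agglomerative approach, the code below starts with one cluster per instance and repeatedly merges the two closest clusters, recording each merge (the information a dendrogram displays). Single linkage is an assumption chosen for brevity; other linkage criteria (complete, average, Ward) are also common, and the divisive approach is not shown. The points are hypothetical.

```python
import math


def agglomerative(points, n_clusters):
    """Single-linkage agglomerative clustering.

    Returns the final clusters (lists of point indices) and the merge history,
    which is the information a dendrogram (binary tree) would display.
    """
    if not 1 <= n_clusters <= len(points):
        raise ValueError("n_clusters must be between 1 and len(points)")
    clusters = [[i] for i in range(len(points))]  # start: one cluster per point
    merges = []

    def linkage(a, b):
        # Single linkage: distance between the closest members of two clusters.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = linkage(clusters[a], clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        clusters[a] = clusters[a] + clusters[b]  # merge the closest pair
        del clusters[b]                          # b > a, so index a stays valid
    return clusters, merges


points = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
clusters, merges = agglomerative(points, n_clusters=3)
print(clusters)  # prints: [[0, 1], [2, 3], [4]]
```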

The Partitioning Clustering Technique (PCT) groups data by optimising an objective function [8]. The PCT is a non-hierarchical technique that iteratively refines the partition to improve the quality of the formed groups. A popular algorithm in PCT is the K-Means++ Algorithm (KMA) [24][25]. The KMA exploits structure uncovered in the dataset to increase the similarity of instances within each cluster. It also reduces data complexity by minimising the within-cluster variance [25].
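The "++" in k-means++ refers to a seeding strategy that picks well-spread initial centroids for the standard (Lloyd's) k-means iteration. The sketch below combines the two in plain Python; the function names and data are hypothetical, and `within_cluster_ss` computes the within-cluster sum of squares that the iteration reduces.

```python
import math
import random


def kmeans_pp_init(points, k, rng):
    """k-means++ seeding: later centroids favour points far from earlier ones."""
    centroids = [rng.choice(points)]
    while len(centroids) < k:
        # Squared distance from each point to its nearest chosen centroid.
        d2 = [min(math.dist(p, c) ** 2 for c in centroids) for p in points]
        r = rng.random() * sum(d2)
        acc = 0.0
        for p, w in zip(points, d2):
            acc += w
            if acc >= r:
                centroids.append(p)
                break
    return centroids


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's k-means with k-means++ seeding; returns (labels, centroids)."""
    rng = random.Random(seed)
    centroids = kmeans_pp_init(points, k, rng)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return labels, centroids


def within_cluster_ss(points, labels, centroids):
    """The objective k-means reduces: total squared distance to centroids."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))


points = [(1.0, 1.0), (1.5, 2.0), (1.2, 1.4), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)]
labels, centroids = kmeans(points, k=2)
print(labels, round(within_cluster_ss(points, labels, centroids), 3))
```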

2. Object Detection

Kim et al. [26] proposed a Radar and infrared sensor fusion system for object detection based on a Radar ranging concept, which required a calibrated infrared camera and the Levenberg–Marquardt optimisation method. The dual sensors in [26] were used to compensate for the deficiencies of each individual sensor. The fusion system was implemented on a car, with magenta and green cross marks positioned at different distances serving as calibration points. The fusion-based approach was reported to perform 13 times better than the baseline methods. Work in [27] proposed the fusion of LiDAR and vision sensors for object detection in a multi-channel vehicle-to-everything (V2X) system. The fusion algorithm enabled image calibration to remove distortion. The study reported improved communication reliability and stability compared with non-fusion-based approaches.
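The Levenberg–Marquardt (LM) method solves non-linear least-squares problems by blending Gauss–Newton steps with gradient-descent steps. The sketch below applies LM to a deliberately simplified, hypothetical calibration problem: estimating an infrared camera's focal length `f` and an angular misalignment `delta` relative to the Radar, from targets whose Radar azimuth and image column are both known. The camera model, parameter names and synthetic targets are assumptions, not the procedure used in [26].

```python
import math


def lm_calibrate(thetas, us, f0, delta0, max_iter=50):
    """Levenberg-Marquardt fit of a hypothetical model u = f * tan(theta + delta).

    thetas: Radar azimuths of calibration targets (rad).
    us: the same targets' image columns relative to the principal point (px).
    Returns the estimated focal length f (px) and misalignment delta (rad).
    """
    def cost(f, delta):
        return sum((f * math.tan(t + delta) - u) ** 2 for t, u in zip(thetas, us))

    f, delta, lam = f0, delta0, 1e-3
    current = cost(f, delta)
    for _ in range(max_iter):
        # Accumulate J^T J (symmetric 2x2) and J^T r from the residuals r_i.
        a11 = a12 = a22 = g1 = g2 = 0.0
        for t, u in zip(thetas, us):
            tan_td = math.tan(t + delta)
            r = f * tan_td - u
            j_f = tan_td                        # dr/df
            j_d = f / math.cos(t + delta) ** 2  # dr/ddelta
            a11 += j_f * j_f
            a12 += j_f * j_d
            a22 += j_d * j_d
            g1 += j_f * r
            g2 += j_d * r
        # Damped normal equations (J^T J + lam * diag(J^T J)) step = -J^T r,
        # solved for the two unknowns with Cramer's rule.
        b11, b22 = a11 * (1 + lam), a22 * (1 + lam)
        det = b11 * b22 - a12 * a12
        step_f = (-g1 * b22 + g2 * a12) / det
        step_d = (-g2 * b11 + g1 * a12) / det
        trial = cost(f + step_f, delta + step_d)
        if trial < current:  # accept: move towards Gauss-Newton behaviour
            f, delta, current = f + step_f, delta + step_d, trial
            lam /= 10
        else:                # reject: move towards gradient descent
            lam *= 10
        if current < 1e-12:
            break
    return f, delta


# Synthetic, noise-free targets generated with f = 800 px and delta = 0.05 rad.
thetas = [-0.4 + 0.1 * i for i in range(9)]
us = [800 * math.tan(t + 0.05) for t in thetas]
f_est, delta_est = lm_calibrate(thetas, us, f0=600.0, delta0=0.0)
print(round(f_est, 3), round(delta_est, 5))
```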

3. Automobile Systems

In automated vehicles with driver assist systems, data from front-facing sensors such as vision cameras, LiDAR, Radar, and infrared sensors are combined for collision avoidance and for pedestrian, obstacle, distance, and speed detection [28]. Multi-sensor fusion adds redundancy to the measured parameters and thus improves safety, since measurements are inferred from multiple sensors before actions are taken. A multimodal advanced driver assist system simultaneously monitors the driver and the road to predict risky behaviours that can result in road accidents [28]. Other LiDAR-based sensor fusion research has used vision sensors to enhance environmental visualisation [29].
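The redundancy argument can be sketched as a simple voting rule in which an action is taken only when several sensors agree. The sensor names, the 10 m threshold and the two-sensor agreement rule below are hypothetical choices for illustration, not taken from [28].

```python
from statistics import median


def fused_distance(readings):
    """Median of the available distance readings (m); None means no detection."""
    valid = [d for d in readings.values() if d is not None]
    return median(valid) if valid else None


def should_brake(readings, threshold_m=10.0, min_agree=2):
    """Trigger only if at least `min_agree` sensors see an obstacle within range."""
    close = sum(1 for d in readings.values() if d is not None and d < threshold_m)
    return close >= min_agree


# Hypothetical readings (m) from front-facing sensors in one time step.
pedestrian = {"camera": 8.4, "lidar": 8.1, "radar": 8.3, "infrared": None}
ghost = {"camera": 6.0, "lidar": None, "radar": 45.0, "infrared": 44.0}
print(fused_distance(pedestrian), should_brake(pedestrian), should_brake(ghost))
# prints: 8.3 True False
```

In the second case, a single close detection (possibly a false positive) is outvoted, so no braking is triggered.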

4. Healthcare Applications

Chen and Wang [30] investigated the fusion of an ultrasonic and an infrared sensor using the Support Vector Machine (SVM) learning approach. The study used SDT to improve fall detection accuracy by more than 20% compared with a stand-alone sensor during continuous data acquisition. Kovács and Nagy [31] investigated an ultrasonic echolocation-based aid for the visually impaired, using a mathematical model that allows any number of sensors to be fused, regardless of their positions or arrangement. Huang et al. [32] proposed fusing images from a depth sensor and a hyperspectral camera to improve high-throughput phenotyping. Initial results indicated that the fused data were more accurate and could improve the precision of the process. Other studies on the fusion of depth sensors with other SSs can be found in [33][34][35]. The work in [36] measured gait parameters of people with Parkinson’s disease by fusing depth and vision sensor data, achieving an accuracy of more than 90%. In Kepski and Kwolek [37], data from a body-worn accelerometer were fused with features extracted from depth maps to detect falls in older adults. The proposed method was reported to be efficient and reliable, demonstrating the benefits of sensor fusion. Work in [38] proposed the fusion of an RGB-depth sensor and a millimetre-wave Radar sensor to assist the visually impaired. Experimental results showed that fusion extended the effective range of the sensors and, more importantly, enabled the detection of multiple objects at different angles.
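Several of these studies combine features from different sensors before classification. The sketch below illustrates feature-level fusion followed by a linear SVM, in the spirit of [30] but not reproducing its pipeline: the feature names and toy data are hypothetical, and the SVM is trained with a Pegasos-style stochastic sub-gradient method, chosen here only because it fits in a few lines of plain Python.

```python
import random


def standardise(rows):
    """Scale each feature column to zero mean and unit variance."""
    stats = []
    for col in zip(*rows):
        mean = sum(col) / len(col)
        std = (sum((v - mean) ** 2 for v in col) / len(col)) ** 0.5 or 1.0
        stats.append((mean, std))
    return [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]


def fuse_features(infrared_rows, ultrasonic_rows):
    """Feature-level fusion: concatenate each window's features from both sensors."""
    return [ir + us for ir, us in zip(infrared_rows, ultrasonic_rows)]


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Pegasos-style stochastic sub-gradient training of a linear SVM.

    Labels must be +1/-1. A constant 1.0 feature is appended as a bias term
    (regularised together with the other weights, a simplification).
    """
    rng = random.Random(seed)
    data = [(x + [1.0], t) for x, t in zip(X, y)]
    w = [0.0] * len(data[0][0])
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            step += 1
            eta = 1.0 / (lam * step)
            margin = t * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink (regularisation)
            if margin < 1.0:                           # hinge loss is active
                w = [wi + eta * t * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    score = sum(wi * xi for wi, xi in zip(w, x + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical windows: infrared [heat_change, blob_height], ultrasonic [range_change].
infrared = [[0.2, 1.7], [0.3, 1.6], [0.1, 1.8], [1.5, 0.3], [1.4, 0.4], [1.6, 0.2]]
ultrasonic = [[0.1], [0.2], [0.1], [1.2], [1.1], [1.3]]
labels = [-1, -1, -1, 1, 1, 1]  # +1 = fall, -1 = no fall
X = standardise(fuse_features(infrared, ultrasonic))
w = train_linear_svm(X, labels)
accuracy = sum(predict(w, x) == t for x, t in zip(X, labels)) / len(X)
print(accuracy)
```

The accuracy here is measured on the training windows only; a real evaluation would use held-out data.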

5. Cluster-Based Analysis

The integration of SDT algorithms with ML and DM models can help predict risky behaviours and accidents [30][39][40][41][42]. Work in [43] used Cluster-Based Analysis (CBA) for data-driven segmentation of older patients receiving hip fracture care in Ireland. Experimental results from the study suggested three distinct clusters with respect to patients’ characteristics and care-related issues. In [44], CBA was used to cluster healthcare records according to attributes such as illness and treatment method. A combined method that included CBA for user activity recognition in smart homes was proposed in [45]. Experimental results indicated higher activity recognition probabilities owing to the combination of K-pattern clustering and an artificial neural network. The works in [46][47][48] applied the CBA method to health-related data analysis, and their results indicated that the method is suitable for identifying and recognising patterns in datasets.

References

1. Raol, J.R. Multi-Sensor Data Fusion with MATLAB; CRC Press: Boca Raton, FL, USA, 2013; Volume 106, ISBN 9781439800058.
2. Chen, C.Y.; Li, C.; Fiorentino, M.; Palermo, S. A LIDAR Sensor Prototype with Embedded 14-Bit 52 Ps Resolution ILO-TDC Array. Analog Integr. Circuits Signal Process. 2018, 94, 369–382.
3. Al-Dhaher, A.H.G.; Mackesy, D. Multi-Sensor Data Fusion Architecture. In Proceedings of the 3rd IEEE International Workshop on Haptic, Audio and Visual Environments and their Applications (HAVE 2004), Ottawa, ON, Canada, 2–3 October 2004; pp. 159–163.
4. Lytrivis, P.; Thomaidis, G.; Amditis, A. Sensor Data Fusion in Automotive Applications; Intech: London, UK, 2009; Volume 490.
5. Dhiraj, A.; Deepa, P. Sensors and Their Applications. J. Phys. E 2012, 1, 60–68.
6. Elmenreich, W.; Leidenfrost, R. Fusion of Heterogeneous Sensors Data. In Proceedings of the 6th Workshop on Intelligent Solutions in Embedded Systems (WISES’08), Regensburg, Germany, 10–11 July 2008.
7. Nobili, S.; Camurri, M.; Barasuol, V.; Focchi, M.; Caldwell, D.G.; Semini, C.; Fallon, M. Heterogeneous Sensor Fusion for Accurate State Estimation of Dynamic Legged Robots. In Proceedings of the 13th Robotics: Science and Systems 2017, Cambridge, MA, USA, 12–16 July 2017.
8. King, R.S. Cluster Analysis and Data Mining; David Pallai: Dulles, VA, USA, 2015; ISBN 9781938549380.
9. Ashraf, I. Data Mining Algorithms and Their Applications in Education Data Mining. Int. J. Adv. Res. 2014, 2, 50–56.
10. Kantardzic, M. Data Mining: Concepts, Models, Methods, and Algorithms, 3rd ed.; IEEE Press: Piscataway, NJ, USA, 2020; Volume 36, ISBN 9781119516040.
11. Sadoughi, F.; Ghaderzadeh, M. A Hybrid Particle Swarm and Neural Network Approach for Detection of Prostate Cancer from Benign Hyperplasia of Prostate. Stud. Health Technol. Inform. 2014, 205, 481–485.
12. Ghaderzadeh, M. Clinical Decision Support System for Early Detection of Prostate Cancer from Benign Hyperplasia of Prostate. Stud. Health Technol. Inform. 2013, 192, 928.
13. Mizuno, H.; Kosaka, M.; Yajima, H. Application of Neural Network to Technical Analysis of Stock Market Prediction. In Proceedings of the 2022 3rd International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 27–29 April 2022; pp. 302–306.
14. Dharmarajan, A.; Velmurugan, T. Applications of Partition Based Clustering Algorithms: A Survey. In Proceedings of the 2013 IEEE International Conference on Computational Intelligence and Computing Research, Enathi, India, 26–28 December 2013; pp. 1–5.
15. Kotsiantis, S.B. Decision Trees: A Recent Overview. Artif. Intell. Rev. 2013, 39, 261–283.
16. Banerjee, A.; Shan, H. Model-Based Clustering. In Encyclopedia of Machine Learning; Sammut, C., Webb, G.I., Eds.; Springer: Boston, MA, USA, 2010; pp. 686–689. ISBN 978-0-387-30164-8.
17. Suthaharan, S. Support Vector Machine. In Machine Learning Models and Algorithms for Big Data Classification: Thinking with Examples for Effective Learning; Suthaharan, S., Ed.; Springer: Boston, MA, USA, 2016; pp. 207–235. ISBN 978-1-4899-7641-3.
18. Aouad, L.M.; An-Lekhac, N.; Kechadi, T. Grid-Based Approaches for Distributed Data Mining Applications. J. Algorithm. Comput. Technol. 2009, 3, 517–534.
19. Alcalá-Fdez, J.; Alcalá, R.; Herrera, F. A Fuzzy Association Rule-Based Classification Model for High-Dimensional Problems with Genetic Rule Selection and Lateral Tuning. IEEE Trans. Fuzzy Syst. 2011, 19, 857–872.
20. Mumtaz, K.; Studies, M.; Nadu, T. An Analysis on Density Based Clustering of Multi Dimensional Spatial Data. Indian J. Comput. Sci. Eng. 2010, 1, 8–12.
21. Guo, Z.; Wu, S.; Ohno, M.; Yoshida, R. Bayesian Algorithm for Retrosynthesis. J. Chem. Inf. Model. 2020, 60, 4474–4486.
22. Ekerete, I.; Garcia-Constantino, M.; Konios, A.; Mustafa, M.A.; Diaz-Skeete, Y.; Nugent, C.; McLaughlin, J. Fusion of Unobtrusive Sensing Solutions for Home-Based Activity Recognition and Classification Using Data Mining Models and Methods. Appl. Sci. 2021, 11, 9096.
23. Märzinger, T.; Kotík, J.; Pfeifer, C. Application of Hierarchical Agglomerative Clustering (HAC) for Systemic Classification of Pop-up Housing (PUH) Environments. Appl. Sci. 2021, 11, 1122.
24. Oyelade, J.; Isewon, I.; Oladipupo, O.; Emebo, O.; Omogbadegun, Z.; Aromolaran, O.; Uwoghiren, E.; Olaniyan, D.; Olawole, O. Data Clustering: Algorithms and Its Applications. In Proceedings of the 2019 19th International Conference on Computational Science and Its Applications (ICCSA), St. Petersburg, Russia, 1–4 July 2019; pp. 71–81.
25. Morissette, L.; Chartier, S. The K-Means Clustering Technique: General Considerations and Implementation in Mathematica. Tutor. Quant. Methods Psychol. 2013, 9, 15–24.
26. Kim, T.; Kim, S.; Lee, E.; Park, M. Comparative Analysis of RADAR-IR Sensor Fusion Methods for Object Detection. In Proceedings of the 2017 17th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 18–21 October 2017; pp. 1576–1580.
27. Lee, G.H.; Choi, J.D.; Lee, J.H.; Kim, M.Y. Object Detection Using Vision and LiDAR Sensor Fusion for Multi-Channel V2X System. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC 2020); Institute of Electrical and Electronics Engineers Inc.: Fukuoka, Japan, 19–21 February 2020; pp. 1–5.
28. Rezaei, M. Computer Vision for Road Safety: A System for Simultaneous Monitoring of Driver Behaviour and Road Hazards. Ph.D. Thesis, University of Auckland, Auckland, New Zealand, 2014.
29. De Silva, V.; Roche, J.; Kondoz, A. Fusion of LiDAR and Camera Sensor Data for Environment Sensing in Driverless Vehicles. arXiv 2018, arXiv:1710.06230v2.
30. Chen, Z.; Wang, Y. Infrared–Ultrasonic Sensor Fusion for Support Vector Machine–Based Fall Detection. J. Intell. Mater. Syst. Struct. 2018, 29, 2027–2039.
31. Kovács, G.; Nagy, S. Ultrasonic Sensor Fusion Inverse Algorithm for Visually Impaired Aiding Applications. Sensors 2020, 20, 3682.
32. Huang, P.; Luo, X.; Jin, J.; Wang, L.; Zhang, L.; Liu, J.; Zhang, Z. Improving High-Throughput Phenotyping Using Fusion of Close-Range Hyperspectral Camera and Low-Cost Depth Sensor. Sensors 2018, 18, 2711.
33. Liu, X.; Payandeh, S. A Study of Chained Stochastic Tracking in RGB and Depth Sensing. J. Control Sci. Eng. 2018, 2018, 2605735.
34. Kanwal, N.; Bostanci, E.; Currie, K.; Clark, A.F. A Navigation System for the Visually Impaired: A Fusion of Vision and Depth Sensor. Appl. Bionics Biomech. 2015, 2015, 479857.
35. Shao, F.; Lin, W.; Li, Z.; Jiang, G.; Dai, Q. Toward Simultaneous Visual Comfort and Depth Sensation Optimization for Stereoscopic 3-D Experience. IEEE Trans. Cybern. 2017, 47, 4521–4533.
36. Procházka, A.; Vyšata, O.; Vališ, M.; Ťupa, O.; Schätz, M.; Mařík, V. Use of the Image and Depth Sensors of the Microsoft Kinect for the Detection of Gait Disorders. Neural Comput. Appl. 2015, 26, 1621–1629.
37. Kepski, M.; Kwolek, B. Event-Driven System for Fall Detection Using Body-Worn Accelerometer and Depth Sensor. IET Comput. Vis. 2018, 12, 48–58.
38. Long, N.; Wang, K.; Cheng, R.; Yang, K.; Hu, W.; Bai, J. Assisting the Visually Impaired: Multitarget Warning through Millimeter Wave Radar and RGB-Depth Sensors. J. Electron. Imaging 2019, 28, 013028.
39. Salcedo-Sanz, S.; Ghamisi, P.; Piles, M.; Werner, M.; Cuadra, L.; Moreno-Martínez, A.; Izquierdo-Verdiguier, E.; Muñoz-Marí, J.; Mosavi, A.; Camps-Valls, G. Machine Learning Information Fusion in Earth Observation: A Comprehensive Review of Methods, Applications and Data Sources. Inf. Fusion 2020, 63, 256–272.
40. Chang, N.-B.; Bai, K. Multisensor Data Fusion and Machine Learning for Environmental Remote Sensing; CRC Press: Boca Raton, FL, USA, 2018; 508p.
41. Bowler, A.L.; Bakalis, S.; Watson, N.J. Monitoring Mixing Processes Using Ultrasonic Sensors and Machine Learning. Sensors 2020, 20, 1813.
42. Madeira, R.; Nunes, L. A Machine Learning Approach for Indirect Human Presence Detection Using IOT Devices. In Proceedings of the 2016 11th International Conference on Digital Information Management (ICDIM 2016), Porto, Portugal, 19–21 September 2016; pp. 145–150.
43. Elbattah, M.; Molloy, O. Data-Driven Patient Segmentation Using K-Means Clustering: The Case of Hip Fracture Care in Ireland. In Proceedings of the Australasian Computer Science Week Multiconference (ACSW ’17), Geelong, Australia, 30 January–3 February 2017; pp. 3–10.
44. Samriya, J.K.; Kumar, S.; Singh, S. Efficient K-Means Clustering for Healthcare Data. Adv. J. Comput. Sci. Eng. 2016, 4, 1–7.
45. Bourobou, S.T.M.; Yoo, Y. User Activity Recognition in Smart Homes Using Pattern Clustering Applied to Temporal ANN Algorithm. Sensors 2015, 15, 11953–11971.
46. Liao, M.; Li, Y.; Kianifard, F.; Obi, E.; Arcona, S. Cluster Analysis and Its Application to Healthcare Claims Data: A Study of End-Stage Renal Disease Patients Who Initiated Hemodialysis Epidemiology and Health Outcomes. BMC Nephrol. 2016, 17, 25.
47. Ekerete, I.; Garcia-Constantino, M.; Diaz, Y.; Nugent, C.; Mclaughlin, J. Fusion of Unobtrusive Sensing Solutions for Sprained Ankle Rehabilitation Exercises Monitoring in Home Environments. Sensors 2021, 21, 7560.
48. Negi, N.; Chawla, G. Clustering Algorithms in Healthcare. In Intelligent Healthcare: Applications of AI in EHealth; Bhatia, S., Dubey, A.K., Chhikara, R., Chaudhary, P., Kumar, A., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 211–224. ISBN 978-3-030-67051-1.
