| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Tanjim Mahmud | -- | 2848 | 2023-11-15 12:19:42 |
| 2 | Camila Xu | -185 word(s) | 2663 | 2023-11-16 01:25:37 |
| 3 | Camila Xu | Meta information modification | 2663 | 2023-11-16 01:25:56 |
| 4 | Camila Xu | -166 word(s) | 2497 | 2023-11-17 09:49:24 |


The proliferation of the internet, especially social media platforms, has amplified the prevalence of cyberbullying and harassment. Addressing this issue involves harnessing natural language processing (NLP) and machine learning (ML) techniques to detect harmful content automatically. However, these methods encounter challenges when applied to low-resource languages such as the Chittagonian dialect of Bangla. This entry compares two approaches for identifying offensive language containing vulgar remarks in Chittagonian. The first relies on basic keyword matching, while the second employs machine learning and deep learning techniques. The keyword-matching approach scans the text for vulgar words using a predefined lexicon. Despite its simplicity, this method provides a strong foundation for the more sophisticated ML and deep learning approaches. Its main drawback is that the lexicon needs constant updating.
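The keyword-matching approach described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method used in the underlying study: the lexicon entries `vulgar1` and `vulgar2` are hypothetical placeholders, and the function names and the whitespace-based tokenization are choices made here for illustration only.

```python
import string

# Hypothetical placeholder entries (assumption); a real lexicon would list
# Chittagonian vulgar words collected and maintained by annotators.
VULGAR_LEXICON = frozenset({"vulgar1", "vulgar2"})

# ASCII punctuation plus the Bangla danda (U+0964), trimmed from token edges.
_EDGE_PUNCT = string.punctuation + "\u0964"


def tokenize(text):
    """Split on whitespace, trim edge punctuation, and lowercase each token."""
    tokens = (tok.strip(_EDGE_PUNCT).lower() for tok in text.split())
    return [tok for tok in tokens if tok]


def find_vulgar_words(text, lexicon=VULGAR_LEXICON):
    """Return the tokens of `text` that appear in the lexicon, in order."""
    return [tok for tok in tokenize(text) if tok in lexicon]


def is_vulgar(text, lexicon=VULGAR_LEXICON):
    """Flag a remark as vulgar if at least one token is in the lexicon."""
    return bool(find_vulgar_words(text, lexicon))
```

Tokens are split on whitespace rather than with a `\w+` regular expression because Python's `\w` does not match Bangla combining vowel signs and would break words apart. The sketch also shows why the lexicon needs constant updating: any spelling variant or new word that is not listed passes through undetected.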

1. Introduction

2. Automatic Vulgar Word Extraction Method with Application to Vulgar Remark Detection in Chittagonian Dialect of Bangla

| Paper | Classifier | Highest Score | Language | Sample Size | Class and Ratio | Data Sources |
|---|---|---|---|---|---|---|
| [24] | Multinomial Naive Bayes, Random Forest, Support Vector Machines | 80% (Accuracy) | Bengali | 2.5 K | - | F |
| [25] | Support Vector Machines, Naive Bayes, Decision Tree, K-Nearest Neighbors | 97% (Accuracy) | Bengali | 2.4 K | Non-Bullying, Bullying (10%) | F, T |
| [26] | Linear Support Vector Classification, Logistic Regression, Multinomial Naive Bayes, Random Forest, Artificial Neural Network, RNN + LSTM | 82.2% (Accuracy) | Bengali | 4.7 K | Slang (19.57%), Religious, Politically, Positive, Neutral, violated (13.28%), Anti-feminism (0.87%), Hatred (13.15%), Personal attack (12.36%) | F, Y, News portal |
| [27] | Naive Bayes | 80.57% (Accuracy) | Bengali | 2.665 K | Non-Abusive, Abusive (45.55%) | Y |
| [28] | Root-Level approach | 68.9% (Accuracy) | Bengali | 300 | Not Bullying, Bullying | F, Y, News portal |
| [30] | Logistic Regression, Support Vector Machines, Stochastic Gradient Descent, Bidirectional LSTM | 89.3% (F1 Score), 82.4% (F1 Score) | Bengali | 7.245 K | Non Vulgar, Vulgar | Y |
| [31] | Support Vector Machines, Random Forest, Adaboost | 72.14% (Accuracy), 80% (Precision) | Bengali | 2 K | Non Abusive, Abusive (78.41%) | F |
| [32] | Gated Recurrent Units, Support Vector Classification, LinearSVC, Random Forest, Naive Bayes | 70.1% (Accuracy) | Bengali | 5.126 K | Religious comment (14.9%), Hate speech (19.2%), Inciteful (10.77%), Communal hatred (15.67%), Religious hatred (15.68%), Political comment (23.43%) | F |
| [33] | Logistic Regression, Support Vector Machines, Convolutional Neural Network, Bidirectional LSTM, BERT, LSTM | 78%, 91%, 89%, 84% (F1 Score) | Bengali | 8.087 K | Personal (43.44%), Religious (14.97%), Geopolitical (29.23%), Political (12.35%) | F |
| [34] | Logistic Regression, Support Vector Machines, Random Forest, Bidirectional LSTM | 82.7% (F1 Score) | Bengali | 3 K | Non abusive, Abusive (10%) | Y |
| [36] | Long Short-term Memory, Bidirectional LSTM | 87.5% (Accuracy) | Bengali | 30 K | Not Hate speech, Hate speech (33.33%) | F, Y |
| [37] | Multinomial Naive Bayes, Multilayer Perceptron, Support Vector Machines, Decision Tree, Random Forest, Stochastic Gradient Descent, K-Nearest Neighbors | 88% (Accuracy) | Bengali | 9.76 K | Non Abusive, Abusive (50%) | F, Y |
| [38] | ELECTRA, Deep Neural Network, BERT | 85% (Accuracy) (BERT), 84.92% (Accuracy) (ELECTRA) | Bengali | 44.001 K | Troll (23.78%), Religious (17.22%), Sexual (20.29%), Not Bully (34.86%), Threat (3.85%) | F |
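The scores in the table mix accuracy, precision, and F1, which are not directly comparable. As a reading aid, the sketch below computes each metric from the four cells of a binary confusion matrix using the standard definitions. The function name and the counts in the usage note are hypothetical and are not taken from any of the cited papers.

```python
def classification_scores(tp, fp, fn, tn):
    """Compute standard binary metrics from confusion-matrix counts.

    tp/fp/fn/tn are true positives, false positives, false negatives,
    and true negatives. Undefined ratios (zero denominator) become 0.0.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts: 100 remarks, 10 of them abusive, and a classifier
# that labels every remark as non-abusive.
always_negative = classification_scores(tp=0, fp=0, fn=10, tn=90)
```

With these made-up counts, `always_negative["accuracy"]` is 0.9 while `always_negative["f1"]` is 0.0. This is one reason to read accuracy figures with care when the class ratio column shows a small minority class.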

References

- Bangladesh Telecommunication Regulatory Commission. Available online: http://www.btrc.gov.bd/site/page/347df7fe-409f-451e-a415-65b109a207f5/- (accessed on 15 January 2023).

- United Nations Development Programme. Available online: https://www.undp.org/bangladesh/blog/digital-bangladesh-innovative-bangladesh-road-2041 (accessed on 20 January 2023).

- Chittagong City in Bangladesh. Available online: https://en.wikipedia.org/wiki/Chittagong (accessed on 1 April 2023).

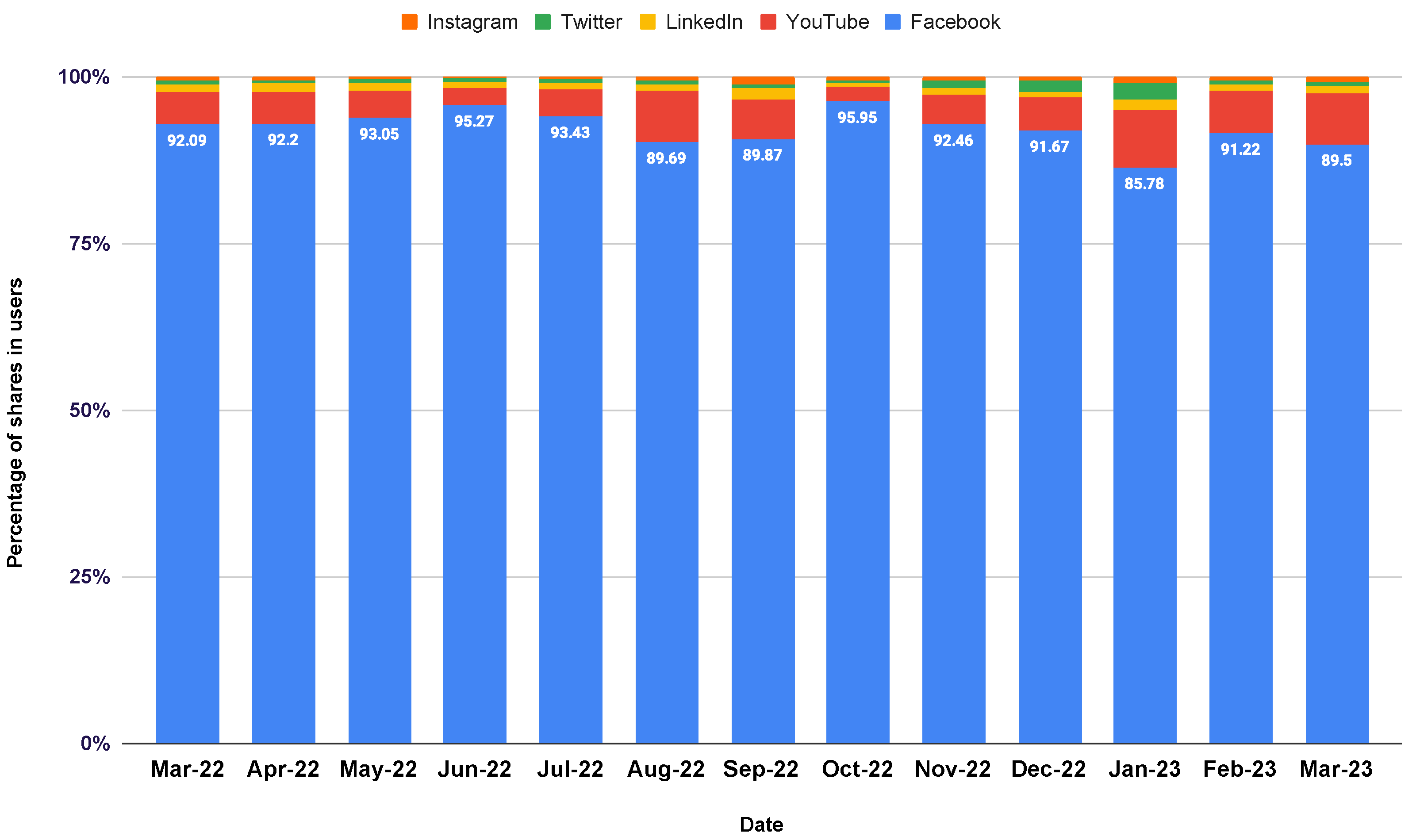

- StatCounter Global Stats. Available online: https://gs.statcounter.com/social-media-stats/all/bangladesh/#monthly-202203-202303 (accessed on 24 April 2023).

- Facebook. Available online: https://www.facebook.com/ (accessed on 28 January 2023).

- imo. Available online: https://imo.im (accessed on 28 January 2023).

- WhatsApp. Available online: https://www.whatsapp.com (accessed on 28 January 2023).

- Addiction Center. Available online: https://www.addictioncenter.com/drugs/social-media-addiction/ (accessed on 28 January 2023).

- Prothom Alo. Available online: https://en.prothomalo.com/bangladesh/Youth-spend-80-mins-a-day-in-Internet-adda (accessed on 28 January 2023).

- United Nations. Available online: https://www.un.org/en/chronicle/article/cyberbullying-and-its-implications-human-rights (accessed on 28 January 2023).

- ACCORD—African Centre for the Constructive Resolution of Disputes. Available online: https://www.accord.org.za/conflict-trends/social-media/ (accessed on 28 January 2023).

- Cachola, I.; Holgate, E.; Preoţiuc-Pietro, D.; Li, J.J. Expressively vulgar: The socio-dynamics of vulgarity and its effects on sentiment analysis in social media. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 2927–2938.

- Wang, N. An analysis of the pragmatic functions of “swearing” in interpersonal talk. Griffith Work. Pap. Pragmat. Intercult. Commun. 2013, 6, 71–79.

- Mehl, M.R.; Vazire, S.; Ramírez-Esparza, N.; Slatcher, R.B.; Pennebaker, J.W. Are women really more talkative than men? Science 2007, 317, 82.

- Wang, W.; Chen, L.; Thirunarayan, K.; Sheth, A.P. Cursing in English on twitter. In Proceedings of the 17th ACM Conference on Computer Supported Cooperative Work & Social Computing, Baltimore, MD, USA, 15–19 February 2014; pp. 415–425.

- Holgate, E.; Cachola, I.; Preoţiuc-Pietro, D.; Li, J.J. Why swear? Analyzing and inferring the intentions of vulgar expressions. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 4405–4414.

- Sazzed, S. A lexicon for profane and obscene text identification in Bengali. In Proceedings of the International Conference on Recent Advances in Natural Language Processing (RANLP 2021), Online, 1–3 September 2021; pp. 1289–1296.

- Das, S.; Mahmud, T.; Islam, D.; Begum, M.; Barua, A.; Tarek Aziz, M.; Nur Showan, E.; Dey, L.; Chakma, E. Deep Transfer Learning-Based Foot No-Ball Detection in Live Cricket Match. Comput. Intell. Neurosci. 2023, 2023, 2398121.

- Mahmud, T.; Barua, K.; Barua, A.; Das, S.; Basnin, N.; Hossain, M.S.; Andersson, K.; Kaiser, M.S.; Sharmen, N. Exploring Deep Transfer Learning Ensemble for Improved Diagnosis and Classification of Alzheimer’s Disease. In Proceedings of the 2023 International Conference on Brain Informatics, Hoboken, NJ, USA, 1–3 August 2023; Springer: Cham, Switzerland, 2023; pp. 1–12.

- Wu, Z.; Luo, G.; Yang, Z.; Guo, Y.; Li, K.; Xue, Y. A comprehensive review on deep learning approaches in wind forecasting applications. CAAI Trans. Intell. Technol. 2022, 7, 129–143.

- Gasparin, A.; Lukovic, S.; Alippi, C. Deep learning for time series forecasting: The electric load case. CAAI Trans. Intell. Technol. 2022, 7, 1–25.

- Pinker, S. The Stuff of Thought: Language as a Window into Human Nature; Penguin: London, UK, 2007.

- Andersson, L.G.; Trudgill, P. Bad Language; Blackwell/Penguin Books: London, UK, 1990.

- Eshan, S.C.; Hasan, M.S. An application of machine learning to detect abusive bengali text. In Proceedings of the 2017 20th International Conference of Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 22–24 December 2017; pp. 1–6.

- Akhter, S.; Abdhullah-Al-Mamun. Social media bullying detection using machine learning on Bangla text. In Proceedings of the 2018 10th International Conference on Electrical and Computer Engineering (ICECE), Dhaka, Bangladesh, 20–22 December 2018; pp. 385–388.

- Emon, E.A.; Rahman, S.; Banarjee, J.; Das, A.K.; Mittra, T. A deep learning approach to detect abusive bengali text. In Proceedings of the 2019 7th International Conference on Smart Computing & Communications (ICSCC), Sarawak, Malaysia, 28–30 June 2019; pp. 1–5.

- Awal, M.A.; Rahman, M.S.; Rabbi, J. Detecting abusive comments in discussion threads using naïve bayes. In Proceedings of the 2018 International Conference on Innovations in Science, Engineering and Technology (ICISET), Chittagong, Bangladesh, 27–28 October 2018; pp. 163–167.

- Hussain, M.G.; Al Mahmud, T. A technique for perceiving abusive bangla comments. Green Univ. Bangladesh J. Sci. Eng. 2019, 4, 11–18.

- Das, M.; Banerjee, S.; Saha, P.; Mukherjee, A. Hate Speech and Offensive Language Detection in Bengali. arXiv 2022, arXiv:2210.03479.

- Sazzed, S. Identifying vulgarity in Bengali social media textual content. PeerJ Comput. Sci. 2021, 7, e665.

- Jahan, M.; Ahamed, I.; Bishwas, M.R.; Shatabda, S. Abusive comments detection in Bangla-English code-mixed and transliterated text. In Proceedings of the 2019 2nd International Conference on Innovation in Engineering and Technology (ICIET), Dhaka, Bangladesh, 23–24 December 2019; pp. 1–6.

- Ishmam, A.M.; Sharmin, S. Hateful speech detection in public facebook pages for the bengali language. In Proceedings of the 2019 18th IEEE International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019; pp. 555–560.

- Karim, M.R.; Dey, S.K.; Islam, T.; Sarker, S.; Menon, M.H.; Hossain, K.; Hossain, M.A.; Decker, S. Deephateexplainer: Explainable hate speech detection in under-resourced bengali language. In Proceedings of the 2021 IEEE 8th International Conference on Data Science and Advanced Analytics (DSAA), Porto, Portugal, 6–9 October 2021; pp. 1–10.

- Sazzed, S. Abusive content detection in transliterated Bengali-English social media corpus. In Proceedings of the Fifth Workshop on Computational Approaches to Linguistic Code-Switching, Online, 11 June 2021; pp. 125–130.

- Faisal Ahmed, M.; Mahmud, Z.; Biash, Z.T.; Ryen, A.A.N.; Hossain, A.; Ashraf, F.B. Bangla Text Dataset and Exploratory Analysis for Online Harassment Detection. arXiv 2021, arXiv:2102.02478.

- Romim, N.; Ahmed, M.; Talukder, H.; Islam, S. Hate speech detection in the bengali language: A dataset and its baseline evaluation. In Proceedings of the International Joint Conference on Advances in Computational Intelligence, Dhaka, Bangladesh, 20–21 November 2020; Springer: Singapore, 2021; pp. 457–468.

- Islam, T.; Ahmed, N.; Latif, S. An evolutionary approach to comparative analysis of detecting Bangla abusive text. Bull. Electr. Eng. Inform. 2021, 10, 2163–2169.

- Aurpa, T.T.; Sadik, R.; Ahmed, M.S. Abusive Bangla comments detection on Facebook using transformer-based deep learning models. Soc. Netw. Anal. Min. 2022, 12, 24.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.

- Clark, K.; Luong, M.T.; Le, Q.V.; Manning, C.D. ELECTRA: Pre-training text encoders as discriminators rather than generators. In Proceedings of the International Conference on Learning Representations, ICLR 2020, Virtual, 26 April–1 May 2020.