1. Introduction

The rapid advancements in artificial intelligence (AI) and machine learning (ML) hold immense potential for revolutionizing and expediting the arduous and costly process of materials development. In recent decades, AI and ML have ushered in a new era for materials science by leveraging computer algorithms to aid in exploration, understanding, experimentation, modeling, and simulation [1][2]. Working alongside human creativity and ingenuity, these algorithms contribute to the discovery and refinement of novel materials for future technologies.

2. AI Algorithms and ML Models

AI and ML have revolutionized the way we approach problem-solving and decision-making [3][4][5]. ML, in particular, is a field that focuses on the development and deployment of AI algorithms capable of analyzing data and its properties to determine actions without explicit programming [6].

Unlike traditional programming, ML algorithms leverage statistical tools to process data and learn from it, allowing them to improve and adapt dynamically as more data becomes available. This concept of “learning” forms the foundation of ML, enabling algorithms to make predictions, recognize patterns, and make informed decisions.

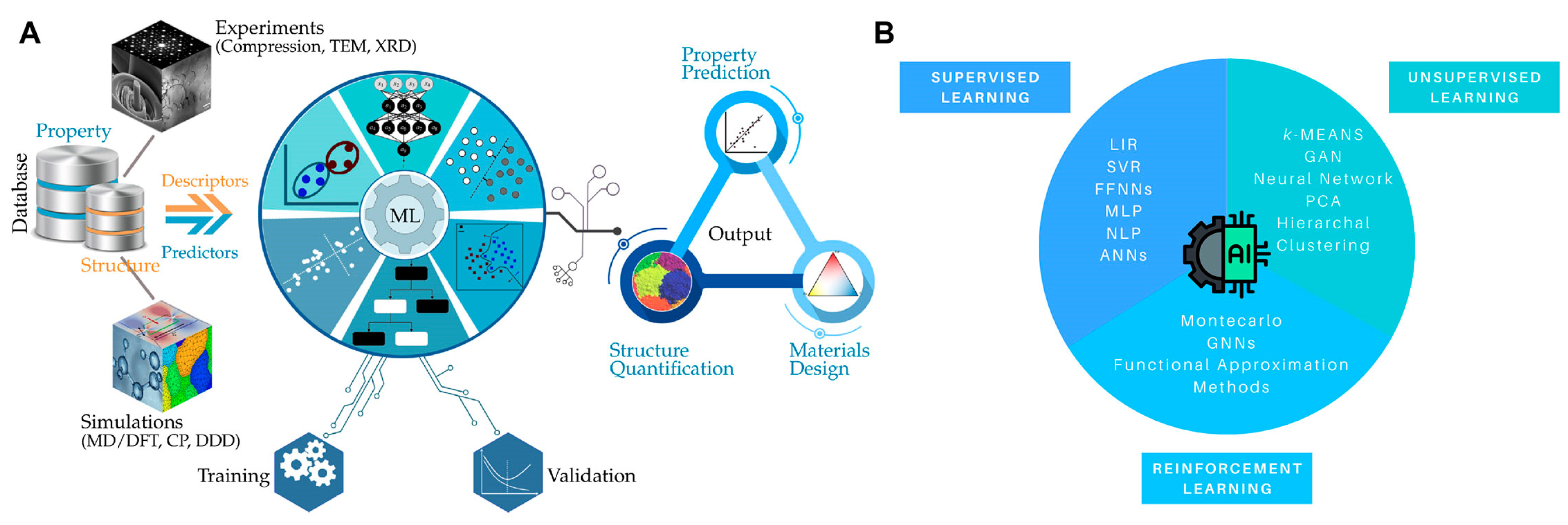

ML algorithms can be broadly categorized into three main types, each serving different purposes: supervised learning, unsupervised learning, and reinforcement learning. These diverse categories of ML algorithms provide a powerful toolkit for solving complex problems, optimizing processes, and extracting valuable insights from data. As illustrated in Figure 1, they form a comprehensive framework that enables AI systems to analyze and interpret data, facilitating intelligent decision-making and automation across various domains.

Figure 1. (A) A schematic representation of the materials design workflow using AI, consisting of three essential elements: a material dataset, machine learning models capable of learning and interpreting representations for specific tasks using the provided dataset, and output that yields optimized and enhanced material properties for the creation of advanced materials. Reproduced with permission from Ref. [7]. CC BY 4.0. (B) An overview of machine learning methodologies highlighting the three primary categories: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning involves training algorithms using labeled data, where inputs and desired outputs are provided to teach the algorithm how to make accurate predictions. The learning process is therefore based on comparing the output computed by the model with the expected (labeled) output: learning amounts to computing the error between the two and adjusting the model parameters to reduce it. Examples of such algorithms include linear regression (LIR) [8], support vector regression (SVR) [9], feedforward neural networks (FFNNs) [10], and convolutional neural networks (CNNs) [11].
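The error-and-adjust loop described above can be illustrated with a minimal sketch of linear regression trained by gradient descent. The toy data, the learning rate, and the function name `fit_linear` are assumptions made for illustration and do not come from the cited works.

```python
def fit_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Error between the model output and the labeled (expected) output.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Adjust the parameters to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical labeled data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_linear(xs, ys)
```

On this noise-free toy set the fitted slope and intercept approach 2 and 1; real materials data would be noisy and would call for a held-out validation set.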

In addition to classical supervised ML algorithms such as LIR, SVR, or random forests (RFs), which are useful for predicting mechanical features of materials [12], researchers have developed artificial neural networks (ANNs) inspired by the interconnected neurons in the human brain to delve into deep data mining [13]. Among these networks, FFNNs, or multilayer perceptrons (MLPs), have emerged as quintessential and relatively simple models. FFNNs are extensively used in ML and deep learning, featuring multiple layers of interconnected nodes or neurons arranged sequentially. These networks facilitate data flow in a unidirectional manner, from the input layer to the output layer, without any loops or feedback connections.

Each layer, comprising multiple neurons, calculates outputs for the subsequent layer based on inputs received from the preceding layer. The weights or trainable parameters associated with each neuron are optimized to minimize the loss function, allowing the FFNN to learn complex patterns and relationships in the data.
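The layer-by-layer data flow can be sketched as a small forward pass. The layer sizes, the random initial weights, the activation functions, and the three input features are all illustrative assumptions; training (adjusting the weights to minimize a loss) is deliberately left out.

```python
import random

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron sums its weighted inputs plus a bias."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def relu(value):
    return max(0.0, value)

def identity(value):
    return value

def init_layer(n_in, n_out, rng):
    """Random weights and zero biases (untrained, for illustration only)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(x, layers):
    """Pass the input through the layers in order, with no loops or feedback."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

rng = random.Random(0)
w1, b1 = init_layer(3, 4, rng)   # input layer (3 features) -> hidden layer (4 neurons)
w2, b2 = init_layer(4, 1, rng)   # hidden layer -> single output neuron
layers = [(w1, b1, relu), (w2, b2, identity)]

features = [0.2, 0.5, 0.3]       # hypothetical normalized material descriptors
prediction = forward(features, layers)
```

Each call to `dense` consumes only the previous layer's output, which is what makes the network feedforward.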

In the field of materials design, FFNNs can be effectively employed in various ways [14][15][16]. For instance, by providing the composition, processing conditions, and microstructure of a material as inputs, an FFNN can learn the intricate relationship between these factors and properties such as mechanical strength, thermal conductivity, or electrical resistivity. This enables the optimization of material compositions and processing parameters to achieve desired properties. Furthermore, FFNNs can be combined with optimization algorithms to explore and optimize material designs [17][18]. By treating the FFNN as a surrogate model that approximates the relationship between input variables (e.g., material composition, processing conditions) and desired performance metrics, optimization algorithms can efficiently search the design space to identify optimal material configurations. This application proves particularly valuable when seeking materials with specific properties or performance targets.
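A minimal sketch of surrogate-based design search follows. The function `surrogate_strength` is a hypothetical closed-form stand-in for a trained FFNN, and plain random search is used as the optimizer purely for brevity; the cited studies may rely on different surrogates and search strategies.

```python
import random

def surrogate_strength(composition, temperature):
    """Hypothetical stand-in for a trained FFNN surrogate (not a real material model)."""
    return -(composition - 0.3) ** 2 - ((temperature - 500.0) / 1000.0) ** 2

def random_search(surrogate, bounds, n_samples=5000, seed=0):
    """Sample the design space uniformly and keep the best-scoring design."""
    rng = random.Random(seed)
    best_x, best_y = None, float("-inf")
    for _ in range(n_samples):
        x = tuple(rng.uniform(lo, hi) for lo, hi in bounds)
        y = surrogate(*x)  # cheap prediction instead of an experiment or simulation
        if y > best_y:
            best_x, best_y = x, y
    return best_x, best_y

# Assumed design space: composition fraction and processing temperature.
bounds = [(0.0, 1.0), (300.0, 900.0)]
best_design, best_value = random_search(surrogate_strength, bounds)
```

Because each surrogate evaluation is cheap, thousands of candidate designs can be screened before any of them is validated experimentally.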

However, it is important to note that successful utilization of FFNNs in materials design relies on the availability of high-quality training data, careful feature selection, and a thorough understanding of the model’s limitations and assumptions. Additionally, domain expertise and experimental validation remain crucial in interpreting and verifying the FFNN’s predictions and recommendations.

Besides FFNNs, CNN architectures are gaining widespread attention due to their applications in computer vision and natural language processing (NLP) [19]. They are a specialized type of ANN, designed primarily for analyzing visual data such as images or videos. CNNs can automatically learn hierarchical representations of visual patterns and features directly from raw input data. The fundamental operation in CNNs is convolution, which preserves the spatial relationship between pixels. It involves sliding a small filter matrix across the image matrix and, at each position, summing the element-wise products of the filter and the underlying image patch; the filter contains trainable weights that are optimized during training for effective feature extraction. By employing various filters, CNNs can perform distinct operations such as edge detection on an image. Through the stacking of convolutional layers, simple features gradually combine to form more complex and comprehensive ones.
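The convolution operation can be illustrated on a tiny image. The sketch below uses a fixed Sobel-style filter for vertical edges, whereas in a CNN the filter weights would be learned; the image values and the helper name `conv2d` are illustrative assumptions.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the
    element-wise products at each position, as CNN layers do."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Toy 4x5 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Sobel-style filter that responds to vertical edges.
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

edges = conv2d(image, sobel_x)  # [[4, 4, 0], [4, 4, 0]]
```

The response is large where the window straddles the dark-to-bright boundary and zero over the uniform bright region, which is the sense in which a filter detects an edge.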

CNNs have revolutionized computer vision tasks, encompassing image classification, object detection, segmentation, and more [19]. Their hierarchical structure, parameter sharing, and spatial invariance properties contribute to their efficacy in learning and extracting meaningful features from visual data. Consequently, CNNs have found widespread adoption in numerous domains, including medical imaging, facial recognition, and image-based recommender systems [20][21].

In materials design, CNNs hold great potential. With their capability to capture features at different hierarchical levels, CNNs are well-suited for describing the properties of materials, which inherently possess hierarchical structures, particularly in the case of biomaterials [22].

Overall, CNNs offer a powerful toolset for extracting relevant information from visual data, enabling breakthroughs in a wide range of applications and fostering advancements in fields such as materials engineering and design.

Unsupervised learning, on the other hand, deals with unlabeled data, where the algorithm identifies patterns and structures within the data without explicit guidance. An intriguing and successful category of unsupervised architectures is that of generative adversarial networks (GANs), which consist of two neural networks, the generator and the discriminator [23]. GANs are designed to learn and generate synthetic data that resembles a target dataset, without the need for explicit labels or supervision. The generator network takes random noise or a latent vector as input and produces data samples that resemble the target dataset. It usually consists of one or more layers of neural nodes, often using deconvolutional (transposed convolutional) layers to up-sample the noise into a larger output. The discriminator network acts as a binary classifier that aims to distinguish real samples from the target dataset from synthetic samples produced by the generator. It is trained with labels that are assigned automatically: real samples are labeled as “real” and synthetic samples as “fake”.

GANs have gained significant attention in the field of ML and have shown impressive capabilities in generating realistic and diverse data, including images, text, and audio [24]. They have also shown potential in unsupervised learning tasks, where the generated data can be used for downstream tasks such as clustering, representation learning, and semi-supervised learning. It is important to note that GANs require careful tuning, hyperparameter selection, and large amounts of training data to achieve optimal performance. Additionally, evaluation metrics for GANs are an ongoing area of research, as assessing the quality and diversity of generated samples can be subjective.
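The opposing goals of the two networks can be made concrete through their loss functions. The sketch below computes the standard binary cross-entropy loss of the discriminator and the commonly used non-saturating generator loss from hypothetical discriminator outputs; no networks are actually trained, and the numbers are made up for illustration.

```python
import math

def mean(values):
    return sum(values) / len(values)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy with real samples labeled 1 and generated samples labeled 0.
    Inputs are the discriminator's predicted probabilities that each sample is real."""
    return (-mean([math.log(p) for p in d_real])
            - mean([math.log(1.0 - p) for p in d_fake]))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -mean([math.log(p) for p in d_fake])

# Hypothetical discriminator outputs.
confident = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])  # separates well
fooled = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])     # cannot tell apart
```

When the discriminator outputs 0.5 for every sample, its loss equals 2 ln 2, which corresponds to the point where generated and real samples have become indistinguishable to it.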

In a notable study, Mao et al. [25] introduced a GAN-based approach for designing complex architectured materials with extraordinary properties, such as materials achieving the Hashin-Shtrikman upper bounds on isotropic elasticity. This method involves training neural networks using simulation data from millions of randomly generated architectured materials categorized into different crystallographic symmetries. The advantage of this approach lies in its ability to provide an experience-free and systematic framework that does not require prior knowledge and can be readily applied in diverse applications.

The significance of this methodology extends beyond the design of metamaterials. By leveraging simulation data and ML, it offers a novel and promising avenue for addressing various inverse design problems in materials and structures. This approach opens up exciting possibilities for tackling complex design challenges and exploring new frontiers in materials science and engineering. The work by Mao and colleagues not only contributes a practical and systematic method for designing materials with desired properties but also highlights the potential of combining simulation data and ML in materials research. By harnessing the power of generative adversarial networks, this approach enables the exploration of vast design spaces and paves the way for innovative advancements in materials design and engineering.

Lastly, reinforcement learning focuses on training algorithms through interactions with an environment, where the algorithms learn by receiving feedback and rewards based on their actions. AlphaFold, an AI system developed by DeepMind that has demonstrated exceptional capabilities in predicting protein structures [26], must also be mentioned here, although it is more accurately described as a deep learning model trained on known protein structures than as a reinforcement learning system. It utilizes deep learning algorithms to accurately predict the 3D structure of proteins, which is a challenging and crucial task in the field of biochemistry and molecular biology. While initially focused on protein folding, the underlying principles and techniques of AlphaFold have the potential for broader applications, including materials problems such as interactive materials design.
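The feedback-and-reward loop that defines reinforcement learning can be sketched with an epsilon-greedy agent choosing among a few actions. The reward values, the exploration rate, and the function name `epsilon_greedy` are illustrative assumptions; this toy setting is unrelated to AlphaFold and is far simpler than the environments used in practice.

```python
import random

def epsilon_greedy(rewards, steps=1000, epsilon=0.1, seed=0):
    """Learn action values from reward feedback, mostly exploiting the best
    current estimate and occasionally exploring a random action."""
    rng = random.Random(seed)
    estimates = [0.0] * len(rewards)
    counts = [0] * len(rewards)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(rewards))                            # explore
        else:
            action = max(range(len(rewards)), key=lambda a: estimates[a])   # exploit
        reward = rewards[action]                 # feedback from the environment
        counts[action] += 1
        # Incremental running mean of the rewards observed for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

# Hypothetical environment: three actions with fixed rewards.
rewards = [0.2, 0.8, 0.5]
estimates, counts = epsilon_greedy(rewards)
best_action = max(range(len(rewards)), key=lambda a: estimates[a])
```

The agent is never told which action is best; it discovers this only through the rewards it receives for the actions it tries.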

Besides this outstanding example, graph neural networks (GNNs) also deserve mention. GNNs are a type of ANN designed to process and analyze graph-structured data [27]. Graphs are mathematical structures that consist of nodes connected by edges, and they can represent various real-world systems: each node represents an entity, while the edges denote the connections or relationships between entities. GNNs are specifically developed to capture and model the relationships and dependencies between the nodes of a graph. Using GNNs, Guo and Buehler [28] have developed an approach to design architected materials. The GNN model is integrated with a design algorithm to engineer the topological structures of the architected materials. The authors reported that this method is applicable to design problems of truss-like structures under complex loading conditions in additive manufacturing (e.g., 3D printing), architectural design, and civil infrastructure applications, and has the potential to be closely integrated with IoT methods and autonomous sensing and actuation approaches.
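How a GNN propagates information along edges can be sketched with a single round of message passing. The three-node chain (imagine joints connected by bars in a truss) and its feature values are hypothetical, and the update is a plain neighborhood average; real GNN layers add learned weights and nonlinearities, so this is not the model of Ref. [28].

```python
def message_passing(features, edges):
    """One round of message passing: each node replaces its feature vector
    with the average over itself and its direct neighbors."""
    neighbors = {node: [node] for node in features}
    for u, v in edges:            # undirected edges
        neighbors[u].append(v)
        neighbors[v].append(u)
    dim = len(next(iter(features.values())))
    return {
        node: [sum(features[n][k] for n in nbrs) / len(nbrs) for k in range(dim)]
        for node, nbrs in neighbors.items()
    }

# Hypothetical chain a - b - c with one scalar feature per node.
features = {"a": [1.0], "b": [0.0], "c": [0.0]}
edges = [("a", "b"), ("b", "c")]
updated = message_passing(features, edges)
# a -> 0.5, b -> 1/3, c -> 0.0: information from "a" has reached "b" but not yet "c".
```

Stacking several such rounds lets information travel across more distant parts of the structure.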

ML algorithms used in materials design, along with example applications, are summarized in Table 1.

Table 1. Machine learning algorithms used in materials design and optimization.

3. Materials Informatics Methodologies

Besides the development of AI and ML models for processing and analyzing massive amounts of data, discovering patterns, and making predictions, materials informatics (MI), a multidisciplinary field that acts as a junction between materials science, data science, and AI, has the capability to unlock the potential of vast materials databases, thus accelerating materials design and development. By integrating MI tools with AI and ML algorithms (an approach known as hybrid AI), researchers can draw on the multitude of available data and extract valuable insights that were previously unreachable, changing the way materials are engineered in several fields of application [37][38][39].

The framework of MI mainly consists of three parts: (1) data acquisition, (2) data representation, and (3) data mining (or data analysis) [40].

Data acquisition involves obtaining physical and structural properties through simulations or experiments. Data representation focuses on selecting descriptors that capture the essential characteristics of materials within a dataset. Lastly, data mining aims to identify relationships between structural information and desired material properties [40].
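The three parts of the framework can be sketched end to end on a toy dataset. Every value below is made up: the two-element compositions, the per-element property table `ELEMENT_RADIUS`, and the hardness values are hypothetical, and a Pearson correlation stands in for the data mining step.

```python
import math

# (1) Data acquisition: hypothetical records, as if from simulations or experiments.
records = [
    {"composition": {"A": 0.9, "B": 0.1}, "hardness": 2.2},
    {"composition": {"A": 0.7, "B": 0.3}, "hardness": 2.6},
    {"composition": {"A": 0.5, "B": 0.5}, "hardness": 3.0},
    {"composition": {"A": 0.3, "B": 0.7}, "hardness": 3.4},
]

# (2) Data representation: a composition-weighted average of an elemental property.
ELEMENT_RADIUS = {"A": 1.0, "B": 2.0}  # hypothetical per-element values

def descriptor(composition):
    return sum(fraction * ELEMENT_RADIUS[element]
               for element, fraction in composition.items())

# (3) Data mining: quantify the relationship between descriptor and property.
def pearson(xs, ys):
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

descriptors = [descriptor(r["composition"]) for r in records]
targets = [r["hardness"] for r in records]
correlation = pearson(descriptors, targets)
```

In this constructed example the descriptor correlates almost perfectly with the property; with real data, the choice of descriptors largely determines how much structure the mining step can recover.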

MI methodologies are varied and tailored to address specific design challenges. The choice of methodology depends on the nature of the problem and the objectives of the research. Among such methods, the most widely used for materials design are the integration of data modalities, physics-based deep learning, materiomics, and computer vision methodologies. An overview of the main methodologies employed in MI along with their key features is reported in Table 2.

Integrating data modalities is a powerful data acquisition approach. Transformer models, in particular, provide a robust framework for combining multiple sources of multimedia data, such as text, images, videos, and graphs, thereby expanding and enhancing datasets. This integration capability has empowered researchers, as exemplified by the work of Hsu et al. [41], to design sustainable materials derived from biocompatible resources with greater efficacy. By leveraging the rich information contained in various data formats, transformer models facilitate the optimization of mechanical properties, starting from the microstructure level [42]. This integration of data modalities enables comprehensive materials analysis and design, opening up new avenues for advancing the field of sustainable materials.

To give the collected data physical meaning, physical principles are integrated into deep learning techniques, resulting in efficient simulations. A deep learning method has been used to predict high-fidelity, high-resolution images of stress fields near cracks while accounting for material microstructures [43]. Additionally, physics-informed neural networks have been utilized to derive data-driven solutions for nonlinear partial differential equations, facilitating the modeling of dynamic problems [44]. Furthermore, as reported by Lai and co-workers [45], a data-driven regression model demonstrated the correlation between the crystalline structure and luminescence characteristics of Europium-doped phosphors, enabling the prediction of emission wavelengths.
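The idea behind physics-informed training can be sketched as a composite loss that adds a physics residual to the usual data misfit. In the sketch below, the governing equation du/dt + u = 0, the candidate functions, and the use of finite differences instead of automatic differentiation are all simplifying assumptions; a parametric function stands in for the neural network, so this is not the method of Ref. [44].

```python
import math

def composite_loss(u, data, collocation, weight=1.0, h=1e-4):
    """Data misfit plus the mean squared residual of du/dt + u = 0,
    evaluated at collocation points where no measurements exist."""
    data_loss = sum((u(t) - y) ** 2 for t, y in data) / len(data)
    residuals = [(u(t + h) - u(t - h)) / (2 * h) + u(t) for t in collocation]
    physics_loss = sum(r ** 2 for r in residuals) / len(residuals)
    return data_loss + weight * physics_loss

# Two hypothetical measurements of a decaying quantity.
data = [(0.0, 1.0), (1.0, math.exp(-1.0))]
# Points where only the physics is enforced.
collocation = [0.1 * i for i in range(1, 20)]

def candidate(k):
    """Parametric stand-in for a neural network u_theta(t)."""
    return lambda t: math.exp(-k * t)

loss_good = composite_loss(candidate(1.0), data, collocation)  # obeys the equation
loss_bad = composite_loss(candidate(2.0), data, collocation)   # violates it
```

A candidate that violates the governing equation is penalized even at points without measurements, which is how the physics term compensates for sparse data.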

To achieve superior material properties starting from the design phase, it is fundamental to understand the intricate interplay between the physical, chemical, and topological properties of matter. In this context, materiomics employs analytically driven, simulation-driven, and data-driven procedures to predict complex behaviors by breaking down materials into their hierarchical building blocks. This approach is particularly valuable for bioinspired material design, as it considers relevant scales and draws inspiration from the well-organized structures found in nature [46]. Furthermore, materiomics offers a promising avenue to ensure the environmental sustainability of manufactured structures, as it considers the life cycle and ecological impact of materials [47]. Moreover, the use of AI enables the resolution of inverse design problems in order to develop material compositions and structures that fulfill a specific set of target requirements. These targets are often demanding and in competition with one another, such as enhancing mechanical performance or efficiency while simultaneously reducing weight and cost [48][49][50][51].

To enhance the interpretability of results in materials engineering, computer vision methodologies, such as graphic rendering and virtual reality, can be implemented. Indeed, Yang et al. [52] employed an AI-based approach that uses molecular dynamics simulations to quantify the structure and properties of 3D graphene foams with mathematically regulated topologies. In another study, the authors used a limited set of known data and a multiple deep learning architecture to predict missing mechanical information and further analyze intricate 2D and 3D microstructures [53]. Furthermore, the training of specific algorithms can provide extra information on mechanical features that can optimize the material design process and lead scientists to new discoveries [54][55][56].

Another data mining tool is transfer learning and the fine-tuning of algorithms. This methodology adapts pre-existing models to problems that differ from the original one, for example because the characteristics of the input data or the reward value have changed [57]. For instance, Jiang et al. [58] used a transfer learning algorithm for dynamic multi-objective optimization problems that generates an effective initial population pool by reusing past experience, thereby speeding up the design process.

Currently, the utilization of large language models (LLMs), such as ChatGPT, LLaMA, and Bard, is generating significant interest due to the profound impact this technology can have on human life [59][60]; moreover, its application in material analysis can be considered a valuable instrument for intelligent material design and prototyping [61]. One notable advantage is the ability to fine-tune LLMs for specific tasks using a relatively small amount of labeled data, which can even be extracted from the published literature [62]. This approach eliminates the need to train a new model from scratch. Indeed, the final layers of the neural network can be substituted to adapt the model to the new task, and the entire model can then be trained in significantly less time than training it from scratch would take. These adaptations enable LLMs to be effectively applied in various domains, including dataset mining, molecular modeling, microstructure generation, and material structure extraction [63][64].
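The principle of reusing a pretrained model and training only a substituted output layer can be sketched on a toy regression task. The function `pretrained_features` is a hypothetical stand-in for the early layers of a pretrained network, and, as a simplification of the procedure described above, those layers are kept frozen here while only the new head is trained; the data are made up.

```python
def pretrained_features(x):
    """Frozen stand-in for the early layers of a pretrained model (hypothetical)."""
    return [x, x * x]

def fine_tune_head(xs, ys, lr=0.5, epochs=200):
    """Train only a new linear output layer on top of the frozen features,
    using gradient descent on the mean squared error."""
    head = [0.0, 0.0]
    n = len(xs)
    for _ in range(epochs):
        grads = [0.0, 0.0]
        for x, y in zip(xs, ys):
            phi = pretrained_features(x)
            error = sum(w * f for w, f in zip(head, phi)) - y
            for k in range(len(head)):
                grads[k] += 2.0 * error * phi[k] / n
        head = [w - lr * g for w, g in zip(head, grads)]
    return head

# Small labeled dataset for the new task (made-up target: y = 3x^2 - x).
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [3.0 * x * x - x for x in xs]
head = fine_tune_head(xs, ys)
```

Only two parameters are learned from five labeled points, which illustrates why fine-tuning can succeed with far less data and compute than training from scratch.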

Lastly, another powerful tool in materials research is the autonomous discovery of materials using AI. By harnessing the capabilities of automated experimentation systems, laboratories empower AI to autonomously explore the extensive design space and make informed decisions about which experiments to conduct. This strategy replaces the traditional trial-and-error approach to materials discovery by leveraging AI algorithms and ML techniques. The AI system can analyze vast amounts of data, including experimental results, material properties, and synthesis conditions, to identify patterns, correlations, and novel material candidates [65][66]. Through this autonomous exploration, complex new materials with unprecedented characteristics are unveiled [67]. An interesting development in this field was presented by Nikolaev et al. [68], who established the first autonomous experimentation (AE) system for materials development. Initially, the AE system was trained to grow carbon nanotubes with precise growth rates by adjusting a six-dimensional set of processing parameters, selected after gaining a deeper understanding of the underlying phenomena. Through an iterative research process spanning 600 autonomous iterations, the AE system successfully identified the optimal growth conditions to achieve the desired growth rate.
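A closed loop of this kind (plan an experiment, run it, learn from the result) can be sketched as follows. The function `run_experiment` is a hypothetical one-parameter stand-in for an automated growth experiment, not real carbon nanotube kinetics, and simple hill climbing replaces the planner of Ref. [68], which worked in a six-dimensional parameter space; only the count of 600 iterations is borrowed from the text.

```python
import random

def run_experiment(temperature):
    """Hypothetical stand-in for an automated growth experiment."""
    return temperature / 100.0  # made-up growth rate, not real kinetics

def autonomous_campaign(target_rate, start, iterations=600, step=50.0, seed=0):
    """Closed loop: propose conditions, run the experiment, keep improvements."""
    rng = random.Random(seed)
    best = start
    best_error = abs(run_experiment(best) - target_rate)
    for _ in range(iterations):
        candidate = best + rng.uniform(-step, step)            # plan the next experiment
        error = abs(run_experiment(candidate) - target_rate)   # run it and measure
        if error < best_error:                                 # learn from the outcome
            best, best_error = candidate, error
    return best, best_error

initial_error = abs(run_experiment(500.0) - 7.0)
best_temperature, best_error = autonomous_campaign(target_rate=7.0, start=500.0)
```

No human chooses the next experiment inside the loop; each decision depends only on the results gathered so far.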

Overall, coupling MI tools with AI algorithms shows strong potential to further enhance the materials discovery process and to transform research methodologies. By leveraging digital strategies, researchers can overcome traditional challenges encountered in materials design, thus boosting the discovery and development of novel materials.

Table 2. Key methodologies in materials informatics.

| Type of MI Tool | Main Features | References |
| --- | --- | --- |
| Integrating data modalities | Integration of different multimedia sources into datasets (text, images, videos, and graph data) | [41][42] |
| Physics-based deep learning | Integration of physics models into deep learning settings | [43][44][45] |
| Materiomics | Usage of analytically driven, simulation-driven, and data-driven methods to break down materials into their essential building blocks | [46][47] |
| Inverse design | Solving inverse design problems | [48][49][50][51] |
| Computer vision methodologies | Combination of graphic rendering, virtual reality, and interpretable machine learning | [52][53] |
| Transfer learning and fine-tuning | Adaptation of pre-existing algorithms to a different problem resolution | [57][58] |
| Large language models (LLMs) | Elaboration of various natures of datasets to predict and generate text and other forms of content | [61][62][63][64] |
| Autonomous materials discovery | Autonomous exploration of design space with self-directed decisions regarding experimentation and tests | [65][66][67][68] |