| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Jenna Seetohul | -- | 3237 | 2023-07-10 12:27:52 |
| 2 | Peter Tang | Meta information modification | 3237 | 2023-07-11 05:41:35 |
| 3 | Peter Tang | -1 word(s) | 3236 | 2023-07-11 05:41:50 |


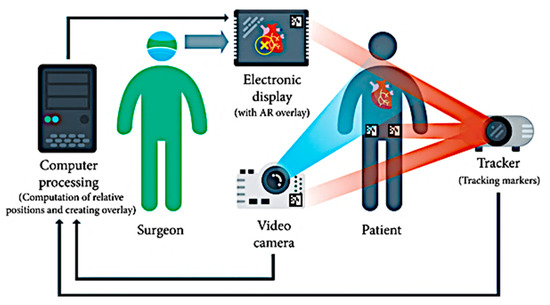

Novel surgical robots are a highly sought-after approach for performing repetitive tasks accurately. Imaging technology has significantly changed robotic surgery, especially in biopsies, in the examination of complex vasculature for catheterization, and in the visual estimation of target points for port placement. Image analysis of CT scans and X-rays is needed to identify the correct position of an anatomical landmark such as a tumor or polyp. This information is at the core of most augmented reality (AR) systems, whose development starts with the reconstruction and localization of targets. Hence, the primary role of AR applications in surgery is to visualize and guide a user towards a desired robot configuration with the help of intelligent computer vision algorithms.

1. Introduction

2. Hardware Components

2.1. Patient-to-Image Registration Devices

2.2. Object Detection and AR Alignment for Robotic Surgery

3. Software Integration

3.1. Patient-to-Image Registration

3.2. Camera Calibration for Optimal Alignment

3.3. 3D Visualization Using Direct Volume Rendering

3.4. Surface Rendering after Segmentation of Pre-Processed Data

3.5. Path Computational Framework for Navigation and Planning
