Wang, H.; Zhu, X.; Ren, C.; Zhang, L.; Ma, S. Forensic Methods for Image Inpainting. Encyclopedia. Available online: https://encyclopedia.pub/entry/45989 (accessed on 07 February 2026).

Wang, H., Zhu, X., Ren, C., Zhang, L., & Ma, S. (2023, June 23). Forensic Methods for Image Inpainting. In Encyclopedia. https://encyclopedia.pub/entry/45989

The rapid development of digital image inpainting technology poses a serious hidden threat to the security of multimedia information. Considerable effort has been devoted to developing forensic methods for image inpainting, which can be roughly divided into two categories: conventional inpainting forensics methods and deep learning-based inpainting forensics methods.

inpainting forensics

deep convolutional neural network

1. Introduction

With the rapid development of digital image processing techniques, increasingly advanced image editing software has made modern life more convenient and enjoyable. Nevertheless, malicious use of these techniques produces a large number of forged digital images, which has led to a serious crisis of security and trust in digital multimedia. Image forensics has therefore attracted increasing attention in the digital multimedia era, with research directions including JPEG compression forensics [1][2], median filtering detection [3], copy-move and splicing localization [4][5], and universal image manipulation detection [6][7].

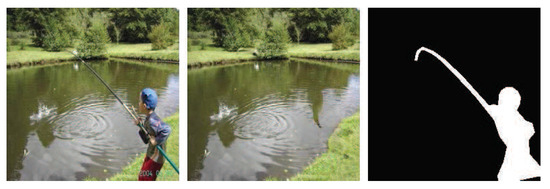

Image inpainting is an effective image editing technique that aims to repair damaged or removed image regions in a visually plausible manner based on the known image information, as shown in Figure 1. A variety of image inpainting methods have been proposed in recent years, and they can be roughly classified into three categories: diffusion-based approaches [8][9], exemplar-based approaches [10][11], and deep learning (DL)-based approaches [12][13]. Owing to its effective and efficient editing ability, image inpainting has been widely applied in many image processing fields [11], such as image restoration, image coding and transmission, photo editing, and virtual restoration of digitized paintings. However, this powerful editing tool can also be used, even by non-professional forgers, to maliciously modify an image while leaving few visible traces, which poses a serious threat to multimedia information security.

Figure 1. An illustrative example of image tampering by image inpainting: the real image (left), the inpainted image (middle), and the utilized mask (right).

The major forensic task for image inpainting is to locate the inpainted regions of an input image, so inpainting forensics requires pixel-wise binary classification at the manipulation level, i.e., binary semantic segmentation. The goal of binary semantic segmentation is to classify the pixels of an image into two categories, foreground and background; for the inpainting forensics task, the two categories are inpainted pixels and uninpainted pixels. This is generally more difficult than common manipulation detection, which only decides whether a certain manipulation took place or not.
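
To make the pixel-wise formulation concrete, the following minimal Python sketch scores a predicted binary mask against a ground-truth mask. The metrics (precision, recall, F1, and intersection over union) are common choices for binary segmentation and are an assumption here; the entry itself does not name an evaluation metric, and the function name `localization_scores` is hypothetical.

```python
def localization_scores(pred_mask, true_mask):
    """Pixel-wise precision, recall, F1 and IoU for binary masks (1 = inpainted)."""
    tp = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1  # inpainted pixel correctly located
            elif p:
                fp += 1  # uninpainted pixel wrongly flagged
            elif t:
                fn += 1  # inpainted pixel missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Toy 4x4 example: the true inpainted region is the central 2x2 block.
true_mask = [[0, 0, 0, 0],
             [0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
# The prediction finds three of the four pixels and raises one false alarm.
pred_mask = [[0, 0, 0, 0],
             [0, 1, 1, 0],
             [0, 1, 0, 1],
             [0, 0, 0, 0]]
scores = localization_scores(pred_mask, true_mask)
print(scores)
```

In contrast, an image-level detector would output a single yes/no decision for the whole image, which is why localization is the harder task.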

Research on inpainting forensics has been limited so far. Some traditional forensic methods employ hand-crafted features to identify inpainted pixels. For instance, features based on image patch similarity were extracted to detect exemplar-based inpainting [14][15], and features based on the image Laplacian were designed to identify diffusion-based inpainting [16][17]. However, the traces left by image inpainting are so weak that they are hard to reveal with manually designed features. In addition, the emerging DL-based inpainting methods not only achieve more realistic results than traditional methods but can also generate new objects, which brings greater challenges to inpainting forensics. Recently, deep convolutional neural networks (DCNNs) have achieved great success in many fields [18][19][20] thanks to their powerful learning capabilities. DL-based methods learn discriminative features and make decisions for the target task in a data-driven way, and thus offer a significant performance advantage on large-scale datasets. Inspired by these works, researchers have developed CNN-based forensic methods for tasks such as median filtering forensics [3], camera model identification [21], copy-move and splicing localization [4], and JPEG compression forensics [2]. A few research efforts have also been devoted to deep learning-based forensics for image inpainting [22][23].

2. Forensic Methods for Image Inpainting

A few research efforts have been devoted to developing forensic methods for image inpainting. They can be roughly divided into the following two categories.

2.1. Conventional Inpainting Forensics Methods

The conventional inpainting forensic methods rely on manually designed features to predict the inpainted pixels. Initially, for exemplar-based inpainting [10], a zero-connectivity length (ZCL) feature was designed to measure the similarity among image patches, and the inpainted patches were recognized by a fuzzy membership function of patch similarity [24]. A similar forensic method based on patch similarity was presented in [25] for video inpainting. However, the search for similar patches is very time-consuming, especially for a large image. In addition, these methods may produce a high false alarm rate for an image with a uniform background.
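
The cost and the false alarm problem of patch-similarity search can be illustrated with a deliberately simplified sketch. It is not the ZCL feature of [24]: as a stand-in, it flags patches that have an exact duplicate elsewhere in a grayscale image, using a brute-force comparison of all patch pairs. The function names and the `min_offset` parameter are hypothetical.

```python
def extract_patches(image, size):
    """Map each top-left position (row, col) to its flattened size x size patch."""
    h, w = len(image), len(image[0])
    return {
        (r, c): tuple(image[r + i][c + j] for i in range(size) for j in range(size))
        for r in range(h - size + 1)
        for c in range(w - size + 1)
    }


def suspicious_patches(image, size=2, min_offset=2):
    """Flag positions whose patch is duplicated at least min_offset pixels away.

    Every pair of patches is compared, so the cost grows quadratically with
    the number of patches; this is the bottleneck for large images.
    """
    patches = extract_patches(image, size)
    positions = list(patches)
    flagged = set()
    for i, a in enumerate(positions):
        for b in positions[i + 1:]:
            far_apart = max(abs(a[0] - b[0]), abs(a[1] - b[1])) >= min_offset
            if far_apart and patches[a] == patches[b]:
                flagged.update((a, b))
    return flagged


# Textured 6x6 image with unique pixel values, then a 2x2 block copied
# from the top-left corner to the bottom-right corner.
textured = [[r * 6 + c for c in range(6)] for r in range(6)]
for i in range(2):
    for j in range(2):
        textured[4 + i][4 + j] = textured[i][j]
print(sorted(suspicious_patches(textured)))

# A uniform background makes every patch look copied: all false alarms.
uniform = [[0] * 5 for _ in range(5)]
print(len(suspicious_patches(uniform)))
```

The uniform image is never tampered with, yet every patch position is flagged, which mirrors the false alarm behavior described above.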

Skipping patch matching was explored for inpainting forensics and copy-move detection in [26], and a two-stage patch search method based on weight transformation was proposed in [14]. Both methods accelerate the search for suspicious patches but may reduce accuracy. Furthermore, inpainted regions are identified in [14] using ZCL features based on multi-region relations, which improves the false alarm rate. This work was further improved in [15] by exploiting the greatest ZCL feature and fragment splicing detection, while the search for suspicious patches was sped up by the central pixel mapping method. The resulting problem is that a truly inpainted region is prone to being recognized as several isolated suspicious regions, which might then be removed by fragment splicing detection.

In [27], the set of inpainted patches was determined by a hybrid feature comprising the Euclidean distance, the position distance, and the number of identical pixels between two image patches. Unfortunately, the feature is very weak against image post-processing operations, and the forensic performance is highly image-dependent.
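
As a rough illustration, the three quantities named above can be computed for a pair of patches as follows. This is only a sketch: how [27] combines or thresholds them is not described in this entry, and the function name `hybrid_patch_feature` and its argument layout are hypothetical.

```python
import math


def hybrid_patch_feature(patch_a, pos_a, patch_b, pos_b):
    """Return (Euclidean distance, position distance, identical-pixel count).

    patch_a and patch_b are equally sized 2-D lists of gray values;
    pos_a and pos_b are the (row, col) positions of the patches.
    """
    flat_a = [v for row in patch_a for v in row]
    flat_b = [v for row in patch_b for v in row]
    # Distance between the patches in intensity space.
    euclidean = math.sqrt(sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)))
    # Distance between the patches in the image plane.
    position = math.hypot(pos_a[0] - pos_b[0], pos_a[1] - pos_b[1])
    # Number of pixel positions holding exactly the same value.
    identical = sum(1 for x, y in zip(flat_a, flat_b) if x == y)
    return euclidean, position, identical


feature = hybrid_patch_feature([[10, 20], [30, 40]], (0, 0),
                               [[10, 20], [30, 44]], (3, 4))
print(feature)  # (4.0, 5.0, 3)
```

Because the identical-pixel count and the Euclidean distance both depend on exact gray values, even mild post-processing such as recompression or filtering changes them, which is consistent with the weakness noted above.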

A few works were dedicated to improving the robustness of inpainting forensics. For compressed inpainted images, forensics was performed in [28] by computing and segmenting the averaged sum of absolute difference images between the target image and versions of it resaved as JPEG at different quality factors. However, the effectiveness of this feature is unclear when the image has been modified by other manipulations. The method in [29] was developed based on high-dimensional feature mining in the discrete cosine transform domain to resist the compression attack. In [30], many combinations of inpainting, compression, filtering, and resampling are recognized by extracting marginal density and neighboring joint density features. The resulting classifiers only distinguish the specific forgery methods considered and do not locate the inpainted region.
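
The averaging step behind the recompression-based feature can be sketched as below. Real JPEG resaving needs an image library, so the sketch takes the recompression routine as a parameter and, purely for demonstration, plugs in a crude quantizer as a stand-in. Both `averaged_difference_map` and `quantize_stand_in` are hypothetical names, the stand-in is not JPEG compression, and the segmentation step of [28] is not reproduced.

```python
def averaged_difference_map(image, recompress, qualities):
    """Average the absolute difference between an image and its resaved copies.

    recompress(image, quality) must return an image of the same size.
    """
    h, w = len(image), len(image[0])
    acc = [[0.0] * w for _ in range(h)]
    for q in qualities:
        resaved = recompress(image, q)
        for r in range(h):
            for c in range(w):
                acc[r][c] += abs(image[r][c] - resaved[r][c])
    return [[v / len(qualities) for v in row] for row in acc]


def quantize_stand_in(image, quality):
    """Assumed placeholder for JPEG resaving: coarser steps at lower quality."""
    step = (100 - quality) // 10 + 1
    return [[(v // step) * step for v in row] for row in image]


image = [[10, 13],
         [12, 7]]
diff_map = averaged_difference_map(image, quantize_stand_in, [90, 50])
print(diff_map)  # [[2.0, 1.0], [0.0, 1.0]]
```

In a real pipeline, the resulting map would then be segmented to separate regions that respond differently to recompression.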

To detect image tampering by sparsity-based image inpainting schemes [31][32], a forensic method based on canonical correlation analysis (CCA) was proposed in [33]. The method is robust against some image post-processing operations but has the same drawback as [30]. For diffusion-based inpainting technologies [8][9], a feature set based on the image Laplacian was constructed in [16] to identify the inpainted regions. The performance was further enhanced in [17] by weighted least squares filtering and an ensemble classifier. However, these methods fail to resist even quite weak attacks.
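
For readers unfamiliar with the image Laplacian, the sketch below computes the standard 4-neighbor discrete Laplacian of a grayscale image. It shows only the basic operator, not the feature set of [16] or the enhancement of [17]; the function name and the choice to leave border pixels at zero are assumptions made for brevity.

```python
def laplacian(image):
    """4-neighbor discrete Laplacian; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = (image[r - 1][c] + image[r + 1][c]
                         + image[r][c - 1] + image[r][c + 1]
                         - 4 * image[r][c])
    return out


# A smooth linear ramp has zero Laplacian everywhere in the interior.
ramp = [[2 * r + 3 * c for c in range(5)] for r in range(5)]
print(laplacian(ramp)[2][2])  # 0

# An isolated bright pixel produces a strong response.
spike = [[0, 0, 0],
         [0, 5, 0],
         [0, 0, 0]]
print(laplacian(spike)[1][1])  # -20
```

Diffusion-based inpainting propagates intensities smoothly into the missing region, so the local behavior of the Laplacian is a natural place to look for its traces.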

In principle, hand-crafted features for image inpainting are designed according to observations made on some images and cannot be guaranteed to be valid in all cases. Moreover, designing robust hand-crafted features is usually very difficult, since image inpainting operations, particularly deep learning-based ones, leave no obvious traces. In addition, the classifiers are optimized on a relatively small dataset or within a small parameter range, and the optimization depends on the feature extraction, which restricts the forensic performance.

2.2. Deep Learning-Based Inpainting Forensics Methods

Unlike conventional methods, deep learning-based methods automatically learn inpainting features and make decisions in a data-driven way. As the first attempt, a fully convolutional network (FCN) was constructed in [22] to locate regions tampered with by the exemplar-based inpainting method [10], and a weighted cross-entropy (CE) loss was designed to tackle the imbalance between inpainted and normal pixels. The method is significantly superior to the conventional forensic methods in terms of detection accuracy and robustness, and it can be further improved by skip connections [34]. A deep learning approach combining a CNN and a long short-term memory (LSTM) network was proposed in [35] to accomplish spatially dense prediction for exemplar-based inpainting. In the network design, attention is mainly paid to improving robustness and false alarm performance. The ResNet-based approach in [36] merged object detection and semantic segmentation networks to achieve a hybrid forensic purpose for exemplar-based inpainting, including manipulation localization, recognition, and semantic segmentation. Forensics for deep inpainting was first addressed in [23], where an FCN with a high-pass filter was designed to identify the inpainted pixels in an image.
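
The idea of weighting the cross-entropy loss to counter class imbalance can be sketched in a few lines. The exact weighting scheme of [22] is not given in this entry, so the single `pos_weight` factor on the inpainted class below is an assumption, as is the function name `weighted_bce`.

```python
import math


def weighted_bce(probs, labels, pos_weight, eps=1e-12):
    """Mean weighted binary cross-entropy over a flat list of pixels.

    probs  : predicted probability that each pixel is inpainted
    labels : 1 for inpainted pixels, 0 for normal pixels
    pos_weight : assumed factor that up-weights the rarer inpainted class
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


# One inpainted pixel among four: missing it costs more when pos_weight > 1.
labels = [1, 0, 0, 0]
probs = [0.2, 0.1, 0.1, 0.1]
print(weighted_bce(probs, labels, pos_weight=1.0))
print(weighted_bce(probs, labels, pos_weight=3.0))
```

A common heuristic, again an assumption rather than something stated in the source, is to set the weight to the ratio of normal to inpainted pixels in the training data so that both classes contribute comparably to the loss.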

Recently, some of the latest advances in deep learning have also been applied to inpainting forensics. A deep forensic network was proposed in [37]; its architecture is designed automatically by a one-shot neural architecture search algorithm [38], and it includes a preprocessing module to enhance inpainting traces. A backbone network with a multi-stream structure [39] was employed in [40] to build a progressive network for image manipulation detection and localization, which gradually refines the prediction results from low resolution to high resolution.

DL-based methods learn discriminative features directly from data, avoiding the difficulty of manual feature extraction. Moreover, relying on end-to-end learning, DCNNs allow the feature extraction and final decision steps to be optimized within a single framework. With these characteristics, DL-based methods show a significant performance advantage on large-scale datasets. This motivates us to further investigate the deep learning-based methods for inpainting forensics.

References

- Alipour, N.; Behrad, A. Semantic segmentation of JPEG blocks using a deep CNN for non-aligned JPEG forgery detection and localization. Multimedia Tools Appl. 2020, 79, 8249–8265.

- Bakas, J.; Ramachandra, S.; Naskar, R. Double and triple compression-based forgery detection in JPEG images using deep convolutional neural network. J. Electron. Imaging 2020, 29, 023006.

- Zhang, J.; Liao, Y.; Zhu, X.; Wang, H.; Ding, J. A deep learning approach in the discrete cosine transform domain to median filtering forensics. IEEE Signal Process. Lett. 2020, 27, 276–280.

- Abhishek; Jindal, N. Copy move and splicing forgery detection using deep convolution neural network, and semantic segmentation. Multimedia Tools Appl. 2021, 80, 3571–3599.

- Liu, B.; Pun, C.M. Exposing splicing forgery in realistic scenes using deep fusion network. Inf. Sci. 2020, 526, 133–150.

- Mayer, O.; Stamm, M.C. Forensic similarity for digital images. IEEE Trans. Inf. Forensics Secur. 2020, 15, 1331–1346.

- Mayer, O.; Bayar, B.; Stamm, M.C. Learning unified deep-features for multiple forensic tasks. In Proceedings of the 6th ACM Workshop on Information Hiding and Multimedia Security, Innsbruck, Austria, 20–22 June 2018; pp. 79–84.

- Bertalmio, M.; Sapiro, G.; Caselles, V.; Ballester, C. Image inpainting. In Proceedings of the 27th International Conference on Computer Graphics and Interactive Techniques Conference, New Orleans, LA, USA, 23–28 July 2000; pp. 417–424.

- Oliveira, M.M.; Bowen, B.; McKenna, R.; Chang, Y.S. Fast digital image inpainting. In Proceedings of the International Conference on Visualization, Imaging and Image Processing (VIIP 2001), Marbella, Spain, 3–5 September 2001; pp. 106–107.

- Criminisi, A.; Perez, P.; Toyama, K. Region filling and object removal by exemplar-based image inpainting. IEEE Trans. Image Process. 2004, 13, 1200–1212.

- Ružić, T.; Pižurica, A. Context-aware patch-based image inpainting using Markov random field modeling. IEEE Trans. Image Process. 2015, 24, 444–456.

- Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T. Free-form image inpainting with gated convolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4470–4479.

- Wan, Z.; Zhang, J.; Chen, D.; Liao, J. High-fidelity pluralistic image completion with transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtually, 11–17 October 2021; pp. 4672–4681.

- Chang, I.; Yu, J.C.; Chang, C.C. A forgery detection algorithm for exemplar-based inpainting images using multi-region relation. Image Vis. Comput. 2013, 31, 57–71.

- Liang, Z.; Yang, G.; Ding, X.; Li, L. An efficient forgery detection algorithm for object removal by exemplar-based image inpainting. J. Vis. Commun. Image R. 2015, 30, 75–85.

- Li, H.; Luo, W.; Huang, J. Localization of diffusion-based inpainting in digital images. IEEE Trans. Inf. Forensics Secur. 2017, 12, 3050–3064.

- Zhang, Y.; Liu, T.; Cattani, C.; Cui, Q.; Liu, S. Diffusion-based image inpainting forensics via weighted least squares filtering enhancement. Multimedia Tools Appl. 2021, 80, 30725–30739.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Zhu, X.; Li, S.; Gan, Y.; Zhang, Y.; Sun, B. Multi-stream fusion network with generalized smooth L1 loss for single image dehazing. IEEE Trans. Image Process. 2021, 30, 7620–7635.

- Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra R-CNN: Towards balanced learning for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 821–830.

- Rafi, A.M.; Tonmoy, T.I.; Kamal, U.; Wu, Q.M.J.; Hasan, M.K. RemNet: Remnant convolutional neural network for camera model identification. Neural Comput. Appl. 2021, 33, 3655–3670.

- Zhu, X.; Qian, Y.; Zhao, X.; Sun, B.; Sun, Y. A deep learning approach to patch-based image inpainting forensics. Signal Process. Image Commun. 2018, 67, 90–99.

- Li, H.; Huang, J. Localization of deep inpainting using high-pass fully convolutional network. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8301–8310.

- Wu, Q.; Sun, S.; Zhu, W.; Li, G.H.; Tu, D. Detection of digital doctoring in exemplar-based inpainted images. In Proceedings of the 2008 International Conference on Machine Learning and Cybernetics, Kunming, China, 12–15 July 2008; Volume 3, pp. 1222–1226.

- Das, S.; Shreyas, G.D.; Devan, L.D. Blind detection method for video inpainting forgery. Int. J. Comput. Appl. 2012, 60, 33–37.

- Bacchuwar, K.S.; Ramakrishnan, K.R. A jump patch-block match algorithm for multiple forgery detection. In Proceedings of the 2013 International Multi-Conference on Automation, Computing, Communication, Control and Compressed Sensing (iMac4s), Kottayam, India, 22–23 March 2013; pp. 723–728.

- Trung, D.T.; Beghdadi, A.; Larabi, M.C. Blind inpainting forgery detection. In Proceedings of the 2014 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Atlanta, GA, USA, 3–5 December 2014; pp. 1019–1023.

- Zhao, Y.Q.; Liao, M.; Shih, F.Y.; Shi, Y.Q. Tampered region detection of inpainting JPEG images. Optik 2013, 124, 2487–2492.

- Liu, Q.; Zhou, B.; Sung, A.H.; Qiao, M. Exposing inpainting forgery in JPEG images under recompression attacks. In Proceedings of the 2016 15th IEEE International Conference on Machine Learning and Applications (ICMLA), Anaheim, CA, USA, 18–20 December 2016; pp. 164–169.

- Zhang, D.; Liang, Z.; Yang, G.; Li, Q.; Li, L.; Sun, X. A robust forgery detection algorithm for object removal by exemplar-based image inpainting. Multimedia Tools Appl. 2018, 77, 11823–11842.

- Xu, Z.; Sun, J. Image inpainting by patch propagation using patch sparsity. IEEE Trans. Image Process. 2010, 19, 1153–1165.

- Li, Z.; He, H.; Tai, H.M.; Yin, Z.; Chen, F. Color-direction patch-sparsity-based image inpainting using multidirection features. IEEE Trans. Image Process. 2014, 24, 1138–1152.

- Jin, X.; Su, Y.; Zou, L.; Wang, Y.; Jing, P.; Wang, Z.J. Sparsity-based image inpainting detection via canonical correlation analysis with low-rank constraints. IEEE Access 2018, 6, 49967–49978.

- Zhu, X.; Qian, Y.; Sun, B.; Ren, C.; Sun, Y.; Yao, S. Image inpainting forensics algorithm based on deep neural network. Acta Opt. Sin. 2018, 38, 1110005-1–1110005-9.

- Lu, M.; Liu, S. A detection approach using LSTM-CNN for object removal caused by exemplar-based image inpainting. Electronics 2020, 9, 858.

- Wang, X.; Wang, H.; Niu, S. An intelligent forensics approach for detecting patch-based image inpainting. Math. Probl. Eng. 2020, 2020, 8892989.

- Wu, H.; Zhou, J. IID-Net: Image inpainting detection network via neural architecture search and attention. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1172–1185.

- Bender, G.; Kindermans, P.J.; Zoph, B.; Vasudevan, V.; Le, Q. Understanding and simplifying one-shot architecture search. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 550–559.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364.

- Liu, X.; Liu, Y.; Chen, J.; Liu, X. PSCC-Net: Progressive Spatio-Channel Correlation Network for Image Manipulation Detection and Localization. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7505–7517.
