Lee, E.; Shin, B. Vertex Chunk-Based Object Culling. Encyclopedia, 22 June 2023. Available online: https://encyclopedia.pub/entry/45970 (accessed on 07 February 2026).

Popular content built on the Metaverse concept allows users to place objects freely in a world space without constraints. Several algorithms exist for rendering the many high-resolution objects placed by users in real time, including view frustum culling, visibility culling, and occlusion culling. These algorithms selectively remove objects that lie outside the camera’s view and eliminate objects that are too small to render.

occlusion culling

metaverse

virtual reality

1. Introduction

In recent years, various technologies for building the Metaverse [1][2] have been studied alongside the advancement of computer graphics and virtual reality technology. Metaverse is a term that combines “meta”, meaning transcendence, and “universe”, referring to the real world, and it provides users with a space where they can engage in various activities in a virtual world and enjoy interactive environments that contain a variety of content. Metaverse is designed to allow users to interact with it, not only on mobile devices and PCs, but also on various platforms such as VR devices and gaming consoles.

To provide more realistic and high-quality graphics in the Metaverse, various three-dimensional real-time rendering technologies are needed, including high-resolution three-dimensional meshes and realistic lighting effects. As the number of objects that need to be visualized on the screen increases, these rendering technologies require high-performance CPUs or GPUs and large amounts of memory. In the Metaverse, where interactions occur between users and various objects, it is important to allow users to freely place objects of various types without constraints and show them to others. These features are even more necessary for a digital twin, which needs to respond in both the real and virtual worlds. Consequently, as the number of high-resolution objects placed in the world increases, Metaverse platforms running on mobile devices, portable gaming consoles, and regular office PCs, which have relatively weak computing power, suffer from performance degradation and overheating. To address these problems, various algorithms are being researched to reduce the computational overhead.

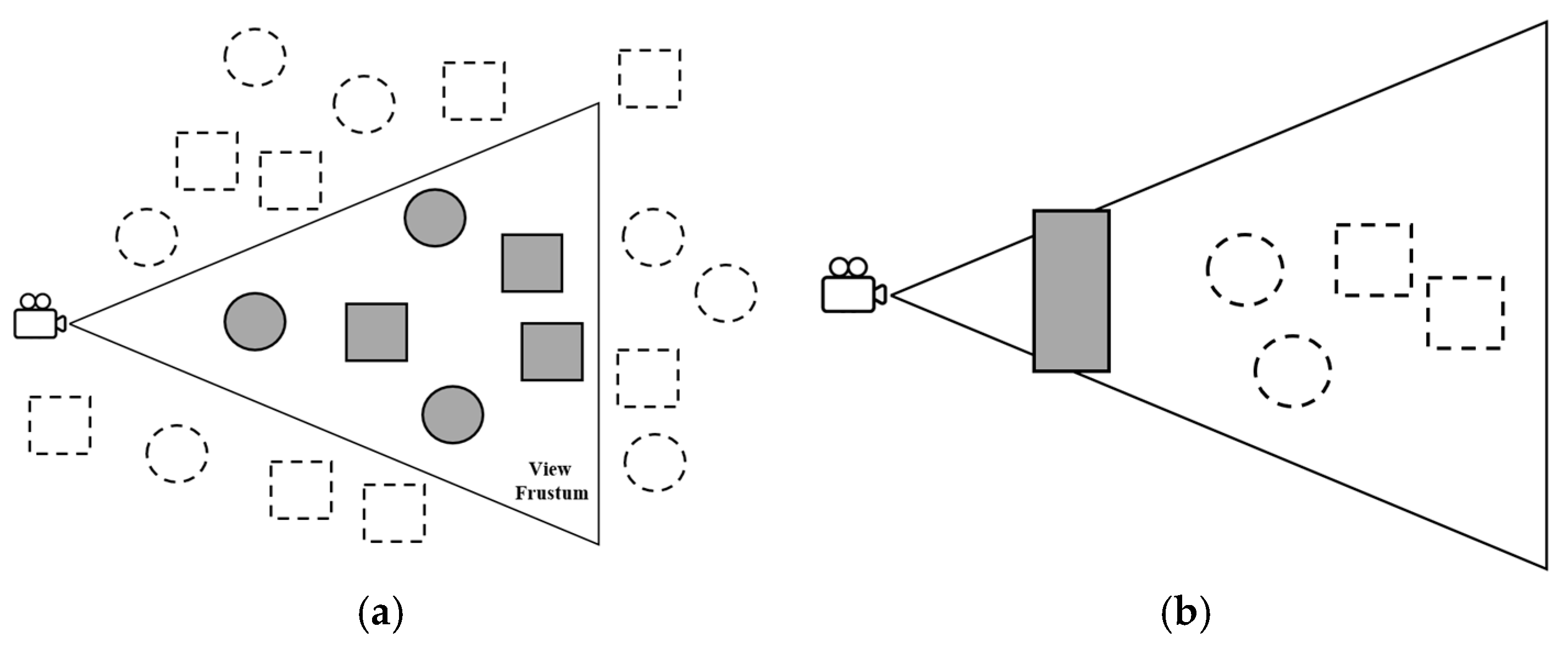

Recently, object culling techniques have emerged as essential technologies in Metaverse platforms, including game engines [3], to accelerate the rendering of various objects in real-time. Object culling is a technique that removes or simplifies objects that are not visible on the screen to minimize unnecessary rendering tasks. For example, view frustum culling (VFC) [4] and occlusion culling [5], shown in Figure 1, are representative object culling techniques. VFC restricts rendering to the objects that lie within the view frustum. The view frustum is constructed from information such as the camera position, field of view, and aspect ratio, and the objects that fall outside it are selected for culling. Because these objects do not need to be rendered, rendering performance improves.

Figure 1. Examples of (a) VFC and (b) Occlusion Culling.
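As a rough illustration of VFC, the sketch below builds six frustum planes from a field of view, aspect ratio, and near/far distances, then tests bounding spheres against them. It is a minimal sketch under stated assumptions, not the implementation of any cited work: the camera is assumed to look down the -Z axis with a fixed orientation, and the names `frustum_planes` and `sphere_in_frustum` are hypothetical.

```python
import math

def frustum_planes(fov_y_deg, aspect, near, far):
    """Inward-facing planes (nx, ny, nz, d) of a frustum in camera space.

    Assumes the camera sits at the origin and looks down -Z.
    A point p is on the inner side of a plane when n.p + d >= 0.
    """
    h = math.tan(math.radians(fov_y_deg) / 2.0)  # half-height at unit depth
    w = h * aspect                               # half-width at unit depth
    sw = math.hypot(1.0, w)                      # normalisation for side planes
    sh = math.hypot(1.0, h)
    return [
        (1.0 / sw, 0.0, -w / sw, 0.0),   # left
        (-1.0 / sw, 0.0, -w / sw, 0.0),  # right
        (0.0, 1.0 / sh, -h / sh, 0.0),   # bottom
        (0.0, -1.0 / sh, -h / sh, 0.0),  # top
        (0.0, 0.0, -1.0, -near),         # near
        (0.0, 0.0, 1.0, far),            # far
    ]

def sphere_in_frustum(center, radius, cam_pos, planes):
    """Return False only when the bounding sphere is fully outside one plane."""
    # Translate into camera space (camera orientation is fixed in this sketch).
    cx, cy, cz = (c - p for c, p in zip(center, cam_pos))
    for nx, ny, nz, d in planes:
        if nx * cx + ny * cy + nz * cz + d < -radius:
            return False  # entirely outside this plane: cull
    return True  # inside or intersecting: keep for rendering

planes = frustum_planes(fov_y_deg=90.0, aspect=1.0, near=0.1, far=100.0)
cam = (0.0, 0.0, 0.0)
objects = {
    "ahead": ((0.0, 0.0, -10.0), 1.0),
    "behind": ((0.0, 0.0, 10.0), 1.0),
    "far_right": ((50.0, 0.0, -10.0), 1.0),
}
visible = [name for name, (c, r) in objects.items()
           if sphere_in_frustum(c, r, cam, planes)]
```

The test is conservative: a sphere that merely touches the frustum is kept, so no visible object is removed by mistake.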

Occlusion culling, which is based on the same principle as VFC, selectively excludes objects that are within the viewer’s field of view but obscured by other objects, reducing unnecessary computations. To achieve this, the objects within the viewer’s field of view are rendered in advance to create a depth buffer, which is then used to determine which objects to exclude from rendering. Collision detection [6] or rasterization techniques [7] can be used to determine which objects are not visible.
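The depth-buffer test described above can be sketched in software as follows. This is only an illustrative stand-in for what a GPU does: occluders and objects are reduced to hypothetical screen-space rectangles `(x0, y0, x1, y1)` with a single depth value, and smaller depth means closer to the viewer.

```python
def make_depth_buffer(width, height):
    """Depth buffer cleared to 'infinitely far'."""
    return [[float("inf")] * width for _ in range(height)]

def draw_occluder(depth, rect, z):
    """Write an occluder's depth into every pixel it covers (keep the nearest)."""
    x0, y0, x1, y1 = rect  # upper bounds are exclusive
    for y in range(y0, y1):
        for x in range(x0, x1):
            depth[y][x] = min(depth[y][x], z)

def is_occluded(depth, rect, z_near):
    """An object is hidden only if every covered pixel already holds
    something strictly closer than the object's nearest depth."""
    x0, y0, x1, y1 = rect
    return all(depth[y][x] < z_near
               for y in range(y0, y1)
               for x in range(x0, x1))

depth = make_depth_buffer(8, 8)
draw_occluder(depth, (2, 2, 6, 6), z=5.0)            # a wall at depth 5

hidden = is_occluded(depth, (3, 3, 5, 5), z_near=8.0)    # behind the wall
in_front = is_occluded(depth, (3, 3, 5, 5), z_near=3.0)  # closer than the wall
peeking = is_occluded(depth, (5, 5, 7, 7), z_near=8.0)   # partly outside the wall
```

Only the first object is reported as occluded; the other two must still be rendered because at least one of their pixels is not covered by a closer occluder.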

Object culling can significantly reduce rendering time as the number of excluded objects increases, making it a useful technique in large Metaverse applications where many people are interacting. However, it does not always improve performance. When most objects are not culled, the time spent selecting the objects to cull is added to the rendering work, which can actually decrease rendering performance.
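This trade-off can be made concrete with a deliberately simple cost model. The model is an assumption introduced here for illustration, not a measurement: every object costs a fixed `test_cost` to check and a fixed `render_cost` to draw, both in arbitrary integer time units.

```python
def frame_cost(n_objects, n_culled, test_cost, render_cost, use_culling=True):
    """Total frame cost under a linear model (hypothetical units)."""
    if not use_culling:
        return n_objects * render_cost
    # Every object is tested, but only the survivors are rendered.
    return n_objects * test_cost + (n_objects - n_culled) * render_cost

baseline = frame_cost(1000, 0, test_cost=1, render_cost=10, use_culling=False)
few_culled = frame_cost(1000, 50, test_cost=1, render_cost=10)
many_culled = frame_cost(1000, 500, test_cost=1, render_cost=10)
break_even = frame_cost(1000, 100, test_cost=1, render_cost=10)
```

Under this model, culling pays off only when the fraction of culled objects exceeds `test_cost / render_cost`. With the numbers above that threshold is 10%, so culling 50 of 1000 objects is slower than not culling at all, while culling 500 is clearly faster.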

A common way to perform object culling is to inspect every object and select those to use in the next frame. However, this is highly inefficient, so hierarchical search methods based on bounding volume hierarchies have been proposed. HROC (Hierarchical Raster Occlusion Culling) [8] is a method that performs occlusion culling using hierarchical data structures on the GPU, reducing the computational time required to identify objects that do not need to be rendered. However, this method also becomes slower when the number of objects to be processed by the GPU reaches its limit, to the point where it may take longer than rendering without object culling.
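The idea behind hierarchical search can be sketched with a tiny bounding volume hierarchy. This is a generic illustration, not HROC: the view volume is simplified to an axis-aligned box, and `Node`, `leaf`, `group`, and `collect_visible` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Node:
    lo: Vec3                      # minimum corner of the bounding box
    hi: Vec3                      # maximum corner of the bounding box
    children: List["Node"] = field(default_factory=list)
    name: Optional[str] = None    # set only on leaves (actual objects)

def leaf(name, x):
    """Unit cube whose minimum corner is at (x, 0, 0)."""
    return Node((x, 0.0, 0.0), (x + 1.0, 1.0, 1.0), name=name)

def group(children):
    """Inner node whose box encloses all of its children."""
    lo = tuple(min(c.lo[i] for c in children) for i in range(3))
    hi = tuple(max(c.hi[i] for c in children) for i in range(3))
    return Node(lo, hi, children=list(children))

def overlaps(lo_a, hi_a, lo_b, hi_b):
    return all(la <= hb and lb <= ha
               for la, ha, lb, hb in zip(lo_a, hi_a, lo_b, hi_b))

def collect_visible(node, view_lo, view_hi, out, stats):
    stats["tests"] += 1
    if not overlaps(node.lo, node.hi, view_lo, view_hi):
        return  # the whole subtree is rejected with a single test
    if node.name is not None:
        out.append(node.name)
    for child in node.children:
        collect_visible(child, view_lo, view_hi, out, stats)

near_group = group([leaf("a", 0.0), leaf("b", 2.0)])
far_group = group([leaf(n, x) for n, x in
                   [("c", 10.0), ("d", 12.0), ("e", 14.0), ("f", 16.0)]])
root = group([near_group, far_group])

visible, stats = [], {"tests": 0}
collect_visible(root, (-1.0, -1.0, -1.0), (2.5, 2.0, 2.0), visible, stats)
```

In this toy scene the four distant objects are rejected by one test on their parent node, so five box tests replace the six that a flat scan would need. The saving grows with the size of the rejected subtrees and disappears when little can be rejected.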

2. Vertex Chunk-Based Object Culling

Object culling is an essential technique in real-time rendering that efficiently accelerates the rendering process by removing objects that do not affect the final rendering result. With various hardware-based features added to recent GPUs, these techniques are being more effectively utilized. Generally, scenes are most affected by viewing conditions, so the selection process of objects to cull is crucial and dependent on the viewing condition. VFC is a typical example that utilizes the viewing condition. This technique selects only the objects within the field of view to render, effectively culling objects outside the field of view to improve rendering performance. Typically, objects are rendered if their bounding volume falls within the view frustum.

The geometry shader [9] is a GPU pipeline stage [10] that enables the selection and culling of geometric data during on-line processing. Using this stage, the VFC technique [11][12] can efficiently remove objects outside the field of view in a single pass. However, this technique cannot select and remove objects that lie in areas hidden by occluders. Occlusion culling is a method of selecting and culling the objects located in these hidden areas.

Recent GPUs perform fragment-level culling effectively by using techniques such as the early depth test (early Z) [7] or Hierarchical-Z (Hi-Z) [13] to remove unnecessary fragment operations on the hidden parts of an object. However, these methods cannot perform culling at the object level, so a draw call must still be issued for every object, which is a disadvantage.

To select objects more efficiently, techniques using depth buffers with a hierarchical structure have been researched [14][15][16]. These techniques quickly find the areas hidden by occluders, and then select and cull the objects located in these areas. Because they use a low-resolution depth buffer, they reduce the amount of computation and solve the problems of existing object-level rendering techniques. However, they require a two-pass rendering process.
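One common way to obtain such a low-resolution depth buffer is a depth pyramid in which each coarser texel stores the farthest depth of the 2×2 block beneath it. The sketch below illustrates that general idea only; it is not taken from the cited works, it assumes a square power-of-two buffer, and the function names are hypothetical.

```python
def downsample_max(depth):
    """Halve the resolution, keeping the farthest (largest) depth per 2x2 block."""
    h, w = len(depth), len(depth[0])
    return [[max(depth[2 * y][2 * x], depth[2 * y][2 * x + 1],
                 depth[2 * y + 1][2 * x], depth[2 * y + 1][2 * x + 1])
             for x in range(w // 2)]
            for y in range(h // 2)]

def build_pyramid(depth):
    """Level 0 is the full-resolution buffer; each next level is half the size."""
    levels = [depth]
    while len(levels[-1]) > 1:
        levels.append(downsample_max(levels[-1]))
    return levels

def occluded_at_level(levels, level, rect, z_near):
    """Conservative test of a full-resolution rect (exclusive upper bounds)
    against the coarse texels that cover it."""
    scale = 1 << level
    x0, y0, x1, y1 = rect
    tx0, ty0 = x0 // scale, y0 // scale
    tx1, ty1 = (x1 - 1) // scale, (y1 - 1) // scale
    texels = levels[level]
    return all(texels[ty][tx] < z_near
               for ty in range(ty0, ty1 + 1)
               for tx in range(tx0, tx1 + 1))

# 4x4 buffer covered by an occluder at depth 5, with one uncovered pixel.
depth = [[5.0] * 4 for _ in range(4)]
depth[3][3] = float("inf")
levels = build_pyramid(depth)

covered = occluded_at_level(levels, 1, (0, 0, 2, 2), z_near=8.0)
near_hole = occluded_at_level(levels, 1, (2, 2, 4, 4), z_near=8.0)
coarsest = occluded_at_level(levels, 2, (0, 0, 2, 2), z_near=8.0)
```

Storing the farthest depth keeps the test safe: a coarse texel can only report an object as hidden if every fine pixel beneath it is closer than the object. The price is that coarser levels reject fewer objects, as the last query shows.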

To quickly select objects in a single pass, techniques using bounding volumes are available. These techniques speed up the creation of the depth buffer by using bounding volumes such as boxes, spheres, and k-DOPs [12][17][18]. Since they effectively reduce the geometric data of objects that use high-resolution meshes, these techniques can resolve the bottleneck that occurs in the rasterization stage when creating the first depth buffer in scenes with many objects.
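The three kinds of bounding volume mentioned above can each be computed from a mesh's vertices in a few lines. The following is a minimal sketch with hypothetical function names; the sphere is centred on the box centre for simplicity, which is valid but not necessarily the smallest enclosing sphere.

```python
import math

def aabb(points):
    """Axis-aligned bounding box as (min corner, max corner)."""
    lo = tuple(min(p[i] for p in points) for i in range(3))
    hi = tuple(max(p[i] for p in points) for i in range(3))
    return lo, hi

def bounding_sphere(points):
    """Sphere centred on the AABB centre that encloses every point."""
    lo, hi = aabb(points)
    center = tuple((l + h) / 2.0 for l, h in zip(lo, hi))
    radius = max(math.dist(center, p) for p in points)
    return center, radius

def kdop(points, directions):
    """k-DOP as one (min, max) slab per direction; k = 2 * len(directions)."""
    slabs = []
    for d in directions:
        proj = [sum(a * b for a, b in zip(d, p)) for p in points]
        slabs.append((min(proj), max(proj)))
    return slabs

# Vertices of a tetrahedron standing in for a high-resolution mesh.
verts = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 2.0)]

box = aabb(verts)
center, radius = bounding_sphere(verts)
slabs = kdop(verts, [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)])
```

The extra diagonal direction is what makes a k-DOP tighter than a box: along (1, 1, 1) the tetrahedron spans only 0 to 2, whereas the corners of its bounding box span 0 to 6.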

Methods that use hierarchical structures, such as Bounding Volume Hierarchy [19], have been proposed to efficiently perform occlusion calculations in larger scenes with more objects. Among these methods, research that utilizes temporal coherence [8][20] proposes to update the hierarchy only for objects whose positions have changed since the previous frame, which reduces the cost of updating the hierarchy for objects that have not moved.
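The temporal-coherence idea can be illustrated with a small cache that recomputes a bounding box only when an object's position differs from the previous frame. This is a simplified, flat sketch rather than a full hierarchy update, and the class `CoherentBounds` and its fixed `radius` are assumptions made for the example.

```python
class CoherentBounds:
    """Caches per-object boxes and refreshes only the ones that moved."""

    def __init__(self, radius):
        self.radius = radius
        self.prev = {}    # object id -> position seen in the last frame
        self.boxes = {}   # object id -> cached (min corner, max corner)

    def update(self, positions):
        """Refresh boxes for new or moved objects; return the ids refreshed."""
        refreshed = []
        r = self.radius
        for oid, pos in positions.items():
            if self.prev.get(oid) != pos:
                self.boxes[oid] = (tuple(c - r for c in pos),
                                   tuple(c + r for c in pos))
                self.prev[oid] = pos
                refreshed.append(oid)
        return refreshed

cache = CoherentBounds(radius=1.0)

frame1 = {"tree": (0.0, 0.0, 0.0), "car": (5.0, 0.0, 0.0), "house": (9.0, 0.0, 0.0)}
first = cache.update(frame1)

# Only the car moves between the two frames.
frame2 = dict(frame1, car=(6.0, 0.0, 0.0))
second = cache.update(frame2)
```

In the second frame only the moved object is touched, so the update cost scales with the number of moving objects rather than with the size of the scene. A hierarchical version would additionally refit the ancestors of each moved leaf.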

With the advancement of game engines [21] used to build the metaverse, various fields have started utilizing object culling techniques. In metaverses based on real-world environments such as interior areas [22], acceleration of lighting processing is essential. Therefore, research is ongoing to integrate occlusion culling techniques with lighting rendering algorithms. The Particle-k-d tree [23] is a method that performs probabilistic occlusion culling based on ray tracing, and it has achieved speed improvements for fixed viewpoints. In the field of lighting, research has also been conducted on methods for culling rays that do not intersect with objects in order to achieve real-time lighting for dynamic entities [24], not just objects.

For molecular-level simulations, such as fluid dynamics, a method has been proposed that divides particles into groups to define occluders for particle-level occlusion culling [5]. This method improves the performance of particle-based rendering by grouping particles and removing some of them, instead of rendering every particle. It is difficult to generate accurate geometric data for particles such as fluids, and occlusion culling is only possible when particle positions align perfectly at the pixel level and each particle is opaque. Performing such calculations for a massive number of particles is inefficient, so grouping them makes it possible to define occludees for spatial regions, which allows the particle data required for rendering to be culled and selected more simply and quickly than before.

In the holography domain, performance improvement methods utilizing occlusion culling have also been proposed [25][26]. Computer-generated holography uses point sources as its unit of visualization. These point sources, which represent color units in the spatial domain for hologram creation, define occludees in spatial regions through spatial data structures such as octrees. Because the optical nature of reconstructing computer-generated holographic images allows calculations for specific regions to be reduced, efficient spatial-level operations can be achieved for hologram devices.

In the modern metaverse environment, where realistic images need to be rendered in real-time on mobile platforms, it is advantageous to create accurate occluders that precisely hide objects. Therefore, instead of methods [27] that reduce power consumption by minimizing computations through occlusion culling, an approach [28] has been proposed to automatically place occluders in locations where occlusion occurs frequently. This method places low-resolution occluders in crucial locations, allowing exclusion of high-resolution meshes from rendering calculations, thus efficiently reducing computations.

References

- Dionisio, J.D.N.; Burns, W.G., III; Gilbert, R. 3D Virtual Worlds and the Metaverse: Current Status and Future Possibilities. ACM Comput. Surv. 2013, 45, 1–38.

- Gaafar, A.A. Metaverse In Architectural Heritage Documentation & Education. Adv. Ecol. Environ. Res. 2021, 6, 66–86.

- Gregory, J. Game Engine Architecture, 3rd ed.; CRC Press: Boca Raton, FL, USA, 2018; ISBN 978-1-351-97427-1.

- Lee, E.-S.; Lee, J.-H.; Shin, B.-S. Bimodal Vertex Splitting: Acceleration of Quadtree Triangulation for Terrain Rendering. IEICE Trans. Inf. Syst. 2014, E97-D, 1624–1633.

- Ibrahim, M.; Rautek, P.; Reina, G.; Agus, M.; Hadwiger, M. Probabilistic Occlusion Culling Using Confidence Maps for High-Quality Rendering of Large Particle Data. IEEE Trans. Vis. Comput. Graph. 2022, 28, 573–582.

- System for Collision Detection Between Deformable Models Built on Axis Aligned Bounding Boxes and GPU Based Culling-ProQuest. Available online: https://www.proquest.com/openview/ee52f6ff878bc46c41bc5f5967089c25/1?pq-origsite=gscholar&cbl=18750&diss=y (accessed on 27 March 2023).

- Kubisch, C. OpenGL 4.4 Scene Rendering Techniques. NVIDIA Corp. 2014. Available online: https://on-demand.gputechconf.com/gtc/2014/presentations/S4379-opengl-44-scene-rendering-techniques.pdf (accessed on 27 March 2023).

- Lee, G.B.; Jeong, M.; Seok, Y.; Lee, S. Hierarchical Raster Occlusion Culling. Comput. Graph. Forum. 2021, 40, 489–495.

- Mukundan, R. The Geometry Shader. In 3D Mesh Processing and Character Animation: With Examples Using OpenGL, OpenMesh and Assimp; Mukundan, R., Ed.; Springer International Publishing: Cham, Switzerland, 2022; pp. 73–89. ISBN 978-3-030-81354-3.

- Haar, U.; Aaltonen, S. GPU-Driven Rendering Pipelines. SIGGRAPH Adv. Real-Time Render. Games Course 2015, 2, 4.

- Sunar, M.S.; Zin, A.M.; Sembok, T.M.T. Improved View Frustum Culling Technique for Real-Time Virtual Heritage Application. Int. J. Virtual Real. 2008, 7, 43–48.

- Assarsson, U.; Moller, T. Optimized View Frustum Culling Algorithms for Bounding Boxes. J. Graph. Tools 2000, 5, 9–22.

- Greene, N.; Kass, M.; Miller, G. Hierarchical Z-Buffer Visibility. In 20th Annual Conference on Computer Graphics and Interactive Techniques-SIGGRAPH ’93; ACM Press: New York, NY, USA, 1993; pp. 231–238.

- Klosowski, J.Y.; Silva, C.T. The Prioritized-Layered Projection Algorithm for Visible Set Estimation; IEEE: Piscataway, NJ, USA, 2000; Volume 6, pp. 108–123. Available online: https://ieeexplore.ieee.org/abstract/document/856993 (accessed on 1 May 2023).

- Ho, P.C.; Wang, W. Occlusion Culling Using Minimum Occluder Set and Opacity Map. In Proceedings of the 1999 IEEE International Conference on Information Visualization (Cat. No. PR00210), London, UK, 14–16 July 1999; pp. 292–300.

- Nießner, M.; Loop, C. Patch-Based Occlusion Culling for Hardware Tessellation; Computer Graphics International: Poole, UK, 2012; pp. 1–9.

- Fünfzig, C.; Fellner, D. Easy Realignment of K-DOP Bounding Volumes. Graph. Interface 2003, 3, 257–264.

- Siwei, H.; Baolong, L. Review of Bounding Box Algorithm Based on 3D Point Cloud. Int. J. Adv. Netw. Monit. Control. 2021, 6, 18–23.

- Zhang, H.; Manocha, D.; Hudson, T.; Hoff, K.E. Visibility Culling Using Hierarchical Occlusion Maps. In 24th Annual Conference on Computer Graphics and Interactive Techniques-SIGGRAPH ’97; ACM Press: New York, NY, USA, 1997; pp. 77–88.

- Coorg, S.; Teller, S. Temporally Coherent Conservative Visibility (Extended Abstract). In Proceedings of the Twelfth Annual Symposium on Computational Geometry, Philadelphia, PA, USA, 24–26 May 1996; pp. 78–87.

- Duanmu, X.; Lan, G.; Chen, K.; Shi, X.; Zhang, L. 3D Visual Management of Substation Based on Unity3D. In Proceedings of the 2022 4th International Academic Exchange Conference on Science and Technology Innovation (IAECST), Guangzhou, China, 9–11 December 2022; pp. 427–431.

- Oksanen, M. 3D Interior Environment Optimization for VR. Bachelor’s Thesis; Turku University of Applied Science. Available online: http://www.theseus.fi/handle/10024/788282 (accessed on 2 June 2023).

- Walloner, P. Acceleration of P-k-d Tree Traversal Using Probabilistic Occlusion. Bachelor’s Thesis, University of Stuttgart, Institute for Visualization and Interactive Systems, Stuttgart, Germany, 2022.

- Xu, Y.; Jiang, Y.; Zhang, J.; Li, K.; Geng, G. Real-Time Ray-Traced Soft Shadows of Environmental Lighting by Conical Ray Culling; Association for Computing Machinery: New York, NY, USA, 2022; Volume 5.

- Wang, F.; Ito, T.; Shimobaba, T. High-Speed Rendering Pipeline for Polygon-Based Holograms. Photonics Res. 2023, 11, 313–328.

- Martinez-Carranza, J.; Kozacki, T.; Kukołowicz, R.; Chlipala, M.; Idicula, M.S. Occlusion Culling for Wide-Angle Computer-Generated Holograms Using Phase Added Stereogram Technique. Photonics 2021, 8, 298.

- Corbalán-Navarro, D.; Aragón, J.L.; Anglada, M.; Parcerisa, J.-M.; González, A. Triangle Dropping: An Occluded-Geometry Predictor for Energy-Efficient Mobile GPUs. ACM Trans. Archit. Code Optim. 2022, 19, 39:1–39:20.

- Wu, K.; He, X.; Pan, Z.; Gao, X. Occluder Generation for Buildings in Digital Games. Comput. Graph. Forum 2022, 41, 205–214.

Subjects:

Computer Science, Theory & Methods

Update Date:

26 Jun 2023
