1. Application to Unmanned Vehicles and Robotics

Visual feedback was provided via a forward-facing camera placed on the hull of the drone. Brain activity was used to move the quadcopter through an obstacle course. The subjects were able to quickly pursue a series of foam ring targets by passing through them in a real-world environment. They obtained up to 90.5% of all valid targets through the course, and the movement was performed in an accurate and continuous way. The performance of such a system was quantified by using metrics suitable for asynchronous BCI. The results provide an affordable framework for the development of multidimensional BCI control in telepresence robotics. The study showed that BCI can be effectively used to accomplish complex control in a three-dimensional space. Such an application can be beneficial both to people with severe disabilities and in industrial environments. In fact, the authors of [1] faced problems related to typical control applications where the BCI acts as a controller that moves a simple object in a structured environment. Such a study follows previous research endeavors: the works in [2][3], which showed the ability of users to control the flight of a virtual helicopter with 2D control, and the work of [4], which demonstrated 3D control by leveraging a motor imagery paradigm with intelligent control strategies.

Applications on different autonomous robots are under investigation. For example, the study of [5] proposed a new humanoid navigation system directly controlled through an asynchronous sensorimotor rhythm-based BCI system. Their approach allows for flexible robotic motion control in unknown environments using camera vision. The proposed navigation system includes a posture-dependent control architecture and is comparable with the previous mobile robot navigation system that depends on an agent-based model.

2. Application to “Smart Home” and Virtual Reality

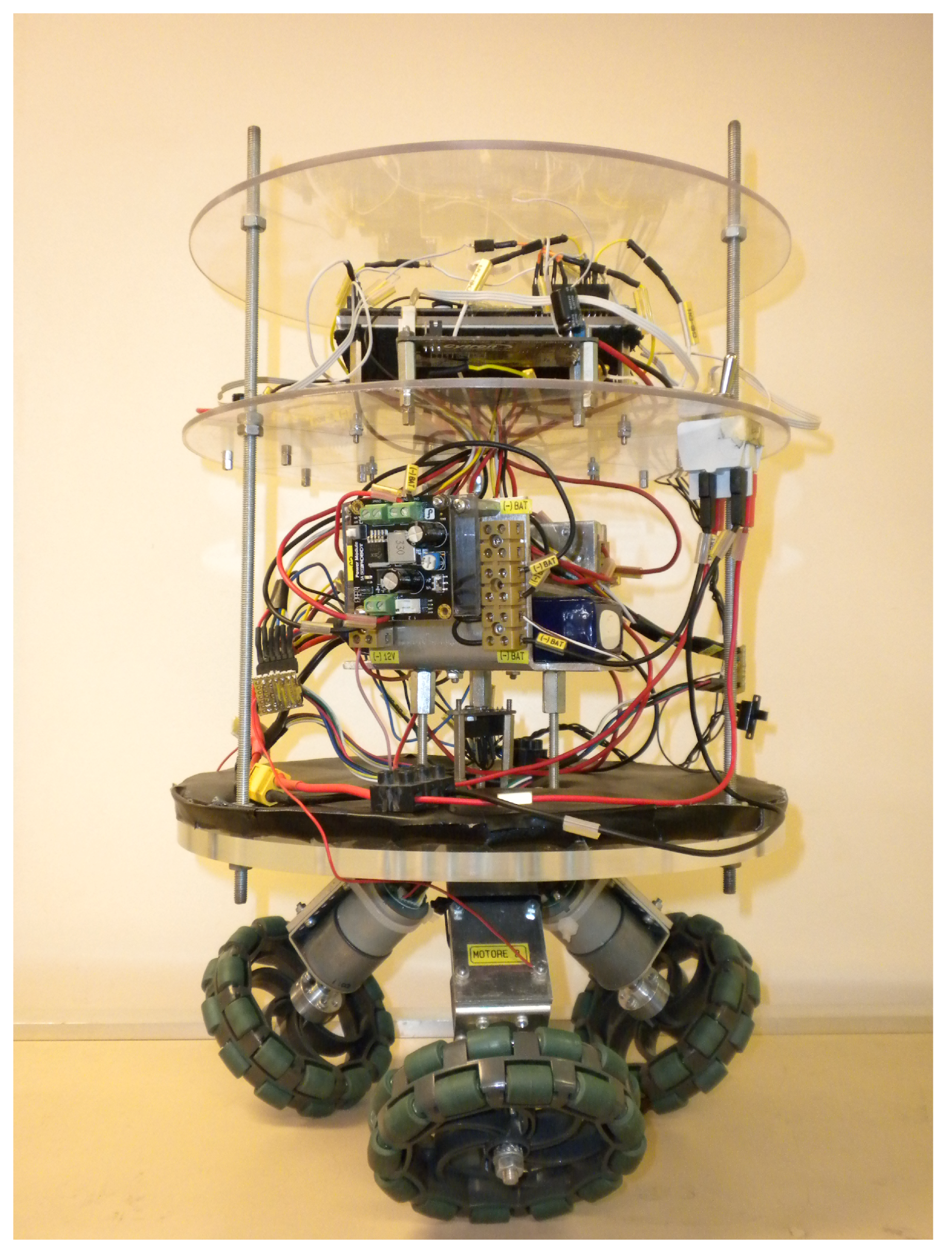

Applications of EEG-based brain–computer interfaces are emerging in “Smart Homes”. BCI technology can be used by disabled people to improve their independence and to maximize their residual capabilities at home. In recent years, novel BCI systems were developed to control home appliances. A prototypical three-wheel, small-sized robot for smart-home applications used to perform experiments is shown in Figure 1.

Figure 1. A prototypical three-wheel, small-sized robot for smart-home applications used to perform experiments (from the Department of Information Engineering at the Università Politecnica delle Marche).

The aim of the study [6] was to improve the quality of life of disabled people through BCI control systems during daily-life activities such as opening/closing doors, switching lights on and off, controlling televisions, using mobile phones, sending messages to people in their community, and operating a video camera. To accomplish such goals, the authors of the study [6] proposed a real-time wireless EEG-based BCI system based on a commercial EMOTIV EPOC headset. EEG signals were acquired by the EMOTIV EPOC headset and transmitted through a Bluetooth module to a personal computer. The received EEG data were processed by the software provided by EMOTIV, and the results were transmitted to the embedded system to control the appliances through a Wi-Fi module. A dedicated graphical user interface (GUI) was developed to detect a keystroke and to convert it to a predefined command.

In the studies of [7][8], the authors proposed the integration of the BCI technique with universal plug and play (UPnP) home networking for smart house applications. The proposed system can process EEG signals without transmitting them to back-end personal computers. Such flexibility, together with the low power consumption, the small-volume wireless physiological signal acquisition modules, and the embedded signal processing modules, makes this technology suitable for various kinds of smart applications in daily life.

The study of [9] evaluated the performance of an EEG-based BCI system to control smart home applications with high accuracy and high reliability. In said study, a P300-based BCI system was connected to a virtual reality system that can be easily reconfigured and therefore constitutes a favorable testing environment for real smart homes for disabled people. The authors of [10] proposed an implementation of a BCI system for controlling wheelchairs and electric appliances in a smart house to assist the daily-life activities of its users. Tests were performed by a subject, achieving satisfactory results.

Virtual reality concerns human–computer interaction, where the signals extracted from the brain are used to interact with a computer. With advances in the interaction with computers, new applications have appeared: video games [11] and virtual reality developed with noninvasive techniques [12][13].

3. Application to Mobile Robotics and Interaction with Robotic Arms

The EEG signals of a subject can be recorded and processed appropriately in order to differentiate between several cognitive processes or “mental tasks”. BCI-based control systems use such mental activity to generate control commands for a device, a robot arm, or a wheelchair [14][15]. As previously stated, BCIs are systems that can bypass conventional channels of communication (i.e., muscles and speech) to provide direct communication and control between the human brain and physical devices by translating different patterns of brain activity into commands in real time. This kind of control can be successfully applied to support people with motor disabilities to improve their quality of life, to enhance their residual abilities, or to replace lost functionality [16]. For example, with regard to individuals affected by neurological disabilities, the operation of an external robotic arm to facilitate handling activities could take advantage of these new communication modalities between humans and physical devices [17]. Some functions, such as those connected with the ability to select items on a screen by moving a cursor in a three-dimensional scene, are straightforward using BCI-based control [18][19]. However, a more sophisticated control strategy is required to accomplish control tasks at more complex levels because most external effectors (mechanical prosthetics, motor robots, and wheelchairs) possess more degrees of freedom. Moreover, a major feature of brain-controlled mobile robotic systems is that these mobile robots require higher safety since they are used to transport disabled people [16]. In BCI-based control, EEG signals are translated into user intentions.

In synchronous protocols, P300- and SSVEP-based BCIs, which rely on external stimulation, are usually adopted. For asynchronous protocols, interfaces based on event-related desynchronization (ERD) and event-related synchronization (ERS), which are independent of external stimuli, are used. In fact, since asynchronous BCIs do not require any external stimulus, they appear more suitable and natural for brain-controlled mobile robots, where users need to focus their attention on driving the robot rather than on external stimuli.

Another aspect is related to two different operational modes that can be adopted in brain-controlled mobile robots [16]. One category is called “direct control by the BCI”, which means that the BCI translates EEG signals into motion commands to control robots directly. This method is computationally less complex and does not require additional intelligence. However, the overall performance of these brain-controlled mobile robots mainly depends on the performance of noninvasive BCIs, which are currently slow and uncertain [16]. In other words, the performance of the BCI systems limits that of the robots. In the second category of brain-controlled robots, a shared control was developed, where a user (using a BCI) and an intelligent controller (such as an autonomous navigation system) share the control over the robots. In this case, the performance of the robots depends on their intelligence. Thus, the safety of driving these robots can be better ensured, and even the accuracy of intention inference of the users can be improved. This kind of approach is less demanding for the users, but their reduced effort translates into higher computational cost. The use of sensors (such as laser sensors) is often required.
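The difference between the two operational modes can be illustrated with a minimal sketch. The names (`Command`, `direct_control`, `shared_control`, `SAFE_DISTANCE_M`) and the 0.5 m threshold are hypothetical and are not taken from [16]; the intelligent controller is reduced here to a single range-sensor check.

```python
from enum import Enum


class Command(Enum):
    FORWARD = "forward"
    LEFT = "left"
    RIGHT = "right"
    STOP = "stop"


# Hypothetical safety threshold for the range sensor, in metres.
SAFE_DISTANCE_M = 0.5


def direct_control(bci_command: Command) -> Command:
    """Direct control: the decoded BCI command is executed unchanged."""
    return bci_command


def shared_control(bci_command: Command, obstacle_distance_m: float) -> Command:
    """Shared control: the controller overrides a forward command
    when the range sensor reports an obstacle that is too close."""
    if bci_command is Command.FORWARD and obstacle_distance_m < SAFE_DISTANCE_M:
        return Command.STOP
    return bci_command
```

In the direct mode a misclassified forward command reaches the motors unchanged, whereas in the shared mode the sensor check can veto it, at the price of extra sensing and computation.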

4. Application to Robotic Arms, Robotic Tele-Presence, and Electrical Prosthesis

Manipulator control requires more accuracy in reaching spatial targets than the control of wheelchairs and other devices. Control of the movement of a cursor in a three-dimensional scene is the most significant pattern in BCI-based control studies [20][21]. EEG changes, normally associated with left-hand, right-hand, or foot movement imagery, can be used to control cursor movement [21].

Several research studies [15][22][23][24][25][26] presented applications aimed at the control of a robot or a robotic arm to assist people with severe disabilities in a variety of tasks in their daily life. In most cases, the focus of these papers is on the different methods adopted to classify the action that the robot arm has to perform with respect to the mental activity recorded by the BCI. In the contributions [15][22], a brain–computer interface is used to control a robot’s end-effector to achieve a desired trajectory or to perform pick/place tasks. The authors use an asynchronous protocol and a new LDA-based classifier to differentiate between three mental tasks. In [22], in particular, the system uses radio-frequency identification (RFID) to automatically detect objects that are close to the robot. A simple visual interface with two choices, “move” and “pick/place”, allows the user to pick/place the objects or to move the robot. The same approach is adopted in the research framework described in [24], where the user has to concentrate his/her attention on the required option in order to execute the action visualized on the menu screen. In the work of [23], an interactive, noninvasive, synchronous BCI system is developed to control a manipulator with several degrees of freedom throughout a three-dimensional workspace.

In the work of [27], which uses a robot-assisted upper-limb rehabilitation system, the patient’s intention is translated into direct control of the rehabilitation robot. The acquired signal is processed (through wavelet transform and LDA) to classify the pattern of left- and right-upper-limb motor imagery. Finally, a personal computer triggers the upper-limb rehabilitation robot to perform motor therapy and provides virtual feedback.

In the study of [28], the authors showed how BCI-based control of a robot moving in a user’s home can be successfully achieved after a training period. P300 is used in [26] to discern which object the robot should pick up and which location the robot should take the object to. The robot is equipped with a camera to frame objects. The user is instructed to attend to the image of the object, while the border around each image is flashed in a random order. A similar procedure is used to select a destination location. From a communication viewpoint, the approach provides cues in a synchronous way. The research study [5] deals with a similar approach but with an asynchronous BCI-based direct-control system for humanoid robot navigation. The experimental procedures consist of offline training, online feedback testing, and real-time control sessions. Five healthy subjects navigated a humanoid robot to a target in an indoor maze by using their EEGs, based on real-time images obtained from a camera on the head of the robot.
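The P300-based selection procedure described above for [26] (borders flashed in random order, the attended item inferred from the responses) can be sketched as follows. This is only an illustration under stated assumptions: the per-flash score is assumed to come from some P300 classifier that is not modeled here, and the function names and the simulated scores are hypothetical.

```python
import random
from collections import defaultdict
from statistics import mean


def flash_sequence(items, repetitions, rng):
    """Return a flash order in which every item appears `repetitions`
    times, shuffled independently within each repetition."""
    order = []
    for _ in range(repetitions):
        block = list(items)
        rng.shuffle(block)
        order.extend(block)
    return order


def select_target(flashes, scores):
    """Average the per-flash scores of each item and return the item
    with the highest mean score (the presumed attended item)."""
    per_item = defaultdict(list)
    for item, score in zip(flashes, scores):
        per_item[item].append(score)
    return max(per_item, key=lambda item: mean(per_item[item]))


# Simulated session: the attended item ("book") is given a larger score.
rng = random.Random(0)
flashes = flash_sequence(["cup", "book", "phone"], repetitions=5, rng=rng)
scores = [1.0 if item == "book" else 0.1 for item in flashes]
selected = select_target(flashes, scores)
```

Averaging over several repetitions is the usual reason such interfaces are slow: a single flash gives a noisy score, so the decision is delayed until enough flashes have been accumulated.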

Brain–computer interface-based control has also been adopted to manage hand or arm prostheses [29][30]. In such cases, patients were subjected to a training period, during which they learned to use their motor imagery. In particular, in the work described in [29], tetraplegic patients were trained to control the opening and closing of their paralyzed hand by means of an orthosis driven by EEG recorded over the sensorimotor cortex.

5. Application to Wheelchair Control and Autonomous Vehicles

Power wheelchairs are traditionally operated by a joystick. One or more switches change the function that is controlled by the joystick. Not all persons who could experience increased mobility by using a powered wheelchair possess the cognitive and neuromuscular capacity needed to navigate a dynamic environment with a joystick. For these users, a “shared” control approach coupled with an alternative interface is indicated. In a traditional shared control system, the assistive technology assists the user in path navigation. Shared control systems typically can work in several modes that vary the assistance provided (i.e., user autonomy) and rely on several movement algorithms. The authors of [31] suggest that shared control approaches can be classified in two ways: (1) mode changes triggered by the user via a button and (2) mode changes hard-coded to occur when specific conditions are detected.

Most of the current research related to BCI-based wheelchair control shows applications of synchronous protocols [32][33][34][35][36][37]. Although synchronous protocols showed high accuracy and safety [33], low response efficiency and inflexible path options can represent a limit for wheelchair control in a real environment.

Minimization of user involvement is addressed by the work in [31], through a novel semi-autonomous navigation strategy. Instead of requiring user control commands at each step, the robot proposes actions (e.g., turning left or going forward) based on environmental information. The subject may reject the action proposed by the robot if he/she disagrees with it. Given the rejection of the human subject, the robot takes a different decision based on the user’s intention. The system relies on the automatic detection of interesting navigational points and on a human–robot dialog aimed at inferring the user’s intended action.
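The propose-and-reject dialog described above can be sketched in a few lines. The function name `negotiate_action`, the ordering of the proposals, and the `user_rejects` callback (standing in for the BCI rejection signal) are assumptions made for illustration; they are not taken from [31].

```python
def negotiate_action(proposals, user_rejects):
    """Offer candidate actions in the robot's order of preference and
    return the first one the user does not reject, or None if every
    proposal is rejected."""
    for action in proposals:
        if not user_rejects(action):
            return action
    return None


# Example: the robot prefers going forward, but the user rejects it.
proposals = ["go forward", "turn left", "turn right"]
chosen = negotiate_action(proposals, user_rejects=lambda a: a == "go forward")
```

The user only has to issue a command when disagreeing with the robot, which is how this strategy reduces the number of BCI decisions per trip.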

The authors of the research work [32] used a discrete approach for the navigation problem, in which the environment is discretized and composed of two regions (rectangles of 1 m², one on the left and the other on the right of the start position), and the user decides where to move next by imagining left or right limb movements. In [33][34], a P300-based (slow-type) BCI is used to select the destination in a list of predefined locations. While the wheelchair moves on virtual guiding paths ensuring smooth, safe, and predictable trajectories, the user can stop the wheelchair by means of a faster BCI. In fact, the system switches between the fast and the slow BCIs depending on the state of the wheelchair. The paper [35] describes a brain-actuated wheelchair based on a synchronous P300 neurophysiological protocol integrated in a real-time graphical scenario builder, which incorporates advanced autonomous navigation capabilities (shared control). In the experiments, the task of the autonomous navigation system was to drive the vehicle to a given destination while also avoiding obstacles (both static and dynamic) detected by the laser sensor. The goal/location was provided by the user by means of a brain–computer interface.
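The switching between the slow and the fast BCI depending on the wheelchair state, as described above for [33][34], can be viewed as a small state machine. The sketch below is a simplified illustration; the state names, event strings, and functions are hypothetical and do not reproduce the cited implementation.

```python
from enum import Enum, auto


class WheelchairState(Enum):
    STOPPED = auto()
    MOVING = auto()


class BciMode(Enum):
    SLOW_P300 = auto()  # destination selection from a predefined list
    FAST_STOP = auto()  # quick stop command while the wheelchair moves


def active_bci(state):
    """Select which BCI is listened to in the given wheelchair state."""
    if state is WheelchairState.STOPPED:
        return BciMode.SLOW_P300
    return BciMode.FAST_STOP


def step(state, event):
    """Advance the wheelchair state on an event; events that are not
    meaningful in the current state are ignored."""
    if state is WheelchairState.STOPPED and event == "destination_selected":
        return WheelchairState.MOVING
    if state is WheelchairState.MOVING and event in ("stop", "arrived"):
        return WheelchairState.STOPPED
    return state
```

The design rationale is that destination selection tolerates a slow but accurate interface, while stopping must be fast, so each state enables only the interface suited to it.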

The contributions of [36][37] describe a BCI based on SSVEPs to control the movement of an autonomous robotic wheelchair. The signals used in this work come from individuals who are visually stimulated. The stimuli are black-and-white checkerboards flickering at different frequencies.
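Because each checkerboard flickers at its own frequency, an SSVEP interface can infer the attended stimulus by comparing the EEG power at the candidate frequencies. The following sketch shows one simple way to do this with a single-bin discrete Fourier sum; it is not the method of [36][37], and the frequencies, sampling rate, and synthetic test signal are assumptions chosen purely for illustration.

```python
import math


def power_at(signal, freq_hz, sample_rate_hz):
    """Power of `signal` at one frequency, from a single-bin Fourier sum."""
    re = sum(x * math.cos(2 * math.pi * freq_hz * n / sample_rate_hz)
             for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
             for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal) ** 2


def detect_ssvep(signal, candidate_freqs_hz, sample_rate_hz):
    """Return the candidate flicker frequency with the largest power."""
    return max(candidate_freqs_hz,
               key=lambda f: power_at(signal, f, sample_rate_hz))


# Synthetic 2 s signal sampled at 250 Hz containing a 10 Hz oscillation
# (hypothetical values, not recorded EEG).
fs = 250
signal = [math.sin(2 * math.pi * 10 * n / fs) for n in range(2 * fs)]
detected = detect_ssvep(signal, [8, 10, 12, 15], fs)
```

Real recordings are noisy and multichannel, so practical systems typically add filtering and more robust detectors; the sketch only conveys the frequency-tagging principle.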

Asynchronous protocols have been suggested for BCI-based wheelchair control in [38][39][40]. The authors of [38] used beta oscillations in the EEG elicited by imagination of movements of a paralysed subject for a self-paced asynchronous BCI control. The subject, immersed in a virtual street populated with avatars, was asked to move among the avatars toward the end of the street, to stop by each avatar, and to talk to them. In the experiments described in [39], a human user performs path planning and fully controls a wheelchair except for automatic obstacle avoidance based on a laser range finder. In the experiments reported in [40], two human subjects were asked to mentally drive both a real and a simulated wheelchair from a starting point to a goal along a prespecified path.

Several recent papers describe BCI applications where wheelchair control is multidimensional. In fact, it appears that control commands from a single modality are not enough to meet the criteria of multidimensional control. The combination of different EEG signals can be adopted to give multiple (simultaneous or sequential) control commands. The authors of [41][42] showed that hybrid EEG signals, such as SSVEP and motor imagery, could improve the classification accuracy of brain–computer interfaces. The authors of [43][44] adopted the combination of P300 potential and MI or SSVEP to control a brain-actuated wheelchair. In this case, multidimensional control (direction and speed) is provided by multiple commands. In the paper of [45], the authors proposed a hybrid BCI system that combines MI and SSVEP to control the speed and direction of a wheelchair synchronously. In this system, the direction of the wheelchair was given by left- and right-hand imagery. The idle state without mental activities was decoded to keep the wheelchair moving straight ahead. At the same time, SSVEP signals induced by gazing at specific flashing buttons were used to accelerate or decelerate the wheelchair.

To make it easier for the reader to identify the references in this section, Table 1 summarizes the papers about BCI applications presented in each subsection.

Table 1. BCI applications to automation.

| Reference Paper | Application to Unmanned Vehicles and Robotics | Application to Mobile Robotics and Interaction with Robotic Arms | Application to Robotic Arms, Robotic Tele-Presence and Electrical Prosthesis | Application to Wheelchair Control and Autonomous Vehicles |
| --- | --- | --- | --- | --- |
| [46] | X | | | |
| [47] | X | | | |
| [48] | X | | | |
| [49] | X | | | |
| [50] | X | | | |
| [51] | X | | | |
| [52] | X | | | |
| [53] | X | | | |
| [54] | X | | | |
| [55] | X | | | |
| [56] | X | | | |
| [57] | X | | | |
| [11] | X | | | |
| [16] | X | | | |
| [58] | X | | | |
| [1] | X | | | |
| [2] | X | | | |
| [3] | X | | | |
| [4] | X | | | |
| [5] | X | | | |
| [14] | | X | | |
| [15] | | X | | |
| [16] | | X | | |
| [17] | | X | | |
| [18] | | X | | |
| [19] | | X | | |
| [20] | | | X | |
| [21] | | | X | |
| [15] | | | X | |
| [22] | | | X | |
| [23] | | | X | |
| [24] | | | X | |
| [25] | | | X | |
| [26] | | | X | |
| [27] | | | X | |
| [28] | | | X | |
| [29] | | | X | |
| [30] | | | X | |
| [31] | | | | X |
| [32] | | | | X |
| [33] | | | | X |
| [35] | | | | X |
| [36] | | | | X |
| [37] | | | | X |
| [38] | | | | X |
| [39] | | | | X |
| [41] | | | | X |
| [42] | | | | X |
| [43] | | | | X |
| [44] | | | | X |
| [45] | | | | X |