| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Kaziwa Saleh | -- | 4971 | 2023-04-04 02:29:10 |
| 2 | Kaziwa Saleh | -1539 word(s) | 3432 | 2023-04-04 02:48:11 |
| 3 | Camila Xu | Meta information modification | 3432 | 2023-04-04 08:13:32 |
| 4 | Camila Xu | Meta information modification | 3432 | 2023-04-24 07:52:46 |


The generative adversarial network (GAN) is a structured probabilistic model that consists of two networks: a generator, which captures the data distribution, and a discriminator, which decides whether a given sample comes from the real data distribution or from the generator. The two networks are trained as a two-player minimax game until the generator produces samples that resemble the real ones and the discriminator can no longer distinguish real samples from fake ones.

Although current computer vision systems come closer than ever to human-level understanding of the visible world, their performance degrades when objects are partially occluded. Because we live in a dynamic and complex environment, we encounter occluded objects more often than fully visible ones. Instilling amodal perception, the ability to perceive an object as a whole even when parts of it are hidden, into these vision systems is therefore crucial. Overcoming occlusion, however, is difficult and comes with its own challenges. GANs, in turn, are renowned for their generative power: starting from a random noise distribution, they produce data that approach samples drawn from the real data distribution.
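The adversarial game summarized at the beginning of this entry is usually written as the minimax objective `min_G max_D V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))]`. The stdlib-only Python sketch below is illustrative and is not taken from any of the surveyed works. It estimates V from discriminator outputs, shows the two players' losses, and, for a toy setting in which both the real data and the generator are unit-variance 1-D Gaussians (an assumption made purely for illustration), evaluates V under the optimal discriminator D*. All function names are hypothetical.

```python
import math
from typing import Sequence


def gan_value(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Sample estimate of V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    # The discriminator maximises V, i.e. minimises -V.
    return -gan_value(d_real, d_fake)


def generator_loss(d_fake: Sequence[float]) -> float:
    # The generator minimises V, and only the fake term depends on it.
    # This uses the common "non-saturating" substitute: minimise
    # -E[log D(G(z))] instead of E[log(1 - D(G(z)))].
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


def optimal_discriminator(p_data: float, p_g: float) -> float:
    # For a fixed generator, V is maximised by D*(x) = p_data(x) / (p_data(x) + p_g(x)).
    return p_data / (p_data + p_g)


def normal_pdf(x: float, mu: float, sigma: float = 1.0) -> float:
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def value_at_optimal_d(mu_g: float, mu_data: float = 0.0,
                       lo: float = -12.0, hi: float = 12.0, n: int = 4801) -> float:
    """V(D*, G) for the toy case p_data = N(mu_data, 1), p_g = N(mu_g, 1),
    approximated by a Riemann sum over [lo, hi]."""
    dx = (hi - lo) / (n - 1)
    total = 0.0
    for i in range(n):
        x = lo + i * dx
        pd, pg = normal_pdf(x, mu_data), normal_pdf(x, mu_g)
        s = pd + pg
        if s == 0.0:
            continue  # both densities underflowed; the contribution is negligible
        # pd / s is D*(x) and pg / s is 1 - D*(x); 0 * log 0 is taken as 0.
        if pd > 0.0:
            total += pd * math.log(pd / s) * dx
        if pg > 0.0:
            total += pg * math.log(pg / s) * dx
    return total


if __name__ == "__main__":
    # When the generator matches the data (mu_g = mu_data), D* = 1/2 everywhere
    # and V(D*, G) reaches its minimum, -log 4.
    for mu in (0.0, 1.0, 2.0):
        print(f"mu_g={mu}: V(D*, G) = {value_at_optimal_d(mu):.4f}")
```

In this toy setting the value under the optimal discriminator is lowest, about -1.3863 (that is, -log 4), when the generator mean equals the data mean, and it rises as the two distributions move apart. At that equilibrium D* outputs 1/2 everywhere, which is the sense in which the discriminator "can no longer distinguish" real from generated samples.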

1. Introduction

2. GAN in Amodal Completion

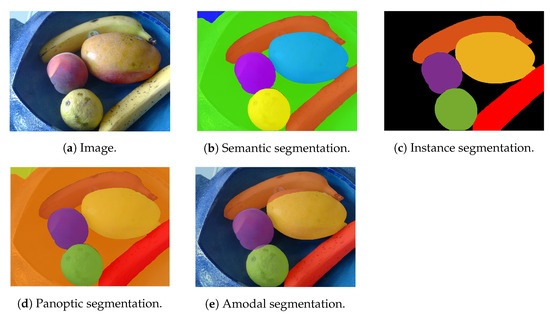

2.1. Amodal Segmentation

2.2. Order Recovery

2.3. Amodal Appearance Reconstruction

2.3.1. Generic Object Completion

2.3.2. Face Completion

2.3.3. Attribute Classification

2.3.4. Miscellaneous Applications

2.4. Training Data
