GAN is a structured probabilistic model that consists of two networks, a generator that captures the data distributions and a discriminator that decides whether the produced data come from the actual data distribution or from the generator. The two networks train in a two-player minimax game fashion until the generator can generate samples that are similar to the true samples, and the discriminator can no longer distinguish between the real and the fake samples.

Despite the various modifications, GAN is challenging to train and evaluate. However, due to its generative power and outstanding performance, it has a significantly large number of applications in computer vision, bio-metric systems, medical field, etc. In the challenging field of amodal completion, GAN has had significant impact because it can help in reconstructing and perceiving what is being occluded.

2. GAN in Amodal Completion

The taxonomy of the challenges in amodal completion is presented by Ao et al. [

13]. The following sections present how GAN has been used to address each challenge.

2.1. Amodal Segmentation

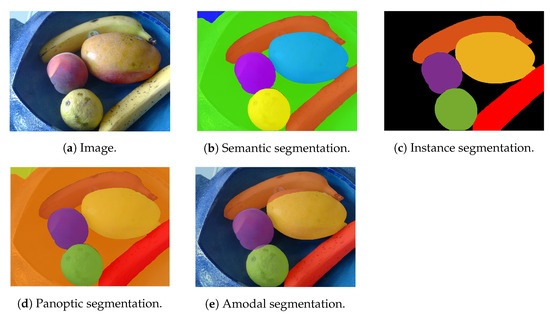

Image segmentation tasks such as semantic segmentation, instance segmentation, or panoptic segmentation solely predict the visible shape of the objects in a scene. Therefore, these tasks mainly operate with modal perception. Amodal segmentation, on the other hand, works with amodal perception. It estimates the shape of an object beyond the visible region, i.e., the visible mask (also called the modal mask) and the mask for the occluded region, from the local and the global visible visual cues (see

Figure 3).

Figure 3. Different types of image segmentation.

Amodal segmentation is rather challenging, especially if the occluder is of a different category (e.g., the occlusion between vehicles and pedestrians). The visible region may not hold sufficient information to help in determining the whole extent of the object. Contrariwise, if the occluder is an instance of the same category (e.g., occlusion between pedestrians), since the features of both objects are similar, it becomes difficult for the model to estimate where the boundary of one object ends and the second one begins. In either case, the visible region plays a significant role in guiding the amodal mask generation process. Therefore, most existing methods require the modal mask as input. To alleviate the need for a manually annotated modal mask, many works apply a pre-trained instance segmentation network to obtain the visible mask and utilize it as input.

One of the common architectures in amodal segmentation is coarse-to-fine (also called initial-to-refined) architecture, where the initial stage produces a coarse output from the input image, which is then further refined in the refinement step. The output of the second stage is evaluated by a single [

63] or multiple discriminators [

67]. For example in [

67], the authors implement an object discriminator, which uses a Stack-GAN structure [

68], to enforce the output mask to be similar to a real vehicle, and an instance discriminator with a standard GAN structure which aims at producing an output mask similar to the ground-truth mask.

To assist GAN in producing a better amodal mask, various techniques are implemented. Such as implementing an additional parsing branch to enforce semantic guidance of body parts of a human mask and improve the final amodal mask [

60]. Or implementing contextual attention layers [

65] to encourage the generator to concentrate on both the global contextual and local features [

63]. In addition to this, synthetic instances similar to the occluded object are useful, because they can be used as a reference by the model [

67]. A priori knowledge is also beneficial, such as utilizing various human poses for human deocclusion [

66] .

2.2. Order Recovery

In order to apply any de-occlusion or completion process, it is essential to determine the occlusion relationship and identify the depth order between the overlapping components of a scene. Other processes such as amodal segmentation and content completion depend on the predicted occlusion order to accomplish their tasks. Therefore, vision systems need to distinguish the occluders from the occludees, and to determine whether an occlusion exists between the objects. Order recovery is vital in many applications, such as semantic scene understanding, autonomous driving, and surveillance systems.

The existing approaches either implement a generator with a single discriminator to produce layered representation of the scene [

71] [

73] , or a generator with multiple discriminators [

69][

70]. The latter enforces inter-domain consistency [

69] and similarity of the predicted static and dynamic objects in the scene to the ground-truth representations [

70].

2.3. Amodal Appearance Reconstruction

Recently, there has been a significant progress in image inpainting methods, such as the works in [

65,

74]. However, these models recover the plausible content of a missing area with no knowledge about which object is involved in that part. On the contrary, amodal appearance reconstruction (also known as amodal content completion) models require identifying individual elements in the scene, and recognizing the partially visible objects along with their occluded areas, to predict the content for the invisible regions.

Therefore, the majority of the existing frameworks follow a multi-stage process to address the problem of amodal segmentation and amodal content completion as one problem. Therefore, they depend on the segmentator to infer the binary segmentation mask for the occluded and non-occluded parts of the object. The mask is then forwarded as input to the amodal completion module, which tries to fill in the RGB content for the missing region indicated by the mask.

Among the three sub-tasks of amodal completion, GAN is most widely used in amodal content completion. In this section, the usage of GAN in amodal content completion for a variety of computer vision applications is discussed.

2.3.1. Generic Object Completion

GANs are unable to estimate and learn the structure in the image implicitly with no additional information about the structures or annotations regarding the foreground and background objects during training. Therefore, Xiong et al. [

63] propose a model that is made up of a contour detection module, a contour completion module, and an image completion module. The first two modules learn to detect and complete the foreground contour. Then, the image completion module is guided by the completed contour to determine the position of the foreground and the background pixels. The experiments show that, under the guide of the contour completion, the model can generate completed images with less artifacts and complete objects with more natural boundaries. However, the model will fail to produce results without artifacts and color discrepancy around the holes due to implementing vanilla convolutions in extracting the features.

Therefore, Zhan et al. [

75] use CGAN and partial convolution [

62] to regenerate the content of the missing region. The authors apply the concept of partial completion to de-occlude the objects in an image. In the case of an object hidden by multiple other objects, the partial completion is performed by considering one object at a time. The model partially completes both the mask and the appearance of the object in question through two networks, namely Partial Completion Network-mask (PCNet-M) and Partial Completion Network-content (PCNet-C), respectively. A self-supervised approach is implemented to produce labeled occluded data to train the networks, i.e., a masked region is obtained by positioning a randomly selected occluder from the dataset on top of the concerned object.

Ehsani et al. [

76] trained a GAN-based model dubbed SeGAN. The model consists of a segmentator which is a modified ResNet-18 [

77], and a painter which is a CGAN. The segmentator produces the full segmentation mask (amodal mask) of the objects including the occluded parts. On the other hand, the painter, which consists of a generator and a discriminator, takes in the output from the segmentator and reproduces the appearance of the hidden parts of the object based on the amodal mask. The final output from the generator is a de-occluded RGB image which is then fed into the discriminator.

Furthermore, Kahatapitiya et al. [

78] aim to detect and remove the unrelated occluders, and inpaint the missing pixels to produce an occlusion-free image. The unrelated objects are identified based on the context of the image and a language model. The image inpainter is based on the contextual attention model by Yu et al. [

65], which employs a coarse-to-fine model. In the first stage, the mask is coarsely filled in. Then, the second stage utilizes a local and a global WGAN-GP [

56] to enhance the quality of the generated output from the coarse stage. A contextual attention layer is implemented to attend to similar feature patches from distant pixels. The local and global WGAN-GP enforce global and local consistency of the inpainted pixels [

65].

2.3.2. Face Completion

Occlusion is usually present in faces. The occluding objects can be glasses, scarf, food, cup, microphone, etc. The performance of biometric and surveillance systems can degrade when faces are obstructed or covered by other objects, which raises a security concern. However, compared to background completion, facial images are more challenging to complete since they contain more appearance variations, especially around the eyes and the mouth.

In the following, the existing works for face completion are categorized based on their architectFur

e.

A single generator and discriminator: Cai et

al. [79] present an Occlusion-Aware GAN (OA-GAN), with

a single

generator and discriminator, that alleviates the need for an occlusion mask as an input. Through using paired images with known mask of artificial occlusions and natural images without occlusion masks, the model learns in a semi-supervised way. The generator has an occlusion-aware network and a rmore, face completion

network. The first network estimates the mask for the area where the occlusion is present, which is fed into the second network. The latter then completes the missing region based on the mask.

Likewise, Chen et al. [80] depend on their proposed OA-GAN to automatiis essential in sec

ally identify the occlu

ded region and inpaint it. They train a DCGAN on occlusion-free facial images, and use it to detect the corrupted regions. During the inpainting process, a binary matrix is maintained, which indicates the presence of occlusion in each pixel. The detection of occluded region alleviates the need for any prior knowledge of the location and type of the occlusion masks.

Farity and surveillance applic

ia

l Structure Guided GAN (FSG-GAN) [81] is a twtio

-stage model with a sin

gle generator and discriminator. In the first part, a variational auto-encoder estimates the facial structure which is combined with the occluded image and fed into the generator of the second stage. The generator (UNet), guided by the facial structure knowledge, synthesizes the deoccluded image. A multi-receptive fields discriminator encourages a more natural and less ambiguous appearance of the output image. Nevertheless, the model cannot remove occlusion in a face image with large posture well, and it cannot correctly predict the facial structure under severe occlusions, which leads to unpleasant results.

Multiple discriminators: Several os, as it can improve the resistance of

the existing works employ multiple discriminators to ensure that the completed facial image is semantically valid and consistent with the context of the image. Li et al. [82] traface identificati

n a mo

del with a generator, a local discriminator, a global discriminator, and a parsing network to generate an occlusion-free facial image. The original image is masked with a randomly positioned noisy square and fed into the generator which is designed as an auto-encoder to fill the missing pixels. The discriminators, which are binary classifiers, enhance the semantic quality of the reconstructed pixels. Meanwhile, the parsing network enforces the harmony of the generated part and the present content. The model can handle various masks of different positions, sizes, and shapesn and recognition models to occlusion.

SimilVar

ly, Mathai et al. [83] ious

e a

n encoder–decoder for the generator, a Patch-GAN-based local discriminator, and a WGAN-GP [56]-basrchite

d global disc

riminator to address occlusions on distinctive areas of a face and inpaint them. Consequently, the model’s ability in recognizing faces improves. To minimize the effect of the masked area on the extracted features, two convolutional gating mechanisms are experimented: hard gating mechanism known as partial convolutions [62] and a soft tures are implemented in the existing

ating method based on sigmoid function.

Liu et al. [84] also follow

the same appro

ach by implementing a generator (autoencoder), a local discriminator, and a global discriminator. A self-attention mechanism is applied in the global discriminator to enforce complex geometric constrains on the global image structure, and model long-range dependencies.

Moreoverrks for face completion, such as,

Ca

i et al. [85] pres

ent FCSR-GAN to create a high-resolution deoccluded image from a low-resolution facial image with partial occlusions. At first, the model is pre-trained for face completion to recover the missing region. Afterward, the entire framework is trained end-to-end. The generator comprises a face completion unit and a face super-resolution unit. The low-resolution occluded input image is fed into the face completion module to fill the missing region. The face completion unit follows an encoder–decoder layout and the overall architecture is similar to the generative face completion by Li et al. a single generator and discriminator [

8279]

. Then, the occlusion-free image is fed into the face super-resolution module which adopts a SRGAN [

8680]

.

Furthermore, face completion can improve the resistance of face identification and recognition models to occlusion. The authors in [

8781]

propose a two-unit de-occlusion distillation pipeline. In the de-occlusion unit,

a GAN is implemented to recover the appearance of pixels covered by the mask. Similar to the previously mentioned works, the output of the generator is evaluated by local and global dmultiple discriminators

. In [82][83] the distillation unit[84][85][87],

a pre-trained face recognition m

odel is employed as a teacher, and its knowledge is used to train the student model to identify masked faces by learning representations for recovered faces with similar clustering behaviors as the original ones. This teaches the student model how to fill in the information gap in appearance space and in identity space.

Multiple generators: In contrast to the OA-GAN presented by Cai et al. ultiple generators [

79], the authors of [88]

propose a two-stage OA-GAN framework with two generators and two discriminators. While the generators (G11,

and G2) are m

ade u

p of a UNet encoder–decoder architecture, PatchGAN is adopted in the discriminators. G1 takes an occl

uded input

image and disentangles the mask of the image to produce a synthesized occlusion. G2 thiple

n takes the output from G1 in order to remove the occlusions and generat

e a deo

ccluded image. Therefore, the occlusion generator (i.e., G1) plays a furs and

amental role in the deocclusion process. The failure in the occlusion generator produces incorrect images.

Multiple generators and discriminators: Whdiscrimi

le usin

g multiple discriminators

ensures the consistency and the validity of the produced image, some available works employ multiple generators, especially when tackling multiple problems. For example, Jabbar et al. [

89]

present a framework known as Automatic Mask Generation Network for Face Deocclusion using Stacked GAN (AFD-StackGAN) that is composed of two stages to automatically extract the mask of the occluded area and recover its content. The first stage employs an encoder–decoder in its generator to generate the binary segmentation mask for the invisible region. The produced mask is further refined with erosion and dilation morphological techniques. The second stage eliminates the mask object and regenerates the corrupted pixels through two pair of generators and discriminators. The occluded input image and the extracted occlusion mask are fed into the first generator to produce a completed image. The initial output from the first generator is enhanced by rectifying any missing or incorrect content in it. Two PatchGAN discriminators are implemented against the result of the generators to ensure that the restored face’s appearance and structural consistency are retained.

In the same way, Li et al. [

90]

employ, two generators and three domain-specific discriminators in their proposed framework called disentangling and fusing GAN (DF-GAN). They treat face completion as disentangling and fusing of clean faces and occlusions. This way, they remove the need for paired samples of occluded images and their congruent clean images. The framework works with three domains that correspond to the distribution of occluded faces, clean faces, and structured occlusions. In the disentangling module, an occluded facial image is fed into an encoder which encodes it to the disentangled representations. Thereafter, two decoders produce the corresponding deoccluded image and occlusion, respectively. In other words, the disentangling network learns how to separate the structured occlusions and the occlusion-free images. The fusing network, on the other hand, combines the latent representations of clean faces and occlusions, and creates the corresponding occluded facial image, i.e., it learns how to generate images with structured occlusions.

Coarse-to-fine architecture: Conversely to the previously mentioned works where one output is generated, Jabbar et al. and coarse-to-fine architecture [

91]

propose a two-stage Face De-occlusion using Stacked Generative Adversarial Network (FD-StackGAN) model that follows the coarse-to-fine approach. The model attempts to remove the occlusion mask and fill in the affected area. In the first stage, the network produces an initial deoccluded facial image. The second stage refines the initial generated image to create a more visually plausible image that is similar to the real image. Similar to AF-StackGAN, FD-StackGAN can handle various regions in the facial images with different structures and surrounding backgrounds.

Likewise, Duan and Zhang [

92]

address the problem of deoccluding and recognizing face profiles with large-pose variations and occlusions through BoostGAN, which has a coarse-to-fine structure. In the coarse part, i.e., multi-occlusion frontal view generator, an encoder–decoder network is used for eliminating occlusion and producing multiple intermediate deoccluded faces. Subsequently, the coarse outputs are refined through a boosting network for photo-realistic and identity-preserved face generation. Consequently, the discriminator has a multi-input structure.

Since BoostGAN is a one-stage framework, it cannot handle de-occlusion and frontalization concurrently, which means that it loses the discriminative identity information. Furthermore, BoostGAN fails to employ the mask guided noise prior information. To address these, Duan et al. [

93]

perform face frontalization and face completion simultaneously.

They propose an end-to-end mask guided two-stage GAN (TSGAN) framework. Each stage has its own generator and discriminator, while the first stage contains the face deocclusion module, the second one contains face frontalization module. Another module named mask-attention module (MAM) is deployed in both stages. The MAM encourages the face deocclusion module to concentrate more on missing regions and fills them based on the masked image input. The recovered image is fed into the second stage to obtain the final frontal image.

2.3.3. Attribute Classification

With the availability of surveillance cameras, the task of object detection and tracking through its visual appearance in a surveillance footage has gained prominence. Furthermore, there are other characteristics of people that are essential to fully understand an observed scene. The task of recognizing the people attributes (age, sex, race, etc.) and the items they hold (backpacks, bags, phone, etc.) is called attribute classification.

However, occluding the person in question by another person may lead to incorrectly classifying the attributes of the occluder instead of the occludee. Furthermore, the quality of the images from the surveillance cameras is usually low. Therefore, Fabbri et al. [

108] focus on the poor resolution and occlusion challenges in recognizing the attribute of people such as gender, race, clothing, etc., in surveillance systems. The authors propose a model based on DCGAN [

109] to improve the quality of images in order to overcome the mentioned problems.

The model has three networks, one for attribute classification from the full body images, and the other two networks attempt to enhance the resolution and recover from occlusion. Eliminating the occlusion produces an image without noise and the residual of other subjects that could result in misclassification. However, under severe occlusions, the reconstructed image still contains the remaining of the occluder and the model fails to keep the parts of the image that should stay unmodified.

Similarly, Fulgeri et al. [

110] tackle the occlusion issue by implementing a combination of UNet and GAN architecture. The model requires as input the occluded person image and its corresponding attributes. The generator takes the input and restores the image. The output is then forwarded to three networks: ResNet-101 [

77], VGG-16 [

111], and the discriminator to calculate the loss. The loss is backpropagated to update the weights of the generator.

2.3.4. Miscellaneous Applications

In addition to the previously mentioned applications, GAN is used for amodal content completion in various categories of data.

Food: Papadopoulos et al. [

112] present a compositional layer-based generative network called PizzaGAN that follows the steps of a recipe to make a pizza. The framework contains a pair of modules to add and remove all instances of each recipe component. A Cycle-GAN [

6] is used to design each module. In the case of adding an element to the existing image, the module produces the appearance and the mask of the visible pixels in the new layer. Moreover, the removal module learns how to fill the holes that are left from the erased layer and generate the mask of the removed pixels.

Vehicles: Yan et al. [

67] propose a two-part model to recover the amodal mask of a vehicle and the appearance of its hidden regions iteratively. To tackle both tasks, the model is composed of two parts: a segmentation completion module and an appearance recovery module. The first network is to complement the segmentation mask of the vehicle’s invisible region. In order to complete the content of the occluded region, the appearance recovery module has a generator with a two-path network structure. The first path accepts the input image, the recovered mask from the segmentation completion module, and the modal mask, while learning how to fill in the colors of the hidden pixels. The other path requires the recovered mask and the ground-truth complete mask and learns how to use the image context to inpaint the whole foreground vehicle. The two paths share parameters, which increases the ability of the generator. To enhance the quality of the recovered image, it is taken through the whole model several times.

Humans: The process of matching the same person in images taken by multiple cameras is referred to as Person re-identification (ReID). In surveillance systems where the purpose is to track and identify the individuals, ReID is essential. However, the stored images usually have low resolution and are blurry because they are from ordinary surveillance cameras [

113]. Additionally, occlusion by other individuals and/or objects is most likely to occur since each camera has a different angle of view. Hence, some important features become difficult to recognize.

To tackle the challenge of person re-identification under occlusion, Tagore et al. [

114] design a bi-network architecture with an Occlusion Handling GAN (OHGAN) module. An image with synthetic added occlusion is fed into the generator which is based on UNet architecture and produces an occlusion-free image by learning a non-linear project mapping function between the input image and the output image. Afterward, the discriminator computes the metric difference between the generated image and the original one.

On the other hand, Zhang et al. [

66] attempt to complete the mask and the appearance of an occluded human through a two-stage network. First, the amodal completion stage predicts the amodal mask of the occluded person. Afterward, the content recovery network completes the RGB appearance of the invisible area. The latter uses a UNet architecture in the generator, with local and global discriminators to ensure that the output image is consistent with the global semantics while enhancing the clarity and contrast of the local regions. The generator adds a Visible Guided Attention (VGA) module to the skip connections. The VGA module computes a relational feature map to guide the low-level features to complete by concatenating the high-level features with the next-level features. The relational feature map represents the relation between the pixels inside and outside the occluded area.

The process of extracting feature maps is similar to the self-attention mechanism in SAGAN by Zhang et al. [54].

2.4. Training Data

Supervised learning frameworks require annotated ground-truth data to train a model. These data can be either from a manually annotated dataset, a synthetic occluded data from 3D computer-generated images, or by superimposing a part of an object/image on another object. For example, Ehsani et al. [

76] train their model (SeGAN) on a photo-realistic synthetic dataset, and Zhan et al. [

75] apply a self-supervised approach to generate annotated training data. However, a model trained with synthetic data may fail when it is tested on real-world data, and human-labeled data are costly, time-consuming, and susceptible to subjective judgments.

GAN is implemented to generate training data for several categories:

Generic objects: It is nearly impossible to cover all the probable occlusions, and the likelihood of appearance of some occlusion cases is rather small. Therefore, Wang et al. [

115] aim to utilize the data to improve the performance of the object detection in the case of occlusions. They utilize an adversarial network to generate hard examples with occlusions, and use them to train a Fast-RCNN [

116]. Consequently, the detector becomes invariant to occlusions and deformations. Their model contains an Adversarial Spatial Dropout Network (ASDN), which takes as input features from an image patch and predicts a dropout mask that is used to create occlusion such that it would be difficult for Fast-RCNN to classify.

Likewise, Han et al. [

117] apply an adversarial network to produce occluded adversary samples to train an object detector. The model, named Feature Fusion and Adversary Networks (FFAN), is based on Faster RCNN [

118] and consists of a feature fusion network and an adversary occlusion network, and while the feature fusion module produces a feature map of high resolution and high semantic information to detect small objects more effectively, the adversary occlusion module produces occlusion on the feature map of the object thus outputs an adversary training sample that would be hard for the detector to discriminate. Meanwhile, the detector becomes better in classifying the generated occluded adversary samples through self-learning. Over time, the detector and the adversary occlusion network learn and compete with each other to enhance the performance of the model.

The occlusions produced by adversary networks in [

115,

117] may lead to over-generalization, because they are similar to other class instances. For example, the occluded wheels of a bicycle results in misclassifying a wheel chair as a bike.

Humans: Zhao et al. [

119] augment the input data to produce easy-to-hard occluded samples with different sizes and positions of the occlusion mask to increase the variation of occlusion patterns. They address the issue of ReID under occlusion through an Incremental Generative Occlusion Adversarial Suppression (IGOAS) framework. The network contains two modules, an incremental generative occlusion (IGO) block, and a global adversarial suppression (G&A) module. IGO takes the input data through augmentation and generates easy occluded samples. Then, it progressively enlarges the size of the occlusion mask with the number of training iterations. Thus, the model becomes more robust against occlusion as it learns harder occlusion incrementally rather than hardest ones directly. On the other hand, G&A consists of a global branch which extracts global features of the input data, and an adversarial suppression branch that weakens the response of the occluded region to zero and strengthens the response to non-occluded areas.

Furthermore, to increase the number of samples per identity for person ReID, Wu et al. [

120] use a GAN network to synthesize labeled occluded data. Specifically, the authors impose block rectangles on the images to create random occlusion on the original person images which the model then tries to complete. The completed images that are similar but not identical to the original input are labeled with the same annotation as the corresponding raw image. Similarly, Zhang et al. [

113] follow the same strategy to expand the original training set, expect that an additional noise channel is applied on the generated data to adjust the label further.

Face images: Cong and Zhou [

106] propose an improved GAN to generate occluded face images. The model is based on DCGAN with an added S-coder. The purpose of the S-coder is to force the generator to produce multi-class target images. The network is further optimized through Wasserstein distance and the cycle consistency loss from CycleGAN. However, only sunglasses and facial masks are considered as occlusive elements.