| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Yong-Ping Zheng | -- | 2271 | 2023-02-24 10:12:29 |
| 2 | Lindsay Dong | Meta information modification | 2271 | 2023-02-27 03:26:51 |
| 3 | Lindsay Dong | Meta information modification | 2271 | 2023-02-27 03:27:22 |


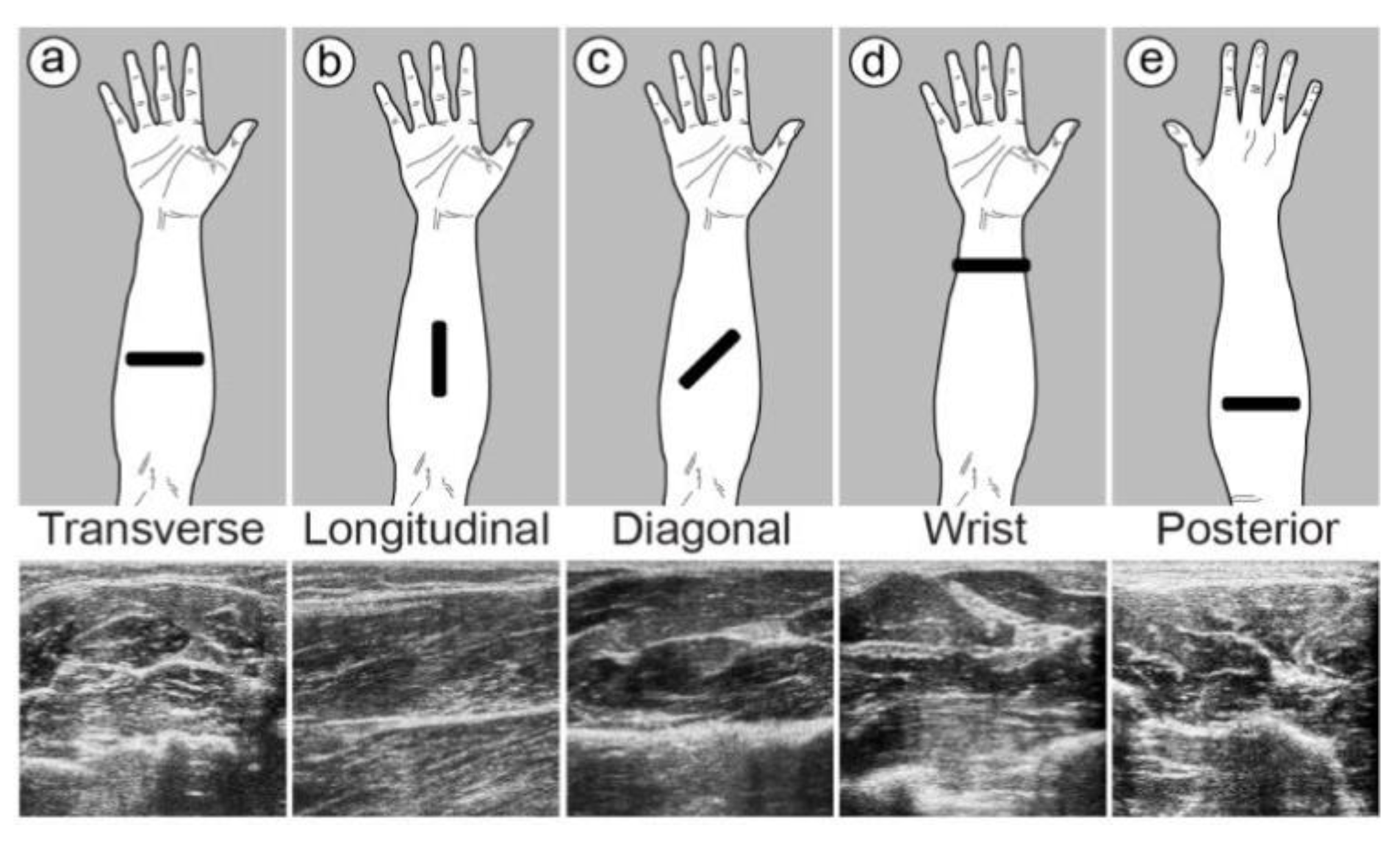

A ground-breaking study by Zheng et al. investigated whether ultrasound imaging of the forearm could be used to control a powered prosthesis; the group coined the term “sonomyography” (SMG) for this approach. Ultrasound signals have recently attracted the interest of researchers working on human–machine interfaces (HMIs) because they capture information from both superficial and deep muscles and so provide more comprehensive information than other techniques. Owing to the high spatiotemporal resolution and specificity of ultrasound measurements of muscle deformation, researchers have been able to infer fine volitional motor activities, such as finger motions, and to achieve dexterous control of robotic hands.

1. Introduction

2. Sonomyography (SMG)

To perform well, a prosthesis that is driven by the user’s physiological signals must respond quickly. EEG, sEMG, and other intuitive interfaces detect neuromuscular signals that arise before motion begins, so these signals are expected to precede the motion itself [38][39][40]. Ultrasound imaging, in turn, can detect kinematic and kinetic characteristics of skeletal muscle [41] that reflect the ongoing formation of cross bridges during motor unit recruitment, prior to the generation of muscular force [39][42]. These changes occur during sarcomere shortening, when muscle force exceeds segment inertial forces, and before joint motion begins [39]. Because the changes that ultrasound detects precede joint motion, prosthetic hands controlled in this way should be able to respond more quickly.
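The timing argument above can be illustrated with a minimal sketch. It assumes a simple baseline-threshold onset detector (baseline mean plus `k` standard deviations, held for several consecutive samples), which is a common generic approach and not necessarily the method used in the cited studies. The function names, the parameters, and both signals are hypothetical; the signals are synthetic, and the 150 ms gap between them is chosen purely for illustration, not taken from measured data.

```python
from statistics import mean, stdev


def detect_onset(signal, fs_hz, baseline_samples=50, k=3.0, hold=5):
    """Return the onset time in seconds, or None if no onset is found.

    Onset is the first sample of a run of `hold` consecutive samples that
    exceed (baseline mean + k * baseline standard deviation).
    """
    base = signal[:baseline_samples]
    threshold = mean(base) + k * stdev(base)
    run = 0
    for i in range(baseline_samples, len(signal)):
        run = run + 1 if signal[i] > threshold else 0
        if run == hold:
            return (i - hold + 1) / fs_hz
    return None


def ramp(n, start, slope, ripple):
    """Synthetic trace: small alternating ripple, then a linear rise from `start`."""
    return [ripple * ((i % 2) * 2 - 1) + max(0, i - start) * slope
            for i in range(n)]


fs = 100.0  # assumed sampling rate in Hz (illustrative only)

# Hypothetical traces: muscle deformation starts rising 15 samples
# before the joint angle does.
muscle = ramp(300, start=100, slope=0.05, ripple=0.01)
joint = ramp(300, start=115, slope=0.05, ripple=0.01)

t_muscle = detect_onset(muscle, fs)
t_joint = detect_onset(joint, fs)
lead_ms = round((t_joint - t_muscle) * 1000)
print(f"muscle onset {t_muscle:.2f} s, joint onset {t_joint:.2f} s, lead {lead_ms} ms")
```

In a real system the lead time would depend on the muscle, the task, the frame rate of the ultrasound device, and the feature extracted from it, so the value printed here says nothing about actual physiological delays.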

2.1. Ultrasound Modes Used in SMG

2.2. Muscle Location and Probe Fixation

2.3. Feature Extraction Algorithm

2.4. Artificial Intelligence in Classification

References

- Van Dijk, L.; van der Sluis, C.K.; van Dijk, H.W.; Bongers, R.M. Task-oriented gaming for transfer to prosthesis use. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 24, 1384–1394.

- Liu, H.; Dong, W.; Li, Y.; Li, F.; Geng, J.; Zhu, M.; Chen, T.; Zhang, H.; Sun, L.; Lee, C. An epidermal sEMG tattoo-like patch as a new human–machine interface for patients with loss of voice. Microsyst. Nanoeng. 2020, 6, 16.

- Jiang, N.; Dosen, S.; Muller, K.-R.; Farina, D. Myoelectric control of artificial limbs—Is there a need to change focus? IEEE Signal Process. Mag. 2012, 29, 150–152.

- Nazari, V.; Pouladian, M.; Zheng, Y.-P.; Alam, M. A Compact and Lightweight Rehabilitative Exoskeleton to Restore Grasping Functions for People with Hand Paralysis. Sensors 2021, 21, 6900.

- Nazari, V.; Pouladian, M.; Zheng, Y.-P.; Alam, M. Compact Design of A Lightweight Rehabilitative Exoskeleton for Restoring Grasping Function in Patients with Hand Paralysis. Res. Sq. 2021. Preprint.

- Zhang, Y.; Yu, C.; Shi, Y. Designing autonomous driving HMI system: Interaction need insight and design tool study. In Proceedings of the International Conference on Human-Computer Interaction, Las Vegas, NV, USA, 15–20 July 2018; pp. 426–433.

- Young, S.N.; Peschel, J.M. Review of human–machine interfaces for small unmanned systems with robotic manipulators. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 131–143.

- Wilde, M.; Chan, M.; Kish, B. Predictive human-machine interface for teleoperation of air and space vehicles over time delay. In Proceedings of the 2020 IEEE Aerospace Conference, Big Sky, MT, USA, 7–14 March 2020; pp. 1–14.

- Morra, L.; Lamberti, F.; Pratticó, F.G.; La Rosa, S.; Montuschi, P. Building trust in autonomous vehicles: Role of virtual reality driving simulators in HMI design. IEEE Trans. Veh. Technol. 2019, 68, 9438–9450.

- Bortole, M.; Venkatakrishnan, A.; Zhu, F.; Moreno, J.C.; Francisco, G.E.; Pons, J.L.; Contreras-Vidal, J.L. The H2 robotic exoskeleton for gait rehabilitation after stroke: Early findings from a clinical study. J. Neuroeng. Rehabil. 2015, 12, 54.

- Pehlivan, A.U.; Losey, D.P.; O’Malley, M.K. Minimal assist-as-needed controller for upper limb robotic rehabilitation. IEEE Trans. Robot. 2015, 32, 113–124.

- Zhu, C.; Luo, L.; Mai, J.; Wang, Q. Recognizing Continuous Multiple Degrees of Freedom Foot Movements With Inertial Sensors. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 431–440.

- Russell, C.; Roche, A.D.; Chakrabarty, S. Peripheral nerve bionic interface: A review of electrodes. Int. J. Intell. Robot. Appl. 2019, 3, 11–18.

- Yildiz, K.A.; Shin, A.Y.; Kaufman, K.R. Interfaces with the peripheral nervous system for the control of a neuroprosthetic limb: A review. J. Neuroeng. Rehabil. 2020, 17, 43.

- Taylor, C.R.; Srinivasan, S.; Yeon, S.H.; O’Donnell, M.; Roberts, T.; Herr, H.M. Magnetomicrometry. Sci. Robot. 2021, 6, eabg0656.

- Ng, K.H.; Nazari, V.; Alam, M. Can Prosthetic Hands Mimic a Healthy Human Hand? Prosthesis 2021, 3, 11–23.

- Geethanjali, P. Myoelectric control of prosthetic hands: State-of-the-art review. Med. Devices Evid. Res. 2016, 9, 247–255.

- Mahmood, N.T.; Al-Muifraje, M.H.; Saeed, T.R.; Kaittan, A.H. Upper prosthetic design based on EMG: A systematic review. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Duhok, Iraq, 9–10 September 2020; p. 012025.

- Mohebbian, M.R.; Nosouhi, M.; Fazilati, F.; Esfahani, Z.N.; Amiri, G.; Malekifar, N.; Yusefi, F.; Rastegari, M.; Marateb, H.R. A Comprehensive Review of Myoelectric Prosthesis Control. arXiv 2021, arXiv:2112.13192.

- Cimolato, A.; Driessen, J.J.; Mattos, L.S.; De Momi, E.; Laffranchi, M.; De Michieli, L. EMG-driven control in lower limb prostheses: A topic-based systematic review. J. Neuroeng. Rehabil. 2022, 19, 43.

- Ortenzi, V.; Tarantino, S.; Castellini, C.; Cipriani, C. Ultrasound imaging for hand prosthesis control: A comparative study of features and classification methods. In Proceedings of the 2015 IEEE International Conference on Rehabilitation Robotics (ICORR), Singapore, 11–14 August 2015; pp. 1–6.

- Guo, J.-Y.; Zheng, Y.-P.; Kenney, L.P.; Bowen, A.; Howard, D.; Canderle, J.J. A comparative evaluation of sonomyography, electromyography, force, and wrist angle in a discrete tracking task. Ultrasound Med. Biol. 2011, 37, 884–891.

- Ribeiro, J.; Mota, F.; Cavalcante, T.; Nogueira, I.; Gondim, V.; Albuquerque, V.; Alexandria, A. Analysis of man-machine interfaces in upper-limb prosthesis: A review. Robotics 2019, 8, 16.

- Haque, M.; Promon, S.K. Neural Implants: A Review Of Current Trends And Future Perspectives. Europe PMC 2022. Preprint.

- Kuiken, T.A.; Dumanian, G.; Lipschutz, R.D.; Miller, L.; Stubblefield, K. The use of targeted muscle reinnervation for improved myoelectric prosthesis control in a bilateral shoulder disarticulation amputee. Prosthet. Orthot. Int. 2004, 28, 245–253.

- Miller, L.A.; Lipschutz, R.D.; Stubblefield, K.A.; Lock, B.A.; Huang, H.; Williams, T.W., III; Weir, R.F.; Kuiken, T.A. Control of a six degree of freedom prosthetic arm after targeted muscle reinnervation surgery. Arch. Phys. Med. Rehabil. 2008, 89, 2057–2065.

- Wadikar, D.; Kumari, N.; Bhat, R.; Shirodkar, V. Book recommendation platform using deep learning. Int. Res. J. Eng. Technol. IRJET 2020, 7, 6764–6770.

- Memberg, W.D.; Stage, T.G.; Kirsch, R.F. A fully implanted intramuscular bipolar myoelectric signal recording electrode. Neuromodulation Technol. Neural Interface 2014, 17, 794–799.

- Hazubski, S.; Hoppe, H.; Otte, A. Non-contact visual control of personalized hand prostheses/exoskeletons by tracking using augmented reality glasses. 3D Print. Med. 2020, 6, 6.

- Johansen, D.; Cipriani, C.; Popović, D.B.; Struijk, L.N. Control of a robotic hand using a tongue control system—A prosthesis application. IEEE Trans. Biomed. Eng. 2016, 63, 1368–1376.

- Otte, A. Invasive versus Non-Invasive Neuroprosthetics of the Upper Limb: Which Way to Go? Prosthesis 2020, 2, 237–239.

- Fonseca, L.; Tigra, W.; Navarro, B.; Guiraud, D.; Fattal, C.; Bó, A.; Fachin-Martins, E.; Leynaert, V.; Gélis, A.; Azevedo-Coste, C. Assisted grasping in individuals with tetraplegia: Improving control through residual muscle contraction and movement. Sensors 2019, 19, 4532.

- Fang, C.; He, B.; Wang, Y.; Cao, J.; Gao, S. EMG-centered multisensory based technologies for pattern recognition in rehabilitation: State of the art and challenges. Biosensors 2020, 10, 85.

- Briouza, S.; Gritli, H.; Khraief, N.; Belghith, S.; Singh, D. A Brief Overview on Machine Learning in Rehabilitation of the Human Arm via an Exoskeleton Robot. In Proceedings of the 2021 International Conference on Data Analytics for Business and Industry (ICDABI), Sakheer, Bahrain, 25–26 October 2021; pp. 129–134.

- Zhang, S.; Li, Y.; Zhang, S.; Shahabi, F.; Xia, S.; Deng, Y.; Alshurafa, N. Deep learning in human activity recognition with wearable sensors: A review on advances. Sensors 2022, 22, 1476.

- Zadok, D.; Salzman, O.; Wolf, A.; Bronstein, A.M. Towards Predicting Fine Finger Motions from Ultrasound Images via Kinematic Representation. arXiv 2022, arXiv:2202.05204.

- Yan, J.; Yang, X.; Sun, X.; Chen, Z.; Liu, H. A lightweight ultrasound probe for wearable human–machine interfaces. IEEE Sens. J. 2019, 19, 5895–5903.

- Begovic, H.; Zhou, G.-Q.; Li, T.; Wang, Y.; Zheng, Y.-P. Detection of the electromechanical delay and its components during voluntary isometric contraction of the quadriceps femoris muscle. Front. Physiol. 2014, 5, 494.

- Dieterich, A.V.; Botter, A.; Vieira, T.M.; Peolsson, A.; Petzke, F.; Davey, P.; Falla, D. Spatial variation and inconsistency between estimates of onset of muscle activation from EMG and ultrasound. Sci. Rep. 2017, 7, 42011.

- Wentink, E.; Schut, V.; Prinsen, E.; Rietman, J.S.; Veltink, P.H. Detection of the onset of gait initiation using kinematic sensors and EMG in transfemoral amputees. Gait Posture 2014, 39, 391–396.

- Lopata, R.G.; van Dijk, J.P.; Pillen, S.; Nillesen, M.M.; Maas, H.; Thijssen, J.M.; Stegeman, D.F.; de Korte, C.L. Dynamic imaging of skeletal muscle contraction in three orthogonal directions. J. Appl. Physiol. 2010, 109, 906–915.

- Jahanandish, M.H.; Fey, N.P.; Hoyt, K. Lower limb motion estimation using ultrasound imaging: A framework for assistive device control. IEEE J. Biomed. Health Inform. 2019, 23, 2505–2514.

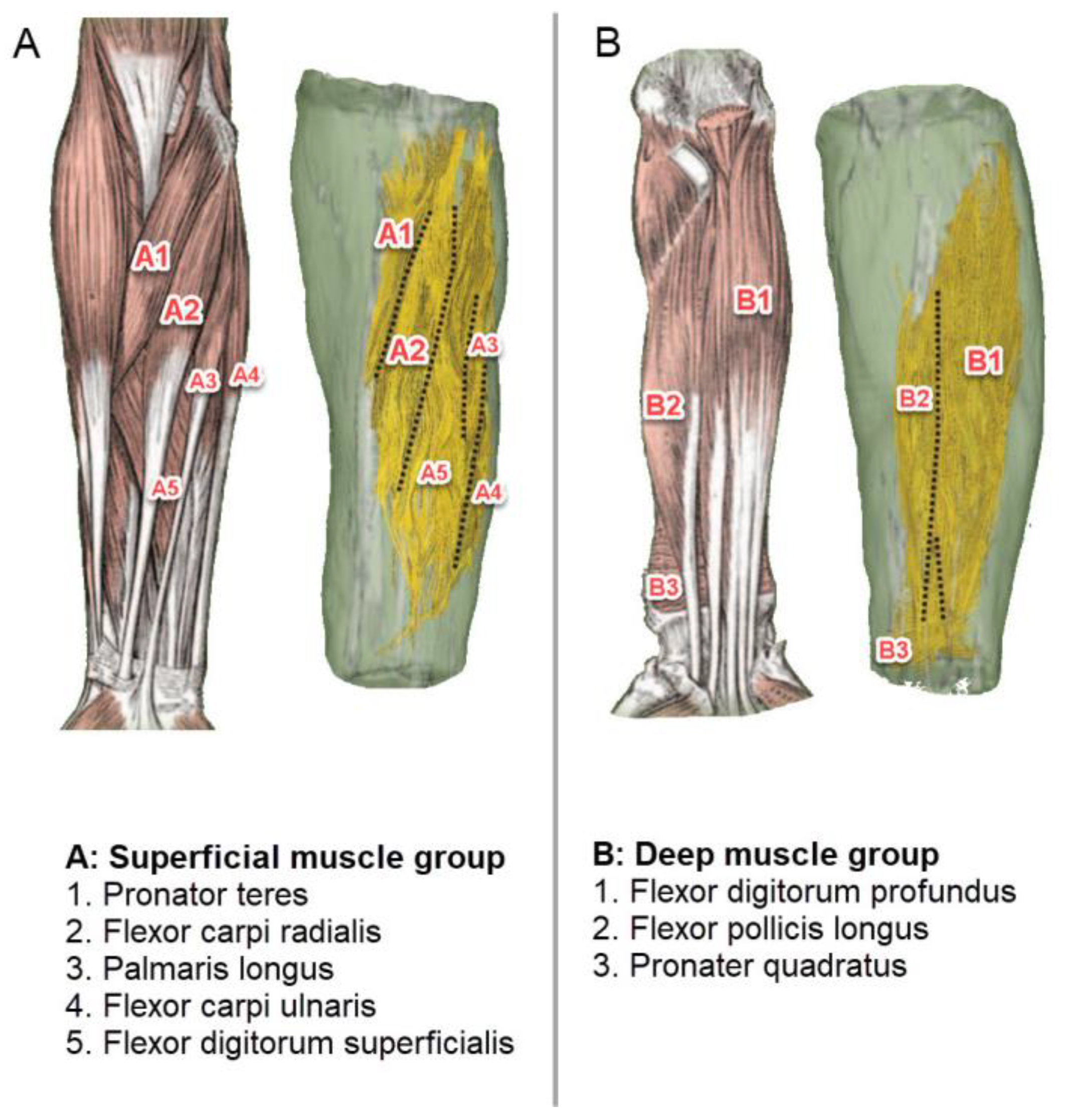

- Froeling, M.; Nederveen, A.J.; Heijtel, D.F.; Lataster, A.; Bos, C.; Nicolay, K.; Maas, M.; Drost, M.R.; Strijkers, G.J. Diffusion-tensor MRI reveals the complex muscle architecture of the human forearm. J. Magn. Reson. Imaging 2012, 36, 237–248.

- Akhlaghi, N.; Dhawan, A.; Khan, A.A.; Mukherjee, B.; Diao, G.; Truong, C.; Sikdar, S. Sparsity analysis of a sonomyographic muscle–computer interface. IEEE Trans. Biomed. Eng. 2019, 67, 688–696.

- McIntosh, J.; Marzo, A.; Fraser, M.; Phillips, C. Echoflex: Hand gesture recognition using ultrasound imaging. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1923–1934.

- Shi, J.; Guo, J.-Y.; Hu, S.-X.; Zheng, Y.-P. Recognition of finger flexion motion from ultrasound image: A feasibility study. Ultrasound Med. Biol. 2012, 38, 1695–1704.

- Akhlaghi, N.; Baker, C.A.; Lahlou, M.; Zafar, H.; Murthy, K.G.; Rangwala, H.S.; Kosecka, J.; Joiner, W.M.; Pancrazio, J.J.; Sikdar, S. Real-time classification of hand motions using ultrasound imaging of forearm muscles. IEEE Trans. Biomed. Eng. 2015, 63, 1687–1698.

- Shi, J.; Chang, Q.; Zheng, Y.-P. Feasibility of controlling prosthetic hand using sonomyography signal in real time: Preliminary study. J. Rehabil. Res. Dev. 2010, 47, 87–98.