Khan, M.A.R.; Rostov, M.; Rahman, J.S.; Ahmed, K.A.; Hossain, M.Z. Human Signal Sources. Encyclopedia. Available online: https://encyclopedia.pub/entry/40293 (accessed on 02 March 2026).

Khan, M.A.R., Rostov, M., Rahman, J.S., Ahmed, K.A., & Hossain, M.Z. (2023, January 17). Human Signal Sources. In Encyclopedia. https://encyclopedia.pub/entry/40293

Different signals are generated from different parts of the human body.

machine learning

human-robot interaction

signals

robots

1. Human Signal Sources

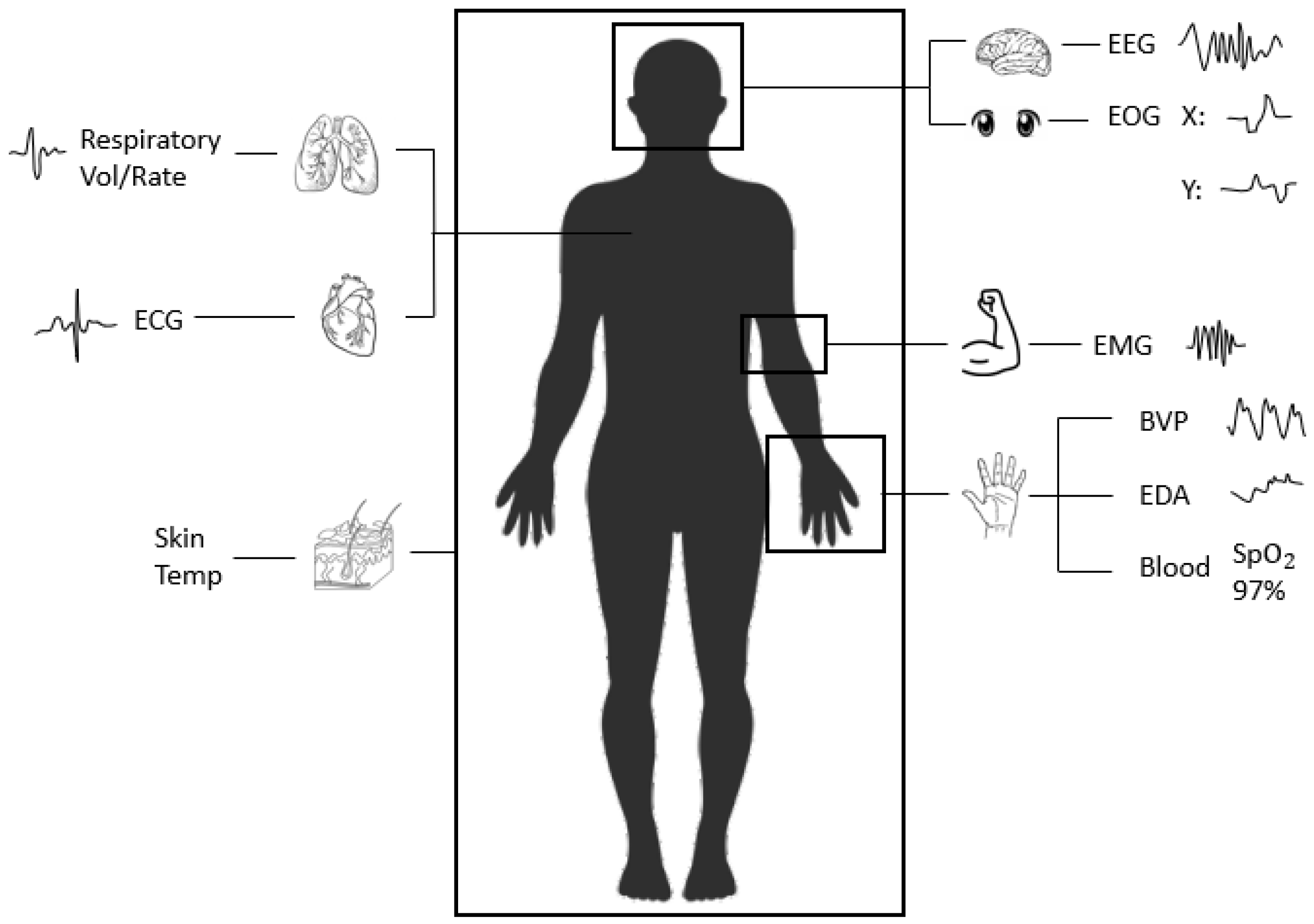

Different signals are generated from different parts of the human body. Seven signal sources can be identified, and Figure 1 illustrates where each of them originates.

Figure 1. Several physiological signals with sources.

2. Brain Signals

Signals that are generated by the brain or related to brain activity are considered brain signals. Brain activity can be defined as neurons sending signals to each other [1]. Two types of signals fall into this category: electroencephalography (EEG) and electrooculography (EOG). Measuring brain activity through EEG is difficult. An electroencephalogram measures the surface potential of the scalp. Although the brain is insulated from the outside by the skull and other tissue, its electrical activity still produces minuscule changes in the electrical potential across the scalp [2], and these changes can be recorded. Because these voltages fluctuate in the microvolt range [3], EEG measurements are very prone to noise. Nevertheless, because EEG measures brain activity directly, it is closely related to our mental state.
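To make the noise problem concrete, the following minimal sketch smooths a synthetic microvolt-level trace with a moving average. The sampling rate, the 10 Hz rhythm, the 50 Hz mains interference, and the amplitudes are all assumptions chosen for illustration; they are not taken from the cited sources, and practical EEG pipelines typically use more careful filtering.

```python
import math

def moving_average(signal, window):
    """Average each run of `window` consecutive samples (no edge padding)."""
    return [sum(signal[i:i + window]) / window
            for i in range(len(signal) - window + 1)]

SAMPLE_RATE_HZ = 250  # assumed sampling rate
t = [n / SAMPLE_RATE_HZ for n in range(SAMPLE_RATE_HZ)]  # one second of samples

# Assumed toy signal in microvolts: a 10 uV rhythm at 10 Hz
# contaminated by 20 uV of 50 Hz mains interference.
rhythm = [10.0 * math.sin(2 * math.pi * 10 * x) for x in t]
raw = [r + 20.0 * math.sin(2 * math.pi * 50 * x) for r, x in zip(rhythm, t)]

# A 5-sample window spans exactly one 50 Hz cycle at 250 Hz,
# so the interference averages out while the slower rhythm mostly survives.
smoothed = moving_average(raw, window=5)

raw_peak = max(abs(v) for v in raw)
smoothed_peak = max(abs(v) for v in smoothed)
```

In this toy case the interference is larger than the signal of interest, which is why the raw peak exceeds the 10 µV rhythm while the smoothed peak does not.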

Electrooculography (EOG) measures the electrical potential between the front and the back of the eye [4]. Like other electrical potential physiological sensors, this is accomplished through several surface electrodes. This time, the electrodes are placed above and below or left and right of the eye to be measured. Eye position trackers are also often used in this field, although eye position is more voluntarily controlled. Both EOG and eye position have become increasingly relevant in emotion recognition studies [5]. One reason for this is the role eye contact plays in human and primate behavior. Studies have shown that the decoding of facial expressions is significantly impeded without clear eye contact [6].

3. Heart Signals

The heart, the driving force of the cardiovascular system, plays a key role in our physiology. Even characteristics that can be measured non-invasively, such as blood pressure [7], contain information that can be used to infer emotional state. The most common example is determining whether a person is stressed. When stressed, the body releases a hormone that increases heart rate and blood pressure and affects many other cardiovascular interactions [8]. These signals can be obtained through blood pressure sensors.

Blood volume pulse (BVP) measures how much blood moves to and from a site over time. This signal is usually captured through optical, non-invasive means using a photoplethysmogram (PPG). A PPG works by shining light, usually in the infrared wavelengths, onto the body surface of interest [9]. Since tissues reflect more light than the hemoglobin found in red blood cells [10], measuring the amount of light that returns to the device gives an idea of how much blood is at the site. These devices are now so widespread that they have been integrated into many smartphones [11] and smart watches [12] created in the last decade. Although BVP signals have been used successfully to measure people's heart rates in recent years [13], they can provide much more information than that. The amplitude of the pulses and the volume being transferred can provide key insights into the cardiovascular health of a patient as well as their mental state [10].
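As an illustration of how a heart rate might be derived from a BVP trace, the sketch below times successive pulse peaks. The function name, the peak rule (a local maximum above the mean), and the synthetic 1.2 Hz signal are assumptions made for this example; it is not the method of the cited works, and real PPG data would need filtering and motion-artifact handling first.

```python
import math

def estimate_heart_rate_bpm(bvp, sample_rate_hz):
    """Estimate heart rate from a BVP trace by timing successive pulse peaks."""
    mean = sum(bvp) / len(bvp)
    # A sample counts as a pulse peak if it lies above the mean and
    # is a local maximum relative to its two neighbours.
    peaks = [
        i for i in range(1, len(bvp) - 1)
        if bvp[i] > mean and bvp[i - 1] < bvp[i] >= bvp[i + 1]
    ]
    if len(peaks) < 2:
        raise ValueError("need at least two pulse peaks")
    # Mean inter-beat interval in seconds, converted to beats per minute.
    intervals = [(b - a) / sample_rate_hz for a, b in zip(peaks, peaks[1:])]
    return 60.0 / (sum(intervals) / len(intervals))

# Synthetic stand-in for a PPG recording: a 1.2 Hz pulse (72 beats per
# minute) sampled at 50 Hz for 10 seconds.
SAMPLE_RATE_HZ = 50
synthetic_bvp = [math.sin(2 * math.pi * 1.2 * n / SAMPLE_RATE_HZ) for n in range(500)]
heart_rate = estimate_heart_rate_bpm(synthetic_bvp, SAMPLE_RATE_HZ)
```

The same peak list could also be used to inspect pulse amplitude, which the paragraph above notes carries information beyond heart rate.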

Electrocardiograms (ECG) measure the heart's electrical activity by sensing the voltage at electrodes, i.e., conductors placed on the chest surface. Historically, ECG has been used to evaluate how a patient's heart functions [14]. However, due to the strong connection between heart activity and mental state, it is now a mainstream physiological measurement for affective computing.

4. Skin Signals

Electrodermal activity (EDA) is the umbrella term for any electrical changes that occur within the skin. Like ECG, this is measured through surface electrodes, often placed on the body’s extremities. One signal that is measured under EDA is the galvanic skin response (GSR). GSR, a measure of the conductivity of human skin, can provide an indication of changes in stress levels in the human body [15]. Depending on our emotional state’s intensity, our skin produces sweat at different rates [16]. This results in the electrical conductance of our skin changing, which can then be measured.
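The conductance mentioned above follows from Ohm's law. The sketch below assumes a constant-voltage measurement, in which a small known voltage is applied across two skin electrodes and the resulting current is read; the function name and the example values are hypothetical and only illustrate the unit conversion.

```python
def skin_conductance_microsiemens(applied_voltage_v, measured_current_a):
    """Convert an applied voltage and measured current to conductance in microsiemens."""
    if applied_voltage_v <= 0:
        raise ValueError("applied voltage must be positive")
    # Conductance G = I / V (siemens), scaled to microsiemens.
    return measured_current_a / applied_voltage_v * 1e6

# Hypothetical readings: 0.5 V applied, current rising from 2.5 uA to 3.0 uA.
baseline = skin_conductance_microsiemens(0.5, 2.5e-6)
aroused = skin_conductance_microsiemens(0.5, 3.0e-6)
change = aroused - baseline
```

A rise in conductance between two readings, as in this made-up pair, is the kind of change that would accompany increased sweating.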

In addition to conductance, our skin temperature (ST) also changes in response to several internal and external stimuli. External stimuli, such as ambient temperature and any applied heat sources, are of little use to researchers studying physiological signals. However, internal causes of skin temperature change are of great interest. Emotionally charged music causes skin temperatures to rise or fall [17]. Therefore, emotional states are linked to skin temperature, and it may be possible to infer patients’ emotions through skin temperature. Skin temperature is measured either through contactless or contact methods, but recently, researchers have taken great interest in contactless methods, such as infrared cameras, that measure temperatures by collecting radiation emitted by the surface [18].

5. Lung Signals

Lung signals are physiological data of great interest in numerous studies. Breathing has been shown to change in response to emotional changes [19]. Typically, respiration data comes in the form of respiration volume (RV) and respiration rate (RR), both of which can be recorded by a single device, such as a wearable strain sensor [20]. Implementations vary widely, with some new methods even going contactless [21], but often a belt with several sensors is used, such as one by Neulog [22]. Oxygen saturation [23], measured as SpO2 or HbO2, can also contain information regarding emotional state.
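As a rough sketch of how a respiration rate could be read from a belt or strain signal, the code below counts upward crossings of the signal's mean, each of which marks the start of one inhalation. The function name, the sampling rate, and the synthetic breathing trace are assumptions for illustration and do not describe the cited sensors.

```python
import math

def respiration_rate_bpm(strain, sample_rate_hz):
    """Estimate breaths per minute from upward crossings of the signal mean."""
    mean = sum(strain) / len(strain)
    centered = [s - mean for s in strain]
    # Each negative-to-non-negative transition marks the start of an inhalation.
    crossings = [
        i for i in range(1, len(centered))
        if centered[i - 1] < 0 <= centered[i]
    ]
    if len(crossings) < 2:
        raise ValueError("need at least two breaths")
    duration_s = (crossings[-1] - crossings[0]) / sample_rate_hz
    return 60.0 * (len(crossings) - 1) / duration_s

# Synthetic chest-expansion trace: 0.25 Hz breathing (15 breaths per minute)
# riding on a constant baseline, sampled at 10 Hz for one minute.
SAMPLE_RATE_HZ = 10
synthetic_strain = [
    1.0 + 0.2 * math.sin(2 * math.pi * 0.25 * n / SAMPLE_RATE_HZ + 0.3)
    for n in range(600)
]
breaths_per_minute = respiration_rate_bpm(synthetic_strain, SAMPLE_RATE_HZ)
```

Subtracting the mean first removes the constant belt tension, so only the breathing oscillation drives the count.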

6. Imaging Signals

Facial expression recognition from imaging signals is a promising technique for emotion recognition, as human faces most often portray their internal emotional states [24]. General facial expression recognition involves three key phases: preprocessing, feature extraction, and classification [25]. Emotion recognition from facial expressions can be performed on either facial images or video extracts that contain facial expressions. While the images used for recognition are 2D in most cases, depth information can also be incorporated into these 2D images using 3D sensors [26].
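The three phases can be sketched with a deliberately tiny example. Everything here is a stand-in chosen for illustration: the 4x2 grayscale "image", the two brightness features, the nearest-centroid rule, and the labels `label_a` and `label_b` are hypothetical, whereas real systems use far richer features and classifiers.

```python
def preprocess(image):
    """Phase 1: scale pixel intensities to the range [0, 1]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1  # avoid division by zero for a uniform image
    return [[(p - lo) / span for p in row] for row in image]

def extract_features(image):
    """Phase 2: summarise the image as mean brightness of its top and bottom halves."""
    half = len(image) // 2

    def mean(rows):
        values = [p for row in rows for p in row]
        return sum(values) / len(values)

    return (mean(image[:half]), mean(image[half:]))

def classify(features, centroids):
    """Phase 3: pick the label whose centroid is nearest in feature space."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: squared_distance(features, centroids[label]))

# Hypothetical class centroids that would normally be learned from labelled data.
centroids = {"label_a": (0.8, 0.2), "label_b": (0.2, 0.8)}
toy_image = [[200, 220], [210, 230], [20, 40], [30, 10]]
predicted = classify(extract_features(preprocess(toy_image)), centroids)
```

Keeping each phase as its own function mirrors the structure described above and lets any one stage be swapped out independently.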

Our bodily expressions can also be a significant source of information regarding our emotions. Postural, kinematic, and geometrical features can convey human emotions [27]. Our emotional states are also reflected in how we walk and sit [28]. Motion data collected using RGB sensors are used for detection purposes [27].

7. Gait Sequences

Human gait refers to the walking style of a person. Alongside uniquely identifying the walker, it can also be used for detecting the walker’s emotions [29]. Kinetic or motion data collected by motion capture sensors, in combination with neural network algorithms, can be very effective in recognizing human emotions from gait sequences [30][31]. Traditionally, gait was measured by motion capture systems, force plates, electromyography, etc.; however, the emergence of modern technologies, such as accelerometers, electrogoniometers, gyroscopes, in-shoe pressure sensors, etc., has made gait analysis much easier and more efficient [32].

8. Speech Signals

Speech is the most commonly used and one of the most important mediums of communication for humans. Signals generated from the human voice or from audio clips are considered speech signals. Speech signals can contain information regarding the message, speaker, language, and emotion [33], and they have therefore been of great interest to researchers for emotion recognition. Similar to imaging signals, the general approach for speech emotion recognition has three stages: signal preprocessing, feature extraction, and feature classification [34]. Besides audio extracts, speech signals are usually collected from smartphones and wearable devices, where on-body, environmental, and location sensor modalities are merged [35].
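To give a flavour of the feature extraction stage, the sketch below splits an audio signal into frames and computes two simple per-frame features, short-time energy and zero-crossing rate. These two features, the frame length, and the synthetic "quiet" and "loud" segments are assumptions chosen for illustration; the cited works may use different feature sets.

```python
import math

def frame_features(samples, frame_len):
    """Return (energy, zero-crossing rate) for each non-overlapping frame."""
    features = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        # Short-time energy: mean squared amplitude within the frame.
        energy = sum(s * s for s in frame) / frame_len
        # Zero-crossing rate: fraction of adjacent sample pairs that change sign.
        crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
        features.append((energy, crossings / (frame_len - 1)))
    return features

# Synthetic audio: a quiet, low-pitched segment followed by a loud, higher-pitched one.
FRAME_LEN = 100
quiet = [0.1 * math.sin(2 * math.pi * 2 * n / FRAME_LEN + 0.1) for n in range(FRAME_LEN)]
loud = [0.9 * math.sin(2 * math.pi * 10 * n / FRAME_LEN + 0.1) for n in range(FRAME_LEN)]
features = frame_features(quiet + loud, FRAME_LEN)
```

The resulting per-frame feature tuples are the kind of input that the classification stage would consume.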

References

- Brain Basics: The Life and Death of a Neuron. Available online: https://www.ninds.nih.gov/health-information/public-education/brain-basics/brain-basics-life-and-death-neuron (accessed on 19 December 2022).

- Louis, E.K.S.; Frey, L.; Britton, J.; Hopp, J.; Korb, P.; Koubeissi, M.; Lievens, W.; Pestana-Knight, E. Electroencephalography (EEG): An Introductory Text and Atlas of Normal and Abnormal Findings in Adults, Children, and Infants; 2016.

- Malmivuo, J.; Plonsey, R. 13.1 INTRODUCTION. In Bioelectromagnetism; Oxford University Press: Oxford, UK, 1995.

- Soundariya, R.; Renuga, R. Eye movement based emotion recognition using electrooculography. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; pp. 1–5.

- Lim, J.Z.; Mountstephens, J.; Teo, J. Emotion recognition using eye-tracking: Taxonomy, review and current challenges. Sensors 2020, 20, 2384.

- Schurgin, M.; Nelson, J.; Iida, S.; Ohira, H.; Chiao, J.; Franconeri, S. Eye movements during emotion recognition in faces. J. Vis. 2014, 14, 14.

- Nickson, C. Non-Invasive Blood Pressure. 2020. Available online: https://litfl.com/non-invasive-blood-pressure/ (accessed on 19 December 2022).

- Porter, M. What Happens to Your Body When You're Stressed—and How Breathing Can Help. 2021. Available online: https://theconversation.com/what-happens-to-your-body-when-youre-stressed-and-how-breathing-can-help-97046 (accessed on 19 December 2022).

- Joseph, G.; Joseph, A.; Titus, G.; Thomas, R.M.; Jose, D. Photoplethysmogram (PPG) signal analysis and wavelet de-noising. In Proceedings of the 2014 Annual International Conference on Emerging Research Areas: Magnetics, Machines and Drives (AICERA/iCMMD), Kottayam, India, 24–26 July 2014; pp. 1–5.

- Jones, D. The Blood Volume Pulse—Biofeedback Basics. 2018. Available online: https://www.biofeedback-tech.com/articles/2016/3/24/the-blood-volume-pulse-biofeedback-basics (accessed on 19 December 2022).

- Tyapochkin, K.; Smorodnikova, E.; Pravdin, P. Smartphone PPG: Signal processing, quality assessment, and impact on HRV parameters. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 4237–4240.

- Pollreisz, D.; TaheriNejad, N. A simple algorithm for emotion recognition, using physiological signals of a smart watch. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Korea, 11–15 July 2017; pp. 2353–2356.

- Tayibnapis, I.R.; Yang, Y.M.; Lim, K.M. Blood Volume Pulse Extraction for Non-Contact Heart Rate Measurement by Digital Camera Using Singular Value Decomposition and Burg Algorithm. Energies 2018, 11, 1076.

- Johns Hopkins Medicine. Electrocardiogram. Available online: https://www.hopkinsmedicine.org/health/treatment-tests-and-therapies/electrocardiogram (accessed on 19 December 2022).

- Shi, Y.; Ruiz, N.; Taib, R.; Choi, E.; Chen, F. Galvanic skin response (GSR) as an index of cognitive load. In Proceedings of the CHI’07 Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 2651–2656.

- Farnsworth, B. What is GSR (Galvanic Skin Response) and How Does It Work. 2018. Available online: https://imotions.com/blog/learning/research-fundamentals/gsr/ (accessed on 19 December 2022).

- McFarland, R.A. Relationship of skin temperature changes to the emotions accompanying music. Biofeedback Self-Regul. 1985, 10, 255–267.

- Ghahramani, A.; Castro, G.; Becerik-Gerber, B.; Yu, X. Infrared thermography of human face for monitoring thermoregulation performance and estimating personal thermal comfort. Build. Environ. 2016, 109, 1–11.

- Homma, I.; Masaoka, Y. Breathing rhythms and emotions. Exp. Physiol. 2008, 93, 1011–1021.

- Chu, M.; Nguyen, T.; Pandey, V.; Zhou, Y.; Pham, H.N.; Bar-Yoseph, R.; Radom-Aizik, S.; Jain, R.; Cooper, D.M.; Khine, M. Respiration rate and volume measurements using wearable strain sensors. NPJ Digit. Med. 2019, 2, 1–9.

- Massaroni, C.; Lopes, D.S.; Lo Presti, D.; Schena, E.; Silvestri, S. Contactless monitoring of breathing patterns and respiratory rate at the pit of the neck: A single camera approach. J. Sens. 2018, 2018, 4567213.

- Neulog. Respiration Monitor Belt Logger Sensor NUL-236. Available online: https://neulog.com/respiration-monitor-belt/ (accessed on 19 December 2022).

- Kwon, K.; Park, S. An optical sensor for the non-invasive measurement of the blood oxygen saturation of an artificial heart according to the variation of hematocrit. Sens. Actuators A Phys. 1994, 43, 49–54.

- Tian, Y.; Kanade, T.; Cohn, J.F. Facial expression recognition. In Handbook of Face Recognition; Springer: Berlin/Heidelberg, Germany, 2011; pp. 487–519.

- Revina, I.M.; Emmanuel, W.S. A survey on human face expression recognition techniques. J. King Saud Univ.-Comput. Inf. Sci. 2021, 33, 619–628.

- Li, J.; Mi, Y.; Li, G.; Ju, Z. CNN-based facial expression recognition from annotated rgb-d images for human–robot interaction. Int. J. Humanoid Robot. 2019, 16, 1941002.

- Piana, S.; Stagliano, A.; Odone, F.; Verri, A.; Camurri, A. Real-time automatic emotion recognition from body gestures. arXiv 2014, arXiv:1402.5047.

- Ahmed, F.; Bari, A.H.; Gavrilova, M.L. Emotion recognition from body movement. IEEE Access 2019, 8, 11761–11781.

- Xu, S.; Fang, J.; Hu, X.; Ngai, E.; Guo, Y.; Leung, V.; Cheng, J.; Hu, B. Emotion recognition from gait analyses: Current research and future directions. arXiv 2020, arXiv:2003.11461.

- Bhatia, Y.; Bari, A.H.; Hsu, G.S.J.; Gavrilova, M. Motion capture sensor-based emotion recognition using a bi-modular sequential neural network. Sensors 2022, 22, 403.

- Janssen, D.; Schöllhorn, W.I.; Lubienetzki, J.; Fölling, K.; Kokenge, H.; Davids, K. Recognition of emotions in gait patterns by means of artificial neural nets. J. Nonverbal Behav. 2008, 32, 79–92.

- Higginson, B.K. Methods of running gait analysis. Curr. Sport. Med. Rep. 2009, 8, 136–141.

- Koolagudi, S.G.; Rao, K.S. Emotion recognition from speech: A review. Int. J. Speech Technol. 2012, 15, 99–117.

- Vogt, T.; André, E. Comparing feature sets for acted and spontaneous speech in view of automatic emotion recognition. In Proceedings of the 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 6 July 2005; pp. 474–477.

- Kanjo, E.; Younis, E.M.; Ang, C.S. Deep learning analysis of mobile physiological, environmental and location sensor data for emotion detection. Inf. Fusion 2019, 49, 46–56.

Subjects:

Health Care Sciences & Services

Update Date:

18 Jan 2023
