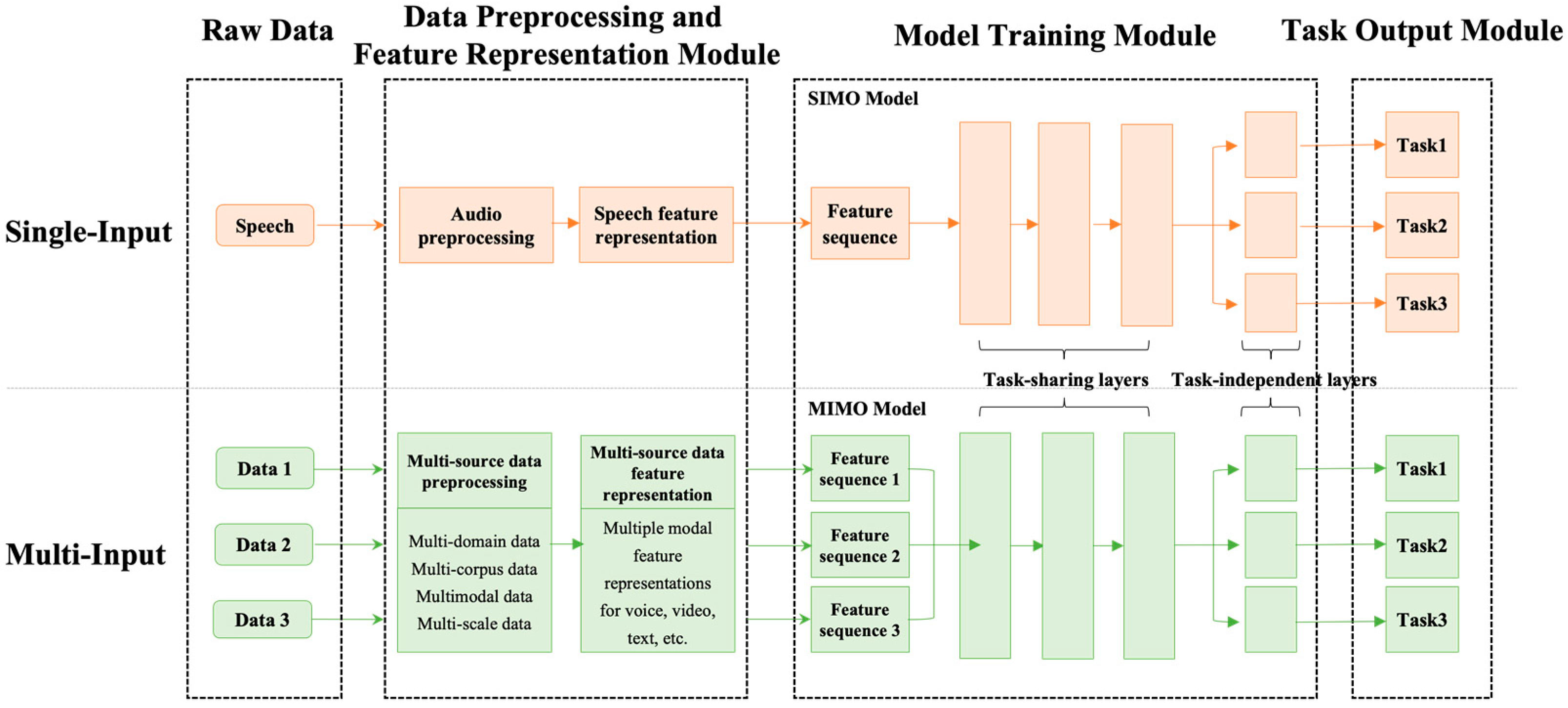

Speech emotion recognition (SER), a rapidly evolving task that aims to recognize a speaker's emotional state from speech, has become a key research area in affective computing. In natural multilingual scenarios, the variety of languages poses a serious challenge to the generalization ability of SER models, causing their performance to drop sharply and prompting researchers to ask how multilingual SER can be improved. To address this problem, an explainable Multitask-based Shared Feature Learning (MSFL) model is proposed for multilingual SER. Introducing multi-task learning (MTL) supplies MSFL with information from the related task of language recognition, which improves its generalization in multilingual settings and lays the foundation for learning multilingual shared features (MSFs).
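The multi-task idea above can be illustrated with a minimal sketch of hard parameter sharing. It assumes a single shared hidden layer that feeds two task heads: emotion classification (the main task) and language identification (the auxiliary task). The class `SharedMultiTaskModel`, the layer sizes, the ReLU activation, and the auxiliary weight `lang_weight=0.5` are hypothetical choices made for illustration; this is not the MSFL architecture itself. What it shows is the joint objective: because both losses depend on the shared layer, training on their weighted sum pushes the shared representation to carry information that is useful for recognizing the language as well as the emotion.

```python
import math
import random
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the largest logit before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs: Sequence[float], target: int) -> float:
    # Negative log-likelihood of the correct class (clamped to avoid log(0)).
    return -math.log(max(probs[target], 1e-12))


def linear(x: Sequence[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    # weights holds one row per output unit.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class SharedMultiTaskModel:
    """Hypothetical hard-parameter-sharing model: one shared layer feeds an
    emotion head (main task) and a language-ID head (auxiliary task)."""

    def __init__(self, n_features: int, n_hidden: int,
                 n_emotions: int, n_languages: int, seed: int = 0) -> None:
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> List[List[float]]:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.w_shared, self.b_shared = init(n_hidden, n_features), [0.0] * n_hidden
        self.w_emo, self.b_emo = init(n_emotions, n_hidden), [0.0] * n_emotions
        self.w_lang, self.b_lang = init(n_languages, n_hidden), [0.0] * n_languages

    def forward(self, x: Sequence[float]) -> Tuple[List[float], List[float]]:
        # Shared representation consumed by both task heads (ReLU activation).
        h = [max(0.0, z) for z in linear(x, self.w_shared, self.b_shared)]
        emo_probs = softmax(linear(h, self.w_emo, self.b_emo))
        lang_probs = softmax(linear(h, self.w_lang, self.b_lang))
        return emo_probs, lang_probs

    def joint_loss(self, x: Sequence[float], emotion: int, language: int,
                   lang_weight: float = 0.5) -> float:
        # Weighted sum of the main emotion loss and the auxiliary language-ID loss.
        emo_probs, lang_probs = self.forward(x)
        return (cross_entropy(emo_probs, emotion)
                + lang_weight * cross_entropy(lang_probs, language))


# Usage: 4 acoustic features, 4 emotion classes, 3 languages (all illustrative).
model = SharedMultiTaskModel(n_features=4, n_hidden=8, n_emotions=4, n_languages=3)
x = [0.2, -1.0, 0.5, 0.3]
emo_probs, lang_probs = model.forward(x)
loss = model.joint_loss(x, emotion=1, language=2)
```

Only the forward pass and the joint loss are shown. In practice the weights would be trained with an automatic-differentiation framework, and the auxiliary weight would be tuned or adapted during training rather than fixed.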

1. Introduction

2. Deep Learning for Speech Emotion Recognition

3. Multi-Task Learning for Speech Emotion Recognition
