
Lee, J.D.; Nguyen, O.; Lin, Y.; Luu, D.; Kim, S.; Amini, A.; Lee, S.J. Applications of Facial Scanners in Dentistry. Encyclopedia. Available online: https://encyclopedia.pub/entry/38067 (accessed on 07 February 2026).


Lee, J.D., Nguyen, O., Lin, Y., Luu, D., Kim, S., Amini, A., & Lee, S.J. (2022, December 06). Applications of Facial Scanners in Dentistry. In Encyclopedia. https://encyclopedia.pub/entry/38067


Facial scanning accuracy has proven clinically acceptable for dental applications. Accuracy is measured by comparing the scan with a control model and quantifying the deviations between the two; scanners with deviation values below 2 mm are considered acceptable. Reported deviation values for facial scanners range from 140 to 1330 μm, and the reported accuracy of most facial scanners is approximately 500 μm, within the limit for clinical acceptability.
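To make the deviation comparison concrete, the following minimal Python sketch assumes the scan and the control model have already been aligned and sampled as corresponding point pairs in millimetres. Published studies typically compute surface-to-surface deviations in dedicated metrology software, so the function names here are hypothetical, and comparing the root-mean-square (RMS) deviation with the 2 mm threshold is an assumption rather than a standardized protocol.

```python
import math


def deviation_summary(scan_points, reference_points):
    """Summarize point-to-point deviations (mm) between an aligned scan and a
    control model whose points are listed in corresponding order (hypothetical helper)."""
    if not scan_points or len(scan_points) != len(reference_points):
        raise ValueError("need two non-empty point lists of equal length")
    distances = [math.dist(p, q) for p, q in zip(scan_points, reference_points)]
    n = len(distances)
    return {
        "mean_mm": sum(distances) / n,
        "rms_mm": math.sqrt(sum(d * d for d in distances) / n),
        "max_mm": max(distances),
    }


def is_clinically_acceptable(summary, threshold_mm=2.0):
    # Assumption: the RMS deviation is the statistic compared with the 2 mm threshold.
    return summary["rms_mm"] < threshold_mm


# Hypothetical example: three points, each 0.5 mm (500 μm) off the control model.
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
scan = [(0.0, 0.0, 0.5), (10.0, 0.0, 0.5), (0.0, 10.0, -0.5)]
summary = deviation_summary(scan, reference)
print(summary["rms_mm"], is_clinically_acceptable(summary))  # prints: 0.5 True
```

Reporting the mean, RMS, and maximum together is a design choice: a low mean can hide a few large local errors, which the maximum exposes.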

Keywords: dentistry; facial scanner; virtual patient

1. Introduction: Significance of Facial Scanners in Dentistry

The continuous advancement of digital technologies has led to major innovations in dental techniques and workflows. The use of intraoral scanners and CAD/CAM technology to restore teeth and dental implants has become commonplace. More recently, facial scanners have gained a foothold in the digital dental workflow. This technology uses optical scanning techniques to capture and present a detailed three-dimensional representation of a subject’s face and head. The data can then be used for a multitude of purposes, including diagnosis, treatment planning, and patient communication.

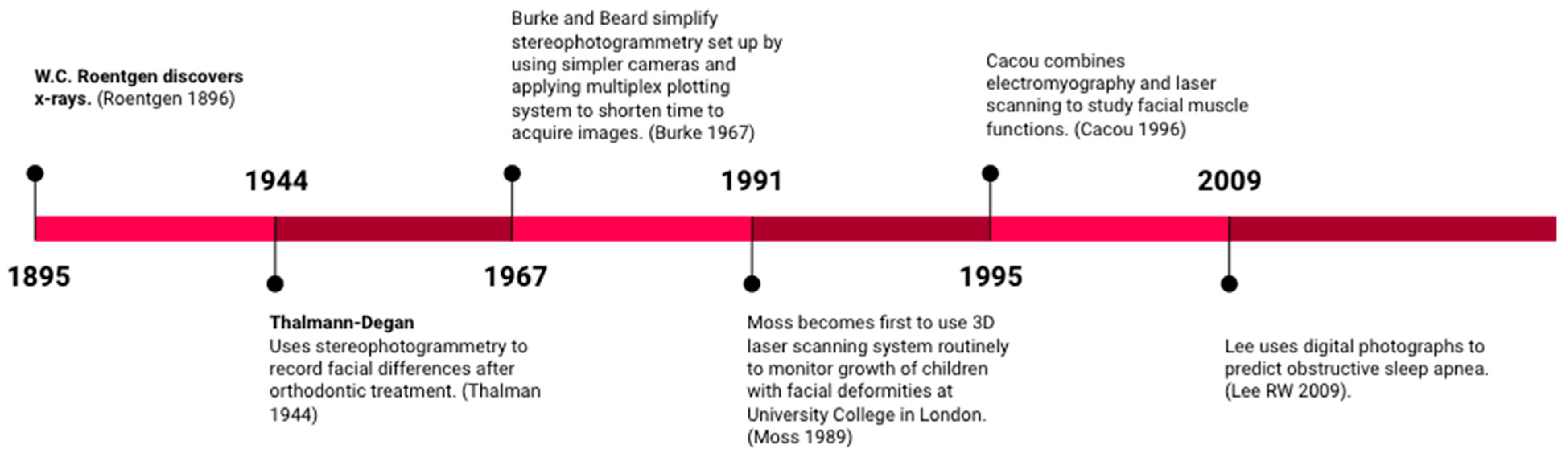

Despite the recent resurgence of facial scanning technology in dentistry, facial scans have a relatively long history of applications in the dental field, some of which are highlighted in Figure 1. In 1991, Moss and colleagues became the first to use a 3D laser scanning system routinely in a clinical setting [1], monitoring the growth of children with facial deformities at the Department of Orthodontics at University College London. Soon after, in 1995, Cacou and colleagues combined electromyography and laser scanning technology to study facial muscle function. In 2009, Lee et al. used craniofacial surface features from digital photographs to predict obstructive sleep apnea [2]. As technology continues to advance, facial scanning systems are likely to become more accessible and affordable in dentistry, encouraging novel applications.

Figure 1. Historical timeline of imaging technology.

2. Diagnostic Records and the Virtual Patient

A detailed collection of patient information, history, and records is required to form predictable treatment plans and execute successful dental treatment. Facial scanners have the potential to digitize and replace conventional extraoral records, the analog facebow, occlusal analysis, and diagnostic wax-ups. This entry reviews progress in obtaining a facebow record, tracking the mandible, and virtually designing a smile, all of which can be used to create a virtual patient. Information provided by this virtual patient allows the practitioner to plan treatment digitally across multiple facets of dentistry, such as prosthetic and implant rehabilitation, smile design, orthognathic surgery, and maxillofacial prosthodontics. The system also facilitates digital communication between providers, patients, and laboratory technicians in order to achieve a more predictable end result.

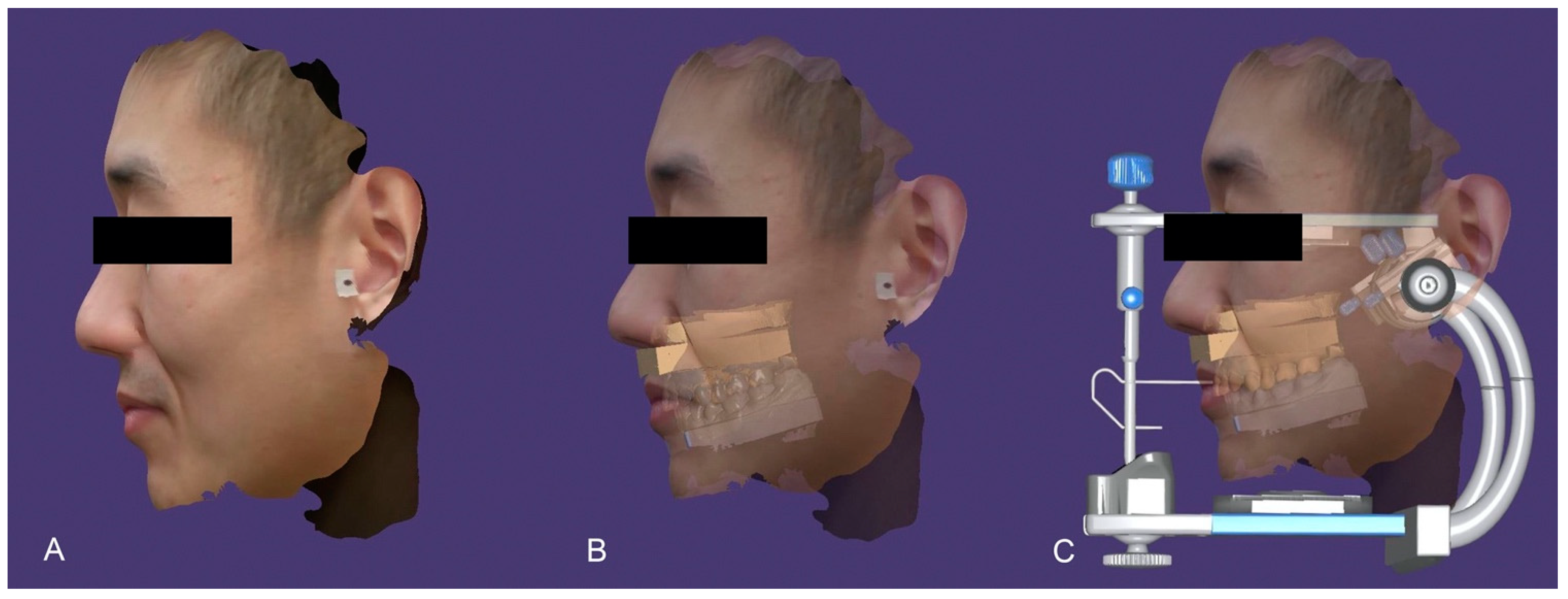

Routine digital radiography and photography provide standard diagnostic information critical to developing an accurate diagnosis and treatment plan. With the advent of facial scanners, additional data on extraoral soft tissues and facial structures can be acquired and superimposed to form a 3D virtual model of the patient. A virtual patient is created by merging digital diagnostic records such as a face scan, virtual facebow, intraoral scan, and cone-beam computed tomography (CBCT) via “best fit” analysis, in which corresponding landmarks are identified in the different scan data sets and superimposed to align the files, as seen in Figure 2 [3]. However, each imaging modality uses its own file format. For instance, CBCT images are stored in the Digital Imaging and Communications in Medicine (DICOM) format, facial scans as object (OBJ) files, and intraoral scans typically as stereolithography (STL) files. Combining these data sets can therefore be complicated.
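Part of the difficulty is simply that the formats encode geometry differently. The minimal Python sketch below, assuming small text-based files, reads vertex coordinates from a Wavefront OBJ file (as used for facial scans) and from an ASCII STL file (as used for intraoral scans) into one common list of points. The reader functions are hypothetical illustrations, not part of any dental software; binary STL and DICOM volumes (which need a dedicated library) are not handled. Note that STL repeats each shared vertex for every triangle, whereas OBJ stores shared vertices once, and neither format records units.

```python
def read_obj_vertices(text):
    """Collect (x, y, z) coordinates from the 'v' lines of a Wavefront OBJ file."""
    vertices = []
    for line in text.splitlines():
        parts = line.split()
        # 'v' = geometric vertex; 'vt' (texture) and 'vn' (normal) lines are skipped.
        if parts and parts[0] == "v":
            vertices.append(tuple(float(c) for c in parts[1:4]))
    return vertices


def read_ascii_stl_vertices(text):
    """Collect (x, y, z) coordinates from the 'vertex' lines of an ASCII STL file.
    Binary STL, which is also common, is not handled by this sketch."""
    vertices = []
    for line in text.splitlines():
        parts = line.split()
        if parts and parts[0] == "vertex":
            vertices.append(tuple(float(c) for c in parts[1:4]))
    return vertices


# Hypothetical one-triangle files describing the same geometry.
obj_text = "v 0 0 0\nv 1 0 0\nv 0 1 0\nvt 0.5 0.5\nf 1 2 3\n"
stl_text = """solid tooth
facet normal 0 0 1
  outer loop
    vertex 0 0 0
    vertex 1 0 0
    vertex 0 1 0
  endloop
endfacet
endsolid tooth
"""
print(read_obj_vertices(obj_text) == read_ascii_stl_vertices(stl_text))  # prints: True
```

Once both data sets are plain point lists in the same units, they can be passed to a registration step such as the landmark alignment discussed next.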

Figure 2. Virtual patient workflow. Facial scan captured (A), Facial scan merged with intraoral scan (B), Facial scan and intraoral scan mounted on a virtual articulator (C).

Another approach to combining scan data was proposed by Ayoub et al., who superimposed a stereophotogrammetric facial scan onto a CT scan of the patient’s underlying bone by converting both data sets into Virtual Reality Modeling Language (VRML) [4]. Ten landmarks were identified on both surfaces to facilitate superimposition: the right and left inner canthus, right and left outer canthus, nose, right and left alar cartilage, right and left cheilion, and pronasale. Registration errors between the aligned surfaces were within ±1.5 mm.
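The exact registration algorithm used by Ayoub et al. is not described here, so the following is only a sketch of one standard way to superimpose two surfaces from paired landmarks: the Kabsch least-squares rigid fit, written with NumPy. It assumes the same landmarks have been picked in the same order on both data sets; the landmark coordinates, noise level, and function names are hypothetical.

```python
import numpy as np


def rigid_landmark_registration(moving, fixed):
    """Least-squares rotation R and translation t with fixed ≈ R @ moving + t
    (Kabsch algorithm). Inputs are N x 3 arrays of corresponding landmarks."""
    moving = np.asarray(moving, dtype=float)
    fixed = np.asarray(fixed, dtype=float)
    mu_m, mu_f = moving.mean(axis=0), fixed.mean(axis=0)
    # Cross-covariance matrix of the centred landmark sets.
    H = (moving - mu_m).T @ (fixed - mu_f)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_f - R @ mu_m
    return R, t


def landmark_errors(moving, fixed, R, t):
    """Residual distance at each landmark after applying the transform."""
    moved = np.asarray(moving, dtype=float) @ R.T + t
    return np.linalg.norm(moved - np.asarray(fixed, dtype=float), axis=1)


# Hypothetical data: 10 landmarks on a CT-derived surface (mm) ...
rng = np.random.default_rng(0)
ct_landmarks = rng.uniform(-60.0, 60.0, size=(10, 3))
theta = np.radians(20.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([4.0, -3.0, 12.0])
# ... and the same landmarks on the facial scan, in another frame, with picking noise.
face_landmarks = (ct_landmarks - t_true) @ R_true + rng.normal(0.0, 0.3, size=(10, 3))

R, t = rigid_landmark_registration(face_landmarks, ct_landmarks)
errors = landmark_errors(face_landmarks, ct_landmarks, R, t)
print(f"max landmark error: {errors.max():.2f} mm")
```

The per-landmark residuals are what a registration-error range such as ±1.5 mm summarizes; because the fit is rigid, it cannot compensate for soft-tissue changes between the two acquisitions.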

Joda and Gallucci discussed a technique for superimposing DICOM, OBJ, and STL files using a landmark common to all three data sets [3]. In dentistry, the patient’s existing dentition is a common landmark used to overlay the three files and create a 3D virtual patient under static conditions. This allows treatment planning to be simulated, patient expectations to be managed, communication with patients and colleagues to be more effective, and clinical documentation to be acquired non-invasively. However, a common concern remains: there is presently no single application that can use all three file types to generate a virtual patient. Joda and Gallucci also suggested developing a commercially available system that can incorporate patient facial movements and fuse them with DICOM, STL, and OBJ files to create a 4D virtual patient.

Developing a 4D virtual patient would require incorporating virtual articulation data. Virtual facebows would be used to orient the digital models within the virtual space with respect to a reference plane, and the models would then be articulated with respect to each other. Methods reported in the literature for transferring the virtual facebow include facial scanning with reference points, photographs, and stereophotogrammetry. Virtual facebows and articulators are advantageous in that they circumvent common issues of conventional manual articulating techniques, such as material distortion, errors during orientation and positioning, and difficulty simulating patient data in 3D [5]. Eliminating these challenges could increase efficiency and reduce complications at the time of prosthesis delivery.

Lam et al. described a technique for mounting scanned data on a virtual articulator by registering the patient’s horizontal plane in the natural head position [6]. A 3D facial scan was taken of the patient in this position and oriented using a reference board to define the X, Y, and Z axes. The teeth were scanned in maximum intercuspation with an intraoral scanner (True Definition Scanner, 3M ESPE, St. Paul, MN, USA). An additional 3D facial scan was taken with a facebow in place to register the relation of the maxilla. Markers on the transfer device served as reference points to align the outside of the face to the maxilla, so that the dental arch was correctly positioned relative to the face. CAD-CAM software (Exocad GmbH, Darmstadt, Germany) oriented the virtual patient model to the virtual articulator. After calibration, the horizontal plane was registered to the virtual model and aligned to the virtual articulator at the transverse horizontal axis. Deviations with this method were less than 1 degree and 1 mm across five repeated measurements on the same patient. The technique produced repeatable results and is a good alternative to CBCT for registering the position of the maxilla, avoiding radiation exposure. A digitized facial scan also provides abundant reference points, which is especially valuable in edentulous cases or any case where reference points are minimal and technicians must otherwise rely on analog reference points provided by the dentist. The technician can place lines and planes on the “digital skull” to determine the Frankfort horizontal plane, Camper’s line, and the patient’s horizontal line, among others, for use as occlusal plane references [7].
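How a reference board can define X, Y, and Z axes is sketched below in Python. The marker layout is a hypothetical assumption, not the board used by Lam et al.: one marker at the origin, one along the +X direction, and one anywhere in the XY plane on the +Y side. From these, an orthonormal frame is built with cross products, and any scanned point can be re-expressed in board coordinates.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a):
    n = math.sqrt(_dot(a, a))
    if n == 0:
        raise ValueError("degenerate marker layout")
    return tuple(x / n for x in a)


def board_frame(origin, x_marker, plane_marker):
    """Orthonormal axes from three hypothetical board markers: the origin,
    a marker on the +X axis, and a marker anywhere in the XY plane (+Y side)."""
    x_axis = _unit(_sub(x_marker, origin))
    z_axis = _unit(_cross(x_axis, _sub(plane_marker, origin)))
    y_axis = _cross(z_axis, x_axis)  # unit length because z and x are orthonormal
    return origin, (x_axis, y_axis, z_axis)


def to_board_coordinates(point, frame):
    """Express a scanner-space point in the board's X, Y, Z frame."""
    origin, axes = frame
    offset = _sub(point, origin)
    return tuple(_dot(offset, axis) for axis in axes)


# Hypothetical marker positions as measured in the scanner's own coordinates (mm).
frame = board_frame((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0))
print(to_board_coordinates((0.0, 5.0, 0.0), frame))
```

Because the board is scanned together with the face, every facial and dental point can be expressed in the same board frame, which is what allows the horizontal plane to be carried over to the virtual articulator.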

In a series of studies, Solaberrieta et al. proposed various methods for relating models via virtual articulation [8]. In a 2015 study, they used an extraoral light scanner (ATOS Compact Scan 5 M; GOM mbH, Braunschweig, Germany) to locate six reference points on the patient’s head and jaws by means of a device worn on the head. Points 1–3 were located on the horizontal plane and points 4–6 on the patient’s occlusal plane. These points were then transferred directly to the virtual articulator with reverse engineering software (Geomagic Design X; 3D Systems, Littleton, CO, USA). The resulting position of the maxilla was compared with that obtained with a conventional pantograph. Although this method had the advantage of requiring no physical articulator or facebow, the deviation values indicated that the procedure needs to be improved before use in orthognathic or restorative treatment.
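Three points define a plane, so points 1–3 and points 4–6 each fix one plane. As an illustration of the geometry only (not the procedure implemented in the reverse engineering software), this Python sketch computes each plane's unit normal from its three points and reports the angle between the horizontal and occlusal planes. All coordinates are hypothetical.

```python
import math


def plane_normal(p1, p2, p3):
    """Unit normal of the plane through three non-collinear points."""
    u = [b - a for a, b in zip(p1, p2)]
    v = [b - a for a, b in zip(p1, p3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = math.sqrt(sum(c * c for c in n))
    if length == 0:
        raise ValueError("points are collinear")
    return [c / length for c in n]


def angle_between_planes_deg(plane_a, plane_b):
    """Angle (0 to 90 degrees) between two planes, each given as three points."""
    na, nb = plane_normal(*plane_a), plane_normal(*plane_b)
    cos_angle = min(1.0, abs(sum(a * b for a, b in zip(na, nb))))
    return math.degrees(math.acos(cos_angle))


# Hypothetical coordinates (mm): points 1-3 on a horizontal reference plane,
# points 4-6 on an occlusal plane tilted 10 degrees about the x axis.
tilt = math.radians(10.0)
horizontal = [(0.0, 0.0, 0.0), (60.0, 0.0, 0.0), (0.0, 60.0, 0.0)]
occlusal = [(0.0, 0.0, -40.0), (50.0, 0.0, -40.0),
            (0.0, 45.0 * math.cos(tilt), -40.0 + 45.0 * math.sin(tilt))]
print(round(angle_between_planes_deg(horizontal, occlusal), 3))  # prints: 10.0
```

Taking the absolute value of the dot product makes the result independent of the order in which each plane's points were picked, since reversing the order only flips the normal.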

Another technique presented by Solaberrieta et al. used an intraoral scanner, a digital camera, and reverse engineering software to locate casts on a virtual articulator [9]. Intraoral scans created digital casts of the maxilla and mandible. Adhesive points were applied directly to the patient’s face: one next to each temporomandibular joint and a third at the infraorbital point. A facebow fork carrying an elastomeric impression was placed in the subject’s mouth, and photographs of the face were taken to obtain a 3D image of the head with target points related to the facebow. The impression and facebow fork were then scanned, and reverse engineering software was used to align the facebow fork to the maxillary jig. This method allowed a terminal transverse hinge axis to be located using posterior landmarks near the temporomandibular joints.
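Once a terminal transverse hinge axis has been located from two posterior landmarks, a virtual articulator can simulate hinge opening by rotating mandibular geometry about that axis. The Python sketch below does this for a single point using Rodrigues' rotation formula. The condylar and incisal coordinates are hypothetical, and a pure hinge rotation is a simplification: real mouth opening beyond the initial range also involves condylar translation.

```python
import math


def rotate_about_axis(point, axis_a, axis_b, angle_deg):
    """Rotate `point` by angle_deg about the line through axis_a and axis_b,
    using Rodrigues' rotation formula (right-hand rule about axis_a -> axis_b)."""
    k = [b - a for a, b in zip(axis_a, axis_b)]
    norm = math.sqrt(sum(c * c for c in k))
    if norm == 0:
        raise ValueError("axis points must differ")
    k = [c / norm for c in k]
    v = [p - a for p, a in zip(point, axis_a)]  # position relative to the axis
    theta = math.radians(angle_deg)
    c, s = math.cos(theta), math.sin(theta)
    k_cross_v = [k[1] * v[2] - k[2] * v[1],
                 k[2] * v[0] - k[0] * v[2],
                 k[0] * v[1] - k[1] * v[0]]
    k_dot_v = sum(a * b for a, b in zip(k, v))
    rotated = [v[i] * c + k_cross_v[i] * s + k[i] * k_dot_v * (1 - c) for i in range(3)]
    return tuple(r + a for r, a in zip(rotated, axis_a))


# Hypothetical frame (mm): x lateral, y anterior, z superior. Hinge points near
# the right and left temporomandibular joints; incisal point anterior and below.
right_condyle, left_condyle = (-50.0, 0.0, 0.0), (50.0, 0.0, 0.0)
incisal = (0.0, 90.0, -30.0)
# With the axis running right -> left (+x), a negative angle moves the incisal point down.
opened = rotate_about_axis(incisal, right_condyle, left_condyle, -15.0)
print([round(c, 1) for c in opened])
```

Applying the same function to every vertex of a mandibular cast would open the whole virtual cast about the recorded axis.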

More recently, Inoue et al. compared virtual casts mounted with a novel method using landmarks from facial scans against virtual casts mounted using average values and those mounted with a traditional facebow [10]. The Bergström points and orbitale were marked on the patient before the facial scan was captured. The facial scans were then merged with the intraoral scans using a target bitefork (SNAP; Degrees of Freedom Inc., Marlboro, VT, USA), and the previously marked facial landmarks were used to align this file to the virtual articulator. Comparing positional differences between the mounted virtual casts in the different groups, they found no significant difference in mounting accuracy between the facial scanner and traditional facebow groups. However, a statistically significant difference was found between the casts mounted using average values and those mounted with a facebow. They concluded that virtual mounting using landmarks from a facial scan could be a more accurate option for virtual articulation than the use of average values.

An accurate diagnostic reproduction of a patient’s mandibular movements is important for fabricating prostheses without occlusal interferences. One factor in eliminating interferences with virtual articulation is precise registration of the patient’s sagittal condylar inclination (SCI). Hong and Noh used the Christensen method of measuring SCI, recording a protrusive bite with a facial scan and an intraoral scan [11]. A custom bite registration device with round markers was 3D printed for the subject to bite on, and additional markers were placed at the infraorbital point and on the left and right Beyron points to record the hinge axis. A facial scan was captured using a mobile phone with a dedicated application. Intraoral scans were taken with the patient in the maximal intercuspal position and in the protrusive interocclusal position. The scans were superimposed with 3D image analysis software (Geomagic Control X; 3D Systems, Luxembourg, Germany), and the Frankfort horizontal plane and midsagittal plane were established. The SCI was obtained by measuring the angle between a line projected onto the midsagittal plane and the Frankfort horizontal plane. Incorporating the SCI obtained with this technique into the virtual articulator enables the occlusal surfaces of the designed prosthesis to be customized to prevent interferences.
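The final measurement step can be written as a short calculation. The Python sketch below assumes, hypothetically, that a condylar reference point is available in both the intercuspal and the protrusive records in one common frame, together with normals of the midsagittal and Frankfort horizontal (FH) planes. It projects the condylar path onto the midsagittal plane and returns the angle between that projected line and the FH plane; it is an illustration of the geometry, not the authors' software workflow.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _unit(v):
    n = math.sqrt(_dot(v, v))
    if n == 0:
        raise ValueError("zero-length vector")
    return [x / n for x in v]


def sagittal_condylar_inclination_deg(condyle_closed, condyle_protruded,
                                      midsagittal_normal, fh_normal):
    """Angle (degrees) between the condylar path, projected onto the midsagittal
    plane, and the Frankfort horizontal (FH) plane."""
    path = [b - a for a, b in zip(condyle_closed, condyle_protruded)]
    n_ms = _unit(midsagittal_normal)
    n_fh = _unit(fh_normal)
    # Remove the lateral component so only the sagittal path remains.
    lateral = _dot(path, n_ms)
    sagittal = [p - lateral * n for p, n in zip(path, n_ms)]
    length = math.sqrt(_dot(sagittal, sagittal))
    if length == 0:
        raise ValueError("no sagittal condylar movement")
    # The angle between a line and a plane is arcsin(|unit direction . plane normal|).
    return math.degrees(math.asin(min(1.0, abs(_dot(sagittal, n_fh)) / length)))


# Hypothetical frame: x lateral, y anterior, z superior. The condylar point moves
# 4 mm forward, 3 mm down, and 2 mm sideways from intercuspation to protrusion.
sci = sagittal_condylar_inclination_deg((50.0, 0.0, 0.0), (52.0, 4.0, -3.0),
                                        (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
print(round(sci, 1))  # prints: 36.9
```

Projecting first matters: the 2 mm lateral shift in the example would otherwise lengthen the path and understate the sagittal inclination.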

Facial scanners can also be used to record mandibular movements. In a study assessing occlusal dynamics with a structured-light facial scanner, non-reflective targets were placed on the facial surfaces of the mandibular incisors and used to digitally record the motion of the mandible and temporomandibular joints by merging the motion data with the patient’s CBCT. This technique was suggested for use in fabricating dental prostheses or diagnosing temporomandibular disorders [12]. In another digital method for recording mandibular movement, real-time jaw movements of the scanned casts were created by transforming target-tracking data from four incisors in the maxilla and mandible. As techniques for virtually recording mandibular movements develop further, practitioners may be able to check occlusal contacts digitally during eccentric movements [13] and simulate articular guidance [14].
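The core of "transforming" scanned casts with tracking data is applying one rigid pose per tracked frame. The text does not detail how the poses are computed, so this Python sketch simply assumes a hypothetical list of 4x4 homogeneous transforms (mandible relative to maxilla, for example estimated by a landmark registration of the tracked targets) and uses them to trace the path of one point on the mandibular cast.

```python
import math


def apply_pose(pose, point):
    """Apply a 4x4 homogeneous rigid transform (nested lists, row-major) to a 3D point."""
    x, y, z = point
    return tuple(pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
                 for r in range(3))


def trace_point(poses, point):
    """Trajectory of one mandibular point (e.g. the incisal point) across tracked frames."""
    return [apply_pose(pose, point) for pose in poses]


def path_length(trajectory):
    """Total distance travelled along the trajectory, in the scan's units."""
    return sum(math.dist(a, b) for a, b in zip(trajectory, trajectory[1:]))


def translation(dx, dy, dz):
    """Helper for the example: a pure-translation pose."""
    return [[1, 0, 0, dx], [0, 1, 0, dy], [0, 0, 1, dz], [0, 0, 0, 1]]


# Hypothetical poses of the mandible relative to the maxilla (mm), one per frame.
poses = [translation(0, 0, 0), translation(0, 0, -5), translation(0, 2, -10)]
incisal_point = (0.0, 50.0, 0.0)
path = trace_point(poses, incisal_point)
print(path, round(path_length(path), 2))
```

Applying the same poses to every vertex of the mandibular scan animates the whole cast, which is what would allow occlusal contacts to be checked frame by frame.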

3. Smile Design

In the past, smile design was done in 2D by physically cutting and annotating printed photographs to simulate the desired final results of treatment. These 2D plans were then converted into a 3D model through an additive wax-up, which was transferred to the patient via a physical mock-up [15]. The greatest drawback of such multi-step analog conversions is the risk of introducing error and distortion into the workflow [15]. With advances in technology and digital cameras, digital smile design protocols and systems were developed for use with Keynote, PowerPoint, or specialized programs to streamline the design process and make the final results more predictable. In digital workflows with facial scanners, the patient’s facial features are recorded digitally and the conversions once done by hand are performed virtually, eliminating those sources of error [16].

Using a patient’s facial scan to create a 3D virtual patient model also allows faster and better communication within an interdisciplinary team and with the patient. Effective communication among the patient, clinician, and laboratory technician is vital when it comes to esthetics. Because esthetics is highly subjective and depends on many factors, a virtual model of the patient enables the clinician and patient to collaborate effectively to customize treatment on a digital platform. 3D virtual smile design is also valuable as a non-invasive, fast, and inexpensive tool for motivating patients to accept treatment, since simulating future treatment gives the patient a better opportunity to visualize how the planned dental prosthesis can affect their esthetics.

4. Obstructive Sleep Apnea (OSA)

According to an American Heart Association scientific statement, obstructive sleep apnea (OSA) syndrome is a breathing disorder associated with partial or complete cessation of airflow through the upper airway during sleep, leading to abrupt reductions in oxygen levels to 80–89%, increased heart rate, and an increased risk of stroke. Obesity and craniofacial abnormalities are key predisposing factors for airway collapse, although their contribution varies between ethnicities. Polysomnography (PSG) is currently the gold standard for diagnosing OSA. During the test, the patient undergoes an overnight sleep study with sensors monitoring breathing patterns, heart rate, the electrical activity of the heart, brain, and eyes, and body movements. Unfortunately, long wait times and the cost of PSG leave many patients undiagnosed, posing a public health issue.

Since the 1980s, studies have examined the relationship between craniofacial abnormalities and upper airway collapsibility [17][18][19][20]. Because craniofacial structure is correlated with airway collapse, facial scanners could be used to identify such features as a possible alternative diagnostic tool for OSA. 2D photographs have been used to classify OSA because they are easily obtained and inexpensive [21][22]. In a study that classified the clinical phenotypes of the disease, facial landmarks were placed on photographs of patients who had completed PSG testing to distinguish those with and without OSA [23]. The landmarks recorded face width, mandibular length, eye width, and cervic[…]

[…]landmarking by hand and automatic landmarking was similar at 69–70%. Applying landmarks to facial scans that create facial depth maps could generate more information about facial morphology than a simple 2D image [24].

In a recent study, 3D facial scans (3dMDface; 3dMD, Atlanta, GA, USA) were analyzed to predict the presence and severity of OSA through the development of a mathematical algorithm for clinical OSA assessment. The algorithm used linear measurements (the shortest straight-line distance between two points) and geodesic measurements (the shortest distance between two points along a curved surface). OSA was predicted with 91% accuracy when linear and geodesic measurements from the 3D images were combined in one algorithm [25]. This research provided an innovative push toward more streamlined and accurate diagnostic tools for OSA using facial scanners, compared with 2D photographic analysis.
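The difference between the two measurement types can be shown on a scanned mesh. The Python sketch below computes the linear (straight-line) distance between two vertices and approximates the geodesic distance as the shortest path along mesh edges using Dijkstra's algorithm. This edge-path approach is a coarse stand-in chosen for brevity, not the method of the cited study; it can only overestimate the true on-surface geodesic, which finer methods (such as fast marching) compute more exactly. The toy mesh is hypothetical.

```python
import heapq
import math


def edge_path_geodesic(vertices, faces, start, goal):
    """Approximate the geodesic (on-surface) distance between two mesh vertices
    as the shortest path along mesh edges, using Dijkstra's algorithm."""
    neighbors = {i: set() for i in range(len(vertices))}
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            neighbors[a].add(b)
            neighbors[b].add(a)
    best = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        d, v = heapq.heappop(heap)
        if v == goal:
            return d
        if d > best.get(v, math.inf):
            continue  # stale heap entry
        for w in neighbors[v]:
            nd = d + math.dist(vertices[v], vertices[w])
            if nd < best.get(w, math.inf):
                best[w] = nd
                heapq.heappush(heap, (nd, w))
    return math.inf  # goal not reachable


# Toy "ridge" mesh (mm): two triangles folded over a ridge running from vertex 1 to 3.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0), (2.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
faces = [(0, 1, 3), (1, 2, 3)]
linear = math.dist(vertices[0], vertices[2])
geodesic = edge_path_geodesic(vertices, faces, 0, 2)
print(round(linear, 3), round(geodesic, 3))  # prints: 2.0 2.828
```

On a convex facial region the geodesic distance exceeds the linear one, and it is this extra information about surface curvature that geodesic features add to linear measurements.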

Advancements in OSA treatment have also benefited from facial scanners. One study fabricated customized CPAP masks and compared their air leak and comfort with those of commercially designed CPAP masks [26]. Scans from a facial scanner (3dMDface; 3dMD, Atlanta, GA, USA) were used to 3D print the mask, and silicone was injected to form the cushion that contacts the skin. In this sample of 6 subjects, many found the customized mask highly comfortable, and its air leak rates were similar to those of each subject’s preferred commercial mask.

Although there are currently few studies on the use of facial scanners for OSA patients, the technology has significant potential to advance the field. Diagnosis of OSA could be substantially improved, since PSG is time-consuming, expensive, and resource-intensive. Developments in OSA diagnostic technology show promise for easier diagnosis and for accuracy that compares well with the current standard. The challenge remains to translate scientific findings into clinical applications, but with OSA still largely underdiagnosed and untreated, there is significant room for growth in this field [27].

References

- Moss, J.P.; Coombes, A.M.; Linney, A.D.; Campos, J. Methods of three dimensional analysis of patients with asymmetry of the face. Proc. Finn. Dent. Soc. Suom. Hammaslaak. Toim. 1991, 87, 139–149.

- Karatas, O.H.; Toy, E. Three-dimensional imaging techniques: A literature review. Eur. J. Dent. 2014, 8, 132–140.

- Joda, T.; Gallucci, G.O. The virtual patient in dental medicine. Clin. Oral Implants Res. 2015, 26, 725–726.

- Ayoub, A.F.; Xiao, Y.; Khambay, B.; Siebert, J.P.; Hadley, D. Towards building a photo-realistic virtual human face for craniomaxillofacial diagnosis and treatment planning. Int. J. Oral Maxillofac. Surg. 2007, 36, 423–428.

- Lepidi, L.; Galli, M.; Mastrangelo, F.; Venezia, P.; Joda, T.; Wang, H.; Li, J. Virtual Articulators and Virtual Mounting Procedures: Where Do We Stand? J. Prosthodont. Off. J. Am. Coll. Prosthodont. 2020, 30, 24–35.

- Lam, W.Y.H.; Hsung, R.T.C.; Choi, W.W.S.; Luk, H.W.K.; Pow, E.H.N. A 2-part facebow for CAD-CAM dentistry. J. Prosthet. Dent. 2016, 116, 843–847.

- Schweiger, J. 3D Facial Scanning. Published December 2018. Available online: https://www.zirkonzahn.com/assets/files/publications/EN-Dental-Dialogue-2018-12-web.pdf (accessed on 29 December 2020).

- Solaberrieta, E.; Garmendia, A.; Minguez, R.; Brizuela, A.; Pradies, G. Virtual facebow technique. J. Prosthet. Dent. 2015, 114, 751–755.

- Solaberrieta, E.; Mínguez, R.; Barrenetxea, L.; Otegi, J.R.; Szentpétery, A. Comparison of the accuracy of a 3-dimensional virtual method and the conventional method for transferring the maxillary cast to a virtual articulator. J. Prosthet. Dent. 2015, 113, 191–197.

- Inoue, N.; Scialabba, R.; Lee, J.D. A comparison of virtually mounted dental casts from traditional facebow records, average values, and 3D facial scans. J. Prosthet. Dent. 2022.

- Hong, S.J.; Noh, K. Setting the sagittal condylar inclination on a virtual articulator by using a facial and intraoral scan of the protrusive interocclusal position: A dental technique. J. Prosthet. Dent. 2021, 125, 392–395.

- Kwon, J.H.; Im, S.; Chang, M.; Kim, J.E.; Shim, J.S. A digital approach to dynamic jaw tracking using a target tracking system and a structured-light three-dimensional scanner. J. Prosthodont. Res. 2019, 63, 115–119.

- Kim, J.E.; Park, J.H.; Moon, H.S.; Shim, J.S. Complete assessment of occlusal dynamics and establishment of a digital workflow by using target tracking with a three-dimensional facial scanner. J. Prosthodont. Res. 2019, 63, 120–124.

- Stavness, I.K.; Hannam, A.G.; Tobias, D.L.; Zhang, X. Simulation of dental collisions and occlusal dynamics in the virtual environment. J. Oral Rehabil. 2016, 43, 269–278.

- Antolín, A.; Rodríguez, N.A.; Crespo, J.A. Digital Flow in Implantology Using Facial Scanner. Published 2018. Available online: https://www.semanticscholar.org/paper/Digital-Flow-in-Implantology-Using-Facial-Scanner-Antol%C3%ADn-Rodr%C3%ADguez/0397531202d32a61f18337de99e4b3acf546206b (accessed on 23 December 2020).

- Lin, W.S.; Harris, B.T.; Phasuk, K.; Llop, D.R.; Morton, D. Integrating a facial scan, virtual smile design, and 3D virtual patient for treatment with CAD-CAM ceramic veneers: A clinical report. J. Prosthet. Dent. 2018, 119, 200–205.

- Jamieson, A.; Guilleminault, C.; Partinen, M.; Quera-Salva, M.A. Obstructive sleep apneic patients have craniomandibular abnormalities. Sleep 1986, 9, 469–477.

- Ferguson, K.A.; Ono, T.; Lowe, A.A.; Ryan, C.F.; Fleetham, J.A. The relationship between obesity and craniofacial structure in obstructive sleep apnea. Chest 1995, 108, 375–381.

- Kushida, C.A.; Efron, B.; Guilleminault, C. A predictive morphometric model for the obstructive sleep apnea syndrome. Ann. Intern. Med. 1997, 127, 581–587.

- Lee, R.W.W.; Vasudavan, S.; Hui, D.; Prvan, T.; Petocz, P.; Darendeliler, M.A.; Cistulli, P.A. Differences in Craniofacial Structures and Obesity in Caucasian and Chinese Patients with Obstructive Sleep Apnea. Sleep 2010, 33, 1075–1080. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2910536/ (accessed on 29 December 2020).

- Lee, C.H.; Kim, J.-W.; Lee, H.J.; Yun, P.-Y.; Kim, D.-Y.; Seo, B.S.; Yoon, I.-Y.; Mo, J.-H. An investigation of upper airway changes associated with mandibular advancement device using sleep videofluoroscopy in patients with obstructive sleep apnea. Arch. Otolaryngol. Head Neck Surg. 2009, 135, 910–914.

- Espinoza-Cuadros, F.; Fernández-Pozo, R.; Toledano, D.T.; Alcázar-Ramírez, J.D.; López-Gonzalo, E.; Hernández-Gómez, L.A. Speech Signal and Facial Image Processing for Obstructive Sleep Apnea Assessment. Comput. Math. Methods Med. 2015, 2015, 489761.

- Balaei, A.T.; Sutherland, K.; Cistulli, P.A.; de Chazal, P. Automatic detection of obstructive sleep apnea using facial images. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017; pp. 215–218.

- Islam, S.M.S.; Mahmood, H.; Al-Jumaily, A.A.; Claxton, S. Deep Learning of Facial Depth Maps for Obstructive Sleep Apnea Prediction. In Proceedings of the 2018 International Conference on Machine Learning and Data Engineering (ICMLDE), Sydney, Australia, 3–7 December 2018; pp. 154–157.

- Eastwood, P.; Gilani, S.Z.; McArdle, N.; Hillman, D.; Walsh, J.; Maddison, K.; Goonewardene, B.M.; Mian, A. Predicting sleep apnea from three-dimensional face photography. J. Clin. Sleep Med. 2020, 16, 493–502.

- Duong, K.; Glover, J.; Perry, A.; Olmstead, D.; Colarusso, P.; Ungrin, M.; MacLean, J.; Martin, A. Customized Facemasks for Continuous Positive Airway Pressure: Feasibility Study in Healthy Adults Volunteers. Am. J. Respir. Crit. Care Med. 2020, 201, A2432.

- Luyster, F.S.; Buysse, D.J.; Strollo, P.J. Comorbid insomnia and obstructive sleep apnea: Challenges for clinical practice and research. J. Clin. Sleep Med. JCSM Off. Publ. Am. Acad. Sleep Med. 2010, 6, 196–204.


Subjects: Dentistry, Oral Surgery & Medicine
