Video Upload Options

Test generation is the process of creating a set of test data or test cases for testing the adequacy of new or revised software applications. Test Generation is seen to be a complex problem and though a lot of solutions have come forth most of them are limited to toy programs. Test Generation is one aspect of software testing. Since testing is labor-intensive, accounting for nearly one third of the cost of the system development, the problem of generating quality test data quickly, efficiently and accurately is seen to be important.

1. Basic Concepts

1.1. Mathematical Modelling

A program P could be considered as a function, P:S → R, where S is the set of all possible inputs and R the set of all possible outputs. An input variable of function P is mapped to an input parameter of P. P(x) denotes execution of program for certain input x.[1][2]

1.2. Control-Flow Graph

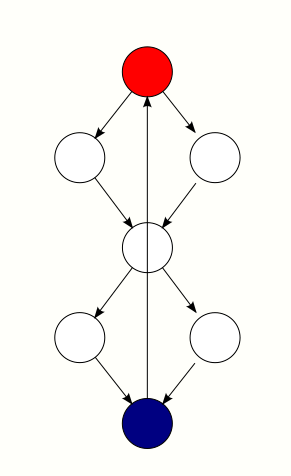

A Control-Flow Graph of a program P is a directed graph G = (N, E, s, e) consisting of a set of nodes N and a set of edges E = {(n, m)|n, m ∈ N } connecting the nodes.[1][2]

Each node denotes a basic block which itself is a sequence of instructions. It is important to note that in every basic block the control enters through the entry node and leaves at the end without stopping or branching except at the end. Basically, a block is always executed as a whole. The entry and exit nodes are two special nodes denoted by s and e respectively.

An edge in a control-flow graph represents possible transfer of control. All edges have associated with them a condition or a branch predicate. The branch predicate might be the empty predicate which is always true. In order to traverse the edge the condition of the edge must hold. If a node has more than one outgoing edge the node is a condition and the edges are called branches.

2. Test Data Generators

Based on the Mathematical Modelling above we can simply state the Test Data Generator Problem as: Given a program P and a path u, generate input x ∈ S, so that x traverses path u.

2.1. Random Test Data Generators

Random test data generation is probably the simplest method for generation of test data. The advantage of this is that it can be used to generate input for any type of program. Thus to generate test data we can randomly generate a bit stream and let it represent the data type needed. However, random test data generation does not generate quality test data as it does not perform well in terms of coverage. Since the data generated is based solely on probability it cannot accomplish high coverage as the chances of it finding semantically small faults is quite low.[3]

If a fault is only revealed by a small percentage of the program input it is said to be a semantically small fault. For an example of a semantically small fault consider the following code:

void test(char x, char y) { if (x == y) printf("Equal"); else printf("Not Equal"); }

It is easy to see that the probability of execution of the first statement is significantly lesser than that of the second statement. As the structures in it grow complex so does the probability of its execution. Thus, such semantically small faults are hard to find using random test data generation.

However, Random Test Data Generation is usually used as a benchmark as it has the lowest acceptable rate of generating test data.

2.2. Goal-Oriented Test Data Generators

The Goal-Oriented approach provides a guidance towards a certain set of paths. The Test Data Generators in this approach generate an input for any path u instead of the usual approach of generating input from the entry to the exit of a block of code. Thus, the generator can find any input for any path p which is a subset of the path u. This drastically reduces the risk of generating relatively infeasible paths and provides a way to direct the search. Two methods follow this technique:

- The Chaining approach

- Assertion-oriented approach.

Chaining approach

The chaining approach is an extension of the goal-oriented approach. It is seen that the main limitation of the test data generation methods is that only the control-flow graph is used to generate the test data. This limited knowledge may make our selection harder. Thus, it is seen that the path-oriented approach usually has to generate a large number of paths before it finds the "right" path. This is because the path selection is blind.[4] The chaining approach tries to identify a chain of nodes that are vital to the execution of the goal node. The chaining approach starts by executing for any arbitrary input x. The search program, during the execution of each branch decides whether to continue execution through this branch or if an alternative branch be taken because the current branch does not lead to the goal node. If it is observed that execution flow is undesirable then search algorithms are used to automatically find new input to change the flow execution. However, if for this point also the search process cannot find input X to change the flow of execution then the chaining approach attempts to alter the flow at node p due to which an alternative branch at p can be executed.[4][5]

Assertion-oriented approach

The assertion-oriented approach is an extension of the chaining approach. In this approach assertions - that is constraint conditions are inserted. This can be done either manually or automatically. If the program doesn't hold on execution there is an error in the program or the assertion.

Therefore, when an assertion is executed it must hold. Suppose we have code as follows:

void test(int a) { int b, c; b = a - 1; assertion(b != 0); c = (1 / b); }

In the above code, the program should hold at the assertion statement. If the assertion does not hold it means that the path followed leads to an error. Thus, the goal of this approach is to find any path to an assertion that does not hold. The other major advantage of this approach is that all the other methods expect the value of an execution of the generated test data to be calculated from some other source than the code. However, in this approach it is not necessary since expected value is provided with the assertion.

2.3. Pathwise Test Data Generators

Pathwise Test Data Generation is considered to be one of the best approaches to Test Data Generation. This approach does not give the generator the choice of selecting between multiple paths but just gives it one specific path for it to work on. Hence, the name Pathwise Test Data Generator. Thus, except for the fact that this method uses specific paths, it is quite similar to Goal-Oriented test data generation. The use of specific paths leads to a better knowledge and prediction of coverage. However, this also makes it harder to generate the needed test data.

Pathwise test data generators require two inputs from the user:

- The program to be tested

- Testing criterion (e.g.: path coverage, statement coverage, etc.)

If systems are solely based on the control-flow graph to select specific paths it more often than not leads to the selection of infeasible paths. In view of this, mechanisms have been proposed for a constraint based test data generation.[6] These mechanisms focus on fault-based testing introducing deliberate changes in the code. These deliberate changes are called as "mutants" and this type of testing is called Mutation Testing.

2.4. Intelligent Test Data Generators

Intelligent Test Data Generators depend on sophisticated analysis of the code to guide the search of the test data. Intelligent Test Data Generators are essentially utilize one of the test data generation method coupled with the detailed analysis of the code. This approach may generate test data quicker than the other approaches but the analysis required for the utilization of this approach over a wide variety of programs is quite complex and requires a great deal of insight to anticipate the different situations that may arise.[7][8] There are open-source packages for this such as DataGenerator.[9]

2.5. Chaotic Data Generators

Chaotic Data Generators generate data from a chaotic attractor. The chaotic attractor generates non-repeating data, and a small change in initial conditions in the attractor may cause a large change later in data generated.[10]

2.6. Hypermedia Generators

Hypermedia Generators generate hypertext, hypervolumes, and hypermovies.

2.7. Quantum Data Generators

A Quantum Data Generator generates qubits according to some other data generator. In this case, qubits are the data.

2.8. Test Data Based on Production

It is possible to extract test data from executions observed in production. For instance, Tiwari et al. capture runtime objects in Java applications, serialize them, and reuse them as test resources for regression testing.[11]

3. Test Assertion Generators

Once we have some test data, one needs a test oracle to assess the behavior of the program under test for the given test input. There are different kinds of oracles that can be used.[12]

One can also improve the assertions for existing test data. For instance, one can generate and add new assertions in existing test cases. This is what the DSpot system does in the context of the Java programming language: it does dynamic analysis of JUnit test cases and generate missing assertions.[13]

4. Test Case Generators

While test data generators generate only inputs, test case generators synthesize full test cases. For example, in an object-oriented language, a test case contains object creations and method calls. When one maximizes coverage, the generated test cases have no assertion.

// Example of generated test case that covers the constructor and two methods. @Test void generatedJunitTest() { Account b = new BankAccount(0); b.deposit(7); b.withdraw(3); }

To add assertions in the generated test cases, one must have a test oracle. For instance, one can use a reference implementation that has an oracle. Then, the expected value of the generated assertions is the actual value returned by the reference implementation.

@Test void generatedJunitTest() { Account b = new BankAccount(0); b.deposit(7); b.withdraw(3); assertEquals(4, b.getAmount()); }

EvoSuite[14] is an example of such a test case generator for Java.

5. Problems of Test Generation

Test generation is highly complex. The use of dynamic memory allocation in most of the code written in industry is the most severe problem that the Test Data Generators face as the usage of the software then becomes highly unpredictable, due to this it becomes harder to anticipate the paths that the program could take making it nearly impossible for the Test Data Generators to generate exhaustive Test Data. However, in the past decade significant progress has been made in tackling this problem better by the use of genetic algorithms and other analysis algorithms. The following are problem areas that are encountered while implementing the test data generation techniques for actual industry used code.[2]

5.1. Arrays and Pointers

Arrays and pointers can be considered to have similar constructs and also suffer from the same kind of problems. Arrays and pointers create problems during symbolic execution as they complicate the substitution since their values are not known. Also, in order to generate input for arrays and pointers, there are multiple complicating factors like the index of the array or the structure of the input that needs to be given to the pointer. This issue is further compounded by the possibility of dynamic allocation in arrays and pointers.

5.2. Objects

Objects, due to their dynamic nature, pose a problem for test generation. This is further compounded by the use of other object oriented features. All of this makes it hard to determine which code will be called at runtime. An attempt has been made to address the problem of Object-Oriented Code by use of mutation.[15]

5.3. Loops

Loops that vary their behaviour depending on the input variables are potentially problematic as it is difficult to anticipate the path that could be taken. However, if the given path is specific and doesn't change behavior, the loops cause no problem. There are a few techniques that have been suggested to solve this potential problem with loops.[16]

5.4. Modules

A program usually consists of modules which then itself consists of functions. Two solutions have been proposed for generating test data for such functions:[16]

- Brute Force Solution: Inline the called functions into the target

- Analyzing the Called Functions: Analyze the called functions first and generate path predicates for those functions.

However, often source code of the modules is not accessible and hence a complete static analysis is not always possible.

5.5. Infeasible Paths

To generate test data so as to traverse a path involves solving a system of equations. If there are no solutions then the path given is infeasible. However, in this we are limited by the problem of undecidable nature of the system of equations. The most common method adopted is to set a highest number of iterations to be done before declaring the path as in-feasible.

5.6. Constraint Satisfaction

As the name suggests, constraint satisfaction is the process of finding a solution that conforms to a set of constraints that the variables must satisfy. A solution is therefore a vector of variables that satisfies all constraints. Constraint satisfaction is a difficult problem to solve and hence is not usually properly implemented. All the programs need to satisfy a constraint in some way or the other. There have been many methods like iterative relaxation, genetic algorithms, etc. which allow solving for constraints.[6] [7]

5.7. Readability of Generated Tests

One of challenges of test generation is readability: when the generated tests are meant to be committed to the test suite, developers have been able to easily understand the generated test cases. However, generated test cases often contain obscure generated variable names.[17] One way to overcome this problem is that, instead of generating new tests, to improve existing tests already written by humans. This is known as test amplification.[18]

References

- Korel, Bogdan (August 1990). "Automated Software Test Data Generation". IEEE Transactions on Software Engineering 16 (8): 870–879. doi:10.1109/32.57624. https://dx.doi.org/10.1109%2F32.57624

- Edvardsson, Jon (October 1999). "A Survey on Automatic Test Data Generation". Proceedings of the Second Conference on Computer Science and Engineering in Linkoping.

- Offutt, J.; J. Hayes (1996). "A Semantic Model of Program Faults". International Symposium on Software Testing and Analysis.

- Korel, Bogdan (1990). "A Dynamic Approach of Automated Test Data Generation". Conference on Software Maintenance.

- Ferguson, Roger; Bogdan Korel (1996). "The Chaining Approach for Software Test Data Generation". ACM. http://www.cparity.com/projects/AcmClassification/samples/226158.pdf.

- DeMillo, R.A.; Offutt A.J. (September 1991). "Constraint-based automatic test data generation". IEEE Transactions on Software Engineering 19 (6): 640. doi:10.1109/32.232028. https://dx.doi.org/10.1109%2F32.232028

- Pargas, Roy; Harrold, Mary; Peck, Robert (1999). "Test Data Generation using Genetic Algorithms". Journal of Software Testing, Verification and Reliability 9 (4): 263–282. doi:10.1002/(sici)1099-1689(199912)9:4<263::aid-stvr190>3.0.co;2-y. http://www.cc.gatech.edu/~harrold/6340/cs6340_fall2009/Readings/pga.pdf.

- Michael, C.C.; McGraw, G.E.; Schatz, M.A.; Walton, C.C. (1997). "Genetic algorithms for dynamic test data generation". Proceedings 12th IEEE International Conference Automated Software Engineering. pp. 307–308. doi:10.1109/ASE.1997.632858. ISBN 978-0-8186-7961-2. https://dx.doi.org/10.1109%2FASE.1997.632858

- "The DataGenerator". https://finraos.github.io/DataGenerator/.

- "Chaos Data Generator". http://sprott.physics.wisc.edu/cdg.htm.

- Tiwari, Deepika; Zhang, Long; Monperrus, Martin; Baudry, Benoit (2021). "Production Monitoring to Improve Test Suites". IEEE Transactions on Reliability: 1–17. doi:10.1109/TR.2021.3101318. ISSN 0018-9529. https://ieeexplore.ieee.org/document/9526340/.

- Barr, Earl T.; Harman, Mark; McMinn, Phil; Shahbaz, Muzammil; Yoo, Shin (2015-05-01). "The Oracle Problem in Software Testing: A Survey". IEEE Transactions on Software Engineering 41 (5): 507–525. doi:10.1109/TSE.2014.2372785. ISSN 0098-5589. https://dx.doi.org/10.1109%2FTSE.2014.2372785

- Danglot, Benjamin; Vera-Pérez, Oscar Luis; Baudry, Benoit; Monperrus, Martin (2019). "Automatic test improvement with DSpot: a study with ten mature open-source projects" (in en). Empirical Software Engineering 24 (4): 2603–2635. doi:10.1007/s10664-019-09692-y. ISSN 1573-7616. https://hal.archives-ouvertes.fr/hal-01923575/document.

- Fraser, Gordon; Arcuri, Andrea (2011). "EvoSuite". Proceedings of the 19th ACM SIGSOFT symposium and the 13th European conference on Foundations of software engineering - SIGSOFT/FSE '11 (New York, New York, USA: ACM Press). doi:10.1145/2025113.2025179. http://dx.doi.org/10.1145/2025113.2025179.

- Seater, Robert; Gregory Dennis. Automated Test Data Generation with SAT. http://live.iugaza.edu.ps/NR/rdonlyres/Electrical-Engineering-and-Computer-Science/6-883Fall-2005/5DC306AF-F74F-454F-9296-97B11CDCFC31/0/test_generation.pdf.

- Ramamoorthy, C. V.; S. F. Ho; W. T. Chen (December 1976). "On the Automated Generation of Program Test Data". IEEE Transactions on Software Engineering SE-2 (4): 293–300. doi:10.1109/tse.1976.233835. https://dx.doi.org/10.1109%2Ftse.1976.233835

- Grano, Giovanni; Scalabrino, Simone; Gall, Harald C.; Oliveto, Rocco (2018). "An empirical investigation on the readability of manual and generated test cases". Proceedings of the 26th Conference on Program Comprehension - ICPC '18. pp. 348–351. doi:10.1145/3196321.3196363. ISBN 9781450357142. https://www.zora.uzh.ch/id/eprint/150985/1/150985.pdf.

- Danglot, Benjamin; Vera-Perez, Oscar; Yu, Zhongxing; Zaidman, Andy; Monperrus, Martin; Baudry, Benoit (2019). "A snowballing literature study on test amplification" (in en). Journal of Systems and Software 157: 110398. doi:10.1016/j.jss.2019.110398. https://dx.doi.org/10.1016%2Fj.jss.2019.110398