| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Pawel Kasprowski | -- | 1611 | 2022-11-22 20:01:12 |
| 2 | Conner Chen | + 11 word(s) | 1622 | 2022-11-23 07:41:17 |
| 3 | Conner Chen | Meta information modification | 1622 | 2022-11-25 01:24:24 |


Birawo, B.; Kasprowski, P. Eye Movement Events. Encyclopedia. Available online: https://encyclopedia.pub/entry/35872 (accessed on 07 February 2026).


Eye tracking is the process of tracking the movement of the eyes to know exactly where and for how long a person is looking. Classifying raw eye-tracker data into eye movement events reduces the complexity of eye movement analysis.

eye tracking

eye movement events

fixations

1. Introduction

Eye tracking is the process of tracking the movement of the eyes to know exactly where and for how long a person is looking [1]. The primary purpose of eye movement is to direct the eyes towards the targeted object and keep it at the center of the fovea to provide clear vision of the object. Eye tracking is used in various research fields such as cognitive science, psychology, neurology, engineering, medicine and marketing, among others [2]. Human–computer interaction is another application: gaze-based input allows people with disabilities to interact with a computer [3]. Eye tracking can also be used to monitor and control automobile drivers [4]. It is thus highly interdisciplinary, which is also reflected in how eye-tracking hardware and software have been developed over the years [5]. To extract useful information, the raw eye movements are typically converted into so-called events. This process is called event detection.

The goal of eye movement event detection in eye-tracking research is to extract events, such as fixations, saccades, post-saccadic oscillations and smooth pursuits, from the stream of raw eye movement data, based on a set of basic criteria and rules that are appropriate for the recorded data. This classification of recorded raw eye-tracking data into events relies on assumptions about fixation durations, saccadic amplitudes and saccadic velocities [6]. Classifying raw eye-tracker data into eye movement events reduces the complexity of eye movement analysis [7]. The classification may be done by algorithms, which are considered more objective, faster and more reproducible than manual human coding.

The event detection procedure in eye tracking is associated with many challenges. One of these is that many different types of disturbances and noise may occur in the recorded signal, originating from individual differences among users and eye trackers. This variability between individuals and signal qualities may produce signals that are difficult to analyze. Therefore, the challenge is to develop robust algorithms that are flexible enough to be used for signals with different types of events and disturbances and that can handle different types of eye trackers and different individuals. Another challenge of eye movement event detection is evaluating and comparing various detection algorithms. In many signal processing applications, an algorithm is evaluated by measuring its performance on simulated signals. However, the challenge is constructing simulated eye-tracking signals that capture the disturbances and variations in raw signals to such an extent that they are helpful and authentic for performance evaluation. Moreover, due to the lack of a standard procedure for evaluating different event detection algorithms, it is not easy to compare the detection performance of algorithms from different researchers [5].

In the past, researchers performed event detection manually, which was time-consuming. For example, ref. [8] devised a method to analyze eye movements at a rate of 10,000 s (almost three hours) of analysis time for 1 s of recorded data. Monty in [9] remarks that it is common to spend days processing data collected in only minutes. Nowadays, however, event detection is almost exclusively done by applying a detection algorithm to the raw recorded eye-tracking data. For a long time, two broad classes of algorithms were used for eye movement event detection. The first class comprises the dispersion-based algorithms, which detect fixations and assume the rest to be saccades [10]. The most well-known dispersion-based algorithm is the I-DT algorithm by Salvucci and Goldberg [11]. These algorithms detect a fixation by defining a spatial box within which the raw recorded data must stay for a particular minimum time. The second class comprises the velocity-based algorithms, which detect saccades and assume the rest to be fixations. The most well-known velocity-based algorithm is the I-VT algorithm [6][8]. These algorithms classify eye movements by calculating their velocity and comparing it to a predefined threshold.
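The two classes of algorithms described above can be sketched as follows. This is a minimal illustration rather than the published I-VT or I-DT implementations: the sample layout, the function names and the default parameter values (30°/s velocity threshold, 1° dispersion, 100 ms minimum duration) are assumptions chosen for the example.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sample layout: (time in ms, x in degrees, y in degrees).
Sample = Tuple[float, float, float]


def ivt(samples: Sequence[Sample], velocity_threshold: float = 30.0) -> List[str]:
    """Velocity-based labelling: fast samples are saccades, the rest fixations."""
    # The first sample has no predecessor, so it keeps the default label.
    labels = ["fixation"] * len(samples)
    for i in range(1, len(samples)):
        t0, x0, y0 = samples[i - 1]
        t1, x1, y1 = samples[i]
        # Point-to-point velocity in deg/s (timestamps are in ms).
        velocity = math.hypot(x1 - x0, y1 - y0) * 1000.0 / (t1 - t0)
        if velocity > velocity_threshold:
            labels[i] = "saccade"
    return labels


def dispersion(window: Sequence[Sample]) -> float:
    """Size of the spatial box: horizontal extent plus vertical extent."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt(samples: Sequence[Sample], max_dispersion: float = 1.0,
        min_duration_ms: float = 100.0) -> List[str]:
    """Dispersion-based labelling: compact windows are fixations, the rest saccades."""
    labels = ["saccade"] * len(samples)
    n = len(samples)
    start = 0
    while start < n:
        # Find the first window that spans at least the minimum duration.
        end = start
        while end < n and samples[end][0] - samples[start][0] < min_duration_ms:
            end += 1
        if end >= n:
            break  # not enough data left to form another fixation
        if dispersion(samples[start:end + 1]) <= max_dispersion:
            # Grow the window while the samples still fit inside the box.
            while end + 1 < n and dispersion(samples[start:end + 2]) <= max_dispersion:
                end += 1
            for i in range(start, end + 1):
                labels[i] = "fixation"
            start = end + 1
        else:
            start += 1  # drop the first sample and try again
    return labels


# Synthetic 100 Hz recording: fixation at 0 deg, a 10 deg saccade, fixation at 10 deg.
xs = [0.0] * 20 + [2.5, 5.0, 7.5] + [10.0] * 20
demo = [(10.0 * i, x, 0.0) for i, x in enumerate(xs)]
print([i for i, label in enumerate(ivt(demo)) if label == "saccade"])  # [20, 21, 22, 23]
print([i for i, label in enumerate(idt(demo)) if label == "saccade"])  # [20, 21, 22]
```

On this synthetic signal the two sketches disagree on sample 23: the velocity-based version assigns each velocity to the later sample of a pair, so the first sample at the new position is still marked as a saccade, while the dispersion-based version already includes it in the second fixation. Small differences of this kind are one reason why results from different algorithms are hard to compare.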

2. Eye Movement Events

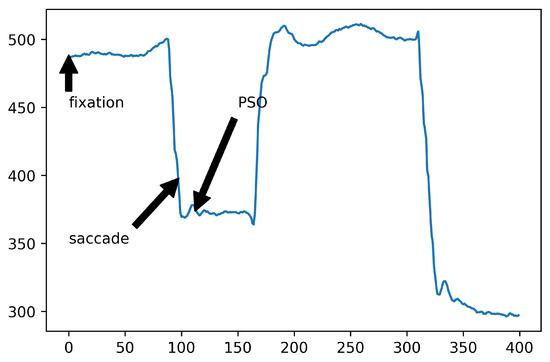

As already mentioned, raw eye movements are typically divided into events. This section discusses the different types of eye movement events. Eye-tracking signals contain not only different types of eye movement events but also blinks and noise from different sources. Therefore, an event detection algorithm needs to take these disturbances into account. The most often used event types are discussed in the following subsections. An example is also shown in Figure 1.

Figure 1. Graphical presentation of eye movement events for the horizontal axis.

Figure 1 shows an example of fixations, a saccade and post-saccadic oscillations (PSOs) in terms of position over time on the horizontal axis.

2.1. Fixations

A fixation is an event in which the eye is more or less still and focused on an object. The purpose of a fixation is to stabilize the object on the fovea for clear vision. Fixations may include three distinct types of small movements: tremor, slow drift and microsaccades [7]. Tremor is a small wave-like eye motion with a frequency below 150 Hz and an amplitude around 0.01°. The exact function of tremor still needs to be determined. Drift is a slow motion of the eye that co-occurs with tremor and takes the eye away from the center of the fixation. A microsaccade is the fastest of the fixational eye movements, with a duration of about 25 ms. The role of a microsaccade is to quickly bring the eye back to its original position [7].

2.2. Saccades

A saccade is a rapid eye movement from one fixation point to another. A typical saccade has a duration between 30 and 80 ms and a velocity between 30°/s and 500°/s [12]. There is a relationship between a saccade’s duration, amplitude and velocity: larger saccades have larger velocities and last longer than shorter ones [13]. The time from the onset of the stimulus to the initiation of the eye movement (called saccadic latency) is around 200 ms. It includes the time it takes for the central nervous system to determine whether a saccade should be initiated and, if so, to calculate the distance that the eye should move and to transmit the neural pulses to the muscles that move the eyes [12]. Correct detection of saccades is essential because it is believed that the human brain does not “see” the image during the saccade. This phenomenon is called saccadic suppression [14][15].
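The three quantities mentioned above (duration, amplitude and velocity) can be read off a detected saccade segment. The sketch below assumes a horizontal-only position trace in degrees with timestamps in milliseconds, and it compares the peak velocity against the typical ranges quoted in the text. The function names and the choice of peak velocity for that comparison are assumptions made for illustration.

```python
from typing import Dict, Sequence


def saccade_metrics(times_ms: Sequence[float], x_deg: Sequence[float]) -> Dict[str, float]:
    """Duration, amplitude and peak velocity of one detected saccade segment."""
    duration_ms = times_ms[-1] - times_ms[0]
    amplitude_deg = abs(x_deg[-1] - x_deg[0])
    # Largest point-to-point velocity in deg/s (timestamps are in ms).
    peak_velocity = max(
        abs(x_deg[i] - x_deg[i - 1]) * 1000.0 / (times_ms[i] - times_ms[i - 1])
        for i in range(1, len(x_deg))
    )
    return {
        "duration_ms": duration_ms,
        "amplitude_deg": amplitude_deg,
        "peak_velocity_deg_s": peak_velocity,
    }


def is_typical_saccade(metrics: Dict[str, float]) -> bool:
    """Check the segment against the typical ranges quoted in the text."""
    return (30.0 <= metrics["duration_ms"] <= 80.0
            and 30.0 <= metrics["peak_velocity_deg_s"] <= 500.0)


# An 8 deg movement sampled at 100 Hz.
saccade = saccade_metrics([0, 10, 20, 30, 40], [0.0, 1.0, 4.0, 7.0, 8.0])
print(saccade, is_typical_saccade(saccade))
```

Collecting these metrics for many saccades and plotting duration or peak velocity against amplitude is one way to inspect the relationship described in [13].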

2.3. Smooth Pursuits

A smooth pursuit movement is performed when the eyes track a slowly moving object. It can only be performed when there is a moving object to follow. The latency of smooth pursuit, that is, the time from the onset of the target's motion until the eye starts moving, is around 100 ms, which is shorter than the latency of saccadic movements [12]. A smooth pursuit eye movement event can generally be divided into two stages: an open-loop stage and a closed-loop stage [16]. The initiation stage of the smooth pursuit is the pre-programmed open-loop stage, where the eye accelerates to catch up with the moving target. The closed-loop stage starts when the eye has caught up with the target and follows it with a velocity similar to that of the target object. To follow the moving object in the closed-loop stage, the velocity of the moving object is estimated and compared to the velocity of the eye. If the two velocities differ, for example when the eye lags behind the moving object, a catch-up saccade is performed to catch up with the object again. If the stimulus consists of only one target object that moves predictably, the eye will be able to follow it more accurately and with fewer catch-up saccades [16].
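The comparison between eye velocity and target velocity in the closed-loop stage can be illustrated with a small calculation. The sketch below computes the ratio of mean eye velocity to mean target velocity (commonly called pursuit gain, a term not used in this entry) and the position lag at the end of the window. It assumes horizontal positions in degrees, timestamps in milliseconds and a target that is actually moving. All names are hypothetical.

```python
from typing import Sequence


def mean_velocity(times_ms: Sequence[float], x_deg: Sequence[float]) -> float:
    """Average velocity over the window in deg/s (timestamps are in ms)."""
    return (x_deg[-1] - x_deg[0]) * 1000.0 / (times_ms[-1] - times_ms[0])


def pursuit_gain(times_ms: Sequence[float], eye_x: Sequence[float],
                 target_x: Sequence[float]) -> float:
    """Ratio of eye velocity to target velocity; 1.0 means perfect tracking."""
    return mean_velocity(times_ms, eye_x) / mean_velocity(times_ms, target_x)


def final_lag_deg(eye_x: Sequence[float], target_x: Sequence[float]) -> float:
    """How far the eye is behind the target at the end of the window."""
    return target_x[-1] - eye_x[-1]


# Target moves at 10 deg/s; the eye follows at 8 deg/s and falls behind.
times = [0, 100, 200, 300, 400]
target = [0.0, 1.0, 2.0, 3.0, 4.0]
eye = [0.0, 0.8, 1.6, 2.4, 3.2]
print(pursuit_gain(times, eye, target), final_lag_deg(eye, target))
```

A gain below 1 together with a growing lag corresponds to the situation described above in which the eye falls behind the target and a catch-up saccade is needed.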

2.4. Post-Saccadic Oscillations

Rapid oscillatory movements or instabilities that may occur immediately after a saccade are called post-saccadic oscillations (PSOs) [16]. They are characterized by a slight wobbling movement that leads into the fixation after a saccade. The cause of PSOs still needs to be clarified. Some researchers believe that they are caused by the recording device [17], while others believe that the eye itself naturally wobbles after a saccade [12]. PSOs are the type of eye movement for which there is typically the most substantial disagreement between manual raters. Nevertheless, they do occur in recorded eye movements and can influence the characteristics of fixation and saccade events [18]. PSOs are typically very short events, with a duration of about 10–40 ms, an amplitude of 0.5–2° and velocities of 20–140°/s [18].

2.5. Glissades

Another largely unexplored reason behind the variation in event detection results is the behavior of the eye at the end of many saccades: the eye sometimes does not land directly on the object but undershoots or overshoots it and then needs an additional short corrective saccade. Such an event is called a glissade. According to [19], glissades follow about 50% of saccades, so they have a significant impact on the accurate measurement of the saccade offset and the onset of the subsequent fixation. Therefore, the glissade is frequently treated as a separate class of eye movement [20]. This movement is also known as a dynamic overshoot (rapid postsaccadic movement [21]) or a glissadic overshoot (slower postsaccadic movement [22]). Researchers have observed that glissades rarely occur simultaneously in both eyes [21]. Although frequently reported in the literature, glissades are only sometimes explicitly taken into account by event detection algorithms. They are therefore treated unsystematically and differently across algorithms, and even within the same algorithm one glissade may be assigned to the saccade, whereas the next one is merged with the fixation [20].

References

1. Klaib, A.F.; Alsrehin, N.O.; Melhem, W.Y.; Bashtawi, H.O.; Magableh, A.A. Eye tracking algorithms, techniques, tools and applications with an emphasis on machine learning and Internet of Things technologies. Expert Syst. Appl. 2021, 166, 114037.

2. Punde, P.A.; Jadhav, M.E.; Manza, R.R. A study of eye tracking technology and its applications. In Proceedings of the 2017 1st International Conference on Intelligent Systems and Information Management (ICISIM), Aurangabad, India, 5–6 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 86–90.

3. Naqvi, R.A.; Arsalan, M.; Park, K.R. Fuzzy system-based target selection for a NIR camera-based gaze tracker. Sensors 2017, 17, 862.

4. Naqvi, R.A.; Arsalan, M.; Batchuluun, G.; Yoon, H.S.; Park, K.R. Deep learning-based gaze detection system for automobile drivers using a NIR camera sensor. Sensors 2018, 18, 456.

5. Braunagel, C.; Geisler, D.; Stolzmann, W.; Rosenstiel, W.; Kasneci, E. On the necessity of adaptive eye movement classification in conditionally automated driving scenarios. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016.

6. Kasneci, E.; Kübler, T.C.; Kasneci, G.; Rosenstiel, W.; Bogdan, M. Online Classification of Eye Tracking Data for Automated Analysis of Traffic Hazard Perception. In Proceedings of the ICANN, Sofia, Bulgaria, 10–13 September 2013.

7. Larsson, L. Event Detection in Eye-Tracking Data for Use in Applications with Dynamic Stimuli. Ph.D. Thesis, Department of Biomedical Engineering, Faculty of Engineering LTH, Lund University, Lund, Sweden, 4 March 2016.

8. Hartridge, H.; Thomson, L. Methods of investigating eye movements. Br. J. Ophthalmol. 1948, 32, 581.

9. Monty, R.A. An advanced eye-movement measuring and recording system. Am. Psychol. 1975, 30, 331–335.

10. Zemblys, R.; Niehorster, D.C.; Holmqvist, K. gazeNet: End-to-end eye-movement event detection with deep neural networks. Behav. Res. Methods 2019, 51, 840–864.

11. Salvucci, D.D.; Goldberg, J.H. Identifying fixations and saccades in eye-tracking protocols. In Proceedings of the 2000 Symposium on Eye Tracking Research & Applications, Palm Beach Gardens, FL, USA, 6–8 November 2000; pp. 71–78.

12. Holmqvist, K.; Nyström, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H.; Van de Weijer, J. Eye Tracking: A Comprehensive Guide to Methods and Measures; OUP: Oxford, UK, 2011.

13. Bahill, A.T.; Clark, M.R.; Stark, L. The main sequence, a tool for studying human eye movements. Math. Biosci. 1975, 24, 191–204.

14. Matin, E. Saccadic suppression: A review and an analysis. Psychol. Bull. 1974, 81, 899.

15. Krekelberg, B. Saccadic suppression. Curr. Biol. 2010, 20, R228–R229.

16. Wyatt, H.J.; Pola, J. Smooth pursuit eye movements under open-loop and closed-loop conditions. Vis. Res. 1983, 23, 1121–1131.

17. Deubel, H.; Bridgeman, B. Fourth Purkinje image signals reveal eye-lens deviations and retinal image distortions during saccades. Vis. Res. 1995, 35, 529–538.

18. Nyström, M.; Hooge, I.; Holmqvist, K. Post-saccadic oscillations in eye movement data recorded with pupil-based eye trackers reflect motion of the pupil inside the iris. Vis. Res. 2013, 92, 59–66.

19. Flierman, N.A.; Ignashchenkova, A.; Negrello, M.; Thier, P.; De Zeeuw, C.I.; Badura, A. Glissades are altered by lesions to the oculomotor vermis but not by saccadic adaptation. Front. Behav. Neurosci. 2019, 13, 194.

20. Nyström, M.; Holmqvist, K. An adaptive algorithm for fixation, saccade and glissade detection in eyetracking data. Behav. Res. Methods 2010, 42, 188–204.

21. Kapoula, Z.; Robinson, D.; Hain, T. Motion of the eye immediately after a saccade. Exp. Brain Res. 1986, 61, 386–394.

22. Weber, R.B.; Daroff, R.B. Corrective movements following refixation saccades: Type and control system analysis. Vis. Res. 1972, 12, 467–475.


Subjects: Biophysics


Update Date: 25 Nov 2022
