

Messaoudi, M.D., Menelas, B.J., & Mcheick, H. (2022, November 07). Navigation Systems for the Visually Impaired. In Encyclopedia. https://encyclopedia.pub/entry/33159


The visually impaired suffer greatly while moving from one place to another. They face challenges in going outdoors and in protecting themselves from moving and stationary objects, and they also lack confidence due to restricted mobility. Due to the recent rapid rise in the number of visually impaired persons, the development of assistive devices has emerged as a significant research field.

navigation method

blind people

visually impaired

1. Braille Signs Tools

Individuals with visual impairments must memorize directions, as it is difficult for them to note them down. If they lose their way, the main option is to find somebody who can help. Braille signs can help here, but they cannot be used as a routing tool [1]. Many public areas, such as emergency clinics, railway stations, educational buildings, entrances, lifts, and other facilities, are now equipped with Braille signs to simplify wayfinding for visually impaired individuals. Although Braille characters can tell visually impaired individuals where they are, they do not help them find a path.

2. Smart Cane Tools

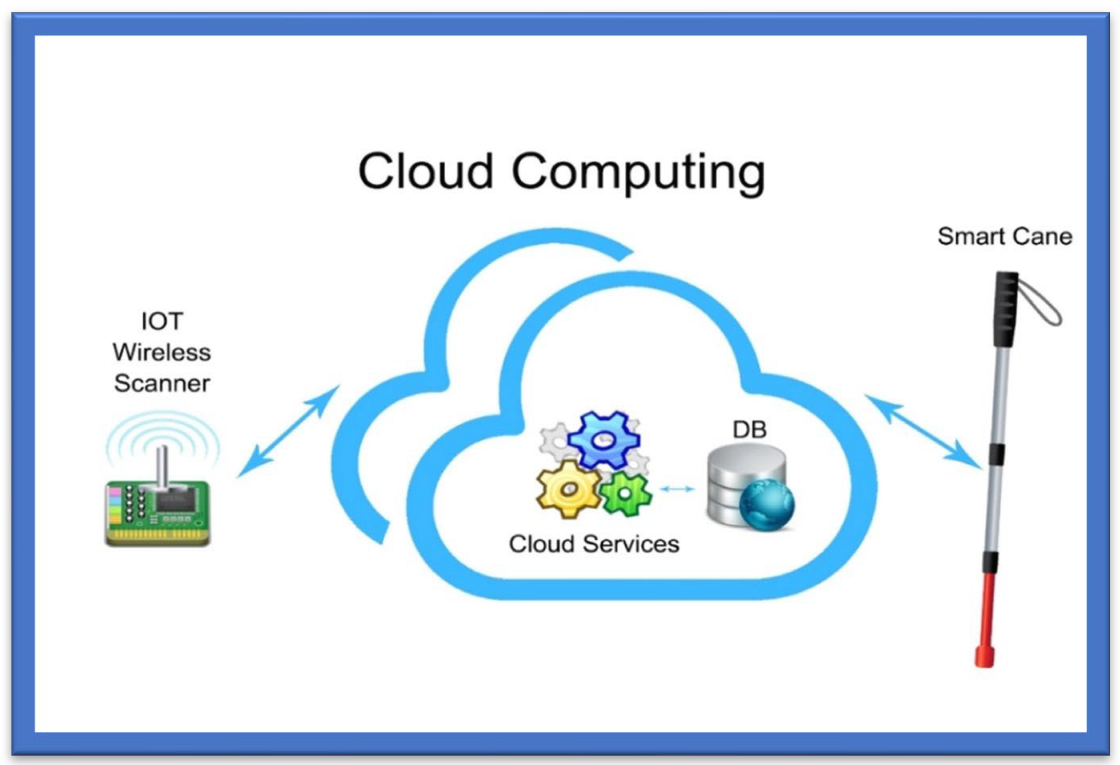

Smart canes help the visually impaired navigate their surroundings and detect objects in front of them, whether big or small, whose presence and size cannot be determined with a simple walking stick. A smart guiding cane detects the obstacle, and the microphone in the intelligent system deployed in the cane produces a sound. The cane also helps to distinguish a dark environment from a bright one [2]. For indoor usage, an innovative cane navigation system that uses IoT and cloud networks was proposed [3]. The intelligent cane collects data and transmits them to the cloud network, to which an IoT scanner is also attached. The concept is shown in Figure 1.

Figure 1. Smart Cane Navigation System.

The Smart Cane Navigation System comprises a camera, microcontrollers, and accelerometers, and it sends audio messages. A cloud service assists the user in navigating from one point to another, so the system helps visually impaired or blind people navigate and find the fastest route. Nearby objects are detected, and users are warned via a sound buzzer and a sonar [4]. The cloud service acquires the position of the cane and the route to the destination; these data go from the Wi-Fi Arduino board to the cloud. The system then uses a Gaussian model for the triangular-based position estimation. The cloud service is linked to a database that stores the shortest, safest, and longest paths. It outputs three lights: red, when objects are farther than 15 m; yellow, between 5 and 15 m; and green, closer than 5 m. Distance is calculated from sound emission and echoes, which is the cheapest way of calculating distance, and a text-to-audio converter warns of possible hurdles or obstacles. Experiments have shown that this navigation system is quite effective in suggesting the fastest or shortest route to users and in identifying hurdles or obstacles:

- The system uses a cloud-based approach to navigate different routes. A Wi-Fi Arduino board in this cane connects to a cloud-based system;

- The sound echoes and emissions are used to calculate the distance, and the user obtains a voice-form output;

- The system was seen to be very efficient in detecting hurdles and suggesting the shortest and fastest routes to the visually impaired via a cloud-based approach.
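The echo-based distance measurement mentioned above can be sketched as follows. This is a minimal illustration, not the implementation from the cited system: the function name, the speed-of-sound constant (dry air at about 20 °C), and the sample timing are all assumptions.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value: dry air at about 20 degrees Celsius


def echo_distance_m(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to an obstacle from the round-trip time of an ultrasonic pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the obstacle and back, so the path length is halved.
    return speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical reading: the echo returns 20 ms after emission.
    print(f"{echo_distance_m(0.020):.2f} m")
```

Because the speed of sound varies with temperature, a real device would likely calibrate this constant rather than hard-code it.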

3. Voice-Operated Tools

This outdoor voice-operated navigation system is based on GPS, ultrasonic sensors, and voice. It provides alerts about the user's current position and guidance for traveling. Its drawback is that it failed at obstacle detection and warning alerts [5]. Another navigation system uses a microcontroller to detect obstacles and a feedback system that alerts users about them through voice and vibration [6].

4. Roshni

Roshni is an indoor navigation system that navigates through voice messages by pressing keys on a mobile unit. The position of the users in Roshni is identified by sonar technology by mounting ultrasonic modules at regular intervals on the ceiling. Roshni is portable, free to move anywhere, and unaffected by environmental changes. It needs a detailed interior map of the building that limits it only to indoor navigation [2].

Roshni application tools are easy to use, as the system operates by pressing mobile keys and guides the visually impaired using voice messages. Since it remains unaffected by a change in environment, it is easily transportable. The system is limited to indoor locations, and the user must provide a map of the building before the system can be used.

5. RFID-Based Map-Reading Tools

RFID is the fourth category of wireless technology used to facilitate visually impaired persons for indoor and outdoor activities. This technology is based on the “Internet of Things paradigm” through an IoT physical layer that helps the visually impaired navigate in their surroundings by deploying low-cost, energy-efficient sensors. The short communication range leaves this RFID technology incapable of being deployed in the landscape spatial range. In [7], an indoor navigation system for blind and older adults was proposed, based on the RFID technique, to assist disabled people by offering and enabling self-navigation in indoor surroundings. The goal of creating this approach was to handle and manage interior navigation challenges while taking into consideration the accuracy and dynamics of various environments. The system was composed of two modules for navigation and localization—that is, a server and a wearable module containing a microcontroller, ultrasonic sensor, RFID, Wi-Fi module, and voice control module. The results showed 99% accuracy in experiments. The time the system takes to locate the obstacle is 0.6 s.

Another RFID-based map-reading system helps visually disabled persons pass through public places using an RFID tag grid, a Bluetooth interface, an RFID cane reader, and a personal digital assistant [8]. The system is relatively expensive because of the hardware units it includes, and it is unreliable in areas with heavy traffic, where there is a chance of collision.

Another navigation system, based on passive RFID and proposed in [9], is equipped with a digital compass to assist the visually impaired. The RFID transponders are mounted on the floor, as tactile paving, to build RFID networks. Localization and positioning are supported by the digital compass, and the guiding directions are given using voice commands. Table 1 incorporates detailed information about RFID-based navigation tools with recommended models for the visually impaired.
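As a rough illustration of how floor-mounted tags and a digital compass could be combined into a spoken direction, consider the sketch below. The tag IDs, the coordinate map, the tolerance, and the function names are hypothetical and are not taken from [9]; the system there may work differently.

```python
import math

# Hypothetical tag map: tag ID -> (x, y) position in metres, with +y pointing north.
TAG_POSITIONS = {
    "TAG-A": (0.0, 0.0),
    "TAG-B": (0.0, 5.0),
    "TAG-C": (5.0, 5.0),
}


def bearing_deg(src, dst):
    """Compass bearing from src to dst: 0 = north, 90 = east."""
    dx = dst[0] - src[0]
    dy = dst[1] - src[1]
    return math.degrees(math.atan2(dx, dy)) % 360.0


def turn_instruction(current_tag, next_tag, heading_deg, tolerance_deg=20.0):
    """Phrase to speak, given the tag just read and the compass heading."""
    target = bearing_deg(TAG_POSITIONS[current_tag], TAG_POSITIONS[next_tag])
    # Signed difference in [-180, 180): positive means the target lies to the right.
    diff = (target - heading_deg + 180.0) % 360.0 - 180.0
    if abs(diff) <= tolerance_deg:
        return "go straight"
    return "turn right" if diff > 0 else "turn left"


if __name__ == "__main__":
    # The user stands on TAG-A facing east; the next waypoint, TAG-B, lies to the north.
    print(turn_instruction("TAG-A", "TAG-B", heading_deg=90.0))
```

The returned phrase would then be passed to whatever voice output the device provides.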

Table 1. Summary of Various RFID-Based Navigation Tools.

| Ref. Paper | Title | Proposed Model |
|---|---|---|
| [10] | Mobile audio navigation interfaces for the blind | Drishti system combines the ultrasonic sensor for indoor navigation and GPS for outdoor navigation for blind people. |
| [11] | BLI-NAV embedded navigation system for blind people | Comprised of GPS and path detectors, which detect the path and determine the shortest obstacle-free route. |
| [12] | A pocket-PC-based navigational aid for blind individuals | Pocket PC-based Electronic Travel Aid (ETA) warns users of obstacles via audio-based instructions. |
| [13] | A blind navigation system using RFID for indoor environments | A wireless mesh-based navigation system that warns the users about obstacles via a headset with microphone. |
| [14] | Design and development of navigation system using RFID technology | This system uses an RFID reader mounted on the end of the stick that reads the transponder tags installed on the tactile paving. |

6. Wireless Network-Based Tools

Wireless network-based solutions for navigation and indoor positioning include various approaches, such as cellular communication networks, Wi-Fi networks, ultra-wideband (UWB) sensors, and Bluetooth [15]. Indoor positioning with the wireless network approach is highly reliable and easy for blind persons to use. Table 2 summarises various studies based on wireless networks for visually impaired (VI) people.

Table 2. Summary of Various Wireless-Network-Based Navigation Tools.

| Ref. Paper | Model Used | Detailed Technology Used |
|---|---|---|
| [16] | Syndrome-Enabled Unsupervised Learning for Neural Network-Based Polar Decoder and Jointly Optimized Blind Equalizer | 1. This neural network-based approach uses a polar decoder to aid CRC-enabled syndrome loss, BP decoder. 2. This approach has not been evaluated under varying channels. |
| [17] | Wireless Sensor Network Based Personnel Positioning Scheme used in Coal Mines with Blind Areas | This model determines the 3D positions by coordinate transformation and corrects the localization errors using real-time personal local with the help of a location engine. |
| [18] | A model based on ultrasonic spectacles and waist belts for blind people | This model detects obstacles within 500 cm. It uses a microcontroller-based embedded system to process real-time data gathered using ultrasonic sensors. |

7. Augmented White Cane Tools

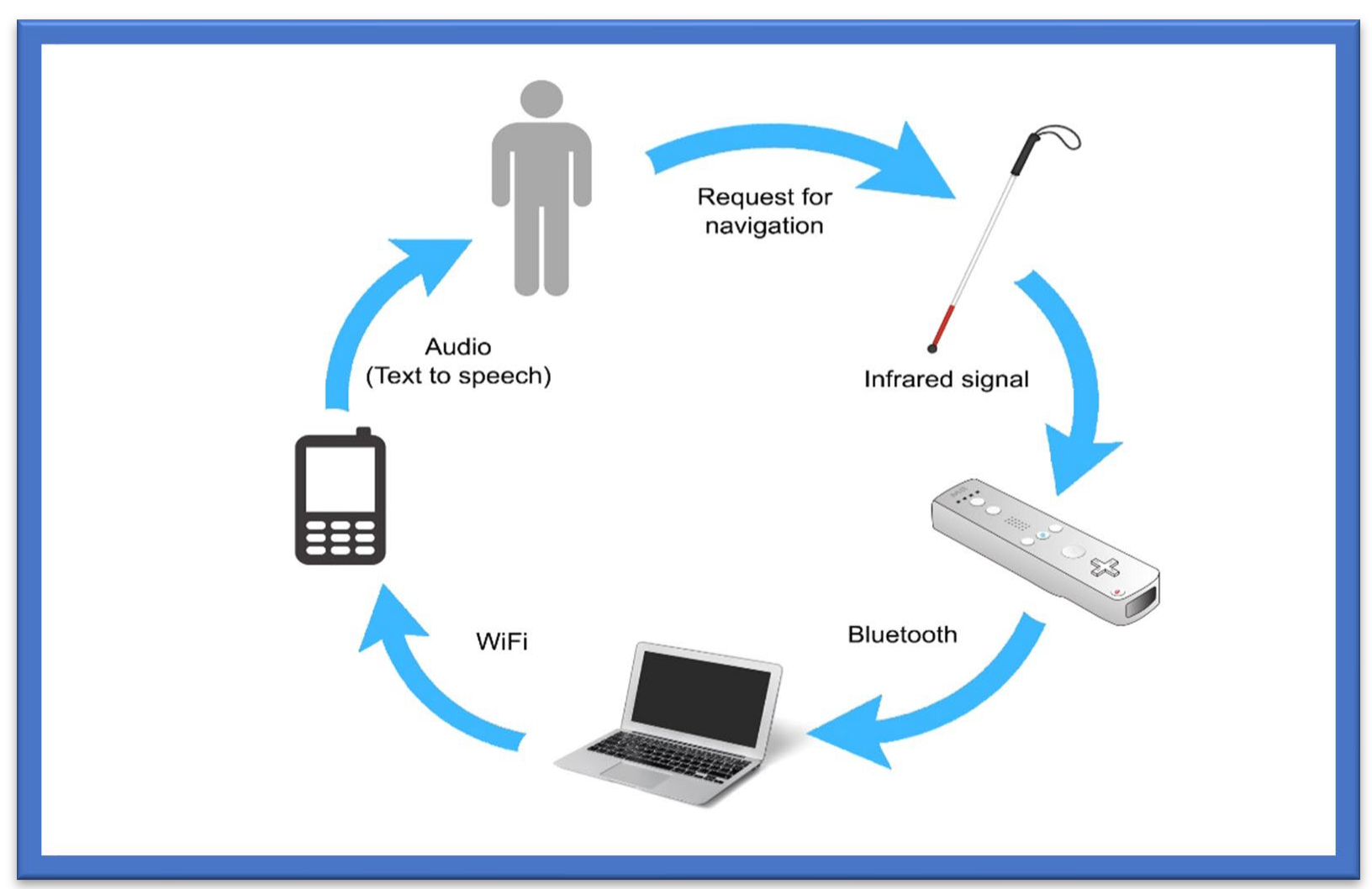

The augmented white cane-based system is an indoor navigation system specifically designed to help the visually impaired move freely in indoor environments [19]. Its prime purpose is to provide real-time navigation information that helps users make appropriate decisions, for example on the route to follow in an indoor space. To provide such information, the system accesses the physical environment through what is called a micro-navigation system. Possible obstacles should be detected, and the users' intended movements should be known, to help users decide how to move. The solution relies on the interaction among several components: two infrared cameras, a computer running a software application that coordinates the system, and a smartphone that delivers the navigation information, as shown in Figure 2.

Figure 2. Augmented White Cane.

The white cane helps determine the user’s position and movement. It includes several infrared LEDs with a button to activate and deactivate the system. The cane is the most suitable object to represent the position, assisted by an Augmented Objects Development Process (AODeP). Several requirements can be identified for augmenting an object: (1) it should be able to emit infrared light that the Wiimote can capture, (2) the user should wear it so that his or her location can be obtained, (3) it should be small enough not to hinder the user’s movement, and (4) it should minimize the cognitive effort required to use it.

- The white cane provides real-time navigation by studying the physical indoor environment by using a micro-navigation system;

- The two infrared cameras and a software application make indoor navigation more reliable and accurate;

- The whole system takes input through infrared signals to provide proper navigation.

8. Ultrasonic Sensor-Based Tools

This ultrasonic sensor-based system comprises a microcontroller with synthetic speech output and a portable device that guides users to walking points. It detects obstacles using the principle of reflection of high-frequency sound. Instructions or guidelines are given in vibro-tactile form to reduce navigation difficulties. The limitation of such a system is that walls block the ultrasound signals, resulting in less accurate navigation. In an indoor navigation system designed for the visually impaired, the user’s movement is constantly tracked by an RFID unit, and the user is given guidelines and instructions via a tactile compass and a wireless connection [20].

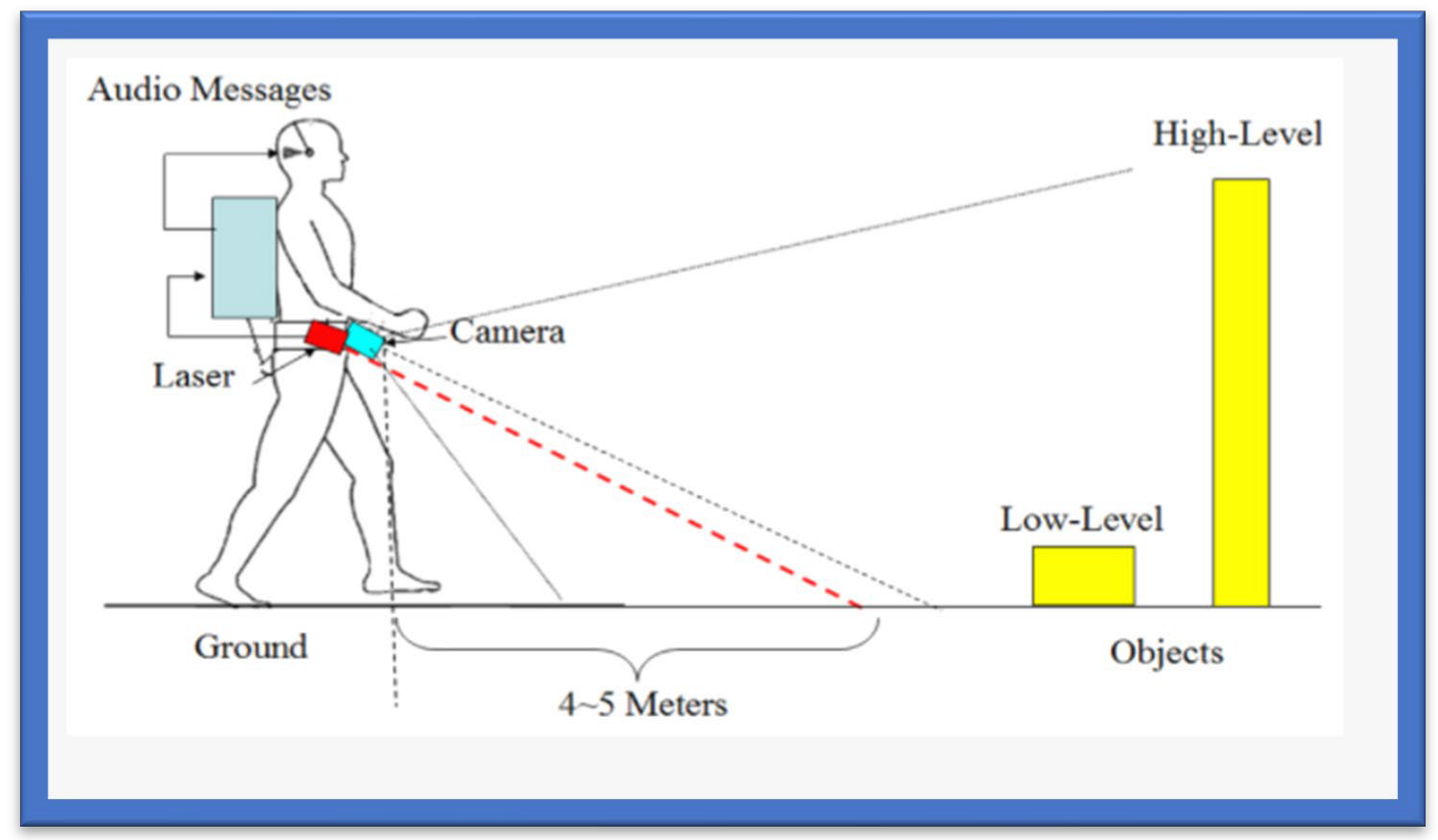

9. Blind Audio Guidance Tools

The blind audio guidance system is based on an embedded system that uses an ultrasonic sensor for measuring distance, an AVR sound system for the audio instructions, and an IR sensor to detect objects, as shown in Figure 3. The primary functions performed by this system are detecting paths and recognizing the environment. Initially, the ultrasonic sensors capture signals from the surroundings, which are then converted into auditory information. This system reduces the training time required to use the white cane. However, identifying the user’s global location remains an issue [21]. Additionally, Table 3 lists features of the blind audio guidance system relating to distance measurement, audio instructions, and hardware costs.

Figure 3. The Blind Audio Guidance System.

Table 3. Properties of the Blind Guidance System.

| Property | Description |
|---|---|
| Distance Measurement | The ultrasonic sensor facilitates the visually impaired by measuring distances accurately. |
| Audio Instructions | IR sensor detects obstacles and provides instant audio instructions to blind people. |
| Hardware Costs | The system comprises different hardware components that might be a little expensive. However, it remains one of the most reliable systems. |

10. Voice and Vibration Tools

This system uses an ultrasonic sensor to detect obstacles. People with any visual impairment or blindness are more sensitive to hearing than others, so this navigation system gives alerts via voice and vibration feedback. The system works both outdoors and indoors. Alerts are provided at different intensity levels to support the user's mobility [22]. Table 4 lists the properties of voice and vibration navigation tools used for the visually impaired.

Table 4. Properties of Voice and Vibration Navigation Tools.

| Property | Description |
|---|---|
| Better Obstacle Detection | The ultrasonic sensor accurately detects any obstacles in range of a visually impaired person. |
| Fast and Reliable Alerts | The ultrasonic sensor provides better navigation with voice and vibration feedback providing proper guidance. |
| Multipurpose | The system can be used for both indoor and outdoor environments. |

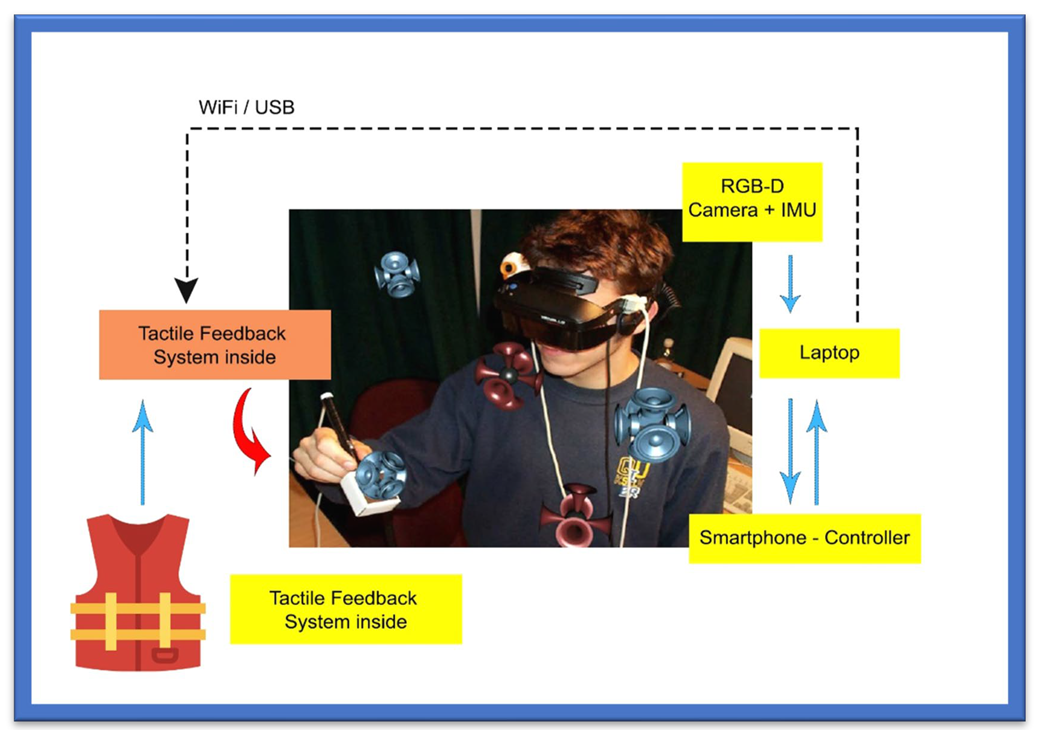

11. RGB-D Sensor-Based Tools

This navigation system is based upon an RGB-D sensor with range expansion. A consumer RGB-D camera supports range-based floor segmentation to obtain information about the range as shown in Figure 4. The RGB sensor also supports colour sensing and object detection. The user interface is given using sound map information and audio guidelines or instructions [23][24]. Table 5 provides information on RGB-D sensor-based navigation tools with their different properties for VI people.

Figure 4. RGB-D Sensor-Based System.

Table 5. Properties of RGB-D Sensor-Based Navigation Tools.

| Property | Description |
|---|---|
| RGB Sensor | RGB sensor facilitates all the visually impaired with color sensing and object detection. |
| Camera-Based System | The RGB-D camera is used to support range-based floor segmentation. |
| Expensive | The multiple hardware components, including RGB-D sensors, make it an expensive option for blind people. |

12. Cellular Network-Based Tools

A cellular network system allows mobile phones to communicate with others [25]. According to a research study [26], a simple way to localize cellular devices is to use the Cell-ID, which operates in most cellular networks. Studies [27][28] have proposed a hybrid approach that uses a combination of wireless local area networks, Bluetooth, and a cellular communication network to improve indoor navigation and positioning performance. However, such positioning is unstable and has a significant navigation error due to cellular towers and radiofrequency signal range. Table 6 summarizes the information based on different cellular approaches for indoor environments with positioning factors.
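To make the accuracy limitation of Cell-ID localization concrete, the sketch below returns the serving tower's coordinates together with its coverage radius as the position uncertainty. The cell database, its IDs, coordinates, and radii are invented for illustration; real deployments obtain this information from the network operator or a cell database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    lat: float
    lon: float
    radius_m: float  # approximate coverage radius, which bounds the position error


# Hypothetical cell database keyed by Cell-ID.
CELLS = {
    "cell-001": Cell(48.4200, -71.0500, 800.0),
    "cell-002": Cell(48.4300, -71.0700, 1500.0),
}


def locate_by_cell_id(cell_id):
    """Return (lat, lon, uncertainty_m) for the serving cell, or None if unknown."""
    cell = CELLS.get(cell_id)
    if cell is None:
        return None
    # The phone may be anywhere inside the cell, so the tower position is only
    # a coarse estimate and the radius is reported as the uncertainty.
    return (cell.lat, cell.lon, cell.radius_m)


if __name__ == "__main__":
    print(locate_by_cell_id("cell-001"))
```

An uncertainty of hundreds of metres is far too coarse for indoor guidance, which is consistent with the hybrid approaches that add WLAN and Bluetooth.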

Table 6. Properties of Cellular Network-Based Navigation Tools.

| Property | Description |
|---|---|
| Cellular Based Approach | Mobile phone communication makes this system work by using cellular towers to define the location. |
| Good for Indoors | The system works better in indoor environments. |
| Poor Positioning | The positioning is not very accurate due to the large signal ranges of cellular towers. |

13. Bluetooth-Based Tools

Bluetooth is a commonly used wireless protocol based on the IEEE 802.15.1 standard. The precision of this method is determined by the number of connected Bluetooth cells [29]. A 3D indoor navigation system proposed by Cruz and Ramos [30] is based on Bluetooth. In this navigation system, pre-installed transmitters are not considered helpful for applications with critical requirements. An approach that combines Bluetooth beacons and Google Tango was proposed in [31]. Table 7 provides an overview of Bluetooth-based approaches used for the visually impaired in terms of cost and environment.

Table 7. Properties of Bluetooth-Based Navigation Tools.

| Property | Description |
|---|---|
| Bluetooth-Based | The system mainly relies on Bluetooth networks to stay precise. |
| Not Expensive | The system is cheap as it relies on a Bluetooth network using pre-installed transmitters. |
| 3D Indoor System | An indoor navigation system proposed by Cruz and Ramos is also based on Bluetooth. |

14. System for Converting Images into Sound

Depth sensors generate images that humans usually acquire with their eyes and hands. Different designs convert spatial data into sound, as sound can precisely guide the users. Many approaches in this domain are inspired by auditory substitution devices that encode visual scenes from the video camera and generate sounds as an acoustic representation known as “soundscape”. Rehri et al. [32] proposed a system that improves navigation without vision. It is a personal guidance system based on the clear advantage of virtual sound guidance over spatial language. The authors argued that it is easy and quick to perceive and understand spatial information.

In Nair et al. [33], an image recognition method for blind individuals was presented that uses sound in a simple yet powerful approach to help blind persons see the world with their ears. Nevertheless, sound-based image recognition becomes problematic as the complexity of the image increases. In the first step, noise is removed using Gaussian blur. In the second step, the edges of the image are located by finding the intensity gradients. In the third step, non-maximum suppression is applied to trace along the image edges. After that, threshold values are applied as in the Canny edge detector. After complete edge information has been acquired, the sound is generated.
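The edge-to-sound idea can be illustrated with a deliberately simplified sketch. It performs only the blur, gradient, and single-threshold steps, so it is not a full Canny detector (non-maximum suppression and hysteresis thresholding are omitted), and the tone mapping (column to time step, row to pitch) is an assumption made here for illustration rather than the mapping used in [33].

```python
GAUSS = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]        # 3x3 Gaussian kernel (weights sum to 16)
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal intensity change
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical intensity change


def convolve3(img, kernel, scale=1.0):
    """Apply a 3x3 kernel to a 2D list, replicating pixels at the border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    yy = min(max(y + ky - 1, 0), h - 1)
                    xx = min(max(x + kx - 1, 0), w - 1)
                    acc += kernel[ky][kx] * img[yy][xx]
            out[y][x] = acc * scale
    return out


def edge_map(img, threshold):
    """Blur, take Sobel gradients, and keep pixels whose magnitude passes the threshold."""
    blurred = convolve3(img, GAUSS, 1 / 16)
    gx = convolve3(blurred, SOBEL_X)
    gy = convolve3(blurred, SOBEL_Y)
    return [[(gx[y][x] ** 2 + gy[y][x] ** 2) ** 0.5 >= threshold
             for x in range(len(img[0]))] for y in range(len(img))]


def edges_to_tones(edges, f_low=200.0, f_high=2000.0):
    """Assumed mapping: one time step per column; higher rows give higher pitch."""
    h = len(edges)
    tones = []
    for x in range(len(edges[0])):
        tones.append([f_high - (f_high - f_low) * y / max(h - 1, 1)
                      for y in range(h) if edges[y][x]])
    return tones


if __name__ == "__main__":
    # Synthetic 6x6 image: dark left half, bright right half (one vertical edge).
    image = [[0, 0, 0, 255, 255, 255] for _ in range(6)]
    for step, freqs in enumerate(edges_to_tones(edge_map(image, threshold=500))):
        print(step, freqs)
```

Each inner list holds the frequencies (in Hz) that would sound at that time step; an empty list means silence for that column.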

These different technologies are very effective for blind and visually impaired (BVI) people and help them feel more confident and self-dependent. They can move, travel, play, and read books much as sighted people do. Technology is growing and is enhancing the ways in which BVI people communicate with the world more confidently.

15. Infrared LED-Based Tools

Next comes the infrared LED category, suitable for producing periodic signals in indoor environments. The only drawback of this technology is that a line of sight (LOS) must be available between the LEDs and the detectors. Moreover, it is a technology for short-range communication [34]. In that study, a “mid-range portable positioning system” was designed using LEDs for the visually impaired. It helps determine orientation, location, and distance to destination for those with weak eyesight and is reported to be 100% accurate for the partially blind. The system combines two techniques: infrared intensity and ultrasound time of flight (TOF). The ultrasonic TOF structure comprises an ultrasonic transducer, a beacon, and infrared LED circuits.

On the other hand, the receiver includes an ultrasonic sensor, infrared sensor, geomagnetic sensor, and signal processing unit. The prototype also includes beacons of infrared LEDs and receivers. The system results showed 90% accuracy for the fully visually impaired in indoor and outdoor environments.

15.1. Text-to-Speech Tools

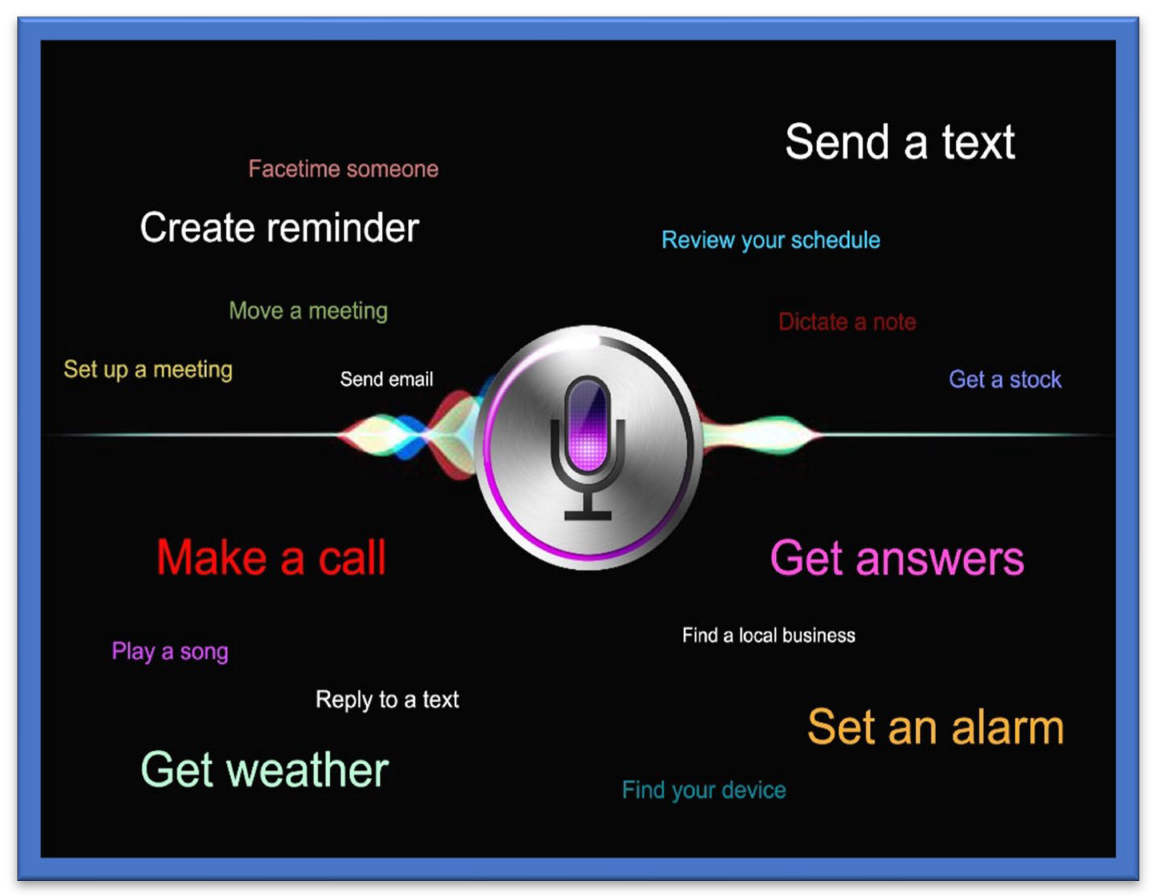

One of the most widely used and recently developed text-to-speech (TTS) tools is Google TTS, a screen reader application designed by Google that runs on Android OS and reads on-screen text aloud. It supports various languages and was built on DeepMind’s speech synthesis expertise. Its API returns audio in almost human voice quality (Cloud Text-to-Speech, n.d.).

OpenCV tools and libraries have been used to capture an image, decode the text from it, and complete the character recognition process. The written text is encoded by the machine using OCR technology. The OpenCV library was recommended for its convenience of handling and use compared to other PC or electronic device platforms [35]. An ultrasonic sensor-based TTS tool was designed to give vibration sensing for the blind and to help them recognize and identify a bus name and its number at a bus stop using audio processing techniques. The system was designed using MATLAB for implementing image capture, and most simulations are performed using OCR in MATLAB to convert the text into speech [36].

A text-to-voice technique is also presented; most commercial GPS devices, such as those developed by TomTom Inc. and Garmin Inc., use this technique. Real-time performance is achieved based on the spoken navigation instructions [37]. An ideal illustration of combining text-to-voice techniques and voice search methods is Siri, which is shown in Figure 5. Siri is available for iOS, the operating system (OS) of Apple’s iPhone. It is easy to talk to Siri and receive a response in a human-like voice. With both voice input and synthesized voice output, this system helps people with low vision and people who are blind or visually impaired in daily life.

Figure 5. Siri for iPhone.

A Human–Computer Interface (HCI)-based wearable indoor navigation system is presented by [38]. An excellent example of an audio-based system is Google Voice search. To effectively use such systems, proper training is required [39][40].

15.2. Speech-to-Text Tools

Amazon has designed and developed a speech-to-text (STT) tool named “Amazon Transcribe” that uses a deep learning approach known as automatic speech recognition (ASR) to convert voice into text precisely and in a matter of seconds. Such tools are used by the blind and the visually impaired, and they are also used to transcribe customer service calls, automate subtitles, and create metadata for media assets to generate searchable archives [41]. IBM Watson has also developed its own STT tool to convert audio and voice to text. The technology uses deep learning AI algorithms that apply the language structure, grammar, and composition of voice and audio signals to transcribe the human voice into written text [42].

Table 8 shows the complete evaluation and analysis of current systems to help visually impaired users confidently move in their environment.

16. Feedback Tools for VI

In this section, different feedback tools for VI people are explained.

16.1. Tactile Compass

Providing effective and accurate directional feedback in an electronic travel aid for VI people is a challenging task. To address this problem, the authors of [43] presented a Tactile Compass to guide a VI person while traveling. This compass is a handheld device that guides a VI person continuously by providing directions with the help of a pointing needle. Two lab experiments that tested the system demonstrated that a user can reach the goal with an average deviation of 3.03°.

16.2. SRFI Tool

To overcome the information acquisition problem for VI people, the authors of [44] presented a method based on auditory feedback and a triboelectric nanogenerator. This self-powered tool is called a ripple-inspired fingertip interactor (SRFI). It assists the VI person by delivering information through feedback, and owing to its refined structure, it provides high-quality text information to the user. Based on three channels, it can recognise Braille letters and deliver feedback to VI users about information acquisition.

16.3. Robotic System Based on Haptic Feedback

To support VI people in sports, the authors of [45] introduced a robotic system based on haptic feedback. The runner’s position is determined with the help of a drone, and information is delivered through haptic feedback on the left lower leg, which guides the user in the desired direction. The system was assessed outdoors for its ability to give proper haptic feedback and was tested with three modalities: vibration during the swing phase, vibration during the stance phase, and continuous vibration.

16.4. Audio Feedback-Based Voice-Activated Portable Braille

A portable device named Voice Activated Braille helps give a VI person information about specific characters. Arduino helps direct the VI person. This system can be beneficial for VI by guiding them. It is a partial assistant and helps the VI to read easily.

16.5. Adaptive Auditory Feedback System

This system helps a VI person while using the desktop, based on continuous switching between speech and the non-speech feedback. Using this system, a VI person does not need continuous instructions. The results of sixteen experiments that assessed the system revealed that it delivers an efficient performance.

16.6. Olfactory and Haptic for VI person

The authors of [46] introduced a method based on olfactory and haptic feedback for VI people. This method is intended to help VI people with entertainment in education and is designed to offer them opportunities for learning and teaching. It is a 3D system that lets the user touch a 3D object, while smell and sound are released from the olfactory device. The system was assessed by VI and blind people with the help of a questionnaire.

16.7. Hybrid Method for VI

This system guides the VI person in indoor and outdoor environments. A hybrid system based on the sensor and traditional stick, it guides the user with the help of a sensor and auditory feedback.

16.8. Radar-Based Handheld Device for VI

A handheld device based on radar has been presented for VI people. In this method, the distance measured by the radar sensor is converted into tactile information, which is mapped onto an array of vibration actuators. With the help of the sensor information and the tactile stimulus, the VI user can be guided around obstacles. Table 8 gives a detailed overview of navigation applications for the visually impaired. It covers various models and applications, reports their feasibility and characteristics, and discusses the merits and drawbacks of every application along with its features.
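One way to picture the distance-to-tactile mapping is the sketch below, which quantises each measured distance into a discrete vibration level for one actuator. The maximum range, the number of levels, and the function names are assumptions chosen for illustration, not parameters of the device described above.

```python
import math


def distance_to_intensity(distance_m, max_range_m=4.0, levels=5):
    """Map a distance to a vibration level: 0 = off, `levels` = strongest (closest)."""
    if distance_m is None or distance_m >= max_range_m:
        return 0  # nothing detected within range
    d = max(distance_m, 0.0)
    # Closer obstacles give stronger vibration; round up so that any obstacle
    # inside the range produces at least the weakest level.
    closeness = 1.0 - d / max_range_m
    return math.ceil(closeness * levels)


def actuator_frame(distances_m, **kwargs):
    """One intensity per actuator, given one distance reading per actuator."""
    return [distance_to_intensity(d, **kwargs) for d in distances_m]


if __name__ == "__main__":
    # Hypothetical readings for a five-actuator array; None means no echo.
    print(actuator_frame([0.0, 1.0, 2.0, 4.0, None]))
```

A linear mapping is used here for simplicity; a real device might prefer a non-linear curve so that nearby obstacles are easier to tell apart.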

Table 8. Navigation Applications for Visually Impaired Users.

| Ref. Paper | Name | Components/Application | Device/Application Features | Price/Usability and Wearability/User Feedback | Drawbacks/User Acceptance | Specific Characteristics |

|---|---|---|---|---|---|---|

| [47] | Maptic | Sensor device, user-friendly feedback capabilities, cell phone |

|

Unknown/Wearable/Instant feedback | Lower body and ground obstacles detection | Object detection |

| [48][49] | Microsoft Soundscape | Cell phone, beacons application |

|

Free/Handheld device/Audio | No obstacle detection | Ease of use |

| [50] | SmartCane | Sensor, SmartCane, vibration function | Detects obstacles | Affordable/Handheld device/Quick feedback | Navigation guidance not supported | Detection speed Accuracy of obstacle detection |

| [51] | WeWalk | Sensor device, cane feedback, smartphone |

|

Price starts from USD 599/Wearable (weighs about 252 g/0.55 pounds Audio-related and instant feedback |

No obstacle detection and number of scenario description when in use | Ease of use Prioritization of user’s requirements Availability of application |

| [52] | Horus | Headphone with the bone-conducted facility Supports two cameras with an additional battery and powerful GPU. |

|

Price USD 2000/Wearable/Audio related feedback | Navigation guidance not supported | Less power consumption Reliability and voice assistant Accuracy of obstacles |

| [53] | Ray Electronic Mobility Aid |

Ultrasonic device |

|

Price USD 395/Handheld weighs 60 g/Audio-related and instant feedback | Navigation guidance not supported | Object classification accuracy Incorporated user feedback Accurate obstacle detection and classification |

| [54] | UltraCane | Dual Range, Ultrasonic with narrow beam, Cane |

|

Price USD 590/Handheld/instant feedback | Navigation guidance not supported | Power consumption Battery life Ultrasonic Cane |

| [55] | BlindSquare | Cell Phone |

|

Price USD 39.99/Handheld/Audio feedback enabled | Navigation guidance not supported | Ease of use Availability of application |

| [56] | Envision Glasses |

Wearable glasses with additional cameras |

|

Price USD 2099/wearable device weighs 46 g/Audio oriented | No Obstacle detection Navigation guidance not supported | Object classification Bar code scanning Voice supported application Performance |

| [57] | Eye See | Helmet with integrated cameras and laser |

|

Unknown/wearable/Audio | Navigation guidance not supported. | Obstacle detection, O.C.R. Incorporated, Text to voice conversion. |

| [58] | Nearby Explorer |

Cell phone |

|

Free/Handheld/Audio and instant feedback | No obstacle detection | Object identification User’s requirement prioritization Tracking history |

| [59] | Seeing Eye G.P.S |

Cell phone |

|

Unknown/Handheld/Audio | No obstacle detection | User preference G.P.S. based navigation |

| [60] | PathVu Navigation | Cell phone | Gives alerts about sidewalk problems | Free/Handheld/Audio | No obstacle detection; navigation guidance not supported | Catering offside obstacles through alerts |

| [61] | Step-hear | Cell phone | | Free/Handheld/Audio | No obstacle detection | Availability of application to public; GPS-based navigation |

| [62] | InterSection Explorer | Cell phone | Street information | Free/Handheld/Audio | No obstacle detection; navigation guidance not supported | Predefined routes |

| [63] | LAZARILLO APP | Cell phone | | Free/Handheld/Audio | No obstacle detection | Availability of application to public; GPS-based navigation; user requirement prioritization |

| [64] | Lazzus APP | Cell phone | | Price USD 29.99/Handheld/Audio | No obstacle detection | User requirement preference; predefined crossings on routes |

| [65] | Sunu Band | Sensor device | Upper-body obstacle detection | Price USD 299/Handheld/Instant feedback | Lower-body and ground obstacles are not detected | Object detection |

| [66] | Ariadne GPS | Cell phone | | Price USD 4.99/Handheld/Audio | No obstacle detection | Accurate map exploration; GPS-based navigation |

| [67] | Aira | Cell phone | Sighted person support | Price USD 99 for 120 min/Handheld/Audio | Expensive to use and privacy concerns | Tightly coupled for security; ease of use |

| [68] | Be My Eyes | Cell Phone | Sighted person support | Free/Handheld/Audio | Privacy concerns | Ease of use |

| [69] | BrainPort | Handheld video camera controller | Detection of objects | Expensive/Wearable and handheld/Instant feedback | No navigation guidance | Accurate detection of objects/obstacles |

References

- Vaz, R.; Freitas, D.; Coelho, A. Blind and Visually Impaired Visitors’ Experiences in Museums: Increasing Accessibility through Assistive Technologies. Int. J. Incl. Mus. 2020, 13, 57–80.

- Dian, Z.; Kezhong, L.; Rui, M. A precise RFID indoor localization system with sensor network assistance. China Commun. 2015, 12, 13–22.

- Park, S.; Choi, I.-M.; Kim, S.-S.; Kim, S.-M. A portable mid-range localization system using infrared LEDs for visually impaired people. Infrared Phys. Technol. 2014, 67, 583–589.

- Chen, H.; Wang, K.; Yang, K. Improving RealSense by Fusing Color Stereo Vision and Infrared Stereo Vision for the Visually Impaired. In Proceedings of the 2018 International Conference on Information Science and System, Zhengzhou, China, 20–22 July 2018.

- Hairuman, I.F.B.; Foong, O.-M. OCR Signage Recognition with Skew & Slant Correction for Visually Impaired People. In Proceedings of the 2011 11th International Conference on Hybrid Intelligent Systems (HIS), Malacca, Malaysia, 5–8 December 2011; pp. 306–310.

- Messaoudi, M.D.; Menelas, B.-A.J.; Mcheick, H. Autonomous Smart White Cane Navigation System for Indoor Usage. Technologies 2020, 8, 37.

- Bai, J.; Liu, D.; Su, G.; Fu, Z. A Cloud and Vision-Based Navigation System Used for Blind People. In Proceedings of the 2017 International Conference on Artificial Intelligence, Automation and Control Technologies—AIACT 17, Wuhan, China, 7–9 April 2017.

- Oladayo, O.O. A Multidimensional Walking Aid for Visually Impaired Using Ultrasonic Sensors Network with Voice Guidance. Int. J. Intell. Syst. Appl. 2014, 6, 53–59.

- Barberis, C.; Andrea, B.; Giovanni, M.; Paolo, M. Experiencing Indoor Navigation on Mobile Devices. IT Prof. 2013, 16, 50–57.

- Yusro, M.; Hou, K.-M.; Pissaloux, E.; Ramli, K.; Sudiana, D.; Zhang, L.-Z.; Shi, H.-L. Concept and Design of SEES (Smart Environment Explorer Stick) for Visually Impaired Person Mobility Assistance. In Advances in Intelligent Systems and Computing; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 245–259.

- Karkar, A.; Al-Maadeed, S. Mobile Assistive Technologies for Visual Impaired Users: A Survey. In Proceedings of the 2018 International Conference on Computer and Applications (ICCA), Beirut, Lebanon, 25–26 August 2018; pp. 427–433.

- Phung, S.L.; Le, M.C.; Bouzerdoum, A. Pedestrian lane detection in unstructured scenes for assistive navigation. Comput. Vis. Image Underst. 2016, 149, 186–196.

- Singh, L.S.; Mazumder, P.B.; Sharma, G.D. Comparison of drug susceptibility pattern of Mycobacterium tuberculosis assayed by MODS (Microscopic-observation drug-susceptibility) with that of PM (proportion method) from clinical isolates of North East India. IOSR J. Pharm. IOSRPHR 2014, 4, 1–6.

- Bossé, P.S. A Plant Identification Game. Am. Biol. Teach. 1977, 39, 115.

- Sahoo, N.; Lin, H.-W.; Chang, Y.-H. Design and Implementation of a Walking Stick Aid for Visually Challenged People. Sensors 2019, 19, 130.

- Ross, D.A.; Lightman, A. Talking Braille. In Proceedings of the 7th International ACM SIGACCESS Conference on Computers and Accessibility—Assets ’05, Baltimore, MD, USA, 9–12 October 2005; p. 98.

- Kuc, R. Binaural sonar electronic travel aid provides vibrotactile cues for landmark, reflector motion and surface texture classification. IEEE Trans. Biomed. Eng. 2002, 49, 1173–1180.

- Ulrich, I.; Borenstein, J. The GuideCane-applying mobile robot technologies to assist the visually impaired. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2001, 31, 131–136.

- Santhosh, S.S.; Sasiprabha, T.; Jeberson, R. BLI-NAV Embedded Navigation System for Blind People. In Proceedings of the Recent Advances in Space Technology Services and Climate Change 2010 (RSTS & CC-2010), Chennai, India, 13–15 November 2010.

- Aladren, A.; Lopez-Nicolas, G.; Puig, L.; Guerrero, J.J. Navigation Assistance for the Visually Impaired Using RGB-D Sensor with Range Expansion. IEEE Syst. J. 2014, 10, 922–932.

- Teng, C.-F.; Chen, Y.-L. Syndrome-Enabled Unsupervised Learning for Neural Network-Based Polar Decoder and Jointly Optimized Blind Equalizer. IEEE J. Emerg. Sel. Top. Circuits Syst. 2020, 10, 177–188.

- Liu, Z.; Li, C.; Wu, D.; Dai, W.; Geng, S.; Ding, Q. A Wireless Sensor Network Based Personnel Positioning Scheme in Coal Mines with Blind Areas. Sensors 2010, 10, 9891–9918.

- Bhatlawande, S.S.; Mukhopadhyay, J.; Mahadevappa, M. Ultrasonic Spectacles and Waist-Belt for Visually Impaired and Blind Person. In Proceedings of the 2012 National Conference on Communications (NCC), Kharagpur, India, 3–5 February 2012.

- dos Santos, A.D.P.; Medola, F.O.; Cinelli, M.J.; Ramirez, A.R.G.; Sandnes, F.E. Are electronic white canes better than traditional canes? A comparative study with blind and blindfolded participants. Univers. Access Inf. Soc. 2020, 20, 93–103.

- Wicab Inc. BrainPort Technology Tongue Interface Characterization Tactical Underwater Navigation System (TUNS); Wicab Inc.: Middleton, WI, USA, 2008.

- Higuchi, H.; Harada, A.; Iwahashi, T.; Usui, S.; Sawamoto, J.; Kanda, J.; Wakimoto, K.; Tanaka, S. Network-Based Nationwide RTK-GPS and Indoor Navigation Intended for Seamless Location Based Services. In Proceedings of the 2004 National Technical Meeting of The Institute of Navigation, San Diego, CA, USA, 26–28 January 2004; pp. 167–174.

- Caffery, J.; Stuber, G. Overview of radiolocation in CDMA cellular systems. IEEE Commun. Mag. 1998, 36, 38–45.

- Guerrero, L.A.; Vasquez, F.; Ochoa, S.F. An Indoor Navigation System for the Visually Impaired. Sensors 2012, 12, 8236–8258.

- Nivishna, S.; Vivek, C. Smart Indoor and Outdoor Guiding System for Blind People using Android and IOT. Indian J. Public Health Res. Dev. 2019, 10, 1108.

- Mahmud, N.; Saha, R.K.; Zafar, R.B.; Bhuian, M.B.H.; Sarwar, S.S. Vibration and Voice Operated Navigation System for Visually Impaired Person. In Proceedings of the 2014 International Conference on Informatics, Electronics & Vision (ICIEV), Dhaka, Bangladesh, 23–24 May 2014.

- Grubb, P.W.; Thomsen, P.R.; Hoxie, T.; Wright, G. Filing a Patent Application. In Patents for Chemicals, Pharmaceuticals, and Biotechnology; Oxford University Press: Oxford, UK, 2016.

- Rehrl, K.; Göll, N.; Leitinger, S.; Bruntsch, S.; Mentz, H.-J. Smartphone-Based Information and Navigation Aids for Public Transport Travellers. In Location Based Services and TeleCartography; Springer: Berlin/Heidelberg, Germany, 2004; pp. 525–544.

- Zhou, J.; Yeung, W.M.-C.; Ng, J.K.-Y. Enhancing Indoor Positioning Accuracy by Utilizing Signals from Both the Mobile Phone Network and the Wireless Local Area Network. In Proceedings of the 22nd International Conference on Advanced Information Networking and Applications (AINA 2008), Gino-Wan, Japan, 25–28 March 2008.

- Rehrl, K.; Leitinger, S.; Bruntsch, S.; Mentz, H. Assisting orientation and guidance for multimodal travelers in situations of modal change. In Proceedings of the 2005 IEEE Intelligent Transportation Systems, Vienna, Austria, 13–16 September 2005; pp. 407–412.

- Nair, V.; Olmschenk, G.; Seiple, W.H.; Zhu, Z. ASSIST: Evaluating the usability and performance of an indoor navigation assistant for blind and visually impaired people. Assist. Technol. 2020, 34, 289–299.

- Kumar, A.V.J.; Visu, A.; Raj, S.M.; Prabhu, T.M.; Kalaiselvi, V.K.G. Penpal-Electronic Pen Aiding Visually Impaired in Reading and Visualizing Textual Contents. In Proceedings of the 2011 IEEE International Conference on Technology for Education, Chennai, India, 14–16 July 2011; pp. 171–176.

- Loomis, J.M.; Klatzky, R.L.; Golledge, A.R.G. Navigating without Vision: Basic and Applied Research. Optom. Vis. Sci. 2001, 78, 282–289.

- Krishnan, K.G.; Porkodi, C.M.; Kanimozhi, K. Image Recognition for Visually Impaired People by Sound. In Proceedings of the 2013 International Conference on Communication and Signal Processing, Melmaruvathur, India, 3–5 April 2013.

- Freitas, D.; Kouroupetroglou, G. Speech technologies for blind and low vision persons. Technol. Disabil. 2008, 20, 135–156.

- Geetha, M.N.; Sheethal, H.V.; Sindhu, S.; Siddiqa, J.A.; Chandan, H.C. Survey on Smart Reader for Blind and Visually Impaired (BVI). Indian J. Sci. Technol. 2019, 12, 1–4.

- Halimah, B.Z.; Azlina, A.; Behrang, P.; Choo, W.O. Voice Recognition System for the Visually Impaired: Virtual Cognitive Approach. In Proceedings of the 2008 International Symposium on Information Technology, Dubrovnik, Croatia, 23–26 June 2008.

- Latha, L.; Geethani, V.; Divyadharshini, M.; Thangam, P. A Smart Reader for Blind People. Int. J. Eng. Adv. Technol. 2019, 8, 1566–1568.

- Choi, J.; Gill, H.; Ou, S.; Lee, J. CCVoice: Voice to Text Conversion and Management Program Implementation of Google Cloud Speech API. KIISE Trans. Comput. Pr. 2019, 25, 191–197.

- Nakajima, M.; Haruyama, S. New indoor navigation system for visually impaired people using visible light communication. EURASIP J. Wirel. Commun. Netw. 2013, 2013, 37.

- Introduction to Amazon Web Services. In Machine Learning in the AWS Cloud; Wiley: Hoboken, NJ, USA, 2019; pp. 133–149.

- Khan, A.; Khusro, S. An insight into smartphone-based assistive solutions for visually impaired and blind people: Issues, challenges and opportunities. Univers. Access Inf. Soc. 2020, 20, 265–298.

- Xue, L.; Zhang, Z.; Xu, L.; Gao, F.; Zhao, X.; Xun, X.; Zhao, B.; Kang, Z.; Liao, Q.; Zhang, Y. Information accessibility oriented self-powered and ripple-inspired fingertip interactors with auditory feedback. Nano Energy 2021, 87, 106117.

- Huang, S.; Ishikawa, M.; Yamakawa, Y. An Active Assistant Robotic System Based on High-Speed Vision and Haptic Feedback for Human-Robot Collaboration. In Proceedings of the IECON 2018—44th Annual Conference of the IEEE Industrial Electronics Society, Washington, DC, USA, 21–23 October 2018; pp. 3649–3654.

- Mon, C.S.; Yap, K.M.; Ahmad, A. A Preliminary Study on Requirements of Olfactory, Haptic and Audio Enabled Application for Visually Impaired in Edutainment. In Proceedings of the 2019 IEEE 9th Symposium on Computer Applications & Industrial Electronics (ISCAIE), Kota Kinabalu, Malaysia, 27–28 April 2019; pp. 249–253.

- Core77.Com. Choice Rev. Online 2007, 44, 44–3669.

- Hill, M.E. Soundscape. In Oxford Music Online; Oxford University Press: Oxford, UK, 2014.

- Khanna, R. IBM SmartCloud Cost Management with IBM Cloud Orchestrator Cost Management on the Cloud. In Proceedings of the 2016 IEEE International Conference on Cloud Computing in Emerging Markets (CCEM), Bangalore, India, 19–21 October 2016; pp. 170–172.

- Chen, Q.; Khan, M.; Tsangouri, C.; Yang, C.; Li, B.; Xiao, J.; Zhu, Z. CCNY Smart Cane. In Proceedings of the 2017 IEEE 7th Annual International Conference on CYBER Technology in Automation, Control and Intelligent Systems (CYBER), Honolulu, HI, USA, 31 July–4 August 2017; pp. 1246–1251.

- Catalogue: In Les Pratiques Funéraires en Pannonie de l’époque Augustéenne à la fin du 3e Siècle; Archaeopress Publishing Ltd.: Oxford, UK, 2020; p. 526.

- Dávila, J. Iterative Learning for Human Activity Recognition from Wearable Sensor Data. In Proceedings of the 3rd International Electronic Conference on Sensors and Applications, Online, 15–30 November 2016; MDPI: Basel, Switzerland, 2016; p. 7. Available online: https://sciforum.net/conference/ecsa-3 (accessed on 25 June 2022).

- Kumpf, M. A new electronic mobility aid for the blind—A field evaluation. Int. J. Rehabil. Res. 1987, 10, 298–300.

- Li, J.; Yang, B.; Chen, Y.; Wu, W.; Yang, Y.; Zhao, X.; Chen, R. Evaluation of a Compact Helmet-Based Laser Scanning System for Aboveground and Underground 3d Mapping. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B2-2022, 215–220.

- Wise, E.; Li, B.; Gallagher, T.; Dempster, A.G.; Rizos, C.; Ramsey-Stewart, E.; Woo, D. Indoor Navigation for the Blind and Vision Impaired: Where Are We and Where Are We Going? In Proceedings of the 2012 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sydney, Australia, 13–15 November 2012; pp. 1–7.

- Satani, N.; Patel, S.; Patel, S. AI Powered Glasses for Visually Impaired Person. Int. J. Recent Technol. Eng. 2020, 9, 316–321.

- Smart object detector for visually impaired. Spéc. Issue 2017, 3, 192–195.

- Chen, H.-E.; Lin, Y.-Y.; Chen, C.-H.; Wang, I.-F. BlindNavi: A Navigation App for the Visually Impaired Smartphone User. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems—CHI EA ’15, Seoul, Korea, 18–23 April 2015; ACM Press: New York, NY, USA, 2015.

- Harris, P. Female Genital Mutilation Awareness, CFAB, London, 2014. Available online: http://www.safeandsecureinfo.com/fgm_awareness/fgm_read_section1.html Female Genital Mutilation: Recognising and Preventing FGM, Home Office, London, 2014. Available free: http://www.safeguardingchildrenea.co.uk/resources/female-genital-mutilation-recognising-preventing-fgm-free-online-training. Child Abus. Rev. 2015, 24, 463–464.

- Tolesa, L.D.; Chala, T.F.; Abdi, G.F.; Geleta, T.K. Assessment of Quality of Commercially Available Some Selected Edible Oils Accessed in Ethiopia. Arch. Infect. Dis. Ther. 2022, 6.

- English, K. Working With Parents of School Aged Children: Staying in Step While Keeping a Step Ahead. Perspect. Hear. Hear. Disord. Child. 2000, 10, 17–20.

- Bouchard, B.; Imbeault, F.; Bouzouane, A.; Menelas, B.A.J. Developing serious games specifically adapted to people suffering from Alzheimer. In Proceedings of the International Conference on Serious Games Development and Applications, Bremen, Germany, 26–29 September 2012; pp. 243–254.

- Menelas, B.A.J.; Otis, M.J.D. Design of a serious game for learning vibrotactile messages. In Proceedings of the 2012 IEEE International Workshop on Haptic Audio Visual Environments and Games (HAVE 2012), Munich, Germany, 8–9 October 2012; pp. 124–129.

- Menelas, B.A.J.; Benaoudia, R.S. Use of haptics to promote learning outcomes in serious games. Multimodal Technol. Interact. 2017, 1, 31.

- Ménélas, B.; Picinalli, L.; Katz, B.F.; Bourdot, P. Audio haptic feedbacks for an acquisition task in a multi-target context. In Proceedings of the 2010 IEEE Symposium on 3D User Interfaces (3DUI), Waltham, MA, USA, 20–21 March 2010; pp. 51–54.

- Menelas, B.A.J.; Picinali, L.; Bourdot, P.; Katz, B.F. Non-visual identification, localization, and selection of entities of interest in a 3D environment. J. Multimodal User Interfaces 2014, 8, 243–256.


Update Date: 07 Nov 2022
