
In Bell tests, there may be problems of experimental design or set-up that affect the validity of the experimental findings. These problems are often referred to as "loopholes". See the article on Bell's theorem for the theoretical background to these experimental efforts (see also John Stewart Bell). The purpose of these experiments is to test whether nature is best described by a local hidden-variable theory or by the quantum entanglement theory of quantum mechanics.

The "detection efficiency", or "fair sampling", problem is the most prevalent loophole in optical experiments. Another loophole that has more often been addressed is that of communication, i.e. locality. There is also the "disjoint measurement" loophole, which arises when different samples are used to obtain the different correlations, as opposed to "joint measurement", where a single sample is used to obtain all the correlations that enter an inequality. To date, no test has simultaneously closed all loopholes.

Ronald Hanson of the Delft University of Technology claims the first Bell experiment that closes both the detection and the communication loopholes. (This was not an optical experiment in the sense discussed below; the entangled degrees of freedom were electron spins rather than photon polarization.) Correlations of classical optical fields can also violate Bell's inequality. In some experiments there may be additional defects that make "local realist" explanations of Bell test violations possible; these are briefly described below.

Many modern experiments are directed at detecting quantum entanglement rather than ruling out local hidden-variable theories. These tasks differ, since the former accepts quantum mechanics at the outset (there is no entanglement without quantum mechanics). Entanglement detection is regularly done using Bell's theorem, but in this setting the theorem serves as an entanglement witness, a dividing line between entangled and separable quantum states, and as such it is less sensitive to the problems described here.

In October 2015, scientists from the Kavli Institute of Nanoscience reported that the quantum nonlocality phenomenon is supported at the 96% confidence level, based on a "loophole-free Bell test" study. These results were confirmed by two studies, published in December 2015, with statistical significance of over 5 standard deviations. However, Alain Aspect writes that "no experiment ... can be said to be totally loophole-free."

1. Loopholes

1.1. Detection Efficiency, or Fair Sampling

In Bell tests, one problem is that detection efficiency may be less than 100%, and this is always the case in optical experiments. This problem was noted first by Pearle in 1970,[1] and Clauser and Horne (1974) devised another result intended to take care of this. Some results were also obtained in the 1980s but the subject has undergone significant research in recent years. The many experiments affected by this problem deal with it, without exception, by using the "fair sampling" assumption (see below).

This loophole changes the inequalities to be used; for example the CHSH inequality:

- [math]\displaystyle{ \big|E(AC') + E(AD')\big| + \big|E(BC') - E(BD')\big| \le 2 }[/math]

is changed. When data from an experiment is used in the inequality, one needs to condition on the event that a "coincidence" occurred, i.e. that a detection occurred in both wings of the experiment. This changes[2] the inequality into

- [math]\displaystyle{ \big|E(AC'|\text{coinc.}) + E(AD'|\text{coinc.})\big| + \big|E(BC'|\text{coinc.}) - E(BD'|\text{coinc.})\big| \le \frac 4{\eta} - 2 }[/math]

In this formula, [math]\displaystyle{ \eta }[/math] denotes the efficiency of the experiment, formally the minimum probability of a coincidence given a detection on one side.[2][3] In quantum mechanics, the left-hand side reaches [math]\displaystyle{ 2\sqrt{2} }[/math], which is greater than two, but for efficiencies below 100% the latter inequality has a larger right-hand side. At low efficiency (below [math]\displaystyle{ 2/\left(\sqrt{2} + 1\right) }[/math] ≈ 83%), the quantum prediction no longer violates the inequality.
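The 83% threshold comes from setting the efficiency-dependent bound 4/η − 2 equal to the quantum maximum 2√2 and solving for η. The following is a minimal Python sketch of that calculation. It assumes an ideal source that actually reaches 2√2. The names `coincidence_bound`, `violation_possible` and `CRITICAL_ETA` are hypothetical and chosen only for illustration.

```python
import math

# Quantum-mechanical maximum of the CHSH expression (assumed reached by an ideal source).
QUANTUM_MAX = 2 * math.sqrt(2)


def coincidence_bound(eta: float) -> float:
    """Right-hand side 4/eta - 2 of the coincidence-conditioned CHSH inequality."""
    if not 0 < eta <= 1:
        raise ValueError("efficiency must lie in (0, 1]")
    return 4 / eta - 2


def violation_possible(eta: float) -> bool:
    """True if the quantum maximum exceeds the local bound at efficiency eta."""
    return QUANTUM_MAX > coincidence_bound(eta)


# Solving 4/eta - 2 = 2*sqrt(2) for eta gives 2/(sqrt(2) + 1), about 0.828.
CRITICAL_ETA = 2 / (math.sqrt(2) + 1)

if __name__ == "__main__":
    print(f"critical efficiency: {CRITICAL_ETA:.4f}")
    for eta in (1.0, 0.9, 0.5, 0.3, 0.05):
        print(f"eta={eta:.2f}  bound={coincidence_bound(eta):.3f}  "
              f"violation possible: {violation_possible(eta)}")
```

At 90% efficiency the bound is about 2.44, which is below 2√2. At the 5 to 30% efficiencies typical of optical experiments, however, the bound exceeds 4. That is more than the CHSH expression can ever reach, so these data cannot show a violation unless an extra assumption is added.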

All optical experiments are affected by this problem, with typical efficiencies of around 5–30%. Several non-optical systems, such as trapped ions,[4] superconducting qubits[5] and NV centers,[6] have been able to bypass the detection loophole. However, these experiments remained vulnerable to the communication loophole.

There are tests that are not sensitive to this problem, such as the Clauser-Horne test, but these have the same limitation as the second of the two inequalities above: they cannot be violated unless the efficiency exceeds a certain bound. For example, for the so-called Eberhard inequality, the bound is 2/3.[7]

Fair sampling assumption

Usually, the fair sampling assumption (alternatively, the no-enhancement assumption) is invoked to deal with this loophole. It states that the sample of detected pairs is representative of the pairs emitted, in which case the right-hand side of the inequality above is reduced to 2, irrespective of the efficiency. This constitutes a third postulate, in addition to the two postulates of local realism, that is needed to establish a violation in low-efficiency experiments. There is no way to test experimentally whether a given experiment samples fairly, because the correlations of emitted but undetected pairs are by definition unknown.
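A toy local model shows why the fair sampling assumption matters. The Python sketch below is an illustrative construction of our own. It is loosely in the spirit of Pearle's data-rejection example,[1] but it is not a reproduction of it.

In this model, each pair carries a hidden "guess" of both settings, and each side simply fails to detect whenever its own setting differs from its own guess. Every rule is local, yet the CHSH value computed over coincidences only reaches 4. The per-side efficiency is 1/2, so the model respects the bound 4/η − 2 = 6. Only under the fair sampling assumption (bound 2) would these data appear to rule out local realism. The function name and parameters are hypothetical.

```python
import random


def toy_unfair_sampling_s(n_pairs: int, seed: int = 1) -> float:
    """CHSH value S = E(a,b) - E(a,b') + E(a',b) + E(a',b') computed only over
    coincidences, for a deliberately unfair local hidden-variable model.

    Setting index 0 stands for a (Alice) or b (Bob); index 1 for a' or b'."""
    rng = random.Random(seed)
    keys = [(x, y) for x in (0, 1) for y in (0, 1)]
    product_sum = {k: 0 for k in keys}   # running sum of outcome products
    coincidences = {k: 0 for k in keys}  # number of coincidences per setting pair
    for _ in range(n_pairs):
        # Hidden variable fixed at the source: a guess of both settings.
        guess_a, guess_b = rng.randrange(2), rng.randrange(2)
        # Settings chosen independently and at random at each station.
        x, y = rng.randrange(2), rng.randrange(2)
        # Local detection rule: each side fires only if its own guess was right.
        if x != guess_a or y != guess_b:
            continue  # at least one side reports "no detection"
        # Local outcome rule: results depend on the hidden variable only.
        out_a = +1
        out_b = -1 if (guess_a, guess_b) == (0, 1) else +1
        product_sum[(x, y)] += out_a * out_b
        coincidences[(x, y)] += 1
    if min(coincidences.values()) == 0:
        raise ValueError("too few pairs: some setting pair had no coincidences")
    e = {k: product_sum[k] / coincidences[k] for k in keys}
    return e[(0, 0)] - e[(0, 1)] + e[(1, 0)] + e[(1, 1)]


if __name__ == "__main__":
    print(toy_unfair_sampling_s(10_000))  # prints 4.0
```

Only the detected pairs are selected, and the selection is correlated with the settings. That correlation is exactly what the fair sampling assumption rules out by fiat.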

Double detections

In many experiments the electronics are such that simultaneous + and − counts from both outputs of a polarizer can never occur; only one or the other is recorded. Under quantum mechanics such double counts do not occur anyway, but under a wave theory the suppression of these counts causes even the basic realist prediction to yield unfair sampling. However, the effect is negligible if the detection efficiencies are low.

1.2. Communication, or Locality

The Bell inequality is motivated by the absence of communication between the two measurement sites. In experiments, this is usually ensured simply by ruling out any light-speed communication. The two sites are separated, and the measurement duration is kept shorter than the time any light-speed signal would take to travel from one site to the other, or indeed to the source. In one of Alain Aspect's experiments, light-speed communication between the detectors was possible during the time between pair emission and detection. It was not possible, however, between the time the detectors' settings were fixed and the time of detection. In a set-up without any such provision, the results could be explained by signals exchanged between the sites, so such an experiment cannot rule out local realism. Additionally, the experiment should ideally be designed so that, at both measurement stations, the settings for each measurement are not determined by any earlier event.
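The timing condition comes down to simple arithmetic. The Python sketch below uses a simplified picture in which only two quantities matter: the straight-line distance between the two stations, and the interval from fixing the setting at one station to completing the detection at the other. A real analysis must use the full space-time geometry, including the source and the setting generators.

The 400 m distance is a hypothetical round figure. The 144 km figure is the separation reported by Scheidl et al.[10] The function names are illustrative only.

```python
# Speed of light in vacuum, in metres per second (exact by definition of the metre).
C = 299_792_458.0


def light_travel_time(distance_m: float) -> float:
    """Time in seconds for a light-speed signal to cross distance_m."""
    return distance_m / C


def locality_satisfied(distance_m: float, interval_s: float) -> bool:
    """Simplified check: the interval from fixing the setting at one station to
    completing the detection at the other must be shorter than the light travel
    time between them, so no light-speed signal can link the two events."""
    return interval_s < light_travel_time(distance_m)


if __name__ == "__main__":
    for d in (400.0, 144e3):  # 400 m is hypothetical; 144 km as in Scheidl et al.
        print(f"{d:>9.0f} m -> {light_travel_time(d) * 1e6:8.2f} microseconds")
    print(locality_satisfied(400.0, 1e-6))  # 1.0 us is less than about 1.33 us
    print(locality_satisfied(400.0, 2e-6))  # 2.0 us is more than about 1.33 us
```

Larger separations relax the timing requirement proportionally. Over 144 km, the measurement window may last a few hundred microseconds rather than about a microsecond.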

John Bell supported Aspect's investigation of this loophole[8] and had some active involvement with the work, serving on the examining board for Aspect's PhD. Aspect improved the separation of the sites and made the first attempt at truly independent, random detector orientations. Weihs et al. improved on this with a separation on the order of a few hundred meters and random settings obtained from a quantum system.[9] Scheidl et al. (2010) went further, conducting an experiment between locations 144 km (89 mi) apart.[10]

1.3. Coincidence Loophole

In many experiments, especially those based on photon polarization, events in the two wings of the experiment are only identified as belonging to the same pair after the experiment is performed. The identification is made by judging whether their detection times are close enough to one another. This opens a new possibility for a local hidden-variable theory to "fake" quantum correlations: delay the detection time of each of the two particles by a larger or smaller amount, depending on some relationship between the hidden variables carried by the particles and the detector settings encountered at the measurement station. This loophole was noted by A. Fine in 1980 and 1981, by S. Pascazio in 1986, and by J.-Å. Larsson and R. D. Gill in 2004. It turns out to be more serious than the detection loophole, because it gives local hidden variables more room to reproduce quantum correlations for the same effective experimental efficiency. Here the effective efficiency is the probability that particle 1 is accepted (coincidence loophole) or measured (detection loophole), given that particle 2 is detected.

The coincidence loophole can be ruled out entirely by working with a pre-fixed lattice of detection windows. The windows must be short enough that most pairs of events occurring in the same window originate from the same emission, and long enough that a true pair is rarely split by a window boundary.
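The two ways of identifying pairs can be compared in a short Python sketch, using hypothetical time units and parameter values. A "sliding" criterion accepts two events when their time difference is at most `tau`, so acceptance depends jointly on the delays on both sides. With a pre-fixed lattice of width `width`, each side assigns its event to a slot using only its own timestamp. All function names here are illustrative, not taken from any experiment's software.

```python
import math


def sliding_coincidence(t_a: float, t_b: float, tau: float) -> bool:
    """Accept the pair if the detection times differ by at most tau.
    Acceptance depends on the relative delay, i.e. on both sides jointly."""
    return abs(t_a - t_b) <= tau


def slot_index(t: float, width: float) -> int:
    """Index of the pre-fixed window containing time t; computed locally."""
    return math.floor(t / width)


def lattice_coincidence(t_a: float, t_b: float, width: float) -> bool:
    """Accept the pair only if both events fall in the same pre-fixed window."""
    return slot_index(t_a, width) == slot_index(t_b, width)


if __name__ == "__main__":
    emission = 10.3  # hypothetical emission time, arbitrary units
    # Setting-dependent delays that a hidden-variable model might apply on each side.
    for delay_a, delay_b in [(0.0, 0.0), (0.0, 0.4), (0.6, 0.8)]:
        t_a, t_b = emission + delay_a, emission + delay_b
        print(delay_a, delay_b,
              sliding_coincidence(t_a, t_b, tau=0.5),
              lattice_coincidence(t_a, t_b, width=1.0))
```

In the last case, both events are strongly delayed. The sliding criterion still counts them as a coincidence because the delays are similar. With the lattice, Alice's event stays in slot 10 while Bob's moves to slot 11. The delay then acts like a local non-detection in slot 10 rather than a freely adjustable pairing.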

1.4. Memory Loophole

In most experiments, measurements are repeatedly made at the same two locations. Under local realism, memory effects could lead to statistical dependence between successive pairs of measurements. Moreover, physical parameters might vary over time. It has been shown that, provided each new pair of measurements is made with a new random pair of measurement settings, neither memory nor time inhomogeneity has a serious effect on the experiment.[11][12][13]

1.5. Sources of Error in (Optical) Bell Tests

In Bell tests, sources of error that are not accounted for by the experimentalists are called loopholes if they might be significant enough to explain why a particular experiment gives results in favor of quantum entanglement rather than local realism. Some examples of existing and hypothetical experimental errors are explained here. There are, of course, sources of error in all physical experiments. The subsequent sections discuss whether any of those presented here have been found important enough to be called loopholes, either in general or because of possible mistakes by the performers of some known experiment in the literature. There are also non-optical Bell tests, which are not discussed here.[5]

Example of a typical experiment

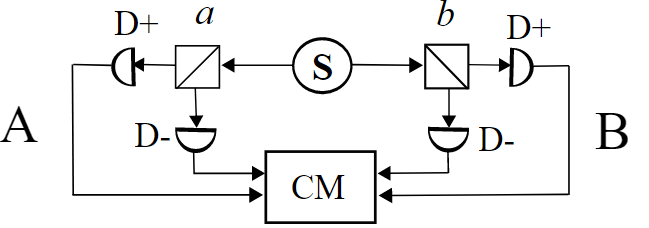

The source S is assumed to produce pairs of "photons", one pair at a time, with the individual photons sent in opposite directions. Each photon encounters a two-channel polarizer whose orientation can be set by the experimenter. Emerging signals from each channel are detected, and coincidences are counted by the "coincidence monitor" CM. It is assumed that any individual photon has to go one way or the other at the polarizer. The entanglement hypothesis states that the two photons in a pair (due to their common origin) share a wave function, so that a measurement on one of the photons affects the other instantaneously, no matter the separation between them. This effect is termed the EPR paradox (although it is not a true paradox). The local realism hypothesis, on the other hand, states that a measurement on one photon has no influence whatsoever on the other.

As a basis for our description of experimental errors, consider a typical experiment of CHSH type (see the figure above). In the experiment, the source is assumed to emit light in the form of pairs of particle-like photons, with the two photons of each pair sent off in opposite directions. When photons are detected simultaneously (in reality, during the same short time interval) on both sides of the "coincidence monitor", a coincident detection is counted. On each side of the coincidence monitor there are two inputs, here named the "+" and the "−" input. The individual photons must (according to quantum mechanics) make a choice and go one way or the other at a two-channel polarizer. Ideally, for each pair emitted at the source, either the + or the − input on each side will detect a photon. The four possibilities can be categorized as ++, +−, −+ and −−. The number of simultaneous detections of each of the four types (hereinafter [math]\displaystyle{ N_{++} }[/math], [math]\displaystyle{ N_{+-} }[/math], [math]\displaystyle{ N_{-+} }[/math] and [math]\displaystyle{ N_{--} }[/math]) is counted over a time span covering a number of emissions from the source. Then the following is calculated:

- [math]\displaystyle{ E = \frac{N_{++} + N_{--} - N_{+-} - N_{-+}}{N_{++} + N_{--} + N_{+-} + N_{-+}} }[/math]

This is done with polarizer [math]\displaystyle{ a }[/math] rotated into two positions [math]\displaystyle{ a }[/math] and [math]\displaystyle{ a' }[/math], and polarizer [math]\displaystyle{ b }[/math] into two positions [math]\displaystyle{ b }[/math] and [math]\displaystyle{ b' }[/math], so that we get [math]\displaystyle{ E(a,b) }[/math], [math]\displaystyle{ E(a,b') }[/math], [math]\displaystyle{ E(a',b) }[/math] and [math]\displaystyle{ E(a',b') }[/math]. Then the following is calculated:

- [math]\displaystyle{ S = E(a,b) - E(a,b') + E(a',b) + E(a',b') }[/math]

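Entanglement and local realism predict different values of S. Local realism requires [math]\displaystyle{ |S| \le 2 }[/math], whereas quantum mechanics allows values up to [math]\displaystyle{ 2\sqrt{2} }[/math] for suitably chosen settings. The experiment (if there are no substantial sources of error) therefore indicates which of the two theories better corresponds to reality.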
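The two formulas above can be turned into a short calculation. The Python sketch below illustrates them under stated assumptions; it is not any experiment's analysis code. It computes E from the four coincidence counts and then S.

The counts are the ideal quantum-mechanical expectations for a maximally entangled polarization state, assumed here to be |Φ+⟩, for which P(++) = P(−−) = cos²(α − β)/2. Polarizers and detectors are taken to be perfect, and the angles are the commonly used 0°, 45°, 22.5° and 67.5°. The function names are hypothetical.

```python
import math


def correlation(n_pp: float, n_mm: float, n_pm: float, n_mp: float) -> float:
    """E = (N++ + N-- - N+- - N-+) / (N++ + N-- + N+- + N-+)."""
    total = n_pp + n_mm + n_pm + n_mp
    return (n_pp + n_mm - n_pm - n_mp) / total


def ideal_counts(alpha_deg: float, beta_deg: float, n_pairs: float = 10_000):
    """Expected counts (N++, N--, N+-, N-+) for the assumed state |Phi+>,
    ideal polarizers at angles alpha and beta, and perfect detection."""
    d = math.radians(alpha_deg - beta_deg)
    same = n_pairs * math.cos(d) ** 2 / 2  # each of N++ and N--
    diff = n_pairs * math.sin(d) ** 2 / 2  # each of N+- and N-+
    return same, same, diff, diff


def chsh(a: float, a_p: float, b: float, b_p: float) -> float:
    """S = E(a,b) - E(a,b') + E(a',b) + E(a',b') from ideal expected counts."""
    def e(x: float, y: float) -> float:
        return correlation(*ideal_counts(x, y))
    return e(a, b) - e(a, b_p) + e(a_p, b) + e(a_p, b_p)


if __name__ == "__main__":
    s = chsh(a=0.0, a_p=45.0, b=22.5, b_p=67.5)
    print(f"S = {s:.4f}, local bound = 2, 2*sqrt(2) = {2 * math.sqrt(2):.4f}")
```

With real data, measured counts would replace `ideal_counts`. For example, `correlation(40, 40, 10, 10)` gives 0.6.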

Sources of error in the light source

The principal possible errors in the light source are:

- Failure of rotational invariance: The light from the source might have a preferred polarization direction, in which case it is not rotationally invariant.

- Multiple emissions: The light source might emit several pairs at the same time or within a short time span, causing errors at detection.[14]
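How often multiple emissions occur can be estimated under a simplifying assumption. The Python sketch below assumes that the number of pairs emitted within one detection window follows a Poisson distribution with mean `mu`. This is an idealization; the actual statistics depend on the source.

The function computes the fraction of non-empty windows that contain two or more pairs. For small `mu` this fraction is roughly `mu/2`, so the contamination grows about linearly with the pair rate. The function name is hypothetical.

```python
import math


def multi_pair_fraction(mu: float) -> float:
    """P(n >= 2) / P(n >= 1) for a Poisson-distributed pair number n with mean mu.

    Assumes Poissonian pair statistics per detection window (an idealization)."""
    if mu <= 0:
        raise ValueError("mean pair number must be positive")
    p_at_least_1 = -math.expm1(-mu)                  # 1 - exp(-mu), computed accurately
    p_at_least_2 = p_at_least_1 - mu * math.exp(-mu)  # subtract P(n = 1)
    return p_at_least_2 / p_at_least_1


if __name__ == "__main__":
    for mu in (0.01, 0.1, 0.5):
        print(f"mu={mu}: {multi_pair_fraction(mu):.4f} of non-empty windows hold 2+ pairs")
```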

Sources of error in the optical polarizer

- Imperfections in the polarizer: The polarizer might influence the relative amplitude or other aspects of reflected and transmitted light in various ways.

Sources of error in the detector or detector settings

- The experiment may be set up so that it cannot detect photons simultaneously at the "+" and "−" inputs on the same side. If, for example, the source emits more than one pair of photons at the same instant, or several pairs in quick succession, this could cause errors in detection.

- Imperfections in the detector: The detector may fail to detect some photons, or may register counts even when the light source is turned off (noise).
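The effect of detector noise on S can be estimated with a simple model. The Python sketch below assumes that a fraction p of the recorded coincidences are accidental (for example, dark counts) and carry no correlation (E = 0). The remaining coincidences are assumed to follow the ideal quantum prediction, S = 2√2. Real noise need not be uncorrelated in this way.

Under this assumption, every E, and hence S, is scaled by (1 − p). The violation therefore disappears once p reaches 1 − 1/√2, about 29%. The names are hypothetical.

```python
import math

IDEAL_S = 2 * math.sqrt(2)  # assumed noise-free quantum value


def s_with_noise(p_accidental: float, ideal_s: float = IDEAL_S) -> float:
    """CHSH value when a fraction p of coincidences is uncorrelated noise.

    Each E becomes (1 - p) * E_ideal + p * 0, so S scales by (1 - p)."""
    if not 0 <= p_accidental <= 1:
        raise ValueError("fraction must lie in [0, 1]")
    return (1 - p_accidental) * ideal_s


# Largest accidental fraction that still allows S > 2: solve (1 - p) * 2*sqrt(2) = 2.
MAX_NOISE_FRACTION = 1 - 1 / math.sqrt(2)

if __name__ == "__main__":
    for p in (0.0, 0.1, 0.2, 0.3):
        print(f"p={p:.1f}: S={s_with_noise(p):.3f}")
    print(f"violation requires p < {MAX_NOISE_FRACTION:.3f}")
```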

1.6. Free Choice of Detector Orientations

The experiment requires a free choice of the detectors' orientations. This freedom might in some way be denied, for example if the settings were correlated with the hidden variables of the measured pairs. In that case another loophole would be opened, because the observed correlations could potentially be explained by that dependence. Thus, even if all experimental loopholes are closed, superdeterminism may allow the construction of a local realist theory that agrees with experiment.[15]

References

- Philip M. Pearle (1970). "Hidden-Variable Example Based upon Data Rejection". Phys. Rev. D 2 (8): 1418–25. doi:10.1103/PhysRevD.2.1418. Bibcode: 1970PhRvD...2.1418P. https://dx.doi.org/10.1103%2FPhysRevD.2.1418

- Jan-Åke Larsson (1998). "Bell's inequality and detector inefficiency". Phys. Rev. A 57 (5): 3304–8. doi:10.1103/PhysRevA.57.3304. Bibcode: 1998PhRvA..57.3304L. http://urn.kb.se/resolve?urn=urn:nbn:se:liu:diva-87169.

- I. Gerhardt; Q. Liu; A. Lamas-Linares; J. Skaar; V. Scarani et al. (2011). "Experimentally faking the violation of Bell's inequalities". Phys. Rev. Lett. 107 (17): 170404. doi:10.1103/PhysRevLett.107.170404. PMID 22107491. Bibcode: 2011PhRvL.107q0404G.

- M.A. Rowe; D. Kielpinski; V. Meyer; C.A. Sackett; W.M. Itano et al. (2001). "Experimental violation of a Bell's inequality with efficient detection". Nature 409 (6822): 791–94. doi:10.1038/35057215. PMID 11236986. Bibcode: 2001Natur.409..791K. https://deepblue.lib.umich.edu/bitstream/2027.42/62731/1/409791a0.pdf.

- Ansmann, M.; Wang, H.; Bialczak, R. C.; Hofheinz, M.; Lucero, E. et al. (24 September 2009). "Violation of Bell's inequality in Josephson phase qubits". Nature 461 (7263): 504–506. doi:10.1038/nature08363. PMID 19779447. Bibcode: 2009Natur.461..504A. https://dx.doi.org/10.1038%2Fnature08363

- Pfaff, W.; Taminiau, T. H.; Robledo, L.; Bernien, H.; Markham, M. et al. (2013). "Demonstration of entanglement-by-measurement of solid-state qubits". Nature Physics 9 (1): 29–33. doi:10.1038/nphys2444. Bibcode: 2013NatPh...9...29P. https://dx.doi.org/10.1038%2Fnphys2444

- P.H. Eberhard (1993). "Background level and counter efficiencies required for a loophole-free Einstein-Podolsky-Rosen experiment". Physical Review A 47 (2): 747–750. doi:10.1103/PhysRevA.47.R747. PMID 9909100. https://dx.doi.org/10.1103%2FPhysRevA.47.R747

- J. S. Bell (1980). "Atomic-cascade photons and quantum-mechanical nonlocality". Comments At. Mol. Phys. 9: 121–126. Reprinted as J. S. Bell (1987). "Chapter 13". Speakable and Unspeakable in Quantum Mechanics. Cambridge University Press. p. 109.

- G. Weihs; T. Jennewein; C. Simon; H. Weinfurter; A. Zeilinger (1998). "Violation of Bell's inequality under strict Einstein locality conditions". Phys. Rev. Lett. 81 (23): 5039–5043. doi:10.1103/PhysRevLett.81.5039. Bibcode: 1998PhRvL..81.5039W. https://dx.doi.org/10.1103%2FPhysRevLett.81.5039

- T. Scheidl et al. (2010). "Violation of local realism with freedom of choice". Proc. Natl. Acad. Sci. 107 (46): 19708–19713. doi:10.1073/pnas.1002780107. PMID 21041665. Bibcode: 2010PNAS..10719708S. http://www.pubmedcentral.nih.gov/articlerender.fcgi?tool=pmcentrez&artid=2993398

- Barrett, Jonathan; Collins, Daniel; Hardy, Lucien; Kent, Adrian; Popescu, Sandu (2002). "Quantum nonlocality, Bell inequalities and the memory loophole". Phys. Rev. A 66 (4): 042111. doi:10.1103/PhysRevA.66.042111. Bibcode: 2002PhRvA..66d2111B. https://dx.doi.org/10.1103%2FPhysRevA.66.042111

- Gill, Richard D. (2003). "Accardi contra Bell (cum mundi): The Impossible Coupling". in M. Moore. Mathematical Statistics and Applications: Festschrift for Constance van Eeden. IMS Lecture Notes — Monograph Series. 42. Beachwood, Ohio: Institute of Mathematical Statistics. pp. 133–154.

- Gill, Richard D. (2002). "Time, Finite Statistics, and Bell's Fifth Position". Proceedings of the Conference Foundations of Probability and Physics - 2 : Växjö (Soland), Sweden, June 2-7, 2002. 5. Växjö University Press. pp. 179–206.

- Fasel, Sylvain; Alibart, Olivier; Tanzilli, Sébastien; Baldi, Pascal; Beveratos, Alexios et al. (2004-11-01). "High-quality asynchronous heralded single-photon source at telecom wavelength". New Journal of Physics 6 (1): 163. doi:10.1088/1367-2630/6/1/163. Bibcode: 2004NJPh....6..163F. https://dx.doi.org/10.1088%2F1367-2630%2F6%2F1%2F163

- Kaiser, David (November 14, 2014). "Is Quantum Entanglement Real?". nytimes.com. https://www.nytimes.com/2014/11/16/opinion/sunday/is-quantum-entanglement-real.html.