The microcomputer revolution (also called the personal computer revolution or digital revolution) is a phrase used to describe the rapid advance of microprocessor-based computers from esoteric hobby projects to commonplace fixtures of homes in industrial societies during the 1970s and 1980s. Prior to 1977, most people's only contact with computers was through utility bills, bank and payroll services, or computer-generated junk mail. Within a decade, computers had become common consumer goods. The advent of affordable personal computers has had a lasting impact on education, business, music, social interaction, and entertainment.

1. Microprocessor and Cost Reduction

The minicomputer ancestors of the modern personal computer used early integrated circuit (microchip) technology, which reduced size and cost, but they contained no microprocessor. As a result, like their mainframe predecessors, they were still large and difficult to manufacture. Once the "computer-on-a-chip" was commercialized, the cost of manufacturing a computer system dropped dramatically. The arithmetic, logic, and control functions that had previously occupied several costly circuit boards were now available in a single integrated circuit that could be produced in high volume. At the same time, advances in solid-state memory eliminated the bulky, costly, and power-hungry magnetic core memory used in earlier generations of computers.

After the 1971 introduction of the Intel 4004, microprocessor costs declined rapidly. In 1974 the American electronics magazine Radio-Electronics described the Mark-8 computer kit, based on the Intel 8008 processor. In January of the following year, Popular Electronics magazine published an article describing the Altair 8800, a kit based on the Intel 8080, a somewhat more powerful and easier-to-use processor. The Altair 8800 sold remarkably well even though its initial memory was limited to a few hundred bytes and no software was available. Because the Altair kit cost much less than an Intel development system of the time, it was purchased both by hobbyists, who formed users' groups such as the Homebrew Computer Club, and by companies interested in developing microprocessor control for their own products. Expansion memory boards and peripherals were soon offered by the original manufacturer, and later by plug-compatible manufacturers.

The very first Microsoft product was a 4-kilobyte BASIC interpreter distributed on paper tape, which allowed users to develop programs in a higher-level language. The alternative was to hand-assemble machine code and load it directly into the microcomputer's memory using a front panel of toggle switches, pushbuttons and LED displays. Although these hardware front panels emulated those of early mainframes and minicomputers, interaction through a terminal very quickly became the preferred human/machine interface, and front panels disappeared.

2. The Home Computer Revolution

In the late 1970s and early 1980s, from about 1977 to 1983, it was widely predicted[1] that computers would soon revolutionize many aspects of home and family life, as they had revolutionized business practices in the previous decades.[2] Mothers would keep their recipe catalogs in "kitchen computer" databases and turn to a medical database for help with child care, while fathers would use the family's computer to manage family finances and track automobile maintenance. Children would use disk-based encyclopedias for schoolwork and would be avid video gamers. Home automation would bring about the intelligent home of the 1980s. Using Videotex, NAPLPS or some as-yet unrealized computer technology, television would gain interactivity. The personalized newspaper was a commonly predicted application. Morning coffee would be brewed automatically under computer control, and the same computer would control the house lighting and temperature. Robots would take the garbage out and could be programmed by the home computer to perform new tasks. Because electronics were expensive, it was generally assumed that each home would have only one multitasking computer, shared by the entire family in a timesharing arrangement, with interfaces to the various devices it was expected to control.

"The single most important item in 2008 households is the computer. These electronic brains govern everything from meal preparation and waking up the household to assembling shopping lists and keeping track of the bank balance. Sensors in kitchen appliances, climatizing units, communicators, power supply and other household utilities warn the computer when the item is likely to fail. A repairman will show up even before any obvious breakdown occurs. Computers also handle travel reservations, relay telephone messages, keep track of birthdays and anniversaries, compute taxes and even figure the monthly bills for electricity, water, telephone and other utilities. Not every family has its private computer. Many families reserve time on a city or regional computer to serve their needs. The machine tallies up its own services and submits a bill, just as it does with other utilities."[3]

(Mechanix Illustrated, November 1968 edition)

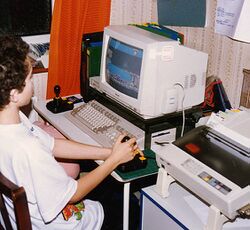

All this was predicted to be commonplace sometime before the end of the decade, but virtually every aspect of the predicted revolution would be delayed or prove entirely impractical. The computers available to consumers at the time simply were not powerful enough to perform any single task required to realize this vision, much less all of them simultaneously. Most home computers of the early 1980s could not multitask. Even if they could, their memory capacities were too small to hold entire databases or financial records, floppy disk storage was inadequate in both capacity and speed for multimedia work, and their graphics could display only blocky, unrealistic images and blurry, jagged text. Before long, a backlash set in: computer users were "geeks", "nerds" or worse, "hackers". The North American video game crash of 1983 soured many on home computer technology. Computers that had been purchased for the family room were either forgotten in closets or relegated to basements and children's bedrooms, where they were used exclusively for games and the occasional book report. In 1977, referring to computers used in home automation at the dawn of the home computer era, Digital Equipment Corporation CEO Ken Olsen is quoted as saying, "There is no reason for any individual to have a computer in his home."[4]

It took another ten years for the technology to mature, for the graphical user interface to make the computer approachable for non-technical users, and for the Internet to give most people a compelling reason to want a computer in their homes. Some predicted aspects of the revolution were abandoned, and others were modified in the face of an emerging reality. The cost of electronics dropped precipitously, and today many families have a computer for each family member. Encyclopedias, recipe catalogs and medical databases are kept online and accessed over the World Wide Web rather than stored locally on floppy disks or CD-ROMs. Television has yet to gain substantial interactivity; instead, the web has evolved alongside it, although the home theater PC (HTPC) and internet video sites such as YouTube, Netflix and Hulu may one day replace traditional broadcast and cable television. Coffee may be brewed automatically every morning, but by a simple computer embedded in the coffee maker rather than under the control of a central household computer. As of 2008, robots are just beginning to make an impact in the home, with Roomba and Aibo leading the charge.

This delay was not unusual for technologies newly introduced to an unprepared public. Early motorists were widely derided with the cry of "Get a horse!"[5] until the automobile was accepted. Television languished in research labs for decades before regular public broadcasts began. As an example of how the applications of a technology can change, before the invention of radio the telephone was used to distribute opera and news reports, and subscribers to these services were denounced as "illiterate, blind, bedridden and incurably lazy people".[6] Likewise, the acceptance of computers into daily life today is the product of continuing refinement of both the technology and its perception.

3. Transition from Revolution to Mainstream

During the 1980s, while many households were buying their first computers, the dominant operating system in business was MS-DOS running on IBM PC-compatible hardware. Driven by increasing component standardization and the move to offshore manufacturing, the price of such hardware dropped drastically toward the end of the decade: by 1988, systems with specifications similar to the IBM PC XT were selling for under $1,000,[7] and just under 20% of US households had a computer.[8]

These low-cost, generic ("white box") PCs consolidated the diverse microcomputer market. Many consumers began using DOS-based computers at work and saw value in purchasing compatible systems for home use as well. Earlier home computer architectures from companies such as Commodore and Atari were abandoned in favor of the more "compatible" IBM PC platform. Pioneering low-cost PCs intended for home use included the Leading Edge Model D, the Tandy 1000 and the Epson Equity series. Many games listed Tandy-compatible graphics among their supported hardware.

The advent of 3D graphics in games and the popularization of the World Wide Web caused computer use to surge during the 1990s: by 1997, US computer ownership stood at 35%, and household expenditure on computer equipment had more than tripled.[9] Many competing computer platforms and CPU architectures fell out of favor over the years, until by 2010 the x86 architecture was the most common in desktop computers.

References

1. "The Computer Revolution". eNotes.com. http://www.enotes.com/1980-media-american-decades/computer-revolution
2. "The Computer Revolution". The Eighties Club. http://eightiesclub.tripod.com/id325.htm
3. Berry, James R. (November 1968). "40 Years in the Future". Mechanix Illustrated. http://blog.modernmechanix.com/2008/03/24/what-will-life-be-like-in-the-year-2008/. Retrieved 2008-07-15.
4. "Ken Olsen". snopes.com. http://www.snopes.com/quotes/kenolsen.asp
5. "Horseless Classrooms". Hawaii Education & Research Network. http://kalama.doe.hawaii.edu/hern/articles/horseless_p2.html
6. "Clement Ader". Bob's Old Phones. http://www.bobsoldphones.net/Pages/Essays/Ader/Ader.htm
7. "Computer Changes In The 1980s". http://www.thepeoplehistory.com/80scomputers.html
8. "Chronology of Personal Computers". http://www.islandnet.com/~kpolsson/comphist/comp1987.htm
9. "Computer ownership up sharply in the 1990s". http://www.bls.gov/opub/ted/1999/Apr/wk1/art01.htm