| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Jason Zhu | -- | 1228 | 2022-10-19 01:50:38 |


Roadrunner was a supercomputer built by IBM for the Los Alamos National Laboratory in New Mexico, USA. The US$100-million Roadrunner was designed for a peak performance of 1.7 petaflops. It achieved 1.026 petaflops on May 25, 2008, becoming the first system on the TOP500 list to sustain 1.0 petaflops on the LINPACK benchmark. In November 2008, it reached a top performance of 1.456 petaflops, retaining its top spot in the TOP500 list. It was also the fourth-most energy-efficient supercomputer in the world on the Supermicro Green500 list, with an operational rate of 444.94 megaflops per watt of power used. The hybrid Roadrunner design was then reused for several other energy-efficient supercomputers. Roadrunner was decommissioned by Los Alamos on March 31, 2013. In its place, Los Alamos commissioned a supercomputer called Cielo, which was installed in 2010. Cielo was smaller and more energy efficient than Roadrunner, and cost $54 million.

1. Overview

IBM built the computer for the U.S. Department of Energy's (DOE) National Nuclear Security Administration.[1][2] It was a hybrid design with 12,960 IBM PowerXCell 8i[3] and 6,480 AMD Opteron dual-core processors[4] in specially designed blade servers connected by InfiniBand. Roadrunner ran Red Hat Enterprise Linux along with Fedora[5] as its operating systems and was managed with the xCAT distributed computing software. It also used the Open MPI implementation of the Message Passing Interface.[6]

Roadrunner occupied approximately 296 server racks,[7] which covered 560 square metres (6,000 sq ft),[8] and became operational in 2008. It was decommissioned on March 31, 2013.[7] The DOE used the computer to simulate how nuclear materials age in order to predict whether the USA's aging arsenal of nuclear weapons is both safe and reliable. Other uses for Roadrunner included applications in the science, financial, automotive and aerospace industries.

2. Hybrid Design

Roadrunner differed from other contemporary supercomputers in that it continued the hybrid approach[7] to supercomputer design introduced by Seymour Cray in 1964 with the Control Data Corporation CDC 6600 and carried forward in 1969 with the order-of-magnitude faster CDC 7600. In that earlier architecture, however, the peripheral processors were used only for operating system functions, and all applications ran on the single central processor. Most previous supercomputers had used only one processor architecture, since this was thought to be easier to design and program for. To realize the full potential of Roadrunner, all software had to be written specially for its hybrid architecture. The hybrid design consisted of dual-core Opteron server processors, manufactured by AMD and using the standard AMD64 architecture, with an IBM-designed and -fabricated PowerXCell 8i processor attached to each Opteron core. As a supercomputer, Roadrunner was considered an Opteron cluster with Cell accelerators, as each node consisted of a Cell attached to an Opteron core, with the Opterons connected to each other.[9]

3. Development

Roadrunner was in development from 2002 and went online in 2006. Due to its novel design and complexity, it was constructed in three phases and became fully operational in 2008. Its predecessor was a machine named Dark Horse, also developed at Los Alamos.[10] This machine was one of the earliest hybrid-architecture systems; it was originally based on ARM and later moved to the Cell processor. It was entirely a 3D design that integrated 3D memory, networking, processors and a number of other technologies.

3.1. Phase 1

The first phase of Roadrunner consisted of building a standard Opteron-based cluster while evaluating the feasibility of constructing and programming the future hybrid version. This Phase 1 Roadrunner reached 71 teraflops and was in full operation at Los Alamos National Laboratory in 2006.

3.2. Phase 2

Phase 2, known as AAIS (Advanced Architecture Initial System), involved building a small hybrid version of the finished system using an older version of the Cell processor. This phase was used to build prototype applications for the hybrid architecture. It went online in January 2007.

3.3. Phase 3

The goal of Phase 3 was to reach sustained performance in excess of 1 petaflops. Additional Opteron nodes and new PowerXCell processors were added to the design. These PowerXCell processors were five times as powerful as the Cell processors used in Phase 2. The system was built to full scale at IBM’s Poughkeepsie, New York facility,[11] where it broke the 1 petaflops barrier on its fourth attempt on May 25, 2008. The complete system was moved to its permanent location in New Mexico in the summer of 2008.[11]

4. Technical Specifications

4.1. Processors

Roadrunner used two different models of processors. The first was the AMD Opteron 2210, running at 1.8 GHz. Opterons were used both in the computational nodes, feeding the Cells with useful data, and in the system operations and communication nodes, passing data between computing nodes and helping the operators run the system. Roadrunner had a total of 6,912 Opteron processors, with 6,480 used for computation and 432 for operation. The Opterons were connected together by HyperTransport links. Each Opteron had two cores, for a total of 13,824 cores.

The second processor was the IBM PowerXCell 8i, running at 3.2 GHz. These processors had one general-purpose core (PPE) and eight special performance cores (SPE) for floating-point operations. Roadrunner had a total of 12,960 PowerXCell processors, with 12,960 PPE cores and 103,680 SPE cores, for a total of 116,640 cores.
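The core totals quoted above follow directly from the per-processor figures. As a quick cross-check, the following minimal Python sketch reproduces them; the constant and function names are illustrative only and do not come from any Roadrunner software.

```python
# Processor counts and per-processor core counts as stated in the text.
COMPUTE_OPTERONS = 6_480
OPERATION_OPTERONS = 432
CORES_PER_OPTERON = 2
POWERXCELL_PROCESSORS = 12_960
PPE_PER_POWERXCELL = 1   # general-purpose core
SPE_PER_POWERXCELL = 8   # special performance cores


def core_counts() -> dict:
    """Return the core totals implied by the processor counts."""
    opterons = COMPUTE_OPTERONS + OPERATION_OPTERONS
    ppe = POWERXCELL_PROCESSORS * PPE_PER_POWERXCELL
    spe = POWERXCELL_PROCESSORS * SPE_PER_POWERXCELL
    return {
        "opteron_processors": opterons,
        "opteron_cores": opterons * CORES_PER_OPTERON,
        "ppe_cores": ppe,
        "spe_cores": spe,
        "powerxcell_cores": ppe + spe,
    }


counts = core_counts()
for name, value in counts.items():
    print(f"{name}: {value:,}")
```

The computed values agree with the figures in the text: 6,912 Opteron processors with 13,824 cores, and 116,640 PowerXCell cores.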

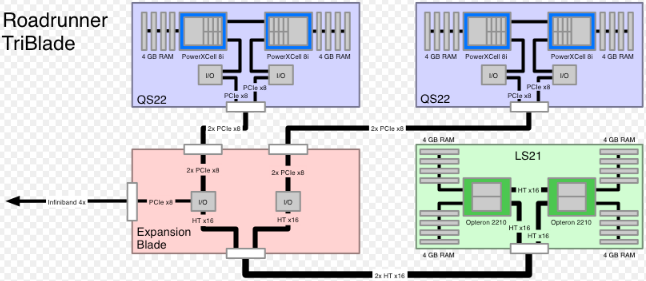

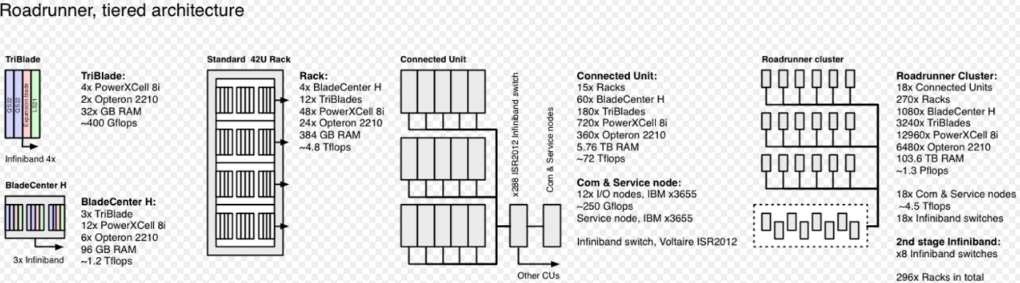

4.2. TriBlade

Logically, a TriBlade consists of two dual-core Opterons with 16 GB RAM and four PowerXCell 8i CPUs with 16 GB Cell RAM.[4]

Physically, a TriBlade consists of one LS21 Opteron blade, an expansion blade, and two QS22 Cell blades. The LS21 has two 1.8 GHz dual-core Opterons with 16 GB of memory for the whole blade, providing 8 GB for each CPU. Each QS22 has two PowerXCell 8i CPUs running at 3.2 GHz and 8 GB of memory, which makes 4 GB for each CPU. The expansion blade is connected to the Opteron blade via HyperTransport and connects the two QS22 blades to the LS21 via four PCIe x8 links, two links for each QS22. It also provides outside connectivity via an InfiniBand 4x DDR adapter. This makes a total width of four slots for a single TriBlade. Three TriBlades fit into one BladeCenter H chassis.
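The logical and physical descriptions of a TriBlade can be reconciled with a little arithmetic. The sketch below is a hypothetical model, with the `Blade` class and all names invented for illustration. It derives the logical totals from the physical blade figures given above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blade:
    """A blade with its CPU count and total memory (illustrative model)."""
    model: str
    cpus: int
    memory_gb: int

    @property
    def memory_per_cpu_gb(self) -> float:
        return self.memory_gb / self.cpus


# Blade figures as given in the text.
LS21 = Blade("LS21", cpus=2, memory_gb=16)  # two dual-core Opterons
QS22 = Blade("QS22", cpus=2, memory_gb=8)   # two PowerXCell 8i CPUs

CORES_PER_OPTERON = 2
QS22_PER_TRIBLADE = 2
SLOTS_PER_TRIBLADE = 4
TRIBLADES_PER_CHASSIS = 3

opteron_cores = LS21.cpus * CORES_PER_OPTERON
cell_cpus = QS22.cpus * QS22_PER_TRIBLADE
cell_memory_gb = QS22.memory_gb * QS22_PER_TRIBLADE
slots_used_per_chassis = SLOTS_PER_TRIBLADE * TRIBLADES_PER_CHASSIS

print(f"Opteron cores per TriBlade: {opteron_cores}")
print(f"Cell CPUs per TriBlade:     {cell_cpus}")
print(f"Cell memory per TriBlade:   {cell_memory_gb} GB")
print(f"Memory per Opteron / Cell:  {LS21.memory_per_cpu_gb} / {QS22.memory_per_cpu_gb} GB")
print(f"Chassis slots used:         {slots_used_per_chassis}")
```

The four Opteron cores and four Cell CPUs per TriBlade give one Cell per Opteron core, which is consistent with the pairing described in the Hybrid Design section.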

4.3. Connected Unit (CU)

A Connected Unit consists of 60 BladeCenter H chassis full of TriBlades, that is, 180 TriBlades. All TriBlades are connected to a 288-port Voltaire ISR2012 InfiniBand switch. Each CU also has access to the Panasas file system through twelve System x3755 servers.[4]

CU system information:[4]

- 360 dual-core Opterons with 2.88 TiB RAM.

- 720 PowerXCell 8i processors with 2.88 TiB RAM.

- 12 System x3755 with dual 10-Gbit Ethernet each.

- 288-port Voltaire ISR2012 switch with 192 InfiniBand 4x DDR links (180 TriBlades and twelve I/O nodes).
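The per-CU figures in this list can be derived from the TriBlade composition. The following sketch, using illustrative names only, multiplies the per-TriBlade figures out to one Connected Unit and counts the switch links.

```python
# Figures taken from the TriBlade and Connected Unit descriptions.
CHASSIS_PER_CU = 60
TRIBLADES_PER_CHASSIS = 3
OPTERONS_PER_TRIBLADE = 2
CELLS_PER_TRIBLADE = 4
OPTERON_RAM_GB_PER_TRIBLADE = 16
CELL_RAM_GB_PER_TRIBLADE = 16
IO_NODES_PER_CU = 12
SWITCH_PORTS = 288

triblades = CHASSIS_PER_CU * TRIBLADES_PER_CHASSIS

cu = {
    "triblades": triblades,
    "opterons": triblades * OPTERONS_PER_TRIBLADE,
    "cells": triblades * CELLS_PER_TRIBLADE,
    "opteron_ram_gb": triblades * OPTERON_RAM_GB_PER_TRIBLADE,
    "cell_ram_gb": triblades * CELL_RAM_GB_PER_TRIBLADE,
    # One InfiniBand link per TriBlade plus one per I/O node.
    "switch_links": triblades + IO_NODES_PER_CU,
}
cu["remaining_switch_ports"] = SWITCH_PORTS - cu["switch_links"]

for name, value in cu.items():
    print(f"{name}: {value:,}")
```

The result is 2,880 GB of RAM on each side, which corresponds to the 2.88 figure in the list when read in decimal units, and 192 links on the 288-port switch.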

4.4. Roadrunner cluster

The final cluster is made up of 18 connected units, which are connected via eight additional (second-stage) InfiniBand ISR2012 switches. Each CU is connected to each second-stage switch through twelve uplinks, which makes a total of 96 uplink connections per CU.[4]

Overall system information:[4]

- 6,480 Opteron processors with 51.8 TiB RAM (in 3,240 LS21 blades)

- 12,960 Cell processors with 51.8 TiB RAM (in 6,480 QS22 blades)

- 216 System x3755 I/O nodes

- 26 288-port ISR2012 InfiniBand 4x DDR switches

- 296 racks

- 2.345 MW power[7]
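Most of the overall totals above are the per-CU figures multiplied by 18. As a rough consistency check, the sketch below recomputes them; all names are illustrative, and the per-CU constants are copied from the Connected Unit section.

```python
CONNECTED_UNITS = 18
SECOND_STAGE_SWITCHES = 8
UPLINKS_PER_CU_PER_SWITCH = 12

# Per-CU figures from the Connected Unit section.
TRIBLADES_PER_CU = 180
OPTERONS_PER_CU = 360
CELLS_PER_CU = 720
RAM_GB_PER_CU = 2_880          # same figure for Opteron RAM and Cell RAM
IO_NODES_PER_CU = 12
FIRST_STAGE_SWITCHES_PER_CU = 1

cluster = {
    "opterons": CONNECTED_UNITS * OPTERONS_PER_CU,
    "cells": CONNECTED_UNITS * CELLS_PER_CU,
    # One LS21 and two QS22 blades per TriBlade.
    "ls21_blades": CONNECTED_UNITS * TRIBLADES_PER_CU,
    "qs22_blades": CONNECTED_UNITS * TRIBLADES_PER_CU * 2,
    "ram_gb_each_side": CONNECTED_UNITS * RAM_GB_PER_CU,
    "io_nodes": CONNECTED_UNITS * IO_NODES_PER_CU,
    "switches": CONNECTED_UNITS * FIRST_STAGE_SWITCHES_PER_CU + SECOND_STAGE_SWITCHES,
    "uplinks_per_cu": UPLINKS_PER_CU_PER_SWITCH * SECOND_STAGE_SWITCHES,
}

for name, value in cluster.items():
    print(f"{name}: {value:,}")
```

The 51,840 GB of RAM on each side rounds to the 51.8 figure in the list, and the 26 switches are the 18 first-stage switches plus the eight second-stage ones. The 96 uplinks per CU also equal the number of ports left on a 288-port switch after the 192 links listed for each CU.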

5. Shutdown

IBM Roadrunner was shut down on March 31, 2013.[7] While the supercomputer was one of the fastest in the world, its energy efficiency was relatively low. Roadrunner delivered 444 megaflops per watt, compared with the 886 megaflops per watt of a comparable supercomputer.[12] Before the supercomputer was dismantled, researchers planned to spend one month performing memory and data routing experiments to aid in designing future supercomputers.[7]
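The efficiency metric used here is sustained floating-point performance divided by power draw. The sketch below illustrates the calculation with hypothetical names. Pairing the 1.026 petaflops result with the 2.345 MW power figure is an assumption made only for illustration, since the text does not say that these two numbers were measured together.

```python
def mflops_per_watt(sustained_flops: float, power_watts: float) -> float:
    """Energy efficiency in megaflops per watt."""
    return sustained_flops / power_watts / 1e6


# Efficiency figures quoted in the text.
ROADRUNNER_MFLOPS_PER_WATT = 444.0
COMPARISON_MFLOPS_PER_WATT = 886.0
efficiency_ratio = COMPARISON_MFLOPS_PER_WATT / ROADRUNNER_MFLOPS_PER_WATT

# Assumption: combine the May 2008 LINPACK result with the system power
# figure from the specifications list. The two may not correspond.
estimate = mflops_per_watt(sustained_flops=1.026e15, power_watts=2.345e6)

print(f"Comparable system is about {efficiency_ratio:.2f}x as efficient")
print(f"Estimate from 1.026 petaflops at 2.345 MW: {estimate:.1f} megaflops per watt")
```

Under that assumption the estimate comes out near 437.5 megaflops per watt, the same order as the quoted 444, and the comparable system is roughly twice as efficient.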

After IBM Roadrunner was dismantled, its electronics were to be shredded.[13] Los Alamos planned to perform the majority of the supercomputer's destruction itself, citing the classified nature of its calculations. Some of its parts were to be retained for historical purposes.[13]

References

- "IBM to Build World's First Cell Broadband Engine Based Supercomputer". IBM. 2006-09-06. http://www-03.ibm.com/press/us/en/pressrelease/20210.wss. Retrieved 2008-05-31.

- "IBM Selected to Build New DOE Supercomputer". NNSA. 2006-09-06. Archived from the original on 2008-06-18. https://web.archive.org/web/20080618162000/http://nnsa.energy.gov/news/1015.htm. Retrieved 2008-05-31.

- "International Supercomputing Conference to Host First Panel Discussion on Breaking the Petaflops Barrier". TOP500 Supercomputing Sites. 9 June 2008. Archived from the original on 11 October 2008. https://web.archive.org/web/20081011055756/http://www.top500.org/blog/2008/06/09/international_supercomputing_conference_host_first_panel_discussion_breaking_petaflop_s_barrier. Retrieved 11 October 2015.

- Koch, Ken (2008-03-13). "Roadrunner Platform Overview". Los Alamos National Laboratory. http://www.lanl.gov/orgs/hpc/roadrunner/pdfs/Koch%20-%20Roadrunner%20Overview/RR%20Seminar%20-%20System%20Overview.pdf. Retrieved 2008-05-31.

- Borrett, Ann (2007). "Roadrunner - Integrated Hybrid Node". http://sti.cc.gatech.edu/SC07-BOF/06-Borrett.pdf.

- Squyres, Jeff. "Open MPI: 10^15 Flops Can't Be Wrong" (PDF). Open MPI. http://www.open-mpi.org/papers/sc-2008/jsquyres-cisco-booth-talk-1up.pdf. Retrieved 2008-11-22.

- Brodkin, Jon. "World’s top supercomputer from ‘09 is now obsolete, will be dismantled". Ars Technica. https://arstechnica.com/information-technology/2013/03/worlds-fastest-supercomputer-from-09-is-now-obsolete-will-be-dismantled/. Retrieved March 31, 2013.

- "Los Alamos computer breaks petaflop barrier". IBM. 2008-06-09. http://www.ibm.com/news/us/en/2008/06/09/d074803y96972a84.html. Retrieved 2008-06-12.

- Barker, Kevin J.; Davis, Kei; Hoisie, Adolfy; Kerbyson, Darren J.; Lang, Mike; Pakin, Scott; Sancho, Jose C. (2008). "Entering the petaflop era: The architecture and performance of Roadrunner". International Conference for High Performance Computing, Networking, Storage and Analysis: 1–11. doi:10.1109/SC.2008.5217926. ISBN 978-1-4244-2834-2. http://www.cse.psu.edu/~raghavan/cse598C/RoadrunnerSC08-CR-final.doc.pdf. Retrieved 2013-04-02.

- Poole, Steve (September 2006). "DarkHorse: a Proposed PetaScale Architecture". Los Alamos National Laboratory. http://www.ll.mit.edu/HPEC/agendas/proc06/Day2/27_Poole_Panel.pdf. Retrieved 11 October 2015.

- "Fact Sheet & Background: Roadrunner Smashes the Petaflop Barrier". IBM. 9 June 2008. http://www-03.ibm.com/press/us/en/pressrelease/24405.wss. Retrieved April 1, 2013.

- "Top500 List - November 2012". TOP500. http://top500.org/list/2012/11/. Retrieved April 2, 2013.

- "World’s first petascale supercomputer will be shredded to bits". Ars Technica. https://arstechnica.com/information-technology/2013/04/worlds-first-petascale-supercomputer-will-be-shredded-to-bits/. Retrieved April 1, 2013.