| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Vivi Li | -- | 1790 | 2022-10-13 01:36:17 | |


In information theory there have been various attempts over the years to extend the definition of mutual information to more than two random variables. The expression and study of multivariate higher-degree mutual information were achieved in two seemingly independent works: McGill (1954), who called these functions “interaction information”, and Hu Kuo Ting (1962), who also first proved that mutual information can be negative for degrees higher than 2 and justified algebraically the intuitive correspondence to Venn diagrams.

1. Definition

The conditional mutual information can be used to inductively define a multivariate mutual information (MMI) in a set- or measure-theoretic sense in the context of information diagrams. In this sense we define the multivariate mutual information as follows:

- [math]\displaystyle{ I(X_1;\ldots;X_{n+1}) = I(X_1;\ldots;X_n) - I(X_1;\ldots;X_n|X_{n+1}), }[/math]

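where the conditional term is the multivariate mutual information of [math]\displaystyle{ X_1,\ldots,X_n }[/math] computed from the conditional distribution given [math]\displaystyle{ X_{n+1} }[/math], averaged over [math]\displaystyle{ X_{n+1} }[/math]. For [math]\displaystyle{ n=2 }[/math] this is the ordinary conditional mutual information, which can be written as an expected Kullback–Leibler divergence:

- [math]\displaystyle{ I(X_1;X_2|X_3) = \mathbb{E}_{X_3} [D_{\mathrm{KL}}( P_{(X_1,X_2)|X_3} \| P_{X_1|X_3} \otimes P_{X_2|X_3} )]. }[/math]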

This definition is identical to that of interaction information except for a change in sign in the case of an odd number of random variables.
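For discrete variables with a known joint distribution, the recursion above can be sketched in Python. This is a minimal illustration under stated assumptions, not a reference implementation: the joint distribution is assumed to be a dictionary mapping outcome tuples to probabilities, the helper names are hypothetical, the recursion is assumed to bottom out at I(X_1) = H(X_1), and the conditional term is evaluated as the MMI of the conditional distribution given X_{n+1}, averaged over X_{n+1} (this agrees with the alternating-sum formula for conditional MMI given under Properties).

```python
from math import log2


def marginal(pmf, idx):
    """Marginal distribution of the variables at positions idx."""
    out = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    return -sum(p * log2(p) for p in marginal(pmf, idx).values() if p > 0)


def condition(pmf, j, value):
    """Joint distribution of all variables given X_j = value (positions unchanged)."""
    selected = {o: p for o, p in pmf.items() if o[j] == value and p > 0}
    total = sum(selected.values())
    return {o: p / total for o, p in selected.items()}


def mmi(pmf, idx):
    """Recursive MMI: I(X_1;...;X_{n+1}) = I(X_1;...;X_n) - I(X_1;...;X_n | X_{n+1}).

    idx lists variable positions in the order X_1, ..., X_{n+1}.
    Assumption: the recursion bottoms out at I(X_1) = H(X_1).
    """
    if len(idx) == 1:
        return entropy(pmf, idx)
    *head, last = idx
    head = tuple(head)
    # Conditional term: MMI under P(. | X_{n+1} = x), averaged over x.
    conditional = sum(
        p * mmi(condition(pmf, last, key[0]), head)
        for key, p in marginal(pmf, (last,)).items()
        if p > 0
    )
    return mmi(pmf, head) - conditional


# Z is the XOR of two independent fair bits X and Y (positions 0, 1, 2).
xor = {(x, y, x ^ y): 0.25 for x in (0, 1) for y in (0, 1)}
print(mmi(xor, (0, 1)))     # I(X;Y)   -> 0.0
print(mmi(xor, (0, 1, 2)))  # I(X;Y;Z) -> -1.0
```

Reordering the positions passed to `mmi` leaves the three-variable result unchanged, which is a quick way to see that the recursion, although written asymmetrically, defines a symmetric quantity.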

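Alternatively, the multivariate mutual information may be defined in measure-theoretic terms as the measure of the intersection of the sets [math]\displaystyle{ \tilde{X}_i }[/math], where each set has measure equal to the corresponding entropy, [math]\displaystyle{ \mu(\tilde{X}_i) = H(X_i) }[/math]: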

- [math]\displaystyle{ I(X_1;X_2;...;X_{n+1})=\mu\left(\bigcap_{i=1}^{n+1}\tilde{X}_i\right) }[/math]

Defining [math]\displaystyle{ \tilde{Y}=\bigcap_{i=1}^n\tilde{X}_i }[/math], the set-theoretic identity [math]\displaystyle{ \tilde{A}=(\tilde{A}\cap\tilde{B})\cup(\tilde{A}\backslash\tilde{B}) }[/math], which corresponds to the measure-theoretic statement [math]\displaystyle{ \mu(\tilde{A})=\mu(\tilde{A}\cap \tilde{B})+\mu(\tilde{A}\backslash\tilde{B}) }[/math] because the two sets on the right are disjoint,[1]:p.63 allows the above to be rewritten as:

- [math]\displaystyle{ I(X_1;X_2;...;X_{n+1})=\mu(\tilde{Y}\cap\tilde{X}_{n+1})=\mu(\tilde{Y})-\mu(\tilde{Y}\backslash\tilde{X}_{n+1}) }[/math]

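which is identical to the first definition, because [math]\displaystyle{ \mu(\tilde{Y}) = I(X_1;\ldots;X_n) }[/math] and [math]\displaystyle{ \mu(\tilde{Y}\backslash\tilde{X}_{n+1}) = I(X_1;\ldots;X_n|X_{n+1}) }[/math].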

2. Properties

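Multivariate mutual information and conditional multivariate mutual information can be decomposed into alternating sums of joint entropies, where [math]\displaystyle{ H(T) }[/math] denotes the joint entropy of the variables [math]\displaystyle{ \{X_i\}_{i \in T} }[/math] (with [math]\displaystyle{ H(\emptyset) = 0 }[/math]):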

- [math]\displaystyle{ I(X_1;\ldots;X_n) = -\sum_{ T \subseteq \{1,\ldots,n\} }(-1)^{|T|}H(T) }[/math]

- [math]\displaystyle{ I(X_1;\ldots;X_n|Y) = -\sum_{T \subseteq \{1,\ldots,n\} } (-1)^{|T|} H(T|Y) }[/math]
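The two alternating sums translate directly into code. The sketch below assumes a hypothetical dictionary representation of a joint distribution (outcome tuple to probability), reads H(T) as the joint entropy in bits of the variables whose positions are in T, and uses H(T|Y) = H(T,Y) - H(Y); the empty subset is skipped because its entropy is zero. The helper names are illustrative only.

```python
from itertools import combinations
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def mmi(pmf, idx):
    """I(X_1;...;X_n) = -sum over non-empty T of (-1)^{|T|} H(T)."""
    return -sum((-1) ** k * entropy(pmf, T)
                for k in range(1, len(idx) + 1)
                for T in combinations(idx, k))


def cond_mmi(pmf, idx, given):
    """I(X_1;...;X_n | Y) = -sum over non-empty T of (-1)^{|T|} H(T | Y)."""
    h_given = entropy(pmf, given)
    return -sum((-1) ** k * (entropy(pmf, T + given) - h_given)
                for k in range(1, len(idx) + 1)
                for T in combinations(idx, k))


# Z is the XOR of two independent fair bits X and Y (positions 0, 1, 2).
xor = {(x, y, x ^ y): 0.25 for x in (0, 1) for y in (0, 1)}
print(mmi(xor, (0, 1, 2)))          # I(X;Y;Z) -> -1.0
print(cond_mmi(xor, (0, 1), (2,)))  # I(X;Y|Z) -> 1.0
```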

3. Multivariate Statistical Independence

The multivariate mutual-information functions generalize the pairwise case, in which [math]\displaystyle{ X_1,X_2 }[/math] are independent if and only if [math]\displaystyle{ I(X_1;X_2)=0 }[/math], to an arbitrary number of variables: n variables are mutually independent if and only if the [math]\displaystyle{ 2^n-n-1 }[/math] mutual-information functions [math]\displaystyle{ I(X_1;...;X_k) }[/math] vanish, where the functions range over every subset of [math]\displaystyle{ k }[/math] of the variables with [math]\displaystyle{ n \ge k \ge 2 }[/math] (theorem 2 [2]). In this sense, the conditions [math]\displaystyle{ I(X_1;...;X_k)=0 }[/math] can be used as a refined statistical independence criterion.
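A minimal sketch of the independence criterion, assuming a hypothetical dictionary representation of a joint distribution and a numerical tolerance (an assumption, needed because floating-point entropies rarely cancel exactly). The XOR distribution shows why all subsets are needed: every pair of its variables is independent, yet the three variables are not mutually independent.

```python
from itertools import combinations
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def mmi(pmf, idx):
    """MMI of the variables at positions idx, via the alternating sum of entropies."""
    return -sum((-1) ** k * entropy(pmf, T)
                for k in range(1, len(idx) + 1)
                for T in combinations(idx, k))


def mutually_independent(pmf, n, tol=1e-9):
    """True if the MMI of every subset of k >= 2 variables (2^n - n - 1 of them) vanishes."""
    return all(abs(mmi(pmf, idx)) <= tol
               for k in range(2, n + 1)
               for idx in combinations(range(n), k))


# Three independent biased bits; the biases are arbitrary illustrative values.
bias = (0.3, 0.6, 0.9)
product = {
    (a, b, c): (bias[0] if a else 1 - bias[0])
    * (bias[1] if b else 1 - bias[1])
    * (bias[2] if c else 1 - bias[2])
    for a in (0, 1) for b in (0, 1) for c in (0, 1)
}
# XOR: each pair is independent, but the triple is not.
xor = {(x, y, x ^ y): 0.25 for x in (0, 1) for y in (0, 1)}

print(mutually_independent(product, 3))  # True
print(all(abs(mmi(xor, pair)) < 1e-12 for pair in combinations(range(3), 2)))  # True
print(mutually_independent(xor, 3))      # False
```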

4. Synergy and Redundancy

The multivariate mutual information may be positive, negative or zero. Positivity corresponds to relations generalizing pairwise correlations, nullity corresponds to a refined notion of independence, and negativity detects high-dimensional "emergent" relations and clustered data points [3][2]. For the simplest case of three variables X, Y, and Z, knowing, say, X yields a certain amount of information about Z. This information is just the mutual information [math]\displaystyle{ I(X;Z) }[/math] (yellow and gray in the Venn diagram above). Likewise, knowing Y yields a certain amount of information about Z, namely the mutual information [math]\displaystyle{ I(Y;Z) }[/math] (cyan and gray in the Venn diagram above). The amount of information about Z yielded by knowing X and Y together is the information that is mutual to Z and the pair (X,Y), written [math]\displaystyle{ I(X,Y;Z) }[/math] (yellow, gray and cyan in the Venn diagram above). It may be greater than, equal to, or less than the sum of the two mutual informations, and this difference is the multivariate mutual information: [math]\displaystyle{ I(X;Y;Z)=I(Y;Z)+I(X;Z)-I(X,Y;Z) }[/math].

If the sum of the two mutual informations is greater than [math]\displaystyle{ I(X,Y;Z) }[/math], the multivariate mutual information is positive. In this case, some of the information about Z provided by knowing X is also provided by knowing Y, so their sum exceeds the information about Z from knowing both together. That is to say, there is "redundancy" in the information about Z provided by X and Y. If the sum of the two mutual informations is less than [math]\displaystyle{ I(X,Y;Z) }[/math], the multivariate mutual information is negative. In this case, knowing X and Y together provides more information about Z than the sum of the information yielded by knowing either one alone. That is to say, there is "synergy" in the information about Z provided by X and Y.[4]

The above explanation is intended to give an intuitive understanding of the multivariate mutual information, but it obscures the fact that the quantity is symmetric: it does not depend on which variable is the subject (e.g., Z in the above example) and which two are thought of as the sources of information. For three variables, Brenner et al. applied multivariate mutual information to neural coding and called its negativity "synergy" [5], and Watkinson et al. applied it to genetic expression [6].
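The three-variable identity can be checked numerically. The sketch below (hypothetical helper names, joint distributions as dictionaries from outcome tuples to probabilities) contrasts a fully redundant case, where X, Y and Z are copies of one fair bit, with a synergistic one, where Z is the XOR of two independent fair bits, and evaluates the identity with each variable in turn playing the role of Z.

```python
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def mi(pmf, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B) for tuples of variable positions a and b."""
    return entropy(pmf, a) + entropy(pmf, b) - entropy(pmf, a + b)


def three_way(pmf, x, y, z):
    """I(X;Y;Z) = I(Y;Z) + I(X;Z) - I(X,Y;Z): redundancy if > 0, synergy if < 0."""
    return mi(pmf, (y,), (z,)) + mi(pmf, (x,), (z,)) - mi(pmf, (x, y), (z,))


# Redundancy: X, Y and Z are copies of one fair bit.
copy = {(b, b, b): 0.5 for b in (0, 1)}
# Synergy: Z is the XOR of independent fair bits X and Y.
xor = {(x, y, x ^ y): 0.25 for x in (0, 1) for y in (0, 1)}

for name, pmf in (("copy", copy), ("xor", xor)):
    # Each variable takes a turn as the "subject" in the last position.
    values = [three_way(pmf, *order) for order in ((0, 1, 2), (1, 2, 0), (2, 0, 1))]
    print(name, values)
# copy [1.0, 1.0, 1.0]
# xor [-1.0, -1.0, -1.0]
```

The redundant case gives +1 bit and the synergistic case -1 bit for every ordering, illustrating the symmetry noted above.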

4.1. Example of Positive Multivariate Mutual Information (Redundancy)

Positive MMI is typical of common-cause structures. For example, clouds cause rain and also block the sun; therefore, the correlation between rain and darkness is partly accounted for by the presence of clouds, so conditioning on clouds reduces it: [math]\displaystyle{ I(\text{rain};\text{dark}|\text{cloud}) \leq I(\text{rain};\text{dark}) }[/math]. Since [math]\displaystyle{ I(\text{rain};\text{dark};\text{cloud}) = I(\text{rain};\text{dark}) - I(\text{rain};\text{dark}|\text{cloud}) }[/math], the result is a positive MMI whenever this inequality is strict.
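A toy numerical version of the cloud example, with probabilities that are purely hypothetical and chosen only for illustration. Rain and darkness are assumed to depend on each other only through clouds, so in this model clouds account for all of the correlation: I(rain;dark|cloud) is zero up to rounding, and the MMI equals I(rain;dark), which is positive. The helper names are illustrative.

```python
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def mi(pmf, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B)."""
    return entropy(pmf, a) + entropy(pmf, b) - entropy(pmf, a + b)


def cmi(pmf, a, b, c):
    """I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C)."""
    return (entropy(pmf, a + c) + entropy(pmf, b + c)
            - entropy(pmf, a + b + c) - entropy(pmf, c))


def bern(p, v):
    """Probability of outcome v in {0, 1} for a Bernoulli(p) variable."""
    return p if v else 1 - p


# Hypothetical parameters, for illustration only.
P_CLOUD = 0.4
P_RAIN = {1: 0.6, 0: 0.05}  # P(rain = 1 | cloud)
P_DARK = {1: 0.8, 0: 0.1}   # P(dark = 1 | cloud)

# Positions: 0 = rain, 1 = dark, 2 = cloud; rain and dark depend only on cloud.
pmf = {
    (r, d, c): bern(P_CLOUD, c) * bern(P_RAIN[c], r) * bern(P_DARK[c], d)
    for r in (0, 1) for d in (0, 1) for c in (0, 1)
}

i_rd = mi(pmf, (0,), (1,))
i_rd_given_c = cmi(pmf, (0,), (1,), (2,))
print(i_rd, i_rd_given_c, i_rd - i_rd_given_c)  # last value: I(rain;dark;cloud)
```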

4.2. Examples of Negative Multivariate Mutual Information (Synergy)

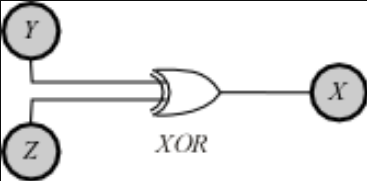

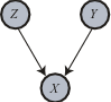

The case of negative MMI is infamously non-intuitive. A prototypical example of negative [math]\displaystyle{ I(X;Y;Z) }[/math] has [math]\displaystyle{ X }[/math] as the output of an XOR gate to which [math]\displaystyle{ Y }[/math] and [math]\displaystyle{ Z }[/math] are independent, uniformly distributed random inputs. In this case [math]\displaystyle{ I(Y;Z) }[/math] is zero, but [math]\displaystyle{ I(Y;Z|X) }[/math] is positive (1 bit), since once the output [math]\displaystyle{ X }[/math] is known, the value on input [math]\displaystyle{ Y }[/math] completely determines the value on input [math]\displaystyle{ Z }[/math]. Since [math]\displaystyle{ I(Y;Z|X)\gt I(Y;Z) }[/math], the result is a negative MMI, [math]\displaystyle{ I(X;Y;Z) = I(Y;Z) - I(Y;Z|X) = -1 }[/math] bit. It may seem that this example relies on a peculiar ordering of [math]\displaystyle{ X,Y,Z }[/math] to obtain the negative value, but the symmetry of the definition of [math]\displaystyle{ I(X;Y;Z) }[/math] means that the same value results regardless of which variable we consider as the interloper or conditioning variable. For example, input [math]\displaystyle{ Y }[/math] and output [math]\displaystyle{ X }[/math] are also independent until input [math]\displaystyle{ Z }[/math] is fixed, at which point they are totally dependent.
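The XOR example can be verified numerically. The sketch assumes fair, independent input bits and stores the joint distribution as a dictionary from outcome tuples (X, Y, Z) to probabilities; it also evaluates I(A;B) - I(A;B|C) for every ordering of the three variables to show that the choice of conditioning variable does not matter. Helper names are illustrative.

```python
from itertools import permutations
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def mi(pmf, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B)."""
    return entropy(pmf, a) + entropy(pmf, b) - entropy(pmf, a + b)


def cmi(pmf, a, b, c):
    """I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C)."""
    return (entropy(pmf, a + c) + entropy(pmf, b + c)
            - entropy(pmf, a + b + c) - entropy(pmf, c))


# Positions: 0 = X (XOR output), 1 = Y, 2 = Z (independent fair input bits).
xor = {(y ^ z, y, z): 0.25 for y in (0, 1) for z in (0, 1)}

print(mi(xor, (1,), (2,)))         # I(Y;Z)   -> 0.0
print(cmi(xor, (1,), (2,), (0,)))  # I(Y;Z|X) -> 1.0
# I(A;B) - I(A;B|C) is the same (-1 bit) whichever variable plays C.
for a, b, c in permutations(range(3)):
    print((a, b, c), mi(xor, (a,), (b,)) - cmi(xor, (a,), (b,), (c,)))
```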

This situation is an instance where fixing the common effect [math]\displaystyle{ X }[/math] of causes [math]\displaystyle{ Y }[/math] and [math]\displaystyle{ Z }[/math] induces a dependency among the causes that did not formerly exist. This behavior is colloquially referred to as explaining away and is thoroughly discussed in the Bayesian network literature (e.g., Pearl 1988). Pearl's example is auto diagnostics: a car's engine can fail to start [math]\displaystyle{ (X) }[/math] due either to a dead battery [math]\displaystyle{ (Y) }[/math] or to a blocked fuel pump [math]\displaystyle{ (Z) }[/math]. Ordinarily, we assume that battery death and fuel pump blockage are independent events, because of the essential modularity of such automotive systems. Thus, in the absence of other information, knowing whether or not the battery is dead gives us no information about whether or not the fuel pump is blocked. However, if we happen to know that the car fails to start (i.e., we fix the common effect [math]\displaystyle{ X }[/math]), this information induces a dependency between the two causes, battery death and fuel blockage. Thus, knowing that the car fails to start, if an inspection shows the battery to be in good health, we conclude that the fuel pump is blocked.

Battery death and fuel blockage are thus dependent, conditional on their common effect, car starting. The obvious directionality in the common-effect graph belies a deep informational symmetry: if conditioning on a common effect increases the dependency between its two parent causes, then conditioning on one of the causes must create the same increase in dependency between the second cause and the common effect. In Pearl's automotive example, if conditioning on car starts induces [math]\displaystyle{ -I(X;Y;Z) }[/math] bits of dependency between the two causes battery dead and fuel blocked (a positive amount, since the MMI is negative here), then conditioning on fuel blocked must induce [math]\displaystyle{ -I(X;Y;Z) }[/math] bits of dependency between battery dead and car starts. This may seem odd because battery dead and car starts are governed by the implication battery dead [math]\displaystyle{ \rightarrow }[/math] car doesn't start. However, these variables are still not totally correlated because the converse is not true. Conditioning on fuel blocked removes the major alternate cause of failure to start, which strengthens the converse relation and therefore the association between battery dead and car starts.
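The symmetry in Pearl's example can be checked with a toy model. The probabilities below are hypothetical, and the model assumes, as a simplification, that the car fails to start exactly when the battery is dead or the fuel pump is blocked. The sketch compares the dependency gained between the two causes by conditioning on the common effect with the dependency gained between battery dead and the car's failure by conditioning on fuel blocked.

```python
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def mi(pmf, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B)."""
    return entropy(pmf, a) + entropy(pmf, b) - entropy(pmf, a + b)


def cmi(pmf, a, b, c):
    """I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C)."""
    return (entropy(pmf, a + c) + entropy(pmf, b + c)
            - entropy(pmf, a + b + c) - entropy(pmf, c))


# Hypothetical fault probabilities; the two faults are independent.
P_BATTERY, P_FUEL = 0.1, 0.05

# Positions: 0 = X (car fails to start), 1 = Y (battery dead), 2 = Z (fuel blocked).
# Simplifying assumption: the car fails exactly when at least one fault is present.
pmf = {}
for y in (0, 1):
    for z in (0, 1):
        p = (P_BATTERY if y else 1 - P_BATTERY) * (P_FUEL if z else 1 - P_FUEL)
        pmf[(y | z, y, z)] = p

X, Y, Z = (0,), (1,), (2,)
gain_causes = cmi(pmf, Y, Z, X) - mi(pmf, Y, Z)  # conditioning on the common effect
gain_effect = cmi(pmf, X, Y, Z) - mi(pmf, X, Y)  # conditioning on fuel blocked
mmi = mi(pmf, X, Y) - cmi(pmf, X, Y, Z)          # I(X;Y;Z)
print(gain_causes, gain_effect, -mmi)  # the three values agree up to rounding
```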

4.3. Positivity for Markov Chains

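If three variables form a Markov chain [math]\displaystyle{ X\to Y \to Z }[/math], then [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Z }[/math] are conditionally independent given [math]\displaystyle{ Y }[/math], so [math]\displaystyle{ I(X;Y,Z)=H(X)-H(X|Y,Z)=H(X)-H(X|Y)=I(X;Y). }[/math] Combining this with the chain rule [math]\displaystyle{ I(X;Y,Z)=I(X;Z)+I(X;Y|Z) }[/math] gives [math]\displaystyle{ I(X;Y;Z) = I(X;Y)-I(X;Y|Z) = I(X;Y,Z)-I(X;Y|Z)= I(X;Z) \geq 0. }[/math]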
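A numerical check of the Markov-chain argument, assuming a hypothetical chain in which X is a biased bit and Y and Z are successive noisy copies produced by binary symmetric channels (the bias and crossover probabilities are arbitrary illustrative values, and the helper names are not from any library).

```python
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def mi(pmf, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B)."""
    return entropy(pmf, a) + entropy(pmf, b) - entropy(pmf, a + b)


def cmi(pmf, a, b, c):
    """I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C)."""
    return (entropy(pmf, a + c) + entropy(pmf, b + c)
            - entropy(pmf, a + b + c) - entropy(pmf, c))


def bsc(bit, flip, out):
    """P(out | bit) for a binary symmetric channel with crossover probability flip."""
    return flip if out != bit else 1 - flip


# Hypothetical chain X -> Y -> Z.
pmf = {
    (x, y, z): (0.3 if x else 0.7) * bsc(x, 0.1, y) * bsc(y, 0.2, z)
    for x in (0, 1) for y in (0, 1) for z in (0, 1)
}
X, Y, Z = (0,), (1,), (2,)
print(mi(pmf, X, Y + Z), mi(pmf, X, Y))                  # I(X;Y,Z) equals I(X;Y)
print(mi(pmf, X, Y) - cmi(pmf, X, Y, Z), mi(pmf, X, Z))  # I(X;Y;Z) equals I(X;Z)
```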

5. Bounds

The bounds for the 3-variable case are

- [math]\displaystyle{ -\min\ \{ I(X;Y|Z), I(Y;Z|X), I(X;Z|Y) \} \leq I(X;Y;Z) \leq \min\ \{ I(X;Y), I(Y;Z), I(X;Z) \} }[/math]
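The bounds can be spot-checked on random distributions. This sketch draws joint distributions over three small alphabets (sizes 3, 2 and 3, an arbitrary choice) with Python's standard `random` module and a fixed seed, and asserts the inequalities up to a floating-point tolerance; it is a sanity check, not a proof, and the helper names are illustrative.

```python
import random
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def mi(pmf, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B)."""
    return entropy(pmf, a) + entropy(pmf, b) - entropy(pmf, a + b)


def cmi(pmf, a, b, c):
    """I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C)."""
    return (entropy(pmf, a + c) + entropy(pmf, b + c)
            - entropy(pmf, a + b + c) - entropy(pmf, c))


def check_bounds(pmf, tol=1e-9):
    """True if the 3-variable lower and upper bounds on I(X;Y;Z) hold (within tol)."""
    X, Y, Z = (0,), (1,), (2,)
    mmi = mi(pmf, X, Y) - cmi(pmf, X, Y, Z)
    lower = -min(cmi(pmf, X, Y, Z), cmi(pmf, Y, Z, X), cmi(pmf, X, Z, Y))
    upper = min(mi(pmf, X, Y), mi(pmf, Y, Z), mi(pmf, X, Z))
    return lower - tol <= mmi <= upper + tol


rng = random.Random(0)  # fixed seed so the run is reproducible
outcomes = [(x, y, z) for x in range(3) for y in range(2) for z in range(3)]
for _ in range(1000):
    weights = [rng.random() for _ in outcomes]
    total = sum(weights)
    pmf = {o: w / total for o, w in zip(outcomes, weights)}
    assert check_bounds(pmf)
print("bounds held for 1000 random distributions")
```

The XOR distribution attains the lower bound (-1 bit, with every conditional mutual information equal to 1 bit), and three copies of a fair bit attain the upper bound (+1 bit).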

6. Difficulties

A complication is that this multivariate mutual information (as well as the interaction information) can be positive, negative, or zero, which makes this quantity difficult to interpret intuitively. In fact, for n random variables, there are [math]\displaystyle{ 2^n-1 }[/math] degrees of freedom for how they might be correlated in an information-theoretic sense, corresponding to each non-empty subset of these variables. These degrees of freedom are bounded by the various inequalities in information theory.
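One way to see the 2^n - 1 degrees of freedom is through the regions (atoms) of the information diagram: each non-empty subset S of the variables labels the region inside the sets of the variables in S and outside all the others, whose measure is the conditional MMI of the variables in S given the rest. The sketch below (hypothetical helper names, joint distribution as a dictionary from outcome tuples to probabilities) computes these measures for the XOR example; one of them is negative, which is the interpretive difficulty described above.

```python
from itertools import combinations
from math import log2


def entropy(pmf, idx):
    """Joint entropy, in bits, of the variables at positions idx."""
    marg = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def cond_mmi(pmf, idx, given):
    """Conditional MMI of the variables idx given the variables given (alternating sum)."""
    h_given = entropy(pmf, given)
    return -sum((-1) ** k * (entropy(pmf, T + given) - h_given)
                for k in range(1, len(idx) + 1)
                for T in combinations(idx, k))


def atoms(pmf, n):
    """Measure of each of the 2^n - 1 regions of the information diagram."""
    result = {}
    for k in range(1, n + 1):
        for S in combinations(range(n), k):
            rest = tuple(i for i in range(n) if i not in S)
            result[S] = cond_mmi(pmf, S, rest)  # inside every X_i, i in S; outside the rest
    return result


xor = {(x, y, x ^ y): 0.25 for x in (0, 1) for y in (0, 1)}
regions = atoms(xor, 3)
print(len(regions))  # 7
print({S: v for S, v in regions.items() if abs(v) > 1e-12})
# {(0, 1): 1.0, (0, 2): 1.0, (1, 2): 1.0, (0, 1, 2): -1.0}
print(sum(regions.values()), entropy(xor, (0, 1, 2)))  # 2.0 2.0
```

Summing the regions that lie inside a single variable's set recovers that variable's entropy, and summing all of them recovers the joint entropy.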

References

- Cerf, Nicolas J.; Adami, Chris (1998). "Information theory of quantum entanglement and measurement". Physica D 120 (1–2): 62–81. doi:10.1016/s0167-2789(98)00045-1. Bibcode: 1998PhyD..120...62C.

- Baudot, P.; Tapia, M.; Bennequin, D.; Goaillard, J.M. (2019). "Topological Information Data Analysis". Entropy 21 (9): 869. doi:10.3390/e21090869.

- Tapia, M.; Baudot, P.; Formizano-Treziny, C.; Dufour, M.; Goaillard, J.M. (2018). "Neurotransmitter identity and electrophysiological phenotype are genetically coupled in midbrain dopaminergic neurons". Sci. Rep. 8: 13637. doi:10.1038/s41598-018-31765-z.

- Timme, Nicholas; Alford, Wesley; Flecker, Benjamin; Beggs, John M. (2012). "Multivariate information measures: an experimentalist's perspective". arXiv:1111.6857. Bibcode: 2011arXiv1111.6857T.

- Brenner, N.; Strong, S.; Koberle, R.; Bialek, W. (2000). "Synergy in a Neural Code". Neural Comput. 12: 1531–1552. doi:10.1162/089976600300015259.

- Watkinson, J.; Liang, K.; Wang, X.; Zheng, T.; Anastassiou, D. (2009). "Inference of Regulatory Gene Interactions from Expression Data Using Three-Way Mutual Information". Chall. Syst. Biol. Ann. N. Y. Acad. Sci. 1158: 302–313. doi:10.1111/j.1749-6632.2008.03757.x.