Carr, M.; Kirkwood, R.; Petrovski, K. Practical Use of the (Observer)—Reporter—Interpreter—Manager—Expert ((O)RIME) Framework in Veterinary Clinical Teaching with a Clinical Example. Encyclopedia. Available online: https://encyclopedia.pub/entry/29805 (accessed on 07 February 2026).

This review explores the practical use of the (Observer)—Reporter—Interpreter—Manager—Expert ((O)RIME) model for assessing clinical reasoning skills and for providing effective feedback in the clinical teaching of veterinary learners. For descriptive purposes, we use the examples of bovine left displaced abomasum and the apparently anestric cow. Because the primary purpose of effective clinical teaching is to prepare graduates for a successful career in clinical practice, every effort should be made to ensure that veterinary learners reach at least Manager-level competency in clinical encounters by graduation. However, the literature on clinical teaching in veterinary medicine is relatively scant, and even less is available on assessment strategies and frameworks suited to the different settings that veterinary learners are exposed to during their education.

Keywords: animal science; clinical activities; clinical practice

The aim of clinical teaching in veterinary medicine is to prepare new entrants into the profession to meet all required day-1 veterinary graduate competencies. One of the cornerstones in the development of veterinary learners and their transition into practitioners is exposure to practice. Exposure to practice (experiential or work-based learning) aims to help veterinary learners develop veterinary medical and professional attributes within the specific clinical context of the work [1]. One difficulty with exposure to practice is assessing the progress of learners in their clinical (reasoning) competencies. This difficulty may grow as more veterinary schools opt for a partially or entirely distributed model of exposure to practice, because instructors in the distributed institutions may be inexperienced in assessing the progression of learners' clinical competencies. In this review, we describe one assessment framework that can be used for this purpose.

Development of clinical competencies in veterinary learners is heavily dependent on effective feedback [2][3][4][5][6][7]. To enhance the learner’s performance, feedback must be relevant, specific, timely, thorough, given in a ‘safe environment’, and offered in a constructive manner using descriptive rather than evaluative language. To provide effective feedback consistently, instructors should receive formal training in its delivery [2][8][9][10][11][12].

1.1. Basics of Assessment of Clinical Competency of Veterinary Learners

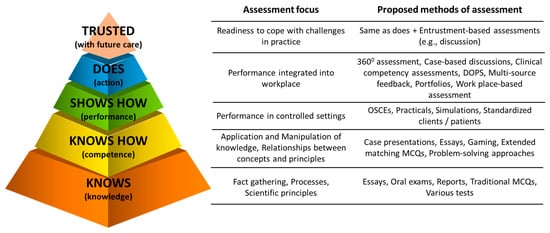

Assessment should be founded on the domains described in Miller’s pyramid and its extension (Figure 1) [13][14][15]. Miller’s pyramid gave rise to competency-based medical education [13][14]. A variety of frameworks for assessment of clinical competencies are available to medical instructors [14][16]. Detailed discussion of all frameworks is beyond the scope of this article. Readers are referred to reviews or other articles on assessment of clinical competencies of learners in medical fields (e.g., [16][17]) and/or on educational psychology related to veterinary learners [18].

Figure 1. Extended Miller’s pyramid of clinical competence of learners, which can help veterinary instructors frame their thinking when assessing learners or planning learning and assessment activities (modified from [13][14]). DOPS: Direct Observation of Procedural Skills. MCQ: Multiple Choice Question. OSCE: Objective Structured Clinical Examination.

Overall, frameworks for assessment of clinical competencies of learners have been classified as (1) analytical, which deconstruct clinical competence into individual components (e.g., attitudes, knowledge and skills) and assess each separately (e.g., the Accreditation Council for Graduate Medical Education (ACGME) framework); (2) synthetic, which attempt to view clinical competency comprehensively (as a synthesis of attitudes, knowledge and skills) by assessing clinical performance in real-world activities (e.g., the Entrustable Professional Activity (EPA) or RIME frameworks); (3) developmental, which relate to milestones in the progression towards clinical competence (e.g., the ACGME or RIME frameworks and the novice-to-expert approach); or (4) hybrid, which incorporate assessment of clinical competency from a mixture of the above (e.g., the CanMEDS framework, whose name derives from the old acronym for Canadian Medical Education Directives for Specialists) [16][18][19][20].

Despite a significant proportion of veterinary medical education occurring in clinical settings, the literature describing approaches to assessment of the clinical competency of veterinary learners is limited [18]. By contrast, the medical education literature offers a much larger body of evidence indicating that some of these methods work in various fields of medicine, including in-patient and out-patient care. Taking the One Health approach into consideration, methodologies used in human medicine should be applicable in veterinary medicine.

1.2. Common Traps in Assessment of Clinical Competency in Veterinary Learners

Common traps include (1) assessing theoretical knowledge rather than clinical competencies [13][14]; (2) assessing learners against each other rather than against set standards [21]; (3) the belief that a pass/fail or similar grading system negatively affects the clinical competency of learners compared with a tiered grading system [22][23]; (4) applying different weightings to attitudes, knowledge and skills [24]; (5) leniency bias (‘failure to fail’ or ‘halo’ effects) when using unstructured assessments of the clinical competency of learners [16][22][25]; and (6) occasional rather than continuous assessment of the learner’s performance [24]. A plethora of methods that can objectively assess the clinical competency of learners have emerged in the medical field in the past few decades [14][16][18], but very few have been adopted in veterinary medicine.

References

1. Carr, A.N.M.; Kirkwood, R.N.; Petrovski, K.R. Effective Veterinary Clinical Teaching in a Variety of Teaching Settings. Vet. Sci. 2022, 9, 17.

2. Modi, J.N.; Anshu; Gupta, P.; Singh, T. Teaching and assessing clinical reasoning skills. Indian Pediatrics 2015, 52, 787–794.

3. Lateef, F. Clinical reasoning: The core of medical education and practice. Int. J. Intern. Emerg. Med. 2018, 1, 1015.

4. Linn, A.; Khaw, C.; Kildea, H.; Tonkin, A. Clinical reasoning: A guide to improving teaching and practice. Aust. Fam. Physician 2012, 41, 18–20.

5. Dreifuerst, K.T. Using debriefing for meaningful learning to foster development of clinical reasoning in simulation. J. Nurs. Educ. 2012, 51, 326–333.

6. Carr, A.N.; Kirkwood, R.N.; Petrovski, K.R. Using the five-microskills method in veterinary medicine clinical teaching. Vet. Sci. 2021, 8, 89.

7. McKimm, J. Giving effective feedback. Br. J. Hosp. Med. 2009, 70, 158–161.

8. Steinert, Y.; Mann, K.V. Faculty Development: Principles and Practices. J. Vet. Med. Educ. 2006, 33, 317–324.

9. Houston, T.K.; Ferenchick, G.S.; Clark, J.M.; Bowen, J.L.; Branch, W.T.; Alguire, P.; Esham, R.H.; Clayton, C.P.; Kern, D.E. Faculty development needs. J. Gen. Intern. Med. 2004, 19, 375–379.

10. Hashizume, C.T.; Hecker, K.G.; Myhre, D.L.; Bailey, J.V.; Lockyer, J.M. Supporting veterinary preceptors in a distributed model of education: A faculty development needs assessment. J. Vet. Med. Educ. 2016, 43, 104–110.

11. Lane, I.F.; Strand, E. Clinical veterinary education: Insights from faculty and strategies for professional development in clinical teaching. J. Vet. Med. Educ. 2008, 35, 397–406.

12. Wilkinson, S.T.; Couldry, R.; Phillips, H.; Buck, B. Preceptor development: Providing effective feedback. Hosp. Pharm. 2013, 48, 26–32.

13. Miller, G.E. The assessment of clinical skills/competence/performance. Acad. Med. 1990, 65, S63–S67.

14. ten Cate, O.; Carraccio, C.; Damodaran, A.; Gofton, W.; Hamstra, S.J.; Hart, D.; Richardson, D.; Ross, S.; Schultz, K.; Warm, E.; et al. Entrustment Decision Making: Extending Miller’s Pyramid. Acad. Med. 2020, 96, 199–204.

15. Al-Eraky, M.; Marei, H. A fresh look at Miller’s pyramid: Assessment at the ‘Is’ and ‘Do’ levels. Med. Educ. 2016, 50, 1253–1257.

16. Pangaro, L.; Ten Cate, O. Frameworks for learner assessment in medicine: AMEE Guide No. 78. Med. Teach. 2013, 35, e1197–e1210.

17. Hemmer, P.A.; Papp, K.K.; Mechaber, A.J.; Durning, S.J. Evaluation, grading, and use of the RIME vocabulary on internal medicine clerkships: Results of a national survey and comparison to other clinical clerkships. Teach. Learn. Med. 2008, 20, 118–126.

18. Smith, C.S. A developmental approach to evaluating competence in clinical reasoning. J. Vet. Med. Educ. 2008, 35, 375–381.

19. Pangaro, L.N. A shared professional framework for anatomy and clinical clerkships. Clin. Anat. 2006, 19, 419–428.

20. Papp, K.K.; Huang, G.C.; Clabo, L.M.L.; Delva, D.; Fischer, M.; Konopasek, L.; Schwartzstein, R.M.; Gusic, M. Milestones of critical thinking: A developmental model for medicine and nursing. Acad. Med. 2014, 89, 715–720.

21. Sepdham, D.; Julka, M.; Hofmann, L.; Dobbie, A. Using the RIME model for learner assessment and feedback. Fam. Med.-Kans. City 2007, 39, 161.

22. Fazio, S.B.; Torre, D.M.; DeFer, T.M. Grading Practices and Distributions Across Internal Medicine Clerkships. Teach. Learn. Med. 2016, 28, 286–292.

23. Spring, L.; Robillard, D.; Gehlbach, L.; Moore Simas, T.A. Impact of pass/fail grading on medical students’ well-being and academic outcomes. Med. Educ. 2011, 45, 867–877.

24. Pangaro, L. A new vocabulary and other innovations for improving descriptive in-training evaluations. Acad. Med. 1999, 74, 1203–1207.

25. Battistone, M.J.; Pendelton, B.; Milne, C.; Battistone, M.L.; Sande, M.A.; Hemmer, P.A.; Shomaker, T.S. Global descriptive evaluations are more responsive than global numeric ratings in detecting students’ progress during the inpatient portion of an internal medicine clerkship. Acad. Med. 2001, 76, S105–S107.

Subjects: Veterinary Sciences

Online Date: 18 Oct 2022
