| Version | Summary | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|---|
| 1 | | David Rhoades | -- | 3052 | 2022-10-18 02:50:47 |
| 2 | | Jason Zhu | + 1 word(s) | 3053 | 2022-10-18 03:25:56 |
| 3 | | Jason Zhu | Meta information modification | 3053 | 2022-10-21 04:10:20 |


The observation that major earthquakes are generally preceded by an increase in the seismicity rate on a timescale from months to decades was embedded in the “Every Earthquake a Precursor According to Scale” (EEPAS) model. EEPAS has since been successfully applied to regional real-world and synthetic earthquake catalogues to forecast future earthquake occurrence rates with time horizons up to a few decades. When combined with aftershock models, its forecasting performance is improved for short time horizons. As a result, EEPAS has been included as the medium-term component in public earthquake forecasts in New Zealand. EEPAS has been modified to improve its forecasting performance despite data limitations. One modification is to compensate for missing precursory earthquakes. Precursory earthquakes can be missing because of the time lag between the end of a catalogue and the time at which a forecast applies, or because of the limited lead time from the start of the catalogue to a target earthquake. An observed space-time trade-off in precursory seismicity, which affects the EEPAS scaling parameters for area and time, can also be used to improve forecasting performance. Systematic analysis of EEPAS performance on synthetic catalogues suggests that regional variations in EEPAS parameters can be explained by regional variations in the long-term earthquake rate.

1. Introduction

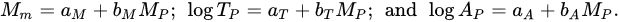

2. Empirical Foundations—The Ψ-Phenomenon

3. Combinations and Extensions to Accommodate Aftershocks

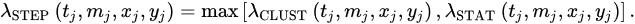

3.1. STEP-EEPAS Mixture

STEP and EEPAS were linearly combined to enhance short-term earthquake forecasting in California [50]. Using the Advanced National Seismic System (ANSS) catalogue of California over the period 1984–2004, the optimal mixture model for forecasting earthquakes with M ≥ 5.0 was found to be a convex linear combination consisting of 0.42 of EEPAS and 0.58 of STEP. This mixture gave an average probability gain of more than 2 (i.e., information gain per earthquake, ln(probability gain), of more than 0.7) relative to each of the individual models when forecasting for the next 24 h. The contribution from EEPAS can be weighted depending on magnitude to enhance the performance at high target magnitudes. The STEP-EEPAS mixture improves short-term forecasting by allowing for the aftershocks of earthquakes that have already occurred.
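The arithmetic behind the mixture and the gain measures can be illustrated with a minimal Python sketch. Only the weights 0.42 and 0.58 come from the text; the function names, the example rate values, and the log-likelihood figures are hypothetical and chosen purely for illustration, not taken from the cited study.

```python
import math

# Mixture weights reported in the text for M >= 5.0 in California.
W_EEPAS = 0.42
W_STEP = 0.58


def mixture_rate(rate_eepas, rate_step, w_eepas=W_EEPAS):
    """Convex linear combination of two forecast rate densities.

    The weights are w_eepas and (1 - w_eepas), so they always sum to 1.
    """
    if not 0.0 <= w_eepas <= 1.0:
        raise ValueError("w_eepas must lie in [0, 1]")
    return w_eepas * rate_eepas + (1.0 - w_eepas) * rate_step


def information_gain_per_earthquake(log_lik_model, log_lik_reference, n_events):
    """Difference in log-likelihood between two models, per target earthquake."""
    return (log_lik_model - log_lik_reference) / n_events


def probability_gain(info_gain):
    """Probability gain is the exponential of the information gain per earthquake."""
    return math.exp(info_gain)


# Hypothetical rate densities in one space-time-magnitude cell (illustrative only).
combined = mixture_rate(rate_eepas=2.0e-4, rate_step=1.0e-4)

# Hypothetical log-likelihoods over 10 target earthquakes (illustrative only).
gain = information_gain_per_earthquake(-90.0, -100.0, n_events=10)
print(combined, gain, probability_gain(gain))
```

In this sketch the mixture is applied cell by cell to the two models' rate densities, and the probability gain is recovered from the information gain by exponentiation, matching the ln(probability gain) relation quoted above.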

3.2. EEPAS with Aftershocks Model

3.3. Janus Model: EEPAS-ETAS Mixture

3.4. Hybrid Forecasting in New Zealand

4. Challenges in EEPAS Forecasting

4.1. Understanding the Physics behind the Ψ-Phenomenon

4.2. Incorporating Dependence on the Long-Term Earthquake Rate

4.3. A Three-Dimensional Version of EEPAS?

4.4. Target-Earthquake Oriented Compensation for Missing Precursors

4.5. Accommodating Variable Incompleteness of the Earthquake Catalogue

4.6. Optimal Use of the Space-Time Trade-Off

4.7. Development of a Global Forecasting Model

References

- Vere-Jones, D.; Ben-Zion, Y.; Zúñiga, R. Statistical Seismology. Pure Appl. Geophys. 2005, 162, 1023–1026.

- Vere-Jones, D. Foundations of Statistical Seismology. Pure Appl. Geophys. 2010, 167, 645–653.

- Omori, F. On the aftershocks of earthquakes. J. Coll. Sci. Imp. Univ. Tokyo 1894, 7, 111–200.

- Utsu, T.; Ogata, Y. The centenary of the Omori formula for a decay law of aftershock activity. J. Phys. Earth 1995, 43, 1–33.

- Gutenberg, B.; Richter, C.F. Frequency of earthquakes in California. Bull. Seismol. Soc. Am. 1944, 34, 185–188.

- Lindman, M.; Lund, B.; Roberts, R.; Jonsdottir, K. Physics of the Omori law: Inferences from interevent time distributions and pore pressure diffusion modeling. Tectonophysics 2006, 424, 209–222.

- Hainzl, S.; Marsan, D. Dependence of the Omori-Utsu law parameters on main shock magnitude: Observations and modeling. J. Geophys. Res. Solid Earth 2008, 113, B10309.

- Guglielmi, A.V. Interpretation of the Omori law. Izv. Phys. Solid Earth 2016, 52, 785–786.

- Faraoni, V. Lagrangian formulation of Omori’s law and analogy with the cosmic Big Rip. Eur. Phys. J. C 2020, 80, 445.

- Mogi, K. Magnitude-Frequency Relation for Elastic Shocks Accompanying Fractures of Various Materials and Some Related problems in Earthquakes (2nd Paper). Bull. Earthq. Res. Inst. Univ. Tokyo 1963, 40, 831–853.

- Schorlemmer, D.; Wiemer, S.; Wyss, M. Variations in earthquake-size distribution across different stress regimes. Nature 2005, 437, 539–542.

- Amitrano, D. Variability in the power-law distributions of rupture events. Eur. Phys. J. Spec. Top. 2012, 205, 199–215.

- Varotsos, P.A.; Sarlis, N.V.; Skordas, E.S.; Christopoulos, S.-R.G.; Lazaridou-Varotsos, M.S. Identifying the occurrence time of an impending mainshock: A very recent case. Earthq. Sci. 2015, 28, 215–222.

- Evison, F.; Rhoades, D. Precursory scale increase and long-term seismogenesis in California and Northern Mexico. Ann. Geophys. 2002, 45, 479–495.

- Evison, F.F.; Rhoades, D.A. Demarcation and Scaling of Long-term Seismogenesis. Pure Appl. Geophys. 2004, 161, 21–45.

- Rhoades, D.A.; Evison, F.F. Long-range earthquake forecasting with every earthquake a precursor according to scale. Pure Appl. Geophys. 2004, 161, 47–72.

- Rhoades, D.A.; Evison, F.F. Test of the EEPAS Forecasting Model on the Japan earthquake catalogue. Pure Appl. Geophys. 2005, 162, 1271–1290.

- Rhoades, D.A.; Evison, F.F. The EEPAS forecasting model and the probability of moderate-to-large earthquakes in central Japan. Tectonophysics 2006, 417, 119–130.

- Console, R.; Rhoades, D.A.; Murru, M.; Evison, F.F.; Papadimitriou, E.E.; Karakostas, V.G. Comparative performance of time-invariant, long-range and short-range forecasting models on the earthquake catalogue of Greece. J. Geophys. Res. Solid Earth 2006, 111, B09304.

- Rhoades, D.A. Application of the EEPAS model to forecasting earthquakes of moderate magnitude in southern California. Seismol. Res. Lett. 2007, 78, 110–115.

- Rhoades, D.A. Application of a long-range forecasting model to earthquakes in the Japan mainland testing region. Earth Planets Space 2011, 63, 197–206.

- Rhoades, D.A.; Somerville, P.G.; Somerville, F.; de Oliveira, D.; Thio, H.K. Effect of tectonic setting on the fit and performance of a long-range earthquake forecasting model. Res. Geophys. 2012, 2, 13–23.

- Zechar, J.D.; Schorlemmer, D.; Liukis, M.; Yu, J.; Euchner, F.; Maechling, P.J.; Jordan, T.H. The Collaboratory for the Study of Earthquake Predictability perspective on computational earthquake science. Concurr. Comput. Pract. Exp. 2010, 22, 1836–1847.

- Gerstenberger, M.C.; Rhoades, D.A. New Zealand earthquake forecast testing centre. In Seismogenesis and Earthquake Forecasting: The Frank Evison Volume II; Springer: Berlin/Heidelberg, Germany, 2010; pp. 23–38.

- Schneider, M.; Clements, R.; Rhoades, D.; Schorlemmer, D. Likelihood- and residual-based evaluation of medium-term earthquake forecast models for California. Geophys. J. Int. 2014, 198, 1307–1318.

- Rhoades, D.A.; Christophersen, A.; Gerstenberger, M.C.; Liukis, M.; Silva, F.; Marzocchi, W.; Werner, M.J.; Jordan, T.H. Highlights from the first ten years of the New Zealand Earthquake Forecast Testing Center. Seismol. Res. Lett. 2018, 89, 1229–1237.

- Gerstenberger, M.; Rhoades, D.; Litchfield, N.; Van Dissen, R.; Abbot, E.; Goded, T.; Christophersen, A.; Bannister, S.; Barrell, D.; Bruce, Z.; et al. The Kaikoura Seismic Hazard Model. N. Z. J. Geol. Geophys. 2022. in revision.

- Gerstenberger, M.; McVerry, G.; Rhoades, D.; Stirling, M. Seismic Hazard Modeling for the Recovery of Christchurch. Earthq. Spectra 2014, 30, 17–29.

- Rhoades, D.A.; Liukis, M.; Christophersen, A.; Gerstenberger, M.C. Retrospective tests of hybrid operational earthquake forecasting models for Canterbury. Geophys. J. Int. 2015, 204, 440–456.

- Evison, F.F. The precursory earthquake swarm. Phys. Earth Planet. Inter. 1977, 15, 19–23.

- Evison, F.F. Precursory seismic sequences in New Zealand. N. Z. J. Geol. Geophys. 1977, 20, 129–141.

- Evison, F.F. Fluctuations of seismicity before major earthquakes. Nature 1977, 266, 710–712.

- Evison, F. Multiple earthquake events at moderate-to-large magnitudes in Japan. J. Phys. Earth 1981, 29, 327–339.

- Evison, F. Generalised Precursory Swarm Hypothesis. J. Phys. Earth 1982, 30, 155–170.

- Rikitake, T. Earthquake precursors. Bull. Seismol. Soc. Am. 1975, 65, 1133–1162.

- Rikitake, T. Classification of earthquake precursors. Tectonophysics 1979, 54, 293–309.

- Rikitake, T. Earthquake Forecasting and Warning; D. Reidel: Dordrecht, The Netherlands; Center for Academic Publications Japan: Tokyo, Japan, 1982.

- Rhoades, D.A.; Evison, F.F. Long-range earthquake forecasting based on a single predictor. Geophys. J. Int. 1979, 59, 43–56.

- Evison, F.F.; Rhoades, D.A. The precursory earthquake swarm in New Zealand: Hypothesis tests. N. Z. J. Geol. Geophys. 1993, 36, 51–60.

- Rhoades, D.A.; Evison, F.F. Long-range earthquake forecasting based on a single predictor with clustering. Geophys. J. Int. 1993, 113, 371–381.

- Evison, F.F.; Rhoades, D.A. The precursory earthquake swarm in New Zealand: Hypothesis tests. N. Z. J. Geol. Geophys. 1997, 40, 537–547.

- Evison, F.F.; Rhoades, D.A. Long-term seismogenic process for major earthquakes in subduction zones. Phys. Earth Planet. Inter. 1998, 108, 185–199.

- Evison, F.F.; Rhoades, D.A. The precursory earthquake swarm and the inferred precursory quarm. N. Z. J. Geol. Geophys. 1999, 42, 229–236.

- Evison, F.F.; Rhoades, D.A. The precursory earthquake swarm in Japan: Hypothesis test. Earth Planets Space 1999, 51, 1267–1277.

- Evison, F.; Rhoades, D. The precursory earthquake swarm in Greece. Ann. Geophys. 2000, 43, 991–1009.

- Evison, F.; Rhoades, D. Model of long-term seismogenesis. Ann. Geophys. 2001, 44, 81–93.

- Ogata, Y. Statistical model for standard seismicity and detection of anomalies by residual analysis. Tectonophysics 1989, 169, 159–174.

- Ogata, Y. Space-Time Point-Process Models for Earthquake Occurrences. Ann. Inst. Stat. Math. 1998, 50, 379–402.

- Gerstenberger, M.C.; Wiemer, S.; Jones, L.M.; Reasenberg, P.A. Real-time forecasts of tomorrow’s earthquakes in California. Nature 2005, 435, 328–331.

- Rhoades, D.A.; Gerstenberger, M.C. Mixture models for improved short-term earthquake forecasting. Bull. Seismol. Soc. Am. 2009, 99, 636–646.

- Rhoades, D.A. Long-range earthquake forecasting allowing for aftershocks. Geophys. J. Int. 2009, 178, 244–256.

- Gutenberg, B.; Richter, C.F. Seismicity of the Earth and Associated Phenomena; Princeton University Press: Princeton, NJ, USA, 1954.

- Utsu, T. A statistical study on the occurrence of aftershocks. Geophys. Mag. 1961, 30, 521–605.

- Rhoades, D.A. Mixture Models for Improved Earthquake Forecasting with Short-to-Medium Time Horizons. Bull. Seismol. Soc. Am. 2013, 103, 2203–2215.

- Gledhill, K.; Ristau, J.; Reyners, M.; Fry, B.; Holden, C. The Darfield (Canterbury) earthquake of September 2010: Preliminary seismological report. Bull. N. Z. Soc. Earthq. Eng. 2010, 43, 215–221.

- Kaiser, A.; Holden, C.; Beavan, J.; Beetham, D.; Benites, R.; Celentano, A.; Collett, D.; Cousins, J.; Cubrinovski, M.; Dellow, G.; et al. The Mw 6.2 Christchurch earthquake of February 2011: Preliminary report. N. Z. J. Geol. Geophys. 2012, 55, 67–90.

- Yan, W.; Galloway, W. Rethinking Resilience, Adaptation and Transformation in a Time of Change; Springer International Publishing: Berlin, Germany, 2017.

- Stirling, M.; McVerry, G.H.; Gerstenberger, M.C.; Litchfield, N.J.; Van Dissen, R.J.; Berryman, K.R.; Barnes, P.; Wallace, L.M.; Villamor, P.; Langridge, R.M.; et al. National Seismic Hazard Model for New Zealand: 2010 Update. Bull. Seismol. Soc. Am. 2012, 102, 1514–1542.

- Gerstenberger, M.C.; Rhoades, D.A.; McVerry, G.H. A hybrid time-dependent probabilistic seismic-hazard model for Canterbury, New Zealand. Seismol. Res. Lett. 2016, 87, 1311–1318.

- Meletti, C.; Galadini, F.; Valensise, G.; Stucchi, M.; Basili, R.; Barba, S.; Vannucci, G.; Boschi, E. A seismic source zone model for the seismic hazard assessment of the Italian territory. Tectonophysics 2008, 450, 85–108.

- Kaiser, A.; Balfour, N.; Fry, B.; Holden, C.; Litchfield, N.; Gerstenberger, M.; D’Anastasio, E.; Horspool, N.; McVerry, G.; Ristau, J.; et al. The 2016 Kaikōura, New Zealand, earthquake: Preliminary seismological report. Seismol. Res. Lett. 2017, 88, 727–739.

- Rhoades, D.A.; Christophersen, A. Time-varying probabilities of earthquake occurrence in central New Zealand based on the EEPAS model compensated for time-lag. Geophys. J. Int. 2019, 219, 417–429.

- Rastin, S.J.; Rhoades, D.A.; Rollins, C.; Gerstenberger, M.C.; Christophersen, A.; Thingbaijam, K.K.S. Spatial Distribution of Earthquake Occurrence for the New Zealand National Seismic Hazard Model Revision, GNS Science Report; SR2021-51; GNS Science: Lower Hutt, New Zealand, 2022.

- Rastin, S.J.; Rhoades, D.A.; Christophersen, A. Space–time trade-off of precursory seismicity in New Zealand and California revealed by a medium-term earthquake forecasting model. Appl. Sci. 2021, 11, 10215.

- Imoto, M.; Rhoades, D.A. Seismicity models of moderate earthquakes in Kanto, Japan utilizing multiple predictive parameters. Pure Appl. Geophys. 2010, 167, 831–843.

- Christophersen, A.; Rhoades, D.A.; Colella, H.V. Precursory seismicity in regions of low strain rate: Insights from a physics-based earthquake simulator. Geophys. J. Int. 2017, 209, 1513–1525.

- Rhoades, D.A.; Robinson, R.; Gerstenberger, M.C. Long-range predictability in physics-based synthetic earthquake catalogues. Geophys. J. Int. 2011, 185, 1037–1048.

- Richards-Dinger, K.; Dieterich, J.H. RSQSim Earthquake Simulator. Seismol. Res. Lett. 2012, 83, 983–990.

- Robinson, R.; Benites, R. Upgrading a synthetic seismicity model for more realistic fault ruptures. Geophys. Res. Lett. 2001, 28, 1843–1846.

- Scholz, C.H. The Mechanics of Earthquakes and Faulting, 3rd ed.; Cambridge University Press: Cambridge, UK, 2019.

- Rastin, S.J.; Rhoades, D.A.; Rollins, C.; Gerstenberger, M.C. How Useful Are Strain Rates for Estimating the Long-Term Spatial Distribution of Earthquakes? Appl. Sci. 2022, 12, 6804.

- Eberhart-Phillips, D.; Reyners, M.; Bannister, S.; Chadwick, M.; Ellis, S. Establishing a versatile 3-D seismic velocity model for New Zealand. Seismol. Res. Lett. 2010, 81, 992–1000.

- Havskov, J.; Ottemoller, L. Routine Data Processing in Earthquake Seismology: With Sample Data, Exercises and Software; Springer: Dordrecht, The Netherlands, 2010.