Urquhart, L.; Wodehouse, A. Generative Algorithms in Human-Centered Product Development. Encyclopedia. Available online: https://encyclopedia.pub/entry/22653 (accessed on 07 February 2026).


Algorithmic design harnesses the power of computation to generate a form based on input data and rules. In the product design setting, a major advantage afforded by this approach is the ability to automate the customization of design variations in accordance with the requirements of individual users. The background knowledge, intuition, and critical judgement of the designer are still essential but are focused on different areas of the design process.

generative design

algorithmic design

human-centered design

1. Introduction

Algorithmic design harnesses the power of computation to explore a greater diversity of concepts around a particular design goal in the development process. The background knowledge, intuition, and critical judgement of the designer are still essential but are focused on different areas of the design process. This includes developing the basic abstraction of the problem, designing algorithms for the basic form and constraints, selecting promising avenues of exploration, and refining the problem parameters. These activities require the same creativity, intuition, and judgement normally associated with innovative design work. Writing the algorithms and code, however, requires logical thinking and software skills that may not currently be familiar to designers.

2. Application to Product Development Process

2.1. Micro and Macro Level Design

To create a human-centered approach that can handle complex information, such as the anatomical requirements for wearable devices, the researchers demarcate the design problem so that the flexibility of the algorithmic approach remains manageable. Programmatically, from the required functional performance through to the granular geometry of components, the algorithmic approach can be split into two broad categories: macro-level and micro-level. Each level deals with a distinct set of design problems. The macro level processes the overall architecture. Within a human-centered approach, this entails processing the user’s biomechanical data into a target system architecture, including a spatial embedding of this connected structure around the user’s target anatomy. It is the macro-level algorithm that relates the abstract definitions of the architecture to this spatial embedding, which involves arranging the components and their network of connectivity around the geometry and biomechanics of the individual product user. The macro-level graph representation supplies all the necessary boundary conditions to the sub-problem tackled by the micro-level algorithm, along with its control volume, that is, the definition of the space within which the element under consideration can exist.

This allows the researchers to pass from a high-level design problem at the macro level to a range of micro-level algorithms. A complete description can then be derived for a sub-problem such as generating the form for a part of the enclosure that must, e.g., allow bending around a particular axis under a certain force or remain entirely rigid under normal operation. With this description in place, the macro-micro split can be related more concretely to human-centered design thinking. The macro-level design relates to the breakdown of the broad ergonomics, i.e., the geometry that specifically relates to the human bodies likely to interact with the product. This could range from a very specific group to something intended for broad use within a population.
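The macro-to-micro hand-off described above can be sketched as a small data structure. This is a minimal illustration rather than the authors' implementation: the class names, the axis-aligned box used as a control volume, the element names, and the dimensions are all assumptions made for the example (Python 3.9 or later).

```python
from dataclasses import dataclass, field


@dataclass
class ControlVolume:
    """Space in which an element may exist (assumed here to be an axis-aligned box, in mm)."""
    min_corner: tuple[float, float, float]
    max_corner: tuple[float, float, float]

    def contains(self, point):
        return all(lo <= p <= hi
                   for lo, p, hi in zip(self.min_corner, point, self.max_corner))


@dataclass
class MicroProblem:
    """Everything a micro-level algorithm receives for one element."""
    element: str
    control_volume: ControlVolume
    boundary_conditions: dict[str, str] = field(default_factory=dict)


@dataclass
class MacroGraph:
    """Macro-level architecture: allocated volumes plus connectivity between elements."""
    volumes: dict[str, ControlVolume] = field(default_factory=dict)
    edges: list[tuple[str, str, str]] = field(default_factory=list)  # (a, b, condition)

    def micro_problem(self, element):
        # Collect the conditions imposed by every neighbour of this element.
        conditions = {}
        for a, b, condition in self.edges:
            if element == a:
                conditions[b] = condition
            elif element == b:
                conditions[a] = condition
        return MicroProblem(element, self.volumes[element], conditions)


# Hypothetical two-element architecture.
macro = MacroGraph()
macro.volumes["wrist_strap"] = ControlVolume((0, 0, 0), (60, 20, 5))
macro.volumes["housing"] = ControlVolume((0, 20, 0), (60, 80, 25))
macro.edges.append(("wrist_strap", "housing", "bends about the wrist axis"))

problem = macro.micro_problem("wrist_strap")
print(problem.boundary_conditions)
```

The point of the structure is that a micro-level routine only ever sees a `MicroProblem`, so it can be developed and swapped without knowledge of the rest of the architecture.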

Specification and initial ideation take place at the beginning of the generative design process and establish the rough boundaries of the design. These rough boundaries eliminate a vast number of possible but unsuitable configurations, while establishing a basis from which to explore possible and suitable ones. The specifications are set through the conventional means of research, and the potential solutions are explored in relation to these specifications on the assumption that they are not optimal until all of the human-factors and design ontology intelligence has been input into the algorithm.
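As a rough illustration of how specification limits prune the solution space before any detailed generation happens, the sketch below enumerates a small grid of candidate configurations and keeps only those inside the limits. The parameters, the limits, and the mass model are invented for the example and are not taken from the source.

```python
from itertools import product

# Hypothetical specification limits; real values would come from user research.
SPEC = {"min_strap_width_mm": 15, "max_mass_g": 170}


def estimated_mass_g(strap_width_mm, wall_mm):
    """Placeholder mass model, invented for illustration only."""
    return 4 * strap_width_mm + 40 * wall_mm


def suitable(strap_width_mm, wall_mm):
    """True when a configuration lies inside the rough boundaries of the specification."""
    return (strap_width_mm >= SPEC["min_strap_width_mm"]
            and estimated_mass_g(strap_width_mm, wall_mm) <= SPEC["max_mass_g"])


# Every combination of strap width (mm) and wall thickness (mm).
candidates = list(product([10, 15, 20, 25], [1.5, 2.0, 3.0]))
shortlist = [c for c in candidates if suitable(*c)]
print(f"{len(shortlist)} of {len(candidates)} configurations remain")
```

The shortlist is only a starting region for exploration; as the text notes, none of these configurations should be treated as optimal at this stage.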

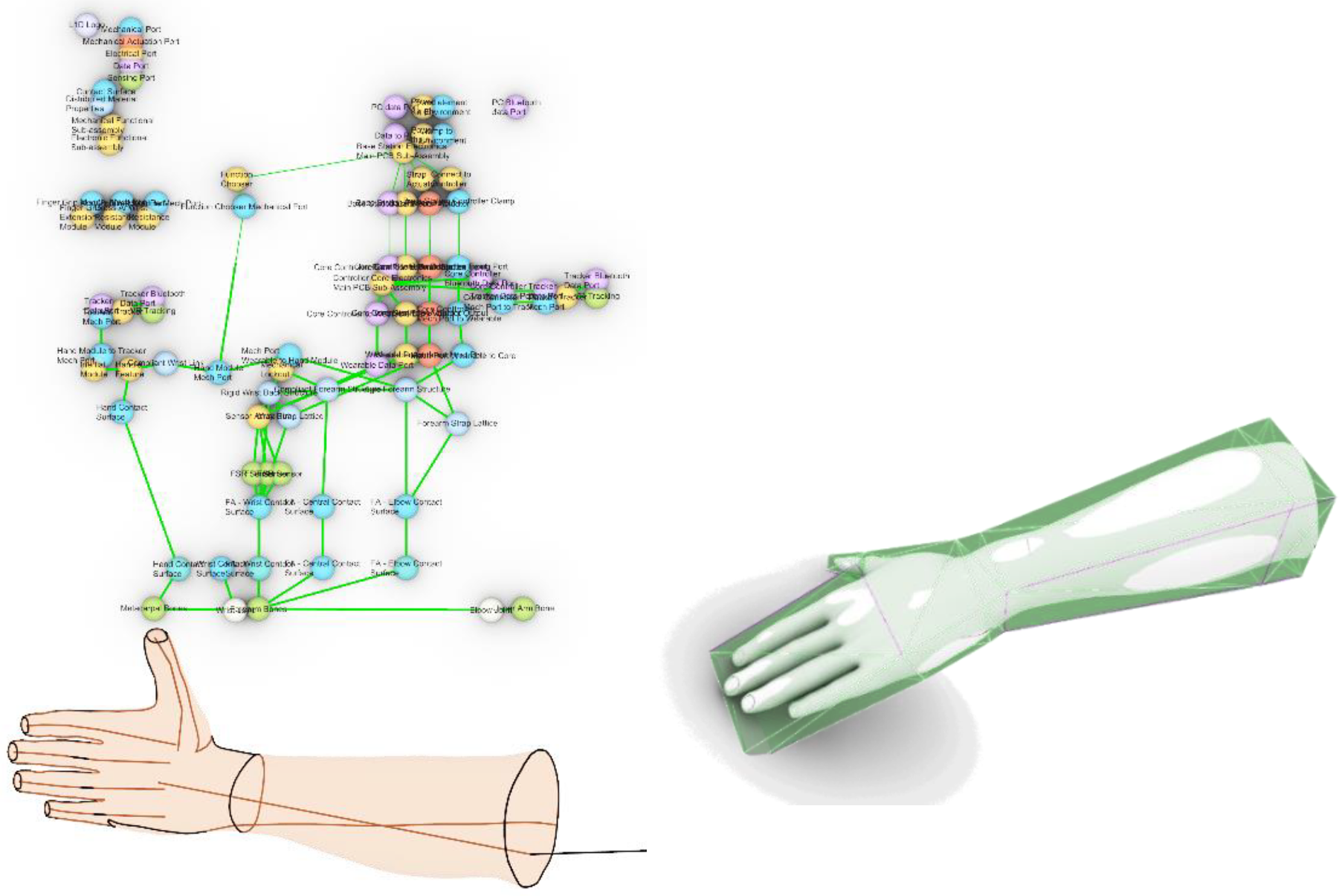

2.2. Conceptualization Stage: Graphing and SBF Approach

For computational approaches, algorithms are necessary to govern the overall topology of the structure in relation to key ergonomic data, and a series of additional rules was created to generate detailed structure based on target functionality. Within the context of generative design, functional definition is a key factor for a successful algorithmic design outcome [1]. It creates the necessary constraints and allows the algorithm to work towards the desired goal within the defined boundaries. The functional definition used by generative design algorithms has a specific ontological status, which influences how it is implemented programmatically. Critically, this relates to an understanding of abstract classes in a Structure-Behavior-Function (SBF) design ontology [2], which describe the spatial boundaries and the functional behaviors that might exist within those boundaries. The behavior aspect is composed of a series of states of the product system with transitions between them. Each transition has a function that implements it, together with any input and output variables. Each function may in turn be composed of a series of behaviors. In this way, functions may be decomposed with ever-increasing granularity. How does this help with form generation and the algorithmic approach? Formats such as GraphML, which facilitate a machine-readable representation of product structure, are a useful starting point. They make explicit the connectivity network of the sub-assemblies and components within a system. This is one method by which the system architecture can be described in terms of the relationships between the form, behaviors, and functions of a given product, providing a useful architecture for establishing a human-centered framework for form generation.
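To make the GraphML idea concrete, the following sketch writes a tiny connectivity network as a GraphML document using only the Python standard library. The node names, the `kind` attribute, and the graph id are hypothetical; the element and attribute names (`graphml`, `key`, `graph`, `node`, `edge`, `data`) follow the GraphML format itself.

```python
import xml.etree.ElementTree as ET

GRAPHML_NS = "http://graphml.graphdrawing.org/xmlns"


def to_graphml(nodes, edges):
    """Serialise {node_id: kind} and [(source, target)] pairs as a GraphML string."""
    ET.register_namespace("", GRAPHML_NS)  # emit GraphML as the default namespace

    def tag(name):
        return f"{{{GRAPHML_NS}}}{name}"

    root = ET.Element(tag("graphml"))
    # Declare one string attribute ("kind") that can be attached to nodes.
    ET.SubElement(root, tag("key"), {
        "id": "d0", "for": "node", "attr.name": "kind", "attr.type": "string"})
    graph = ET.SubElement(root, tag("graph"),
                          {"id": "controller", "edgedefault": "undirected"})
    for node_id, kind in nodes.items():
        node = ET.SubElement(graph, tag("node"), {"id": node_id})
        ET.SubElement(node, tag("data"), {"key": "d0"}).text = kind
    for source, target in edges:
        ET.SubElement(graph, tag("edge"), {"source": source, "target": target})
    return ET.tostring(root, encoding="unicode")


# Hypothetical sub-assemblies of a wearable controller and their connectivity.
xml_text = to_graphml(
    nodes={"housing": "sub-assembly", "wrist_strap": "component", "trigger": "component"},
    edges=[("housing", "wrist_strap"), ("housing", "trigger")],
)
print(xml_text)
```

Because the output is plain XML, the same file can be read back by any GraphML-aware graph library or by a CAD-side script.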

Referring to related functions allows checks to be carried out on the viability of the current design implementation against its intended overall function. Having this kind of machine-readable representation of the design intent and implementation allows it to be parsed and interrogated in different ways. By developing a clear framework that tracks from the highest-level goals and functions of the product system down to the component and material level of the product’s implementation and fabrication, the researchers obtain a workflow that is robust to change.
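One way to picture such a check is a recursive walk over the function decomposition that reports any leaf function with no component assigned to it. The classes below are a simplified, assumed rendering of the states, transitions, and functions described earlier, and the function and component names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Function:
    name: str
    component: Optional[str] = None          # structure that implements a leaf function
    behaviors: list["Behavior"] = field(default_factory=list)


@dataclass
class Transition:
    source_state: str
    target_state: str
    function: Function                       # the function that implements this transition


@dataclass
class Behavior:
    name: str
    transitions: list[Transition] = field(default_factory=list)


def unimplemented(function):
    """Return the names of leaf functions that no component implements."""
    if not function.behaviors:
        return [] if function.component else [function.name]
    missing = []
    for behavior in function.behaviors:
        for transition in behavior.transitions:
            missing.extend(unimplemented(transition.function))
    return missing


# Hypothetical decomposition of a wearable controller's top-level function.
grip = Function("resist strap slip", component="wrist_strap")
flex = Function("allow wrist flexion")       # nothing assigned yet
worn = Behavior("worn", [
    Transition("relaxed", "flexed", flex),
    Transition("loose", "secured", grip),
])
top = Function("track hand in VR", behaviors=[worn])

print(unimplemented(top))  # ['allow wrist flexion']
```

Running the check after every change to the design graph is one way a workflow could stay robust to change in the sense described above.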

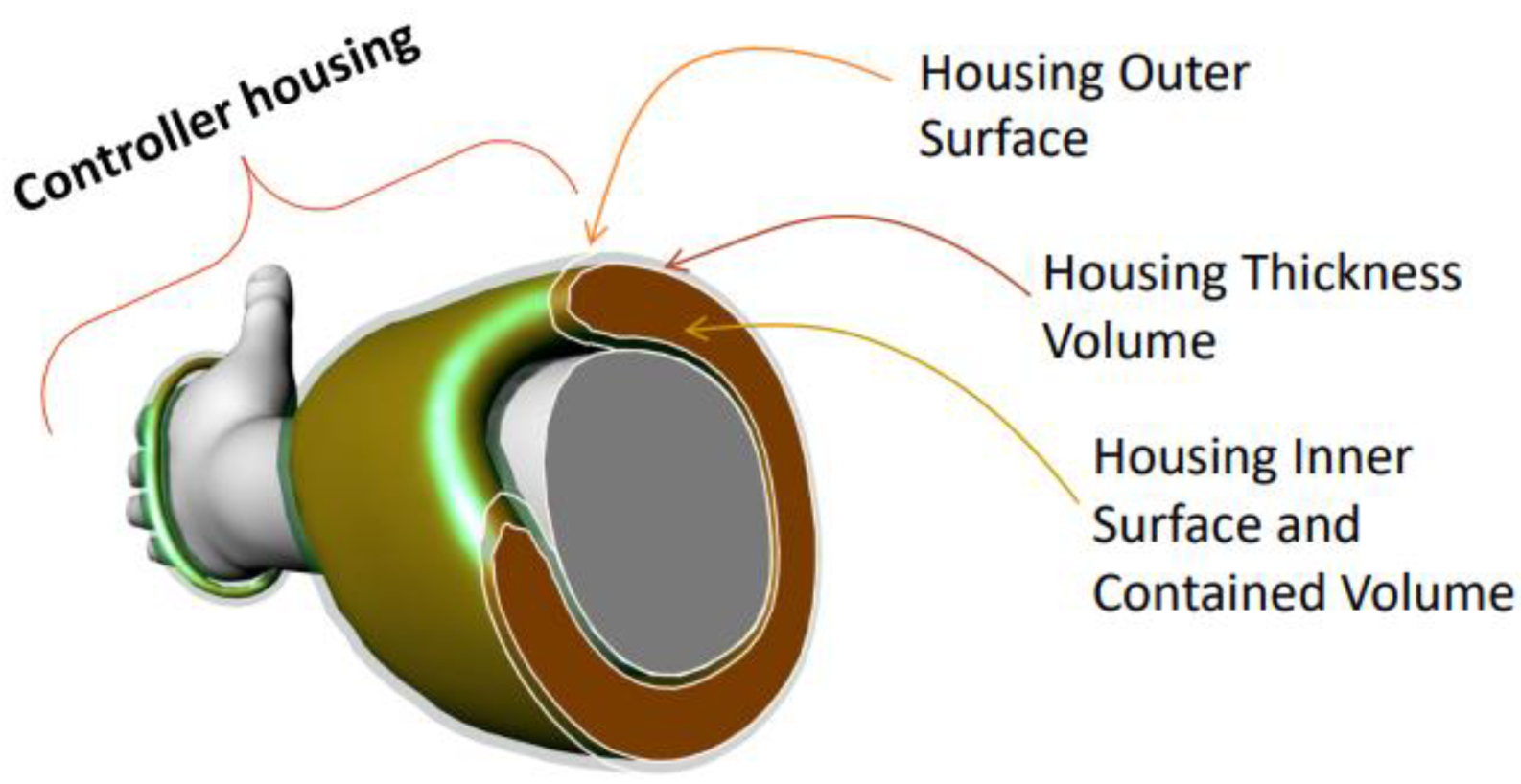

In applying this to the design problem of bespoke wearable controllers, the boundaries of the system can be grouped into abstract classes that have specific behaviors and functions. Figure 1 shows this in more detail, where a hypothetical controller design is parsed into distinct sections or classes. The volume bounded between the inner and outer surfaces is the “thickness volume”; alongside it, the class holds the inner surface, the volume that surface contains, and the outer surface. These different abstractions of the hypothetical form elements can be used to establish the placement of componentry and the interactions between sub-assemblies. By creating class definitions whose member variables include 3D surfaces and volumes, the researchers can move from an abstract representation of the design intent to one that can exist in a 3D CAD environment.

Figure 1. Diagrammatic relationship between the abstract volumes and surfaces of the controller housing class.
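A class of this kind might look like the sketch below. To keep it runnable without a CAD kernel, each surface is idealised as a cylinder, which is purely an assumption for illustration; in a real workflow the members would hold CAD surface and volume objects. The class names and dimensions are likewise hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CylinderSurface:
    """Stand-in for a CAD surface: a cylinder of given radius and length (mm)."""
    radius: float
    length: float

    def enclosed_volume(self) -> float:
        return math.pi * self.radius ** 2 * self.length


@dataclass(frozen=True)
class ControllerHousing:
    """Abstract housing class holding its surfaces and exposing its derived volumes."""
    inner_surface: CylinderSurface
    outer_surface: CylinderSurface

    @property
    def contained_volume(self) -> float:
        """Space enclosed by the inner surface."""
        return self.inner_surface.enclosed_volume()

    @property
    def thickness_volume(self) -> float:
        """Volume bounded between the inner and outer surfaces."""
        return self.outer_surface.enclosed_volume() - self.inner_surface.enclosed_volume()


# Hypothetical housing: 30 mm inner radius, 3 mm wall, 50 mm long.
housing = ControllerHousing(CylinderSurface(30.0, 50.0), CylinderSurface(33.0, 50.0))
print(round(housing.thickness_volume), "mm^3 between inner and outer surfaces")
```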

2.3. Embodiment Stage: Macro-Level SBF Solutions

With the intention of making the controller design highly attuned to ergonomics, the workflow incorporates 3D scanners to acquire data on user anatomy. This direct input is what differentiates the methodology as human-centered: it draws directly on discrete ergonomic properties to inform the design. Although this example uses ergonomic data, other forms of input could be used instead or in addition.

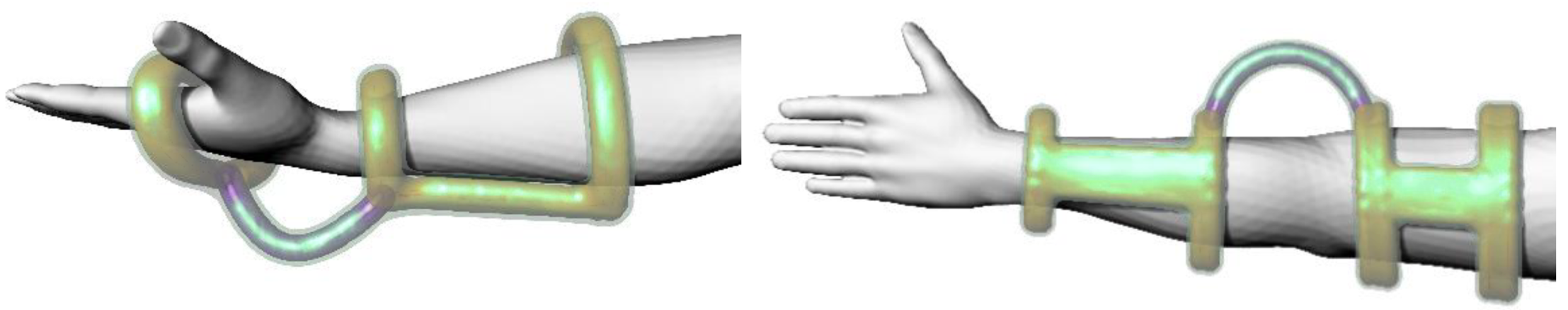

Making use of 3D scanning and derived knowledge about the location of the user’s joints, the researchers can then map the abstract SBF graph onto a spatial arrangement around the user’s arm. Figure 2 shows some hypothetical arrangements informed by the abstract class structure previously shown in Figure 1. Once in place, the researchers can use this spatially embedded graph to partition the space around the user’s arm and allocate the space available to each element or sub-assembly. Crucially, the degrees of freedom, spatial bounds, and mechanical performance required of any deformable, compliant elements will be specified at this stage. Supplementary information such as the user’s weight, age, and gender can be used to complement the ergonomic information and help to fill in any unknown information. For the purposes of this research, a standard arm mesh available for Rhino was utilized in order to demonstrate the approach.

Figure 2. Form generations developed from abstract class definitions and ergonomic inputs.
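The source does not state how the space is partitioned. One simple possibility, shown here only as an assumed stand-in, is to assign each sampled point around the arm to its nearest embedded graph node, giving a Voronoi-style allocation. Node names and coordinates are hypothetical.

```python
import math


def partition(points, nodes):
    """Assign each sample point to the nearest embedded node (Voronoi-style split)."""
    regions = {name: [] for name in nodes}
    for point in points:
        nearest = min(nodes, key=lambda name: math.dist(point, nodes[name]))
        regions[nearest].append(point)
    return regions


# Hypothetical node positions along the forearm axis (mm) and a few sample points.
nodes = {"wrist": (0.0, 0.0, 0.0), "forearm": (0.0, 0.0, 100.0)}
samples = [(10.0, 0.0, 20.0), (0.0, 10.0, 90.0), (5.0, 5.0, 40.0)]

regions = partition(samples, nodes)
for name, pts in regions.items():
    print(name, pts)
```

In practice the sample points would come from the scan mesh, and the allocation rule could weight nodes differently according to the space each sub-assembly requires.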

The researchers can then anchor the abstract graph structure to its relevant points on the user’s arm using the scan mesh data. The initial results are shown in Figure 3 (left), where each node in the graph is given a bounding sphere of the same radius. In this way, a physical structure for the product system, valid in the adjacency and connectivity of its functional components, can emerge from the form-finding process regardless of the particular anthropometry of the intended user. Whilst this spatial embedding of the structure may still seem abstract, the connectivity of the elements to the bone structure of the arm and its manifold mesh surface provides multiple points of reference from which CAD geometry can be generated through a variety of means.

Figure 3. (Left) The structure graph network. (Right) Network loaded directly into the CAD environment adjacent to the arm mesh. The structure graph is arranged around the arm mesh.
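A minimal sketch of the anchoring step follows, assuming that each node is snapped to its nearest scan-mesh vertex and that equal-radius bounding spheres are then tested for overlap. Both rules are assumptions for illustration, and the vertex coordinates are made up; the actual form-finding process is not specified at this level of detail in the source.

```python
import math
from itertools import combinations


def anchor(nodes, vertices):
    """Snap each graph node to the nearest vertex of the scan mesh."""
    return {name: min(vertices, key=lambda v: math.dist(v, target))
            for name, target in nodes.items()}


def overlapping(anchored, radius):
    """Pairs of nodes whose equal-radius bounding spheres intersect."""
    return [(a, b) for a, b in combinations(sorted(anchored), 2)
            if math.dist(anchored[a], anchored[b]) < 2 * radius]


# Hypothetical mesh vertices along an arm (mm) and approximate node targets.
vertices = [(0, 0, 0), (0, 0, 30), (0, 0, 60), (0, 0, 90)]
targets = {"wrist": (2, 1, -3), "mid": (1, 0, 33), "elbow": (0, 2, 85)}

anchored = anchor(targets, vertices)
print(anchored)
print(overlapping(anchored, radius=20))  # [('mid', 'wrist')]
```

An overlap report like this is one way to flag where neighbouring elements compete for space before any CAD geometry is generated.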

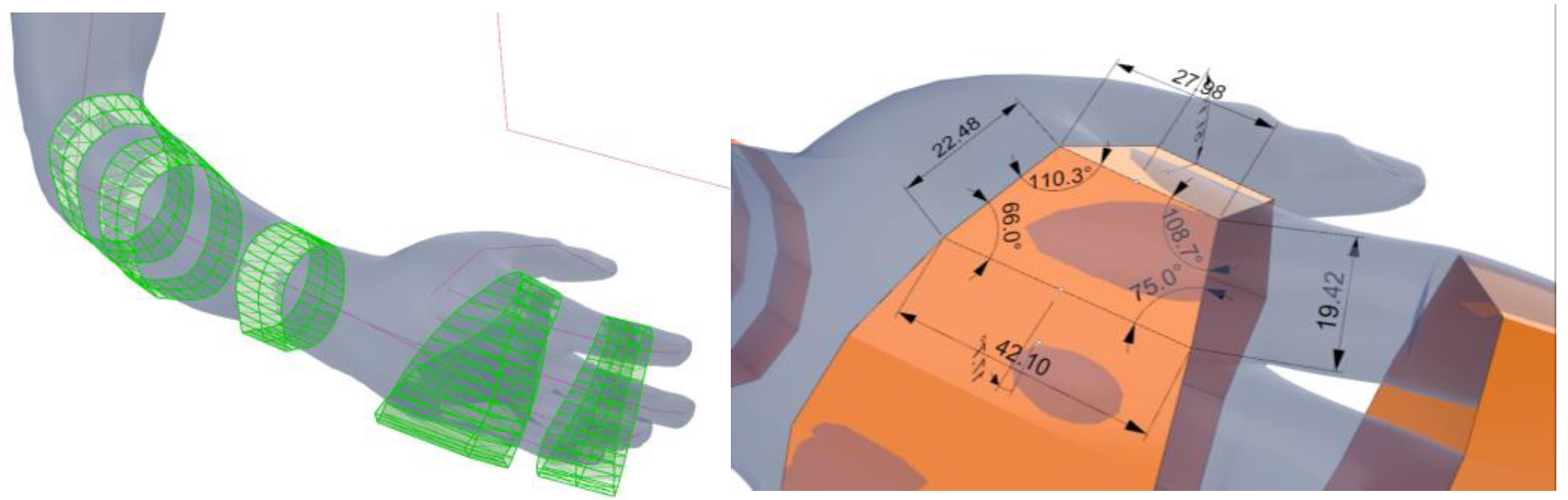

The next step here is to take the scan data as an input and integrate it with the data structure representing the joints and orientation of the user’s bones. By filtering the named nodes in the abstract graph representation, the number and connectivity of features which contact the user’s limb can be extracted and used to drive the component placement step. Knowing the location for the custom elements that will interact directly with the user, their relationship to the user’s anthropometry, the biomechanics, and the context for these elements within the product assembly, CAD models can be generated with respect to this human-factors data. The interactive physics engine “Kangaroo” inside Grasshopper was utilized to undertake a series of form-finding processes to arrive at a layout geometry for the bespoke features, taking the form of straps that can wrap around a specific user’s forearm and wrist, presented as a series of surfaces with unique dimensions (Figure 4). Another script is used to “unroll” the surfaces from around the scan mesh. The output of this stage of the algorithmic approach is a series of straps composed of planar facets. The face angle between each facet and its neighbors will also be unique with respect to the ergonomic profile.

Figure 4. Unique dimensions of generated strap geometry.
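The face angle between neighbouring planar facets can be computed from their normals. The sketch below takes the angle between unit normals, so coplanar facets give 0°; this convention, the function names, and the example strap are assumptions and not the Grasshopper script used in the project. Facets are assumed to share a consistent vertex winding.

```python
import math


def unit_normal(facet):
    """Unit normal of a planar facet, from its first three vertices."""
    (ax, ay, az), (bx, by, bz), (cx, cy, cz) = facet[:3]
    u = (bx - ax, by - ay, bz - az)
    v = (cx - ax, cy - ay, cz - az)
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)


def fold_angles(strap):
    """Angle in degrees between each facet and the next one along the strap."""
    normals = [unit_normal(f) for f in strap]
    angles = []
    for n1, n2 in zip(normals, normals[1:]):
        cosine = sum(a * b for a, b in zip(n1, n2))
        cosine = max(-1.0, min(1.0, cosine))  # guard against rounding error
        angles.append(math.degrees(math.acos(cosine)))
    return angles


# Hypothetical strap: two coplanar facets followed by one folded through a right angle.
strap = [
    [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)],
    [(1, 0, 0), (2, 0, 0), (2, 1, 0), (1, 1, 0)],
    [(2, 0, 0), (2, 0, 1), (2, 1, 1), (2, 1, 0)],
]
angles = fold_angles(strap)
print(angles)  # approximately [0.0, 90.0]
```

For a strap generated from a scan mesh, this list of angles is what makes each strap unique to the wearer's ergonomic profile.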

2.4. Realization Stage: Generation of Device Concepts

In essence, the graphing of the relationships between function and structure facilitates the identification of critical points of interaction around the anthropometric data. From here, the crucial sub-problems that support the creation of local mechanical features, attuned around the ergonomic constraints, can then be defined. The mechanism pictured in Figure 5 has been created as a fully parametric model in Grasshopper for PRIME-VR2 and is a clear “proof-of-concept” for the human-factors based design methodology the researchers are exploring here. The image on the left shows how the spatial relationships of the device have been informed by a user’s scan mesh, with the image on the right showing an elaborated design which includes componentry and more complex mechanical elements worked into the design after the spatial definitions have been set.

Figure 5. An initial arrangement of a linkage resulting in a rotation around a virtual axis aligned to the user’s wrist.
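To show what rotation around a virtual axis means geometrically, the sketch below rotates a point about an arbitrary axis in space using Rodrigues' rotation formula. It illustrates the motion only and is not the linkage model itself; the axis placement and the point are hypothetical.

```python
import math


def rotate_about_axis(point, axis_point, axis_dir, angle_deg):
    """Rotate `point` about the line through `axis_point` along `axis_dir` (Rodrigues' formula)."""
    norm = math.sqrt(sum(c * c for c in axis_dir))
    k = [c / norm for c in axis_dir]                   # unit axis direction
    v = [p - a for p, a in zip(point, axis_point)]     # point relative to the axis
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    k_cross_v = [k[1] * v[2] - k[2] * v[1],
                 k[2] * v[0] - k[0] * v[2],
                 k[0] * v[1] - k[1] * v[0]]
    k_dot_v = sum(a * b for a, b in zip(k, v))
    rotated = [v[i] * cos_t + k_cross_v[i] * sin_t + k[i] * k_dot_v * (1 - cos_t)
               for i in range(3)]
    return tuple(r + a for r, a in zip(rotated, axis_point))


# Hypothetical wrist axis along z through the origin; a linkage point 10 mm from it.
moved = rotate_about_axis((10.0, 0.0, 5.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 90.0)
print(moved)  # close to (0.0, 10.0, 5.0)
```

The axis is virtual in the sense that no physical pivot needs to sit on it; in a user-specific design its position and direction would be taken from the joint locations derived from the scan.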

2.5. Implications for Design Practice

This case study shows how generative design development can be attuned around a specific human-centered workflow integrating ergonomic data and domain-specific intelligence regarding the functional goals of the object in question. This raises the question of how these methods can influence wider design practice and whether wide adoption is feasible in the current climate.

Part of what has been discussed herein is the significant conceptual shift in design thinking required to implement generative design development work effectively. The researchers presented the problem of design domains and the relations between elements. A system such as this is not widely used within traditionally understood design methodologies such as Pugh’s [3], which essentially assume that the design solution is arrived at by a slow process of problem solving. Generative design requires a different philosophy, whereby design solutions are optimized around a defined set of constraints and, critically, the designer relinquishes direct command over the solution space. The design process is, as a result, entirely reconfigured and incorporates the modern tools of CAD, CAM, and digital optimization strategies as part of the initial conditions for successful design work.

Immediately, this has implications for the delivery of design teaching: should the pedagogic models be expanded to include CAD visual programming workflows such as Grasshopper, for example? Some researchers have argued for this very thing (see [4][5]), as it may enhance the possibilities open to new designers and push the boundaries of innovation. Certainly, as this work has argued, HCD can benefit hugely from generative methods at both the macro-structural and the micro-detailed design levels. As other researchers such as Abby Paterson [6] have demonstrated, generative tools have huge applications in bespoke products for medicine, and combined with good interface design and software control, the tools could be widely used and broadly intelligible.

This in turn raises questions of adoption feasibility: how do such systems integrate with the design practices that are already entrenched within industry today? As noted, the problem is often “cultural”, where particular design approaches are viewed as superior simply because of their widespread use. A case study such as the PRIME-VR2 controller, though, may be a convincing exemplar of how generative methods could enhance innovation in HCD and perhaps broaden conversations about how industrial designers approach complex human-factors problems.

References

1. Bendsøe, M.P.; Sigmund, O. Material interpolation schemes in topology optimization. Arch. Appl. Mech. 1999, 69, 635–654.

2. Gero, J.S.; Kannengiesser, U. The Function-Behaviour-Structure ontology of design. In An Anthology of Theories and Models of Design; Chakrabarti, A., Blessing, L., Eds.; Springer: Berlin/Heidelberg, Germany, 2013.

3. Pugh, S. Engineering design—unscrambling the research issues. J. Eng. Des. 1990, 1, 65–72.

4. Celani, G.; Vaz, C.E. CAD Scripting and Visual Programming Languages for Implementing Computational Design Concepts: A Comparison from a Pedagogical Point of View. Int. J. Archit. Comput. 2012, 10, 121–137.

5. Goldstein, M.H.; Sommer, J.; Buswell, N.T.; Li, X.; Sha, Z.; Demirel, H.O. Uncovering Generative Design Rationale in the Undergraduate Classroom. In Proceedings of the 2021 IEEE Frontiers in Education Conference (FIE), Lincoln, NE, USA, 13–16 October 2021.

6. Paterson, A. Digitisation of the splinting process: Exploration and evaluation of a computer aided design approach to support additive manufacture. Ph.D. Thesis, Loughborough University, Loughborough, UK, 2013. Available online: https://hdl.handle.net/2134/13021 (accessed on 25 March 2022).


Subjects: Ergonomics


Update Date: 06 May 2022
