| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Suresh Neethirajan | -- | 1563 | 2022-04-25 05:43:17 |
| 2 | Jessie Wu | Meta information modification | 1563 | 2022-04-25 08:01:31 |


Farm animals, numbering over 70 billion worldwide, are increasingly managed in large-scale, intensive farms. As public awareness and scientific evidence grow that farm animals experience suffering and affective states such as fear, frustration and distress, there is an urgent need for efficient and accurate methods of monitoring their welfare. At present, there are no scientifically validated ‘benchmarks’ for quantifying transient emotional (affective) states in farm animals, and no established measures of good welfare; there are only indicators of poor welfare, such as injury, pain and fear. Conventional approaches to monitoring livestock welfare are time-consuming, interrupt farming processes and rely on subjective judgments. Biometric sensor data analysed with artificial intelligence is an emerging way to monitor livestock unobtrusively, but its potential for quantifying affective states has yet to be realized, and ground-breaking applications of it have yet to emerge.

1. Modelling Farm Animal Affective State and Behaviour Using Multimodal Sensor Fusion

2. Classification and Annotation of Affective States and Behavioural Events

3. Sensor Network Fusion Protocols and Instrumentation Framework

4. Building Predictive Models of Affective State and Behaviour

5. Challenges in the Quantification and Validation of Performance Models for Affective States Measurement

5.1. Sensor Durability

5.2. Low Sensitivity of the Model at Detecting Affective and Behavioural States

5.3. Lack of Correlation between Sensor Data and Biochemical Reference Values

5.4. Limiting the Numbers of Animals Used in the Experiments
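Item 5.2 above refers to a model's sensitivity, i.e. the share of true occurrences of a state that the model actually detects: TP / (TP + FN), also called recall. The sketch below computes it per class from annotated (reference) labels and model predictions. It assumes each observation window carries exactly one label; the labels `calm` and `fear` and the data are hypothetical and for illustration only, not taken from the entry.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def per_class_sensitivity(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """Return sensitivity (recall) = TP / (TP + FN) for each class in y_true.

    y_true: reference (annotated) labels, one per observation window.
    y_pred: model predictions for the same windows, in the same order.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    # TP + FN per class: every window whose reference label is that class.
    positives = Counter(y_true)
    # TP per class: windows where the prediction matches the reference label.
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    # Counter returns 0 for classes that were never correctly predicted.
    return {label: hits[label] / n for label, n in positives.items()}


if __name__ == "__main__":
    # Hypothetical annotations and predictions for eight observation windows.
    annotated = ["calm", "calm", "fear", "fear", "fear", "calm", "fear", "calm"]
    predicted = ["calm", "calm", "calm", "fear", "calm", "calm", "fear", "fear"]
    print(per_class_sensitivity(annotated, predicted))
    # {'calm': 0.75, 'fear': 0.5}
```

In this toy example, overall accuracy is 5/8 (62.5%), yet only half of the `fear` windows are detected. This illustrates how a model can look acceptable in aggregate while still missing many instances of one affective state, which is why sensitivity is usually reported per class.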

References

- Neethirajan, S. The role of sensors, big data and machine learning in modern animal farming. Sens. Bio-Sens. Res. 2020, 29, 100367.

- Neethirajan, S.; Kemp, B. Digital Livestock Farming. Sens. Bio-Sens. Res. 2021, 32, 100408.

- Neethirajan, S.; Reimert, I.; Kemp, B. Measuring Farm Animal Emotions—Sensor-Based Approaches. Sensors 2021, 21, 553.

- Baciadonna, L.; Briefer, E.F.; McElligott, A.G. Investigation of reward quality-related behaviour as a tool to assess emotions. Appl. Anim. Behav. Sci. 2020, 225, 104968.

- Relić, R.; Sossidou, E.; Xexaki, A.; Perić, L.; Božičković, I.; Đukić-Stojčić, M. Behavioral and health problems of poultry related to rearing systems. Ank. Üniversitesi Vet. Fakültesi Derg. 2019, 66, 423–428.

- Verdon, M.; Rault, J.-L. 8—Aggression in Group Housed Sows and Fattening Pigs; Špinka, M., Ed.; Woodhead Publishing: Sawston, UK, 2018; pp. 235–260.

- Ujita, A.; El Faro, L.; Vicentini, R.R.; Lima, M.L.P.; Fernandes, L.D.O.; Oliveira, A.P.; Veroneze, R.; Negrão, J.A. Effect of positive tactile stimulation and prepartum milking routine training on behavior, cortisol and oxytocin in milking, milk composition, and milk yield in Gyr cows in early lactation. Appl. Anim. Behav. Sci. 2021, 234, 105205.

- Lürzel, S.; Bückendorf, L.; Waiblinger, S.; Rault, J.-L. Salivary oxytocin in pigs, cattle, and goats during positive human-animal interactions. Psychoneuroendocrinology 2020, 115, 104636.

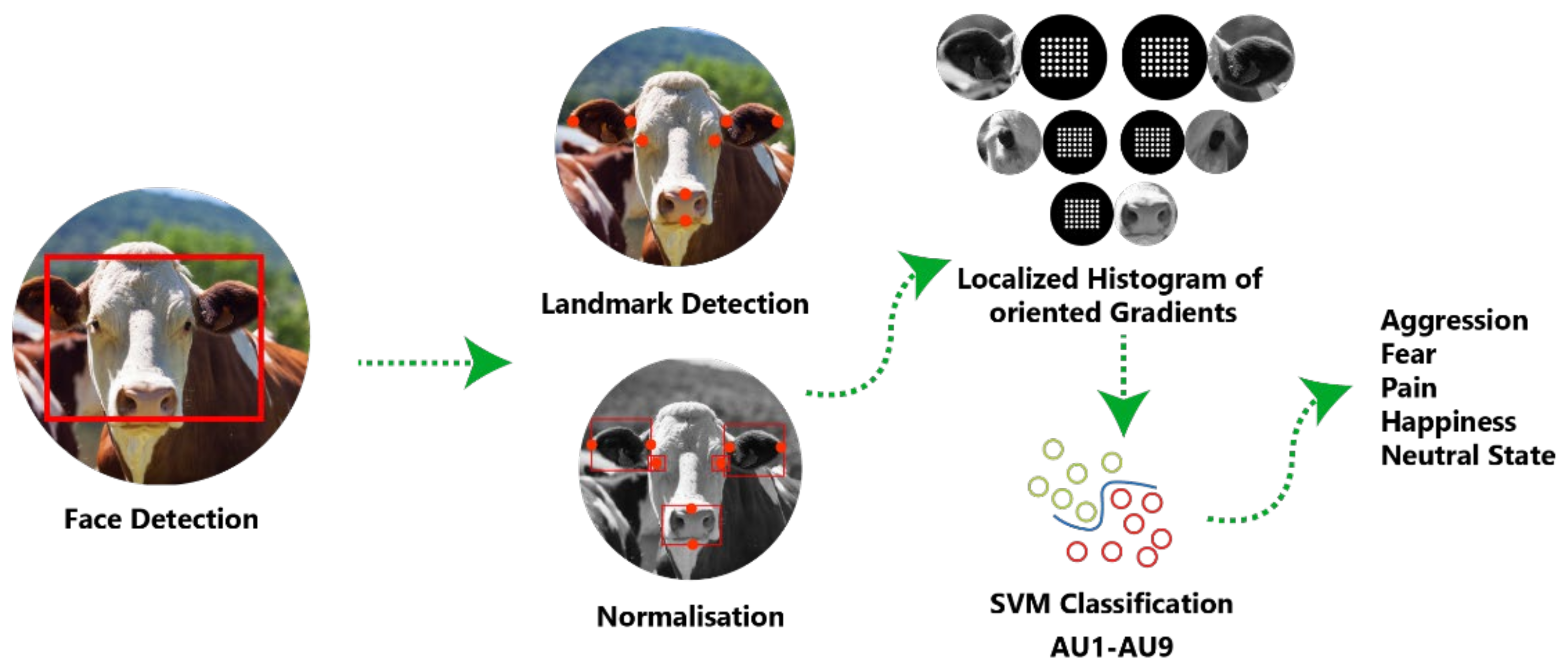

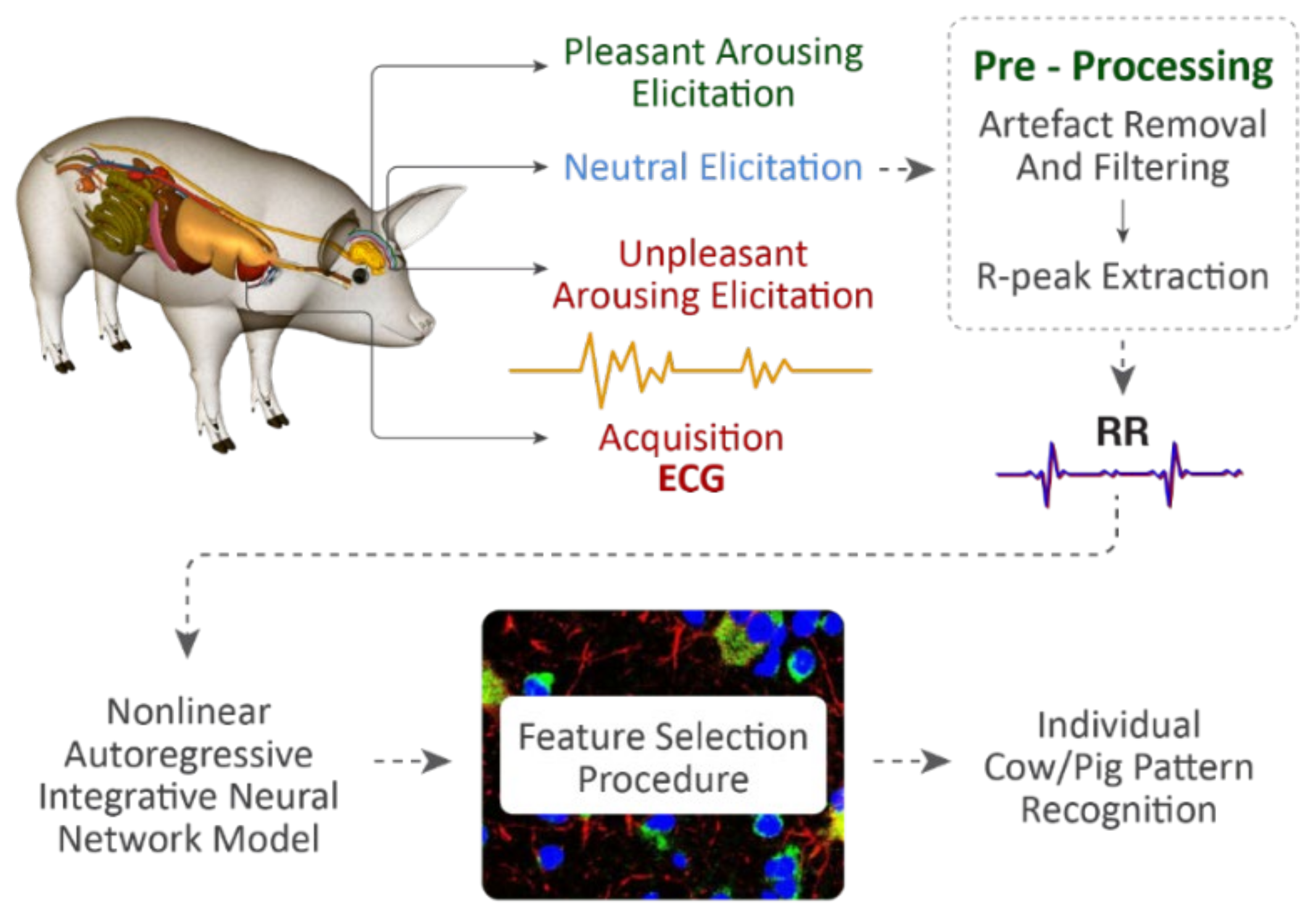

- Neethirajan, S. Happy Cow or Thinking Pig? WUR Wolf—Facial Coding Platform for Measuring Emotions in Farm Animals. AI 2021, 2, 342–354.

- Abdullahi, U.S.; Nyabam, M.; Orisekeh, K.; Umar, S.; Sani, B.; David, E.; Umoru, A.A. Exploiting IoT and LoRaWAN Technologies for Effective Livestock Monitoring in Nigeria. AZOJETE 2019, 15, 146–159. Available online: https://azojete.com.ng/index.php/azojete/article/view/22 (accessed on 5 April 2021).

- Waterhouse, A.; Holland, J.P.; McLaren, A.; Arthur, R.; Duthie, C.A.; Kodam, S.; Wishart, H.M. Opportunities and challenges for real-time management (RTM) in extensive livestock systems. In Proceedings of the The European Conference in Precision Livestock Farming, Cork, Ireland, 26–29 August 2019; Available online: https://pure.sruc.ac.uk/en/publications/opportunities-and-challenges-for-real-time-management-rtm-in-exte (accessed on 6 April 2021).

- Citoni, B.; Fioranelli, F.; Imran, M.A.; Abbasi, Q.H. Internet of Things and LoRaWAN-Enabled Future Smart Farming. IEEE Internet Things Mag. 2019, 2, 14–19.

- Liu, L.-S.; Ni, J.-Q.; Zhao, R.-Q.; Shen, M.-X.; He, C.-L.; Lu, M.-Z. Design and test of a low-power acceleration sensor with Bluetooth Low Energy on ear tags for sow behaviour monitoring. Biosyst. Eng. 2018, 176, 162–171.

- Trogh, J.; Plets, D.; Martens, L.; Joseph, W. Bluetooth low energy based location tracking for livestock monitoring. In Proceedings of the 8th European Conference on Precision Livestock Farming, Nantes, France, 12–14 September 2017; Available online: http://hdl.handle.net/1854/LU-8544264 (accessed on 8 April 2021).

- Bloch, V.; Pastell, M. Monitoring of Cow Location in a Barn by an Open-Source, Low-Cost, Low-Energy Bluetooth Tag System. Sensors 2020, 20, 3841.

- Fote, F.N.; Mahmoudi, S.; Roukh, A.; Mahmoudi, S.A. Big data storage and analysis for smart farming. In Proceedings of the 2020 5th International Conference on Cloud Computing and Artificial Intelligence: Technologies and Applications (CloudTech), Marrakesh, Morocco, 24–26 November 2020; Available online: https://ieeexplore.ieee.org/abstract/document/9365869 (accessed on 8 April 2021).

- Zhang, W.; Liu, H.; Silenzio, V.M.B.; Qiu, P.; Gong, W. Machine Learning Models for the Prediction of Postpartum Depression: Application and Comparison Based on a Cohort Study. JMIR Med. Inform. 2020, 8, e15516.

- Meire, M.; Ballings, M.; Poel, D.V.D. The added value of auxiliary data in sentiment analysis of Facebook posts. Decis. Support Syst. 2016, 89, 98–112.

- Elhai, J.D.; Tiamiyu, M.F.; Weeks, J.W.; Levine, J.C.; Picard, K.J.; Hall, B. Depression and emotion regulation predict objective smartphone use measured over one week. Pers. Individ. Differ. 2018, 133, 21–28.

- Dhall, A. EmotiW 2019: Automatic emotion, engagement and cohesion prediction tasks. In Proceedings of the 2019 International Conference on Multimodal Interaction, Suzhou, China, 14–18 October 2019; Available online: https://dl.acm.org/doi/10.1145/3340555.3355710 (accessed on 5 April 2021).

- Liu, C.; Tang, T.; Lv, K.; Wang, M. Multi-feature based emotion recognition for video clips. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018; Available online: https://dl.acm.org/doi/10.1145/3242969.3264989 (accessed on 5 April 2021).

- Pei, E.; Jiang, D.; Alioscha-Perez, M.; Sahli, H. Continuous affect recognition with weakly supervised learning. Multimed. Tools Appl. 2019, 78, 19387–19412.

- Chang, F.-J.; Tran, A.T.; Hassner, T.; Masi, I.; Nevatia, R.; Medioni, G. Deep, Landmark-Free FAME: Face Alignment, Modeling, and Expression Estimation. Int. J. Comput. Vis. 2019, 127, 930–956.

- Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine learning in agriculture: A review. Sensors 2018, 18, 2674.

- Valletta, J.J.; Torney, C.; Kings, M.; Thornton, A.; Madden, J. Applications of machine learning in animal behaviour studies. Anim. Behav. 2017, 124, 203–220.

- Gris, K.V.; Coutu, J.-P.; Gris, D. Supervised and Unsupervised Learning Technology in the Study of Rodent Behavior. Front. Behav. Neurosci. 2017, 11, 141.

- Chandrasekaran, B.; Gangadhar, S.; Conrad, J.M. A survey of multisensor fusion techniques, architectures and methodologies. In Proceedings of the SoutheastCon, Concord, NC, USA, 30 March–2 April 2017; Available online: https://ieeexplore.ieee.org/abstract/document/7925311 (accessed on 7 April 2021).

- Shah, N.H.; Milstein, A.; Bagley, S.C. Making Machine Learning Models Clinically Useful. JAMA 2019, 322, 1351.

- Lapuschkin, S.; Wäldchen, S.; Binder, A.; Montavon, G.; Samek, W.; Müller, K.-R. Unmasking Clever Hans predictors and assessing what machines really learn. Nat. Commun. 2019, 10, 1096.

- Tuteja, S.K.; Ormsby, C.; Neethirajan, S. Noninvasive Label-Free Detection of Cortisol and Lactate Using Graphene Embedded Screen-Printed Electrode. Nano-Micro Lett. 2018, 10, 41.

- Bienboire-Frosini, C.; Chabaud, C.; Cozzi, A.; Codecasa, E.; Pageat, P. Validation of a Commercially Available Enzyme ImmunoAssay for the Determination of Oxytocin in Plasma Samples from Seven Domestic Animal Species. Front. Neurosci. 2017, 11, 524.

- Siegel, P.B.; Gross, W.B. General Principles of Stress and Well-Being; Grandin, T., Ed.; CABI: Wallingford, UK, 2000; pp. 27–41.

- Bonapersona, V.; Hoijtink, H.; Sarabdjitsingh, R.A.; Joëls, M. Increasing the statistical power of animal experiments with historical control data. Nat. Neurosci. 2021, 24, 470–477.