Farm animals, numbering over 70 billion worldwide, are increasingly managed in large-scale, intensive farms. With both public awareness and scientific evidence growing that farm animals experience suffering, as well as affective states such as fear, frustration and distress, there is an urgent need to develop efficient and accurate methods for monitoring their welfare. At present, there are no scientifically validated ‘benchmarks’ for quantifying transient emotional (affective) states in farm animals, and no established measures of good welfare, only indicators of poor welfare, such as injury, pain and fear. Conventional approaches to monitoring livestock welfare are time-consuming, interrupt farming processes and involve subjective judgments. Biometric sensor data enabled by artificial intelligence (AI) offer an emerging smart solution for unobtrusively monitoring livestock, but their potential for quantifying affective states, and for ground-breaking applications, has yet to be realized.

1. Modelling Farm Animal Affective State and Behaviour Using Multimodal Sensor Fusion

High-fidelity, integrated multimodal imaging and sensing technologies have the potential to revolutionize how livestock are monitored and cared for [1][2]. Currently, there are no commercially available multimodal biosensing platforms capable of monitoring the affective and behavioural states of farm animals in real time [3]. Developing such a platform would allow comprehensive quantitative analyses of these states, potentially leading to significant insights into optimal approaches to animal care. Developing and integrating next-generation multimodal sensor systems with advanced statistical methods to estimate and predict affective and behavioural states in farm animals would open new pathways for enhancing animal welfare.

Establishing a distributed network of non-obtrusive, non-invasive sensors to collect real-time behavioural and physiological data from farm animals could be the first step in developing such a framework (Figure 1). Non-invasive sensors, comprising video and thermal imaging cameras, microphones, and wearable TNO Holst 3-in-1 patches (monitoring heart rate, respiration rate, and activity), would support the collection of data on behavioural and affective states. Possible data sources include recordings of natural behaviour, made without any interference from experimenters, and recordings made during established protocols that induce defined positive and negative affective states in the animals, such as withholding high-value food to induce disappointment [4], placing animals in crowded situations to induce frustration [5][6], and petting and socializing with the animals to induce contentment [7][8].

Figure 1. Multimodal affective state recognition data analysis workflow framework of the per-animal quantified approach. EEG—electroencephalogram; FNIRS—functional near-infrared spectroscopy; ML—machine learning; CNN—convolutional neural networks.

2. Classification and Annotation of Affective States and Behavioural Events

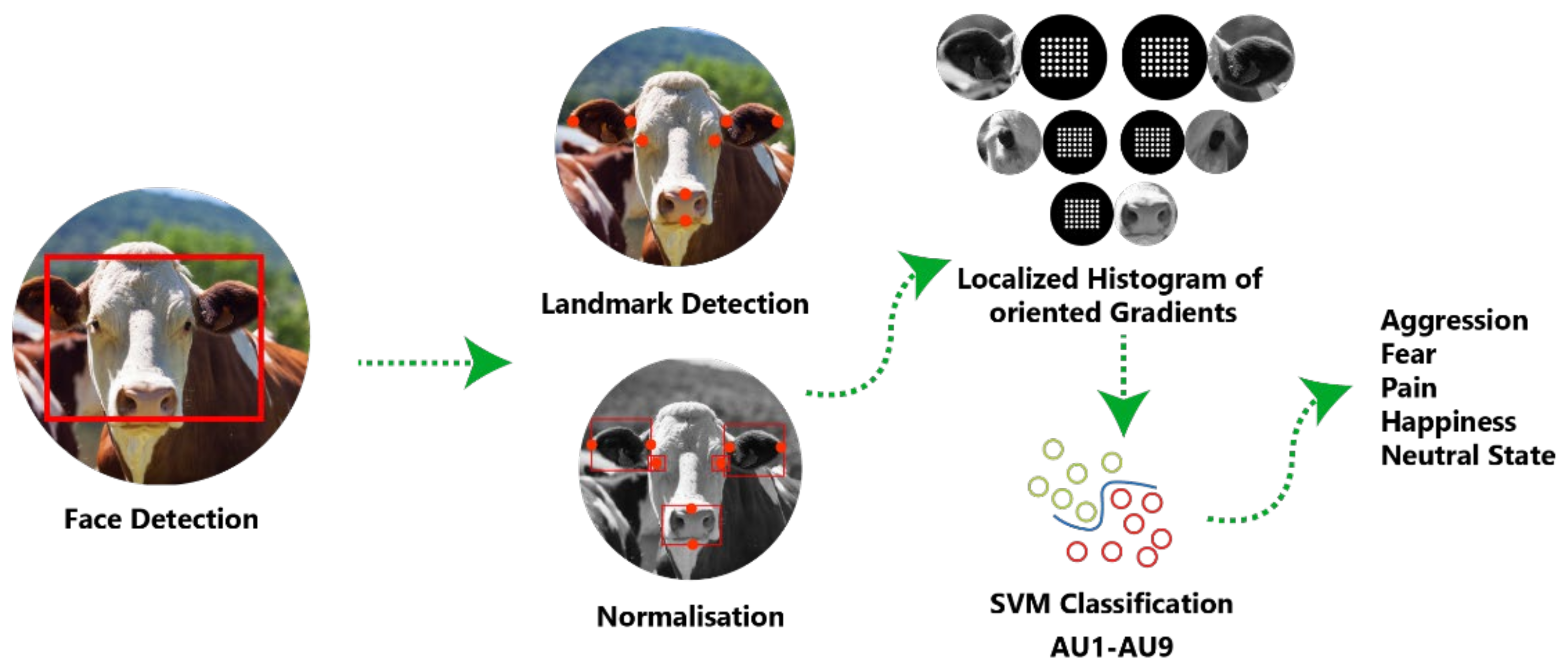

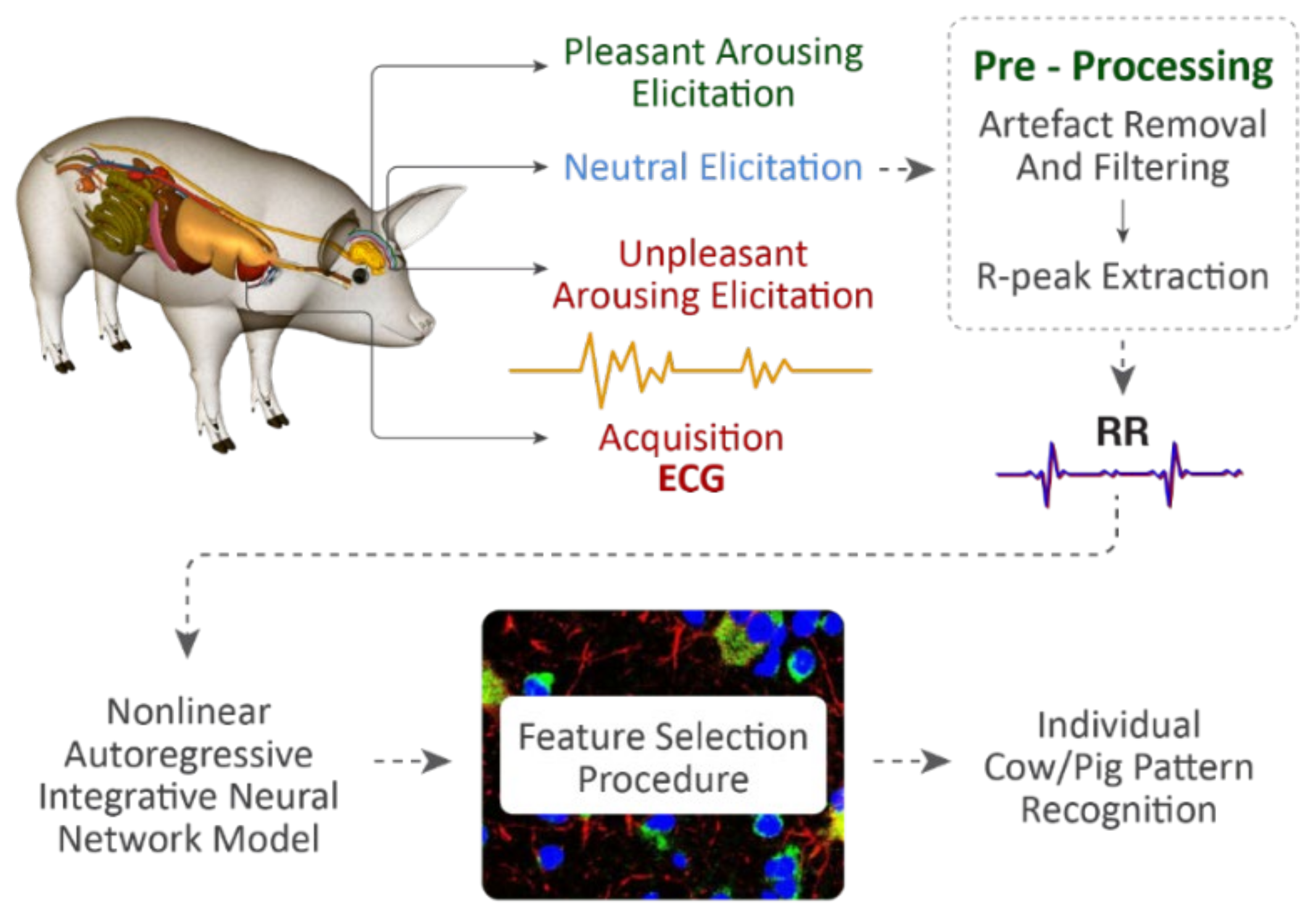

Two common approaches to identifying affective and behavioural events in farm animals from AI-enabled sensor data are as follows. (a) Automatic affective state classification, capitalizing on preliminary work [9] conducted by FarmWorx at Wageningen University. A pre-trained farm animal facial recognition platform such as WUR Wolf (Wageningen University and Research—Wolf Mascot) [9] can be used to classify changes in affective state over time in pigs and cows from video camera data (Figure 2). (b) Manual annotation of behavioural and emotional events in the data sets by ethologists and behavioural scientists with specific expertise in cow and pig behaviour, providing “gold-standard” annotated data sets. To keep scoring consistent across animals, the annotators could evaluate one category of behaviour (e.g., feeding, playing, resting) or affective state (e.g., fearful, happy, relaxed) at a time for all the animals under study. Krippendorff’s alpha coefficient could then be calculated to quantify reliability across annotators and metrics while accounting for unequal sample sizes (including missing annotations) and for differences between dimensional and categorical variables.

Figure 2. Pipeline of the WUR Wolf (Wageningen University and Research—Wolf Mascot) automatic approach [9] to coding affective states from the facial features of cows using machine learning models. SVM—support vector machine; AU—arbitrary units.
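As a minimal sketch, assuming nominal (categorical) affective-state labels, Krippendorff’s alpha can be computed as alpha = 1 − D_o / D_e, the ratio of observed to chance-expected disagreement, using a coincidence matrix of label pairs assigned to the same unit by different annotators. The function name and example labels are hypothetical. Units scored by only one annotator are skipped, which is how the coefficient tolerates missing annotations; dimensional (interval-scaled) ratings would need a squared-difference metric instead of the nominal mismatch used here.

```python
from collections import defaultdict
from itertools import permutations
from typing import Hashable, Optional, Sequence


def krippendorff_alpha_nominal(ratings: Sequence[Sequence[Optional[Hashable]]]) -> float:
    """Krippendorff's alpha for nominal labels.

    ratings[a][u] is annotator a's label for unit u (e.g., one video clip),
    or None if that annotator did not score the unit.
    """
    n_units = len(ratings[0])
    coincidence = defaultdict(float)  # o_ck: weighted counts of ordered label pairs
    for u in range(n_units):
        values = [annotator[u] for annotator in ratings if annotator[u] is not None]
        m = len(values)
        if m < 2:
            continue  # a unit needs at least two labels to form a pair
        for c, k in permutations(values, 2):  # ordered pairs from different annotators
            coincidence[(c, k)] += 1.0 / (m - 1)

    marginals = defaultdict(float)  # n_c: how often each label occurs in pairable units
    for (c, _), weight in coincidence.items():
        marginals[c] += weight
    n = sum(marginals.values())

    observed = sum(w for (c, k), w in coincidence.items() if c != k)
    expected = sum(marginals[c] * marginals[k] for c in marginals for k in marginals if c != k)
    if expected == 0:
        raise ValueError("alpha is undefined when every label is the same category")
    return 1.0 - (n - 1) * observed / expected


# Hypothetical labels from three annotators for five clips (None = not scored).
ratings = [
    ["relaxed", "fearful", "fearful", "relaxed", "happy"],
    ["relaxed", "fearful", "relaxed", "relaxed", "happy"],
    ["relaxed", None, "fearful", "relaxed", "happy"],
]
print(round(krippendorff_alpha_nominal(ratings), 3))
```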

3. Sensor Network Fusion Protocols and Instrumentation Framework

Integrating heterogeneous sensor types into a multimodal network requires a sensing platform capable of fusing data streams with differing precisions, update rates, and data formats into a common framework in which these data can be correlated and analysed. At present, no existing platform offers the functionality needed to correlate heterogeneous data streams, integrate diverse data sets, and identify data from individual animals [3].
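The sketch below illustrates one simple way to place streams with different update rates on a common time base: for each animal, every stream is sampled onto a shared time grid by carrying forward its most recent reading (an "as-of" alignment), and readings older than a staleness limit are treated as missing. The modality names, sampling rates, grid step, and `max_age` limit are hypothetical assumptions, and the sketch presumes that device clocks are already synchronised.

```python
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

Stream = List[Tuple[float, float]]  # (timestamp in seconds, value), sorted by timestamp
Row = Dict[str, Optional[float]]


def latest_before(times: List[float], stream: Stream, t: float, max_age: float) -> Optional[float]:
    """Return the most recent value at or before t, or None if absent or stale."""
    i = bisect_right(times, t)
    if i == 0:
        return None  # the stream has not started yet
    ts, value = stream[i - 1]
    return value if t - ts <= max_age else None


def align_per_animal(
    data: Dict[str, Dict[str, Stream]], start: float, stop: float, step: float, max_age: float
) -> Dict[str, List[Row]]:
    """Resample every animal's streams onto one grid: start, start + step, ..., stop."""
    n_steps = int(round((stop - start) / step))
    aligned: Dict[str, List[Row]] = {}
    for animal_id, streams in data.items():
        index = {name: [ts for ts, _ in s] for name, s in streams.items()}  # timestamps per stream
        rows: List[Row] = []
        for j in range(n_steps + 1):
            t = start + j * step
            row: Row = {"t": t}
            for name, stream in streams.items():
                row[name] = latest_before(index[name], stream, t, max_age)
            rows.append(row)
        aligned[animal_id] = rows
    return aligned


# Hypothetical streams for one pig: heart rate at 1 Hz, respiration every 4 s,
# and a single thermal-camera surface temperature reading.
data = {
    "pig_07": {
        "heart_rate_bpm": [(0.0, 92.0), (1.0, 95.0), (2.0, 97.0), (3.0, 96.0), (4.0, 94.0)],
        "resp_rate_bpm": [(0.0, 28.0), (4.0, 30.0)],
        "surface_temp_c": [(0.5, 38.6)],
    }
}
for row in align_per_animal(data, start=0.0, stop=4.0, step=2.0, max_age=5.0)["pig_07"]:
    print(row)
```

Keying the output by animal keeps each individual's streams separate, which matches the requirement of identifying data from individual animals; a real platform would also need to handle clock drift and late-arriving packets.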

An instrumentation framework capable of integrating data from diverse sensor types is therefore needed; it would make it possible, for the first time, to acquire and analyse large multimodal sensor data sets on animal behaviour and affective state. Such a framework must establish both the hardware infrastructure to reliably gather large quantities of multimodal sensor data and the high-performance cloud server architecture to store and process these data.

To stream data in real time from multiple sensor types simultaneously, long-range wide area network (LoRaWAN) communications technology, which is rapidly emerging as the state of the art in smart farming [10][11][12], would be ideal. LoRaWAN can wirelessly transmit data from 300 different types of sensors at a time, allowing researchers to avoid the technical complexity and cost of a conventional wired setup. Extending the LoRaWAN system with Bluetooth Low Energy technology would save time and resources by increasing how long data can be acquired from portable sensors before they need to be recharged [13][14][15]. To accelerate and facilitate real-time analysis, the data should be stored and processed on cloud servers connected via the internet [1][16], avoiding the need to install complex and expensive computer servers at each individual farm site. Microsoft Azure is a commercial cloud platform that could allow seamless integration between the sensor data streams and the high-performance AI and machine learning (ML) methods used to analyse the data.
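LoRaWAN uplinks carry only small application payloads (tens of bytes or fewer at the slowest data rates, depending on the regional parameters), so it is common to pack sensor readings into a compact binary layout rather than sending text. The 6-byte layout below is a hypothetical example for the heart rate, respiration rate, and activity values measured by the wearable patches; it is not the TNO Holst patch's actual format, and the field widths and units are assumptions.

```python
import struct
from typing import NamedTuple

# Hypothetical 6-byte uplink layout, big-endian:
#   uint16 animal ID | uint8 heart rate (beats/min) | uint8 respiration rate (breaths/min)
#   | uint16 activity count over the reporting interval
PAYLOAD_FORMAT = ">HBBH"


class PatchReading(NamedTuple):
    animal_id: int
    heart_rate_bpm: int
    resp_rate_bpm: int
    activity_count: int


def encode(reading: PatchReading) -> bytes:
    """Pack a reading; struct.error is raised if a field exceeds its width."""
    return struct.pack(PAYLOAD_FORMAT, *reading)


def decode(payload: bytes) -> PatchReading:
    """Inverse of encode, e.g. for a decoder running on the network or cloud side."""
    return PatchReading(*struct.unpack(PAYLOAD_FORMAT, payload))


reading = PatchReading(animal_id=1207, heart_rate_bpm=96, resp_rate_bpm=29, activity_count=340)
payload = encode(reading)
print(len(payload), payload.hex())  # prints: 6 04b7601d0154
assert decode(payload) == reading
```

Fixed-width integer fields keep every uplink the same small size; data-heavy modalities such as video and thermal imaging would instead need local processing or a higher-bandwidth link.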

4. Build Predictive Models of Affective State and Behaviour

Using the data sets collected from the distributed sensor network, robust predictive models of farm animal behaviour and affective states can be built. Statistical and supervised ML methods applied to the annotated data set, such as latent growth curve modelling, random forests and support vector machines [17][18][19], offer established approaches for capturing and measuring patterns in dynamic, interacting variables such as the behaviour and affective states of farm animals. Applied to features extracted from the visual, thermal, auditory, physiological and activity sensor data, these methods can distinguish different behavioural and affective states with high accuracy, sensitivity, and selectivity [20][21][22][23].
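Support vector machines are among the supervised methods named above. The dependency-free sketch below trains a linear SVM with the Pegasos stochastic sub-gradient algorithm, swapped in here for brevity since the source does not specify a training algorithm, on hypothetical standardised two-dimensional feature vectors. In practice, a library implementation such as scikit-learn's `SVC` or `LinearSVC`, applied to real features extracted from the sensor streams, would normally be used.

```python
import random
from typing import List, Sequence


def train_linear_svm(
    X: Sequence[Sequence[float]], y: Sequence[int], lam: float = 0.01, epochs: int = 200, seed: int = 0
) -> List[float]:
    """Linear soft-margin SVM trained with Pegasos (stochastic sub-gradient descent
    on the L2-regularised hinge loss). Labels must be +1 or -1. A constant 1.0 is
    appended to every feature vector so the last weight acts as a bias term
    (regularised along with the other weights, for simplicity)."""
    rng = random.Random(seed)
    samples = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(samples[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, label in samples:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # gradient step on the regulariser
            if margin < 1.0:  # hinge loss is active: step towards classifying x correctly
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Sign of the decision function w . [x, 1]."""
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0.0 else -1


# Hypothetical standardised features [heart-rate z-score, activity z-score];
# -1 = relaxed, +1 = agitated.
X = [[-1.0, -0.8], [-0.9, -1.1], [-1.2, -0.7], [1.0, 0.9], [0.8, 1.2], [1.1, 0.7]]
y = [-1, -1, -1, 1, 1, 1]
w = train_linear_svm(X, y)
print([predict(w, x) for x in X])
```

Regularising the bias is a slight simplification of the textbook SVM; a random forest would be a drop-in alternative at the same step of the pipeline.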

Following the supervised training stage, unsupervised ML models can help identify clusters of similar behavioural and affective state descriptors in unannotated sensor data obtained from farm animals [24][25][26]. These descriptors function as numerical “fingerprints” that allow distinct behavioural or affective states to be reliably identified, even in entirely novel data. The best features from each sensor modality corresponding to these descriptors can then be selected to define high-level specific indicators, which are then fused to build an ML classifier-based model. There are two potential approaches to fusing sensor data from different modalities to predict behavioural and affective states: (i) decision-level fusion, in which the prediction scores from the unimodal models are linearly combined; and (ii) feature- and indicator-level fusion, in which feature vectors and indicators are integrated across modalities to yield the prediction scores. The performance of different ML methods at estimating behavioural and affective states can be assessed using regression methods [27][28][29].
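The two fusion strategies can be contrasted in a short sketch. Decision-level fusion takes a weighted linear combination of the class scores from each unimodal model; feature-level fusion is shown here in its simplest form, concatenating per-modality feature or indicator vectors into one input for a single classifier. Modality names, classes, scores, features, and weights are all hypothetical; in practice the weights would be tuned on validation data.

```python
from typing import Dict, List, Sequence

CLASSES = ["relaxed", "frustrated"]  # hypothetical affective-state classes


def decision_level_fusion(scores: Dict[str, Sequence[float]], weights: Dict[str, float]) -> List[float]:
    """Linearly combine per-class scores from separate unimodal models.

    Weights are normalised to sum to 1, so fused scores stay on the input scale.
    """
    total = sum(weights[m] for m in scores)
    n_classes = len(next(iter(scores.values())))
    fused = [0.0] * n_classes
    for modality, modality_scores in scores.items():
        for k, s in enumerate(modality_scores):
            fused[k] += (weights[modality] / total) * s
    return fused


def feature_level_fusion(features: Dict[str, Sequence[float]], order: Sequence[str]) -> List[float]:
    """Concatenate per-modality feature/indicator vectors in a fixed order,
    giving one input vector for a single multimodal classifier."""
    fused: List[float] = []
    for modality in order:
        fused.extend(features[modality])
    return fused


# Hypothetical unimodal outputs for one time window.
scores = {"video": [0.7, 0.3], "audio": [0.4, 0.6], "physiology": [0.6, 0.4]}
weights = {"video": 0.5, "audio": 0.2, "physiology": 0.3}
fused = decision_level_fusion(scores, weights)
print(CLASSES[max(range(len(fused)), key=fused.__getitem__)], fused)

# Hypothetical per-modality features (would normally be standardised before fusion).
features = {"video": [0.12, 0.87], "audio": [0.33], "physiology": [96.0, 29.0]}
print(feature_level_fusion(features, order=["video", "audio", "physiology"]))
```

Decision-level fusion tolerates a missing modality more gracefully (its weight can simply be dropped), whereas feature-level fusion lets a single classifier learn interactions between modalities.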

5. Challenges in the Quantification and Validation of Performance Models for Affective States Measurement

Assessing how effectively the platform estimates behaviour and affective state in real time is challenging. The predictive model can be evaluated by calculating its accuracy at estimating affective and behavioural states in novel data sets collected from the sensor network. In addition, the model can be further validated by correlating the affective and behavioural states it identifies against: (i) quantitative assays of cortisol, lactate and oxytocin levels in blood and/or saliva samples from the animals [30][31], which provide a reliable biochemical reference measure of emotional arousal and stress; and (ii) physiological indices associated with specific affective states, such as heart rate, respiratory rate, and body temperature. Physiological signals reflect autonomic nervous system activity more directly than non-physiological signals such as facial expressions or vocalizations [32]. Because autonomic nervous system activation during emotional expression is involuntary in animals, it provides an objective, quantitative reference measure for evaluating affective states.
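A minimal sketch of the two evaluation steps described above: accuracy against annotated states in held-out data, and correlation of model output with a biochemical reference such as salivary cortisol. All values are hypothetical placeholders, not results. Pearson's r is used for simplicity; a rank-based coefficient (Spearman) may be preferable if the relationship is monotonic but not linear.

```python
from math import sqrt
from typing import Sequence


def accuracy(predicted: Sequence[str], annotated: Sequence[str]) -> float:
    """Fraction of time windows where the model's state matches the annotation."""
    if len(predicted) != len(annotated) or not annotated:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(p == a for p, a in zip(predicted, annotated)) / len(annotated)


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between model output and a reference measure."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical held-out windows: model predictions vs. expert annotations.
predicted = ["relaxed", "fearful", "fearful", "relaxed", "relaxed"]
annotated = ["relaxed", "fearful", "relaxed", "relaxed", "relaxed"]
print(accuracy(predicted, annotated))  # 0.8

# Hypothetical per-session mean model score for a negative state vs. salivary cortisol.
model_score = [0.15, 0.32, 0.48, 0.61, 0.80]
cortisol_nmol_l = [4.1, 5.0, 6.3, 6.9, 8.8]
print(round(pearson_r(model_score, cortisol_nmol_l), 3))
```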

5.1. Sensor Durability

There is a risk that wearable sensors cannot be attached securely to the animals, or that the animals damage the sensors by chewing or crushing them. To mitigate the former, animal scientists or researchers could improve the adhesion protocol or use a belly belt, which is more secure.

5.2. Low Sensitivity of the Model at Detecting Affective and Behavioural States

To address this, the AI algorithms and the sensors could be optimized to increase sensitivity.
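One algorithm-side lever, offered here as an illustration rather than the source's prescribed method, is to lower the classifier's decision threshold on held-out validation data until a target sensitivity is reached, accepting some loss of specificity. The scores, labels, and the 0.95 target below are hypothetical.

```python
from typing import Sequence


def sensitivity(scores: Sequence[float], labels: Sequence[int], threshold: float) -> float:
    """True-positive rate: share of target-state windows (label 1) scored >= threshold."""
    positives = [s for s, lab in zip(scores, labels) if lab == 1]
    return sum(s >= threshold for s in positives) / len(positives)


def specificity(scores: Sequence[float], labels: Sequence[int], threshold: float) -> float:
    """True-negative rate: share of other windows (label 0) scored below threshold."""
    negatives = [s for s, lab in zip(scores, labels) if lab == 0]
    return sum(s < threshold for s in negatives) / len(negatives)


def threshold_for_sensitivity(scores: Sequence[float], labels: Sequence[int], target: float) -> float:
    """Highest threshold whose validation sensitivity reaches the target."""
    if not 0.0 < target <= 1.0:
        raise ValueError("target must be in (0, 1]")
    if 1 not in labels:
        raise ValueError("validation data contain no positive examples")
    candidates = sorted(set(scores), reverse=True)
    for threshold in candidates:  # sensitivity can only rise as the threshold falls
        if sensitivity(scores, labels, threshold) >= target:
            return threshold
    return candidates[-1]  # not reached: the lowest score always gives sensitivity 1.0


# Hypothetical validation scores for "frustrated" (1) vs. other states (0).
scores = [0.92, 0.81, 0.66, 0.58, 0.40, 0.31, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0]
thr = threshold_for_sensitivity(scores, labels, target=0.95)
for t in (0.5, thr):
    print(t, sensitivity(scores, labels, t), specificity(scores, labels, t))
```

Here lowering the threshold from 0.5 to the tuned value raises sensitivity at the cost of specificity, which is the trade-off any such optimization has to weigh.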

5.3. Lack of Correlation between Sensor Data and Biochemical Reference Values

To address this, researchers could collaborate with veterinarians to set up the biochemical validation assays.

5.4. Limiting the Numbers of Animals Used in the Experiments

Increasing the sample size raises ethical and practical issues [33]. The numbers of pigs and cows used in animal experiments should meet optimal research standards and experimental design requirements while also complying with the 3Rs (reduction, replacement, refinement) principles. When developing indices, Bayesian approaches that use historical control data could be applied to increase the statistical power of animal experiments [33].
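One established way to borrow strength from historical control data is the power prior, in which the historical likelihood is down-weighted by a discount a0 between 0 and 1. For a binary outcome with a conjugate Beta prior it has a closed form, sketched below; this is not necessarily the Bayesian approach intended in [33]. The outcome, counts, and a0 = 0.5 are hypothetical. Borrowing narrows the posterior for the control rate, which is how historical data can support adequate power with fewer concurrent control animals; the discount should be justified, since historical controls that differ from current conditions can bias the estimate.

```python
from math import sqrt
from typing import Tuple


def power_prior_posterior(
    x: int, n: int, x_hist: int, n_hist: int, a0: float, a: float = 1.0, b: float = 1.0
) -> Tuple[float, float]:
    """Beta posterior for a control-group proportion under a power prior.

    The historical binomial likelihood (x_hist events of n_hist) is raised to a0 in [0, 1]:
    a0 = 0 ignores the historical data, a0 = 1 pools it fully. Beta(a, b) is the initial prior.
    """
    if not 0.0 <= a0 <= 1.0:
        raise ValueError("a0 must lie in [0, 1]")
    alpha = a + a0 * x_hist + x
    beta = b + a0 * (n_hist - x_hist) + (n - x)
    return alpha, beta


def beta_mean_sd(alpha: float, beta: float) -> Tuple[float, float]:
    """Mean and standard deviation of a Beta(alpha, beta) distribution."""
    total = alpha + beta
    mean = alpha / total
    sd = sqrt(alpha * beta / (total ** 2 * (total + 1)))
    return mean, sd


# Hypothetical: 4 of 10 concurrent control pigs show an indicator; 30 of 100 historical controls did.
no_borrowing = power_prior_posterior(4, 10, 30, 100, a0=0.0)
half_borrowing = power_prior_posterior(4, 10, 30, 100, a0=0.5)
print(no_borrowing, beta_mean_sd(*no_borrowing))
print(half_borrowing, beta_mean_sd(*half_borrowing))
```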

This entry is adapted from the peer-reviewed paper 10.3390/ani12060759