



Xu, F. Multi-Exposure Image Fusion Techniques. Encyclopedia. Available online: https://encyclopedia.pub/entry/19787 (accessed on 25 January 2026).


Xu, F. (2022, February 23). Multi-Exposure Image Fusion Techniques. In Encyclopedia. https://encyclopedia.pub/entry/19787


Multi-exposure image fusion (MEF) integrates images captured at multiple exposure levels into a single, well-exposed image of high quality. It is an economical and effective way to extend the dynamic range of an imaging system and has broad application prospects.

Keywords: multi-exposure image fusion; dynamic range; image transform; deep learning; deghosting

1. Introduction

Brightness in a natural scene usually varies greatly. For example, sunlight is about 10⁵ cd/m², room light is about 10² cd/m², and starlight is about 10⁻³ cd/m² [1]. Owing to the limitations of imaging devices, the dynamic range of a single image is much lower than that of a natural scene [2]. The scene may also be affected by lighting, weather, solar altitude, and other factors, so overexposure and underexposure occur often. A single image therefore cannot fully capture the bright and dark levels of the scene; some information is lost, and the result is unsatisfactory. Matching the dynamic range of existing imaging equipment and display monitors to that of real natural scenes, and to the dynamic response of the human eye, is still challenging.
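To make the gap concrete, the luminance figures above can be turned into a dynamic-range ratio. The 8-bit comparison assumes, for illustration only, a linearly encoded frame with code values 1 to 255; it is not a figure from the cited sources:

```latex
\frac{L_{\text{sun}}}{L_{\text{star}}} \approx \frac{10^{5}\ \mathrm{cd/m^2}}{10^{-3}\ \mathrm{cd/m^2}} = 10^{8} \approx 2^{26.6}
\quad \left(20\log_{10}10^{8} = 160\ \mathrm{dB}\right),
\qquad
\frac{255}{1} \approx 2^{8} \quad \left(20\log_{10}255 \approx 48\ \mathrm{dB}\right).
```

Under that assumption a single frame spans roughly 8 of the scene's approximately 26.6 stops, which is why several exposures are needed to cover the whole range.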

There are generally two ways to broaden the dynamic range of imaging detectors: hardware design and software techniques. The former requires redesigning the CCD or CMOS detector and possibly introducing a new optical modulation device. Aggarwal [3] realized a camera design by dividing the aperture into multiple parts and using a set of mirrors to direct the light from each part in different directions. Tumblin [4] described a camera that measures static gradients rather than static intensities and quantizes the differences appropriately to capture high dynamic range (HDR) images. Such methods can directly improve exposure efficiency and imaging quality, but they are expensive and of limited practicality. On the software side, some researchers reconstruct the HDR image using the camera response function (CRF); the HDR image can then be shown on an ordinary display device through tone mapping (TM). Others adopt multi-exposure image fusion (MEF) directly, fusing input images with different exposure levels into an image with rich information and vivid colors, without camera response calibration, HDR reconstruction, or tone mapping, as shown in Figure 1. Compared with the hardware approach, MEF provides a simple, economical, and efficient way to resolve the conflict between HDR scenes and low dynamic range (LDR) displays. It avoids complex imaging hardware and circuit design and reduces the weight and power consumption of the whole device, while improving image quality, which gives it significant practical value.
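The software route (HDR reconstruction followed by tone mapping) can be sketched in a few lines to contrast it with MEF. This is a minimal sketch under stated assumptions, not any cited author's method: it assumes a linear camera response (so no CRF calibration is needed), merges an aligned bracketed stack into relative radiance with a triangle weighting, and then applies a global Reinhard-style tone curve. The function names are illustrative.

```python
import numpy as np

def hat_weight(z):
    # Triangle ("hat") weight: trust mid-tones, distrust near-black and clipped pixels.
    # A tiny floor keeps pixels that are clipped in every exposure from dividing by zero.
    return 1.0 - np.abs(2.0 * z - 1.0) + 1e-4

def merge_hdr_linear(images, exposure_times):
    """Relative scene radiance from a bracketed stack, ASSUMING a linear camera response.

    images: float arrays in [0, 1] with identical shapes (aligned, static scene)
    exposure_times: matching positive exposure times
    """
    num = np.zeros_like(np.asarray(images[0], dtype=float))
    den = np.zeros_like(num)
    for img, t in zip(images, exposure_times):
        img = np.asarray(img, dtype=float)
        w = hat_weight(img)
        num += w * img / t   # each exposure "votes" for radiance = pixel value / time
        den += w
    return num / den

def reinhard_global(radiance, key=0.18, eps=1e-8):
    """Global Reinhard-style tone curve: scale by the log-average, then L / (1 + L)."""
    log_avg = np.exp(np.mean(np.log(radiance + eps)))
    scaled = key * radiance / log_avg
    return scaled / (1.0 + scaled)
```

MEF replaces both steps with a direct weighted blend of the LDR inputs, so neither the exposure times nor a response curve are needed.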

Figure 1. The illustration of the multi-exposure image fusion.

MEF is a branch of image fusion [5], alongside tasks such as multi-focus image fusion, visible and infrared image fusion, PET and MRI medical image fusion, multispectral and panchromatic remote sensing image fusion, hyperspectral and multispectral remote sensing image fusion, and optical and SAR remote sensing image fusion. All of these tasks combine complementary content from multiple source images to generate a high-quality image containing more important information. They differ mainly in their source images: for MEF, the sources are a series of images with different exposure levels. By fusing or generating pseudo-exposure sequences, MEF techniques can also be used for low-light image enhancement [6][7], defogging [8], and saliency detection [9].

2. A Review on MEF

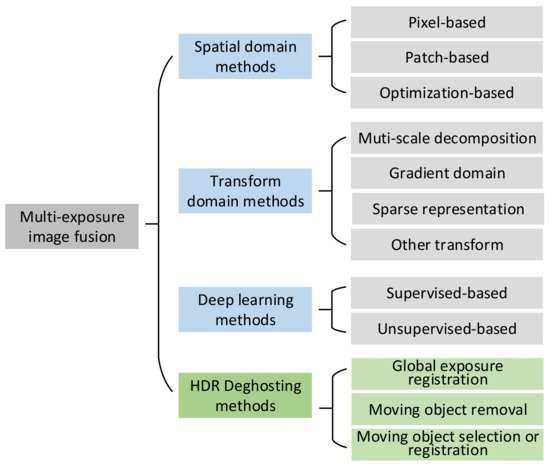

MEF has attracted extensive attention because it can effectively generate high-quality, high dynamic range images by combining complementary information from a sequence of images with different exposure levels. Over the past 30 years, many MEF algorithms have been proposed. According to the available literature, Burt et al. [10] were among the earliest to study MEF: they proposed a pyramid-based method for several fusion tasks, including visible and infrared image fusion, multi-focus image fusion, and multi-exposure image fusion. A large number of traditional MEF algorithms followed, and in recent years deep learning has become a very active research direction in MEF. MEF algorithms can be classified in different ways. Zhang [11] divided MEF methods into categories according to three criteria: the number of input source images, whether the imaging scene is static or dynamic, and whether deep learning is used. Some methods can only process two images [11][12][13][14]; [11] compared two-image fusion results and provided a benchmark. However, most current MEF methods support multiple input images, and some methods designed for static scenes can also work in dynamic scenes. This entry therefore adopts a taxonomy that divides existing MEF approaches into three categories: spatial domain methods, transform domain methods, and deep learning methods. In addition, MEF in dynamic scenes with camera jitter or moving objects has always been a challenge in this field, and ghost detection and removal in dynamic scenes has attracted much interest. The taxonomy is shown in Figure 2. Although it is valid in most cases, some hybrid algorithms are difficult to assign to a single class; such methods are classified according to their dominant idea.

Figure 2. Taxonomy of MEF methods.

Spatial domain MEF methods use certain spatial features to fuse the input source images directly in the spatial domain according to specific rules. The general processing flow is to generate a weight map for each input image and compute the fused image as a weighted average of all input images. According to the level at which information is extracted, spatial domain methods can be roughly divided into three types: pixel-based, patch-based, and optimization-based methods.
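The weighted-average formulation shared by spatial domain methods, F = Σₖ Wₖ·Iₖ / Σₖ Wₖ, can be sketched as follows. This is a minimal grayscale NumPy sketch; the Mertens-style well-exposedness weight (σ = 0.2) is only one example of a weight function, and the helper names are hypothetical.

```python
import numpy as np

def weighted_average_fusion(images, weights, eps=1e-12):
    """Spatial-domain MEF core: F = sum_k W_k * I_k / sum_k W_k (grayscale)."""
    stack = np.stack([np.asarray(im, dtype=float) for im in images])   # (K, H, W)
    w = np.stack([np.asarray(wk, dtype=float) for wk in weights])      # (K, H, W)
    w = w / (w.sum(axis=0, keepdims=True) + eps)                       # per-pixel normalization
    return (w * stack).sum(axis=0)

def well_exposedness(img, sigma=0.2):
    """Example weight: Gaussian preference for intensities near mid-grey (0.5)."""
    return np.exp(-((np.asarray(img, dtype=float) - 0.5) ** 2) / (2 * sigma ** 2))
```

The methods below differ mainly in how they compute the weights; the blending step itself stays this simple.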

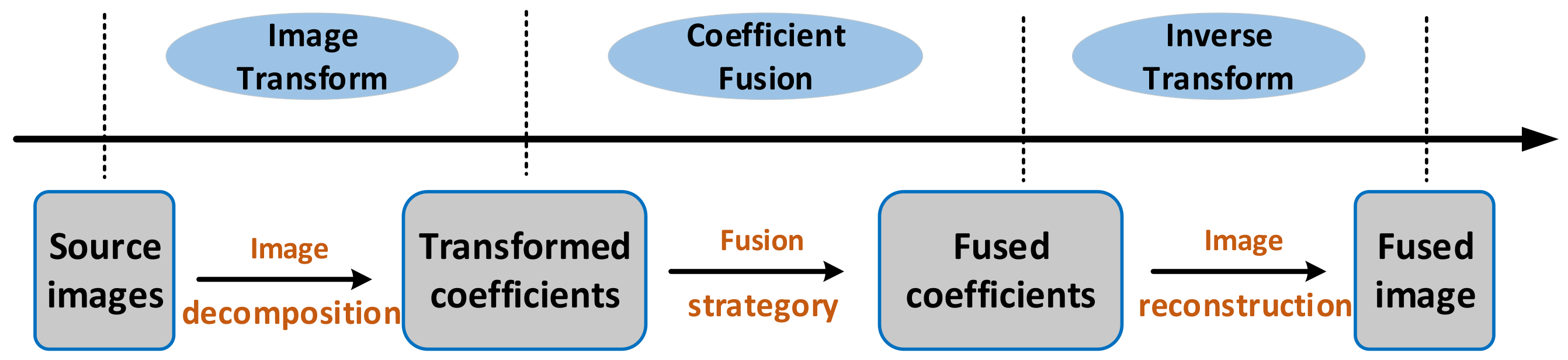

Transform domain MEF methods generally consist of three stages: image transformation, coefficient fusion, and inverse transformation [15], as shown in Figure 3. First, the input images are transformed into another domain by image decomposition or image representation. Then, the transformed coefficients are fused according to pre-designed fusion rules. Finally, the fused image is reconstructed by applying the corresponding inverse transform to the fused coefficients. Compared with spatial domain methods, their most distinctive feature is this inverse transformation stage for reconstructing the fused image. According to the transform used, transform domain methods can be further divided into multi-scale decomposition-based, gradient domain-based, sparse representation-based, and other transform-based methods.
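The three-stage transform, fuse, and invert pipeline can be illustrated with a single-level 2-D Haar wavelet. This is an illustrative sketch, not a method from the cited works: the fusion rules (averaging the approximation band, keeping the largest-magnitude detail coefficient) are common textbook choices assumed here, and the code requires even image dimensions.

```python
import numpy as np

def haar2(x):
    """One level of the orthonormal 2-D Haar transform (height and width must be even)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    approx = (a + b + c + d) / 2     # low-pass approximation
    d_cols = (a - b + c - d) / 2     # differences between neighbouring columns
    d_rows = (a + b - c - d) / 2     # differences between neighbouring rows
    d_diag = (a - b - c + d) / 2     # diagonal detail
    return approx, d_cols, d_rows, d_diag

def ihaar2(approx, d_cols, d_rows, d_diag):
    """Exact inverse of haar2."""
    x = np.empty((2 * approx.shape[0], 2 * approx.shape[1]))
    x[0::2, 0::2] = (approx + d_cols + d_rows + d_diag) / 2
    x[0::2, 1::2] = (approx - d_cols + d_rows - d_diag) / 2
    x[1::2, 0::2] = (approx + d_cols - d_rows - d_diag) / 2
    x[1::2, 1::2] = (approx - d_cols - d_rows + d_diag) / 2
    return x

def transform_domain_fusion(images):
    coeffs = [haar2(np.asarray(im, dtype=float)) for im in images]   # 1) transform
    approx = np.mean([c[0] for c in coeffs], axis=0)                  # 2a) average low-pass
    details = []
    for band in range(1, 4):                                          # 2b) max-abs details
        stack = np.stack([c[band] for c in coeffs])
        idx = np.argmax(np.abs(stack), axis=0)[None]
        details.append(np.take_along_axis(stack, idx, axis=0)[0])
    return ihaar2(approx, *details)                                   # 3) inverse transform
```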

Figure 3. The general flow chart of transform domain methods.

In recent years, deep learning has become a very active direction in the field of MEF. Deep neural networks have repeatedly been shown to have strong feature representation ability and are very useful for various image and vision tasks, including image fusion. Deep learning models, including convolutional neural networks (CNNs) [16] and generative adversarial networks (GANs) [17], have been successfully applied to MEF. Depending on how the model is trained, deep learning-based methods can be further classified into supervised and unsupervised methods.

Because the exposures are acquired at different times, camera jitter and inconsistent object motion make it challenging to avoid ghosts in the fusion results [18][19]. Introducing motion correction and related measures for dynamic scenes can effectively eliminate ghosts and improve the visual quality of the fused image. Deghosting algorithms in MEF can be broadly classified into three categories: global exposure registration, moving object removal, and moving object selection or registration.

Each category of the MEF methods is reviewed in detail as follows.

2.1. Spatial Domain Methods

2.1.1. Pixel-Based Methods

This kind of method directly fuses pixel-level features of the source images according to certain fusion rules. Because it can obtain accurate per-pixel weight maps, it has become a popular direction in MEF. Most pixel-based methods are designed within a linear weighted-sum framework; that is, the fused image is computed as a weighted sum of all input images. The core problem is obtaining the weight map of each input image, and various pixel-based MEF methods have been proposed with different strategies for computing the weight maps. Bruce [20] normalized each pixel of the input image sequence and converted it into the logarithmic domain. Taking each pixel as the center and R as the radius, they calculated the entropy within the circle and assigned each pixel a weight according to this information entropy. Finally, the input images were merged using these weights after converting back from the logarithmic domain. Although the information entropy of the fused image is high, its colors are unnatural in some cases. Lee [21] proposed an MEF method based on adaptive weights: two weight functions reflected pixel quality with respect to the overall brightness and the global gradient, and the final weight combined the two. To adjust the brightness of the input images, Kinoshita [22] presented a scene segmentation method based on the brightness distribution and tried to obtain appropriate exposure values that reduce the saturated area of the fused image. Xu [23] designed a multi-scale MEF method based on physical features, in which a new Retinex model was used to obtain the illumination maps of the original input images, and weight maps were built by combining them with the extracted features. Ulucan [24] introduced an MEF method for static scenes based on linear embedding and watershed masking. Linear embedding weights were extracted from the differently exposed images, and the corresponding watershed masks were used to adjust these weight maps according to the information in the input images before the final fusion. However, both visual quality and statistical scores decrease when the input sequence contains extremely overexposed or underexposed images.

Many other pixel-level fusion algorithms use filtering to process the weight maps. Raman and Chaudhuri [25] designed a bilateral filter-based MEF method that preserved the texture details of the input images at different exposure levels. Later, Li [26] used a median filter and a recursive filter to reduce noise in the weight maps. However, only the gradient information of individual pixels was considered, without regard to local regions.
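A pixel-based weighting in the spirit of Bruce [20] can be sketched by computing the local information entropy around each pixel and using it as the fusion weight. This simplified sketch assumes grayscale inputs in [0, 1], a square window instead of a circle of radius R, and a 16-bin histogram, and it omits the logarithmic-domain conversion; all parameter values are illustrative.

```python
import numpy as np

def local_entropy(img, radius=3, bins=16):
    """Shannon entropy (bits) of the grey-level histogram in a (2r+1) x (2r+1) window."""
    q = np.clip((np.asarray(img, dtype=float) * bins).astype(int), 0, bins - 1)  # quantize
    p = np.pad(q, radius, mode="edge")
    h, w = q.shape
    ent = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            win = p[i:i + 2 * radius + 1, j:j + 2 * radius + 1]
            counts = np.bincount(win.ravel(), minlength=bins)
            prob = counts[counts > 0] / win.size
            ent[i, j] = -np.sum(prob * np.log2(prob))
    return ent

def entropy_weighted_fusion(images, radius=3, bins=16, eps=1e-12):
    """Weight each pixel by its local entropy, so flat (clipped) regions contribute little."""
    imgs = np.stack([np.asarray(im, dtype=float) for im in images])
    weights = np.stack([local_entropy(im, radius, bins) for im in imgs]) + eps
    weights /= weights.sum(axis=0, keepdims=True)
    return (weights * imgs).sum(axis=0)
```

A fully saturated frame has zero local entropy everywhere, so in this sketch it is effectively ignored wherever a better-exposed frame carries texture.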

The main drawbacks of pixel-based MEF methods are that they are sensitive to noise, ignore neighborhood information, and are prone to various artifacts in the final fused image. Therefore, most of them require pre-processing, such as histogram equalization, or post-processing of the weight maps, such as edge-preserving filtering, to produce a higher-quality fusion result. Even though edge-preserving filters were added in some methods [25][26][27] and halo artifacts can be reduced to some extent, the problem has not been solved at its root. Meanwhile, such improvement strategies may bring new issues, such as breaking illumination relationships, over-relying on the guidance image, or significantly increasing computational complexity.

2.1.2. Patch-Based Methods

Unlike pixel-based MEF methods, patch-based methods divide the source images into multiple patches with a certain stride. The patches at the same position in each image of the sequence are then compared, and the patch containing the most significant information is selected to form the final fused image. In [28], a patch-based method was first introduced to solve the MEF problem in static scenes. The image was divided into uniform patches, and the information entropy of each patch was used to measure its richness. The most informative patches were selected and blended together using a patch-centered, monotonically decreasing blending function to obtain the fused image. The disadvantage of this method is that it tends to produce halos at the boundaries between different objects in the fused image. Many other patch-based MEF methods followed [29]. Ma [30] proposed a widely used MEF method that first extracted patches from the input images and decomposed them into three conceptually independent components: signal strength, signal structure, and mean intensity. These components were processed according to patch intensity and exposure measures to generate color image patches, which were then placed back into the fused image. Following this work, Ma [31] proposed a structural patch decomposition MEF approach (SPD-MEF). Compared with [30], the main improvement is that SPD-MEF uses the direction of the signal structure component of each patch to check structural consistency, which produces vivid images and suppresses ghosting. This method needs no post-processing to improve visual quality or reduce spatial artifacts. However, the patch size is fixed, so the method adapts poorly to different scenes: a small patch size causes serious spatial inconsistency in the fused image, while a large one causes loss of detail. On this basis, Li [32] proposed an improved, fast multi-scale SPD-MEF method, which effectively reduced halo artifacts by recursively downsampling the patch size; its implicit implementation of structural patch decomposition also greatly improved computational efficiency. Later, Li [33] further added an edge detail preservation factor and designed a flexible bell curve to accurately estimate the weight function of the mean intensity component. This function retains details in bright and dark regions and improves fusion quality while maintaining high computational speed. Wang [34] proposed an adaptive patch segmentation method that used superpixel segmentation to divide the input images into non-overlapping patches composed of pixels with similar visual properties. Compared with existing MEF methods that use fixed-size patches, it avoided blocking effects and preserved the color properties of the source images.
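The decomposition used by Ma [30][31] splits each vectorized patch x into strength c = ‖x − μ‖, structure s = (x − μ)/c, and mean intensity l = μ, so that x = c·s + l. The sketch below fuses co-located patches with simplified rules assumed for illustration: maximum strength, strength-weighted structure (exponent p = 4), and a Gaussian preference for mid-grey patch means. The published methods use additional terms (for example, the global mean intensity) and structural consistency checks that are omitted here.

```python
import numpy as np

def decompose_patch(x, eps=1e-12):
    """Split a vectorized patch into strength c, unit-norm structure s and mean l."""
    l = x.mean()
    resid = x - l
    c = np.linalg.norm(resid)
    s = resid / (c + eps)
    return c, s, l

def fuse_patches(patches, p=4.0, sigma_l=0.5, eps=1e-12):
    """Fuse co-located patches from K exposures (simplified SPD-MEF-style rules)."""
    parts = [decompose_patch(np.asarray(x, dtype=float).ravel()) for x in patches]
    cs = np.array([c for c, _, _ in parts])
    ss = np.stack([s for _, s, _ in parts])
    ls = np.array([l for _, _, l in parts])
    c_hat = cs.max()                                           # keep the strongest contrast
    w_s = cs ** p                                              # stronger patches dominate structure
    s_bar = (w_s[:, None] * ss).sum(axis=0) / (w_s.sum() + eps)
    s_hat = s_bar / (np.linalg.norm(s_bar) + eps)              # renormalize to unit length
    w_l = np.exp(-((ls - 0.5) ** 2) / (2 * sigma_l ** 2))      # prefer mid-grey means
    l_hat = (w_l * ls).sum() / (w_l.sum() + eps)
    return (c_hat * s_hat + l_hat).reshape(np.shape(patches[0]))
```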

Compared with pixel-based MEF methods, the main advantage of patch-based approaches is that their weight maps are less noisy, because they incorporate the neighborhood information of each pixel and are therefore more robust to noise. However, since a patch may span different objects, edge details are hard to preserve, leading to edge blurring and halos, especially along edges with sharp changes in brightness.

2.1.3. Optimization-Based Methods

Several other MEF approaches are built on an optimization framework, in which the weight maps are estimated by optimizing an energy function. Shen [35] proposed a general random walk framework that considers neighborhood information through a probabilistic model and global optimization. Fusion was converted into a probabilistic estimate of the globally optimal solution, which reduced computational complexity. However, since the method ultimately fused pixels by weighted averaging, it may degrade image details. In [2], the optimal fusion weights were obtained from color saturation and local contrast by maximum a posteriori estimation in a hierarchical multivariate Gaussian conditional random field. Li [36] performed MEF with fine detail enhancement, extracting details from the input images through quadratic optimization to improve the overall quality of the fused image. Song [37] approximated the ideal luminance image by a maximum-contrast image using gradient constraints under the maximum a posteriori framework. This scheme integrated the gradient information of the input images and increased the detail in the fused image. In [38], an underexposed image enhancement method was proposed in which the optimal weights were obtained from an energy function designed to retain details and boost edges. Ma [39] obtained the fused image by globally optimizing the color MEF structural similarity (MEF-SSIMc) index, operating directly on all input images: gradient ascent iteratively updated the image to increase MEF-SSIMc until convergence. This optimization framework can easily be extended when MEF quality models with better objective performance become available. Using only global optimization may lead to local overexposure or underexposure in the fused image, while using only local optimization may degrade its overall quality. Therefore, Qi [40] combined a prior exposure quality measure with a structural consistency test to improve the robustness of MEF, optimizing both the global and local quality of the fused image through exposure quality evaluation and patch structure decomposition.
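The optimization view can be illustrated with a toy quadratic energy that trades fidelity to the weighted inputs against smoothness of the result. This energy is an assumption chosen because it has a simple fixed-point update; it is not the objective of any method cited above. Setting λ = 0 recovers the plain weighted average.

```python
import numpy as np

def optimize_fusion(images, weights, lam=0.1, iters=200):
    """Minimize E(F) = sum_k sum_p w_k(p) (F(p) - I_k(p))^2 + lam * sum_edges (F(p) - F(q))^2.

    Setting dE/dF = 0 gives (sum_k w_k) F - lam * Laplacian(F) = sum_k w_k I_k, solved here
    with Jacobi iterations; edge padding yields Neumann (zero-flux) boundaries.
    Weights must be strictly positive so that sum_k w_k > 0 everywhere.
    """
    I = np.stack(images).astype(float)
    W = np.stack(weights).astype(float)
    data = (W * I).sum(axis=0)          # sum_k w_k I_k
    wsum = W.sum(axis=0)                # sum_k w_k
    F = data / wsum                     # start from the plain weighted average
    for _ in range(iters):
        P = np.pad(F, 1, mode="edge")
        neigh = P[:-2, 1:-1] + P[2:, 1:-1] + P[1:-1, :-2] + P[1:-1, 2:]
        F = (data + lam * neigh) / (wsum + 4 * lam)
    return F
```

Larger λ yields smoother results; real methods replace both terms with perceptually motivated measures, which is where their cost and indicator dependence come from.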

The main advantage of optimization-based methods is their generality: they can flexibly switch to a better optimization indicator when one becomes available. However, this is also their major disadvantage, since a single indicator may not be sufficient to obtain a high-quality fused image, so their performance depends heavily on the chosen indicator. Unfortunately, no indicator fully captures fused image quality. These methods are prone to severe artifacts such as ringing, loss of detail, and color distortion, which lead to poor fusion results. In addition, they are computationally intensive and cannot meet real-time requirements.

2.2. Transform Domain Methods

Transform domain MEF methods can be mainly classified into multi-scale decomposition-based methods, gradient domain-based methods, sparse representation-based methods, and other transform-based methods.

2.2.1. Multi-Scale Decomposition-Based Methods

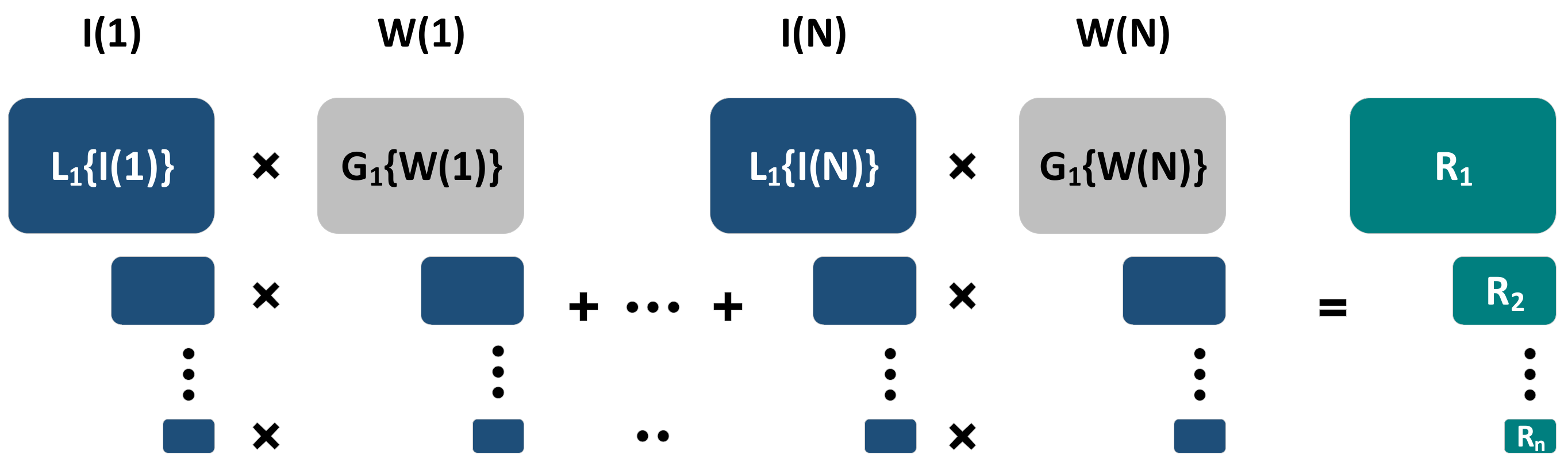

Burt [10] was one of the first to study MEF and proposed a gradient pyramid model based on directional filtering. Mertens [41] proposed a multi-scale fusion framework, shown in Figure 4, that decomposed all input images with a Laplacian pyramid. The framework computed weight maps from contrast, color saturation, and well-exposedness, normalized them, and smoothed them with a Gaussian pyramid. The Gaussian pyramid of each weight map was then multiplied by the Laplacian pyramid of the corresponding input image, and the products were summed level by level to obtain the fusion result. This approach recovers image brightness well but cannot restore details in severely overexposed regions. Many subsequent studies built on this framework to further improve fusion performance.
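The pyramid framework of Figure 4 can be sketched in NumPy. This is a simplified, grayscale-only sketch: it drops the saturation term (which needs color), and the 5-tap binomial filter and pixel-replication upsampling are assumptions of this example rather than details from [41]. The contrast weight is the absolute Laplacian, and the well-exposedness weight uses σ = 0.2.

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0   # 5-tap binomial low-pass

def blur(img):
    """Separable binomial blur with edge replication."""
    p = np.pad(img, 2, mode="edge")
    tmp = sum(KERNEL[i] * p[:, i:i + img.shape[1]] for i in range(5))   # horizontal pass
    return sum(KERNEL[i] * tmp[i:i + img.shape[0], :] for i in range(5))  # vertical pass

def down(img):
    return blur(img)[::2, ::2]

def up(img, shape):
    big = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)[:shape[0], :shape[1]]
    return blur(big)

def gaussian_pyramid(img, levels):
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(down(pyr[-1]))
    return pyr

def laplacian_pyramid(img, levels):
    g = gaussian_pyramid(img, levels)
    return [g[i] - up(g[i + 1], g[i].shape) for i in range(levels - 1)] + [g[-1]]

def collapse(pyr):
    img = pyr[-1]
    for lev in reversed(pyr[:-1]):
        img = lev + up(img, lev.shape)
    return img

def mertens_weights(img, sigma=0.2):
    """Contrast (|Laplacian|) times well-exposedness; saturation is omitted (grayscale)."""
    p = np.pad(img, 1, mode="edge")
    contrast = np.abs(p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4 * img)
    exposedness = np.exp(-((img - 0.5) ** 2) / (2 * sigma ** 2))
    return contrast * exposedness + 1e-12

def pyramid_fusion(images, levels=4):
    imgs = [np.asarray(im, dtype=float) for im in images]
    w = np.stack([mertens_weights(im) for im in imgs])
    w /= w.sum(axis=0, keepdims=True)                      # per-pixel normalization
    fused = None
    for img, wk in zip(imgs, w):
        lp = laplacian_pyramid(img, levels)                # L{I(k)}
        gw = gaussian_pyramid(wk, levels)                  # G{W(k)}
        contrib = [l * g for l, g in zip(lp, gw)]
        fused = contrib if fused is None else [f + c for f, c in zip(fused, contrib)]
    return np.clip(collapse(fused), 0.0, 1.0)              # R1..Rn collapsed to one image
```

Because decomposition and collapse use the same `up` operator, the Laplacian pyramid reconstructs its input exactly. OpenCV's photo module provides a production implementation of Mertens exposure fusion (`cv2.createMergeMertens`).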

Figure 4. The general schematic of the pyramid fusion framework. I(1)–I(N) denote the N input images; W(1)–W(N) are the N weight maps; L{▪} and G{▪} denote the Laplacian pyramid and the Gaussian pyramid, respectively; R1–Rn are the levels of the Laplacian pyramid of the fusion result.

Li [42] presented a two-scale MEF method that first decomposed the input images into base and detail layers and then computed the weight maps using a saliency measure. The weight maps were refined with a guided filter. The texture information of the input images was retained, but halo artifacts remained. In [43], an MEF method based on mixed weights and an improved Laplacian pyramid was introduced to enhance the detail and color of the fused image. Singh [44] proposed a fusion method based on a multi-scale guided filter to obtain detail-enhanced fused images. It combined the advantages of multi-scale and guided filter methods and can also be extended to multi-focus image fusion. Nejati [45] designed a fast MEF approach in which a guided filter decomposed the input images into base and detail layers. To obtain the fused image, the base and detail layers were combined using blending weights computed by an exposure function of the luminance components of the input images. LZG [46] merged LDR images with different exposure levels by using a weighted guided filter to smooth the Gaussian pyramid of the weight maps, and designed a detail-extraction component to manipulate the details in the fused image according to user preference. Yan [47] proposed a simulated exposure model for white balance and image gradient processing, which integrated input images taken under different exposure conditions into a fused image using a linear fusion framework based on the Laplacian pyramid. Wang [48] presented a multi-scale MEF algorithm in the YUV color space instead of RGB and designed a new weight-smoothing pyramid for the YUV space. A vector field construction algorithm was introduced to maintain the details of the brightest and darkest areas in HDR scenes and to avoid color distortion in the fused image.

Edge-preserving smoothing is also often used to improve multi-scale MEF algorithms. Kou [49] proposed a multi-scale MEF method that introduced an edge-preserving smoothing pyramid to smooth the weight maps. Owing to the edge-preserving characteristics of the filter, details in the brightest and darkest regions of the fused image were well preserved. Following [49], Yang [50] introduced a multi-scale MEF algorithm that first generated a virtual image with medium exposure from the input images and then applied the method of [49] to fuse this virtual image and obtain the final result. Qu [51] proposed an improved Laplacian pyramid fusion framework to achieve detail-enhanced fused images. In addition, appropriate fusion weights are not easy to determine. To overcome this difficulty, Lin [52] presented a coarse-to-fine adaptive search strategy that used fuzzy logic and a multivariate normal conditional random field to search for the optimal weights for multi-scale fusion.
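A two-scale decomposition with guided-filter weight refinement, in the spirit of [42], can be sketched as follows. The guided filter follows the standard local linear model of He et al.; the winner-take-all saliency mask, the box-filtered |Laplacian| saliency, and the parameter values (31×31 base smoothing; r = 45, ε = 0.3 for base weights; r = 7, ε = 10⁻⁶ for detail weights) are assumptions for illustration, not values taken from [42].

```python
import numpy as np

def box(img, r):
    """Mean over a (2r+1) x (2r+1) window (edge replication) via an integral image."""
    k = 2 * r + 1
    p = np.pad(img, r, mode="edge")
    c = np.pad(np.cumsum(np.cumsum(p, axis=0), axis=1), ((1, 0), (1, 0)))
    return (c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]) / (k * k)

def guided_filter(guide, src, r, eps):
    """Grey-guide guided filter: fit src ~ a * guide + b in each window, average a and b."""
    m_i, m_p = box(guide, r), box(src, r)
    cov_ip = box(guide * src, r) - m_i * m_p
    var_i = box(guide * guide, r) - m_i * m_i
    a = cov_ip / (var_i + eps)
    b = m_p - a * m_i
    return box(a, r) * guide + box(b, r)

def two_scale_guided_fusion(images, r_base=15, r1=45, eps1=0.3, r2=7, eps2=1e-6):
    imgs = np.stack([np.asarray(im, dtype=float) for im in images])
    base = np.stack([box(im, r_base) for im in imgs])      # base layers: heavy smoothing
    detail = imgs - base                                    # detail layers: residuals
    sal = []
    for im in imgs:                                         # saliency: smoothed |Laplacian|
        p = np.pad(im, 1, mode="edge")
        lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4 * im
        sal.append(box(np.abs(lap), 5))
    sal = np.stack(sal)
    mask = (sal == sal.max(axis=0, keepdims=True)).astype(float)   # winner-take-all

    def refine(r, eps):
        # Guided filtering aligns the binary masks with the edges of each source image.
        w = np.stack([guided_filter(im, m, r, eps) for im, m in zip(imgs, mask)])
        w = np.clip(w, 0.0, None) + 1e-12
        return w / w.sum(axis=0, keepdims=True)

    w_base, w_detail = refine(r1, eps1), refine(r2, eps2)
    return (w_base * base).sum(axis=0) + (w_detail * detail).sum(axis=0)
```

The large radius smooths base-layer weights to avoid seams, while the small radius keeps detail-layer weights sharp; the residual halos noted for [42] stem from exactly this trade-off.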

2.2.2. Gradient-Based Methods

This kind of method is inspired by the physiological characteristics of the human visual system, which is very sensitive to changes in illumination. These methods obtain the gradient information of the source images and then compose the fused image in the gradient domain. Gu [53] presented an MEF method in the gradient field based on a Riemannian geometric measure, in which the gradient of each pixel was generated by maximizing the structure tensor. The final fused image was obtained with a Poisson solver. The average gradient of the fused image was high, and the method preserved details well. However, color was hardly processed, so the fused images were dark and their colors unnatural. Zhang [54] proposed a gradient-based MEF method applicable to both static and dynamic scenes. Guided by a gradient-based quality evaluation, it generated an image resembling a tone-mapped high dynamic range image through seamless synthesis. Similarly, [55] used a gradient-based method to maintain image saliency by computing the saliency gradient of each color channel. Acquiring the gradient is also a critical issue for calculating image contrast; in general, the gradient amplitude of the fused image was determined by the corresponding eigenvector of the matrix. In [56], several improvements were proposed to optimize the weighted sum of gradient amplitudes, with a wavelet filter decomposing the image luminance to obtain the corresponding decomposition coefficients. Paul [57] designed a gradient domain MEF approach that first converted the input images into the YCbCr color space and then fused the Y channel in the gradient domain, while the chrominance channels (Cb and Cr) were fused by a weighted sum. Specifically, the gradient in each orientation was chosen by maximum-amplitude selection, and the luminance was reconstructed from these gradients using the Haar wavelet. In [58], the authors first calculated three weights for the input images according to local contrast, brightness, and spatial structure, and combined them using a multi-scale Laplacian pyramid. The dense scale-invariant feature transform (dense SIFT) was used to compute the local contrast around each pixel position and measure the weight maps. The luminance was computed in the gradient domain to obtain more visual information.
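Gradient domain fusion with per-orientation maximum-amplitude selection, as described for [57], can be sketched on a single luminance channel. Note that this sketch swaps in a Poisson reconstruction solved by conjugate gradients instead of the Haar wavelet reconstruction used in [57], and it fixes the unknown additive constant by matching the mean intensity of the inputs, which is an assumption.

```python
import numpy as np

def grad(f):
    """Forward differences; the last column/row difference is zero (Neumann boundary)."""
    gx = np.zeros_like(f)
    gy = np.zeros_like(f)
    gx[:, :-1] = f[:, 1:] - f[:, :-1]
    gy[:-1, :] = f[1:, :] - f[:-1, :]
    return gx, gy

def div(gx, gy):
    """Negative adjoint of grad (backward differences); assumes gx[:, -1] = gy[-1, :] = 0."""
    dx = gx.copy()
    dy = gy.copy()
    dx[:, 1:] -= gx[:, :-1]
    dy[1:, :] -= gy[:-1, :]
    return dx + dy

def poisson_reconstruct(gx, gy, mean, iters=5000, tol=1e-10):
    """Solve -div(grad F) = -div(g) by conjugate gradients, then set the mean of F."""
    def apply_a(f):
        return -div(*grad(f))          # symmetric positive semi-definite operator
    b = -div(gx, gy)
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    rs = np.sum(r * r)
    for _ in range(iters):
        if np.sqrt(rs) < tol:
            break
        ap = apply_a(p)
        alpha = rs / np.sum(p * ap)
        x += alpha * p
        r -= alpha * ap
        rs_new = np.sum(r * r)
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x - x.mean() + mean         # gradients fix F only up to a constant

def gradient_domain_fusion(images):
    imgs = np.stack([np.asarray(im, dtype=float) for im in images])
    grads = [grad(im) for im in imgs]
    gx = np.stack([g[0] for g in grads])
    gy = np.stack([g[1] for g in grads])
    # Per orientation, keep the gradient with the largest amplitude across exposures.
    fx = np.take_along_axis(gx, np.argmax(np.abs(gx), axis=0)[None], axis=0)[0]
    fy = np.take_along_axis(gy, np.argmax(np.abs(gy), axis=0)[None], axis=0)[0]
    return poisson_reconstruct(fx, fy, mean=imgs.mean())
```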

2.2.3. Sparse Representation-Based Methods

Sparse representation (SR)-based approaches describe the input signal as a linear combination of atoms from an over-complete dictionary. The error between the reconstructed signal and the input signal is minimized using as few non-zero coefficients as possible, which gives a more concise representation of the signal and easier access to its details [59]. In the past decade, SR-based MEF methods have rapidly become an essential branch of image fusion. In [60], a dictionary learned by K-SVD was used to represent overlapping patches of the image luminance extracted with the “sliding window” technique, and the fused image was reconstructed from the sparse coefficients and the dictionary. Shao [61] proposed a local gradient sparse descriptor to capture the local details of the input images; the extracted features were used to remove halo artifacts where the brightness of the source images changes sharply. Yang [62] designed a sparse exposure dictionary for exposure estimation based on sparse decomposition, which was used to construct exposure estimation maps according to the number of atoms used by each image patch in the sparse decomposition.
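A minimal sparse-representation fusion can be sketched with orthogonal matching pursuit (OMP). To stay self-contained, this sketch assumes a fixed overcomplete DCT dictionary instead of a K-SVD-trained one, non-overlapping 8×8 patches instead of a sliding window, and the common "max-L1" activity rule that keeps the code with the largest sum of absolute coefficients; none of these choices is claimed to match [60].

```python
import numpy as np

def overcomplete_dct(n=8, m=16):
    """Separable overcomplete DCT dictionary: (n*n)-pixel atoms, m*m unit-norm atoms."""
    d1 = np.cos(np.pi * np.outer(np.arange(n), np.arange(m)) / m)
    d1 /= np.linalg.norm(d1, axis=0)
    return np.kron(d1, d1)                        # shape (n*n, m*m)

def omp(D, x, sparsity, tol=1e-10):
    """Orthogonal matching pursuit: greedy sparse code of x over the unit-norm atoms in D."""
    idx, coef, resid = [], np.zeros(D.shape[1]), x.copy()
    sol = np.zeros(0)
    for _ in range(sparsity):
        if np.linalg.norm(resid) < tol:
            break
        idx.append(int(np.argmax(np.abs(D.T @ resid))))   # atom most correlated with residual
        sol, *_ = np.linalg.lstsq(D[:, idx], x, rcond=None)
        resid = x - D[:, idx] @ sol                         # residual orthogonal to chosen atoms
    coef[idx] = sol
    return coef

def sparse_fusion(images, patch=8, sparsity=8):
    """Fuse non-overlapping patches by keeping the sparse code with the largest L1 norm."""
    imgs = [np.asarray(im, dtype=float) for im in images]
    h, w = imgs[0].shape
    assert h % patch == 0 and w % patch == 0, "sketch assumes sizes divisible by patch"
    D = overcomplete_dct(patch, 2 * patch)
    out = np.zeros((h, w))
    for i in range(0, h, patch):
        for j in range(0, w, patch):
            codes = [omp(D, im[i:i + patch, j:j + patch].ravel(), sparsity) for im in imgs]
            best = max(codes, key=lambda c: np.abs(c).sum())   # "max-L1" activity rule
            out[i:i + patch, j:j + patch] = (D @ best).reshape(patch, patch)
    return out
```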

2.2.4. Other Transform-Based Methods

In addition to the above methods, the discrete cosine transform (DCT) and the wavelet transform have also been successfully applied to MEF. Lee [63] proposed a DCT-based HDR enhancement method that fused an overexposed and an underexposed image. The algorithm used the quantization process of JPEG coding as a metric for improving image quality, so that the fusion process can be included in the DCT-based compression baseline. They also proposed a Gaussian error function based on camera characteristics to improve global image brightness. Martorell [64] constructed an MEF method based on the sliding-window DCT, which used the YCbCr transform to compute the luminance and chrominance components of the image. Specifically, this technique decomposed the input images into multiple patches and computed the DCT of these patches. Patch coefficients at the same position in the differently exposed input images were combined according to their magnitudes, while the chrominance values were fused separately as a pixel-level weighted average. In [65], the input images were converted into the YUV space, and the color difference components U and V were fused according to a saturation weight. The luminance component Y was transformed into the wavelet domain, and the approximation sub-band and detail sub-bands were fused using a well-exposedness weight and an adjustable contrast weight, respectively. The final result was obtained by converting the fused image back into RGB space.
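A simplified block-DCT fusion illustrates combining coefficients by magnitude. Unlike the sliding-window scheme of [64], this sketch assumes non-overlapping 8×8 blocks of a single (luminance) channel, keeps the largest-magnitude coefficient across exposures, and averages the DC coefficient to preserve overall brightness; these rules are illustrative assumptions.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that the 2-D DCT of a block X is C @ X @ C.T."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c

def dct_block_fusion(images, block=8):
    imgs = [np.asarray(im, dtype=float) for im in images]
    h, w = imgs[0].shape
    assert h % block == 0 and w % block == 0, "sketch assumes sizes divisible by block"
    C = dct_matrix(block)
    out = np.zeros((h, w))
    for i in range(0, h, block):
        for j in range(0, w, block):
            coeffs = np.stack([C @ im[i:i + block, j:j + block] @ C.T for im in imgs])
            idx = np.argmax(np.abs(coeffs), axis=0)[None]
            fused = np.take_along_axis(coeffs, idx, axis=0)[0]   # largest-magnitude coefficient
            fused[0, 0] = coeffs[:, 0, 0].mean()                 # average DC term (brightness)
            out[i:i + block, j:j + block] = C.T @ fused @ C      # inverse 2-D DCT
    return out
```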

2.3. Deep Learning Methods

In recent years, deep learning has achieved significant success in computer vision and image processing applications [66][67], and a growing number of deep learning-based MEF methods have been proposed to improve fusion performance [68][69][70][71].

2.3.1. Supervised Methods

Supervised MEF algorithms require a large number of multi-exposure images with ground truth for training. This requirement is difficult to meet because ground truth is generally unavailable in MEF, so researchers have had to find effective ways to create it. CNNs are known to be effective at learning local patterns and capturing meaningful semantic information, and they are efficient compared with other networks [72][73]. In 2017, Kalantari [74] first introduced a supervised CNN framework for MEF research. In their work, the ground truth dataset was generated by combining three static images with different exposure levels. The three input images were converted into an approximately static scene using optical flow, and a CNN was then used to obtain fusion weights and fuse the aligned images. Their contributions were: (1) presenting the first study on deep learning-based MEF; (2) discussing and comparing the fusion performance of three CNN architectures; and (3) creating a dataset suitable for MEF. Since then, many deep learning-based MEF algorithms have been proposed. In 2018, Wang [75] proposed a supervised CNN-based framework for MEF. Its main innovation was using the CNN model to obtain multiple sub-images of the input images, so that more neighborhood information could be used in the convolution operations. This work modified the pixel intensities of images in the ILSVRC 2012 validation dataset [76] to generate ground truth images. However, ground truth images generated in this way may not be realistic.
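Creating training data by altering pixel intensities, as in [75], can be illustrated by synthesizing an exposure stack from one well-exposed image. The exact procedure of [75] is not described here, so this sketch assumes a simple power-law camera response (γ = 2.2), exposure shifts of −2, 0, and +2 EV, and hard clipping for saturation; the function name is hypothetical.

```python
import numpy as np

def simulate_exposures(img, evs=(-2.0, 0.0, 2.0), gamma=2.2):
    """Synthesize a multi-exposure stack from one well-exposed image in [0, 1].

    Assumes a power-law camera response: linearize, scale by 2**EV, clip (saturation),
    then re-encode. Real sensors add noise and nonlinear response curves.
    """
    linear = np.clip(np.asarray(img, dtype=float), 0.0, 1.0) ** gamma
    stack = []
    for ev in evs:
        exposed = np.clip(linear * 2.0 ** ev, 0.0, 1.0)   # under/over-expose, then clip
        stack.append(exposed ** (1.0 / gamma))
    return stack   # network input; the original image serves as the ground truth
```

The limitation noted above applies: such synthetic stacks lack realistic noise, clipping behavior, and motion, so the resulting ground truth may not reflect real captures.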

The second way to address the lack of ground truth is to use models pre-trained for other purposes. Li [77] extracted features from the input images using a network pre-trained for another task and computed local consistency from these features to determine the weights. In addition, because it incorporates motion detection, this method can be used in both static and dynamic scenes. Similar work was also presented in [78].

The third way is to select fusion results from existing methods as the ground truth. Cai [79] used 13 representative MEF techniques to generate 13 fused images from each sequence. Through subjective experiments, they then selected the image with the best visual quality as the ground truth. They provided a dataset of 589 multi-exposure image sequences containing 4413 images. The whole process required considerable manual intervention, so the number of training sequences was limited, which may hinder the generalization ability of the fusion network. Liu [80] proposed a CNN-based network for both decolorization and MEF. To obtain satisfactory qualitative and quantitative fusion results, local gradient information computed from the input images with different exposure levels was used as the network's input. The network worked on source sequences with three exposure levels and treated each exposure level as one input channel. In [81], a dual-network cascade model was constructed, consisting of an exposure prediction network and an exposure fusion network. The former recovered the details lost in underexposed or overexposed regions, and the latter performed fusion enhancement. The cascade model used a three-stage training strategy to reduce training complexity. However, the down-sampling operation in this model may cause checkerboard artifacts in the fused image. The authors alleviated this problem by applying a loss function constructed with the structural anisotropy index.
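Using local gradient information as the network input, as described for [80], can be sketched as below. The sketch assumes single-channel images and computes the gradient magnitude with simple forward differences and replicated borders. The exact gradient operator used in [80] may differ. The three resulting maps are stacked so that each exposure level becomes one input channel.

```python
import math


def gradient_magnitude(image):
    """Forward-difference gradient magnitude with replicated right/bottom borders."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = image[y][min(x + 1, w - 1)] - image[y][x]
            gy = image[min(y + 1, h - 1)][x] - image[y][x]
            out[y][x] = math.hypot(gx, gy)
    return out


def stack_gradient_channels(exposures):
    """Stack per-exposure gradient maps into an H x W x K nested list."""
    grads = [gradient_magnitude(img) for img in exposures]
    h, w = len(grads[0]), len(grads[0][0])
    return [[[g[y][x] for g in grads] for x in range(w)] for y in range(h)]
```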

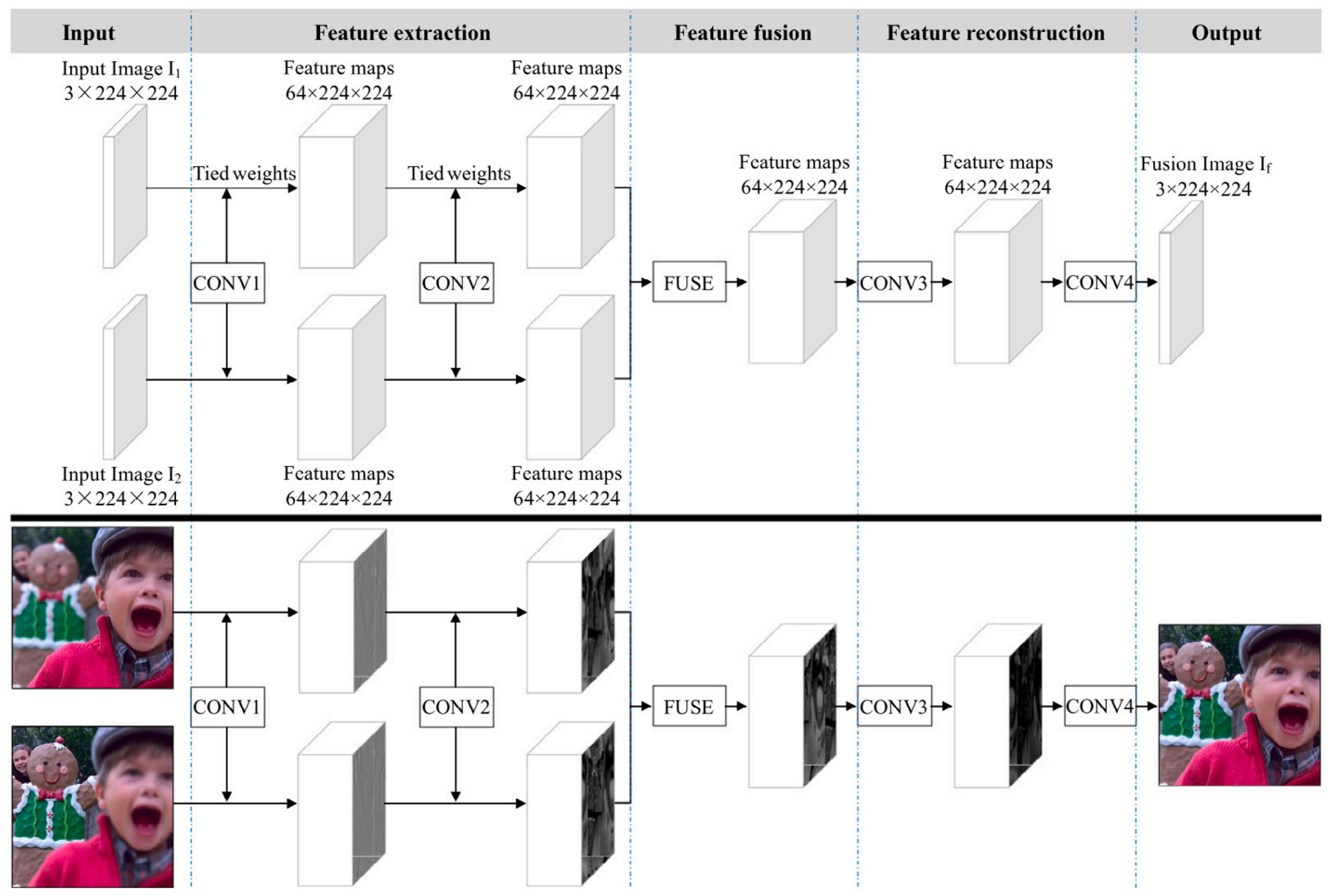

The above supervised methods are explicitly designed for MEF. Several other methods based on supervised deep learning were built for a range of image fusion tasks, including MEF. Zhang [82] proposed IFCNN, an end-to-end fully convolutional approach with a Siamese architecture, as shown in Figure 5. Two branches extracted convolutional features from the input images, and these features were fused with an element-wise mean fusion rule (different fusion tasks used different rules). IFCNN was optimized with a perceptual loss plus a basic loss that measured the intensity difference between the fused image and the ground truth. IFCNN can fuse images of arbitrary resolution. However, its performance on MEF may be limited because it was trained only on a multi-focus image dataset. In [83], a general cross-modal image fusion network was presented that explores the commonalities and characteristics of different fusion tasks. The impact of different network structures on fusion quality and efficiency was analyzed, and the dataset constructed by Cai [79] was used for the MEF task. However, these models were not explicitly designed for MEF, and IFCNN was not trained on multi-exposure images, so their performance may not be satisfactory in some cases.

Figure 5. The network architecture of IFCNN.
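The element-wise fusion rules used in IFCNN-style Siamese networks can be sketched on plain feature maps. The sketch assumes that the branches share weights and output feature maps of identical shape, represented as nested lists indexed by channel, row, and column. It shows the element-wise mean described above, alongside an element-wise maximum and sum as common alternatives. Which rule suits which task is an assumption here.

```python
def fuse_features(branch_features, rule="mean"):
    """Fuse same-shaped feature maps from parallel (weight-shared) branches.

    branch_features[k][c][y][x] is channel c of branch k. The chosen rule is
    applied independently at every channel and spatial position.
    """
    ops = {
        "mean": lambda vals: sum(vals) / len(vals),
        "max": max,
        "sum": sum,
    }
    if rule not in ops:
        raise ValueError(f"unknown fusion rule: {rule}")
    op = ops[rule]
    first = branch_features[0]
    return [
        [
            [op([b[c][y][x] for b in branch_features]) for x in range(len(first[c][y]))]
            for y in range(len(first[c]))
        ]
        for c in range(len(first))
    ]
```

Because the rule is applied per element, the fused feature map keeps the input shape regardless of spatial size. This is consistent with a fully convolutional design that accepts arbitrary resolutions.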

The methods above do one of three things. They create ground truth images by adjusting the brightness of normally exposed images, use models pre-trained for other tasks, or select the subjectively best results of existing fusion methods as ground truth. However, none of these approaches fully resolves the lack of real ground truth images. In particular, ground truth images selected from the fusion results of other methods are not captured by optical cameras and may not be accurate or appropriate. To address these problems, some studies have constructed unsupervised MEF architectures.

2.3.2. Unsupervised Methods

Since real ground truth images are generally unavailable, some studies have turned to unsupervised deep learning, which removes the need for ground truth during training. This section describes the relevant unsupervised MEF methods.

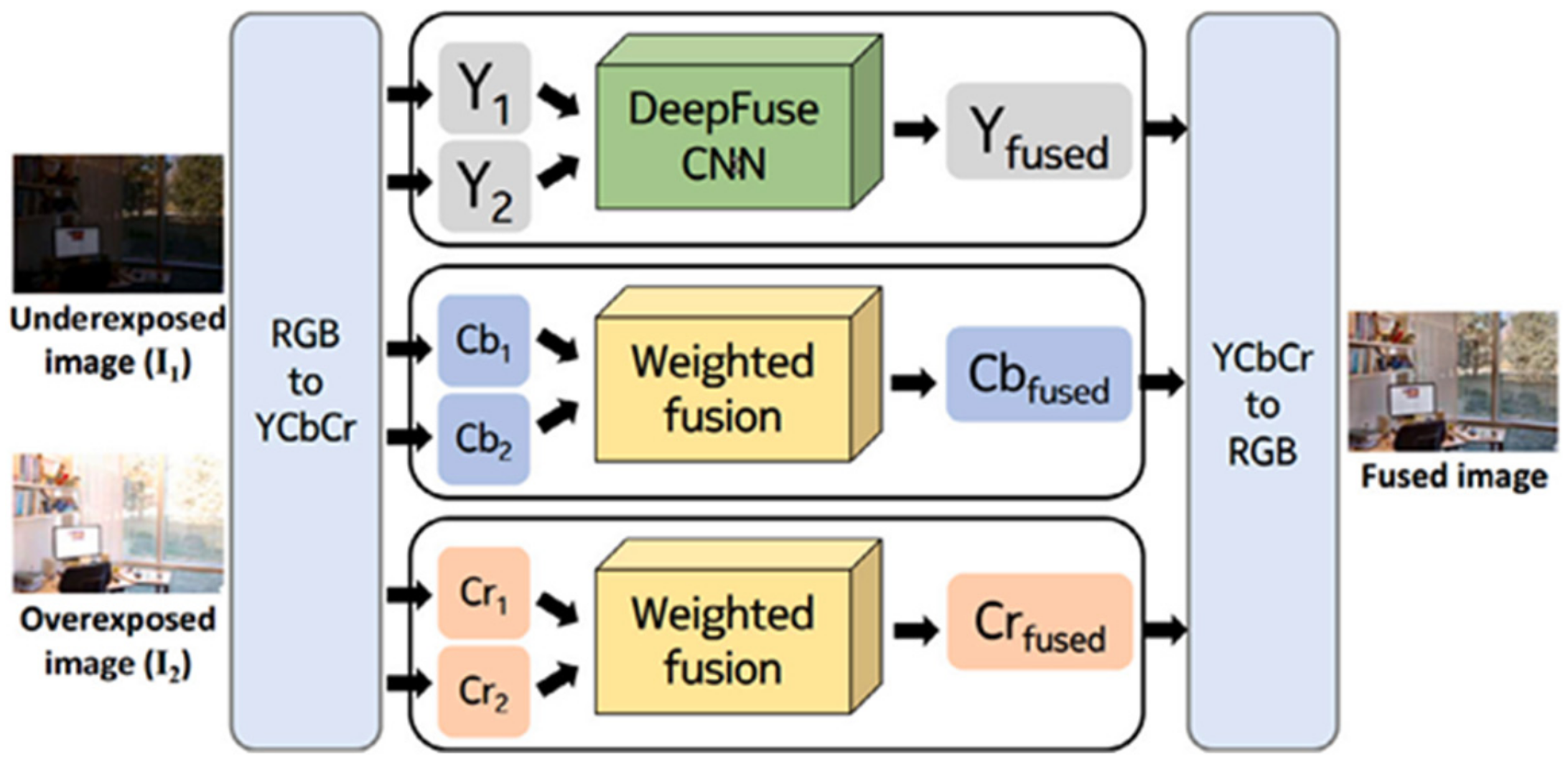

In 2017, Prabhakar [84] built the first unsupervised MEF architecture for fusing image pairs, named DeepFuse, as shown in Figure 6. This method first converted the input images into the YCbCr color space. A CNN composed of feature extraction layers, a fusion layer, and reconstruction layers was then applied to the Y channel, while the chrominance channels (Cb and Cr) were still fused with a hand-crafted weighting rule. Finally, the fused YCbCr data were converted back into RGB space to obtain the final fused image. To learn without ground truth, DeepFuse used the fusion quality metric MEF-SSIM [85] as its loss function. Its main advantage is that fusing the luminance with a CNN lets it extract effective features and makes it more robust to different inputs. Furthermore, as an unsupervised method, it does not need ground truth for training. However, it requires color space conversion, which is less convenient than fusing RGB images directly. In addition, using MEF-SSIM alone as the loss function may not capture other critical information that MEF-SSIM does not measure.

Figure 6. The network architecture of DeepFuse.
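The color handling around a luminance-fusion network like DeepFuse can be sketched per pixel. The conversion below is the full-range ITU-R BT.601 (JPEG/JFIF) transform for 8-bit values. The chroma rule weights each Cb or Cr value by its distance from the neutral value τ = 128, so more saturated inputs dominate. As we understand it, DeepFuse uses a rule of this form, but treat the exact formula as an assumption. The learned Y-channel fusion is replaced by a plain average, which is only a placeholder.

```python
TAU = 128.0  # neutral chroma value for 8-bit full-range YCbCr


def rgb_to_ycbcr(r, g, b):
    """Full-range ITU-R BT.601 (JPEG/JFIF) conversion for 8-bit values."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr."""
    r = y + 1.402 * (cr - 128.0)
    g = y - 0.344136 * (cb - 128.0) - 0.714136 * (cr - 128.0)
    b = y + 1.772 * (cb - 128.0)
    return r, g, b


def fuse_chroma(c1, c2, tau=TAU):
    """Weight each chroma value by its distance from neutral gray."""
    w1, w2 = abs(c1 - tau), abs(c2 - tau)
    if w1 + w2 == 0.0:
        return tau  # both inputs are exactly neutral
    return (c1 * w1 + c2 * w2) / (w1 + w2)


def fuse_pixel_pair(rgb1, rgb2, fuse_luma=lambda y1, y2: 0.5 * (y1 + y2)):
    """Fuse one pixel from two exposures in YCbCr and return RGB.

    `fuse_luma` stands in for the learned Y-channel fusion; the default
    plain average is only a placeholder, not DeepFuse's network.
    """
    y1, cb1, cr1 = rgb_to_ycbcr(*rgb1)
    y2, cb2, cr2 = rgb_to_ycbcr(*rgb2)
    y = fuse_luma(y1, y2)
    return ycbcr_to_rgb(y, fuse_chroma(cb1, cb2), fuse_chroma(cr1, cr2))
```

The two conversions are the extra steps that the text contrasts with fusing RGB images directly.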

Ma [86] presented MEFNet, a flexible and fast network for MEF that also works in the YCbCr color space. First, the input images were downsampled and fed to a context aggregation network that generated learned weight maps. These maps were then jointly upsampled to full resolution using a guided filter, and the upsampled weight maps were used for a weighted summation of the input images. Specifically, the context aggregation network was trained to fuse the Y channel, while Cb and Cr were fused with a simple weighted summation. The final fused image was obtained by converting back to RGB space. Flexibility and speed are the main advantages of MEFNet. As a fully convolutional network, it can fuse input images of arbitrary spatial resolution, and fusion is efficient because the main computation is carried out at a fixed low resolution. However, because MEF-SSIM was its only loss function, MEFNet shares the limitation of DeepFuse.
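The final stage of this pipeline, in which low-resolution weight maps are upsampled and used for a weighted summation, can be sketched as below. The low-resolution weight maps are assumed to come from some network and are hypothetical inputs here. For brevity, the guided-filter joint upsampling of MEFNet is replaced by nearest-neighbour upsampling by an integer factor, which is a deliberate simplification. Normalizing the weights to sum to one at each pixel is also an assumed convention.

```python
def upsample_nearest(weight_map, factor):
    """Nearest-neighbour upsampling by an integer factor (stand-in for guided filtering)."""
    return [[v for v in row for _ in range(factor)]
            for row in weight_map for _ in range(factor)]


def fuse_with_lowres_weights(images, lowres_weights, factor, eps=1e-8):
    """Upsample per-exposure weight maps and compute a normalized weighted sum.

    images[k] is a full-resolution single-channel image whose size must equal
    factor times the size of lowres_weights[k]. Absolute values plus eps keep
    every weight positive so each pixel has a well-defined normalizer.
    """
    full = [upsample_nearest(w, factor) for w in lowres_weights]
    h, w = len(images[0]), len(images[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ws = [abs(full[k][y][x]) + eps for k in range(len(images))]
            total = sum(ws)
            fused[y][x] = sum(ws[k] * images[k][y][x] for k in range(len(images))) / total
    return fused
```

Only the weight prediction runs at low resolution. The per-pixel summation is cheap, which is the source of the speed advantage described above.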

Qi [87] presented the UMEF network for MEF in static scenes. It used a CNN to extract features and fused them to create the final fused image. UMEF differs from DeepFuse in three main ways. First, UMEF can fuse more than two input images, whereas DeepFuse was designed to fuse two. Second, UMEF's loss function consists of two parts, MEF-SSIMc and an unreferenced gradient loss, whereas DeepFuse used only MEF-SSIM. As a result, UMEF preserves more details in the fused images. Third, UMEF fuses color images directly using MEF-SSIMc and avoids color space conversion. In [88], an end-to-end unsupervised fusion network named U2Fusion was designed for several fusion tasks, including multi-modal, multi-exposure, and multi-focus fusion. U2Fusion extracted features with a pre-trained VGGNet-16 and fused the input images with a DenseNet. The importance of each input image was estimated automatically through feature extraction and information measurement, yielding an adaptive information preservation degree. However, this method is sensitive to the quality of the input images, and noise or distortion introduced during acquisition may be amplified in the fused result. Gao [89] improved on U2Fusion and applied the resulting MEF model to the transportation field, using adaptive optimization to improve the quality of the fused images.
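An unreferenced gradient loss compares the gradients of the fused image with those of the inputs rather than with a ground truth. The sketch below assumes one plausible form: the mean absolute difference between the fused image's gradient magnitude and the per-pixel maximum gradient magnitude over the inputs. The exact formulation in UMEF [87] may differ. Gradients use forward differences with replicated borders.

```python
import math


def gradient_magnitude(image):
    """Forward-difference gradient magnitude with replicated right/bottom borders."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = image[y][min(x + 1, w - 1)] - image[y][x]
            gy = image[min(y + 1, h - 1)][x] - image[y][x]
            out[y][x] = math.hypot(gx, gy)
    return out


def unreferenced_gradient_loss(fused, inputs):
    """Mean |grad(F) - max_k grad(I_k)| over all pixels (an assumed form)."""
    gf = gradient_magnitude(fused)
    gi = [gradient_magnitude(img) for img in inputs]
    h, w = len(fused), len(fused[0])
    total = 0.0
    for y in range(h):
        for x in range(w):
            target = max(g[y][x] for g in gi)  # strongest detail among the inputs
            total += abs(gf[y][x] - target)
    return total / (h * w)
```

A fused image that keeps the strongest edge of any input scores zero. A flat, detail-free result is penalized even though no ground truth is involved.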

Besides the CNN-based unsupervised methods, several unsupervised MEF methods based on Generative Adversarial Networks (GANs) have been proposed. Chen [90] presented an MEF network for fusing two input images. This network integrated homography estimation, an attention mechanism, and adversarial learning, applied respectively to camera motion compensation, correction of the remaining moving pixels, and artifact reduction. Xu [17] designed an end-to-end GAN-based architecture for MEF, named MEF-GAN, and used the dataset from Cai [79]. Following [17] and [90], a GAN-based MEF network named GANFuse was proposed in [91]. GANFuse differs from the GAN-based approaches above in two main ways. First, as an unsupervised network, GANFuse used an unsupervised loss function that measured the similarity between the fused image and the input images, rather than the similarity with a ground truth. Second, GANFuse consisted of one generator and two discriminators, each of which was used to distinguish between the fused image and the input images.
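Homography-based camera motion compensation, one of the components combined in [90], maps pixel coordinates through a 3 × 3 matrix in homogeneous coordinates. The sketch below only applies a given homography, using inverse mapping and nearest-neighbour sampling. How the matrix is estimated (learned in [90]) is outside its scope, and the example matrix is hypothetical.

```python
def apply_homography(h, x, y):
    """Map (x, y) through the 3x3 homography h using homogeneous coordinates."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    s = h[2][0] * x + h[2][1] * y + h[2][2]
    if s == 0:
        raise ZeroDivisionError("point maps to infinity")
    return u / s, v / s


def warp_image(image, h_inv, fill=0.0):
    """Warp `image`, where h_inv maps output coordinates to source coordinates.

    Inverse mapping avoids holes: each output pixel looks up its source pixel
    (nearest neighbour), and pixels that fall outside the source get `fill`.
    """
    rows, cols = len(image), len(image[0])
    out = [[fill] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            sx, sy = apply_homography(h_inv, x, y)
            ix, iy = round(sx), round(sy)
            if 0 <= ix < cols and 0 <= iy < rows:
                out[y][x] = image[iy][ix]
    return out
```

For instance, `h_inv = [[1, 0, -1], [0, 1, 0], [0, 0, 1]]` shifts an image one pixel to the right. The uncovered left column is filled with `fill`.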

Note that all the above unsupervised MEF networks except UMEF required color space conversion. The input images were converted into the YCbCr color space, the Y channel was fused with the deep learning model, and the Cb and Cr channels were fused by weighted summation.

2.4. HDR Deghosting Methods

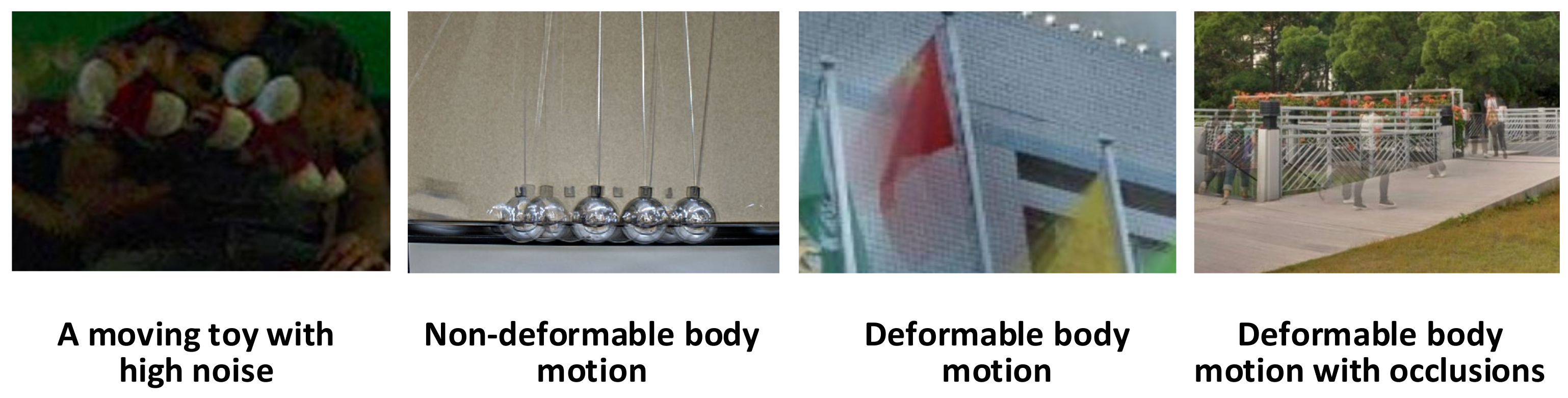

Most MEF approaches assume that the source images are perfectly aligned, an assumption that is often violated in practice because the images are captured at different times. When the scene contains moving objects, ghosting or blur artifacts often occur and degrade the quality of the fused image [92][93][94], as shown in Figure 7.

Figure 7. Examples of fused images with different types of ghost artifacts.

MEF in dynamic scenes has long been a challenge. To obtain artifact-free HDR images, many deghosting MEF methods have been proposed from different angles. They address two main questions: how to detect ghost regions and how to eliminate ghosts. Building on the preceding sections, this section analyzes MEF deghosting methods in depth, investigating, classifying, and comparing current MEF methods for dynamic scenes. MEF processing in dynamic scenes is divided into the following categories: global exposure registration, moving object removal, and moving object selection or registration.

2.4.1. Global Exposure Registration

Methods in this class aim to compensate for and eliminate the effects of camera motion by estimating the parameters of the transformation applied to each input image. They do not account for moving objects in the scene.

Cerman [95] presented an MEF approach that registers source images captured with a handheld camera to eliminate camera motion. For the initial estimate, correlation in the Fourier domain was used to estimate the image offset caused by translational camera motion. Both the translational and rotational motion between input images were then optimized locally to subpixel accuracy. Registration was applied to consecutive image pairs without selecting a reference image. Gevrekci [96] proposed a new contrast-invariant feature transform method. This method assumed that the Fourier components are in phase at corner positions, applied a local contrast stretching step to each pixel of the source images, and used phase congruency to detect corners. The source images were then registered by matching features with RANSAC. Another approach using phase congruency images was presented in [97]. It registered the phase congruency images by cross-correlation in the frequency domain instead of using them to identify key points in the spatial domain. Besides translation, rotation was also registered using log-polar coordinates, in which a rotation appears as a translation. Evolutionary programming was applied to search for the optimal transformation values and detect subpixel shifts. The method in [98] used a target frame localization method to register the input images and compensate for undesired camera motion during registration.
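Estimating a translational offset by correlation in the Fourier domain can be sketched with phase correlation. This is the idea behind the initial estimate in [95] and the frequency-domain registration in [97]. For clarity, the sketch uses a naive O(N⁴) discrete Fourier transform on tiny images and assumes a purely circular, integer-pixel shift. Practical implementations use an FFT, windowing, and subpixel refinement instead.

```python
import cmath


def dft2(a, inverse=False):
    """Naive 2-D DFT: unnormalized forward transform, 1/(rows*cols) on the inverse."""
    rows, cols = len(a), len(a[0])
    sign = 1 if inverse else -1
    out = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            acc = 0j
            for y in range(rows):
                for x in range(cols):
                    angle = sign * 2 * cmath.pi * (u * y / rows + v * x / cols)
                    acc += a[y][x] * cmath.exp(1j * angle)
            out[u][v] = acc / (rows * cols) if inverse else acc
    return out


def phase_correlation_shift(ref, moved, eps=1e-12):
    """Return (dy, dx) such that moved[y][x] == ref[y - dy][x - dx] (circularly)."""
    f_ref, f_mov = dft2(ref), dft2(moved)
    rows, cols = len(ref), len(ref[0])
    # Normalized cross-power spectrum: keep only the phase difference.
    cross = [[f_mov[u][v] * f_ref[u][v].conjugate() for v in range(cols)]
             for u in range(rows)]
    cross = [[c / (abs(c) + eps) for c in row] for row in cross]
    corr = dft2(cross, inverse=True)  # ideally a delta at the shift
    _, dy, dx = max((abs(corr[y][x]), y, x) for y in range(rows) for x in range(cols))
    # Peaks past the midpoint correspond to negative shifts.
    if dy > rows // 2:
        dy -= rows
    if dx > cols // 2:
        dx -= cols
    return dy, dx
```

Discarding the magnitude and keeping only the phase makes the correlation peak sharp and fairly insensitive to global brightness differences. This matters when the images being registered have different exposures.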

Camera motion can also be reduced by keeping the camera in a fixed position. Beyond camera motion, the more challenging problem is that moving objects may appear as ghost artifacts in the fused image. Therefore, in recent years more research has focused on removing the ghosts of moving objects from fused images.

2.4.2. Moving Object Removal

Methods of this kind remove all moving objects from the scene by estimating the static background. In practical applications, most of the scene is usually static, and only a small part of each image contains moving objects. Without selecting a reference image, most of these algorithms check the consistency of each pixel across the input images. Moving objects are modeled as outliers and eliminated to obtain an artifact-free HDR image.
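A per-pixel consistency check under this kind of outlier model can be sketched as follows. The sketch assumes linear pixel values and known exposure times, so dividing by the exposure time maps every exposure to a common radiance scale. It flags a pixel as an outlier when it deviates from the per-pixel median by more than a relative threshold. The normalization, the median, and the threshold value are all illustrative assumptions rather than the procedure of any cited method.

```python
import statistics


def outlier_masks(images, exposure_times, rel_threshold=0.2):
    """Flag pixels that disagree with the per-pixel median radiance.

    images[k][y][x] is a linear pixel value of exposure k; dividing by the
    (assumed known) exposure time gives a radiance estimate. A pixel is an
    outlier, i.e. likely part of a moving object, when its radiance differs
    from the median over all exposures by more than rel_threshold * median.
    """
    n = len(images)
    h, w = len(images[0]), len(images[0][0])
    masks = [[[False] * w for _ in range(h)] for _ in range(n)]
    for y in range(h):
        for x in range(w):
            radiance = [images[k][y][x] / exposure_times[k] for k in range(n)]
            med = statistics.median(radiance)
            for k in range(n):
                if abs(radiance[k] - med) > rel_threshold * max(med, 1e-12):
                    masks[k][y][x] = True
    return masks
```

The median works only because most exposures are assumed to agree at each pixel. This is exactly the assumption that breaks down when a moving object covers a pixel in most of the sequence.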

Khan [99] proposed an HDR deghosting approach that iteratively estimates the probability that each pixel belongs to the background and adjusts the fusion weights accordingly. Pedone [100] designed a similar iterative process that used energy minimization to increase the probabilities of pixels belonging to the static set. The final probabilities were used as the MEF weights.

Zhang [101] used gradient direction consistency to determine whether the input images contained moving objects. This method computed pixel weights from quality measures in the gradient domain rather than from absolute pixel intensities. The weight of each image was computed as the product of a consistency score and a visibility score. The consistency score assigned a larger weight to a pixel whose gradient direction agreed with the collocated pixels of the other input images, and the visibility score assigned a larger weight to a pixel with a larger gradient. However, this method may not be robust in scenes that change frequently.

Wang [102] introduced visual saliency to measure the differences between the input images. They applied bilateral motion detection to improve the accuracy of the detected moving regions and used fusion masks to avoid artifacts in the fused image, so both the ghosts of moving objects and handheld camera motion can be removed. However, the method needs more than three input images to fuse effectively. Li [103] applied an intensity mapping function and a bidirectional algorithm to correct non-conforming pixels without reference images. This method used two rounds of hybrid correction to remove ghosts from the fused image. In [51], the weight maps were calculated from luminance and chromaticity information in the YIQ color space. For dynamic scenes, this method used image differences and superpixel segmentation to refine the weight maps, and it decreased the weights of moving objects to eliminate undesirable artifacts. Finally, a fusion framework based on an improved Laplacian pyramid was used to fuse the input images and enhance details. However, the algorithm was time-consuming and did not work well when the camera jittered.
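The consistency-times-visibility weighting described for [101] can be sketched per pixel. The sketch assumes forward-difference gradients. It measures direction agreement as the mean cosine similarity between a pixel's gradient and the collocated gradients of the other exposures, mapped to [0, 1], and it uses gradient magnitude as the visibility score. These specific formulas are illustrative assumptions rather than the exact definitions in [101].

```python
import math


def gradients(image):
    """Forward differences (gx, gy) with replicated right/bottom borders."""
    h, w = len(image), len(image[0])
    gx = [[image[y][min(x + 1, w - 1)] - image[y][x] for x in range(w)] for y in range(h)]
    gy = [[image[min(y + 1, h - 1)][x] - image[y][x] for x in range(w)] for y in range(h)]
    return gx, gy


def consistency_visibility_weights(images, eps=1e-12):
    """Per-pixel, unnormalized weight = direction-consistency score * gradient magnitude."""
    grads = [gradients(img) for img in images]
    n = len(images)
    h, w = len(images[0]), len(images[0][0])
    weights = [[[0.0] * w for _ in range(h)] for _ in range(n)]
    for y in range(h):
        for x in range(w):
            vecs = [(g[0][y][x], g[1][y][x]) for g in grads]
            mags = [math.hypot(*v) for v in vecs]
            for k in range(n):
                if mags[k] < eps:
                    continue  # flat pixel: no direction, zero visibility
                cosines = [
                    (vecs[k][0] * vecs[j][0] + vecs[k][1] * vecs[j][1]) / (mags[k] * mags[j])
                    for j in range(n) if j != k and mags[j] >= eps
                ]
                # Mean cosine in [-1, 1] mapped to [0, 1]; no partner gives neutral 0.5.
                consistency = 0.5 * (1.0 + sum(cosines) / len(cosines)) if cosines else 0.5
                weights[k][y][x] = consistency * mags[k]
    return weights
```

An exposure whose gradient points against all the others, as a moving object typically produces, receives a weight of zero at that pixel. The sketch does not handle pixels where every exposure is flat, which a full method would need to treat before normalizing the weights.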

These methods rely on the so-called “majority hypothesis”, which assumes that a dominant static pattern exists in the input image sequence and that moving objects occupy only a small part of each image. A common problem with these methods is that performance may be unsatisfactory when the scene contains moving objects with large motion amplitude or when parts of the images change frequently across the sequence.

References

- Huang, L.; Li, Z.; Xu, C.; Feng, B. Multi-exposure image fusion based on feature evaluation with adaptive factor. IET Image Process. 2021, 15, 3211–3220.

- Shen, R.; Cheng, I.; Basu, A. QoE-based multi-exposure fusion in hierarchical multivariate gaussian CRF. IEEE Trans. Image Process. 2013, 22, 2469–2478.

- Aggarwal, M.; Ahuja, N. Split aperture imaging for high dynamic range. In Proceedings of the 8th IEEE International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 7–14 July 2001; pp. 10–16.

- Tumblin, J.; Agrawal, A.; Raskar, R. Why I want a gradient camera. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005; pp. 103–110.

- Li, S.; Kang, X.; Fang, L.; Hu, J.; Yin, H. Pixel-level image fusion: A survey of the state of the art. Inf. Fusion 2017, 33, 100–112.

- Nie, T.; Huang, L.; Liu, H.; Li, X. Multi-exposure fusion of gray images under low illumination based on low-rank decomposition. Remote Sens. 2021, 13, 204.

- Kim, J.; Ryu, J.; Kim, J. Deep gradual flash fusion for low-light enhancement. J. Vis. Commun. Image Represent. 2020, 72, 102903.

- Galdran, A. Image dehazing by artificial multiple-exposure image fusion. Signal Process. 2018, 149, 135–147.

- Wang, X.; Sun, Z.; Zhang, Q.; Fang, Y. Multi-exposure decomposition-fusion model for high dynamic range image saliency detection. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4409–4420.

- Burt, P.; Kolczynski, R. Enhanced image capture through fusion. In Proceedings of the International Conference on Computer Vision (ICCV), Berlin, Germany, 11–14 May 1993; pp. 173–182.

- Zhang, X. Benchmarking and comparing multi-exposure image fusion algorithms. Inf. Fusion 2021, 74, 111–131.

- Bertalmío, M.; Levine, S. Variational approach for the fusion of exposure bracketed pairs. IEEE Trans. Image Process. 2013, 22, 712–723.

- Yang, Y.; Wu, S.; Wang, X.; Li, Z. Exposure interpolation for two large-exposure-ratio images. IEEE Access 2020, 8, 227141–227151.

- Prabhakar, K.R.; Agrawal, S.; Babu, R.V. Self-gated memory recurrent network for efficient scalable HDR deghosting. IEEE Trans. Comput. Imaging 2021, 7, 1228–1239.

- Liu, Y.; Wang, L.; Cheng, J.; Chang, L.; Xun, C. Multi-focus image fusion: A Survey of the state of the art. Inf. Fusion 2020, 64, 71–91.

- Chen, Y.; Jiang, G.; Yu, M.; Yang, Y.; Ho, Y.S. Learning stereo high dynamic range imaging from a pair of cameras with different exposure parameters. IEEE Trans. Comput. Imaging 2020, 6, 1044–1058.

- Xu, H.; Ma, J.; Zhang, X. MEF-GAN: Multi-exposure image fusion via generative adversarial networks. IEEE Trans. Image Process. 2020, 29, 7203–7216.

- Chang, M.; Feng, H.; Xu, Z.; Li, Q. Robust ghost-free multiexposure fusion for dynamic scenes. J. Electron. Imaging 2018, 27, 033023.

- Karaduzovic-Hadziabdic, K.; Telalovic, J.H.; Mantiuk, R.K. Assessment of multi-exposure HDR image deghosting methods. Comput. Graph. 2017, 63, 1–17.

- Bruce, N.D.B. Expoblend: Information preserving exposure blending based on normalized log-domain entropy. Comput. Graph. 2014, 39, 12–23.

- Lee, L.-H.; Park, J.S.; Cho, N.I. A multi-exposure image fusion based on the adaptive weights reflecting the relative pixel intensity and global gradient. In Proceedings of the 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 1737–1741.

- Kinoshita, Y.; Kiya, H. Scene segmentation-based luminance adjustment for multi-exposure image fusion. IEEE Trans. Image Process. 2019, 28, 4101–4115.

- Xu, Y.; Sun, B. Color-compensated multi-scale exposure fusion based on physical features. Optik 2020, 223, 165494.

- Ulucan, O.; Karakaya, D.; Turkan, M. Multi-exposure image fusion based on linear embeddings and watershed masking. Signal Process. 2021, 178, 107791.

- Raman, S.; Chaudhuri, S. Bilateral Filter Based Compositing for Variable Exposure Photography. The Eurographics Association: Geneve, Switzerland, 2009; pp. 1–4.

- Li, S.; Kang, X. Fast multi-exposure image fusion with median filter and recursive filter. IEEE Trans. Consum. Electron. 2012, 58, 626–632.

- Wang, C.; He, C.; Xu, M. Fast exposure fusion of detail enhancement for brightest and darkest regions. Vis. Comput. 2021, 37, 1233–1243.

- Goshtasby, A.A. Fusion of multi-exposure images. Image Vis. Comput. 2005, 23, 611–618.

- Huang, F.; Zhou, D.; Nie, R. A Color Multi-exposure image fusion approach using structural patch decomposition. IEEE Access 2018, 6, 42877–42885.

- Ma, K.; Wang, Z. Multi-exposure image fusion: A patch-wise approach. In Proceedings of the 2015 IEEE International Conference on Image Processing, Quebec City, QC, Canada, 27–30 September 2015; pp. 1717–1721.

- Ma, K.; Li, H.; Yong, H.; Wang, Z.; Meng, D.; Zhang, L. Robust multi-exposure image fusion: A structural patch decomposition approach. IEEE Trans. Image Process. 2017, 26, 2519–2532.

- Li, H.; Ma, K.; Yong, H.; Zhang, L. Fast multi-scale structural patch decomposition for multi-exposure image fusion. IEEE Trans. Image Process. 2020, 29, 5805–5816.

- Li, H.; Chan, T.N.; Qi, X.; Xie, W. Detail-preserving multi-exposure fusion with edge-preserving structural patch decomposition. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 1–12.

- Wang, S.; Zhao, Y. A novel patch-based multi-exposure image fusion using super-pixel segmentation. IEEE Access 2020, 8, 39034–39045.

- Shen, R.; Cheng, I.; Shi, J.; Basu, A. Generalized random walks for fusion of multi-exposure images. IEEE Trans. Image Process. 2011, 20, 3634–3646.

- Li, Z.; Zheng, J.; Rahardja, S. Detail-enhanced exposure fusion. IEEE Trans. Image Process. 2012, 21, 4672–4676.

- Song, M.; Tao, D.; Chen, C. Probabilistic exposure fusion. IEEE Trans. Image Process. 2012, 21, 341–357.

- Liu, S.; Zhang, Y. Detail-preserving underexposed image enhancement via optimal weighted multi-exposure fusion. IEEE Trans. Consum. Electron. 2019, 65, 303–311.

- Ma, K.; Duanmu, Z.; Yeganeh, H.; Wang, Z. Multi-exposure image fusion by optimizing a structural similarity index. IEEE Trans. Comput. Imaging 2018, 4, 60–72.

- Qi, G.; Chang, L.; Luo, Y.; Chen, Y. A precise multi-exposure image fusion method based on low-level features. Sensors 2020, 20, 1597.

- Mertens, T.; Kautz, J.; Reeth, F.V. Exposure fusion. In Proceedings of the 15th Pacific Conference on Computer Graphics and Applications, Maui, HI, USA, 4 December 2007; pp. 382–390.

- Li, S.; Kang, X.; Hu, J. Image fusion with guided filtering. IEEE Trans. Image Process. 2013, 22, 2864–2875.

- Shen, J.; Zhao, Y.; Yan, S.; Li, X. Exposure fusion using boosting Laplacian pyramid. IEEE Trans. Cybern. 2014, 44, 1579–1590.

- Singh, H.; Kumar, V.; Bhooshan, S. A novel approach for detail-enhanced exposure fusion using guided filter. Sci. World J. 2014, 2014, 659217.

- Nejati, M.; Karimi, M.; Soroushmehr, S.M.R.; Karimi, N.; Samavi, S.; Najarian, K. Fast exposure fusion using exposuredness function. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 2234–2238.

- Li, Z.; Wen, C.; Zheng, J. Detail-enhanced multi-scale exposure fusion. IEEE Trans. Image Process. 2017, 26, 1243–1252.

- Yan, Q.; Zhu, Y.; Zhou, Y.; Sun, J.; Zhang, L.; Zhang, Y. Enhancing image visuality by multi-exposure fusion. Pattern Recognit. Lett. 2019, 127, 66–75.

- Wang, Q.; Chen, W.; Wu, X.; Li, Z. Detail-enhanced multi-scale exposure fusion in YUV color space. IEEE Trans. Circuits Syst. Video Technol. 2019, 26, 1243–1252.

- Kou, F.; Li, Z.; Wen, C.; Chen, W. Edge-preserving smoothing pyramid based multi-scale exposure fusion. J. Vis. Commun. Image Represent. 2018, 53, 235–244.

- Yang, Y.; Cao, W.; Wu, S.; Li, Z. Multi-scale fusion of two large-exposure-ratio image. IEEE Signal Process. Lett. 2018, 25, 1885–1889.

- Qu, Z.; Huang, X.; Chen, K. Algorithm of multi-exposure image fusion with detail enhancement and ghosting removal. J. Electron. Imaging 2019, 28, 013022.

- Lin, Y.-H.; Hua, K.-L.; Lu, H.-H.; Sun, W.-L.; Chen, Y.-Y. An adaptive exposure fusion method using fuzzy logic and multivariate normal conditional random fields. Sensors 2019, 19, 1–23.

- Gu, B.; Li, W.; Wong, J.; Zhu, M.; Wang, M. Gradient field multi-exposure images fusion for high dynamic range image visualization. J. Vis. Commun. Image Represent. 2012, 23, 604–610.

- Zhang, W.; Cham, W.-K. Gradient-directed multiexposure composition. IEEE Trans. Image Process. 2012, 21, 2318–2323.

- Wang, C.; Yang, Q.; Tang, X.; Ye, Z. Salience preserving image fusion with dynamic range compression. In Proceedings of the IEEE International Conference on Image Processing, Atlanta, GA, USA, 8–11 October 2006; pp. 989–992.

- Hara, K.; Inoue, K.; Urahama, K. A differentiable approximation approach to contrast aware image fusion. IEEE Signal Process. Lett. 2014, 21, 742–745.

- Paul, S.; Sevcenco, J.S.; Agathoklis, P. Multi-exposure and multi-focus image fusion in gradient domain. J. Circuits Syst. Comput. 2016, 25, 1650123.

- Liu, Y.; Zhou, D.; Nie, R.; Hou, R.; Ding, Z. Construction of high dynamic range image based on gradient information transformation. IET Image Process. 2020, 14, 1327–1338.

- Wang, X.; Shen, S.; Ning, C.; Huang, F.; Gao, H. Multiclass remote sensing object recognition based on discriminative sparse representation. Appl. Opt. 2016, 55, 1381–1394.

- Wang, J.; Liu, H.; He, N. Exposure fusion based on sparse representation using approximate K-SVD. Neurocomputing 2014, 135, 145–154.

- Shao, H.; Jiang, G.; Yu, M.; Song, Y.; Jiang, H.; Peng, Z.; Chen, F. Halo-free multi-exposure image fusion based on sparse representation of gradient features. Appl. Sci. 2018, 8, 1543.

- Yang, Y.; Wu, J.; Huang, S.; Lin, P. Multi-exposure estimation and fusion based on a sparsity exposure dictionary. IEEE Trans. Instrum. Meas. 2020, 69, 4753–4767.

- Lee, G.-Y.; Lee, S.-H.; Kwon, H.-J. DCT-based HDR exposure fusion using multiexposed image sensors. J. Sensors 2017, 2017, 1–14.

- Martorell, O.; Sbert, C.; Buades, A. Ghosting-free DCT based multi-exposure image fusion. Signal Process. Image Commun. 2019, 78, 409–425.

- Zhang, W.; Liu, X.; Wang, W.; Zeng, Y. Multi-exposure image fusion based on wavelet transform. Int. J. Adv. Robot. Syst. 2018, 15, 1–19.

- Zhang, Y.; Liu, T.; Singh, M.; Cetintas, E.; Luo, Y.; Rivenson, Y.; Larin, K.V.; Ozcan, A. Neural network-based image reconstruction in swept-source optical coherence tomography using undersampled spectral data. Light. Sci. Appl. 2021, 10, 390–400.

- Li, X.; Zhang, G.; Qiao, H.; Bao, F.; Deng, Y.; Wu, J.; He, Y.; Yun, J.; Lin, X.; Xie, H.; et al. Unsupervised content-preserving transformation for optical microscopy. Light. Sci. Appl. 2021, 10, 1658–1671.

- Wu, S.; Xu, J.; Tai, Y.W. Deep high dynamic range imaging with large foreground motions. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 9 October 2018; pp. 120–135.

- Yan, Q.; Gong, D.; Zhang, P. Multi-scale dense networks for deep high dynamic range imaging. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 41–50.

- Yan, Q.; Gong, D.; Shi, Q. Attention guided network for ghost-free high dynamic range imaging. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1751–1760.

- Wang, J.; Li, X.; Liu, H. Exposure fusion using a relative generative adversarial network. IEICE Trans. Inf. Syst. 2021, E104D, 1017–1027.

- Vu, T.; Nguyen, C.V.; Pham, T.X.; Luu, T.M.; Yoo, C.D. Fast and efficient image quality enhancement via desubpixel convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 23 January 2019; pp. 243–259.

- Jeon, M.; Jeong, Y.S. Compact and accurate scene text detector. Appl. Sci. 2020, 10, 2096.

- Kalantari, N.K.; Ramamoorthi, R. Deep high dynamic range imaging of dynamic scenes. ACM Trans. Graph. 2017, 36, 144.

- Wang, J.; Wang, W.; Xu, G.; Liu, H. End-to-end exposure fusion using convolutional neural network. IEICE Trans. Inf. Syst. 2018, 101, 560–563.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Li, H.; Zhang, L. Multi-exposure fusion with CNN features. In Proceedings of the 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 1723–1727.

- Lahoud, F.; Süsstrunk, S. Fast and efficient zero-learning image fusion. arXiv 2019, arXiv:1902.00730.

- Cai, J.; Gu, S.; Zhang, L. Learning a deep single image contrast enhancer from multi-exposure images. IEEE Trans. Image Process. 2018, 27, 2049–2062.

- Liu, Q.; Leung, H. Variable augmented neural network for decolorization and multi-exposure fusion. Inf. Fusion 2019, 46, 114–127.

- Chen, Y.; Yu, M.; Jiang, G.; Peng, Z.; Chen, F. End-to-end single image enhancement based on a dual network cascade model. J. Vis. Commun. Image Represent. 2019, 61, 284–295.

- Zhang, Y.; Liu, Y.; Sun, P.; Yan, H.; Zhao, X.; Zhang, L. IFCNN: A general image fusion framework based on convolutional neural network. Inf. Fusion 2020, 54, 99–118.

- Fang, A.; Zhao, X.; Yang, J.; Qin, B.; Zhang, Y. A light-weight, efficient, and general cross-modal image fusion network. Neurocomputing 2021, 463, 198–211.

- Prabhakar, K.R.; Srikar, V.S.; Babu, R.V. DeepFuse: A deep unsupervised approach for exposure fusion with extreme exposure image pairs. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4724–4732.

- Ma, K.; Zeng, K.; Wang, Z. Perceptual quality assessment for multi-exposure image fusion. IEEE Trans. Image Process. 2015, 24, 3345–3356.

- Ma, K.; Duanmu, Z.; Zhu, H.; Fang, Y.; Wang, Z. Deep guided learning for fast multi-exposure image fusion. IEEE Trans. Image Process. 2020, 29, 2808–2819.

- Qi, Y.; Zhou, S.; Zhang, Z.; Luo, S.; Lin, X.; Wang, L.; Qiang, B. Deep unsupervised learning based on color un-referenced loss functions for multi-exposure image fusion. Inf. Fusion 2021, 66, 18–39.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

- Gao, M.; Wang, J.; Chen, Y.; Du, C. An improved multi-exposure image fusion method for intelligent transportation system. Electronics 2021, 10, 383.

- Chen, S.Y.; Chuang, Y.Y. Deep exposure fusion with deghosting via homography estimation and attention learning. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1464–1468.

- Yang, Z.; Chen, Y.; Le, Z.; Ma, Y. GANFuse: A novel multi-exposure image fusion method based on generative adversarial networks. Neural Comput. Appl. 2021, 33, 6133–6145.

- Tursun, O.T.; Akyüz, A.O.; Erdem, A.; Erdem, E. The state of the art in HDR deghosting: A survey and evaluation. Comput. Graph. Forum 2015, 34, 683–707.

- Yan, Q.; Gong, D.; Shi, J.Q.; Hengel, A.; Sun, J.; Zhu, Y.; Zhang, Y. High dynamic range imaging via gradient-aware context aggregation network. Pattern Recognit. 2022, 122, 108342.

- Woo, S.M.; Ryu, J.H.; Kim, J.O. Ghost-free deep high-dynamic-range imaging using focus pixels for complex motion scenes. IEEE Trans. Image Process. 2021, 30, 5001–5016.

- Cerman, L.; Hlaváč, V. Exposure time estimation for high dynamic range imaging with hand held camera. In Proceedings of the Computer Vision Winter Workshop, Telc, Czech Republic, 6–8 February 2006; pp. 1–6.

- Gevrekci, M.; Gunturk, K.B. On geometric and photometric registration of images. In Proceedings of the 2007 IEEE International Conference on Acoustics, Speech and Signal Processing, Honolulu, HI, USA, 15–20 April 2007; pp. 1261–1264.

- Yao, S. Robust image registration for multiple exposure high dynamic range image synthesis. In Proceedings of the SPIE, Conference on Image Processing: Algorithms and Systems IX, San Francisco, CA, USA, 24–25 January 2011.

- Im, J.; Lee, S.; Paik, J. Improved elastic registration for ghost artifact free high dynamic range imaging. IEEE Trans. Consum. Electron. 2011, 57, 932–935.

- Khan, E.A.; Akyuz, A.O.; Reinhard, E. Ghost removal in high dynamic range images. In Proceedings of the IEEE International Conference on Image Processing, Atlanta, GA, USA, 8–11 October 2006; pp. 2005–2008.

- Pedone, M.; Heikkilä, J. Constrain propagation for ghost removal in high dynamic range images. VISAPP 2008. In Proceedings of the 3rd International Conference on Computer Vision Theory and Applications, Funchal, Madeira, Portugal, 22–25 January 2008; pp. 36–41.

- Zhang, W.; Cham, W.K. Gradient-directed composition of multi-exposure images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 530–536.

- Wang, Z.; Liu, Q.; Ikenaga, T. Robust ghost-free high-dynamic-range imaging by visual salience based bilateral motion detection and stack extension based exposure fusion. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2017, E100, 2266–2274.

- Li, Z.; Zheng, J.; Zhu, Z.; Wu, S. Selectively detail-enhanced fusion of differently exposed images with moving objects. IEEE Trans. Image Process. 2014, 23, 4372–4382.


Revisions: 2 times

Update Date: 24 Feb 2022
