| Version | Summary | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|---|
| 1 | | Dan Popescu | + 3993 word(s) | 3993 | 2022-01-13 03:11:41 |
| 2 | | Bruce Ren | Meta information modification | 3993 | 2022-01-26 02:22:05 |


Popescu, D. New Trends in Melanoma Detection Using Neural Networks. Encyclopedia, 25 January 2022. Available online: https://encyclopedia.pub/entry/18770 (accessed on 08 February 2026).

Due to its increasing incidence, skin cancer, and especially melanoma, is a serious health problem today. The high mortality rate associated with melanoma makes it necessary to detect the disease in its early stages so that it can be treated promptly and properly. For this reason, many researchers in this domain have sought accurate computer-aided diagnosis systems to assist in the early detection and diagnosis of such diseases.

Keywords: skin lesion; image processing; machine learning; deep learning; neural networks; image classifiers; image segmentation; melanoma detection; statistical performance; review

1. Introduction

Melanoma (Me) is known as the deadliest type of skin cancer [1], and its incidence is increasing every year for both men and women worldwide [2][3]. According to Sun X. et al. [4], the main cause of Me is exposure to ultraviolet radiation: excessive exposure can cause mutations in melanocytes that lead to Me genesis. Although Me is one of the deadliest skin cancers, many studies have shown that early detection allows successful treatment in 90% of cases [5]. Currently, the standard method of Me diagnosis is visual analysis by a specialist. However, this method can be time-consuming and can lead to misdiagnosis because the diagnosis is complex: many parameters must be analyzed (color, shape, texture, edge, asymmetry, etc.), and the specialist may be fatigued or lack experience [6][7][8]. In most cases, the dermoscopic images are acquired and analyzed by the dermatologist, who achieves an examination accuracy (ACC) of at most 84% [9][10], which is insufficient. Therefore, a computer-aided diagnosis (CAD) system that diagnoses Me from images is needed [11].

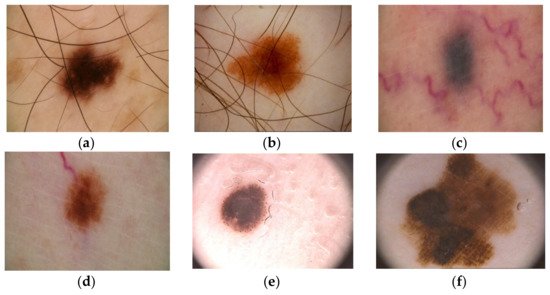

Over time, many researchers have tried to develop automatic Me detection systems based on machine learning (ML) that provide a quick result with high ACC, although the analysis of skin lesion (SL) images raises many problems [12][13]. Finding a suitable diagnosis algorithm is a rather complex task because of artifacts such as hair around or even inside the lesion, blood vessels, and oil drops, and because lesions vary in dimension, color, and shape [14], as seen in Figure 1.

Figure 1. Artifacts in Me images collected from the ISIC 2016 dataset [14]: (a,b) presence of hair, (c,d) presence of blood vessels, (e,f) presence of oil drops.

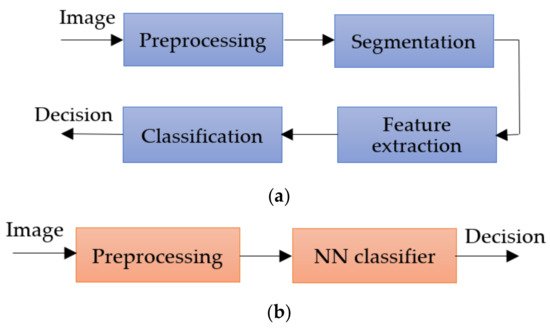

These difficulties led authors to broaden their research. In principle, however, most approaches follow the same classical method: a preprocessing step, followed by segmentation, feature extraction, and finally classification. The workflow of the classical method is shown in Figure 2.

Figure 2. Methods workflow for Me detection: (a) classical method, (b) NN approach.

The preprocessing step consists of applying primary operations such as noise removal, data augmentation, resizing, grayscale transformation, brightness correction, binarization, and, mainly, intensity and contrast enhancement [15]. Because Me images have highly variable content, the segmentation step is a much-debated topic and a difficult task. This step splits the image into several sets of pixels [16], and its end result is the extraction of regions of interest (RoI) by an automatic or semiautomatic process [17]. Given the variability of Me images, the classical method (Figure 2a) cannot provide the best results, and artificial neural network (NN)-based methods are now among the most commonly used techniques for Me detection and segmentation. After the segmentation, the feature extraction step is usually applied. This task reduces the dimensions of the data representation so that it becomes more manageable; data processing thus becomes faster and easier, without losing important information. Even so, feature extraction is known to consume considerable resources because of the large number of variables. Generally, if the feature extraction is done well, the detection ACC increases significantly [16]. In the past, most authors [18][19][20] used the ABCD (Asymmetry, Border, Color, Differential structure) rule as a feature extraction method for Me detection, while others now use deep learning (DL) techniques to improve feature extraction. The last step, and the one most discussed in our review, is classification, whose goal is to assign a class to an RoI from an image. Manual classification is hard and time-consuming; therefore, interest in developing an accurate automatic classification algorithm has increased in recent years.
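As a rough illustration of the primary preprocessing operations named above, the following minimal Python sketch chains a grayscale transformation, a contrast enhancement, and a binarization on a tiny synthetic image. The function names, the fixed threshold of 128, and the 3 × 3 toy image are assumptions made for this example only; they are not taken from any of the reviewed systems, which typically rely on dedicated image processing libraries.

```python
# Hypothetical helper names; a toy sketch of three preprocessing operations.

def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to luminance (ITU-R BT.601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]

def stretch_contrast(gray):
    """Linearly rescale intensities to the full 0..255 range."""
    flat = [v for row in gray for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: nothing to stretch
        return [[0.0 for _ in row] for row in gray]
    return [[(v - lo) * 255.0 / (hi - lo) for v in row] for row in gray]

def binarize(gray, threshold=128.0):
    """Mark dark pixels (candidate lesion) as 1 and bright pixels (skin) as 0."""
    return [[1 if v < threshold else 0 for v in row] for row in gray]

# Synthetic 3 x 3 "image": bright skin with two dark lesion pixels (assumed data).
image = [
    [(200, 180, 170), (190, 170, 160), (195, 175, 165)],
    [(198, 178, 168), (60, 40, 30), (192, 172, 162)],
    [(201, 181, 171), (70, 50, 40), (196, 176, 166)],
]
mask = binarize(stretch_contrast(to_grayscale(image)))
print(mask)
```

A fixed global threshold is the simplest possible choice; real dermoscopic images usually need adaptive thresholds and hair removal before a mask like this becomes useful.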

Nowadays, whether for segmentation, feature extraction, or classification, the tendency is to exploit Artificial Intelligence (AI) through NN and DL techniques to obtain more accurate results. The main goal of AI is the reproduction of human intelligence, with applications in domains such as autonomous vehicles, search engines, art creation, or medical diagnosis. Applying AI to Me detection has produced promising results, to the point where visual inspection of SL alone is no longer regarded as a sufficiently reliable solution. As a subset of AI, classical ML algorithms were the first to be proposed as a solution for automatic Me detection. In essence, ML uses previous experience to improve its results [21]. The system first extracts the needed features to create the training data; supervised or unsupervised learning is then used in the learning process. Most papers used supervised learning models because they are more accurate. As has also been observed in other application areas, the classical ML-based methods showed promising results, but also some limitations: a large amount of data is needed to train the system, the learning phase takes a long time, and ML is highly susceptible to errors. Thus, the authors turned their attention to NN and DL techniques.

NNs consist of a collection of artificial neurons that simulate the function of neurons in the human brain. In such a network, the neurons are connected to each other, and each connection is assigned a weight that helps the neurons produce the required output. Authors prefer NNs because of benefits such as distributed memory, the ability to give good results with a small amount of information, and the possibility of parallel processing. For training, the system error is calculated as the difference between the predicted value and the target output. Using this error, the system adjusts its weights until the error is minimized.
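The training principle described above (compute the error between prediction and target, then adjust the weights to reduce it) can be sketched for a single linear neuron. This is a deliberately minimal illustration, assuming a mean squared error and plain gradient descent; the function name, learning rate, and toy data are hypothetical, and real networks apply the same idea to many weights through backpropagation.

```python
# Hypothetical toy example: one linear neuron trained by gradient descent.

def train_neuron(samples, lr=0.1, epochs=500):
    """Fit y = w * x + b by minimizing the mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, target in samples:
            error = (w * x + b) - target  # predicted value minus target
            grad_w += 2.0 * error * x / n
            grad_b += 2.0 * error / n
        w -= lr * grad_w  # move each weight against its gradient
        b -= lr * grad_b
    return w, b

data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # generated by y = 2x + 1
w, b = train_neuron(data)
print(round(w, 3), round(b, 3))
```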

2. Neural Networks Used in Melanoma Detection, Segmentation, and Classification

According to our study of the literature on SL detection, segmentation, and classification, the majority of these tasks use NNs, convolutional neural networks (CNNs), deep convolutional neural networks (DCNNs), and transfer learning (TL) for NNs. The general trend over the years, not strictly related to SL diagnosis systems, has been to design deep networks with many hidden layers (either convolutional or fully connected) to obtain better results. At first, the time complexity of training, classification, detection, or segmentation was somewhat neglected, as all works focused on better statistical performance (required by diagnostic specialists). Table 1 lists the NNs most used in Me detection, segmentation, and classification systems. Because we are mostly interested in the usage trend of NNs in Me diagnosis, this section presents the architecture of the basic NNs widely used in these applications.

Table 1. NN families used for Me diagnosis in the cited references.

| NN family | Representatives | References |

|---|---|---|

| ResNet | ResNet 34, ResNet 50, SEResNet 50, ResNet 101, ResNet 152, FCRN | [5][6][22][23][24][25][26][27][28][29][30][31][32][33][34][35] |

| Inception/GoogLeNet | GoogLeNet (Inception v1), InceptionResNet-v2, Inception v3, Inception v4 | [5][36][25][26][27][28][30][31][34][35][37][38] |

| U-Net | U-Net | [28][34][39][40][41][42][43][44][45][46][47][48][49] |

| GAN | GAN, SPGGAN, DCGAN, DDGAN, LAPGAN, PGAN | [6][38][42][50][51][52][53][54][55][56][57] |

| DenseNet | DenseNet 121, DenseNet 161, DenseNet 169, DenseNet 201 | [1][22][25][26][34][35][38][53][57][58] |

| AlexNet | AlexNet | [6][12][30][31][59][60][61][62] |

| Xception | Xception | [25][27][28][31][34][38][53] |

| EfficientNet | EfficientNet, EfficientNetB5, EfficientNetB6 | [32][63][64][65][66][67][68][69] |

| VGG | VGG 16, VGG 19 | [25][28][30][31][32][40][70][71] |

| NASNet | NASNet, NASNet-Large | [5][22][27][72] |

| MobileNet | MobileNet, MobileNet-v2 | [25][28][32][73] |

| YOLO | YOLO v3, YOLO v4, YOLO v5 | [74][75][76] |

| FrNet | FrNet | [77] |

| Mask R-CNN | Mask R-CNN | [78] |

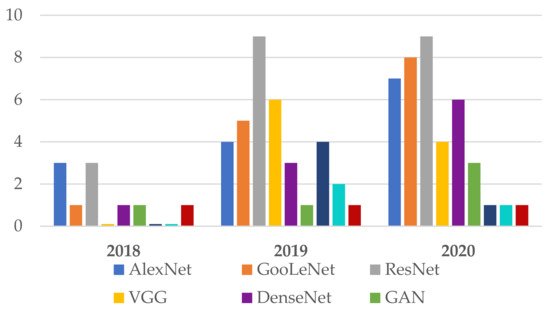

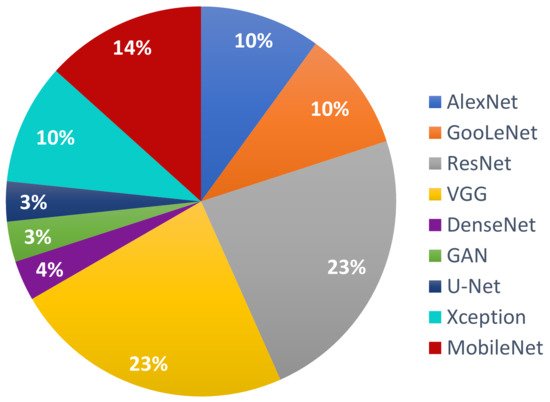

A search of the Web of Science database (DB) for the years 2018 to 2020 (Figure 3) shows that the NNs most used for Me detection were those of the ResNet family, followed by the VGG, GoogLeNet, and AlexNet families. For 2021, the tendency is toward ResNet and VGG networks (Figure 4). Figure 3 gives the number of appearances in 2018, 2019, and 2020, and Figure 4 the percentage of appearances in 2021 (an incomplete year at the time of the survey).

Figure 3. NNs frequently used in Me detection between 2018 and 2020.

Figure 4. The most used NNs for Me detection in 2021 (percentage).

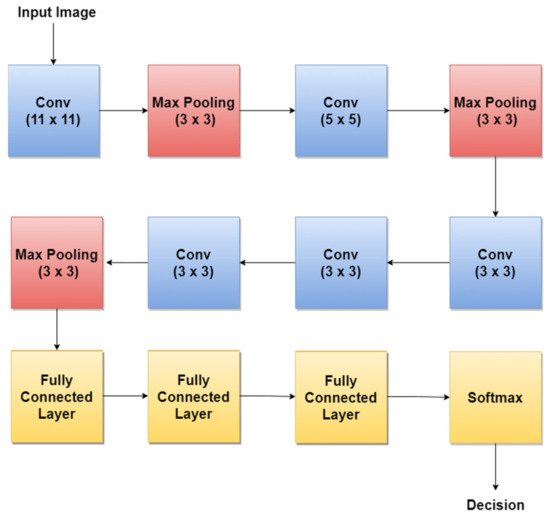

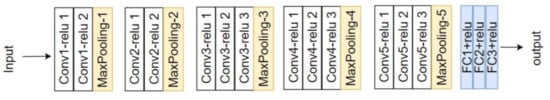

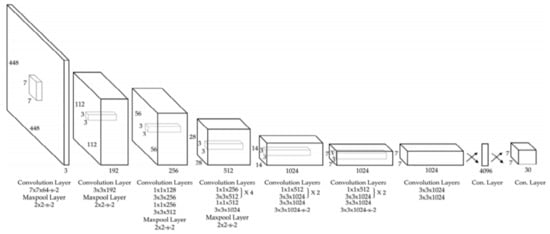

2.1. AlexNet

AlexNet [79] is one of the first CNNs widely used in SL classification tasks via TL. The basic architecture (Figure 5) is composed of eight layers, of which five are convolutional layers (Conv) and three are fully connected layers (FC). ReLU (Rectified Linear Unit) activations [80] follow every convolutional and fully connected layer; in addition, the first and second convolutional layers are followed by Local Response Normalization (LRN) and max pooling (MP), and the fifth convolutional layer is followed by max pooling. The last layer (Softmax layer) has 1000 neurons and is used for the classification task (1000 classes). It is not the number of layers that makes AlexNet special, but the replacement of the Tanh activation function with ReLU, which shortens the training time. In Figure 5, the number of neurons is specified at each layer.

Figure 5. AlexNet basic architecture.
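One commonly given reason why ReLU trains faster than Tanh is that its gradient does not saturate for large positive inputs. The short sketch below compares the two derivatives at a few input values; it is only an illustration of that property, not a reproduction of the AlexNet training setup, and the helper names are chosen for this example.

```python
import math

def relu(x):
    """Rectified Linear Unit: passes positive inputs, zeroes negative ones."""
    return max(0.0, x)

def relu_grad(x):
    """Derivative of ReLU (taken as 0 at x = 0 by convention here)."""
    return 1.0 if x > 0 else 0.0

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which shrinks as |x| grows."""
    return 1.0 - math.tanh(x) ** 2

for x in (0.5, 2.0, 5.0):
    print(f"x={x}: relu'={relu_grad(x)}, tanh'={tanh_grad(x):.6f}")
```

For large activations the Tanh derivative approaches zero, so weight updates that pass through it become very small, whereas the ReLU derivative stays at 1.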

For example, in 2018, the authors in [30] trained AlexNet using TL, together with three other architectures (GoogLeNet, ResNet, and VGGNet), to achieve a better ACC in SL classification. By training AlexNet to classify SL, the authors obtained an average ACC of about 85%. Other research papers, such as [12][59][60], also used the trained AlexNet for SL diagnosis.

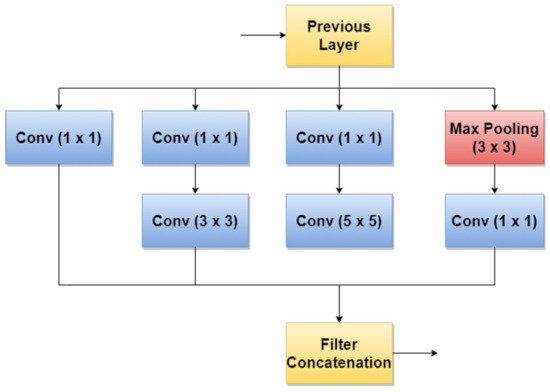

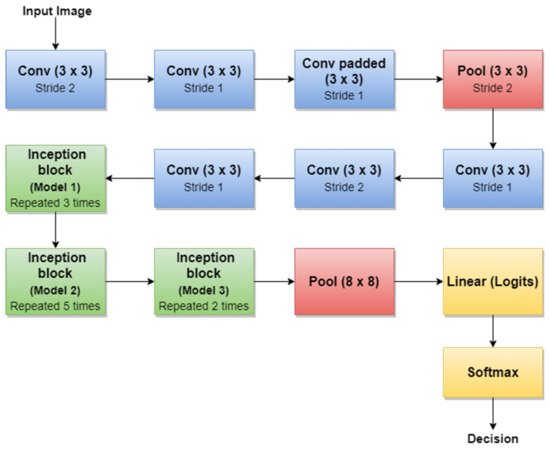

2.2. GoogLeNet/Inception

GoogLeNet, also named Inception v1, is a CNN proposed by researchers at Google in 2014 [81]. Its architecture won the ILSVRC 2014 image classification challenge (ImageNet Large Scale Visual Recognition Challenge 2014) with a lower error rate than the previous winners, AlexNet in 2012 and ZFNet in 2013. The new features of GoogLeNet are the following: 1 × 1 convolutions, global average pooling, the Inception module, and auxiliary classifiers for training. The 1 × 1 convolution blocks were introduced to decrease the number of parameters (weights and biases), which in turn made it possible to increase the depth of the architecture. The network’s basic block is the Inception module, in which 1 × 1, 3 × 3, and 5 × 5 convolutions and a 3 × 3 Max Pooling block operate in parallel. The outputs of these blocks are concatenated and fed to the next layer. The Inception module was introduced because convolution blocks of different sizes handle objects at multiple scales better. Figure 6 illustrates the components of the Inception module used in GoogLeNet.

Figure 6. Inception module used in GoogLeNet.
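To make the parameter saving from 1 × 1 convolutions concrete, the sketch below counts the weights and biases of a 5 × 5 convolution applied directly, and of the same convolution preceded by a 1 × 1 channel reduction. The channel counts (192 input, 16 reduced, 32 output) are illustrative assumptions chosen for this example, and the helper name is hypothetical.

```python
def conv_params(kernel, in_ch, out_ch):
    """Number of weights plus biases of a kernel x kernel convolution."""
    return kernel * kernel * in_ch * out_ch + out_ch

# Illustrative channel counts (assumption for this example).
in_ch, mid_ch, out_ch = 192, 16, 32

# 5 x 5 convolution applied directly to all input channels.
direct = conv_params(5, in_ch, out_ch)

# 1 x 1 reduction to mid_ch channels, followed by the 5 x 5 convolution.
reduced = conv_params(1, in_ch, mid_ch) + conv_params(5, mid_ch, out_ch)

print(direct, reduced)  # 153632 15920
```

With these numbers the reduced branch needs roughly a tenth of the parameters, which is the budget that allows the network to grow deeper.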

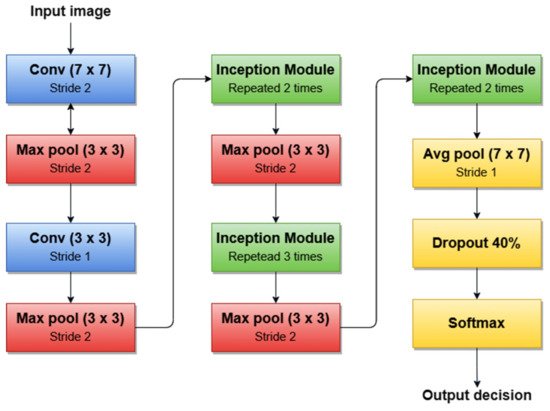

A simplified architecture of GoogLeNet is 22 layers deep (Figure 7). The network takes a color image (RGB) of size 224 × 224 pixels as input and provides the classification result (out of 1000 classes) as output, using a Softmax layer of 1000 neurons. Another important aspect to mention is that all convolutions inside the architecture use ReLU as an activation function.

Figure 7. Simplified block diagram of the GoogLeNet architecture.

For example, the authors in [30] used the first version of GoogLeNet (Inception v1) as the basic CNN from which they started TL for SL diagnosis. Additionally, the authors in [5] (published in 2020) trained GoogLeNet for the Me classification task, which shows that this architecture remains valuable thanks to its newly introduced features. Recently, a series of published SL diagnosis systems used newer versions of GoogLeNet. For instance, [28], which addresses Me and nevus SL classification, uses the Inception v3 NN [82], which is 42 layers deep. Figure 8 illustrates the overall architecture of the Inception v3 network.

Figure 8. Inception v3 basic architecture.

An important observation is that Batch Normalization (Batch Norm) and ReLU blocks are used after each convolution. The basic idea of Inception v3, and what distinguishes it from the first version (GoogLeNet, Inception v1), is to reduce the number of connections/parameters without decreasing the network efficiency. This is one of the reasons why researchers also investigate the performance of this CNN in their applications. Inception v3 uses “factorizing convolutions”: the 5 × 5 convolution filter represented in Figure 6 is replaced with two 3 × 3 convolution filters, which reduces the number of weights per filter from 25 to 18. The same technique was also used in VGG Net [83]. Another important novelty introduced by Inception v3 is factorization into asymmetric convolutions, in which a 3 × 3 convolution filter is replaced by one 3 × 1 convolution filter followed by one 1 × 3 convolution filter.
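The weight counts behind both factorizations can be checked with a few lines of arithmetic. The sketch below counts weights for a single input/output channel pair and ignores biases, which is an assumption made to keep the numbers comparable with the 25 and 18 quoted above; the helper name is hypothetical.

```python
def kernel_weights(shapes):
    """Total weights of a sequence of (height, width) kernels, one channel pair."""
    return sum(h * w for h, w in shapes)

five_by_five = kernel_weights([(5, 5)])                 # single 5 x 5 filter
two_three_by_three = kernel_weights([(3, 3), (3, 3)])   # two stacked 3 x 3 filters
three_by_three = kernel_weights([(3, 3)])               # single 3 x 3 filter
asymmetric = kernel_weights([(3, 1), (1, 3)])           # 3 x 1 followed by 1 x 3

print(five_by_five, two_three_by_three)  # 25 18
print(three_by_three, asymmetric)        # 9 6
```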

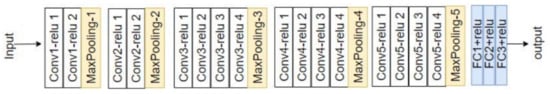

2.3. VGG Networks

VGG is an NN family whose first representative, VGG 16, is widely used in SL diagnosis. VGG 16 [84] is similar to AlexNet but larger: it is 16 layers deep and contains only small 3 × 3 convolution filters (Figure 9). For instance, the authors in [30][32][39] used a TL technique to train VGG 16 for SL diagnosis.

Figure 9. VGG 16 network architecture [84].

The VGG 16 model achieves a 92.7% top-5 test ACC on the 1000-class ILSVRC subset of the ImageNet DB (which contains over 14 million images) and was one of the top entries of ILSVRC-2014, placing first in the localization task and second in the classification task. This model improves on AlexNet by replacing large filters such as 11 × 11 and 5 × 5 with multiple smaller 3 × 3 filters, which makes the network deeper (following the trend of increasing depth to obtain a better ACC). The same “factorizing convolutions” idea was also used in GoogLeNet Inception v3.
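The reason small stacked filters can stand in for a large one is that, with stride 1, each extra 3 × 3 layer widens the receptive field by two pixels. The sketch below computes the receptive field of a stack and compares weight counts per channel pair; it assumes stride-1 convolutions without pooling between them, and the helper names are chosen for this example.

```python
def receptive_field(kernel_sizes):
    """Receptive field of stacked stride-1 convolutions."""
    rf = 1
    for k in kernel_sizes:
        rf += k - 1  # each layer extends the field by (k - 1) pixels
    return rf

def stack_weights(kernel_sizes):
    """Weights per input/output channel pair, biases ignored."""
    return sum(k * k for k in kernel_sizes)

print(receptive_field([3, 3]), stack_weights([3, 3]), stack_weights([5]))
# 5 18 25: two 3 x 3 layers cover a 5 x 5 field with fewer weights

print(receptive_field([3] * 5), stack_weights([3] * 5), stack_weights([11]))
# 11 45 121: five 3 x 3 layers cover an 11 x 11 field
```

The stacked version also inserts a nonlinearity after every layer, so it is deeper as well as cheaper.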

VGG 19, shown in Figure 10 [84], is another VGG network used in research papers on SL (especially Me) diagnosis. This model is deeper (19 layers, of which 3 are fully connected). According to our survey, examples of works related to SL diagnosis are [28][32]. Both papers were published in 2020 and are comparative studies of multiple networks that aim to find the most accurate and precise ones for SL diagnosis tasks. Apart from VGG 16 and VGG 19, the comparisons also involve deeper networks such as ResNet-50 (50 layers deep) and DenseNet-201 (201 layers deep). This shows that the trend in SL diagnosis is to use deeper networks to achieve better ACC and precision. Deeper networks, however, have more parameters and require more computation time for learning, which will continue to be a subject of research.

Figure 10. VGG 19 network architecture [84].

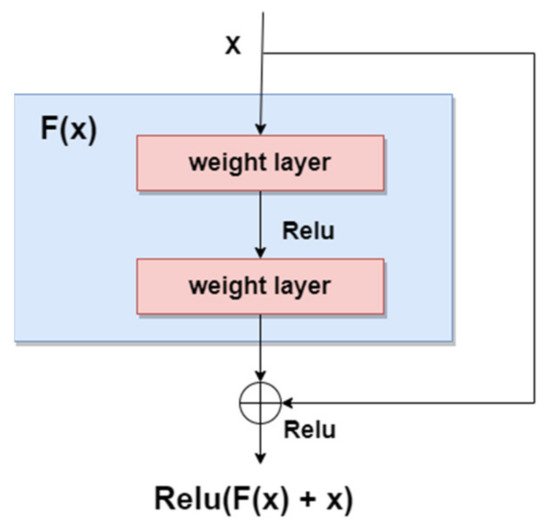

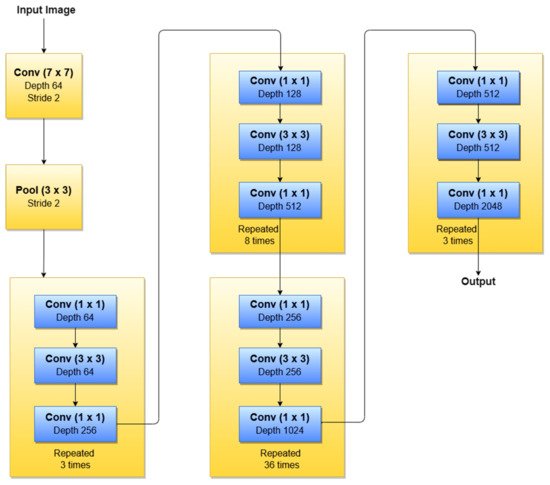

2.4. ResNet

As mentioned in the previous sections, the general trend for segmentation, detection, and classification tasks is to use deeper NNs. However, it was demonstrated that, as more and more layers are added to “plain” networks, the training error eventually starts to increase. Very deep NNs are in general hard to train because of vanishing and exploding gradient problems. To avoid this issue, researchers introduced “skip connections”, which take the activation from one layer and feed it to another layer, even one much deeper in the NN. This makes it possible to build “residual” networks instead of “plain” networks, and thus very deep NNs (more than a hundred layers deep). The “residual” network [85] addresses the vanishing gradient problem in deep NNs through the shortcut presented in Figure 11, which lets the gradient flow through. With this new feature, ResNet won first place in the ILSVRC 2015 competition with a top-5 error rate of 3.57%. It also won the COCO 2015 competition for detection and segmentation problems.

Figure 11. Residual block.
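A residual block can be sketched in a few lines: the block computes a transformation F(x) and adds the unchanged input x back before the final activation. The example below uses tiny fully connected layers on plain Python lists instead of convolutions, which is a simplification assumed for readability; the function names and weights are hypothetical.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, x) for x in v]

def linear(v, weights):
    """Multiply vector v by a weight matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]

def residual_block(x, w1, w2):
    """Compute relu(F(x) + x) with F(x) = W2 * relu(W1 * x)."""
    fx = linear(relu(linear(x, w1)), w2)
    return relu([f + xi for f, xi in zip(fx, x)])  # skip connection adds x

x = [1.0, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]

print(residual_block(x, zeros, zeros))        # [1.0, 2.0]
print(residual_block(x, identity, identity))  # [2.0, 4.0]
```

With all weights at zero the block simply returns its input, which illustrates why adding residual blocks does not have to degrade what earlier layers have learned.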

According to our search related to SL diagnosis (Table 1), the most used NNs of the ResNet family for detection, segmentation, and classification tasks are ResNet-34, ResNet-50, ResNet-101, and ResNet-152. As can be seen in Figure 12, ResNet-152 is a 152-layer-deep CNN composed of residual blocks, which address the vanishing gradient issue when training deep NNs. An example of an SL diagnosis paper that uses ResNet-34 is [32]. ResNet-50 (50 layers deep) was used, for instance, in [26][28][32][35], all published in 2020. ResNet-101 (101 layers deep) was used in [5][27][28][35], all published in 2020. Other studies, such as [23][24][33][82], use the deeper ResNet-152 (152 layers deep), which reflects the trend of using deeper networks for better ACC.

Figure 12. ResNet-152 basic architecture.

2.5. YOLO Networks

YOLO (You Only Look Once) is a CNN widely used in real-time object detection tasks and commonly used in Me detection papers. According to [86], YOLO is a “new approach to object detection” that uses a single NN to “predict bounding boxes and class probabilities directly from full images in one evaluation”. YOLO is composed of 24 convolutional layers followed by two fully connected layers and, like other commonly used networks, is pre-trained on the ImageNet DB. As can be seen in Figure 13 [87], the network contains alternating 1 × 1 convolution filters, which are mainly used to reduce the feature space from the preceding layers, similarly to the 1 × 1 convolutions of the GoogLeNet/Inception family. There are multiple versions of YOLO; according to our research, the most used for Me detection tasks are YOLO v3 and YOLO v4 [74].

Figure 13. YOLO v3 architecture [87].

YOLO v3 is an incremental improvement of the previous YOLO v2, which was based on the DarkNet-19 network. According to the authors in [88], the network is bigger than YOLO v2 and more accurate, while remaining fast enough. The authors proposed a hybrid approach between DarkNet-19 and a residual network (inspired by ResNet); the new architecture, called DarkNet-53, is based on 53 convolutional layers. As already mentioned, YOLO v3 is used in Me detection tasks. For instance, the authors in [75][76] used YOLO v3 for benign/malignant Me or seborrheic keratosis detection. There is also a faster version, YOLO v4, used in Me detection and segmentation [74].

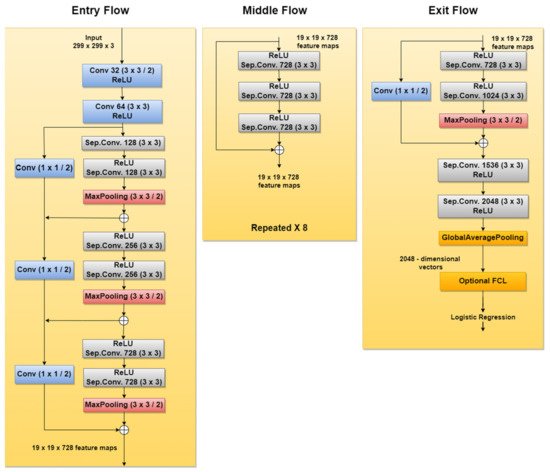

2.6. Xception Network

Xception is another CNN used in SL diagnosis tasks, for instance in the recent papers [27][28], both published in 2020. According to [89], this network was inspired by the GoogLeNet Inception NN, was also developed by Google researchers, and was meant to obtain better performance by replacing the standard Inception modules with depthwise separable convolutions. The Xception architecture (Figure 14), which outperforms Inception v3, contains 36 convolutional layers structured in 14 modules, all with residual connections around them [90].

Figure 14. Xception network architecture.
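A depthwise separable convolution splits a standard convolution into a per-channel spatial filter (depthwise) and a 1 × 1 channel-mixing step (pointwise). The sketch below compares weight counts for the two variants; the 3 × 3 kernel and the channel counts (128 in, 256 out) are assumptions chosen for illustration, biases are ignored, and the helper names are hypothetical.

```python
def standard_conv_weights(k, in_ch, out_ch):
    """Weights of a standard k x k convolution (biases ignored)."""
    return k * k * in_ch * out_ch

def separable_conv_weights(k, in_ch, out_ch):
    """Weights of a depthwise separable convolution (biases ignored)."""
    depthwise = k * k * in_ch   # one k x k filter per input channel
    pointwise = in_ch * out_ch  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise

standard = standard_conv_weights(3, 128, 256)
separable = separable_conv_weights(3, 128, 256)
print(standard, separable)  # 294912 33920
```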

2.7. MobileNet

MobileNet is a type of NN designed for mobile and embedded vision applications [91]. Since this CNN is deployed on mobile devices, memory usage should be taken seriously into consideration. Therefore, to decrease the complexity and to reduce the model size, the architecture is based on depthwise separable convolution blocks, as in the case of Xception NN described in the previous section.

There are multiple versions of MobileNet, out of which, according to this research, MobileNet-v1 and MobileNet-v2 are the most used in SL diagnosis papers. For instance [28][32], both published in 2020, use MobileNet-v1; meanwhile, newer papers such as [73] use MobileNet-v2 (deeper and improved version of MobileNet-v1) in such applications.

As already mentioned, MobileNet reduces the complexity and the number of network parameters using depthwise separable convolutions. Such a convolution consists of a depthwise convolution (a single spatial filter, typically 3 × 3, applied to each input channel separately) followed by a pointwise convolution with a 1 × 1 kernel (with depth equal to the number of channels) that iterates through every single point and combines the channels. MobileNet-v1 uses 13 such blocks. Because researchers were focused on obtaining better results, MobileNet-v2 was introduced as an improved version of MobileNet-v1. The first important change is that the network is now composed of 17 bottleneck blocks, each containing an expansion module, a depthwise convolution, and a pointwise convolution. The expansion module increases the size of the representation within the bottleneck block to allow the NN to learn a richer function. The pointwise convolution then projects the data back “down” to the initial size. Another important feature introduced in MobileNet-v2 is the residual connections around the bottleneck blocks, which address the “vanishing gradient” problem, as in the case of ResNet. Both versions end with a global average pooling layer, followed by a fully connected classification layer and a Softmax layer.
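The structure of a MobileNet-v2 bottleneck block (expand, filter per channel, project back down) can be illustrated by counting its weights. The sketch below assumes a 3 × 3 depthwise kernel and an expansion factor of 6; both are assumptions for this example rather than values stated above, biases and normalization parameters are ignored, and the function names are hypothetical.

```python
def bottleneck_weights(in_ch, out_ch, expansion=6, k=3):
    """Weight counts of one expand -> depthwise -> project bottleneck block."""
    hidden = in_ch * expansion    # channels inside the block
    expand = in_ch * hidden       # 1 x 1 expansion convolution
    depthwise = k * k * hidden    # one k x k filter per hidden channel
    project = hidden * out_ch     # 1 x 1 projection back "down"
    return {
        "hidden": hidden,
        "expand": expand,
        "depthwise": depthwise,
        "project": project,
        "total": expand + depthwise + project,
    }

def uses_residual(in_ch, out_ch, stride):
    """A skip connection needs matching input and output shapes."""
    return stride == 1 and in_ch == out_ch

block = bottleneck_weights(24, 24)
print(block)
print(uses_residual(24, 24, stride=1), uses_residual(24, 32, stride=2))
```

Most of the weights sit in the two 1 × 1 convolutions, while the depthwise step, which does the spatial filtering, stays comparatively cheap.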

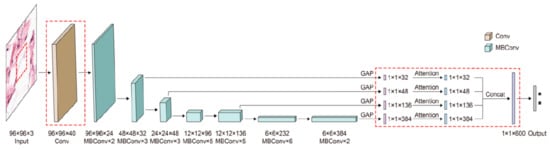

2.8. EfficientNet

As mentioned in previous sections, researchers strive for better results in terms of ACC and other performance metrics, and the trend is to design deeper CNNs. For example, ResNet can be scaled up to ResNet 200 by increasing the number of layers. The authors in [92] propose a novel model scaling approach that uses a compound coefficient to scale up CNNs in a more structured manner: the method uniformly scales each dimension (depth, width, and input resolution) with a fixed set of scaling coefficients. The authors also demonstrated the effectiveness of the proposed method by scaling up MobileNets and ResNets. In the same paper, they also built different versions of EfficientNet (EfficientNet B0–B7), all of them with better ACC than the networks with which they were compared. A recent paper [32] used EfficientNet to improve ACC for pigmented SL classification. The architecture (Figure 15) is based on MBConv blocks (inverted residual blocks), originally applied in MobileNet-v2 [93].

Figure 15. EfficientNet architecture [93].
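Compound scaling can be sketched as three multipliers driven by a single coefficient φ. The base values α = 1.2, β = 1.1, and γ = 1.15 used below are the ones recalled from the EfficientNet paper and should be treated as an assumption here, as should the function name; the cost estimate relies on the usual approximation that FLOPs grow linearly with depth and quadratically with width and resolution.

```python
# Base multipliers (assumed values, recalled from the EfficientNet paper).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15

def compound_scale(phi):
    """Depth, width, and resolution multipliers for compound coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    # Approximate relative cost: linear in depth, quadratic in width/resolution.
    flops = depth * width ** 2 * resolution ** 2
    return {"depth": depth, "width": width,
            "resolution": resolution, "flops": flops}

for phi in range(4):
    s = compound_scale(phi)
    print(phi, round(s["depth"], 3), round(s["width"], 3),
          round(s["resolution"], 3), round(s["flops"], 2))
```

Because α · β² · γ² is close to 2, each increment of φ roughly doubles the computational cost.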

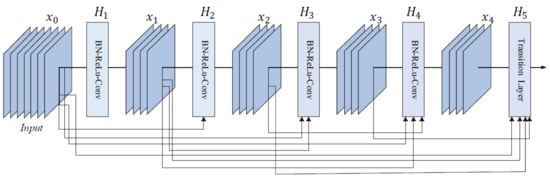

2.9. DenseNet

DenseNet is a CNN family often used in SL diagnosis. Examples of papers using DenseNet (especially DenseNet-201) are [1][26][32], all published in 2020. DenseNet therefore represents a trend in recently published papers because of its efficiency and better ACC. In the initial paper [94], the authors introduced densely connected layers, thus modifying the standard CNN architecture as in Figure 16. In DenseNet, each layer receives additional inputs from all preceding layers and passes its own feature maps to all subsequent layers. In this way, each layer obtains knowledge from previous layers. Compared with ResNet, this design is reported to give a stronger gradient flow, more diversified features, and a smaller network size. DenseNet-121, DenseNet-169, DenseNet-201, and DenseNet-264 are DenseNet networks presented in different works.

Figure 16. Five-layer DenseNet architecture [94].
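Because every layer in a dense block receives the concatenated feature maps of all preceding layers, the number of input channels grows linearly with the layer index. The sketch below makes that bookkeeping explicit; the initial channel count (64), growth rate (32), and block length (6) are illustrative assumptions, and the function name is hypothetical.

```python
def dense_block_inputs(initial_channels, growth_rate, num_layers):
    """Input channel count seen by each layer of a dense block."""
    return [initial_channels + growth_rate * i for i in range(num_layers)]

# Illustrative values (assumptions for this example).
inputs = dense_block_inputs(initial_channels=64, growth_rate=32, num_layers=6)
block_output = 64 + 32 * 6  # block input plus every layer's new feature maps

print(inputs)        # [64, 96, 128, 160, 192, 224]
print(block_output)  # 256
```

Each layer only has to produce a small number of new feature maps (the growth rate), which is one reason dense networks can stay compact.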

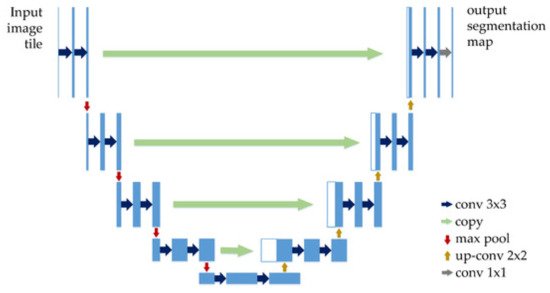

2.10. U-Net

Recent papers such as [44] used the U-Net CNN for SL segmentation. As can be seen in Figure 17, U-Net has a “U” shape and is composed of 23 convolutional layers. After each max pooling operation, the number of feature channels doubles until it reaches 1024; it is then halved after each 2 × 2 up-conv block. The architecture contains four sections: the encoder, the bottleneck, the decoder, and the skip connections (Figure 17). The bottleneck is the section between the down-sampling path (encoder) and the up-sampling path (decoder), and it contains the smallest feature maps and the largest number of filters. The skip connections link the corresponding blocks of the encoder and decoder.

Figure 17. U-Net architecture [95].
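The channel progression along the “U” can be written down explicitly. The sketch below assumes 64 channels at the first encoder level and four down-sampling steps, which is consistent with the peak of 1024 channels mentioned above but is otherwise an assumption of this example; the function name is hypothetical.

```python
def unet_channels(base=64, depth=4):
    """Feature channel counts along the encoder, bottleneck, and decoder."""
    encoder = [base * 2 ** i for i in range(depth)]  # doubles after each pooling
    bottleneck = base * 2 ** depth                   # largest number of filters
    decoder = list(reversed(encoder))                # halves after each up-conv
    return encoder, bottleneck, decoder

encoder, bottleneck, decoder = unet_channels()
print(encoder, bottleneck, decoder)
# [64, 128, 256, 512] 1024 [512, 256, 128, 64]

# Skip connections pair encoder and decoder levels with equal channel counts.
skip_pairs = list(zip(encoder, reversed(decoder)))
print(skip_pairs)
```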

According to the original paper [96], U-Net achieved very good performance on very different biomedical segmentation applications. This is one of the important reasons why researchers tend to use it in Me detection and segmentation-related papers.

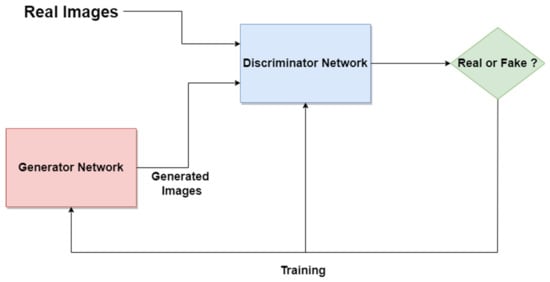

2.11. Generative Adversarial Network

The Generative Adversarial Network (GAN) is another type of artificial NN used in the design of Me and SL diagnosis and segmentation systems. The GAN is composed of two different networks (main blocks), as can be seen in Figure 18. The first is the generator network, which learns to generate realistic data, while the second is the discriminator network, which learns to detect fake data and not to classify them as real. The two networks compete in an adversarial zero-sum game [97], each trying to optimize its own objective function. GAN was proposed for image synthesis tasks. Starting from this idea, the GAN is used in melanoma segmentation as a generative model based on supervised learning.

Figure 18. GAN standard network architecture.
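The zero-sum game can be made concrete with the standard GAN value function V(D, G) = E[log D(x)] + E[log(1 − D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. The sketch below evaluates this quantity from lists of discriminator outputs; the function name and the sample probabilities are hypothetical, and no actual networks are trained.

```python
import math

def gan_value(d_real, d_fake):
    """Estimate V(D, G) from discriminator outputs (probabilities of 'real').

    d_real: outputs D(x) on real samples.
    d_fake: outputs D(G(z)) on generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
undecided = gan_value([0.5, 0.5], [0.5, 0.5])

# A discriminator that separates the two well scores a higher value.
confident = gan_value([0.9, 0.9], [0.1, 0.1])

print(round(undecided, 4), round(confident, 4))
```

The undecided case evaluates to −2 log 2, the value reached when the generator's samples are indistinguishable from real data.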

According to our research, examples of research papers in this domain are [50][51][52][98], all of them proposing modified variants of GANs, such as SPGGAN (Self-attention Progressive Growing of Generative Adversarial Network), DCGAN (Deep Convolutional Generative Adversarial Network), DDGAN (Deeply Discriminated Generative Adversarial Network), LAPGAN (Laplacian Generative Adversarial Network), etc. There were other research papers involving the combination of GANs with other CNNs, such as Xception, Inception v3, etc. One example is [38], which presents an ensemble strategy of group decision for an accurate diagnosis.

References

- Adegun, A.; Viriri, S. FCN-based DenseNet framework for automated detection and classification of skin lesions in dermoscopy images. IEEE Access 2020, 8, 150377–150396.

- Matthews, N.H.; Li, W.Q.; Qureshi, A.A.; Weinstock, M.A.; Cho, E. Epidemiology of melanoma. In Cutaneous Melanoma: Etiology and Therapy; Ward, W.H., Farma, J.M., Eds.; Codon Publications: Brisbane, Australia, 2017.

- Lideikaitė, A.; Mozūraitienė, J.; Letautienė, S. Analysis of prognostic factors for melanoma patients. Acta Med. Litu. 2017, 24, 25–34.

- Sun, X.; Zhang, N.; Yin, C.; Zhu, B.; Li, X. Ultraviolet radiation and melanomagenesis: From mechanism to immunotherapy. Front. Oncol. 2020, 10, 951.

- El-Khatib, H.; Popescu, D.; Ichim, L. Deep learning–based methods for automatic diagnosis of skin lesions. Sensors 2020, 20, 1753.

- Ichim, L.; Popescu, D. Melanoma detection using an objective system based on multiple connected neural networks. IEEE Access 2020, 8, 179189–179202.

- Yu, L.; Chen, H.; Dou, Q.; Qin, J.; Heng, P. Automated melanoma recognition in dermoscopy images via very deep residual networks. IEEE Trans. Med. Imaging 2017, 36, 994–1004.

- Vestergaard, M.E.; Macaskill, P.H.P.M.; Holt, P.E.; Menzies, S.W. Dermoscopy compared with naked eye examination for the diagnosis of primary melanoma: A meta-analysis of studies performed in a clinical setting. Br. J. Dermatol. 2008, 159, 669–676.

- Ara, A.; Deserno, T.M. A systematic review of automated melanoma detection in dermatoscopic images and its ground truth data. In Proceedings of the SPIE Medical Imaging 2012: Image Perception, Observer Performance, and Technology Assessment, San Diego, CA, USA, 12–16 August 2012; pp. 83181I-1–83181I-11.

- Fabbrocini, G.; De Vita, V.; Pastore, F.; D’Arco, V.; Mazzella, C.; Annunziata, M.C.; Cacciapuoti, S.; Mauriello, M.C.; Monfrecola, A. Teledermatology: From prevention to diagnosis of nonmelanoma and melanoma skin cancer. Int. J. Telemed. Appl. 2011, 17, 125762.

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Berking, C.; Haferkamp, S.; Hauschild, A.; Weichenthal, M.; Klode, J.; Schadendorf, D.; Holland-Letz, T.; et al. Deep neural networks are superior to dermatologists in melanoma image classification. Eur. J. Cancer 2019, 119, 11–17.

- Esteva, A.; Kuprel, B.; Novoa, R.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Olugbara, O.O.; Taiwo, T.B.; Heukelman, D. Segmentation of melanoma skin lesion using perceptual color difference saliency with morphological analysis. Math. Probl. Eng. 2018, 2018, 1524286.

- Gutman, D.; Codella, N.C.F.; Celebi, E.; Helba, B.; Marchetti, M.; Mishra, N.; Halpern, A. Skin lesion analysis toward melanoma detection: A challenge at the international symposium on biomedical imaging (ISBI) 2016, hosted by the international skin imaging collaboration (ISIC). arXiv 2016, arXiv:1605.01397.

- Oliveira, R.B.; Filho, E.M.; Ma, Z.; Papa, J.P.; Pereira, A.S.; Tavares, J.M.R.S. Computational methods for the image segmentation of pigmented skin lesions: A review. Comput. Methods Programs Biomed. 2016, 131, 127–141.

- Merjulah, R.; Chandra, J. Classification of myocardial ischemia in delayed contrast enhancement using machine learning. In Intelligent Data Analysis for Biomedical Applications, 1st ed.; Hemanth, D.J., Gupta, D., Balas, V.E., Eds.; Academic Press: Cambridge, MA, USA, 2019; pp. 209–235.

- Guo, Y.; Ashour, A.S. Neutrosophic sets in dermoscopic medical image segmentation. In Neutrosophic Set in Medical Image Analysis, 1st ed.; Academic Press: Cambridge, MA, USA, 2019; pp. 229–243.

- Alcon, J.F.; Ciuhu, C.; ten Kate, W.; Heinrich, A.; Uzunbajakava, N.; Krekels, G.; Siem, D.; de Haan, G. Automatic imaging system with decision support for inspection of pigmented skin lesions and melanoma diagnosis. IEEE J. Sel. Top. Signal Process. 2009, 3, 14–25.

- Capdehourat, G.; Corez, A.; Bazzano, A.; Alonso, R.; Musé, P. Toward a combined tool to assist dermatologists in melanoma detection from dermoscopic images of pigmented skin lesions. Pattern Recognit. Lett. 2011, 32, 2187–2196.

- Ramezani, M.; Karimian, A.; Moallem, P. Automatic detection of malignant melanoma using macroscopic images. J. Med. Signals Sens. 2014, 4, 281–290.

- Mitchell, T.M. Machine Learning; OCLC 36417892; McGraw Hill: New York, NY, USA, 1997; pp. 1–432.

- Bajwa, M.N.; Muta, K.; Malik, M.I.; Siddiqui, S.A.; Braun, S.A.; Homey, B.; Dengel, A.; Ahmed, S. Computer-aided diagnosis of skin diseases using deep neural networks. Appl. Sci. 2020, 10, 2488.

- Jojoa Acosta, M.F.; Caballero Tovar, L.Y.; Garcia-Zapirain, M.B.; Percybrooks, W.S. Melanoma diagnosis using deep learning techniques on dermatoscopic images. BMC Med. Imaging 2021, 21, 6.

- Ahmad, B.; Usama, M.; Huang, C.; Hwang, K.; Hossain, M.S.; Muhammad, G. Discriminative feature learning for skin disease classification using deep convolutional neural network. IEEE Access 2020, 8, 39025–39033.

- Almeida, M.A.M.; Santos, I.A.X. Classification models for skin tumor detection using texture analysis in medical images. J. Imaging 2020, 6, 51.

- Al-Masni, M.A.; Kim, D.-H.; Kim, T.-S. Multiple skin lesions diagnostics via integrated deep convolutional networks for segmentation and classification. Comput. Methods Programs Biomed. 2020, 190, 105351.

- Chaturvedi, S.S.; Tembhurne, J.V.; Diwan, T. A multi-class skin cancer classification using deep convolutional neural networks. Multimed. Tools Appl. 2020, 79, 28477–28498.

- Almaraz-Damian, J.-A.; Ponomaryov, V.; Sadovnychiy, S.; Castillejos-Fernandez, H. Melanoma and nevus skin lesion classification using handcraft and deep learning feature fusion via mutual information measures. Entropy 2020, 22, 484.

- Goceri, E. Analysis of deep networks with residual blocks and different activation functions: Classification of skin diseases. In Proceedings of the Ninth International Conference on Image Processing Theory, Tools and Applications (IPTA), Istanbul, Turkey, 6–9 November 2019; pp. 1–6.

- Harangi, B. Skin lesion classification with ensembles of deep convolutional neural networks. J. Biomed. Inform. 2018, 86, 25–32.

- Kassani, S.H.; Kassani, P.H. A comparative study of deep learning architectures on melanoma detection. Tissue Cell 2019, 58, 76–83.

- Lucius, M.; De All, J.; De All, J.A.; Belvisi, M.; Radizza, L.; Lanfranconi, M.; Lorenzatti, V.; Galmarini, C.M. Deep neural frameworks improve the accuracy of general practitioners in the classification of pigmented skin lesions. Diagnostics 2020, 10, 969.

- Mendes, D.B.; da Silva, N.C. Skin lesions classification using convolutional neural networks in clinical images. arXiv 2018, arXiv:1812.02316.

- Song, J.; Li, J.; Ma, S.; Tang, J.; Guo, F. Melanoma classification in dermoscopy images via ensemble learning on deep neural network. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Seoul, Korea, 16–19 December 2020; pp. 751–756.

- Akram, T.; Lodhi, H.M.J.; Naqvi, S.R.; Naeem, S.; Alhaisoni, M.; Ali, M.; Haider, S.A.; Qadri, N.N. A multilevel features selection framework for skin lesion classification. Hum. Cent. Comput. Inf. Sci. 2020, 10, 12.

- Kassem, M.A.; Hosny, K.M.; Fouad, M.M. Skin lesions classification into eight classes for ISIC 2019 using deep convolutional neural network and transfer learning. IEEE Access 2020, 8, 114822–114832.

- Albert, B.A. Deep learning from limited training data: Novel segmentation and ensemble algorithms applied to automatic melanoma diagnosis. IEEE Access 2020, 8, 31254–31269.

- Gong, A.; Yao, X.; Lin, W. Classification for dermoscopy images using convolutional neural networks based on the ensemble of individual advantage and group decision. IEEE Access 2020, 8, 155337–155351.

- Adegun, A.; Viriri, S. Deep learning model for skin lesion segmentation: Fully convolutional network. Lect. Notes Comput. Sci. 2019, 11663, 232–242.

- Ali, R.; Hardie, R.C.; Narayanan Narayanan, B.; De Silva, S. Deep learning ensemble methods for skin lesion analysis towards melanoma detection. In Proceedings of the IEEE National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 15–19 July 2019; pp. 311–316.

- Codella, N.; Nguyen, Q.-B.; Pankanti, S.; Gutman, D.; Helba, B.; Halpern, A.; Smith, J.R. Deep learning ensembles for melanoma recognition in dermoscopy images. arXiv 2016, arXiv:1610.04662.

- Izadi, S.; Mirikharaji, Z.; Kawahara, J.; Hamarneh, G. Generative adversarial networks to segment skin lesions. In Proceedings of the IEEE 15th International Symposium on Biomedical Imaging (ISBI), Washington, DC, USA, 4–7 April 2018; pp. 881–884.

- Lin, B.S.; Michael, K.; Kalra, S.; Tizhoosh, H.R. Skin lesion segmentation: U-Nets versus clustering. In Proceedings of the IEEE Symposium Series on Computational Intelligence (SSCI), Honolulu, HI, USA, 27 November–1 December 2017; pp. 1–7.

- Sanjar, K.; Bekhzod, O.; Kim, J.; Kim, J.; Paul, A.; Kim, J. Improved U-Net: Fully Convolutional Network Model for Skin-Lesion Segmentation. Appl. Sci. 2020, 10, 3658.

- Seeja, R.D.; Suresh, A. Deep learning based skin lesion segmentation and classification of melanoma using support vector machine (SVM). Asian Pac. J. Cancer Prev. 2019, 20, 1555–1561.

- Tran, S.-T.; Cheng, C.-H.; Nguyen, T.-T.; Le, M.-H.; Liu, D.-G. TMD-Unet: Triple-Unet with multi-scale input features and dense skip connection for medical image segmentation. Healthcare 2021, 9, 54.

- Wang, N.; Peng, Y.; Wang, Y.; Wang, M. Skin lesion image segmentation based on adversarial networks. KSII Trans. Internet Inf. Syst. 2018, 12, 2826–2840.

- Wei, L.; Ding, K.; Hu, H. Automatic skin cancer detection in dermoscopy images based on ensemble lightweight deep learning network. IEEE Access 2020, 8, 99633–99647.

- Zafar, K.; Gilani, S.O.; Waris, A.; Ahmed, A.; Jamil, M.; Khan, M.N.; Sohail Kashif, A. Skin lesion segmentation from dermoscopic images using convolutional neural network. Sensors 2020, 20, 1601.

- Bauer, C.; Albarqouni, S.; Navab, N. MelanoGANs: High resolution skin lesion synthesis with GANs. arXiv 2018, arXiv:1804.04338.

- Bi, L.; Feng, D.; Fulham, M.; Kim, J. Improving skin lesion segmentation via stacked adversarial learning. In Proceedings of the IEEE 16th International Symposium on Biomedical Imaging (ISBI), Venice, Italy, 8–11 April 2019; pp. 1100–1103.

- Bissoto, A.; Perez, F.; Valle, E.; Avila, S. Skin lesion synthesis with Generative Adversarial Networks. Lect. Notes Comput. Sci. 2018, 11041, 294–302.

- Gong, A.; Yao, X.; Lin, W. Dermoscopy image classification based on StyleGANs and decision fusion. IEEE Access 2020, 8, 70640–70650.

- Gu, Y.; Ge, Z.; Bonnington, C.P.; Zhou, J. Progressive transfer learning and adversarial domain adaptation for cross-domain skin disease classification. IEEE J. Biomed. Health Inform. 2020, 24, 1379–1393.

- Qin, Z.; Liu, Z.; Zhu, P.; Xue, Y. A GAN-based image synthesis method for skin lesion classification. Comput. Methods Programs Biomed. 2020, 195, 105568.

- Yi, X.; Walia, E.; Babyn, P. Unsupervised and semi-supervised learning with categorical Generative Adversarial Networks assisted by Wasserstein distance for dermoscopy image classification. arXiv 2018, arXiv:1804.03700.

- Zhao, C.; Shuai, R.; Ma, L.; Liu, W.; Hu, D.; Wu, M. Dermoscopy image classification based on StyleGAN and DenseNet201. IEEE Access 2021, 9, 8659–8679.

- Goceri, E. Deep learning-based classification of facial dermatological disorders. Comput. Biol. Med. 2021, 128, 104118.

- Ashraf, R.; Afzal, S.; Rehman, A.U.; Gul, S.; Baber, J.; Bakhtyar, M.; Mehmood, I.; Song, O.-Y.; Maqsood, M. Region-of-interest based transfer learning assisted framework for skin cancer detection. IEEE Access 2020, 8, 147858–147871.

- Dorj, U.O.; Lee, K.K.; Choi, J.Y.; Lee, M. The skin cancer classification using deep convolutional neural network. Multimed. Tools Appl. 2018, 77, 9909–9924.

- Kaymak, R.; Kaymak, C.; Ucar, A. Skin lesion segmentation using fully convolutional networks: A comparative experimental study. Expert Syst. Appl. 2020, 161, 113742.

- Osowski, S.; Les, T. Deep learning ensemble for melanoma recognition. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.

- Gessert, N.; Nielsen, M.; Shaikh, M.; Werner, R.; Schlaefer, A. Skin lesion classification using ensembles of multi-resolution EfficientNets with metadata. MethodsX 2020, 7, 100864.

- Ha, Q.; Liu, B.; Liu, F. Identifying melanoma images using efficient net ensemble: Winning solution to the SIIM-ISIC melanoma classification challenge. arXiv 2020, arXiv:2010.05351.

- Jiahao, W.; Xingguang, J.; Yuan, W.; Luo, Z.; Yu, Z. Deep neural network for melanoma classification in dermoscopic images. In Proceedings of the IEEE International Conference on Consumer Electronics and Computer Engineering (ICCECE), Guangzhou, China, 15–17 January 2021; pp. 666–669.

- Karki, S.; Kulkarni, P.; Stranieri, A. Melanoma classification using EfficientNets and Ensemble of models with different input resolution. In Proceedings of the Australasian Computer Science Week Multiconference (ACSW), Dunedin, New Zealand, 1–5 February 2021; pp. 1–5, Article No.: 17.

- Pham, T.-C.; Doucet, A.; Luong, C.-M.; Tran, C.-T.; Hoang, V.-D. Improving skin-disease classification based on customized loss function combined with balanced mini-batch logic and real-time image augmentation. IEEE Access 2020, 8, 150725–150737.

- Putra, T.A.; Rufaida, S.I.; Leu, J. Enhanced skin condition prediction through machine learning using dynamic training and testing augmentation. IEEE Access 2020, 8, 40536–40546.

- Zhang, R. Melanoma detection using convolutional neural network. In Proceedings of the IEEE International Conference on Consumer Electronics and Computer Engineering (ICCECE), Guangzhou, China, 15–17 January 2021; pp. 75–78.

- Huang, L.; Zhao, Y.-g.; Yang, T.-j. Skin lesion segmentation using object scale-oriented fully convolutional neural networks. Signal Image Video Process. 2019, 13, 431–438.

- Jaworek-Korjakowska, J.; Kleczek, P.; Gorgon, M. Melanoma thickness prediction based on convolutional neural network with VGG-19 model transfer learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 2748–2756.

- Kwasigroch, A.; Grochowski, M.; Mikołajczyk, A. Neural architecture search for skin lesion classification. IEEE Access 2020, 8, 9061–9071.

- Toğaçar, M.; Cömert, Z.; Ergen, B. Intelligent skin cancer detection applying autoencoder, MobileNetV2 and spiking neural networks. Chaos Solitons Fractals 2021, 144, 110714.

- Albahli, S.; Nida, N.; Irtaza, A.; Yousaf, M.H.; Mahmood, M.T. Melanoma lesion detection and segmentation using YOLOv4-DarkNet and active contour. IEEE Access 2020, 8, 198403–198414.

- Banerjee, S.; Singh, S.K.; Chakraborty, A.; Das, A.; Bag, R. Melanoma diagnosis using deep learning and fuzzy logic. Diagnostics 2020, 10, 577.

- Ünver, H.M.; Ayan, E. Skin lesion segmentation in dermoscopic images with combination of YOLO and GrabCut algorithm. Diagnostics 2019, 9, 72.

- Al-masni, M.A.; Al-antari, M.A.; Choi, M.-T.; Han, S.-M.; Kim, T.-S. Skin lesion segmentation in dermoscopy images via deep full resolution convolutional networks. Comput. Methods Programs Biomed. 2018, 162, 221–231.

- Goyal, M.; Oakley, A.; Bansal, P.; Dancey, D.; Yap, M.H. Skin lesion segmentation in dermoscopic images with ensemble deep learning methods. IEEE Access 2020, 8, 4171–4181.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the 26th Annual Conference on Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1106–1114.

- Alom, Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, S.; Van Essen, B.C.; Awwal, A.A.S.; Asari, V.K. The history began from AlexNet: A comprehensive survey on deep learning approaches. arXiv 2018, arXiv:1803.01164.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Nguyen, L.D.; Lin, D.; Lin, Z.; Cao, J. Deep CNNs for microscopic image classification by exploiting transfer learning and feature concatenation. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Deng, M.; Goy, A.; Li, S.; Arthur, K.; Barbastathis, G. Probing shallower: Perceptual loss trained phase extraction neural network (PLT-PhENN) for artifact-free reconstruction at low photon budget. Opt. Express 2020, 28, 2511–2535.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. arXiv 2015, arXiv:1506.02640.

- Koylu, C.; Zhao, C.; Shao, W. Deep neural networks and kernel density estimation for detecting human activity patterns from geo-tagged images: A case study of birdwatching on flickr. ISPRS Int. J. Geo Inf. 2019, 8, 45.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Leonardo, M.M.; Carvalho, T.J.; Rezende, E.; Zucchi, R.; Faria, F.A. Deep feature-based classifiers for fruit fly identification (Diptera: Tephritidae). In Proceedings of the 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018; pp. 41–47.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv 2020, arXiv:1905.11946.

- Wang, J.; Liu, Q.; Xie, H.; Yang, Z.; Zhou, H. Boosted EfficientNet: Detection of Lymph Node Metastases in Breast Cancer Using Convolutional Neural Networks. Cancers 2021, 13, 661.

- Huang, W.; Feng, J.; Wang, H.; Sun, L. A New Architecture of Densely Connected Convolutional Networks for Pan-Sharpening. ISPRS Int. J. Geo Inf. 2020, 9, 242.

- Yang, D.; Liu, G.; Ren, M.; Xu, B.; Wang, J. A Multi-Scale Feature Fusion Method Based on U-Net for Retinal Vessel Segmentation. Entropy 2020, 22, 811.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. Lect. Notes Comput. Sci. 2015, 9351, 234–241.

- Benjdira, B.; Ammar, A.; Koubaa, A.; Ouni, K. Data-efficient domain adaptation for semantic segmentation of aerial imagery using Generative Adversarial Networks. Appl. Sci. 2020, 10, 1092.

- Abdelhalim, I.S.A.; Mohamed, M.F.; Mahdy, Y.B. Data augmentation for skin lesion using self-attention based progressive generative adversarial network. Expert Syst. Appl. 2021, 165, 113922.

Update Date: 26 Jan 2022
