

Mata, O. Designing a Robot against COVID-19—Robocov. Encyclopedia. Available online: https://encyclopedia.pub/entry/17890 (accessed on 08 February 2026).


Mata, O. (2022, January 07). Designing a Robot against COVID-19—Robocov. In Encyclopedia. https://encyclopedia.pub/entry/17890


Robocov is a low-cost robot designed at Tecnologico de Monterrey, Mexico, as a rapid response to the COVID-19 pandemic. It implements artificial intelligence and the S4 concept, which provides flexibility in hardware and software and lets Robocov perform numerous tasks. Thus, Robocov can positively impact public safety, clinical care, continuity of work, quality of life, laboratory and supply chain automation, and non-hospital care. Its mechanical structure and software allow Robocov to complete support tasks effectively, so it can be integrated as a technological tool.

COVID-19

S4 products

1. Introduction

Human-robot interaction has grown extremely fast because COVID-19 limits human-to-human interaction: people who carry COVID-19 without being detected can promote an aggressive spread of the disease, so people have to limit close contact with one another [1].

On the other hand, designing robots with the S4 concept can be beneficial because the degree of each feature (sensing, smart, sustainable, and social) can be defined up front to speed up the design process. Products designed using the S4 concept are presented in [2][3]. These products show a significant advantage because each feature can be implemented at a different level; thus, the products can be customized, and the selection of materials can also be constrained. Besides, the S4 concept increases the speed of the design process and decreases the product’s cost, because the level of each S4 feature can be chosen to reduce the total cost. For instance, if the cost of sensors is unaffordable, the level of the smart feature can be raised to create a more suitable design with a low-cost digital system.

In [4], several examples of S4 products are presented for agricultural applications. In addition, several communication channels between robots and humans can be used, and they have to be tailored to specific requirements. The communication channels between the end user and the robot can be classified by the type of stimulus the robot receives: visual, tactile, smell, taste, or auditory. The received signal can then be interpreted and adjusted to determine the actions that need to be taken.

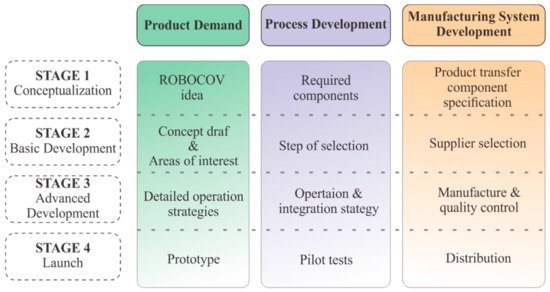

The communication channels are designed to identify and meet the end users’ needs, so the communication channel is considered part of the social, smart, and sensing system. Moreover, robots must follow the moral and cultural norms of the geographical region or application in which they are deployed against COVID-19; they must be designed to be adopted and accepted swiftly by communities. Figure 1 shows the design process stages for building the reconfigurable robot.

Figure 1. Design and manufacturing stages.

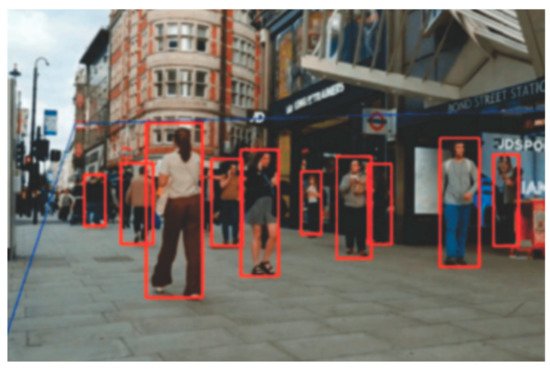

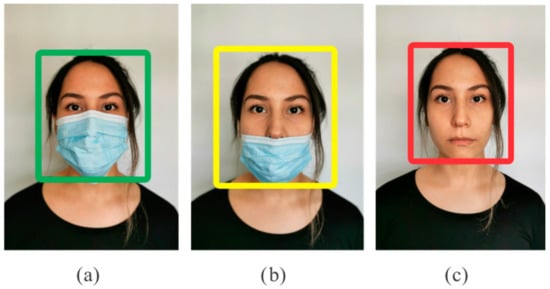

In addition, artificial intelligence (AI) has emerged as a powerful computational tool when data are available; according to [5], it is possible to extract features automatically for object classification. Thus, AI is implemented to detect whether a face mask is worn correctly, and it can also be used to measure social distance. Moreover, a fuzzy logic controller was designed to avoid collisions with objects. For repetitive tasks such as sanitization, a line-follower algorithm can detect a line on the ground, which allows the robot to record the trajectory and complete it autonomously (see Figure 2).

Figure 2. Following a line for repetitive tasks.
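The line-following idea can be illustrated with a minimal sketch. The entry does not describe the sensor or the control law, so this example assumes a row of downward-facing reflectance sensors (normalized so that higher values mean "line") and a simple proportional steering rule on a two-wheel base; the names `line_position` and `steer` and all gains are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def line_position(readings: Sequence[float], threshold: float = 0.5) -> Optional[float]:
    """Return the line offset in [-1, 1] (negative means the line is to the left).

    `readings` are normalized sensor values ordered left to right.
    Returns None when no sensor sees the line.
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two sensors")
    active = [(i, r) for i, r in enumerate(readings) if r >= threshold]
    if not active:
        return None
    total = sum(r for _, r in active)
    centroid = sum(i * r for i, r in active) / total  # weighted sensor index
    return 2.0 * centroid / (n - 1) - 1.0  # map index 0..n-1 to -1..1


def _clamp(value: float) -> float:
    return max(-1.0, min(1.0, value))


def steer(offset: Optional[float], base_speed: float = 0.4, gain: float = 0.3) -> Tuple[float, float]:
    """Proportional steering: return (left, right) wheel speeds in [-1, 1]."""
    if offset is None:
        return 0.0, 0.0  # line lost: stop rather than guess
    # Line to the right (offset > 0): speed up the left wheel to turn right.
    return _clamp(base_speed + gain * offset), _clamp(base_speed - gain * offset)
```

Logging the `(left, right)` pairs produced along the route would be one simple way to record a trajectory for later replay, although the entry does not say how Robocov stores it.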

2. AI Integrated on the Robot

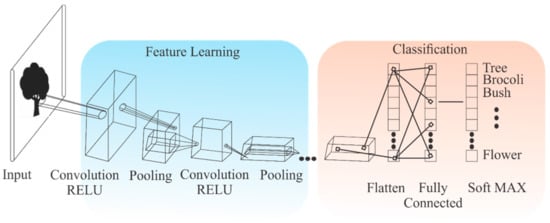

AI has increased its impact owing to new methodologies, advanced digital systems, and the availability of data for training and evaluating AI methods [6]. Convolutional neural networks have been used in different image classification applications. During the COVID-19 pandemic, face mask and social distance detection have become essential in public places. Hence, convolutional neural networks have proven to be a good alternative for meeting the recognition requirements of the COVID-19 pandemic [7][8][9][10].

The designed robot integrates essential features needed to control the spread of COVID-19, such as monitoring social distance and detecting face masks. These were selected as autonomous tasks because they are difficult for a human operator to evaluate in crowded spaces. Because enough databases are available for training under those conditions, a convolutional neural network (CNN) was implemented [11][12]. The same navigation camera deployed on the robot can be used to evaluate social distance and detect face masks, and the autonomous detection gives the operator a backup for both tasks. Figure 3 shows the general topology of a CNN, Figure 4 illustrates social distance detection, and Figure 5 illustrates face mask detection. The inference process uses a model pre-trained on approximately 2000 images, with some data augmentation techniques applied. The robot only sends the image to a PC, where the CNN is run locally, which relieves the onboard processor of the computational load. The minimum specifications of the PC required to run the CNN are:

Figure 3. The general CNN topology, from the input to the classification result.
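To make the input-to-classification path concrete, the following is a minimal sketch of a single forward pass through a tiny CNN written in plain Python. It is not the network used on Robocov: the layer sizes, the single convolution kernel, and the dense weights are all placeholders that the caller supplies, and no training is shown.

```python
import math
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding) 2-D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise rectified linear unit."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2(fmap: Matrix) -> Matrix:
    """2x2 max pooling with stride 2 (an odd trailing row or column is dropped)."""
    return [
        [max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
         for c in range(0, len(fmap[0]) - 1, 2)]
        for r in range(0, len(fmap) - 1, 2)
    ]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over class scores."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(image: Matrix, kernel: Matrix, dense_w: Matrix, dense_b: List[float]) -> List[float]:
    """Input -> conv -> ReLU -> pool -> flatten -> dense -> class probabilities."""
    pooled = max_pool2(relu(conv2d(image, kernel)))
    features = [v for row in pooled for v in row]
    logits = [sum(w * x for w, x in zip(ws, features)) + b for ws, b in zip(dense_w, dense_b)]
    return softmax(logits)
```

With a 5x5 input and a 2x2 kernel, the convolution yields a 4x4 feature map, pooling reduces it to 2x2, and the dense layer therefore needs four weights per output class. A practical detector would stack many such layers and learn the weights from labeled images.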

Figure 4. Social distance detection.

Figure 5. Face mask detection: (a) correct use of the face mask; (b) incorrect use of the face mask; (c) face mask not detected.
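Once people have been detected, the social distance check itself reduces to a pairwise distance test. The sketch below assumes that each detection has already been mapped from image pixels to ground-plane coordinates in meters (a calibration step the entry does not describe); the function name and the 1.5 m default are illustrative only.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def too_close_pairs(positions: Sequence[Point], min_distance_m: float = 1.5) -> List[Tuple[int, int]]:
    """Return index pairs of people standing closer than `min_distance_m`.

    `positions` are ground-plane (x, y) coordinates in meters, one per
    detected person. The threshold is a placeholder, not a value from the source.
    """
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(positions), 2)
        if math.dist(a, b) < min_distance_m
    ]
```

For example, with people at `(0, 0)`, `(1, 0)`, and `(4, 0)`, only the first two are within 1.5 m of each other, so the function returns `[(0, 1)]`.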

3. General Description of the Robocov Systems and Subsystems

Robocov is a reconfigurable robot with multiple subsystems (modules), each designed to fulfill a specific objective. Each module is analyzed at four levels. Sensing (Level 1) describes each subsystem’s measuring and monitoring components. Smart (Level 2) lists the primary actuators or subsystems. Sustainable (Level 3) analyzes the economic, social, and environmental aspects behind the operation of each module. Finally, the social level describes the interaction of the subsystems with their environments and different users. This analysis is summarized in Table 1.

Table 1. S3 solution according to the implemented modules in the ROBOCOV unit.

| Description of the Main Implemented S3 Solutions | Disinfection system (two modules) | Vigilance system | Monitoring system | Cargo system |
|---|---|---|---|---|
| Sensing (Level 1) | Measuring and monitoring systems using: | Measuring and monitoring systems using: | Measuring and monitoring systems using: | Measuring and monitoring systems using: |
| Smart (Level 2) | Actuators: | Actuators: | Actuators: | Actuators: |
| Sustainable (Level 3) | Economic: | Economic: | Economic: | Economic: |
| Social features | The robot promotes changes in the behavior of the operator by: | The robot can improve its performance when the operator is changing their habits | The robot can have a direct interaction with the users, allowing a safe communication channel between users and operator | The robot functions as a safe delivery service. |

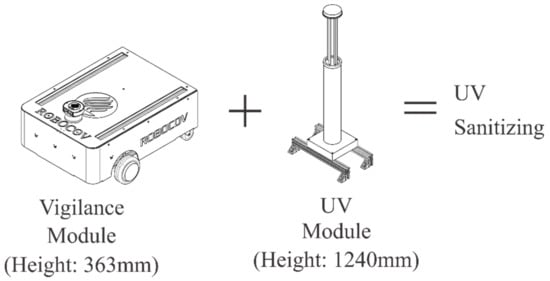

In Table 1, the first module is the disinfection system. Its main objective is to sanitize public spaces in an efficient and non-invasive manner, which is achieved with two separate modules. The first disinfection module is based on UV light for non-invasive disinfection. It allows Robocov to sanitize any surface or space within reach of the UV light (2.5 m from the source), given the correct exposure time (around 30 min). This module should only be used in empty spaces, because the UV light used for sanitizing has been shown to be harmful to a human user or operator in case of exposure. Remote control operation allows the Robocov UV light module to apply this disinfection method safely and efficiently. Figure 6 shows how this module is integrated into the Robocov platform.

Figure 6. UV disinfection system.
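The figures quoted for the UV module (a reach of 2.5 m and an exposure of around 30 min) lend themselves to a small planning sketch. The code below assumes the robot parks at a list of stops and dwells for one full exposure at each; the function names are hypothetical, travel time is ignored, and the interlock simply encodes the rule that the lamp runs only in empty spaces.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

UV_REACH_M = 2.5      # effective reach quoted in the text
EXPOSURE_MIN = 30.0   # approximate exposure time quoted in the text


def uncovered_targets(stops: Sequence[Point], targets: Sequence[Point],
                      reach_m: float = UV_REACH_M) -> List[Point]:
    """Targets that are farther than `reach_m` from every planned lamp stop."""
    return [t for t in targets if all(math.dist(s, t) > reach_m for s in stops)]


def total_exposure_minutes(stops: Sequence[Point],
                           minutes_per_stop: float = EXPOSURE_MIN) -> float:
    """Lamp-on time when the robot dwells one full exposure at each stop."""
    return len(stops) * minutes_per_stop


def uv_lamp_allowed(people_detected: int) -> bool:
    """Interlock: the lamp may only run when the space is empty."""
    return people_detected == 0
```

With stops at `(0, 0)` and `(4, 0)`, a surface at `(0, 3)` is 3 m from the nearest stop and is reported as uncovered, which tells the operator to add a stop or move an existing one; the two-stop plan needs about 60 min of lamp time.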

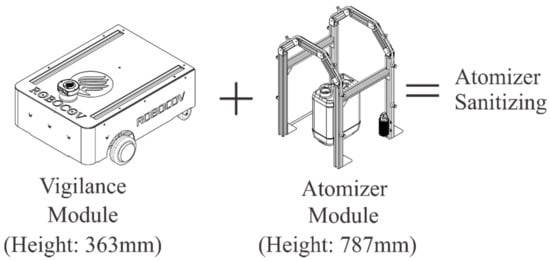

The second disinfection module uses atomizers mounted on a mechanically designed arch-type structure to distribute a sanitizing solution in the robot’s surroundings. This module requires a container to carry and supply the sanitizing solution, and a hose system feeds the distributed atomizers. A mini water pump injects the sanitizing solution from the container into the hose system. In this case, the remote operation of the atomizer module and the safe disinfection method make it suited for public use. Figure 7 shows the atomizer disinfection module and system. Both modules within the disinfection system are controlled by onboard Arduino and Raspberry Pi boards; these controller boards and the battery-powered system make the modules a safe, uncomplicated, and economical solution with minimal environmental impact.

Figure 7. Atomizer disinfection system.

The second system is the Vigilance system. It can be understood as the central driving unit, and its main objective is to move the robot as intended by the operator as efficiently as possible. To achieve this, onboard cameras and proximity sensors are installed to guide the operator during maneuvering. The operator issues commands via an Xbox controller, which is ergonomic and intuitive. This base module can be paired with any other Robocov module, operating cooperatively or individually.
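One way to picture how operator commands and proximity sensing could work together is sketched below. It is only an illustration: the entry does not specify the drive geometry or the fuzzy rule base, so this example assumes a two-wheel differential base, a single forward-facing distance reading, and a zero-order Sugeno controller with made-up breakpoints. Reading the Xbox controller itself is omitted; `throttle` and `turn` stand for joystick axes already normalized to [-1, 1].

```python
from typing import Tuple


def ramp_up(x: float, a: float, b: float) -> float:
    """Membership that rises linearly from 0 at `a` to 1 at `b`."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def fuzzy_speed_scale(distance_m: float) -> float:
    """Zero-order Sugeno controller: obstacle distance -> speed scale in [0, 1].

    The breakpoints (0.3 m, 1.0 m, 2.0 m) and rule outputs are illustrative only.
    """
    near = 1.0 - ramp_up(distance_m, 0.3, 1.0)
    far = ramp_up(distance_m, 1.0, 2.0)
    medium = max(0.0, 1.0 - near - far)
    # Rules: near -> stop, medium -> slow, far -> full speed.
    rules = ((near, 0.0), (medium, 0.4), (far, 1.0))
    total = sum(weight for weight, _ in rules)
    return sum(weight * output for weight, output in rules) / total


def arcade_drive(throttle: float, turn: float, obstacle_distance_m: float) -> Tuple[float, float]:
    """Mix joystick axes in [-1, 1] into (left, right) wheel commands.

    Only forward motion is scaled by the fuzzy speed limit; reversing away
    from an obstacle and turning in place are left untouched.
    """
    if throttle > 0.0:
        throttle *= fuzzy_speed_scale(obstacle_distance_m)
    left, right = throttle + turn, throttle - turn
    peak = max(1.0, abs(left), abs(right))  # keep both commands within [-1, 1]
    return left / peak, right / peak
```

The design choice here is that the controller assists rather than overrides: the operator keeps full authority over steering and reversing, while forward speed fades out smoothly as an obstacle approaches.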

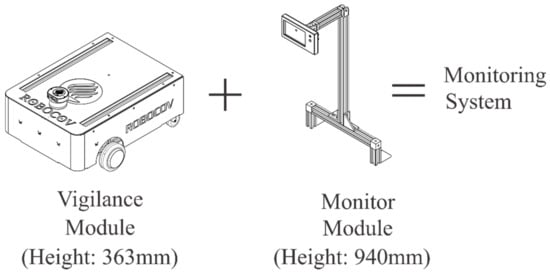

The third system is the Monitoring module. It measures the temperature of individual users with an installed proximity temperature sensor, and it interacts with students via a two-way communication channel and a graphical user interface on the front display. The onboard speaker and microphone provide a user-friendly interface for remote interaction between the users and the operator, allowing a personalized interaction with every student. This personalized attention is achieved with an RFID card reader, which identifies users when presented with a student or personnel ID. Figure 8 illustrates how this module can be assembled.

Figure 8. Monitoring system.
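A possible shape for the data produced by the monitoring module is sketched below. It pairs an RFID card read with a temperature reading and flags readings at or above a threshold. The record fields, the `directory` lookup, and the 37.5 °C default are all assumptions made for illustration; the entry does not state what threshold or data model Robocov uses.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ScreeningResult:
    card_id: str
    name: Optional[str]       # None when the card is not in the directory
    temperature_c: float
    needs_follow_up: bool


def screen_user(card_id: str, temperature_c: float, directory: Mapping[str, str],
                fever_threshold_c: float = 37.5) -> ScreeningResult:
    """Combine an RFID read with a temperature reading.

    `fever_threshold_c` is a placeholder; the operating institution
    would set the actual value.
    """
    return ScreeningResult(
        card_id=card_id,
        name=directory.get(card_id),
        temperature_c=temperature_c,
        needs_follow_up=temperature_c >= fever_threshold_c,
    )
```

A flagged result could then be shown to the remote operator, who can use the two-way audio channel to speak with the person by name.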

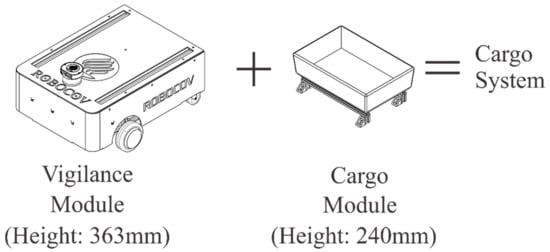

Lastly, the cargo module is designed to function as a safe and reliable transportation service. By taking advantage of the control and maneuverability of the vigilance system, the cargo module can deliver hazardous material, standard packages, or documents from point A to point B without direct interaction between people. This helps reduce direct interaction and the number of exposures in crowded communities such as universities or offices. This module is shown in Figure 9.

Figure 9. Cargo system.

4. The Tasks That Robocov Can Perform

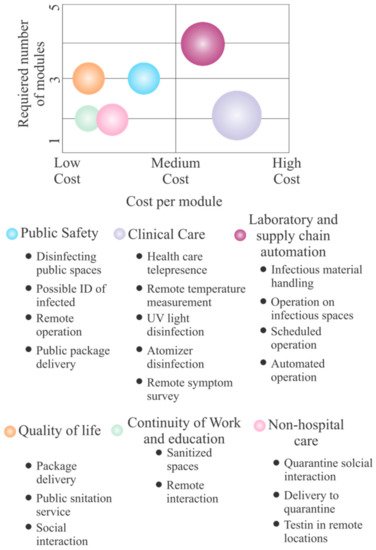

As a modular system, the proposed Robocov platform can accomplish a fair number of tasks. As shown by the bubble chart in Figure 10 [13], each installed module enables specific tasks, so adding modules increases the number of tasks the robot can perform. Because the robot is built on a main platform, only the modules that are needed have to be selected, which keeps the cost of the robot low. For instance, when the UV module is selected, a specific UV lamp can be installed according to the sanitization requirements, such as the area to be sanitized and the time available for the task. Thus, the designer can tailor the elements of each module based on the requirements and the limits of the main platform.

Figure 10. Design and manufacturing stages.

- Public Safety
- Clinical Care
- Continuity of work and education
- Quality of Life
- Laboratory and supply chain automation
- Non-hospital care
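The tailoring of modules against the limits of the main platform, described at the start of this section, can be sketched as a simple configuration check. Everything in this example is hypothetical: the entry gives no payload, power, or cost figures, so the fields and the numbers a caller would pass in are placeholders.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Module:
    name: str
    mass_kg: float
    power_w: float
    cost: float


@dataclass(frozen=True)
class Platform:
    max_payload_kg: float
    max_power_w: float
    base_cost: float


def limit_violations(platform: Platform, modules: Sequence[Module]) -> List[str]:
    """List the platform limits that a chosen set of modules would exceed."""
    problems = []
    if sum(m.mass_kg for m in modules) > platform.max_payload_kg:
        problems.append("payload")
    if sum(m.power_w for m in modules) > platform.max_power_w:
        problems.append("power")
    return problems


def total_cost(platform: Platform, modules: Sequence[Module]) -> float:
    """Cost of the base platform plus only the modules actually installed."""
    return platform.base_cost + sum(m.cost for m in modules)
```

A designer could run `limit_violations` over each candidate combination and keep the cheapest one that returns an empty list, which mirrors the idea of installing only the modules a deployment actually needs.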

5. Conclusions

The proposed robot, Robocov, is a low-cost robot that uses a reconfigurable structure to perform several tasks that reduce the impact of COVID-19. It integrates S4 features and AI, which allow it to meet end-user requirements such as sanitization and package delivery. Because body temperature is a critical variable for detecting infected people, temperature sensors were selected instead of thermal cameras, which would increase the cost of the whole robot. Moreover, face mask and social distance detection are essential tasks that were added to the robot’s detection system. The remote navigation system has a supportive, intelligent control system to avoid obstacles; in future work, the navigation could be made completely autonomous with another implementation of AI. Social features are integrated because the interaction with the operator must be improved according to the operator’s behavior and needs. In addition, the robot is reconfigurable around a central element: the main platform with the traction system and a central microprocessor that allows different devices to connect to the robot. Using the S4 concept and AI, it is possible to design high-performance, low-cost robots that can perform the complex tasks required to reduce the impact of the COVID-19 pandemic.

References

- Yang, G.Z.; Nelson, B.J.; Murphy, R.R.; Choset, H.; Christensen, H.; Collins, S.H.; Dario, P.; Goldberg, K.; Ikuta, K.; Jacobstein, N.; et al. Combating COVID-19—The role of robotics in managing public health and infectious diseases. Sci. Robot. 2020, 5, eabb5589.

- Méndez Garduño, I.; Ponce, P.; Mata, O.; Meier, A.; Peffer, T.; Molina, A.; Aguilar, M. Empower saving energy into smart homes using a gamification structure by social products. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics (ICCE), Taoyuan, Taiwan, 28–30 September 2020; pp. 1–7.

- Ponce, P.; Meier, A.; Miranda, J.; Molina, A.; Peffer, T. The Next Generation of Social Products Based on Sensing, Smart and Sustainable (S3) Features: A Smart Thermostat as Case Study. IFAC-PapersOnLine 2019, 52, 2390–2395.

- Miranda, J.; Ponce, P.; Molina, A.; Wright, P. Sensing, smart and sustainable technologies for Agri-Food 4.0. Comput. Ind. 2019, 108, 21–36.

- Spampinato, C.; Palazzo, S.; Kavasidis, I.; Giordano, D.; Shah, M.; Souly, N. Deep Learning Human Mind for Automated Visual Classification. arXiv 2019, arXiv:1609.00344.

- Wang, F.; Preininger, A. AI in Health: State of the Art, Challenges, and Future Directions. Yearb. Med. Inform. 2019, 28, 16–26.

- Suresh, K.; Palangappa, M.B.; Bhuvan, S. Face Mask Detection by using Optimistic Convolutional Neural Network. In Proceedings of the 2021 6th International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 20–22 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1084–1089.

- Mata, B.U. Face Mask Detection Using Convolutional Neural Network. J. Nat. Remedies 2021, 21, 14–19.

- Tomás, J.; Rego, A.; Viciano-Tudela, S.; Lloret, J. Incorrect Facemask-Wearing Detection Using Convolutional Neural Networks with Transfer Learning. In Healthcare; Multidisciplinary Digital Publishing Institute: Basel, Switzerland, 2021; Volume 9, p. 1050.

- Ramadass, L.; Arunachalam, S.; Sagayasree, Z. Applying deep learning algorithm to maintain social distance in public place through drone technology. Int. J. Pervasive Comput. Commun. 2020, 16, 223–234.

- Vo, N.; Jacobs, N.; Hays, J. Revisiting IM2GPS in the Deep Learning Era. arXiv 2017, arXiv:1705.04838.

- Gao, X.W.; Hui, R.; Tian, Z. Classification of CT brain images based on deep learning networks. Comput. Methods Programs Biomed. 2017, 138, 49–56.

- Murphy, R.R.; Gandudi, V.B.M.; Adams, J. Applications of Robots for COVID-19 Response. arXiv 2020, arXiv:2008.06976.

