| Version | Summary | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|---|
| 1 | | Anil Bhujel | + 2697 word(s) | 2697 | 2021-11-01 09:19:16 |
| 2 | | Rita Xu | Meta information modification | 2697 | 2021-11-12 06:41:45 |

Bhujel, A. Pigs’ Physico-Temporal Activities. Encyclopedia. Available online: https://encyclopedia.pub/entry/15927 (accessed on 08 February 2026).

Animals respond to internal and external stimuli by changing their behavior. People have therefore long used animals’ physical activity as an indicator of their health and welfare status.

YOLOv4

Faster R-CNN

Deep-SORT

pig posture detection

1. Introduction

Pig behavior is a key indicator of health and welfare [1]. Regular monitoring of pigs’ physical activity is essential for identifying short- and long-term stress [2]. Although round-the-clock monitoring in precision farming provides invaluable information about the pigs’ physical and biological status, manually monitoring every pig on a large-scale commercial farm is impractical because it would require a higher staff-to-animal ratio and thus increase production cost. As a result, staff can observe each pig only briefly and might miss subtle changes in activity [3]. Furthermore, the presence of a human in the barn influences the pigs’ behavior, leading to unusual activity that can be misinterpreted during decision-making [4][5]. Sensor-based, non-disturbing automatic monitoring of pigs is therefore increasingly used.

Numerous studies have shown that the housing environment greatly influences the physical and social behavior of pigs. Changes in posture and locomotion are key indicators of disease (clinical and subclinical) and compromised welfare [6]. They also indicate how comfortable a pig is in its rearing environment. For instance, pigs adopt different lying postures to cope with ambient temperature and maintain body temperature through thermoregulation [7]: they prefer lateral lying at high ambient temperatures and sternal lying at low temperatures. Alameer et al. [8] observed significant changes in posture and locomotion activities when feed supply was limited. Subtle changes in pig posture are hard to identify through sporadic human observation, since a pig in a thermally comfortable environment spends most of its time (88% of the day) lying [7]. A computer-vision-based automatic monitoring system is therefore valuable, because continuous monitoring can reveal minute changes in posture.

Moreover, there is growing concern for animal health and welfare in intensive farming [8][9]. Behavior monitoring is even more pertinent for group-housed pigs, as they exhibit significant behavior changes in a compromised environment [10]. Another equally important concern is the emission of greenhouse gases (GHGs) from extensive livestock farming. Pig manure management is the second-highest contributor (27%) after feed production (60%) to the overall GHG emissions from the livestock barn. In addition, enteric fermentation and on-farm energy use produce major GHGs [11]. The high GHG concentration inside the livestock barn stems from poor manure management, improper ventilation, and high stocking density, and it affects the pigs’ behavior [12][13]. Since pigs are averse to excessive amounts of GHGs such as carbon dioxide (CO2), methane (CH4), and nitrous oxide (N2O), and show discomfort and pain in such environments, it is essential to observe how their posture activities respond to increased indoor GHG concentrations.

CO2 and N2O, two major GHGs produced in livestock farming, are commonly used for euthanizing pigs, despite questions over the pigs’ welfare. Various studies have assessed pigs’ responses during stunning. Atkinson et al. [13] observed changes in pigs’ behavior and meat quality under different CO2 concentrations. Slaughter pigs were exposed to two gas mixtures, 20C2O (20% CO2 and 2% O2) and 90C (90% CO2 in air); the pigs showed more discomfort in the 90C mixture, in which 100% of the pigs were stunned. In another experiment, CO2, CO2 plus butorphanol, and N2O were compared for stress levels during the euthanasia of pigs [12]. Although no difference in stress levels could be identified among those treatments, N2O application could be more humane than CO2. Similarly, Lindahl et al. [14] found that nitrogen-filled high-expansion foam could be a suitable alternative to CO2 for stunning pigs, improving animal welfare. Verhoeven et al. [15] studied the time taken for slaughter pigs to lose consciousness at two CO2 concentrations (80% and 95%) and the corresponding behavioral changes. The higher the gas concentration, the sooner the pigs lost consciousness (33 ± 7 s). This shows that pigs are significantly affected by high concentrations of these gases. However, to our knowledge, no study has observed pigs’ behavioral changes under GHG concentrations that rise naturally because of poor livestock management. Therefore, it is essential to monitor pigs’ behavior in a GHG-concentrated environment, as livestock barns emit a considerable amount of GHGs [16].

Against this background, several studies over the last few decades have monitored pigs’ activity at the individual and group levels. Computer-vision-based monitoring systems are increasingly deployed in pig barns because they are automatic, low-cost, real-time, non-contact, and animal-friendly, and because they deliver state-of-the-art performance [17][18][19][20][21][22][23][24][25][26]. An ellipse-fitting-based image-processing technique was used to monitor pigs’ mounting behavior on a commercial farm [18]. Features such as ellipse-like shape, centroid, axis lengths, and the Euclidean distances between head and tail and between head and side were extracted to detect the pigs’ mounting position. Similarly, lying behaviors (sternal and lateral lying) at the individual and group levels have been classified using image-processing techniques [19][20]: pig bodies were extracted from the video frames using background subtraction and the Otsu threshold algorithm, and an ellipse-fitting method was then applied to determine the lying postures. Matthews et al. [21] used a 3D camera and an image-processing algorithm to detect pigs’ behaviors (standing or not standing, feeding, and drinking). The XYZ coordinates obtained from the depth sensor, the camera position, and the vertical angle of the camera were used to filter out unnecessary scene elements such as the floor, walls, and gates. In addition, an outlier threshold calculated from the grand mean and standard deviation was set to remove unusual depth noise. A region-growing technique for similar pixels was used to detect the pigs, and the Hungarian algorithm was used to track them between frames. Although widely used in pig monitoring, image-processing techniques demand various pre- and post-processing steps. They are even more challenging to apply in uncontrolled housing environments with variable illumination, huddled pigs, and deformed body shapes [17][22].
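The Otsu thresholding step mentioned above can be sketched in a few lines. This is a minimal illustration, not the implementation used in the cited studies: the function name `otsu_threshold`, the flat list of 8-bit gray levels, and the assumption that pigs appear brighter than the floor are all hypothetical choices made for the example.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Pixels with value <= t form the background class; the rest form the
    foreground class. `pixels` is a flat iterable of ints in [0, levels).
    """
    pixels = list(pixels)
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg = 0    # number of pixels in the background class so far
    sum_bg = 0  # sum of gray levels in the background class so far
    for t in range(levels):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        if w_bg == 0:
            continue  # no background pixels yet
        w_fg = total - w_bg
        if w_fg == 0:
            break  # every pixel is background; nothing left to split
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        # Between-class variance (up to a constant factor 1 / total**2).
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Toy "frame": dark floor pixels and brighter pig pixels (assumed values).
frame = [20, 30, 40] * 10 + [180, 200, 220] * 5
t = otsu_threshold(frame)
pig_mask = [p > t for p in frame]  # True where a pixel is treated as pig
```

In practice the resulting binary mask would be passed to a contour or ellipse-fitting routine; that step is omitted here because it depends on the image library in use.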

Accordingly, convolutional neural network (CNN)-based deep-learning object detection models have outperformed conventional image-processing techniques. Recently, various studies have used deep-learning models for end-to-end activity detection and scoring rather than only for object detection. A combination of a CNN and a recurrent neural network (RNN) has been used to extract the spatial and temporal features of pigs for tail-biting detection [23], where pairs of bitten and biting pigs were detected in the video frames using a single-shot detector (SSD) with two base networks, Visual Geometry Group-16 (VGG-16) and Residual Network-50 (ResNet-50). Videos of tail-biting behavior were divided into short clips of 1 s to minimize the tracking error. The pairwise interaction of two pigs was then identified from the trajectory of motion in subsequent frames to detect the biting and bitten pigs. The method achieved an accuracy of 89.23% in identifying and locating tail-biting behavior. Likewise, pigs’ postures (standing, dog sitting, sternal lying, and lateral lying) and drinking activity were detected automatically using two deep-learning models, YOLO (you only look once) and Faster R-CNN [8]. The authors found that the YOLO model outperformed the Faster R-CNN (with ResNet-50 as a base network) in both detection accuracy and speed. They induced hunger stress to observe differences in pig behavior and achieved the highest mean average precision (mAP) with the YOLO model (0.989 ± 0.009). In addition, the mean squared errors (MSE) of the distance traveled by a pig and of its average speed were 0.078 and 0.002, respectively.

Similarly, three deep-learning architectures (Faster R-CNN, SSD, and region-based fully convolutional network (R-FCN), with Inception V2, ResNet, and Inception ResNet V2, respectively, as their base networks) have been evaluated for detecting pigs’ standing, belly-lying, and lateral-lying activities [24]. The datasets were collected from three commercial pig barns with pigs of different colors and age groups. All models detected laterally lying pigs best (maximum AP of 0.95, compared with 0.93 for standing and 0.92 for belly lying), owing to the distinctive features of that posture. Yang et al. [25] developed an FCN-based segmentation and feature extraction model coupled with an SVM classifier to detect sow nursing behavior. First, the sow was segmented from the video frames and converted into a binary image. Features such as area and length-to-width ratio were extracted to identify possible nursing conditions. The nursing activity was then confirmed by identifying the udder region, using geometrical information from the sow’s shape, and by the number of piglets present, which was estimated from the area they covered and their movement. Although this technique required heavy manual effort during spatial and temporal feature extraction and analysis, it produced state-of-the-art performance on the testing videos (accuracy, sensitivity, and specificity of 97.6%, 90.9%, and 99.2%, respectively).

Even though deep-learning-based object detection models have surpassed conventional image-processing techniques, building a fully generalized model remains challenging because labeled datasets covering the wide variety of piggery environments are scarce. Nevertheless, Riekert et al. [26] attempted to develop a generalized deep-learning model using a Faster region-based convolutional neural network (Faster R-CNN) with neural architecture search (NAS) as a base network to detect lying and not-lying pigs. They used a large training dataset (7277 manual annotations) captured by multiple 2D cameras (20) in various pens (18) and prepared from 31 different one-hour videos. During testing, the trained model achieved an average precision (AP) of 87.4% and a mean AP (mAP) of 80.2% on images taken from separate pens with a similar experimental environment. However, performance dropped significantly (AP of 67.7% and mAP between 44.8% and 58.8%) for pens with different and more complex environmental conditions, which is expected and underlines that the training dataset is crucial for building a generalized deep-learning model.

2. Greenhouse Gas Concentrations

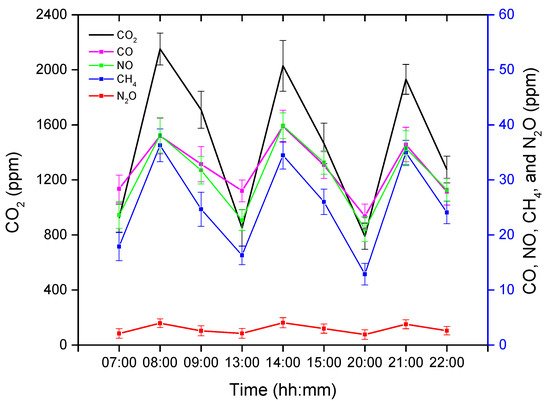

The GHG concentrations obtained from the analyzed gas samples were averaged and are presented in Figure 1. The gas chromatograph (GC) can detect five GHGs, namely carbon dioxide (CO2), methane (CH4), carbon monoxide (CO), nitric oxide (NO), and nitrous oxide (N2O). CO2 was the dominant GHG, followed by CO and NO, whereas N2O was found at the lowest concentration in this experimental pig barn. The GHG concentrations were measured three times for each treatment instance (before the treatment, after it, and one hour after its completion), as shown in Figure 1.

Figure 1. Average greenhouse gas (GHG) concentrations before, during, and after an hour of treatment. The x-axis represents the time of day (hh:mm), the left y-axis represents the average CO2 concentration, and the right y-axis represents the average CO, NO, CH4, and N2O concentrations.

3. Group-Wise Pig Posture and Walking Behavior Score

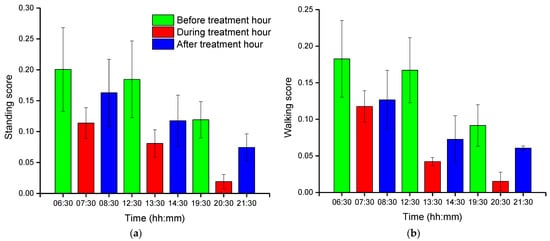

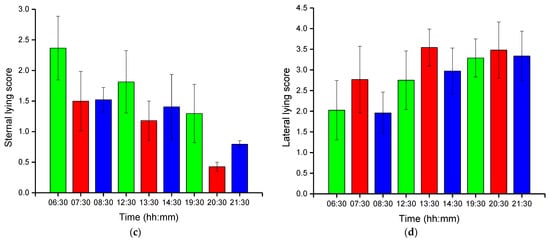

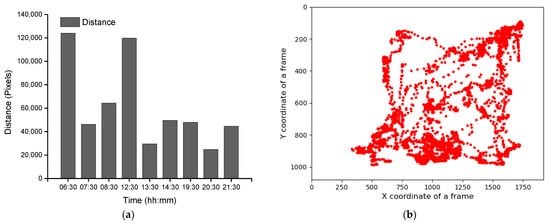

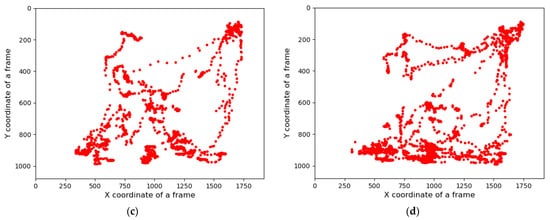

Group-wise posture and walking activity scores were calculated by dividing the number of pigs detected in a particular posture by the number of frames in the hour before, during, and after treatment. Most of the time, the pigs stayed in a lying position (sternal or lateral). However, they were more active in the morning than during the day or at night, and at night they rested primarily in the lateral lying position. At the peak GHG concentration, the standing and walking activities of the pigs decreased significantly (almost by half), as shown in Figure 2a,b. The standing score increased again as the GHGs decreased (one hour after treatment). The walking activity score followed a similar pattern, except in the morning, when it did not increase noticeably. Likewise, sternal lying decreased as the GHGs increased (Figure 2c). Conversely, lateral lying increased significantly in the morning and daytime (by nearly 40% in the morning and 30% in the day) but only marginally at night, as shown in Figure 2d. One hour after the treatment (08:00–09:00, 14:00–15:00, and 21:00–22:00), the GHG concentrations remained higher than before the treatment, and corresponding effects on all the pigs’ activities were observed, as presented in Figure 2. The total distance traveled (in pixels) by all walking pigs was highest in the morning before the treatment hour and lowest during the nighttime treatment hour. Figure 3 shows the total distance traveled and the locomotion pattern of walking pigs on the morning of day 1 of the experiment.

Figure 2. Group-wise average posture and walking frame scores of pigs: (a) standing score, (b) walking score, (c) sternal-lying score, and (d) lateral-lying score. The scores show the average number of pigs in a frame with a particular posture. The bars represent the average activity scores obtained in three days at an hour before, during, and after the treatment period in the morning, day, and nighttime.
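The score definition above (pigs counted in a posture, divided by the number of frames) can be expressed as a short function. This is a sketch under stated assumptions: the per-frame label lists, the label strings, and the name `group_posture_scores` are hypothetical and stand in for whatever the detector actually outputs.

```python
from collections import Counter

# Hypothetical label names; the real detector classes may differ.
POSTURES = ("standing", "walking", "sternal_lying", "lateral_lying")


def group_posture_scores(frames, postures=POSTURES):
    """Average number of pigs per frame observed in each posture.

    `frames` is a list with one entry per video frame; each entry is the
    list of posture labels detected in that frame (one label per pig).
    """
    if not frames:
        raise ValueError("at least one frame is required")
    counts = Counter(label for frame in frames for label in frame)
    return {posture: counts[posture] / len(frames) for posture in postures}


# Two toy frames with three detected pigs each.
frames = [
    ["standing", "lateral_lying", "lateral_lying"],
    ["walking", "lateral_lying", "sternal_lying"],
]
scores = group_posture_scores(frames)
```

Computing the same dictionary separately for the hour before, during, and after treatment gives the three bars per period shown in Figure 2.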

Figure 3. Total distance traveled by the group of pigs and locomotion activity on the morning of day 1: (a) total distance traveled by all pigs during the study period, (b) locomotion of the group before treatment on the morning of day 1, (c) during the treatment, and (d) after the treatment.
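The distance traveled in pixels can be obtained by summing the Euclidean distances between consecutive tracked positions. The following is a minimal sketch, assuming each pig's track is available as a list of bounding-box centroids in pixel coordinates; the function names and the `tracks` dictionary are illustrative and not taken from the original work.

```python
import math


def distance_traveled(centroids):
    """Path length in pixels along a sequence of (x, y) centroids."""
    return sum(math.dist(a, b) for a, b in zip(centroids, centroids[1:]))


def total_group_distance(tracks):
    """Sum of path lengths over all tracked pigs.

    `tracks` maps a virtual pig ID to its list of (x, y) centroids.
    """
    return sum(distance_traveled(path) for path in tracks.values())


# Toy tracks: pig 1 moves 5 px and then 6 px; pig 2 stays still.
tracks = {
    1: [(0, 0), (3, 4), (3, 10)],
    2: [(50, 50), (50, 50)],
}
group_distance = total_group_distance(tracks)
```

Small frame-to-frame jitter in the detected boxes adds to this sum, so a real pipeline would likely count movement only for pigs classified as walking, as the text describes.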

4. Individual Pig Posture and Walking Behavior

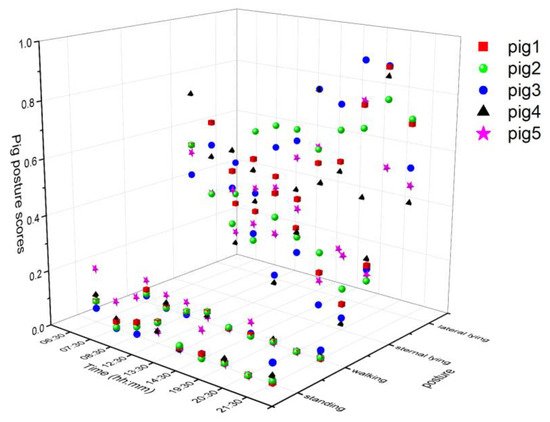

The tracking algorithm assigned a virtual ID to each pig and kept tracking it as long as the number of missed frames stayed below the specified maximum age of the ID (50). Individual posture and walking activities were determined in the same way as the group-wise measurements, except that each pig was treated individually. The posture and walking activity scores of each pig are given in Figure 4. Pig 5 was more active than the other pigs, whereas Pig 3 was inactive most of the time.

Figure 4. Posture and walking activity score profiles of individual pigs during the study period.
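The ID-retention rule described above can be illustrated with a small bookkeeping class. This is a simplified sketch, not the tracker used in the study: the class `TrackRegistry` is hypothetical, it assumes that "missed frames" means consecutive frames without a matched detection, and it drops an ID once that count reaches `max_age`.

```python
class TrackRegistry:
    """Keeps virtual IDs alive until they have been missed too often."""

    def __init__(self, max_age=50):
        self.max_age = max_age
        self.missed = {}  # track ID -> consecutive frames without a match

    def update(self, matched_ids):
        """Advance one frame; `matched_ids` are the IDs matched in it.

        Returns the sorted list of IDs that are still being tracked.
        """
        matched = set(matched_ids)
        for track_id in matched:
            self.missed[track_id] = 0  # seen again (or new): reset counter
        for track_id in list(self.missed):
            if track_id not in matched:
                self.missed[track_id] += 1
                # Assumption: the ID is retained only while the number of
                # missed frames is below max_age.
                if self.missed[track_id] >= self.max_age:
                    del self.missed[track_id]
        return sorted(self.missed)


# Small max_age so the behavior is visible in a few frames.
registry = TrackRegistry(max_age=3)
history = [registry.update(ids) for ids in ([1, 2], [1], [1], [1])]
```

With `max_age=3`, pig 2 survives two missed frames and is dropped on the third, which mirrors how a larger value such as 50 tolerates short occlusions.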

5. Pig-Activity Detection and Tracking Model Performance

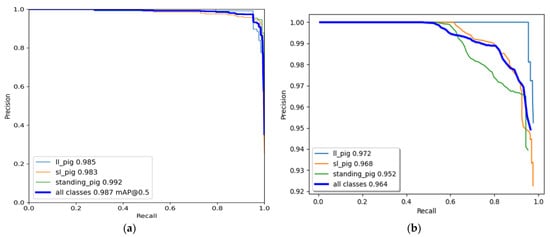

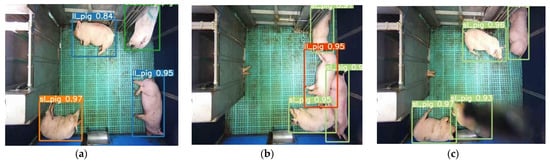

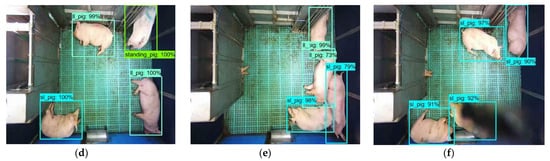

The YOLO model was trained for 500 epochs, whereas the Faster R-CNN model was trained for 50,000 iterations, and the trained weights were saved. Model performance was then assessed comprehensively using an open-source object detection metrics toolkit [27]. The overall and class-wise average precision and recall of the two models are shown in Figure 5. The YOLO model gave balanced accuracy metrics across classes (Figure 5a), whereas the Faster R-CNN model produced its highest accuracy for the lateral lying posture and its lowest for the standing posture (Figure 5b). The APs of the Faster R-CNN model for the lateral lying, sternal lying, and standing postures were 97.21%, 96.83%, and 95.23%, respectively, with an overall mAP of 96.42% at an intersection over union (IoU) threshold of 0.5. In comparison, the YOLO model achieved APs of 98.52%, 98.33%, and 99.18% for the lateral lying, sternal lying, and standing postures, respectively, with an overall mAP of 98.67% at 0.5 IoU. Figure 6 shows example frames of pig posture detection in different scenarios. The detection confidence of the Faster R-CNN model was higher for sparsely located pigs but declined as pig congestion and occlusion increased, whereas the YOLO model produced balanced detection confidence in all scenarios, giving better precision and recall values. The Faster R-CNN model produced some false positive detections for the standing and sternal lying postures, reducing its precision score, as shown in Figure 5b. The YOLO model was also faster, taking 0.0314 s per image compared with 0.15 s per image for the Faster R-CNN model. Detection speed was calculated by averaging the time taken to process the frames of a 30 min video.

Figure 5. Class-wise and overall precision-recall evaluation metrics of the model: (a) YOLOv4 model and (b) Faster R-CNN model.

Figure 6. Sample of pig posture detection under various conditions by two models: (a–c) by YOLOv4 model for the frame having sparsely located pigs, the frame having densely located pigs, and the frame having occlusion, respectively, and (d–f) by Faster R-CNN model for the same frames applied in the YOLOv4 model.
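To make the 0.5 IoU criterion concrete, the sketch below computes IoU for two boxes and derives precision and recall for a single class in a single frame. It is an illustration only and does not reproduce the toolkit cited in [27]: the box format `(x1, y1, x2, y2)`, the greedy matching in descending confidence, and the function names are assumptions made for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections, ground_truth, iou_threshold=0.5):
    """Precision and recall for one class in one frame.

    `detections` is a list of (confidence, box); each ground-truth box can
    be matched by at most one detection, highest confidence first.
    """
    unmatched = list(ground_truth)
    true_pos = 0
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best = max(unmatched, key=lambda g: iou(box, g), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            unmatched.remove(best)
            true_pos += 1
    false_pos = len(detections) - true_pos
    false_neg = len(unmatched)
    precision = true_pos / (true_pos + false_pos) if detections else 0.0
    recall = true_pos / (true_pos + false_neg) if ground_truth else 0.0
    return precision, recall


# Two annotated pigs, three detections (the last one is a false positive).
truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
precision, recall = precision_recall(dets, truth)
```

Average precision is then obtained by sweeping the confidence threshold and integrating the resulting precision-recall curve per class, and mAP is the mean of the class-wise APs.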

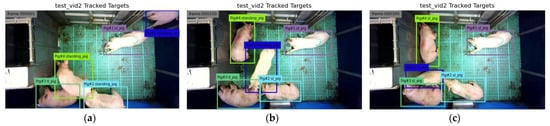

The proposed model follows a tracking-by-detection strategy. The YOLO model detected the location of each pig in the frame together with its posture. The tracking algorithm then assigned a virtual ID to each detected pig and tracked it through the frames of a video clip. The accuracy of the tracking algorithm therefore depends on the accuracy of the detection algorithm. In this study, YOLOv4 achieved good detection accuracy (mAP of 98.67%), resulting in good tracking accuracy, with a multiple-object tracking accuracy (MOTA) of 93.13% and a multiple-object tracking precision (MOTP) of 81.23%. Some example frames after applying the tracking algorithm are shown in Figure 7.

Figure 7. Sample of tracked frames by the tracking algorithm: (a) the first frame, (b) the 150th frame as Pig 5 is moving, and (c) the 300th frame where Pig 5 has changed its posture from standing to sternal lying.
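For readers unfamiliar with the tracking metrics, the sketch below shows how MOTA and MOTP are commonly computed. It assumes the usual CLEAR MOT definition of MOTA and treats MOTP as the mean overlap of matched pairs, which is consistent with the percentage reported above but is not confirmed by the source; the error counts in the example are invented for illustration.

```python
def mota(false_negatives, false_positives, id_switches, num_ground_truth):
    """MOTA = 1 - (FN + FP + ID switches) / number of ground-truth objects.

    All counts are summed over every frame of the sequence.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_ground_truth


def motp(overlaps):
    """Mean overlap (for example, IoU) over all matched track-object pairs."""
    if not overlaps:
        raise ValueError("at least one matched pair is required")
    return sum(overlaps) / len(overlaps)


# Hypothetical counts: 1000 annotated pig instances with 70 errors in total.
example_mota = mota(false_negatives=40, false_positives=25,
                    id_switches=5, num_ground_truth=1000)
example_motp = motp([0.80, 0.90])
```

Because missed and false detections enter MOTA directly, a detector with high mAP tends to yield a high MOTA, which matches the dependence on detection accuracy noted in the text.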

References

1. Czycholl, I.; Büttner, K.; grosse Beilage, E.; Krieter, J. Review of the assessment of animal welfare with special emphasis on the Welfare Quality® animal welfare assessment protocol for growing pigs. Arch. Anim. Breed. 2015, 58, 237–249.

2. Matthews, S.G.; Miller, A.L.; Clapp, J.; Plötz, T.; Kyriazakis, I. Early detection of health and welfare compromises through automated detection of behavioural changes in pigs. Vet. J. 2016, 217, 43–51.

3. Cowton, J.; Kyriazakis, I.; Bacardit, J. Automated Individual Pig Localisation, Tracking and Behaviour Metric Extraction Using Deep Learning. IEEE Access 2019, 7, 108049–108060.

4. Villain, A.S.; Lanthony, M.; Guérin, C.; Tallet, C. Manipulable Object and Human Contact: Preference and Modulation of Emotional States in Weaned Pigs. Front. Vet. Sci. 2020, 7, 577433.

5. Leruste, H.; Bokkers, E.A.; Sergent, O.; Wolthuis-Fillerup, M.; van Reenen, C.G.; Lensink, B.J. Effects of the observation method (direct v. from video) and of the presence of an observer on behavioural results in veal calves. Animal 2013, 7, 1858–1864.

6. Rostagno, M.H.; Eicher, S.D.; Lay, D.C., Jr. Immunological, physiological, and behavioral effects of Salmonella enterica carriage and shedding in experimentally infected finishing pigs. Foodborne Pathog. Dis. 2011, 8, 623–630.

7. Huynh, T.T.T.; Aarnink, A.J.A.; Gerrits, W.J.J.; Heetkamp, M.J.H.; Canh, T.T.; Spoolder, H.A.M.; Kemp, B.; Verstegen, M.W.A. Thermal behaviour of growing pigs in response to high temperature and humidity. Appl. Anim. Behav. Sci. 2005, 91, 1–16.

8. Alameer, A.; Kyriazakis, I.; Bacardit, J. Automated recognition of postures and drinking behaviour for the detection of compromised health in pigs. Sci. Rep. 2020, 10, 13665.

9. Benjamin, M.; Yik, S. Precision Livestock Farming in Swine Welfare: A Review for Swine Practitioners. Animals 2019, 9, 133.

10. Diana, A.; Carpentier, L.; Piette, D.; Boyle, L.A.; Berckmans, D.; Norton, T. An ethogram of biter and bitten pigs during an ear biting event: First step in the development of a Precision Livestock Farming tool. Appl. Anim. Behav. Sci. 2019, 215, 26–36.

11. MacLeod, M.; Gerber, P.; Mottet, A.; Tempio, G.; Falcucci, A.; Opio, C.; Vellinga, T.; Henderson, B.; Steinfeld, H. Greenhouse Gas Emissions from Pig and Chicken Supply Chains–A Global Life Cycle Assessment; Food and Agriculture Organization of the United Nations (FAO): Rome, Italy, 2013; Available online: http://www.fao.org/3/i3460e/i3460e.pdf (accessed on 4 March 2021).

12. Çavuşoğlu, E.; Rault, J.-L.; Gates, R.; Lay, D.C., Jr. Behavioral Response of Weaned Pigs during Gas Euthanasia with CO2, CO2 with Butorphanol, or Nitrous Oxide. Animals 2020, 10, 787.

13. Atkinson, S.; Algers, B.; Pallisera, J.; Velarde, A.; Llonch, P. Animal Welfare and Meat Quality Assessment in Gas Stunning during Commercial Slaughter of Pigs Using Hypercapnic-Hypoxia (20% CO2 2% O2) Compared to Acute Hypercapnia (90% CO2 in Air). Animals 2020, 10, 2440.

14. Lindahl, C.; Sindhøj, E.; Brattlund Hellgren, R.; Berg, C.; Wallenbeck, A. Responses of Pigs to Stunning with Nitrogen Filled High-Expansion Foam. Animals 2020, 10, 2210.

15. Verhoeven, M.; Gerritzen, M.; Velarde, A.; Hellebrekers, L.; Kemp, B. Time to Loss of Consciousness and Its Relation to Behavior in Slaughter Pigs during Stunning with 80 or 95% Carbon Dioxide. Front. Vet. Sci. 2016, 3, 38.

16. Sejian, V.; Bhatta, R.; Malik, K.; Madiajagan, B.; Al-Hosni, Y.A.S.; Sullivan, M.; Gaughan, J.B. Livestock as Sources of Greenhouse Gases and Its Significance to Climate Change. In Greenhouse Gases; Llamas, B., Pous, J., Eds.; IntechOpen: London, UK, 2016; pp. 243–259.

17. Nasirahmadi, A.; Edwards, S.A.; Sturm, B. Implementation of machine vision for detecting behaviour of cattle and pigs. Livest. Sci. 2017, 202, 25–38.

18. Nasirahmadi, A.; Hensel, O.; Edwards, S.A.; Sturm, B. Automatic detection of mounting behaviours among pigs using image analysis. Comput. Electron. Agric. 2016, 124, 295–302.

19. Nasirahmadi, A.; Sturm, B.; Olsson, A.C.; Jeppsson, K.H.; Müller, S.; Edwards, S.; Hensel, O. Automatic scoring of lateral and sternal lying posture in grouped pigs using image processing and Support Vector Machine. Comput. Electron. Agric. 2019, 156, 475–481.

20. Nasirahmadi, A.; Richter, U.; Hensel, O.; Edwards, S.; Sturm, B. Using machine vision for investigation of changes in pig group lying patterns. Comput. Electron. Agric. 2015, 119, 184–190.

21. Matthews, S.G.; Miller, A.L.; Plötz, T.; Kyriazakis, I. Automated tracking to measure behavioural changes in pigs for health and welfare monitoring. Sci. Rep. 2017, 7, 17582.

22. Zhang, L.; Gray, H.; Ye, X.; Collins, L.; Allinson, N. Automatic Individual Pig Detection and Tracking in Pig Farms. Sensors 2019, 19, 1188.

23. Liu, D.; Oczak, M.; Maschat, K.; Baumgartner, J.; Pletzer, B.; He, D.; Norton, T. A computer vision-based method for spatial-temporal action recognition of tail-biting behavior in group-housed pigs. Biosyst. Eng. 2020, 195, 27–41.

24. Nasirahmadi, A.; Sturm, B.; Edwards, S.; Jeppsson, K.-H.; Olsson, A.-C.; Müller, S.; Hensel, O. Deep Learning and Machine Vision Approaches for Posture Detection of Individual Pigs. Sensors 2019, 19, 3738.

25. Yang, A.; Huang, H.; Zhu, X.; Yang, X.; Chen, P.; Li, S.; Xue, Y. Automatic recognition of sow nursing behavior using deep learning-based segmentation and spatial and temporal features. Biosyst. Eng. 2018, 175, 133–145.

26. Riekert, M.; Klein, A.; Adrion, F.; Hoffmann, C.; Gallmann, E. Automatically detecting pig position and posture by 2D camera imaging and deep learning. Comput. Electron. Agric. 2020, 174, 105391.

27. Padilla, R.; Passos, W.L.; Dias, T.L.B.; Netto, S.L.; da Silva, E.A.B. A Comparative Analysis of Object Detection Metrics with a Companion Open-Source Toolkit. Electronics 2021, 10, 279.

Subjects: Agricultural Engineering

Update Date: 12 Nov 2021
