2.1. Methodology and Frameworks Appropriate for IoT Data

Batini et al. defined a DQ methodology [1] as “a set of guidelines and techniques that, starting from input information describing a given application context, defines a rational process to assess and improve the quality of data”. A framework is considered a theory-building and practice-oriented tool [60] that provides a structure for using QA theory and methods [61][62]. The terms DQ methodology and DQ framework are often used interchangeably in related research. In this section, we review the general DQ management methodologies and frameworks and compare them in terms of research objectives, management phases, applicable data types, the number of DQ dimensions, and whether they can be extended. Most of the research on DQ methodology has focused on structured data, while only a few studies also address semi-structured data.

Early on, Wang [63] proposed “Total Data Quality Management (TDQM)”, one of the best-known complete and general methodologies. TDQM treats data as information products and presents a comprehensive set of associated dimensions and enhancements that can be applied to different contexts. However, the structure of the processable data is not specified. The goal of TDQM is to continuously enhance the quality of information products through a cycle of defining, measuring, analyzing, and enhancing the data and the process of managing them, although it does not specify concrete steps for the assessment process.

English [4] described a methodology of “Total Information Quality Management (TIQM)” applied to data warehouse projects. Owing to its detailed design and universality, it later became a generic information quality management methodology that can be customized for many contexts and data types, including structured, unstructured, and semi-structured data; the latter two are not mentioned in the study but can be inferred. The TIQM cycle includes evaluation, improvement, and improvement management and monitoring. Compared with other methodologies, TIQM is original and more comprehensive in terms of cost–benefit analysis and the management perspective [3]. However, during the evaluation phase, TIQM manages a fixed set of 13 DQ dimensions, and its solution strictly follows these dimensions. TIQM is one of the few methodologies that considers the cost dimension and provides detailed classifications for costs.

Lee et al. [5] presented “A Methodology for Information Quality Assessment (AIMQ)”, the first quality management method that focuses on benchmarking and provides objective, domain-independent generic quality assessment techniques. The methodology designs a PSP/IQ model that provides a standard list of quality dimensions and attributes, which can be used to categorize quality dimensions according to importance from a user and an administrator perspective. The AIMQ cycle includes the measurement, analysis, and interpretation of an assessment, but lacks guidance on activities to improve DQ. AIMQ uses questionnaires applicable to structured data for qualitative assessments, but can be applied to other data types, including unstructured and semi-structured data. Similar to TIQM, during the measurement phase AIMQ manages a fixed group of 15 DQ dimensions (metrics), and its solution strictly follows these dimensions.

Monica et al. [64] presented “DaQuinCIS”, a cooperative framework for DQ that applies TDQM and is one of the rare methodologies that focuses on semi-structured data. This approach proposes a model called data and data quality (D2Q). The model associates DQ values with XML documents and can be used to verify the accuracy, currency, completeness, and consistency of the data. Another contribution of DaQuinCIS is the degree of flexibility that the semi-structured model gives each organization in exporting the quality of its data.
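The idea of attaching DQ values to XML content can be illustrated with a minimal Python sketch. It is not the actual D2Q schema: the element names, the `completeness` and `currency_s` attributes, and the `annotate_quality` helper are all hypothetical and chosen only for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical sensor record; element and attribute names are illustrative
# assumptions and do not reproduce the D2Q model.
RECORD = """
<sensor id="s-17">
  <temperature>21.4</temperature>
  <humidity></humidity>
</sensor>
"""


def annotate_quality(root, currency_by_tag):
    """Attach per-element quality values as attributes.

    Returns the overall completeness, i.e. the share of child elements
    that carry a non-empty value.
    """
    children = list(root)
    filled = 0
    for child in children:
        has_value = bool((child.text or "").strip())
        filled += has_value
        child.set("completeness", "1" if has_value else "0")
        # Currency (seconds since last update) is supplied by the caller.
        if child.tag in currency_by_tag:
            child.set("currency_s", str(currency_by_tag[child.tag]))
    return filled / len(children) if children else 1.0


root = ET.fromstring(RECORD.strip())
overall = annotate_quality(root, {"temperature": 30})
print(overall)
print(ET.tostring(root, encoding="unicode"))
```

Keeping the quality values next to the data they describe means a consumer can read both from the same document, which is the kind of flexibility a semi-structured model allows.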

Batini et al. [10] proposed a “Comprehensive Data Quality methodology (CDQ)” that extends the steps and techniques originally developed for all types of organizational data. CDQ integrates the phases, techniques and tools from other methodologies and overcomes some of the limitations in those methodologies. The CDQ cycle includes state reconstruction, assessment, and improvement. All data types, both structured and semi-structured, should be investigated in the state reconstruction step. CDQ manages four DQ dimensions and considers the cost of alternative improvement activities to compare and evaluate the minimum-cost improvement processes.

Cappiello [65] described a “Hybrid Information Quality Management (HIQM) methodology”, which supports error detection and correction management at runtime and improves the traditional DQ management cycle by adding the user perspective. For example, HIQM determines DQ requirements by considering the needs of not only companies and suppliers, but also end consumers. The HIQM cycle includes definition, quality measurement, analysis and monitoring, and improvement. However, in the measurement stage, only the need for measurement algorithms for each DQ dimension is expressed, without defining specific metrics. In particular, HIQM designs a warning interface that offers an efficient way to analyze and manage the problems and warnings that appear, and that decides whether to recommend a recovery operation by analyzing the details of the warning message.

Caballero [66] proposed “A Methodology Based on ISO/IEC 15939 to Draw up Data Quality Measurement Process (MMPRO)”, which is based on the ISO/IEC 15939 standard [67] for software quality assessment and can also be used for DQ assessment. The MMPRO cycle includes the DQ Measurement Commitment, Plan the DQ Measurement Process, Perform the DQ Measurement Process, and Evaluate the DQ Measurement Process. Although the approach does not categorize DQ measures or provide a set of behaviors for improving data quality, its structure helps to incorporate DQ issues into the software.

Maria et al. [68] described “A Data Quality Practical Approach (DQPA)”, a DQ framework for a heterogeneous multi-database environment, and applied it to a use case. The DQPA cycle consists of seven phases: identification of DQ issues, identification of relevant data that has a direct impact on the business, evaluation, determination of the business impact through DQ comparison, cleansing of data, monitoring of the DQ, and regular repetition of the assessment stage. In DQPA, the authors propose a Measurement Model based on [69][70][71], which extends the DQ assessment metrics into metrics for evaluating primary data sources and metrics for evaluating derived data. The model can be used at different levels of granularity for databases, relationships, tuples, and attributes.
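To make the notion of granularity concrete, the following Python sketch computes one simple metric, completeness, at the attribute, tuple, and relation level. The ratio used here (non-missing values over total values) is an assumed, generic definition and not necessarily the one adopted in DQPA; the toy data and function names are hypothetical.

```python
# Toy relation: each dict is one tuple; None marks a missing value.
ROWS = [
    {"device": "a1", "temp": 20.5, "ts": 1},
    {"device": "a2", "temp": None, "ts": 2},
    {"device": None, "temp": None, "ts": 3},
    {"device": "a4", "temp": 19.0, "ts": 4},
]


def tuple_completeness(row):
    """Share of non-missing values within a single tuple."""
    return sum(v is not None for v in row.values()) / len(row)


def attribute_completeness(rows, name):
    """Share of tuples in which the given attribute is present."""
    return sum(r[name] is not None for r in rows) / len(rows)


def relation_completeness(rows):
    """Share of non-missing cells over the whole relation."""
    cells = [v for r in rows for v in r.values()]
    return sum(v is not None for v in cells) / len(cells)


print(attribute_completeness(ROWS, "temp"))  # attribute level
print(tuple_completeness(ROWS[2]))           # tuple level
print(relation_completeness(ROWS))           # relation level
```

The same metric yields different values at each level, which is why the level of granularity should be stated whenever a DQ score is reported.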

Batini et al. [11] presented a “Heterogeneous Data Quality Methodology (HDQM)”, which can be used to evaluate and improve DQ and has been validated through use cases. The HDQM cycle includes state reconstruction, assessment, and improvement. HDQM recommends considering all types of data in the state reconstruction phase by using a model that describes the information according to its level of abstraction. In the assessment phase, HDQM defines a method that can be easily generalized to any dimension. Furthermore, the DQ dimensions of the HDQM measurement and improvement phases can be applied to different data types. A major contribution of HDQM is that it builds on the cost–benefit analysis techniques of TIQM, COLDQ and CDQ to present a more qualitative approach that guides the selection of appropriate improvement techniques.

Laura et al. [2] described a “Data Quality Assessment Framework (DQAF)”, which provides a comprehensive set of objective DQ metrics for organizations to choose from when assessing DQ, comprising 48 universal measurement types based on completeness, timeliness, validity, consistency, and integrity. In DQAF, the authors introduce the concept of a “measurement type”, a generic form suitable for a particular metric, and describe six aspects of each measurement type: definition, business concerns, measurement methodology, programming, support processes and skills, and a measurement logical model. The DQAF cycle includes define, measure, analyze, improve, and control. Specifically, the authors focus on comparing the results of the DQ assessment with assumptions or expectations, and on continuously monitoring the data to ensure that it continues to meet the requirements.
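The step of comparing a measurement with an expectation can be sketched as follows. This is a minimal Python illustration under stated assumptions, not one of the 48 DQAF measurement types: the allowed range, the tolerance, the use of the historical mean as the expectation, and both function names are hypothetical.

```python
from statistics import mean


def validity_ratio(values, low, high):
    """Share of readings that fall inside the allowed [low, high] range."""
    if not values:
        return 0.0
    return sum(low <= v <= high for v in values) / len(values)


def check_against_expectation(measured, history, tolerance=0.05):
    """Compare a new measurement with the mean of past measurements.

    The expectation is assumed to be the historical mean; a result is
    flagged when it deviates from that mean by more than `tolerance`.
    """
    expected = mean(history)
    return {
        "expected": expected,
        "measured": measured,
        "within_expectation": abs(measured - expected) <= tolerance,
    }


# Hypothetical temperature readings with two out-of-range values.
readings = [21.0, 22.5, -40.0, 23.1, 150.0, 22.0, 21.7, 22.2]
ratio = validity_ratio(readings, low=-20.0, high=60.0)
result = check_against_expectation(ratio, history=[0.98, 0.97, 0.99])
print(ratio, result["within_expectation"])
```

Running such a check on every new batch and appending each result to the history gives a simple form of the continuous monitoring described above.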

Carretero et al. [72] developed an “Alarcos Data Improvement Model (MAMD Framework)” that can be applied in many fields and provides a Process Reference Model together with evaluation and improvement methods. It was verified with an applied hospital case. The MAMD Framework cycle consists of a two-stage Process Reference Model based on the ISO 8000-61 standard and an Assessment and Improvement Model based on ISO/IEC 33000. The MAMD Process Reference Model consists of 21 processes that can be used in the areas of data management, data governance, and DQ management. The assessment model is a methodology that consists of five steps and a maturity model.

Reza et al. [73] introduced an “observe–orient–decide–act (OODA)” framework to identify and improve DQ through the cyclic application of the OODA method, which is adaptive and can be used across industries, organizational types, and organizational scales. The OODA framework cycle includes observe, orient, decide and act. Only the need for a metric algorithm for each DQ dimension is indicated, and the OODA DQ approach refers to the use of existing DQ metrics and measurement tools. Although the OODA DQ methodology does not involve any formal process for analysis and improvement, DQ issues are identified through tools such as routine reports and dashboards during the observe phase. In addition, notices alerting to possible DQ issues and feedback from external agencies are also recommended [7].

These 12 general DQ management methodologies/frameworks can be compared from many more perspectives, such as flexibility in the choice of dimensions [7], the use of subjective or objective measurements in the assessment phase, specific steps in the assessment/improvement phase, cost considerations, and whether they are data-driven or process-driven. There is not much research on IoT DQ assessment yet, and a beginner may have difficulty deciding where to begin. A good starting point is the question: what are the general requirements of the data users? If the user needs to manage IoT data in a holistic way that supports the definition, assessment and improvement process without resorting to a specific tool or software, one of the generic DQ management methodologies/frameworks mentioned in this section can be chosen.

2.2. ISO Standards Related to Data Quality

Another important area of DQ work in industry and academia is the development and standardization of DQ standards. By developing uniform DQ standards, DQ can be managed more efficiently across countries, organizations, and departments, thereby facilitating data storage, delivery, and sharing, and reducing errors in judgment and decision making due to data incompatibility, data redundancy, and data deficiencies. Since IoT systems are distributed in nature, the use of international standards can improve the performance of business processes by aligning various organizations on the same foundation, addressing interoperability issues, and ultimately allowing them to work together seamlessly.

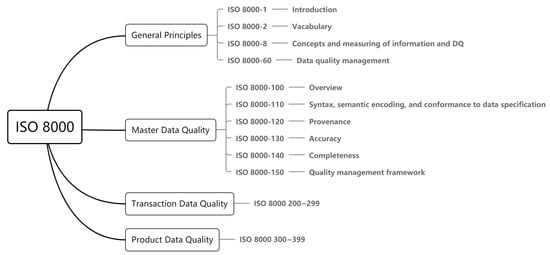

The ISO has made a great deal of effort in this regard and has developed several standards to regulate international data quality. The ISO 8000 DQ standard has been developed [74] to address the increasingly important issue of DQ and data management. ISO 8000 covers the quality characteristics of data throughout the product life cycle, from conceptual design to disposal. ISO 8000 describes a framework for improving the DQ of particular data, which can be used independently or in cooperation with a quality management system.

The ISO 8000-6x family of standards provides a value-driven approach to DQ management. Several of the IoT data assessment frameworks reviewed in the next section are based on this standard. This series of standards provides a set of guidelines for the overall management of DQ that can be customized for different domains. It describes a DQ management structure derived from ISO 9001’s Plan-Do-Check-Act (PDCA), a life cycle that includes DQ planning, DQ control, DQ assurance, and DQ improvement. However, it is not primarily intended as a methodology for DQ management, but merely to serve as a process reference model. Figure 3 depicts the components of the ISO 8000 DQ standard.

Figure 3. Components of the ISO 8000.

Before the ISO 8000 DQ standards were published, a more mature management system of product quality standards already existed: ISO 9000 [75]. Initially published by the ISO in 1987 and refined several times, the ISO 9000 family of standards was designed to help organizations ensure that they meet the needs of their customers and other stakeholders while meeting the legal and regulatory requirements associated with their products. It is a general requirement and guide for quality management that helps organizations to effectively implement and operate a quality management system. While ISO 9000 is concerned with product quality, ISO 8000 is focused on DQ. ISO 8000 is designed to improve data-based quality management systems and addresses the gap between the ISO 9000 standards and data products [76].

In addition, international standards related to DQ include ISO/IEC 25012, Software Product Quality Requirements and Evaluation: Data Quality Model [77], and ISO/IEC 25024, Quality Requirements and Evaluation of Systems and Software: Measurement of Data Quality [78]. The ISO/IEC 25012 standard, part of the Software Product Quality Requirements and Evaluation (SQuaRE) series, proposes a DQ model that can be used to manage any type of data. It emphasizes the view of DQ as a part of the information system and defines quality features for the subject data. Table 3 compares five ISO standards that are often used in DQ management studies.

Table 3. ISO standards related to data quality.

| Standards | Components | Scope of Application |
| --- | --- | --- |
| ISO/IEC 33000 | Terminology related to process assessment; a framework for process quality assessment. | Information Technology Domain Systems |
| ISO/IEC 25000 | A general DQ model; 15 data quality characteristics. | Structured data |
| ISO/IEC 15939 | Activities of the measurement process; a suitable set of measures. | System and software engineering |
| ISO 9000 | A quality management system; 7 quality management principles. | Quality management system |
| ISO 8000 | Characteristics related to information and DQ; a framework for enhancing the quality of specific types of data; methods for managing, measuring and refining information and DQ. | Partially available for all types of data, partially available for specified data types |

The benefits of customizing and using international standards in the IoT context are as follows: (1) the number of issues and system failures in the IoT environment is reduced and all stakeholders are aligned; (2) DQ solutions are easier to apply on a global scale because heterogeneity is reduced; (3) DQ research in the IoT can be aligned with international standards to provide standardized solutions; and (4) communication between partners is improved.