| Version | Summary | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|---|
| 1 | | Federica Villa | + 3009 word(s) | 3009 | 2021-06-09 11:36:06 |
| 2 | | Karina Chen | Meta information modification | 3009 | 2021-06-23 08:50:24 |


Light Detection and Ranging (LiDAR) is a 3D imaging technique widely used in applications such as augmented reality, automotive, machine vision, and spacecraft navigation and landing. Achieving long range and high speed is challenging, above all in outdoor applications with strong solar background illumination.

1. Introduction

2. LiDAR Techniques

3. Illumination Schemes

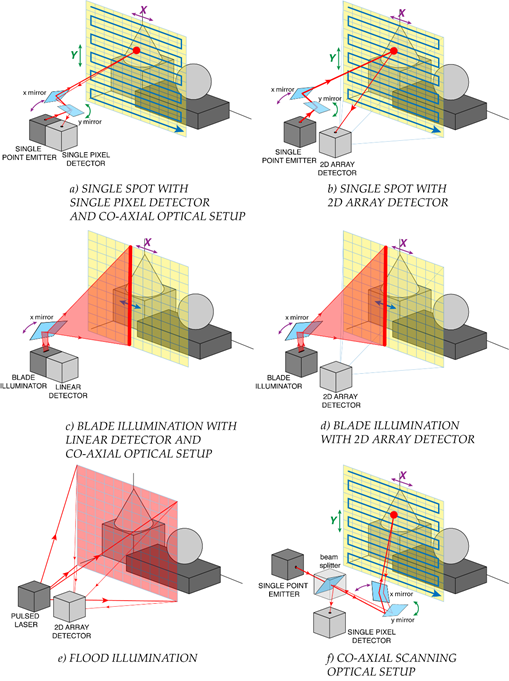

TOF-LiDAR techniques illuminate the scene with a single light spot, a blade beam, or flood illumination, as shown in Figure 4. The first two must cover the whole scene through 2D or 1D scanning, respectively, whereas the last is exploited in flash-LiDAR, which needs no scanning elements.
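As a rough illustration of how the three schemes differ in scanning effort, the sketch below counts the illumination positions needed to cover a grid of scene points and derives an idealized upper bound on framerate. The function names, the grid size, the laser repetition rate, and the assumption of a vertical blade swept horizontally are all hypothetical choices made for the example; scanner settling and detector readout times are ignored.

```python
def positions_per_frame(scheme: str, cols: int, rows: int) -> int:
    """Illumination positions needed to cover a cols x rows grid of scene points."""
    if scheme == "spot":    # 2D raster scan: one position per scene point
        return cols * rows
    if scheme == "blade":   # 1D scan: one position per column (assumes a vertical blade)
        return cols
    if scheme == "flood":   # flash-LiDAR: whole scene at once, no scanning
        return 1
    raise ValueError(f"unknown illumination scheme: {scheme!r}")


def ideal_frame_rate(scheme: str, cols: int, rows: int,
                     laser_rate_hz: float, shots_per_position: int = 1) -> float:
    """Upper bound on frames per second, assuming the laser repetition rate
    is the only limit (no settling or readout time)."""
    shots = positions_per_frame(scheme, cols, rows) * shots_per_position
    return laser_rate_hz / shots


if __name__ == "__main__":
    # Hypothetical 64 x 32 grid and 100 kHz laser, for illustration only.
    for scheme in ("spot", "blade", "flood"):
        print(scheme,
              positions_per_frame(scheme, 64, 32),
              ideal_frame_rate(scheme, 64, 32, 100e3))
```

Raising `shots_per_position` models the case where several laser shots are accumulated at each position, which lowers the achievable framerate proportionally.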

Single-spot illumination typically also employs a single-pixel detector (Figure 4-a); hence, a coaxial optical system (as shown in Figure 4-f) is preferred, in order to avoid alignment and parallax issues. Note that such a single pixel may be composed of a set (e.g., an array) of detectors, all acting as an ensemble detector (i.e., with no possibility of providing further spatial information within the active area), as in Silicon PhotoMultipliers. Alternatively, it is possible to use a 2D staring array detector (with an active area larger than the laser spot), shine the illumination spot onto the target through a simple non-coaxial optical setup, and measure the signal echo, which walks across the 2D detector depending also on the object distance (Figure 4-b). The main disadvantage is a lower Signal-to-Noise Ratio (SNR), because the detector collects not only the laser echo from the target spot but also the background light from a larger surrounding area. A more complex detector architecture or readout electronics can recover the ideal SNR by tracking the laser spot position within the 2D array detector so as to discard off-spot pixels, for example as proposed in [31].
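The SNR penalty of reading out pixels that see only background can be illustrated with a simple model. The sketch below assumes shot-noise-limited (Poisson) counting, a uniform background per pixel, and summation of all pixels that are read out; these assumptions, the function name, and the numbers are illustrative and are not taken from [31].

```python
import math


def shot_noise_snr(signal_counts: float,
                   background_counts_per_pixel: float,
                   pixels_read: int) -> float:
    """SNR of the summed counts under an assumed Poisson (shot-noise) model.

    signal_counts: laser-echo counts collected from the illuminated spot.
    background_counts_per_pixel: background counts in each pixel that is read.
    pixels_read: number of pixels whose counts are summed.
    """
    total_background = background_counts_per_pixel * pixels_read
    return signal_counts / math.sqrt(signal_counts + total_background)


if __name__ == "__main__":
    # Hypothetical numbers: the echo falls on 4 pixels of a 10 x 10 array.
    whole_array = shot_noise_snr(100, 3, pixels_read=100)  # no spot tracking
    tracked = shot_noise_snr(100, 3, pixels_read=4)        # off-spot pixels discarded
    print(f"whole array: {whole_array:.2f}, tracked spot: {tracked:.2f}")
```

In this model, discarding off-spot pixels removes their background contribution from the noise term while leaving the signal unchanged, which is the mechanism by which spot tracking restores the SNR.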

Blade illumination can be employed in combination with linear detector arrays, either mechanically spinning around their axis so as to speed up scanning or using a co-axial optical system (Figure 4-c), such as in the Velodyne LiDAR system [32]. In this case too, it is possible to scan only the blade illumination while keeping a 2D staring array detector fixed, either imaging the overall scene or activating only one row at a time (Figure 4-d).

Finally, flash-LiDAR relies on flood illumination of the whole scene and a staring camera in which each pixel images one specific spot of the scene and measures the corresponding distance (Figure 4-e). The name “flash” highlights the possibility of acquiring images at very high framerates, ideally even in a single laser shot, since no scanning is needed. However, the laser pulse energy required to cover a sufficiently wide FOV is typically extremely high, often far exceeding eye-safety limits if a person crosses the FOV at very short distance.

When scanning is required, beam steering can be performed either with optomechanical elements (e.g., rotating mirrors and prisms) and electromechanical moving parts (e.g., electric motors with mechanical stages), or with compact Micro Electro-Mechanical Systems (MEMS) mirrors and solid-state Optical Phased Arrays (OPAs). MEMS and OPAs offer a more compact and lightweight alternative to electromechanical scanning and, consequently, faster scanning, for instance through the use of resonant mirrors. MEMS technology is far more mature than OPAs and has therefore become the most widely used technology in modern LiDAR scanning systems [33].

The selection of the illumination scheme must trade off many parameters, such as laser energy, repetition frequency, eye-safety limits, detector architecture, measurement speed, and system complexity. Compared to scanning techniques, flood illumination in flash-LiDAR typically requires higher laser power to illuminate the entire scene in one shot and ensure enough signal return onto the detector. Such flood illumination can be convenient for eye safety (provided no one stays too close to the LiDAR output port) because, even if the total emitted power is high, it is spread across a wider area, so the power per unit area can be lower than with single-spot long-range illumination. Flash-LiDAR has the advantage of simpler optics, at the expense of a 2D detector with a large number of pixels. In fact, the number of pixels limits the angular resolution for a given FOV or, vice versa, limits the FOV for a given angular resolution. Scanning, instead, negatively impacts acquisition speed and framerate: 2D scanning in particular is very slow and hardly compatible with real-time acquisition and fast-moving targets. However, flash-LiDAR too may need to be operated not in single-shot mode but with multiple laser shots and image acquisitions to collect enough signal, because the total pulse energy is distributed across a wide FOV and the return signal (above all from far-away objects) can be extremely faint. In the following, we will focus on both 1D linear scanning with blade illumination and flash-LiDAR with flood illumination, because neither is preferable for all applications.
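Two of these trade-offs are purely geometric and can be sketched numerically: the link between pixel count, FOV, and angular resolution, and the way spreading the emitted power over a wide FOV lowers the mean power per unit area. The sketch below assumes a rectangular, uniformly illuminated FOV and uses hypothetical function names and example values. It gives only an average irradiance and is not an eye-safety assessment, which also depends on wavelength, pulse duration, and exposure conditions.

```python
import math


def angular_resolution_deg(fov_deg: float, pixels: int) -> float:
    """Angular resolution along one axis for a given FOV and pixel count."""
    return fov_deg / pixels


def fov_for_resolution_deg(resolution_deg: float, pixels: int) -> float:
    """FOV along one axis reachable at a given angular resolution."""
    return resolution_deg * pixels


def illuminated_area_m2(range_m: float, fov_h_deg: float, fov_v_deg: float) -> float:
    """Area of a rectangular illuminated patch at the given range."""
    width = 2 * range_m * math.tan(math.radians(fov_h_deg) / 2)
    height = 2 * range_m * math.tan(math.radians(fov_v_deg) / 2)
    return width * height


def mean_irradiance_w_m2(power_w: float, range_m: float,
                         fov_h_deg: float, fov_v_deg: float) -> float:
    """Mean power per unit area, assuming uniform illumination of the patch."""
    return power_w / illuminated_area_m2(range_m, fov_h_deg, fov_v_deg)


if __name__ == "__main__":
    # Hypothetical 400-pixel row covering a 40 degree FOV.
    print("resolution (deg):", angular_resolution_deg(40.0, 400))
    # Hypothetical flood beam (10 W over 40 x 20 degrees) versus a
    # single spot (0.1 W over 0.1 x 0.1 degrees), both at 10 m.
    print("flood (W/m^2):", mean_irradiance_w_m2(10.0, 10.0, 40.0, 20.0))
    print("spot  (W/m^2):", mean_irradiance_w_m2(0.1, 10.0, 0.1, 0.1))
```

With these example values the flood beam carries 100 times the total power of the spot yet yields a lower mean irradiance, because its illuminated area is larger by a much greater factor.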

Figure 4. TOF-LiDAR illumination schemes: (a) 2D raster scan with single spot and one pixel detector in a co-axial optical setup, (b) 2D raster scan with single spot and a 2D array detector, (c) 1D line scan with blade beam and a linear array detector in a co-axial optical setup, (d) 1D line scan with blade beam and a 2D array detector, (e) no scan with flood illumination and 2D imager (full scene flash acquisition), for flash-LiDAR. (f) Example of a co-axial scanning optical setup.

References

- Bronzi, D.; Zou, Y.; Villa, F.; Tisa, S.; Tosi, A.; Zappa, F. Automotive Three-Dimensional Vision through a Single-Photon Counting SPAD Camera. IEEE Trans. Intell. Transp. Syst. 2016, 17, 782–785.

- Warren, M.E. Automotive LIDAR Technology. In Proceedings of the Symposium on VLSI Circuits, Kyoto, Japan, 9–14 June 2019; pp. C254–C255.

- Steger, C.; Ulrich, M.; Wiedemann, C. Machine Vision Algorithms and Applications; Wiley: Hoboken, NJ, USA, 2018.

- Glennie, C.L.; Carter, W.E.; Shrestha, R.L.; Dietrich, W.E. Geodetic imaging with airborne LiDAR: The Earth's surface revealed. Rep. Prog. Phys. 2013, 76, 086801.

- Yu, A.W.; Troupaki, E.; Li, S.X.; Coyle, D.B.; Stysley, P.; Numata, K.; Fahey, M.E.; Stephen, M.A.; Chen, J.R.; Yang, G.; et al. Orbiting and In-Situ Lidars for Earth and Planetary Applications. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 3479–3482.

- Pierrottet, D.F.; Amzajerdian, F.; Hines, G.D.; Barnes, B.W.; Petway, L.B.; Carson, J.M. Lidar Development at NASA Langley Research Center for Vehicle Navigation and Landing in GPS Denied Environments. In Proceedings of the IEEE Research and Applications of Photonics In Defense Conference (RAPID), Miramar Beach, FL, USA, 22–24 August 2018; pp. 1–4.

- Rangwala, S. Lidar: Lighting the path to vehicle autonomy. SPIE News, March 2021. Available online: (accessed on 31 May 2021).

- Hartley, R.I.; Sturm, P. Triangulation. Comput. Vis. Image Underst. 1997, 68, 146–157.

- Bertozzi, M.; Broggi, A.; Fascioli, A.; Nichele, S. Stereo vision-based vehicle detection. In Proceedings of the IEEE Intelligent Vehicles Symposium, Dearborn, MI, USA, 5 October 2000; pp. 39–44.

- Sun, J.; Li, Y.; Kang, S.B.; Shum, H.Y. Symmetric Stereo Matching for Occlusion Handling. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 399–406.

- Behroozpour, B.; Sandborn, P.A.M.; Wu, M.C.; Boser, B.E. Lidar System Architectures and Circuits. IEEE Commun. Mag. 2017, 55, 135–142.

- ENSENSO XR SERIES. Available online: (accessed on 25 May 2021).

- Structure Core. Available online: (accessed on 25 May 2021).

- Intel® RealSense™ Depth Camera D455. Available online: (accessed on 25 May 2021).

- Geng, J. Structured-light 3D surface imaging: a tutorial. Adv. Opt. Photon. 2011, 3, 128–160.

- PRIMESENSE CARMINE. Available online: (accessed on 25 May 2021).

- Structure Sensor: Capture the World in 3D. Available online: (accessed on 25 May 2021).

- Atra ORBBEC. Available online: (accessed on 25 May 2021).

- Smisek, J.; Jancosek, M.; Pajdla, T. 3D with Kinect. In Consumer Depth Cameras for Computer Vision; Springer: Berlin, Germany, 2013; pp. 3–25.

- Hansard, M.; Lee, S.; Choi, O.; Horaud, R.P. Time-of-Flight Cameras: Principles, Methods and Applications; Springer: Berlin, Germany, 2012.

- Becker, W. Advanced Time-Correlated Single Photon Counting Techniques; Springer: Berlin, Germany, 2005.

- Becker, W.; Bergmann, A.; Hink, M.A.; König, K.; Benndorf, K.; Biskup, C. Fluorescence lifetime imaging by time-correlated single-photon counting. Microsc. Res. Tech. 2004, 63, 58–66.

- Becker, W.; Bergmann, A.; Kacprzak, M.; Liebert, A. Advanced time-correlated single photon counting technique for spectroscopy and imaging of biological systems. In Proceedings of the SPIE, Fourth International Conference on Photonics and Imaging in Biology and Medicine, Tianjin, China, 3–6 September 2005; p. 604714.

- Bronzi, D.; Zou, Y.; Villa, F.; Tisa, S.; Tosi, A.; Zappa, F. Automotive Three-Dimensional Vision through a Single-Photon Counting SPAD Camera. IEEE Trans. Intell. Transp. Syst. 2016, 17, 782–785.

- Bellisai, S.; Bronzi, D.; Villa, F.; Tisa, S.; Tosi, A.; Zappa, F. Single-photon pulsed light indirect time-of-flight 3D ranging. Opt. Express 2013, 21, 5086–5098.

- Zhang, C.; Zhang, Z.; Tian, Y.; Set, S.Y.; Yamashita, S. Comprehensive Ranging Disambiguation for Amplitude-Modulated Continuous-Wave Laser Scanner With Focusing Optics. IEEE Trans. Instrum. Meas. 2021, 70, 8500711.

- Lum, D.J.; Knarr, S.H.; Howell, J.C. Frequency-modulated continuous-wave LiDAR compressive depth-mapping. Opt. Express 2018, 26, 15420–15435.

- Zhang, F.; Yi, L.; Qu, X. Simultaneous measurements of velocity and distance via a dual-path FMCW lidar system. Opt. Commun. 2020, 474, 126066.

- FMCW Lidar: The Self-Driving Game-Changer. Available online: (accessed on 25 May 2021).

- Radar & LiDAR Autonomous Driving Sensors by Mobileye & Intel. Available online: (accessed on 25 May 2021).