1. Augmented Reality Overview

Augmented Reality (AR) provides users with a composite view by superimposing computer-generated virtual content, i.e., audio, graphics, text, or video, on real-world objects. The major components of the AR process are tracking, which determines the position at which virtual objects are placed in the real environment, and display of the virtual content to the user. Tracking in AR follows a defined pattern in the real world, using a computer or mobile device, so that the virtual object can be placed correctly in the real world. Display technologies then present the virtual content in front of the viewer's eyes.

For many years, people have been using lenses, light sources, and mirrors to create illusions and virtual images in the real world [

22,

23,

24]. Ivan Sutherland was the first person to truly generate the AR experience. Sketchpad, developed at MIT in 1963 by Ivan Sutherland, is the world’s first interactive graphic application [

25]. In

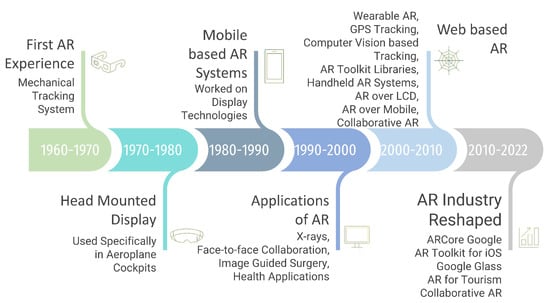

Figure 1, an overview of the development of AR technology from the beginning to 2022 is given. Bottani et al. [

26] reviewed the AR literature published during the time period of 2006–2017. Moreover, Sereno et al. [

27] use a systematic survey approach to detail the existing literature available on the intersection of computer-supported collaborative work and AR.

Figure 1. Augmented reality advancement over time for the last 60 years.

1.1. Head-Mounted Display

Ens et al. [28] review the existing work on design exploration for mixed-scale gestures where the HoloLens AR display is used to interweave larger gestures with micro-gestures.

1.2. AR Towards Applications

The ARToolKit tracking library [13] aimed to provide real-time computer vision tracking of a square marker, which addressed two major problems: enabling interaction with real-world objects and tracking the user’s viewpoint. Researchers also conducted studies to develop handheld AR systems. Hettig et al. [29] present a system called “Augmented Visualization Box” to assess surgical augmented reality visualizations in a virtual environment. Goh et al. [30] present a critical analysis of 3D interaction techniques in mobile AR. Kollatsch et al. [31] introduce a system that brings production data and maintenance documentation into AR maintenance apps for machine tools, which aims to reduce the overall cost of the necessary expertise and the planning process of AR technology. Bhattacharyya et al. [32] introduce a two-player mobile AR game known as Brick, where users can engage in synchronous collaboration while inhabiting a real-time, shared augmented environment. Kim et al. [33] suggest that this decade is marked by a tremendous technological boom, particularly in rendering and evaluation research, while display and calibration research has declined. Liu et al. [34] expand the information feedback channel from industrial robots to a human workforce for human–robot collaboration development.

1.3. Augmented Reality for the Web

Cortes et al. [35] introduce new techniques for collaboratively authoring surfaces on the web using mobile AR. Qiao et al. [36] review the current implementations of mobile AR, enabling technologies of AR, state-of-the-art technology, approaches for potential web AR provisioning, and challenges that AR faces in a web-based system.

1.4. AR Application Development

The AR industry was growing rapidly in 2015, extending from smartphones to websites and head-worn display systems such as Google Glass. In this regard, Agati et al. [18] propose design guidelines for the development of an AR manual assembly system, covering ergonomics, usability, corporate-related aspects, and cognition.

AR for Tourism and Education: Shukri et al. [37] introduce design guidelines for mobile AR in tourism by proposing 11 principles for developing efficient AR designs that reduce cognitive overload, support learnability, and help users explore content while traveling in Malaysia. In addition, Fallahkhair et al. [38] introduce new guidelines for AR technologies with enhanced user satisfaction, efficiency, and effectiveness in cultural and contextual learning using mobile devices, thereby enhancing the tourism experience. Akçayır et al. [39] show that AR has the advantage of placing the virtual image on a real object in real time, while pedagogical and technical issues should be addressed to make the technology more reliable. Salvia et al. [40] suggest that AR has a positive impact on learning but requires some advancements.

Sarkar et al. [41] present an AR app known as ScholAR. It aims to enhance students' learning skills and to inculcate conceptual and logical thinking among seventh-grade students. Soleiman et al. [42] suggest that the use of AR improves abstract writing as compared to VR.

1.5. AR Security and Privacy

Hadar et al. [43] scrutinize security at all steps of AR application development and identify the need for new strategies for information security and privacy, with the main goal of capturing and mapping these concerns. Moreover, in the industrial arena, Mukhametshin et al. [44] focus on developing sensor tag detection, tracking, and recognition for designing an AR client-side app for Siemens to monitor the equipment at remote facilities.

2. Tracking Technology of AR

Tracking technologies introduce the sensation of motion in the virtual and augmented reality world and perform a variety of tasks. Once a tracking system is appropriately chosen and correctly installed, it allows a person to move within a virtual and augmented environment. It further allows us to interact with people and objects within augmented environments. The selection of tracking technology depends on the type of environment, the type of data, and the available budget. For AR technology to meet Azuma’s definition of an augmented reality system, it must satisfy three main conditions:

- it combines virtual and the real content;

- it is interactive in real time;

- it is registered in three dimensions.

The third condition of being “registered in three dimensions” alludes to the capability of an AR system to project the virtual content on physical surroundings in such a way that it seems to be part of the real world. To register the virtual content in the real environment, the position and orientation (pose) of the viewer relative to some anchor in the real world must be determined. This real-world anchor may be the dead-reckoning from inertial tracking, a defined location in space determined using GPS, or a physical object such as a paper image marker or magnetic tracker source. In short, the real-world anchor depends upon the applications and the technologies used. Depending on the type of technology used, registering the AR system in 3D involves two phases:

- Determination of the position and orientation of the viewer relative to the real-world anchor: registration phase;

- Updating of the viewer’s pose with respect to a previously known pose: tracking phase.
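The two phases above can be illustrated with a minimal sketch. It assumes, purely for brevity, a planar pose (rotation about the vertical axis plus a 2D translation) instead of a full six-degree-of-freedom pose; the function names `make_pose` and `compose` and all numeric values are hypothetical and not taken from any cited system.

```python
import math

def make_pose(yaw_deg, tx, ty):
    """Build a 3x3 homogeneous planar pose: rotation by yaw, then translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [[c, -s, tx],
            [s,  c, ty],
            [0.0, 0.0, 1.0]]

def compose(a, b):
    """Matrix product a @ b for 3x3 homogeneous poses."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

# Registration phase: absolute pose of the viewer relative to the anchor
# (hypothetical values: 2 m along the anchor's x-axis, turned by 90 degrees).
anchor_T_viewer = make_pose(90.0, 2.0, 0.0)

# Tracking phase: an incremental motion measured in the viewer's own frame
# (hypothetical: 1 m straight ahead along the viewer's x-axis) is composed
# onto the previously known pose.
delta = make_pose(0.0, 1.0, 0.0)
anchor_T_viewer = compose(anchor_T_viewer, delta)

viewer_x = anchor_T_viewer[0][2]
viewer_y = anchor_T_viewer[1][2]
print(round(viewer_x, 6), round(viewer_y, 6))
```

Because the viewer was turned by 90 degrees at registration, the forward step moves it along the anchor's y-axis, which is why the updated pose ends up at roughly (2, 1) in the anchor frame.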

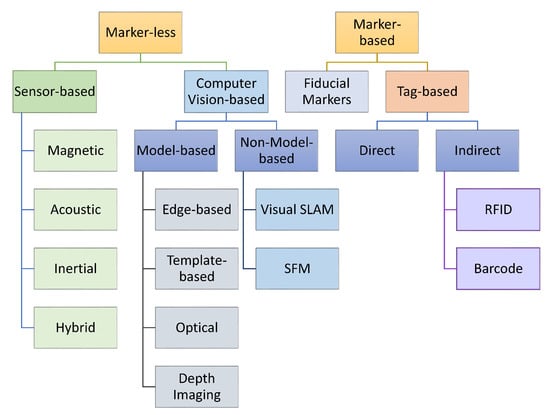

In this text, the word “tracking” refers to both phases, following common terminology. The two main types of tracking techniques, depicted in Figure 2, are explained below.

Figure 2. Categorization of augmented reality tracking techniques.

2.1. Markerless Tracking Techniques

Markerless tracking techniques are further divided into two types: sensor based and vision based.

2.1.1. Sensor-Based Tracking

Magnetic Tracking Technology: This technology includes a tracking source and two sensors, one sensor for the head and another one for the hand. The tracking source creates an electromagnetic field in which the sensors are placed. The computer then calculates the orientation and position of the sensors based on the signal attenuation of the field. This provides a full 360° range of motion, i.e., it allows us to look all the way around the 3D environment. It also allows us to move in all three degrees of freedom. The hand tracker has some control buttons that allow the user to navigate through the environment. It allows us to pick things up and understand the size and shape of the objects [45].

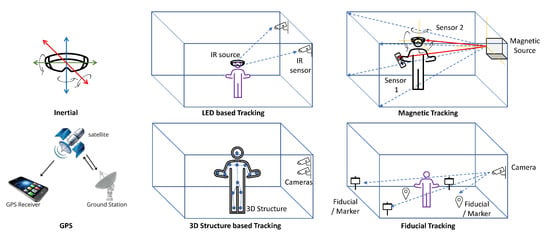

Figure 5 shows the tracking techniques to give a better understanding to the reader.

Figure 5. Augmented reality tracking techniques presentation.

Frikha et al. [46] introduce a new mutual occlusion problem handler. The problem of occlusion occurs when real objects are in front of virtual objects in the scene. The authors use a 3D positioning approach and surgical instrument tracking in an AR environment. The proposed paradigm is based on monocular image-based processing. The results of the experiment suggested that this approach is capable of handling mutual occlusion automatically in real time.

One of the main issues with magnetic tracking is the limited positioning range [47]. Orientation and position can be determined by attaching the receiver to the viewer [48]. Receivers are small and light in weight, and magnetic trackers are indifferent to optical disturbances and occlusion; they also have high update rates. However, the resolution of a magnetic tracker declines with the fourth power of the distance, and the strength of the magnetic field declines with the cube of the distance [49]. Therefore, magnetic trackers have a constrained working volume. Moreover, magnetic trackers are sensitive to surrounding magnetic fields and to the type of magnetic material nearby, and are also susceptible to measurement jitter [50].
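To make the distance dependence above concrete, the following sketch evaluates the two power laws relative to a reference distance. The function names and the reference distance of 1 m are illustrative assumptions; only the exponents (cube for field strength, fourth power for resolution) come from the text.

```python
def relative_field_strength(distance_m, reference_m=1.0):
    """Field strength relative to its value at reference_m (inverse-cube law)."""
    return (reference_m / distance_m) ** 3

def relative_resolution(distance_m, reference_m=1.0):
    """Tracker resolution relative to its value at reference_m (inverse fourth power)."""
    return (reference_m / distance_m) ** 4

# Doubling the distance from the source (hypothetical 1 m -> 2 m):
strength_at_2m = relative_field_strength(2.0)
resolution_at_2m = relative_resolution(2.0)
print(strength_at_2m, resolution_at_2m)
```

Under these assumptions, moving from 1 m to 2 m leaves one eighth of the field strength and one sixteenth of the resolution, which illustrates why the usable working volume is small.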

Magnetic tracking technology is widely used in a range of AR systems, with applications ranging from maintenance [51] to medicine [52] and manufacturing [53].

Inertial Tracking: Magnetometers, accelerometers, and gyroscopes are examples of the sensors found in inertial measurement units (IMUs), which are used in inertial tracking to evaluate the velocity and orientation of the tracked object. An inertial tracking system is used to find the three rotational degrees of freedom relative to gravity. Moreover, the change in the position of the tracker can be determined from the inertial velocity and the time period between the tracker’s updates.

Advantages of Inertial Tracking: It does not require a line of sight and has no range limitations. It is not prone to optical, acoustic, magnetic, and RF interference sources. Furthermore, it provides motion measurement with high bandwidth. Moreover, it has negligible latency and can be processed as fast as one desires.

Disadvantages of Inertial Tracking: Inertial trackers are prone to drift of orientation and position over time, with the major impact on the position measurement. The rationale behind this is that the position must be derived from the velocity measurements. The usage of a filter could help in resolving this issue. However, the filter can decrease the responsiveness and the update rate of the tracker [54]. To ultimately correct the drift, the inertial sensor should be combined with another kind of sensor. For instance, it could be combined with ultrasonic range measurement devices and optical trackers.
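The growth of position drift can be sketched numerically. The example below assumes a stationary tracker whose acceleration reading carries a small constant bias, and integrates it twice with simple Euler steps; the bias value, the sample period, and the function name `integrate_position` are hypothetical choices for illustration only.

```python
def integrate_position(accel_samples, dt):
    """Integrate acceleration to velocity, then velocity to position (Euler steps)."""
    velocity = 0.0
    position = 0.0
    for accel in accel_samples:
        velocity += accel * dt      # first integration: acceleration -> velocity
        position += velocity * dt   # second integration: velocity -> position
    return position

BIAS = 0.01   # m/s^2, hypothetical constant sensor bias while the device is at rest
DT = 0.01     # s, hypothetical sample period (100 Hz)

drift_after_10s = integrate_position([BIAS] * 1000, DT)
drift_after_20s = integrate_position([BIAS] * 2000, DT)
print(drift_after_10s, drift_after_20s)
```

Under these assumptions the position error is about 0.5 m after 10 s and about 2 m after 20 s: doubling the elapsed time roughly quadruples the drift, which is why an independent, drift-free sensor is needed for long-term correction.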

2.1.2. Vision-Based Tracking

Vision-based tracking is defined as registration and tracking approaches that ascertain the camera pose by the use of data captured from optical sensors. The optical sensors can be divided into the following three categories:

- visible light tracking;

- 3D structure tracking;

- infrared tracking.

In recent times, vision-based tracking for AR has become highly popular due to the improved computational power of consumer devices and the ubiquity of mobile devices, such as tablets and smartphones, which makes these devices the best platform for AR technologies. Chakrabarty et al. [55] contribute to the development of autonomous tracking by integrating CMT into image-based visual servoing (IBVS), evaluating its impact on rigid and deformable targets in indoor settings, and finally integrating the system into the Gazebo simulator. Vision-based tracking is demonstrated by the use of an effective object tracking algorithm [56] known as the clustering of static-adaptive correspondences for deformable object tracking (CMT). Gupta et al. [57] detail the comparative analysis between the different types of vision-based tracking systems.

Moreover, Krishna et al. [58] explore the use of electroencephalogram (EEG) signals in user authentication. User authentication is similar to facial recognition in mobile phones. This approach is also evaluated in combination with eye-tracking data. This research contributes to the development of a novel evaluation paradigm and a biometric authentication system for the integration of these systems. Furthermore, Dzsotjan et al. [59] delineate the usefulness of the eye-tracking data evaluated during lectures in order to determine the learning gain of the user. The Walk the Graph app, designed for Microsoft HoloLens 2, was used to generate the data. Binary classification was performed on the basis of the kinematic graphs that users reported of their own movement.

Visible-light cameras are the most commonly used optical sensors, found in devices ranging from smartphones to laptops and even wearable devices. These cameras are particularly important because they can both capture video of the real environment and register the virtual content to it, and can thereby be used in video see-through AR systems.

Chen et al. [60] resolve the shortcomings of the deep learning lightning model (DAM) by combining a method of transferring a regular video to a 3D photo-realistic avatar with a high-quality 3D face tracking algorithm. The evaluation of the proposed system suggests its effectiveness in real-world scenarios with variability in expression, pose, and illumination. Furthermore, Rambach et al. [61] explore the detailed pipeline of 6DoF object tracking using scanned 3D images of the objects. The scope of the research covers the initialization of frame-to-frame tracking, object registration, and the implementation of these aspects to make the experience more efficient. Moreover, it resolves the challenges posed by occlusion, illumination changes, and fast motion.

2.1.3. Three-Dimensional Structure Tracking

Three-dimensional structure information has become very affordable because of the development of commercial sensors capable of capturing it. This began with the development of Microsoft Kinect [62]. Syahidi et al. [63] introduce a 3D AR-based learning system for pre-school children. For determining the three-dimensional points in the scene, different types of sensors could be used. The most commonly used are structured light [64] and time of flight [65]. These technologies work on the principle of depth analysis: the depth information of the real environment is extracted through mapping and tracking [66]. The Kinect system [67], developed by Microsoft, is one of the widely used and well-developed approaches in Augmented Reality.

Rambach et al. [68] present the idea of augmented things: utilizing off-screen rendering of 3D objects, the realization of application architecture, universal 3D object tracking based on high-quality scans of the objects, and a high degree of parallelization. Viyanon et al. [69] focus on the development of an AR app known as “AR Furniture” that lets customers visualize design and decoration. The customers fit the pieces of furniture in their rooms and were able to make a decision regarding their experience. Turkan et al. [70] introduce new models for teaching structural analysis, which have considerably improved the learning experience. The model integrates 3D visualization technology with mobile AR. Students can explore different loading conditions by switching loads, and feedback is provided in real time through the AR interface.

2.1.4. Infrared Tracking

Tracking objects that emit or reflect light is one of the earliest vision-based tracking techniques used in AR technologies. The high brightness of these targets compared to their surrounding environment made this tracking very easy [71,72]. Self-light-emitting targets were also indifferent to drastic illumination effects, i.e., harsh shadows or poor ambient lighting. In addition, these targets could either be affixed to the object being tracked, with the camera external to the object, a configuration known as “outside-looking-in” [73], or placed externally in the environment, with the camera attached to the tracked object, known as “inside-looking-out” [74]. Compared to an outside-looking-in system with a comparable sensor, the inside-looking-out configuration has greater resolution and higher accuracy of angular orientation. The inside-looking-out configuration is used in the development of several systems [20,75,76,77], typically with infrared LEDs mounted on the ceiling and a head-mounted display with a camera facing externally.

2.1.5. Model-Based Tracking

The three-dimensional tracking of real-world objects has long been a subject of researchers’ interest. It is not as popular as natural feature tracking or planar fiducials; however, a large amount of research has been done on it. In the past, the three-dimensional model of the object used for tracking was usually created by hand. In this approach, lines, cylinders, spheres, circles, and other primitives were combined to describe the structure of objects [78]. Wuest et al. [79] focus on the development of a scalable and performant pipeline for creating a tracking solution. The structural information of the scene was extracted by using edge filters. Additionally, for the determination of the pose, edge information and the primitives were matched [80].

In addition, Gao et al. [81] explore a tracking method that identifies the different vertices of a convex polygon. This works well because most markers are square. The coordinates of the four vertices are used to determine the transformation matrix of the camera. The results of the experiment suggested that the algorithm was robust enough to withstand fast motion and large ranges, making the tracking more accurate, stable, and real time.
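As a rough illustration of how four vertex correspondences determine a transformation, the sketch below estimates a planar homography from four marker corners by solving the standard 8x8 linear system. This is a generic textbook construction, not the algorithm of Gao et al.; recovering the full camera pose from the homography would additionally require the camera intrinsics. All function names and the pixel coordinates are hypothetical.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x

def homography_from_four_points(src, dst):
    """Return the 3x3 homography (with h33 fixed to 1) mapping src points to dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]

def project(h, x, y):
    """Apply the homography to a point and divide by the homogeneous coordinate."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)

# Corners of a unit square marker and their hypothetical pixel positions in an image.
marker_corners = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
image_corners = [(100.0, 100.0), (200.0, 110.0), (210.0, 220.0), (90.0, 200.0)]

h_matrix = homography_from_four_points(marker_corners, image_corners)
print(project(h_matrix, 0.5, 0.5))  # image position of the marker centre
```

Four point pairs give exactly eight equations for the eight unknown entries, which is why a square marker is sufficient for this step.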

The combination of edge-based tracking and natural feature tracking has the following advantages:

- It provides additional robustness [82].

- It enables spatial tracking and can thereby be operated in open environments [83].

- For variable and complex environments, greater robustness was required. Therefore, they introduced the concept of keyframes [84] in addition to the primitive model [85].

Figen et al. [86] describe a series of studies done at the university level in which participants were asked to create the mass volume of buildings. The first study required a designer to work alone using two tools: the MTUIs of the AR apps and analog tools. The second study involved collaboration among designers using analog tools. The studies had two goals: to examine the change in the designer's behavior while using AR apps and the affordances of the different interfaces.

Developing and updating the real environment’s map simultaneously had been the subject of interest in model-based tracking. This has a number of developments. First, simultaneous localization and map building (SLAM) was primarily done for robot navigation in unknown environments [

87]. In augmented reality, [

88,

89], this technique was used for tracking the unknown environment in a drift-free manner. Second, parallel mapping and tracking [

88] was developed especially for AR technology. In this, the mapping of environmental components and the camera tracks were identified as a separate function. It improved tracking accuracy and also overall performance. However, like SLAM, it did not have the capability to close large loops in the constrained environment and area (

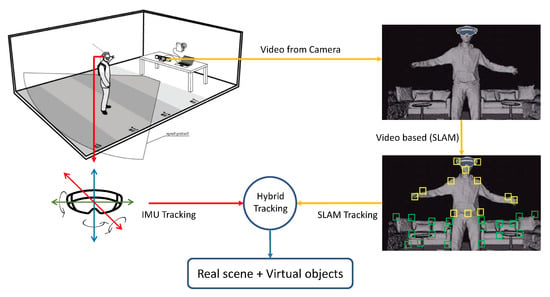

Figure 6).

Figure 6. Hybrid tracking: inertial and SLAM combined and used in the latest mobile-based AR tracking.

Oskiper et al. [90] propose a simultaneous localization and mapping (SLAM) framework for sensor fusion, indexing, and feature matching in AR apps. It has a parallel mapping engine and an error-state extended Kalman filter (EKF) for these purposes. Jaguar, by Zhang et al. [91], is a mobile tracking AR application with low latency and flexible object tracking. The paper discusses the design, execution, and evaluation of Jaguar. Jaguar provides markerless tracking through a client developed on top of ARCore from Google. ARCore is also helpful for context awareness, estimating the physical size of objects and recognizing object capabilities.

2.1.6. Global Positioning System—GPS Tracking

This technology refers to outdoor positional tracking with reference to the earth. The present accuracy of the GPS system is up to 3 m. However, improvements are available with the advancements in satellite technology and a few other developments. Real-time kinematic (RTK) positioning is one example. It works by using the carrier phase of a GPS signal. Its major benefit is that it can improve the accuracy to the centimeter level. Feiner’s touring machine [92] was the first AR system that utilized GPS in its tracking system. It used an inclinometer/magnetometer and differential GPS positional tracking. The military, gaming [93,94], and the viewing of historical data [95] have applied GPS tracking for AR experiences. As GPS supports only positional tracking with low accuracy, it is beneficial only in hybrid tracking systems or in applications where pose registration is not important. AR et al. [96] use a GPS-INS receiver to develop models for object motion with more precision. Ashutosh et al. [97] explore the hardware challenges of AR technology and the two main components of hardware technology: battery performance and the global positioning system (GPS).
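For placing content near a GPS-defined anchor, a fix is typically converted into metric offsets first. The sketch below uses a flat-earth (equirectangular) approximation that is only reasonable over short distances; the mean Earth radius constant, the function name, and the sample coordinates are illustrative assumptions and are not taken from any of the cited systems.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, adequate for a short-range approximation

def gps_to_local_metres(lat_deg, lon_deg, anchor_lat_deg, anchor_lon_deg):
    """Approximate east/north offsets in metres of a GPS fix from an anchor fix."""
    d_lat = math.radians(lat_deg - anchor_lat_deg)
    d_lon = math.radians(lon_deg - anchor_lon_deg)
    north = EARTH_RADIUS_M * d_lat
    # Meridians converge towards the poles, so scale longitude by cos(latitude).
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(anchor_lat_deg))
    return east, north

# Hypothetical fix 0.001 degrees north and east of an anchor on the equator.
east, north = gps_to_local_metres(0.001, 0.001, 0.0, 0.0)
print(round(east, 2), round(north, 2))
```

An offset of 0.001 degrees corresponds to roughly 111 m, so a positional error of a few metres is small at this scale but far too coarse for registering content to nearby objects, which is consistent with GPS being used mainly inside hybrid systems.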

Table 1 provides a succinct categorization of the prominent tracking technologies in augmented reality. Example studies are referred to while highlighting the advantages and challenges of each type of tracking technology. Moreover, possible areas of application are suggested.

Table 1. Summary of tracking techniques and their related attributes.

2.1.7. Miscellaneous Tracking

Yang et al. [98] propose tracking and cover recognition methods in order to recognize different forms of hatch covers with similar shapes. The results of the experiment suggest real-time performance and practicability, and the tracking accuracy was sufficient for use in an AR inspection environment. Kang et al. [99] propose a pupil tracker which consists of several features that make AR more robust: key point alignment, eye-nose detection, and a near-infrared (NIR) LED. The NIR LED turns on and off based on the illumination light. The limitation of this detector is that it cannot be applied in low-light conditions.

Moreover, Bach et al. [118] introduce an AR canvas for information visualization which is quite different from the traditional AR canvas. They therefore identify dimensions and essential aspects for developing visualization designs for the AR canvas, while listing several limitations within the process. Zeng et al. [119] discuss the design and implementation of FunPianoAR for creating a better AR piano learning experience. However, a number of discrepancies occurred with this system, and the introduction of a hybrid system is a more viable option. Rewkowski et al. [120] introduce a prototype AR system to visualize the laparoscopic training task. This system is capable of tracking small objects and supports surgery training using widely compatible and inexpensive borescopes.

2.1.8. Hybrid Tracking

Hybrid tracking systems were used to improve the following aspects of the tracking systems:

- Improving the accuracy of the tracking system.

- Coping with the weaknesses of the respective tracking methods.

- Adding more degrees of freedom.

Gorovyi et al. [108] detail the basic principles that make up an AR system by proposing a hybrid visual tracking algorithm. Direct tracking techniques are combined with the optical flow technique to achieve precise and stable results. The results suggested that both can be combined into a hybrid system that works successfully on devices with limited hardware capabilities. Previously, magnetic tracking [109] or inertial trackers [110] were used in tracking applications together with vision-based tracking systems. Isham et al. [111] use a game controller and hybrid tracking to identify and resolve the ultrasound image position in a 3D AR environment. This hybrid system was beneficial for the following reasons:

- Low drift of vision-based tracking.

- Low jitter of vision-based tracking.

- They had a robust sensor with high update rates. These characteristics decreased the invalid pose computation and ensured the responsiveness of the graphical updates [121].

- They had more developed inertial and magnetic trackers which were capable of extending the range of tracking and did not require the line of sight.

The above-mentioned benefits suggest that the utilization of the hybrid system is more beneficial than just using the inertial trackers.

In addition, Mao et al. [122] propose a new tracking system with a number of unique features. First, it accurately translates the relative distance into the absolute distance by locating the reference points at the new positions. Second, it supports a separate receiver and sender. Third, it resolves the discrepancy in the sampling frequency between the sender and receiver. Finally, the frequency shift due to movement is taken into account in this system. Moreover, the combination of the IMU sensor and Doppler shift with the distributed frequency modulated continuous waveform (FMCW) helps in the continuous tracking of a mobile device across multiple time intervals. The evaluation of the system suggested that it can be applied to existing hardware and has millimeter-level accuracy.

The GPS tracking system alone only provides positional information and has low accuracy. So, GPS tracking systems are usually combined with vision-based tracking or inertial sensors. This combination helps obtain the full 6DoF pose estimate [123]. Moreover, backup tracking systems have been developed as an alternative when GPS fails [98,124]. Optical tracking systems [100] or ultrasonic rangefinders [101] can be coupled with inertial trackers to enhance efficiency. As the differential measurement approach causes the problem of drift, these hybrid systems help resolve it. Furthermore, the use of gravity as a reference made inertial sensors static and bound. A hybrid system allows them to operate in a simulator, vehicle, or any other moving platform [125]. The introduction of accelerometers, cameras, gyroscopes [126], global positioning systems [127], and wireless networking [128] in mobile devices such as tablets and smartphones also gives an opportunity for hybrid tracking. Furthermore, these devices have the capability of determining accurate poses outdoors as well as indoors [129].
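The benefit of coupling a drifting inertial estimate with a drift-free absolute measurement can be sketched with a simple complementary filter. This is one generic fusion scheme chosen for illustration, not the method of any cited work; the blending weight `alpha`, the per-step bias, and the noise-free absolute fix are hypothetical simplifications.

```python
def fuse(previous_estimate, inertial_delta, absolute_fix, alpha=0.9):
    """Blend an inertial prediction with an absolute fix (complementary filter)."""
    predicted = previous_estimate + inertial_delta
    return alpha * predicted + (1.0 - alpha) * absolute_fix

TRUE_POSITION = 0.0     # the device is actually stationary
BIAS_PER_STEP = 0.05    # m, hypothetical inertial error added at every update

dead_reckoning = 0.0    # inertial-only estimate
fused = 0.0             # hybrid estimate
for _ in range(100):
    dead_reckoning += BIAS_PER_STEP
    # The absolute fix (e.g. from GPS or vision) is assumed exact here for clarity.
    fused = fuse(fused, BIAS_PER_STEP, TRUE_POSITION)

print(round(dead_reckoning, 3), round(fused, 3))
```

Under these assumptions the inertial-only estimate drifts to about 5 m after 100 updates, while the fused estimate settles at a bounded error of about 0.45 m. A smaller `alpha` trusts the absolute fix more and lowers that bound, at the cost of passing more of the fix's noise into the estimate.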

2.2. Marker-Based Tracking

Fiducial Tracking: Artificial landmarks that are added to the environment to aid tracking and registration are known as fiducials. The complexity of fiducial tracking varies significantly depending upon the technology and the application used. Early systems typically used pieces of paper or small colored LEDs, which could be added to the environment and detected using color matching [130]. If the positions of the fiducials are known and enough of them are detected in the scene, then the pose of the camera can be determined. Positioning one fiducial on the basis of a well-known previous position and introducing additional fiducials gives the further benefit that the workspace can be extended dynamically [131]. A QR code-based fiducial/marker has also been proposed by some researchers for marker-/tag-based tracking [115]. With the progression of work on the concept and complexity of fiducials, additional features such as multi-rings were introduced for the detection of fiducials at much larger distances [116]. A minimum of four points of known position is needed for calculating the pose of the viewer [117]. In order to make sure that the four points are visible, the use of these simpler fiducials demanded more care and effort in placing them in the environment. Examples of such fiducials are ARToolKit and its successors, whose registration techniques are mostly based on planar fiducials. In the upcoming section, AR display technologies are discussed to fulfill all the conditions of Azuma’s definition.

2.3. Summary

The text above provides comprehensive details on tracking technologies that are broadly classified into markerless and marker-based approaches. Both types have many subtypes whose details, applications, pros, and cons are provided in a detailed fashion. The different categories of tracking technologies are presented in Figure 2, while the summary of tracking technologies is provided in Figure 7. Among the different tracking technologies, hybrid tracking technologies are the most adaptive.

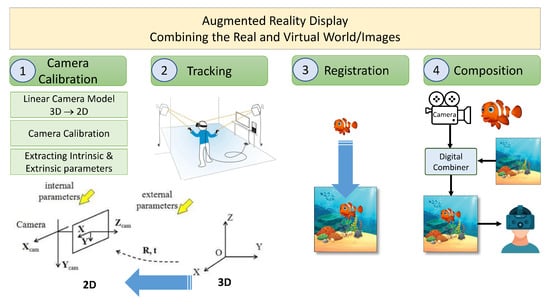

Figure 7. Steps for combining real and virtual content.

This entry is adapted from the peer-reviewed paper 10.3390/s23010146