High Dynamic Range (HDR) is a video and image technology that improves the way light is represented. It overcomes the limits of the earlier standard format, Standard Dynamic Range (SDR). HDR can represent substantially brighter highlights, darker shadows, more detail at both ends of the range, and more saturated colors than was previously possible. HDR does not increase a display's capabilities; rather, it makes better use of displays that have high brightness, contrast and color capabilities. Not all HDR displays have the same capabilities, so HDR content will look different depending on the display used. HDR first emerged for video in 2014. HDR10, HDR10+, Dolby Vision and HLG are common HDR formats. Some still picture formats also support HDR.

1. Description

Earlier technologies improved image quality by increasing the quantity of pixels (resolution and frame rate). HDR improves the quality of each pixel.[1]

Although CRTs are no longer used and modern displays are often far more capable, the SDR format is still based on, and limited to, the characteristics of CRTs. HDR overcomes those limits.[2]

The maximum luminance level that can be represented is limited to around 100 nits for SDR, while for HDR it is at least 1,000 nits and up to 10,000 nits, depending on the format used.[2][3] HDR also allows for lower black levels[4] and more saturated colors.[2] The most common SDR format is limited to the Rec. 709/sRGB gamut, while common HDR formats use Rec. 2100 color primaries, which form a Wide Color Gamut (WCG).[2][5]

Those are the technical limits of HDR formats. In practice, HDR content is often limited to a peak brightness of 1,000 or 4,000 nits and to DCI-P3 colors, even when it is stored in a more capable format.[6][7] Display capabilities vary, and no current display can reproduce the full range of brightness and colors that can be stored in HDR formats.

HDR video involves capture, production, content/encoding, and display.

1.1. Benefits

Main benefits:

- Highlights (i.e. the brightest parts of the image) can simultaneously be substantially brighter, more colorful and more detailed.[8]

- Lowlights (i.e. the darkest parts of the image) can be darker and more detailed.[8]

- The colorful parts of the image can be even more saturated. (This is achieved through WCG, which is common for HDR but not for SDR.)[9]

Other benefits:

- More realistic luminance variation between scenes such as sunlit, indoor and night scenes.[8]

- Better surface material identification.[8]

- Better depth perception, even with 2D imagery.[8]

The increased maximum brightness can be used to raise the brightness of small areas without raising the overall brightness of the image. Examples include bright reflections off shiny objects, bright stars in a dark night scene, bright and colorful fire or sunsets, and bright and colorful light-emitting objects.[8][9][10] Content creators can choose how they use the increased capabilities. They can also choose to stay within the limits of SDR even when the content is delivered in an HDR format.[10]

1.2. Creative Intents Preservation

For creative intent to be preserved, video formats require content to be displayed and viewed according to the relevant standards.

HDR formats that use dynamic metadata (such as Dolby Vision and HDR10+) can be viewed on any compatible display. The metadata allows the image to be adjusted to the display in accordance with the creative intent.[5] Other HDR formats (such as HDR10 and HLG) require the consumer display to have at least the same capabilities as the mastering display. On less capable displays, preservation of the creative intent is not guaranteed.[11]

For optimal quality, content should be viewed in a relatively dark environment.[12][13] Dolby Vision IQ and HDR10+ Adaptive adjust the content according to the ambient light.[14][15]

1.3. Other HDR Technologies

The HDR capture technique used for years in photography increases the dynamic range captured by cameras. The resulting photos previously had to be tone mapped to SDR. They can now be saved in an HDR format (such as HDR10) and displayed with a wider range of brightness.

Earlier high-dynamic-range formats, such as raw and logarithmic formats, were intended only for storage. Before reaching the consumer, they had to be converted to SDR (i.e. to a gamma curve). They can now also be converted to an HDR format (such as HDR10) and displayed with a wider range of brightness and color.

2. Formats

Since 2014, multiple HDR formats have emerged, including HDR10, HDR10+, Dolby Vision and HLG.[5][16] Some formats are royalty-free while others require a license, and some are more capable than others.

Dolby Vision and HDR10+ include dynamic metadata, while HDR10 and HLG do not.[5] Dynamic metadata is used to improve image quality on limited displays that cannot reproduce an HDR video the way it was created and delivered. It allows content creators to control how the image is adjusted.[17] When a less capable display is used and dynamic metadata is not available, the result depends on the display's own choices, and artistic intent might not be preserved.

2.1. HDR10

HDR10 Media Profile, more commonly known as HDR10, is an open HDR standard announced on 27 August 2015 by the Consumer Technology Association.[18] It is the most widespread of the HDR formats.[19]

HDR10 is technically limited to a maximum peak brightness of 10,000 nits, but HDR10 content is commonly mastered with a peak brightness of 1,000 to 4,000 nits.[6]

HDR10 is not backward compatible with SDR displays.

HDR10 lacks dynamic metadata.[20] On HDR10 displays that have a lower color volume than the HDR10 content (for example, a lower peak brightness), the HDR10 metadata provides information that helps adjust the content.[5] However, the metadata is static (it remains the same for the entire video) and does not say how the content should be adjusted. The decision is therefore left to the display, and the creative intent might not be preserved.[11]
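To illustrate why the result can differ from one display to another when only static metadata is available, the sketch below compares two hypothetical strategies a display might apply to content that is brighter than its own peak. Neither strategy is taken from the HDR10 specification or from any manufacturer; the function names, the knee position and the luminance values are assumptions chosen for illustration only.

```python
def hard_clip(nits, display_peak):
    """Strategy A (hypothetical): show luminance as mastered, clip at the display peak."""
    return min(nits, display_peak)


def knee_rolloff(nits, content_peak, display_peak, knee_fraction=0.75):
    """Strategy B (hypothetical): pass through below a knee, compress linearly above it."""
    if content_peak <= display_peak:
        return nits  # the display can show the content as mastered
    knee = knee_fraction * display_peak
    if nits <= knee:
        return nits
    # Map the range [knee, content_peak] linearly onto [knee, display_peak].
    scale = (display_peak - knee) / (content_peak - knee)
    return knee + (min(nits, content_peak) - knee) * scale


if __name__ == "__main__":
    content_peak = 1000.0  # assumed value, e.g. read from static metadata
    display_peak = 600.0   # assumed capability of the consumer display
    for nits in (100.0, 500.0, 800.0, 1000.0):
        print(nits, hard_clip(nits, display_peak),
              knee_rolloff(nits, content_peak, display_peak))
```

With hard clipping, every value above the display peak collapses to the same output, so highlight detail is lost. With the roll-off, highlights stay distinguishable but are shown darker than mastered. Both are defensible choices, which is why the same HDR10 content can look different on two displays.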

2.2. Dolby Vision

Dolby Vision is an end-to-end ecosystem for HDR video. It covers content creation, distribution and playback.[21] It is a proprietary solution from Dolby Laboratories that emerged in 2014.[22] It uses dynamic metadata and is technically capable of representing luminance levels up to 10,000 nits.[5] Dolby Vision requires the content creator's display to have a peak brightness of at least 1,000 nits.[7]

2.3. HDR10+

HDR10+, also known as HDR10 Plus, is an HDR video format announced on 20 April 2017.[23] It is the same as HDR10 but adds dynamic metadata developed by Samsung.[24][25][26] It is free to use for content creators, while some manufacturers pay an annual license fee of at most $10,000.[27] It is intended as an alternative to Dolby Vision without the fees.[19]

2.4. HLG (HLG10 Format)

HLG10, commonly referred to as HLG, is a video format that uses the HLG transfer function, a bit depth of 10 bits and the wide-gamut Rec. 2020 color space.[28] The HLG transfer function is backward compatible with SDR video,[29][30][31] but the Rec. 2020 color space is not compatible with the SDR color space (Rec. 709).
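The shape of the HLG transfer function can be sketched in a few lines. The code below is a minimal illustration of the HLG OETF and its inverse, using the constants published in ITU-R BT.2100; the function names are assumptions, and the sketch works on a single normalized value rather than on real video frames.

```python
import math

# Constants of the HLG OETF as published in ITU-R BT.2100.
A = 0.17883277
B = 1.0 - 4.0 * A                # 0.28466892
C = 0.5 - A * math.log(4.0 * A)  # approximately 0.55991073


def hlg_oetf(scene_light):
    """Map normalized linear scene light in [0, 1] to an HLG signal in [0, 1]."""
    if scene_light <= 1.0 / 12.0:
        # Square-root segment: behaves much like a conventional gamma curve.
        return math.sqrt(3.0 * scene_light)
    # Logarithmic segment: compresses the highlights.
    return A * math.log(12.0 * scene_light - B) + C


def hlg_inverse_oetf(signal):
    """Map an HLG signal in [0, 1] back to normalized linear scene light."""
    if signal <= 0.5:
        return signal * signal / 3.0
    return (math.exp((signal - C) / A) + B) / 12.0


if __name__ == "__main__":
    for light in (0.0, 0.01, 1.0 / 12.0, 0.5, 1.0):
        print(light, hlg_oetf(light))
```

The lower half of the signal range uses a square-root curve, which is close to what an SDR display expects, while the upper half uses a logarithmic curve for highlights. That hybrid shape is what the "backward compatible" claim refers to; it says nothing about color primaries.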

2.5. Other Formats

- PQ10: Same as HDR10 without the metadata[32]

- Technicolor Advanced HDR: An HDR format that aims to be backward compatible with SDR.[19] As of December 2020, there was no content in this format.[19]

- SL-HDR1 (Single-Layer HDR system Part 1) is an HDR standard that was jointly developed by STMicroelectronics, Philips International B.V., and Technicolor R&D France.[33] It was standardised as ETSI TS 103 433 in August 2016.[34] SL-HDR1 provides direct backward compatibility by using static metadata (SMPTE ST 2086) and dynamic metadata (in the SMPTE ST 2094-20 Philips and ST 2094-30 Technicolor formats) to reconstruct an HDR signal from an SDR video stream, which can be delivered over the SDR distribution networks and services already in place. SL-HDR1 allows HDR rendering on HDR devices and SDR rendering on SDR devices from a single-layer video stream.[34] The HDR reconstruction metadata can be added to either HEVC or AVC using a supplemental enhancement information (SEI) message.[34] Version 1.3.1 was published in March 2020.[35]

- SL-HDR2[36]

- SL-HDR3[37]

2.6. Comparison of Video Formats

| | HDR10 | HDR10+ | Dolby Vision | HLG10 |
|---|---|---|---|---|
| Developed by | CTA | Samsung | Dolby | NHK and BBC |
| Year | 2015 | 2017 | 2014 | 2015 |
| Cost | Free | Free (for content companies); yearly license (for manufacturers)[38] | Proprietary | Free |
| Technical characteristics | | | | |
| Metadata | Static (SMPTE ST 2086, MaxFALL, MaxCLL) | Dynamic | Dynamic (Dolby Vision L0, L1, L2 trim, L8 trim) | None |
| Transfer function | PQ | PQ | PQ (in most profiles);[39] HLG (in profile 8.4)[39] | HLG |
| Bit depth | 10 bit | 10 bit (or more) | 10 bit or 12 bit | 10 bit |
| Peak luminance (technical limit) | 10,000 nits | 10,000 nits | 10,000 nits | Variable |
| Peak luminance (content) | No rules; 1,000 to 4,000 nits common[6] | No rules; 1,000 to 4,000 nits common[6] | At least 1,000 nits;[40] 4,000 nits common[6] | 1,000 nits common[41][42] |
| Color primaries (technical limit) | Rec. 2020 | Rec. 2020 | Rec. 2020 | Rec. 2020 |
| Color primaries (content) | DCI-P3 (common)[5] | DCI-P3 (common)[5] | At least DCI-P3[40] | DCI-P3 (common)[5] |
| Backward compatibility | None | HDR10 | Depends on the profile used | |
| Notes | The PQ10 format is the same as HDR10 without the metadata[32] | | Technical characteristics of Dolby Vision depend on the profile used, but all profiles support the same Dolby Vision dynamic metadata.[39] | On SDR displays that do not support Rec. 2020 color primaries (WCG), HLG formats using Rec. 2020 color primaries will show a desaturated image with visible hue shifts.[32] |
| Sources | [5][6][43] | [5][6][44][45] | [5][6][39][40][46][47] | [5][32][41][42] |

3. Displays

Display devices capable of greater dynamic range have been researched for decades, primarily with flat panel technologies like plasma, SED/FED and OLED.

TV sets with enhanced dynamic range, and upscaling of existing SDR/LDR video and broadcast content with reverse tone mapping, have been anticipated since the early 2000s.[48][49] In 2016, HDR conversion of SDR video was released to market as Samsung's HDR+ (in LCD TV sets)[50] and Technicolor SA's HDR Intelligent Tone Management.[51]

As of 2018, high-end consumer-grade HDR displays can achieve 1,000 cd/m² of luminance, at least for a short duration or over a small portion of the screen, compared to 250-300 cd/m² for a typical SDR display.[52]

Video interfaces that support at least one HDR format include HDMI 2.0a, which was released in April 2015, and DisplayPort 1.4, which was released in March 2016.[53][54] On 12 December 2016, HDMI announced that Hybrid Log-Gamma (HLG) support had been added to the HDMI 2.0b standard.[55][56][57] HDMI 2.1 was officially announced on 4 January 2017 and added support for Dynamic HDR, which is dynamic metadata that supports changes scene by scene or frame by frame.[58][59]

3.1. Compatibility

As of 2020, no display is capable of rendering the full range of brightness and color of HDR formats.[28] A display is called an HDR display if it can accept HDR content and map it to its own display characteristics.[28] Thus, the HDR logo only provides information about content compatibility, not about display capability.

3.2. Certifications

Certifications have been made in order to give consumers information about the display rendering capability of a screen.

VESA DisplayHDR

The DisplayHDR standard from VESA is an attempt to make the differences in HDR specifications easier to understand for consumers, with standards mainly used in computer monitors and laptops. VESA defines a set of HDR levels; all of them must support HDR10, but not all are required to support 10-bit displays.[60] DisplayHDR is not an HDR format, but a tool to verify HDR formats and their performance on a given monitor. The most recent standard is DisplayHDR 1400 which was introduced in September 2019, with monitors supporting it released in 2020.[61][62] DisplayHDR 1000 and DisplayHDR 1400 are primarily used in professional work like video editing. Monitors with DisplayHDR 500 or DisplayHDR 600 certification provide a noticeable improvement over SDR displays, and are more often used for general computing and gaming.[63]

| Certification | Minimum peak luminance (cd/m²) | Range of color (color gamut) | Typical dimming technology | Maximum black level luminance (cd/m²) | Maximum backlight adjustment latency (video frames) |
|---|---|---|---|---|---|
| DisplayHDR 400 | 400 | sRGB | Screen-level | 0.4 | 8 |
| DisplayHDR 500 | 500 | WCG* | Zone-level | 0.1 | 8 |
| DisplayHDR 600 | 600 | WCG* | Zone-level | 0.1 | 8 |
| DisplayHDR 1000 | 1000 | WCG* | Zone-level | 0.05 | 8 |
| DisplayHDR 1400 | 1400 | WCG* | Zone-level | 0.02 | 8 |
| DisplayHDR 400 True Black | 400 | WCG* | Pixel-level | 0.0005 | 2 |
| DisplayHDR 500 True Black | 500 | WCG* | Pixel-level | 0.0005 | 2 |

*Wide Color Gamut, at least 90% of DCI-P3 in specified volume (peak luminance)
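The two luminance columns of the table above can be read as simple thresholds. The sketch below checks a measured peak luminance and black level against those two columns only. It is an illustration and not a certification procedure: the function name and sample measurements are assumptions, and the real VESA tests also cover color gamut, dimming, latency and specific test patterns that are not modelled here.

```python
# (tier, minimum peak luminance in cd/m², maximum black level in cd/m²),
# copied from the table above.
DISPLAYHDR_TIERS = [
    ("DisplayHDR 400", 400, 0.4),
    ("DisplayHDR 500", 500, 0.1),
    ("DisplayHDR 600", 600, 0.1),
    ("DisplayHDR 1000", 1000, 0.05),
    ("DisplayHDR 1400", 1400, 0.02),
    ("DisplayHDR 400 True Black", 400, 0.0005),
    ("DisplayHDR 500 True Black", 500, 0.0005),
]


def tiers_matching_luminance(peak, black):
    """Return the tiers whose two luminance thresholds are met (hypothetical helper)."""
    return [
        name
        for name, min_peak, max_black in DISPLAYHDR_TIERS
        if peak >= min_peak and black <= max_black
    ]


if __name__ == "__main__":
    # Assumed measurements for an imaginary zone-dimmed LCD monitor.
    print(tiers_matching_luminance(peak=650, black=0.08))
```

A display that meets the luminance thresholds of a tier may still fail that tier on other criteria, so the result is an upper bound at best.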

Other certifications

UHD Alliance certifications:

- Ultra HD Premium: at least 90% of DCI-P3 in area.[64]

- Mobile HDR Premium: for mobile devices.[64][65]

4. Technical Details

HDR is mainly achieved through the use of the PQ or HLG transfer function.[2][3] A Wide Color Gamut (WCG) is also commonly used along with HDR, up to the Rec. 2020 color primaries.[2] A bit depth of 10 or 12 bits is used so that banding is not visible across the extended brightness range. Additional metadata is sometimes used to handle the variety in display brightness, contrast and color. HDR video is defined in Rec. 2100.[3]

4.1. Transfer Function

SDR uses a gamma curve transfer function that is based on the characteristics of CRTs and is used to represent luminance levels up to around 100 nits.[2] HDR uses the newly developed PQ or HLG transfer functions instead of the traditional gamma curve.[2] If the gamma curve had been extended to 10,000 nits, it would have required a bit depth of 15 bits to avoid banding.[66] PQ and HLG are more efficient.

HDR transfer functions:

- Perceptual Quantizer (PQ), or SMPTE ST 2084,[67] is a transfer function developed for HDR that can represent luminance levels up to 10,000 cd/m².[68][69][70][71] It is the basis of HDR video formats (such as Dolby Vision,[39][72] HDR10[20] and HDR10+[44]) and is also used for HDR still picture formats.[73][74] PQ is not backward compatible with SDR. PQ can be encoded in 12 bits without showing any banding.

- Hybrid Log-Gamma (HLG) is a transfer function developed by NHK and the BBC.[75] It is backward compatible with SDR's gamma curve. It is the basis of an HDR format that is also named HLG, sometimes referred to as HLG10.[28] (The HLG10 format uses Rec. 2100 color primaries and is therefore not backward compatible with all SDR displays.[28]) The HLG transfer function is also used by other video formats, such as Dolby Vision profile 8.4, and for HDR still picture formats.[39][76][77]

Both PQ and HLG are royalty-free.[78]
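As a concrete illustration of PQ, the sketch below implements the PQ EOTF and its inverse with the constants published in SMPTE ST 2084 and ITU-R BT.2100. The function names are assumptions, and the code works on single floating-point values; quantization to 10-bit or 12-bit code values is left out.

```python
# Constants of the PQ transfer function (SMPTE ST 2084 / ITU-R BT.2100).
M1 = 2610 / 16384       # 0.1593017578125
M2 = 2523 / 4096 * 128  # 78.84375
C1 = 3424 / 4096        # 0.8359375
C2 = 2413 / 4096 * 32   # 18.8515625
C3 = 2392 / 4096 * 32   # 18.6875
PEAK_NITS = 10000.0     # luminance represented by a signal value of 1.0


def pq_encode(nits):
    """Inverse EOTF: absolute luminance in cd/m² to a non-linear signal in [0, 1]."""
    y = max(nits, 0.0) / PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def pq_decode(signal):
    """EOTF: non-linear signal in [0, 1] to absolute luminance in cd/m²."""
    s = signal ** (1.0 / M2)
    return PEAK_NITS * (max(s - C1, 0.0) / (C2 - C3 * s)) ** (1.0 / M1)


if __name__ == "__main__":
    for nits in (0.1, 1.0, 100.0, 1000.0, 10000.0):
        print(nits, pq_encode(nits))
```

Unlike a gamma curve, PQ maps signal values to absolute luminance, which is why a given code value always means the same number of nits regardless of the display. Roughly half of the signal range is spent on luminance below about 100 nits, where the eye is most sensitive to relative differences.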

4.2. Metadata

Static metadata

Static HDR metadata gives information about the whole video.

- SMPTE ST 2086 or MDCV (Mastering Display Color Volume): It describes the color volume of the mastering display (i.e. the color primaries, the white point and the maximum and minimum luminance). It has been defined by SMPTE[11] and also in AVC[79] and HEVC[80] standards.

- MaxFALL (Maximum Frame Average Light Level)

- MaxCLL (Maximum Content Light Level)

This metadata does not describe how the HDR content should be adapted to HDR consumer displays that have a lower color volume (i.e. peak brightness, contrast and color gamut) than the content.[11][80]

The values of MaxFALL and MaxCLL should be calculated from the video stream itself (not including black borders for MaxFALL), based on how the scenes appear on the mastering display. Setting them arbitrarily is not recommended.[81]
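A calculation of this kind can be sketched as follows. The code assumes the commonly used definition in which the light level of a pixel is the largest of its linear R, G and B components in cd/m², MaxCLL is the highest pixel level in the whole stream, and MaxFALL is the highest per-frame average. The data layout, function names and the `active_rows` parameter are assumptions made for this illustration, not part of any specification.

```python
def frame_light_levels(rows):
    """Return (brightest pixel level, average pixel level) for a list of pixel rows.

    Each pixel is an (R, G, B) tuple of linear light values in cd/m².
    The light level of a pixel is taken as max(R, G, B).
    """
    levels = [max(pixel) for row in rows for pixel in row]
    return max(levels), sum(levels) / len(levels)


def max_cll_and_fall(frames, active_rows=None):
    """Compute (MaxCLL, MaxFALL) over a sequence of frames.

    `active_rows` is an optional (first, last_exclusive) row range that
    leaves letterbox bars out of the frame average.
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        peak, _ = frame_light_levels(frame)
        rows = frame if active_rows is None else frame[active_rows[0]:active_rows[1]]
        _, average = frame_light_levels(rows)
        max_cll = max(max_cll, peak)
        max_fall = max(max_fall, average)
    return max_cll, max_fall


if __name__ == "__main__":
    black = (0, 0, 0)
    # Two tiny 4x2 frames with black bars in the first and last row.
    frame_a = [[black, black], [(100, 50, 20), (400, 300, 200)],
               [(50, 50, 50), (250, 1000, 10)], [black, black]]
    frame_b = [[black, black], [(600, 600, 600), (600, 600, 600)],
               [(600, 600, 600), (200, 200, 200)], [black, black]]
    print(max_cll_and_fall([frame_a, frame_b], active_rows=(1, 3)))
```

Including the black bars would lower every frame average and therefore understate MaxFALL, which is one reason to exclude them.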

Dynamic metadata

Dynamic metadata is specific to each frame or each scene of the video.

The dynamic metadata of Dolby Vision, HDR10+ and SMPTE ST 2094 describes the color volume transform that should be applied to content shown on displays whose color volume differs from that of the mastering display. The transform is optimized for each scene and each display. It allows the creative intent to be preserved even on consumer displays that have a limited color volume.

SMPTE ST 2094, or Dynamic Metadata for Color Volume Transform (DMCVT), is a standard for dynamic metadata published by SMPTE in 2016 in six parts.[82] It is carried in HEVC SEI, ETSI TS 103 433 and CTA 861-G.[83] It includes four applications:

- ST 2094-10 (from Dolby), used for Dolby Vision.

- ST 2094-20 (from Philips). Colour Volume Reconstruction Information (CVRI) is based on ST 2094-20.[34]

- ST 2094-30 (by Technicolor). Colour Remapping Information (CRI) conforms to ST 2094-30 and is standardized in HEVC.[34]

- ST 2094-40 (by Samsung), used for HDR10+.

ETSI TS 103 572: A technical specification published in October 2020 by ETSI for HDR signaling and carriage of ST 2094-10 (Dolby Vision) metadata.[84]

4.3. Chromaticity

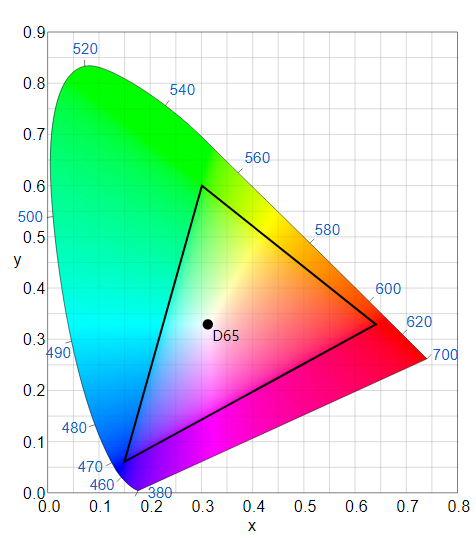

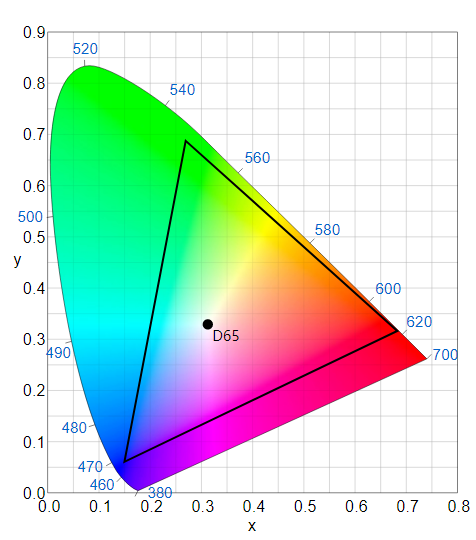

SDR for HD video uses the system chromaticity (chromaticity of the color primaries and white point) specified in Rec. 709 (the same as sRGB).[85] SDR for SD video used many different primaries, as specified in BT.601 and SMPTE 170M.

HDR is commonly associated with a Wide Color Gamut (a system chromaticity wider than BT.709). Rec. 2100 (HDR-TV) uses the same system chromaticity as Rec. 2020 (UHDTV).[86][87] HDR formats such as HDR10, HDR10+, Dolby Vision and HLG also use Rec. 2020 chromaticities.

HDR content is commonly graded on a DCI-P3 display[5][88] and then contained in an HDR format that uses Rec. 2020 color primaries.

Chromaticity coordinates (CIE 1931):

| Color space | Red xR | Red yR | Green xG | Green yG | Blue xB | Blue yB | White point | xW | yW |
|---|---|---|---|---|---|---|---|---|---|
| Rec. 709[85] and sRGB | 0.64 | 0.33 | 0.30 | 0.60 | 0.15 | 0.06 | D65 | 0.3127 | 0.3290 |
| DCI-P3[89][90] | 0.680 | 0.320 | 0.265 | 0.690 | 0.150 | 0.060 | P3-D65 (Display) | 0.3127 | 0.3290 |
| | | | | | | | P3-DCI (Theater) | 0.314 | 0.351 |
| | | | | | | | P3-D60 (ACES Cinema) | 0.32168 | 0.33767 |
| Rec. 2020[87] and Rec. 2100[86] | 0.708 | 0.292 | 0.170 | 0.797 | 0.131 | 0.046 | D65 | 0.3127 | 0.3290 |

Figures: Rec. 709 and sRGB (SDR); DCI-P3 (common HDR content); Rec. 2020 and Rec. 2100 (HDR technical limit).
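One rough way to compare these gamuts is to compute the area of the triangle that the three primaries span in the CIE 1931 xy diagram. The sketch below does this with the primaries from the table above; the dictionary and function names are assumptions. The xy diagram is not perceptually uniform, so the ratios are only a coarse indication of relative size, not a measure of how many more colors a viewer perceives.

```python
# Red, green and blue primaries as (x, y) pairs, copied from the table above.
PRIMARIES = {
    "Rec. 709 / sRGB": ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06)),
    "DCI-P3": ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060)),
    "Rec. 2020 / Rec. 2100": ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046)),
}


def triangle_area(red, green, blue):
    """Area of the gamut triangle in the xy plane (shoelace formula)."""
    (xr, yr), (xg, yg), (xb, yb) = red, green, blue
    return abs(xr * (yg - yb) + xg * (yb - yr) + xb * (yr - yg)) / 2.0


if __name__ == "__main__":
    reference = triangle_area(*PRIMARIES["Rec. 2020 / Rec. 2100"])
    for name, primaries in PRIMARIES.items():
        area = triangle_area(*primaries)
        print(f"{name}: area {area:.4f}, {area / reference:.1%} of Rec. 2020")
```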

4.4. Bit Depth

Because of the increased dynamic range, HDR content needs a greater bit depth than SDR to avoid banding. While SDR uses a bit depth of 8 or 10 bits,[85] HDR uses 10 or 12 bits.[86] This, combined with a more efficient transfer function (PQ or HLG), is enough to avoid banding.[91][92]
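The effect of bit depth can be made tangible by looking at how far apart two adjacent code values are in luminance. The sketch below uses the PQ EOTF constants from SMPTE ST 2084 and computes the relative luminance step between neighbouring full-range code values near a chosen luminance. The helper names and the choice of full-range coding are simplifying assumptions; broadcast video normally uses narrow-range code values.

```python
# PQ EOTF constants (SMPTE ST 2084 / ITU-R BT.2100).
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal):
    """Non-linear PQ signal in [0, 1] to luminance in cd/m²."""
    s = signal ** (1.0 / M2)
    return 10000.0 * (max(s - C1, 0.0) / (C2 - C3 * s)) ** (1.0 / M1)


def relative_step(code, bit_depth):
    """Relative luminance increase from `code` to `code + 1` (full-range codes)."""
    max_code = (1 << bit_depth) - 1
    low = pq_eotf(code / max_code)
    high = pq_eotf((code + 1) / max_code)
    return (high - low) / low


def step_near(nits, bit_depth):
    """Relative step at the highest code value whose luminance does not exceed `nits`."""
    max_code = (1 << bit_depth) - 1
    code = max(c for c in range(1, max_code) if pq_eotf(c / max_code) <= nits)
    return relative_step(code, bit_depth)


if __name__ == "__main__":
    for bits in (10, 12):
        print(bits, "bits:", f"{step_near(100.0, bits):.3%}", "step near 100 cd/m²")
```

Each additional bit halves the step between adjacent code values, so a 12-bit signal has steps about a quarter the size of a 10-bit signal. Smaller steps are less likely to show up as visible bands in smooth gradients.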

4.5. Signal Format

Rec. 2100 specifies the use of the RGB, YCbCr or ICtCp signal formats for HDR-TV.[86]

ICtCp is a color representation designed by Dolby for HDR and wide color gamut (WCG)[93] and standardized in Rec. 2100.[86]

IPTPQc2 with reshaping is a proprietary format by Dolby that is similar to ICtCp.[94] It is used by Dolby Vision profile 5.[94]
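Of the three signal formats, the non-constant-luminance Y'CbCr form is the simplest to show. The sketch below converts non-linear R'G'B' values (already encoded with PQ or HLG) to Y'CbCr using the Rec. 2020 luma coefficients, which Rec. 2100 reuses. The function names are assumptions, and quantization to 10-bit or 12-bit narrow-range code values is omitted.

```python
# Luma coefficients of Rec. 2020, also used for Y'CbCr in Rec. 2100.
KR = 0.2627
KB = 0.0593
KG = 1.0 - KR - KB  # 0.6780


def rgb_to_ycbcr(r, g, b):
    """Convert non-linear R'G'B' in [0, 1] to (Y', Cb, Cr).

    Y' lies in [0, 1]; Cb and Cr lie in [-0.5, 0.5].
    """
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2.0 * (1.0 - KB))  # divisor is 1.8814
    cr = (r - y) / (2.0 * (1.0 - KR))  # divisor is 1.4746
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr."""
    r = y + 2.0 * (1.0 - KR) * cr
    b = y + 2.0 * (1.0 - KB) * cb
    g = (y - KR * r - KB * b) / KG
    return r, g, b


if __name__ == "__main__":
    print(rgb_to_ycbcr(1.0, 1.0, 1.0))  # white: chroma components near zero
    print(rgb_to_ycbcr(1.0, 0.0, 0.0))  # pure red: Cr at its maximum
```

Separating a luma component from two chroma components allows chroma subsampling such as 4:2:0. ICtCp follows the same idea with a different, more involved transform and is not shown here.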

4.6. Dual Layer Video

Some Dolby Vision profiles use a dual-layer video composed of a base layer and an enhancement layer.[94][95] Depending on the Dolby Vision profile, the base layer can be backward compatible with SDR, HDR10, HLG or Blu-ray, or with no other video format.[94]

ETSI GS CCM 001 describes a Compound Content Management functionality for a dual layer HDR system.[96]

4.7. Guidelines and Recommendations

ITU-R Rec. 2100

Rec. 2100 is a technical recommendation by ITU-R for the production and distribution of HDR content using 1080p or UHD resolution, 10-bit or 12-bit color, the HLG or PQ transfer functions, the Rec. 2020 wide color gamut, and YCbCr or ICtCp as the color space.[12][97]

Ultra HD Forum Guidelines

UHD Phase A is a set of guidelines from the Ultra HD Forum for the distribution of SDR and HDR content using Full HD 1080p and 4K UHD resolutions. It requires a color depth of 10 bits per sample, a color gamut of Rec. 709 or Rec. 2020, a frame rate of up to 60 fps, a display resolution of 1080p or 2160p, and either standard dynamic range (SDR) or high dynamic range that uses the Hybrid Log-Gamma (HLG) or Perceptual Quantizer (PQ) transfer functions.[98] UHD Phase A defines HDR as having a dynamic range of at least 13 stops (2^13 = 8192:1) and WCG as a color gamut that is wider than Rec. 709.[98] UHD Phase A consumer devices are compatible with HDR10 requirements and can process the Rec. 2020 color space and HLG or PQ at 10 bits.
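The relation between stops and contrast ratio used in this definition is a base-2 logarithm: each stop doubles the luminance. A minimal sketch, with assumed function names and hypothetical display values, is:

```python
import math


def stops_to_contrast(stops):
    """Contrast ratio (brightest : darkest) spanned by a number of stops."""
    return 2.0 ** stops


def contrast_to_stops(brightest, darkest):
    """Number of stops between two luminance levels given in the same unit."""
    return math.log2(brightest / darkest)


if __name__ == "__main__":
    print(stops_to_contrast(13))            # the 13-stop threshold quoted above
    print(contrast_to_stops(1000.0, 0.05))  # hypothetical display: peak and black in cd/m²
```

The second call uses made-up peak and black levels for an imaginary display; on real displays the two values are often measured under different conditions, so the resulting figure should be read with care.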

UHD Phase B will add support for 120 fps (and 120/1.001 fps), 12-bit PQ in HEVC Main12 (which will be enough for 0.0001 to 10,000 nits), Dolby AC-4 and MPEG-H 3D Audio, and IMAX sound in DTS:X (without LFE). It will also add ITU's ICtCp and Color Remapping Information (CRI).

5. HDR in Still Images

5.1. HDR Image Formats

The following image formats are compatible with HDR (Rec.2100 color space, PQ and HLG transfer functions, Rec.2100/Rec.2020 color primaries):

- HEIC (HEVC codec in HEIF file format)

- AVIF (AV1 codec in HEIF file format), which also supports ICtCp[73]

- JPEG XL[99]

- HSP (a format used by Panasonic cameras for photo capture in HDR with the HLG transfer function)[76]

- JPEG 2000, PNG, WebP and JPEG, which also support HDR through the use of an ICC profile[100][101]

5.2. Adoption

Panasonic: Panasonic's S-series cameras (including the Lumix S1, S1R, S1H and S5) can capture photos in HDR using the HLG transfer function and output them in the HSP file format.[76][102][103] The captured HDR pictures can be viewed in HDR by connecting the camera to an HLG-compliant display with an HDMI cable.[76][102]

Canon: The EOS-1D X Mark III and EOS R5 can capture still images in the Rec. 2100 color space using the PQ transfer function, the HEIC format (HEVC codec in HEIF file format), the Rec. 2020 color primaries, a bit depth of 10 bits and 4:2:2 YCbCr subsampling.[74][104][105][106][107] The captured HDR pictures can be viewed in HDR by connecting the camera to an HDR display with an HDMI cable.[107] Captured HDR pictures can also be converted to SDR JPEG (sRGB color space) and then viewed on any standard display.[107] Canon refers to those SDR pictures as "HDR PQ-like JPEG". Canon's Digital Photo Professional software can show the captured HDR pictures in HDR on HDR displays or in SDR on SDR displays.[107][108] It can also convert HDR PQ pictures to SDR sRGB JPEG.[109]

Sony: The Sony α7S III and α1 cameras can capture HDR photos in the Rec. 2100 color space with the HLG transfer function, the HEIF format, Rec. 2020 color primaries, a bit depth of 10 bits and 4:2:2 or 4:2:0 subsampling.[77][110][111][112] The captured HDR pictures can be viewed in HDR by connecting the camera to an HLG-compliant display with an HDMI cable.[112]

Qualcomm: The Snapdragon 888 mobile SoC allows the capture of 10-bit HDR HEIF still photos.[113][114]

6. History

6.1. Before HDR Video

In February and April 1990, Georges Cornuéjols introduced the first real-time HDR camera combining two successively[115] or simultaneously[116]-captured images.

In 1991, Hymatom, a licensee of Cornuéjols, introduced the first commercial video camera that used consumer-grade sensors and cameras to capture multiple images with different exposures in real time and produce an HDR video image.

Also in 1991, Cornuéjols introduced the principle of non linear image accumulation HDR+ to increase the camera sensitivity:[117] in low-light environments, several successive images are accumulated, increasing the signal-to-noise ratio.

Later, in the early 2000s, several scholarly research efforts used consumer-grade sensors and cameras.[118] A few companies such as RED and Arri have been developing digital sensors capable of a higher dynamic range.[119][120] RED EPIC-X can capture time-sequential HDRx[121] images with a user-selectable 1–3 stops of additional highlight latitude in the "x" channel. The "x" channel can be merged with the normal channel in post production software. The Arri Alexa camera uses a dual gain architecture to generate an HDR image from two exposures captured at the same time.[122]

With the advent of low-cost consumer digital cameras, many amateurs began posting tone mapped HDR time-lapse videos on the Internet, essentially a sequence of still photographs in quick succession. In 2010, the independent studio Soviet Montage produced an example of HDR video from disparately exposed video streams using a beam splitter and consumer grade HD video cameras.[123] Similar methods have been described in the academic literature in 2001 and 2007.[124][125]

Modern movies have often been filmed with cameras featuring a higher dynamic range, and legacy movies can be converted, even if manual intervention is needed for some frames (as when black-and-white films are converted to color). Special effects, especially those that mix real and synthetic footage, also require both HDR shooting and rendering. HDR video is also needed in applications that demand high accuracy in capturing the temporal aspects of changes in a scene. This is important in the monitoring of some industrial processes such as welding, in predictive driver assistance systems in the automotive industry, in surveillance video systems, and in other applications. HDR video can also be considered as a way to speed up image acquisition in applications that need a large number of static HDR images, for example in image-based methods in computer graphics.

OpenEXR was created in 1999 by Industrial Light & Magic (ILM) and released in 2003 as an open source software library.[126][127] OpenEXR is used for film and television production.[127]

Academy Color Encoding System (ACES) was created by the Academy of Motion Picture Arts and Sciences and released in December 2014.[128] ACES is a complete color and file management system that works with almost any professional workflow, and it supports both HDR and wide color gamut (WCG). More information can be found at https://www.ACESCentral.com.[128]

6.2. HDR Video

In January 2014, Dolby Laboratories announced Dolby Vision.[16]

The first version of the HEVC specification incorporates the Main 10 profile, which supports 10 bits per sample.[129]

On 8 April 2015, The HDMI Forum released version 2.0a of the HDMI Specification to enable transmission of HDR. The Specification references CEA-861.3, which in turn references the Perceptual Quantizer (PQ), which was standardized as SMPTE ST 2084.[53] The previous HDMI 2.0 version already supported the Rec. 2020 color space.[130]

On 24 June 2015, Amazon Video was the first streaming service to offer HDR video using HDR10 Media Profile video.[131][132]

On 27 August 2015, Consumer Technology Association announced HDR10.[18]

On 17 November 2015, Vudu announced that they had started offering titles in Dolby Vision.[133]

On 1 March 2016, the Blu-ray Disc Association released Ultra HD Blu-ray with mandatory support for HDR10 Media Profile video and optional support for Dolby Vision.[134]

On 9 April 2016, Netflix started offering both HDR10 Media Profile video and Dolby Vision.[135]

On 6 July 2016, the International Telecommunication Union (ITU) announced Rec. 2100 that defines two HDR transfer functions—HLG and PQ.[12][97]

On 29 July 2016, SKY Perfect JSAT Group announced that on 4 October it would start the world's first 4K HDR broadcasts using HLG.[136]

On 9 September 2016, Google announced Android TV 7.0, which supports Dolby Vision, HDR10, and HLG.[137][138]

On 26 September 2016, Roku announced that the Roku Premiere+ and Roku Ultra will support HDR using HDR10.[139]

On 7 November 2016, Google announced that YouTube would stream HDR videos that can be encoded with HLG or PQ.[140][141]

On 17 November 2016, the Digital Video Broadcasting (DVB) Steering Board approved UHD-1 Phase 2 with an HDR solution that supports Hybrid Log-Gamma (HLG) and Perceptual Quantizer (PQ).[142][143] The specification was published as DVB Bluebook A157 and was to be published by ETSI as TS 101 154 v2.3.1.[142][143]

On 2 January 2017, LG Electronics USA announced that all of LG's SUPER UHD TV models now support a variety of HDR technologies, including Dolby Vision, HDR10, and HLG (Hybrid Log Gamma), and are ready to support Advanced HDR by Technicolor.

On 20 April 2017, Samsung and Amazon announced HDR10+.[23]

On 12 September 2017, Apple announced the Apple TV 4K with support for HDR10 and Dolby Vision, and that the iTunes Store would sell and rent 4K HDR content.[144]

On 13 October 2020, Apple announced the iPhone 12 and iPhone 12 Pro series, the first smartphones that can record and edit video in Dolby Vision directly in the camera roll[145] on a frame-by-frame basis. The iPhone uses the HLG-compatible profile 8 of Dolby Vision[146] with only L1 trim.

The content is sourced from: https://handwiki.org/wiki/Engineering:High_Dynamic_Range_(display_and_formats)

References

- Morrison, Geoffrey. "HDR is a big step in TV picture quality. Here's why." (in en). https://www.cnet.com/how-to/what-is-hdr-for-tvs-and-why-should-you-care/.

- "HDR (High Dynamic Range) on TVs explained". https://www.flatpanelshd.com/focus.php?subaction=showfull&id=1435052975.

- "BT.2100 : Image parameter values for high dynamic range television for use in production and international programme exchange". https://www.itu.int/rec/R-REC-BT.2100.

- "ITU-R Report BT.2390 - High dynamic range television for production and international programme exchange" (in en-US). https://www.itu.int:443/en/publications/ITU-R/Pages/publications.aspx.

- "Understanding HDR10 and Dolby Vision" (in en-US). https://www.gsmarena.com/understanding_hdr10_and_dolby_vision-news-46151.php.

- "HDR10 vs HDR10+ vs Dolby Vision: Which is better?" (in en-US). https://www.rtings.com/tv/learn/hdr10-vs-dolby-vision.

- "Dolby Vision for Content Creators - Workflows" (in en). https://professional.dolby.com/content-creation/dolby-vision-for-content-creators/2.

- "ITU-R Report BT.2390 - High dynamic range television for production and international programme exchange" (in en-US). https://www.itu.int/pub/R-REP-BT.2390.

- "HDR (High Dynamic Range) on TVs explained". https://www.flatpanelshd.com/focus.php?subaction=showfull&id=1435052975.

- "We need to talk about HDR". https://www.flatpanelshd.com/focus.php?subaction=showfull&id=1602751285.

- "ST 2086:2018 - SMPTE Standard - Mastering Display Color Volume Metadata Supporting High Luminance and Wide Color Gamut Images". ST 2086:2018: 1–8. April 2018. doi:10.5594/SMPTE.ST2086.2018. https://ieeexplore.ieee.org/document/8353899.

- "BT.2100 : Image parameter values for high dynamic range television for use in production and international programme exchange". International Telecommunication Union. 4 July 2016. https://www.itu.int/rec/R-REC-BT.2100.

- "BT.2035 : A reference viewing environment for evaluation of HDTV program material or completed programmes". https://www.itu.int/rec/R-REC-BT.2035/en.

- Roberts, Becky (22 January 2020). "Dolby Vision IQ: everything you need to know" (in en). https://www.whathifi.com/advice/dolby-vision-iq-everything-you-need-to-know.

- Roberts, Becky (4 January 2021). "Samsung HDR10+ Adaptive adjusts HDR pictures based on room lighting – yes, like Dolby Vision IQ" (in en). https://www.whathifi.com/news/samsung-hdr10-adaptive-adjusts-picture-based-on-room-lighting-yes-like-dolby-vision-iq.

- "CES 2014: Dolby Vision promises a brighter future for TV, Netflix and Xbox Video on board" (in en). 6 January 2014. https://www.expertreviews.co.uk/go/27453.

- "Dolby Vision and Independent Filmmaking" (in en-US). https://www.mysterybox.us/blog/2019/3/18/dolby-vision-and-independent-filmmaking-e75yg.

- Rachel Cericola (27 August 2015). "What Makes a TV HDR-Compatible? The CEA Sets Guidelines". Big Picture Big Sound. http://www.bigpicturebigsound.com/What-Makes-a-TV-HDR-Compatible-The-CEA-Sets-Guidelines.shtml.

- "HDR TV: What it is and why your next TV should have it". Designtechnica Corporation. 19 December 2020. https://www.digitaltrends.com/home-theater/what-is-hdr-tv/.

- Consumer Technology Association (27 August 2015). "CEA Defines 'HDR Compatible' Displays". https://www.cta.tech/News/Press-Releases/2015/August/CEA-Defines-‘HDR-Compatible’-Displays.aspx.

- Dolby. "Dolby Vision Whitepaper - An introduction to Dolby Vision". https://professional.dolby.com/siteassets/pdfs/dolby-vision-whitepaper_an-introduction-to-dolby-vision_0916.pdf.

- "CES 2014: Dolby Vision promises a brighter future for TV, Netflix and Xbox Video on board" (in en). https://www.expertreviews.co.uk/go/27453.

- "Samsung and Amazon Video Deliver Next Generation HDR Video Experience with Updated Open Standard HDR10+". Samsung. 20 April 2017. https://news.samsung.com/global/samsung-and-amazon-video-deliver-next-generation-hdr-video-experience-with-updated-open-standard-hdr10.

- John Laposky (20 April 2017). "Samsung, Amazon Video Team To Deliver Updated Open Standard HDR10+". Twice. http://www.twice.com/news/tv/samsung-amazon-video-team-deliver-updated-open-standard-hdr10/64840.

- Dynamic Metadata for Color Volume Transform — Application #4. September 2016. pp. 1–26. doi:10.5594/SMPTE.ST2094-40.2016. ISBN 978-1-68303-048-5. https://dx.doi.org/10.5594%2FSMPTE.ST2094-40.2016

- "SMPTE ST 2094 and Dynamic Metadata". Society of Motion Picture and Television Engineers. https://www.smpte.org/sites/default/files/2017-01-12-ST-2094-Borg-V2-Handout.pdf.

- "License Program - HDR10+". https://hdr10plus.org/license-program/.

- "Ultra HD Forum Guidelines v2.4". 19 October 2020. https://ultrahdforum.org/wp-content/uploads/UHD-Guidelines-V2.4-Fall2020-1.pdf.

- Morrison, Geoffrey. "What is HLG? Hybrid log gamma. Say what?" (in en). https://www.cnet.com/news/all-about-hlg-what-hybrid-log-gamma-means-for-your-tv/.

- St. Leger, Henry. "Hybrid Log Gamma: everything you need to know about HLG HDR" (in en). https://www.techradar.com/news/hybrid-log-gamma-what-you-need-to-know-about-hlg.

- "High Dynamic Range Television and Hybrid Log-Gamma". https://www.bbc.co.uk/rd/projects/high-dynamic-range.

- Ultra HD Forum (19 October 2020). "Ultra HD Forum Guidelines v2.4". https://ultrahdforum.org/wp-content/uploads/UHD-Guidelines-V2.4-Fall2020-1.pdf.

- "High-Performance Single Layer Directly Standard Dynamic Range (SDR) Compatible High Dynamic Range (HDR) System for use in Consumer Electronics devices (SL-HDR1)". ETSI. https://portal.etsi.org/webapp/WorkProgram/Report_WorkItem.asp?wki_id=49423.

- "ETSI Technical Specification TS 103 433 V1.1.1". ETSI. 3 August 2016. http://www.etsi.org/deliver/etsi_ts/103400_103499/103433/01.01.01_60/ts_103433v010101p.pdf.

- ETSI (March 2020). "ETSI TS 103 433-1 V1.3.1". https://www.etsi.org/deliver/etsi_ts/103400_103499/10343301/01.03.01_60/ts_10343301v010301p.pdf.

- ETSI (March 2021). "ETSI TS 103 433-2 V1.2.1". https://www.etsi.org/deliver/etsi_ts/103400_103499/10343302/01.02.01_60/ts_10343302v010201p.pdf.

- ETSI (March 2020). "ETSI TS 103 433-3 V1.1.1". https://www.etsi.org/deliver/etsi_ts/103400_103499/10343303/01.01.01_60/ts_10343303v010101p.pdf.

- "License Program - HDR10+". https://hdr10plus.org/license-program/.

- Dolby. "Dolby Vision Profiles and Levels Version 1.3.2 - Specification". https://professional.dolby.com/siteassets/content-creation/dolby-vision-for-content-creators/dolbyvisionprofileslevels_v1_3_2_2019_09_16.pdf.

- "Dolby Vision for Content Creators" (in en). https://professional.dolby.com/content-creation/dolby-vision-for-content-creators/3.

- "Guidance for operational practices in HDR television production". https://www.itu.int/pub/R-REP-BT.2408-3-2019.

- "BT.2100 : Image parameter values for high dynamic range television for use in production and international programme exchange". https://www.itu.int/rec/R-REC-BT.2100.

- Consumer Technology Association (27 August 2015). "CEA Defines 'HDR Compatible' Displays". https://www.cta.tech/News/Press-Releases/2015/August/CEA-Defines-‘HDR-Compatible’-Displays.aspx.

- HDR10+ Technologies, LLC (4 September 2019). "HDR10+ System Whitepaper". https://hdr10plus.org/wp-content/uploads/2019/08/HDR10_WhitePaper.pdf.

- Archer, John. "Samsung And Amazon Just Made The TV World Even More Confusing" (in en). https://www.forbes.com/sites/johnarcher/2017/04/20/samsung-and-amazon-just-made-the-tv-world-even-more-confusing/.

- Dolby. "Dolby Vision Whitepaper - An introduction to Dolby Vision". https://professional.dolby.com/siteassets/pdfs/dolby-vision-whitepaper_an-introduction-to-dolby-vision_0916.pdf.

- Pocket-lint (2020-10-13). "What is Dolby Vision? Dolby's own HDR tech explained" (in en-gb). https://www.pocket-lint.com/tv/news/dolby/139947-what-is-dolby-vision-dolby-s-very-own-hdr-tv-tech-explained.

- Karol Myszkowski; Rafal Mantiuk; Grzegorz Krawczyk (2008). High Dynamic Range Video (First ed.). Morgan & Claypool. p. 8. ISBN 9781598292145. https://books.google.com/books?id=PVPggnBIC-wC&q=viable. Retrieved 11 October 2020.

- "Ldr2Hdr: on-the-fly reverse tone mapping of legacy video and photographs". SIGGRAPH 2007 paper. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.228.7780.

- Steven Cohen (27 July 2016). "Samsung Releases HDR+ Firmware Update for 2016 SUHD TV Lineup". High-Def Digest. http://www.highdefdigest.com/news/show/Samsung/hdr/high-dynamic-range/updates/Firmware/4K/Ultra_HD/suhd-tvs/Quantum_Dots/samsung-releases-hdr-firmware-update-for-2016-suhd-tv-lineup/33753.

- Carolyn Giardina (11 April 2016). "NAB: Technicolor, Vubiquity to Unwrap HDR Up-Conversion and TV Distribution Service". The Hollywood Reporter. http://www.hollywoodreporter.com/behind-screen/nab-2016-technicolor-vubiquity-unwrap-882261.

- "Summary of DisplayHDR Specs". https://displayhdr.org/performance-criteria/.

- "HDMI 2.0a Spec Released, HDR Capability Added". Twice. 8 April 2015. http://www.twice.com/news/trade-groups/hdmi-20a-spec-released-hdr-capability-added/56694.

- "VESA Updates Display Stream Compression Standard to Support New Applications and Richer Display Content". PRNewswire. 27 January 2016. http://www.prnewswire.com/news-releases/vesa-updates-display-stream-compression-standard-to-support-new-applications-and-richer-display-content-300210420.html.

- "Introducing HDMI 2.0b". HDMI.org. http://www.hdmi.org/manufacturer/hdmi_2_0/index.aspx.

- Rasmus Larsen (12 December 2016). "HDMI 2.0b standard gets support for HLG HDR". flatpanelshd. http://www.flatpanelshd.com/news.php?subaction=showfull&id=1481526782.

- Andrew Cotton (31 December 2016). "2016 in Review - High Dynamic Range". BBC. http://www.bbc.co.uk/rd/blog/2016-12-bbc-high-dynamic-range-2016.

- "HDMI Forum announces version 2.1 of the HDMI specification". HDMI.org. 4 January 2017. http://www.hdmi.org/press/press_release.aspx?prid=145.

- "Philips HDR technology". Philips. http://www.ip.philips.com/data/downloadables/1/9/7/9/philips_hdr_white_paper.pdf.

- "DisplayHDR – The Higher Standard for HDR Monitors". https://displayhdr.org/.

- Coberly, Cohen (5 September 2019). "VESA's DisplayHDR specification now covers ultra-bright 1,400-nit monitors - Meet DisplayHDR 1400". https://www.techspot.com/news/81773-vesa-displayhdr-specification-now-covers-ultra-bright-1400.html.

- Byford, Sam (10 January 2020). "This year's monitors will be faster, brighter, and curvier than ever". https://www.theverge.com/circuitbreaker/2020/1/10/21059418/ces-2020-best-pc-monitors-specs-1000r-mini-led-360hz-curved-variable-refresh.

- Harding, Scharon (2021-01-15). "How to Choose the Best HDR Monitor: Make Your Upgrade Worth It - Understand HDR displays and how to find the best one for you". https://www.tomshardware.com/features/best-hdr-monitor-how-to-choose.

- "UHD Alliance". https://alliance.experienceuhd.com/.

- Pocket-lint (2021-01-26). "Mobile HDR: Dolby Vision, HDR10 and Mobile HDR Premium explained" (in en-gb). https://www.pocket-lint.com/phones/news/dolby/138387-mobile-hdr-dolby-vision-hdr10-and-mobile-hdr-premium-explained.

- Adam Wilt (20 February 2014). "HPA Tech Retreat 2014 – Day 4". DV Info Net. http://www.dvinfo.net/article/trip_reports/hpa-tech-retreat-2014-day-4.html.

- "ST 2084:2014". IEEE Xplore. doi:10.5594/SMPTE.ST2084.2014. https://ieeexplore.ieee.org/document/7291452.

- Dolby Laboratories. "Dolby Vision Whitepaper". http://www.dolby.com/us/en/technologies/dolby-vision/dolby-vision-white-paper.pdf.

- Eilertsen, Gabriel (2018). The high dynamic range imaging pipeline. Linköping University Electronic Press. pp. 30–31. ISBN 9789176853023. https://books.google.com/books?id=LCtbDwAAQBAJ&pg=PA30. Retrieved 22 August 2020.

- Chris Tribbey (10 July 2015). "HDR Special Report: SMPTE Standards Director: No HDR Format War, Yet". MESA. http://mesalliance.org/blog/me-daily/2015/07/10/smpte-standards-director-no-hdr-format-war-yet/.

- Bryant Frazer (9 June 2015). "Colorist Stephen Nakamura on Grading Tomorrowland in HDR". studiodaily. http://www.studiodaily.com/2015/06/colorist-stephen-nakamura-grading-tomorrowland-dolby-vision/.

- Dolby Laboratories. "Dolby Vision Whitepaper". http://www.dolby.com/us/en/technologies/dolby-vision/dolby-vision-white-paper.pdf.

- "AV1 Image File Format (AVIF)". https://aomediacodec.github.io/av1-avif/.

- "Canon EOS-1D X Mark III Review". https://www.the-digital-picture.com/Reviews/Canon-EOS-1D-X-Mark-III.aspx.

- "High Dynamic Range". European Broadcasting Union. https://tech.ebu.ch/docs/events/IBC2015/IBC_Fact_Sheet_HDR_Demo_Final.pdf.

- "Press Release - A New Hybrid Full-Frame Mirrorless Camera, the LUMIX S5 Featuring Exceptional Image Quality in High Sensitivity Photo/Video And Stunning Mobility". https://www.panasonic.com/content/dam/Panasonic/Global/Learn-More/Lumix-s/DC-S5_PressRelease.pdf.

- "Sony α1 with superb resolution and speed" (in en). https://www.sony.com/electronics/interchangeable-lens-cameras/ilce-1/specifications.

- "High Dynamic Range with Hybrid Log-Gamma". BBC. https://custom.cvent.com/63DDE0970BB04F3EA62737749B39B60C/files/event/841C5F30AA5948D4B4D985BC90AE0B2C/426e265101984437b7f9bde81acf8364.pdf.

- "H.264 : Advanced video coding for generic audiovisual services". https://www.itu.int/rec/T-REC-H.264-201906-I/en.

- "H.265 : High efficiency video coding". https://www.itu.int/rec/T-REC-H.265-201911-I/en.

- "A DTV Profile for Uncompressed High Speed Digital Interfaces (CTA-861-G), Annex P" (in en). https://shop.cta.tech/products/a-dtv-profile-for-uncompressed-high-speed-digital-interfaces-cta-861-g.

- "SMPTE ST 2094 and Dynamic Metadata". Society of Motion Picture and Television Engineers. https://www.smpte.org/sites/default/files/2017-01-12-ST-2094-Borg-V2-Handout.pdf.

- SMPTE Professional Development Academy. "SMPTE Standards Webcast Series - SMPTE ST 2094 and Dynamic Metadata". https://forum.selur.net/attachment.php?aid=611.

- ETSI (October 2020). "ETSI TS 103 572 V1.2.1". https://www.etsi.org/deliver/etsi_ts/103500_103599/103572/01.02.01_60/ts_103572v010201p.pdf.

- "BT.709 : Parameter values for the HDTV standards for production and international programme exchange". https://www.itu.int/rec/R-REC-BT.709/en.

- "BT.2100 : Image parameter values for high dynamic range television for use in production and international programme exchange". https://www.itu.int/rec/R-REC-BT.2100.

- "BT.2020 : Parameter values for ultra-high definition television systems for production and international programme exchange". https://www.itu.int/rec/R-REC-BT.2020/en.

- "Dolby Vision for Content Creators - Workflows" (in en). https://professional.dolby.com/content-creation/dolby-vision-for-content-creators/2.

- Kid Jansen (2014-02-19). "The Pointer's Gamut". tftcentral. https://www.tftcentral.co.uk/articles/pointers_gamut.htm.

- Rajan Joshi; Shan Liu; Gary Sullivan; Gerhard Tech; Ye-Kui Wang; Jizheng Xu; Yan Ye (2016-01-31). "HEVC Screen Content Coding Draft Text 5". JCT-VC. http://phenix.it-sudparis.eu/jct/doc_end_user/current_document.php?id=10311.

- "HDR Video Part 3: HDR Video Terms Explained" (in en-US). https://www.mysterybox.us/blog/2016/10/19/hdr-video-part-3-hdr-video-terms-explained.

- T. Borer; A. Cotton. "A 'Display Independent' High Dynamic Range Television System". BBC. http://downloads.bbc.co.uk/rd/pubs/whp/whp-pdf-files/WHP309.pdf.

- Dolby. "ICtCp Dolby White Paper - What is ICTCP ? - Introduction". https://professional.dolby.com/siteassets/pdfs/ictcp_dolbywhitepaper_v071.pdf.

- Dolby. "Dolby Vision Profiles and Levels Version 1.3.2 - Specification". https://professional.dolby.com/siteassets/content-creation/dolby-vision-for-content-creators/dolbyvisionprofileslevels_v1_3_2_2019_09_16.pdf.

- "ETSI - GS CCM 001 - Compound Content Management Specification". https://www.etsi.org/deliver/etsi_gs/CCM/001_099/001/01.01.01_60/gs_CCM001v010101p.pdf.

- ETSI (February 2017). "ETSI GS CCM 001 V1.1.1". https://www.etsi.org/deliver/etsi_gs/CCM/001_099/001/01.01.01_60/gs_CCM001v010101p.pdf.

- Rasmus Larsen (5 July 2016). "ITU announces BT.2100 HDR TV standard". flatpanelshd. https://www.flatpanelshd.com/news.php?subaction=showfull&id=1467719709.

- "Ultra HD Forum: Phase A Guidelines". Ultra HD Forum. 15 July 2016. http://ultrahdforum.org/wp-content/uploads/2016/04/Ultra-HD-Forum-Deployment-Guidelines-V1.1-Summer-2016.pdf.

- "ISO/IEC JTC 1/SC29/WG1". 9-15 April 2018. https://jpeg.org/downloads/jpegxl/jpegxl-cfp.pdf.

- "ICC HDR Working Group". http://www.color.org/groups/hdr/index.xalter.

- Netflix Technology Blog (2018-09-24). "Enhancing the Netflix UI Experience with HDR" (in en). https://netflixtechblog.com/enhancing-the-netflix-ui-experience-with-hdr-1e7506ad3e8.

- "How HDR display could change your photography forever". https://www.dpreview.com/articles/8980170510/how-hdr-tvs-could-change-your-photography-forever.

- Pocket-lint (2019-09-10). "What is HLG Photo? Panasonic S1 feature explained in full" (in en-gb). https://www.pocket-lint.com/cameras/news/panasonic/147985-what-is-hlg-photo-why-do-i-need-it-panasonic-s1.

- Canon Europe. "Specifications & Features - EOS-1D X Mark III" (in en-EU). https://www.canon-europe.com/cameras/eos-1d-x-mark-iii/specifications/.

- Canon. "EOS-1D X Mark III specifications". https://downloads.canon.com/nw/camera/products/eos/1d-x-mark-iii/specs/eos-1-d-x-mark-iii-specifications.pdf.

- Canon Europe. "Canon EOS R5 Specifications and Features" (in en-EU). https://www.canon-europe.com/cameras/eos-r5/specifications/.

- "HDR PQ HEIF: Breaking Through the Limits of JPEG" (in en). https://snapshot.canon-asia.com/article/en/hdr-pq-heif-breaking-through-the-limits-of-jpeg.

- "HEIF – What you need to know" (in en-US). 2020-02-17. https://www.photoreview.com.au/tips/shooting/heif-what-you-need-to-know/.

- Canon. "Working with files saved in HEVC format". https://support.usa.canon.com/kb/index?page=content&id=ART176556.

- "Sony α7S III with pro movie/still capability" (in en). https://www.sony.com/electronics/interchangeable-lens-cameras/ilce-7sm3/specifications.

- "Characteristics of HEIF format | Sony". https://support.d-imaging.sony.co.jp/support/ilc/heif/01/en/index.html.

- Sony (July 2020). "ILCE-7SM3 brochure". http://sonyglobal.akamaized.net/is/content/gwtvid/pdf/2020/ILCE-7SM3/brochure-ilce7sm3.pdf.

- "Qualcomm Snapdragon 888 5G Mobile Platform | Latest 5G Snapdragon Processor" (in en). 2020-11-17. https://www.qualcomm.com/products/snapdragon-888-5g-mobile-platform.

- Judd Heape, VP of Product Management, Qualcomm Technologies, Inc. "Triple down on the future of photography with Snapdragon 888". https://www.qualcomm.com/media/documents/files/snapdragon-888-camera-blog-post-by-judd-heape-vp-of-product-management.pdf.

- "Device for increasing the dynamic range of a camera". https://worldwide.espacenet.com/data/publicationDetails/biblio?II=0&ND=3&adjacent=true&locale=en_EP&FT=D&date=19970610&CC=US&NR=5638119A&KC=A.

- "Camera with very wide dynamic range". https://worldwide.espacenet.com/publicationDetails/biblio?II=0&ND=3&adjacent=true&locale=en_EP&FT=D&date=19970610&CC=US&NR=5638119A&KC=A.

- "Device for increasing the dynamic range of a camera". https://worldwide.espacenet.com/searchResults?ST=singleline&locale=en_EP&submitted=true&DB=&query=US5638119.

- Kang, Sing Bing; Uyttendaele, Matthew; Winder, Simon; Szeliski, Richard (2003). "High dynamic range video". ACM SIGGRAPH 2003 Papers (SIGGRAPH '03). pp. 319–325. doi:10.1145/1201775.882270. ISBN 978-1-58113-709-5. https://dx.doi.org/10.1145%2F1201775.882270

- "RED Digital Cinema | 8K & 5K Professional Cameras". https://www.red.com/.

- "ARRI | Inspiring your Vision". http://www.arridigital.com.

- Zimmerman, Steven (12 October 2016). "Sony IMX378: Comprehensive Breakdown of the Google Pixel's Sensor and its Features". XDA Developers. http://www.xda-developers.com/sony-imx378-comprehensive-breakdown-of-the-google-pixels-sensor-and-its-features/.

- "ARRI Group: ALEXA's Sensor". https://www.arri.com/camera/alexa/technology/arri_imaging_technology/alexas_sensor/.

- "HDR video accomplished using dual 5D Mark IIs, is exactly what it sounds like". Engadget. https://www.engadget.com/2010/09/09/hdr-video-accomplished-using-dual-5d-mark-iis-is-exactly-what-i/.

- "A Real Time High Dynamic Range Light Probe". http://gl.ict.usc.edu/Research/rtlp/.

- McGuire, Morgan; Matusik, Wojciech; Pfister, Hanspeter; Chen, Billy; Hughes, John; Nayar, Shree (2007). "Optical Splitting Trees for High-Precision Monocular Imaging". IEEE Computer Graphics and Applications 27 (2): 32–42. doi:10.1109/MCG.2007.45. PMID 17388201. http://nrs.harvard.edu/urn-3:HUL.InstRepos:4101892. Retrieved 14 July 2019.

- "Industrial Light & Magic Releases Proprietary Extended Dynamic Range Image File Format OpenEXR to Open Source Community" (PDF) (Press release). 22 January 2003. Archived from the original (PDF) on 21 July 2017. Retrieved 27 July 2016. https://web.archive.org/web/20170721234341/http://www.openexr.com/OpenEXR_Press_Release_1_22_03.pdf

- "Main OpenEXR web site". http://www.openexr.com.

- "ACES". Academy of Motion Picture Arts and Sciences. http://www.oscars.org/science-technology/sci-tech-projects/aces.

- "The emergence of HEVC and 10-bit colour formats - With Imagination". 2013-09-15. https://web.archive.org/web/20130915075921/http://withimagination.imgtec.com/index.php/powervr-video/the-emergence-of-hevc-and-10-bit-colour-formats.

- "HDMI :: Manufacturer :: HDMI 2.0 :: FAQ for HDMI 2.0". 2014-04-08. http://www.hdmi.org/manufacturer/hdmi_2_0/hdmi_2_0_faq.aspx.

- John Archer (24 June 2015). "Amazon Grabs Key Tech Advantage Over Netflix With World's First HDR Streaming Service". Forbes. https://www.forbes.com/sites/johnarcher/2015/06/24/amazon-launches-worlds-first-high-dynamic-range-hdr-video-streaming-service/.

- Kris Wouk (24 June 2015). "Amazon brings Dolby Vision TVs into the HDR fold with short list of titles". Digital Trends. http://www.digitaltrends.com/home-theater/amazon-video-dolby-vision-hdr/.

- "Dolby and VUDU launch the future home theater experience with immersive sound and advanced imaging". Business Wire. 17 November 2015. http://www.businesswire.com/news/home/20151117006135/en/.

- Caleb Denison (28 January 2016). "Ultra HD Blu-ray arrives March 2016; here's everything we know". Digital Trends. http://www.digitaltrends.com/home-theater/ultra-hd-blu-ray-specs-dates-and-titles/.

- Rasmus Larsen (9 April 2016). "Netflix is now streaming in HDR / Dolby Vision". flatpanelshd. http://www.flatpanelshd.com/news.php?subaction=showfull&id=1460179224.

- Colin Mann (29 July 2016). "4K HDR from SKY Perfect JSAT". Advanced Television. http://advanced-television.com/2016/07/29/4k-hdr-from-sky-perfect-jsat/.

- "HDR Video Playback". Android. https://source.android.com/devices/tech/display/hdr.html.

- Rasmus Larsen (7 September 2016). "Android TV 7.0 supports Dolby Vision, HDR10 and HLG". flatpanelshd. http://www.flatpanelshd.com/news.php?subaction=showfull&id=1473405995.

- David Katzmaier (26 September 2016). "Roku unveils five new streaming boxes with prices as low as $30". CNET. https://www.cnet.com/news/new-roku-boxes-start-streaming-at-a-dirt-cheap-30-dollars/.

- Steven Robertson (7 November 2016). "True colors: adding support for HDR videos on YouTube". https://youtube.googleblog.com/2016/11/true-colors-adding-support-for-hdr.html.

- "Upload High Dynamic Range (HDR) videos". https://support.google.com/youtube/answer/7126552.

- "DVB SB Approves UHD HDR Specification". Digital Video Broadcasting. 17 November 2016. https://www.dvb.org/news/uhd_1-phase-2-approved-by-dvb.

- James Grover (17 November 2016). "UHD-1 Phase 2 approved". TVBEurope. http://www.tvbeurope.com/uhd-1-phase-2-approved/.

- "Apple TV 4K - Technical Specifications" (in en-US). https://www.apple.com/apple-tv-4k/specs/.

- "Why the iPhone 12's Dolby Vision HDR Recording Is a Big Deal" (in en). https://www.howtogeek.com/695825/why-the-iphone-12s-dolby-vision-hdr-recording-is-a-big-deal.

- Patel, Nilay (2020-10-20). "Apple iPhone 12 Pro review: ahead of its time" (in en). https://www.theverge.com/21524288/apple-iphone-12-pro-review.