Chemotherapy and radiotherapy are first-line treatments in the management of advanced solid tumors. While these treatments aim to slow the growth of cancer cells and potentially eliminate them, they cause significant adverse effects that can be detrimental to a patient’s quality of life or even life-threatening. Diet is a modifiable risk factor that has been shown to affect cancer risk, recurrence, and treatment toxicity, but little is known about how diet interacts with cancer treatment modalities. Dietary interventions, such as caloric restriction, intermittent fasting, and ketogenic diets, have shown promise in clinical pilot studies and pre-clinical mammalian model studies by reducing the toxicity and increasing the efficacy of chemotherapy and radiotherapy. However, larger clinical trials and considerably more evidence are required before any changes to clinical practice can be advised.

1. Introduction

Chemotherapy (CT) and radiation therapy, also known as radiotherapy (RT), are commonly used to treat patients with locally advanced or metastatic solid tumors. CT has its origins in the early twentieth century, when the first primitive CT drugs consisted of early aniline dyes and alkylating agents. Both were developed by the German chemist Paul Ehrlich, who coined the term “chemotherapy” [1]. Despite the advent of CT, RT and surgical interventions remained at the forefront of cancer care during the twentieth century until modern CT drugs that were less toxic and more efficacious were developed. CT drugs saw a resurgence at the beginning of the 1960s with the development of the L1210 leukemia system for treating acute leukemia, with 25% of the cases among children going into complete remission [1]. This success propelled CT back into the spotlight as a viable avenue of research and treatment. With the rise of adjuvant CT regimens in the 1970s, CT was established as a feasible method of cancer treatment with highly beneficial results [1,2].

RT has been a mainstay in the treatment of most cancers for over a century, with nearly 50% of all cancer patients receiving some form of RT during the course of their illness [5]. In some cases, RT is given concurrently or sequentially with various CT agents, with the goal of creating a synergistic effect [6]. Although these regimens can be effective in eradicating tumors, their adverse effects are well documented and can limit the treatment duration and cause significant discomfort for patients [7].

Oxidative stress in somatic cells can be modulated in a limited and controlled manner by altering an organism’s diet [14]. In a study of in vivo and in vitro colon carcinoma models, 48 h cycles of short-term starvation (STS) exerted an anti-Warburg effect [15]. This effect was achieved by forcing normally glycolysis-dependent cancer cells to use oxidative phosphorylation to generate ATP. Because many cancer cells and cell lines possess malfunctioning mitochondria and electron transport chains, oxidative phosphorylation in these cells is often inefficient and partially uncoupled from ATP synthesis. The result is oxidative damage to the cancer cells and possible apoptosis [15].

Caloric restriction (CR) can be defined as a net energy deficit in an individual’s food consumption, as measured by their caloric intake. The benefits of CR in the context of cancer prevention and treatment have been documented for well over a century: in 1909, Moreschi first described that tumors transplanted into underfed mice did not grow as well as those transplanted into mice that were fed ad libitum (AL) [17]. The caloric deficit is generally set at 20% to 40% below an organism’s initial average energy intake [18,19,20]. A lower caloric threshold for CR must be defined to prevent under-nutrition or malnutrition, as reaching these nutritional states is likely to counteract many of the cancer prevention benefits that CR is known to provide [20].
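The arithmetic behind the 20–40% deficit band can be sketched as follows. This is only an illustrative calculation, not a clinical tool: the function name `cr_intake_range` and the 2000 kcal baseline are assumptions introduced here, and any real lower threshold would need to be set individually to avoid malnutrition.

```python
def cr_intake_range(baseline_kcal, min_deficit=0.20, max_deficit=0.40):
    """Return (lowest, highest) daily kcal targets for a CR deficit band.

    baseline_kcal: average daily intake before the intervention (hypothetical input).
    min_deficit / max_deficit: fractional reductions; the defaults follow the
    20-40% band cited in the text.
    """
    if baseline_kcal <= 0:
        raise ValueError("baseline_kcal must be positive")
    if not 0 <= min_deficit <= max_deficit < 1:
        raise ValueError("deficits must satisfy 0 <= min <= max < 1")
    lowest = baseline_kcal * (1 - max_deficit)   # largest deficit, lowest intake
    highest = baseline_kcal * (1 - min_deficit)  # smallest deficit, highest intake
    return lowest, highest


# Illustrative baseline of 2000 kcal/day (an assumption, not a value from the text).
low, high = cr_intake_range(2000)
print(f"CR target: {low:.0f} to {high:.0f} kcal/day")
```

For the assumed 2000 kcal/day baseline, the band corresponds to roughly 1200 to 1600 kcal/day.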

Intermittent fasting (IF), often referred to in the scientific community as the time-restricted diet (TRD), is a form of dietary intervention that limits the amount of time during which an individual is allowed to consume calories, as opposed to the content or number of calories consumed. This has been hypothesized to improve caloric expenditure and, perhaps more importantly, to encourage the body’s use of stored fatty acids [21].

In contrast, a ketogenic diet (KD) severely limits the consumption of carbohydrates to induce ketosis, thereby forcing the body to rely primarily on mobilized stored fatty acids for its energy needs. To induce ketosis, one must consume calories primarily in the form of fats as part of an extremely low-carbohydrate diet (usually <20–50 g per day) that is supplemented with adequate amounts of protein [23]. KD is not a new concept and has been used successfully to treat disorders such as intractable epilepsy [24,25].

2. Dietary Interventions

2.1. Caloric Restriction and Fasting-Mimicking Diets Assist in Further Attenuating Tumor Growth with CT/RT Treatment Regimens

As noted previously, CR is a reduction in the total number of calories consumed daily, generally by 20–30% relative to normal caloric intake [52]. This diet has a well-established effect of decreasing the overall presence of ROS in somatic cells, likely resulting from the activation of the eNOS pathway via SIRT1 upregulation [14]. CR has been associated with the attenuation of tumor growth and progression, with experiments in rodent models indicating that CR can reduce the vascular density of tumors [41].

CR has also been found to enhance the immunogenic effect of RT through the downregulation of regulatory T cells [18]. Cellular senescence correlates strongly with the prevalence of the tumor immune response, and CR could potentially help to modulate this. When T cell priming was compared between young and aged animal models, CR mitigated the age-associated effects on CD4+ T cell priming [46]. In addition to these immunotherapeutic effects, cancer cells have been shown to compete with lymphocytes, among other cell types, for glucose [54]. It is possible that the acute immune response induced by RT alongside CR could increase the rate of glycolysis in CD8+ T cells, thereby potentially depleting the glucose available in the tumor microenvironment.

In one trial using murine models, a 30% CR was correlated with tumor regression compared with a control group that was allowed to eat AL. The tumors in the CR animals showed greater rates of apoptosis and less proliferation at the cellular level [54].

Despite the potential benefits of CR, this dietary intervention is difficult for most patients to sustain [56], particularly because many cancer patients are at risk of excessive weight loss or malnutrition. Because patient attrition from the CR diet is high, pharmacologic methods that target the molecular pathways induced by CR may be particularly useful alternatives. Caloric restriction mimetics are a class of drugs and dietary supplements that can mimic the metabolic and hormonal effects of caloric restriction without denying access to essential nutrients. One example is 2-deoxy-D-glucose (2DG), a glucose analogue that lacks a hydroxyl group at C2. 2DG can be phosphorylated by hexokinase in the initial step of glycolysis, but it cannot be metabolized by phospho-hexose isomerase, and thus it competitively inhibits glucose utilization. This eventually leads to elevated SIRT1 and p-AMPK protein levels [57]. Though there is insufficient evidence in humans, in vitro and in vivo pre-clinical studies have shown that caloric restriction mimetics reproduce the reduction in tumor growth and progression that was observed in the CR studies [57,58].

Finally, CR diets have been shown to be safe and feasible for patients undergoing cancer treatment, as long as the patient’s nutrition is monitored and accounted for.

2.2. Intermittent Fasting Is a Safe and Feasible Way of Increasing the Efficacy of CT/RT Treatment as Well as Decreasing Treatment Toxicity

In contrast to CR, intermittent fasting (IF), or a time-restricted diet (TRD), is a dietary intervention that restricts the duration of the feeding time, which is followed by periods of prolonged fasting [21]. These fasting periods can range from 12 or more hours to more than 24 h (the latter often referred to as short-term starvation or STS) [60]. As early as 1982, studies of murine models showed that a 24 h fasting/feeding period improved radioresistance to whole-body irradiation, as the dietary intervention was correlated with increased survival rates [61]. In in vitro studies of the human liver cell lines HepG2 and HuH6, radiosensitivity was notably increased under nutrient-starved conditions. This was attributed to an increase in mTORC1 activity, which directly correlated with the increase in radiosensitivity [47].

One possible explanation for this phenomenon is that fasting, even for short periods of time, can induce an anti-Warburg effect. This is likely mediated by hypoxia-inducible factor-1 (HIF-1), a central regulator of glycolysis, whose activation has been associated with angiogenesis, erythropoiesis, and the modulation of notable enzymes within aerobic glycolysis [62]. Using a murine xenograft model of CT26 carcinoma cells that were subjected to fasting conditions, researchers showed that the tumor cells had a decreased ability to adapt metabolically, resulting in higher rates of oxidative damage and tumor cell apoptosis [15]. Alternate-day STS has also been shown to significantly increase radiosensitivity in mouse models of mammary tumors. This is likely due to the greater oxidative stress placed on the cancer cells relative to the surrounding non-cancerous cells, with DNA damage as a consequence [63,64].

Alongside its potential benefits during RT, IF has been investigated in combination with CT for many years. In mouse and yeast models, 48 h STS was associated with a reduction in the hematological toxicity that occurs after CT use, an increased rate of DNA repair, and an increased treatment efficacy against metastatic neuroblastoma cells [39] (Figure 2). In a randomized pilot study of dietary modulation among 13 HER2-negative breast cancer patients receiving CT, de Groot and colleagues demonstrated that a 48 h short-term fasting period was a feasible method of reducing bone marrow toxicity, as measured by the lymphocyte count and the CT-induced DNA damage in healthy cells [30] (Figure 2).

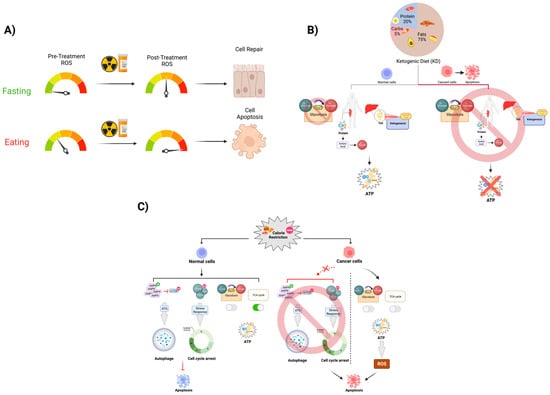

Figure 2. Fasting’s effect on anticancer treatment toxicity. The effects of radiation and some chemotherapeutic drugs are largely mediated through the induction of increased levels of oxidative stress-mediated DNA damage in the cell. Most of the oxidative stress in the cell is produced by physiologic metabolic processes. (A) During fasting periods in IF, the level of ROS produced by the mitochondria is significantly lower than it is during periods of active metabolism. By introducing a period of short-term fasting before and after a ROS-inducing cancer therapy, we expect this dietary modulation to protect normal cells from excess DNA damage due to oxidative stress. In this case, more normal cells can repair themselves and survive, while highly metabolic cancer cells are less likely to adapt to short-term fasting and are therefore more likely to undergo apoptosis. (B) As described by the Warburg effect, cancer cells vastly increase their uptake of glucose, developing an overreliance on glycolysis as their primary method of ATP generation. CR exploits this lack of metabolic flexibility to place disproportionately more oxidative stress on cancer cells than on normal somatic cells, thereby leading to apoptosis. (C) KD is also able to exploit the Warburg effect by purposefully depleting serum glucose through reduced carbohydrate consumption. This results in an overall increased reliance on fatty acid beta-oxidation and gluconeogenesis to provide energy and maintain appropriate serum glucose levels, effectively starving cancer cells of glucose and curbing tumor growth rates.

2.3. Ketogenic Diet Has Been Shown to Maintain Non-Fat Body Mass and Increase CT/RT Treatment Efficacy in Murine Models and Human Cancer Patients

The ketogenic diet (KD) has been the subject of numerous in vitro and in vivo studies using many different cancer cell lines and mouse models. Here, we discuss both isocaloric KD and KD administered at a caloric deficit. What distinguishes KD from the previously mentioned diets is the strictness with which a patient must adhere to certain types of food, as well as the induction of a state of ketosis [68].

KD-driven ketosis increases oxidative stress throughout the body through an increased reliance on fatty acid oxidation. It also causes a significant drop in the blood glucose available to glycolysis-reliant cancer cells. Glucose depletion could be especially important in the treatment of glycolysis-dependent cancers in the brain, where ketone bodies are the primary fuel in a state of glucose depletion, as fats and proteins cannot cross the blood–brain barrier [29]. The proposed anti-Warburg mechanism would allow a selective increase in radiosensitivity among cancer cells relative to healthy cells [55].

KD has been noted to exhibit complementary effects when it is implemented in combination with RT and/or CT in both its isocaloric and calorically restrictive forms. In murine models with Mia PaCa-2 human pancreatic cancer xenografts, mice that were fed a 4:1 KetoCal diet were significantly more likely to survive after 150 days of ongoing RT regimens than AL-fed mice receiving the same RT [33]. In the clinical trials that were conducted on patients with a histological diagnosis of pancreatic cancer, a combination of RT, CT, and KD was used consecutively. Only two patients were able to comply successfully with the intervention; the remaining patients withdrew due to an intolerance to the diet or a dose-limiting toxicity.
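To make the 4:1 ratio mentioned above more concrete, the following sketch estimates the share of dietary energy that comes from fat. It assumes the ratio is expressed by weight as grams of fat per gram of combined protein and carbohydrate, and it uses the general Atwater factors (9 kcal/g for fat, 4 kcal/g for protein and carbohydrate); both assumptions and the function name `fat_energy_fraction` are introduced here for illustration and are not taken from the cited study.

```python
# General Atwater energy factors (assumed here, not taken from the cited study).
KCAL_PER_G_FAT = 9.0
KCAL_PER_G_NONFAT = 4.0  # protein and carbohydrate both ~4 kcal/g


def fat_energy_fraction(ratio):
    """Fraction of energy from fat for a ketogenic ratio of `ratio`:1.

    `ratio` is grams of fat per 1 g of combined protein + carbohydrate
    (an assumed weight-based definition of the ketogenic ratio).
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    fat_kcal = ratio * KCAL_PER_G_FAT
    nonfat_kcal = 1.0 * KCAL_PER_G_NONFAT
    return fat_kcal / (fat_kcal + nonfat_kcal)


# 4 g fat -> 36 kcal; 1 g protein + carbohydrate -> 4 kcal; 36 / 40 = 0.90
print(f"{fat_energy_fraction(4):.0%} of energy from fat")
```

Under these assumptions, a 4:1 diet supplies about 90% of its energy as fat, which illustrates how little room such a regimen leaves for carbohydrate and protein.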

3. Conclusions

As a whole, dietary interventions such as CR, IF, and KD are feasible and have the potential to be effective in combination with standard-of-care oncologic therapies in improving patients’ health, quality of life (QoL), and treatment outcomes. However, further clinical trials with much larger patient populations must be conducted before these interventions can be safely implemented more broadly. Whereas pharmacologic interventions can be expensive, increase the medication burden, and be less adaptable to a broad variety of cancers and treatments, dietary interventions are cost-effective and could be accessed by patients regardless of their socioeconomic status. In addition, these interventions allow patients to participate in their treatment in a way that could significantly impact outcomes and encourage patient engagement and adherence. While we await the publication of more definitive trials examining these interventions, identifying the optimal implementation strategies, identifying the patients who are most likely to benefit, and exploring ways to improve adherence will be key to advancing future interventions that can improve patient outcomes in the clinic.

This entry is adapted from the peer-reviewed paper 10.3390/cancers14205149