In the hockey stick controversy, the data and methods used in reconstructions of the temperature record of the past 1000 years have been disputed. Reconstructions have consistently shown that the rise in the instrumental temperature record of the past 150 years is not matched in earlier centuries, and the name "hockey stick graph" was coined for figures showing a long-term decline followed by an abrupt rise in temperatures. These graphs were publicised to explain the scientific findings of climatology, and in addition to scientific debate over the reconstructions, they have been the topic of political dispute. The issue is part of the global warming controversy and has been one focus of political responses to reports by the Intergovernmental Panel on Climate Change (IPCC). Arguments over the reconstructions have been taken up by fossil fuel industry–funded lobbying groups attempting to cast doubt on climate science.

The use of proxy indicators to get quantitative estimates of the temperature record of past centuries was developed from the 1990s onwards, and found indications that recent warming was exceptional. The Bradley & Jones 1993 reconstruction introduced the "Composite Plus Scaling" (CPS) method used by most later large-scale reconstructions, and its findings were disputed by Patrick Michaels at the United States House Committee on Science. In 1998, Michael E. Mann, Raymond S. Bradley and Malcolm K. Hughes developed new statistical techniques to produce Mann, Bradley & Hughes 1998 (MBH98), the first eigenvector-based climate field reconstruction (CFR). This showed global patterns of annual surface temperature, and included a graph of average hemispheric temperatures back to 1400. In Mann, Bradley & Hughes 1999 (MBH99) the methodology was extended back to 1000. The term hockey stick was coined by the climatologist Jerry D. Mahlman, to describe the pattern this showed, envisaging a graph that is relatively flat to 1900 as forming an ice hockey stick's "shaft", followed by a sharp increase corresponding to the "blade". A version of this graph was featured prominently in the 2001 IPCC Third Assessment Report (TAR), along with four other reconstructions supporting the same conclusion. The graph was publicised, and became a focus of dispute for those opposed to the strengthening scientific consensus that late 20th-century warmth was exceptional.

Those disputing the graph included Pat Michaels, the George C. Marshall Institute and Fred Singer. A paper by Willie Soon and Sallie Baliunas claiming greater medieval warmth was used by the Bush administration chief of staff Philip Cooney to justify altering the first Environmental Protection Agency Report on the Environment. The paper was quickly dismissed by scientists in the Soon and Baliunas controversy, but on July 28, 2003, Republican Jim Inhofe spoke in the Senate citing it to claim "that man-made global warming is the greatest hoax ever perpetrated on the American people". Later in 2003, a paper by Steve McIntyre and Ross McKitrick disputing the data used in the MBH98 paper was publicised by the George C. Marshall Institute and the Competitive Enterprise Institute. In 2004, Hans von Storch published criticism of the statistical techniques as tending to underplay variations in earlier parts of the graph, though this was disputed and he later accepted that the effect was very small. In 2005, McIntyre and McKitrick published criticisms of the principal component analysis methodology as used in MBH98 and MBH99. The analysis therein was subsequently disputed by published papers, including Huybers 2005 and Wahl & Ammann 2007, which pointed to errors in the McIntyre and McKitrick methodology.

In June 2005, Rep. Joe Barton launched what Sherwood Boehlert, chairman of the House Science Committee, called a "misguided and illegitimate investigation" into the data, methods and personal information of Mann, Bradley and Hughes. At Boehlert's request, a panel of scientists convened by the National Research Council was set up, which reported in 2006, supporting Mann's findings with some qualifications, including agreeing that there were some statistical failings but these had little effect on the result. Barton and U.S. Rep. Ed Whitfield requested Edward Wegman to set up a team of statisticians to investigate, and they supported McIntyre and McKitrick's view that there were statistical failings, although they did not quantify whether there was any significant effect. They also produced an extensive network analysis which has been discredited by expert opinion and found to have issues of plagiarism. Arguments against the MBH studies were reintroduced as part of the Climatic Research Unit email controversy, but dismissed by eight independent investigations.

More than two dozen reconstructions, using various statistical methods and combinations of proxy records, have supported the broad consensus shown in the original 1998 hockey-stick graph, with variations in how flat the pre-20th century "shaft" appears. The 2007 IPCC Fourth Assessment Report cited 14 reconstructions, 10 of which covered 1,000 years or longer, to support its strengthened conclusion that it was likely that Northern Hemisphere temperatures during the 20th century were the highest in at least the past 1,300 years. Over a dozen subsequent reconstructions, including Mann et al. 2008 and PAGES 2k Consortium 2013, have supported these general conclusions.

1. Background

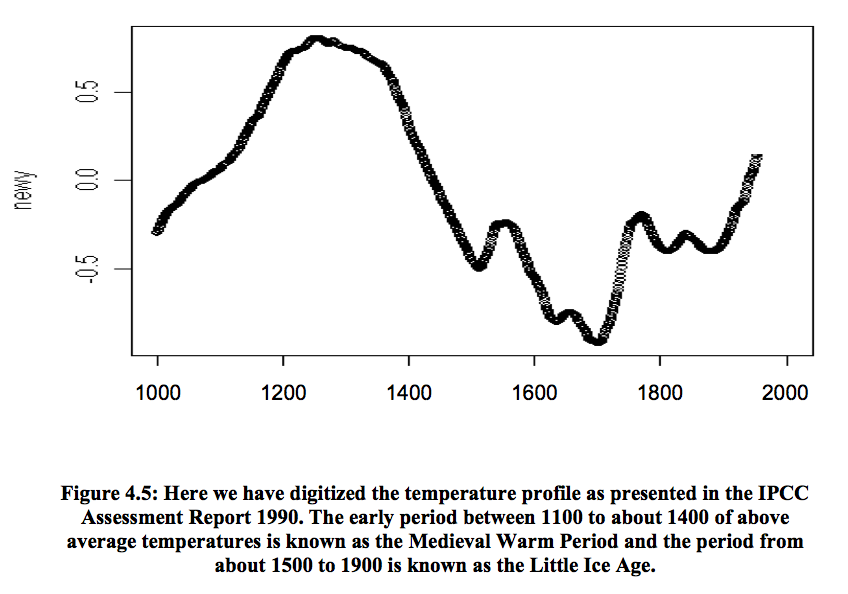

Paleoclimatology dates back to the 19th century, and the concept of examining varves in lake beds and tree rings to track local climatic changes was suggested in the 1930s.[2] In the 1960s, Hubert Lamb generalised from historical documents and temperature records of central England to propose a Medieval Warm Period from around 900 to 1300, followed by a Little Ice Age. This was the basis of a "schematic diagram" featured in the IPCC First Assessment Report of 1990 beside cautions that the medieval warming might not have been global.[3] Early quantitative reconstructions were published in the 1980s.[4]

Publicity over the concerns of scientists about the implications of global warming led to increasing public and political interest, and the Reagan administration, concerned in part about the political impact of scientific findings, successfully lobbied for the 1988 formation of the Intergovernmental Panel on Climate Change to produce reports subject to detailed approval by government delegates.[5] The IPCC First Assessment Report in 1990 noted evidence that the Holocene climatic optimum around 5,000-6,000 years ago had been warmer than the present (at least in summer) and that in some areas there had been exceptional warmth during "a shorter Medieval Warm Period (which may not have been global)" about AD 950-1250, followed by a cooler period of the Little Ice Age which ended only in the middle to late nineteenth century. The report discussed the difficulties with proxy data, "mainly pollen remains, lake varves and ocean sediments, insect and animal remains, glacier termini" but considered that tree ring data was "not yet sufficiently easy to assess nor sufficiently integrated with indications from other data to be used in this report". A "schematic diagram" of global temperature variations over the last thousand years[6] has been traced to a graph based loosely on Lamb's 1965 paper, nominally representing central England, modified by Lamb in 1982.[1] Mike Hulme describes this schematic diagram as "Lamb's sketch on the back of an envelope", a "rather dodgy bit of hand-waving".[7]

Archives of climate proxies were developed: in 1993 Raymond S. Bradley and Phil Jones composited historical records, tree rings and ice cores for the Northern Hemisphere from 1400 up to the 1970s to produce a decadal reconstruction.[8] Like later reconstructions including the MBH "hockey stick" studies, their reconstruction indicated a slow cooling trend followed by an exceptional temperature rise in the 20th century.[9] This paper introduced the "Composite Plus Scaling" (CPS) method which was subsequently used by most large-scale climate reconstructions of hemispheric or global average temperatures.[10] This decadal summer temperature reconstruction, together with a separate curve plotting instrumental thermometer data from the 1850s onwards, was featured as Figure 3.20 in the IPCC Second Assessment Report (SAR) of 1996. It stated that, in this record, warming since the late 19th century was unprecedented. The section proposed that "The data from the last 1000 years are the most useful for determining the scales of natural climate variability". Recent studies including the 1994 reconstruction by Hughes and Diaz questioned how widespread the Medieval Warm Period had been at any one time, thus it was not possible "to conclude that global temperatures in the Medieval Warm Period were comparable to the warm decades of the late 20th century." The SAR concluded, "it appears that the 20th century has been at least as warm as any century since at least 1400 AD. In at least some areas, the recent period appears to be warmer than has been the case for a thousand or more years".[11]

Tim Barnett of the Scripps Institution of Oceanography was working towards the next IPCC assessment with Phil Jones, and in 1996 told journalist Fred Pearce: "What we hope is that the current patterns of temperature change prove distinctive, quite different from the patterns of natural variability in the past".[12] Dendroclimatologist Keith Briffa's February 1998 study reporting a divergence problem affecting some tree ring proxies after 1960 warned that this problem had to be taken into account to avoid overestimating past temperatures.[13]

1.1. Kyoto Protocol

While the details of the science were being worked out, the international community had been steadily working towards a global framework for a cap on greenhouse-gas emissions. The UN Conference on Environment and Development was held in Rio de Janeiro in 1992, and produced a treaty framework that called for voluntary capping of emissions at 1990 levels. Government representatives gathered in Kyoto in December 1997 and turned this framework into a binding commitment known as the Kyoto Protocol.[2] Due to the nature of the treaty, it essentially required commitment from the United States and other highly industrialised nations for proper implementation. By 1998, the Clinton Administration had signed the treaty, but vigorous lobbying meant ratification of the treaty was successfully opposed in the Senate by a bipartisan coalition of economic and energy interests. Lobbyists such as the Western Fuels Association funded scientists whose work might undermine the scientific basis of the treaty, and in 1998 it was revealed that the American Petroleum Institute had hosted informal discussions between individuals from oil companies, trade associations and conservative policy research organizations who opposed the treaty, and who had tentatively proposed an extensive plan to recruit and train scientists in media relations. A media-relations budget of $600,000 was proposed to persuade science writers and editors and television correspondents to question and undermine climate science.[14][15]

1.2. Controversy over Bradley and Jones 1993

At a hearing of the United States House Committee on Science on 6 March 1996, Chairman Robert Smith Walker questioned witnesses Robert Watson of the White House Office of Science and Technology and Michael MacCracken, head of the U.S. Global Change Research Program, over the scientific consensus shown by the IPCC report and about the peer reviewed status of the papers it cited.[16]

As reported by Pat Michaels on his World Climate Report website, MacCracken said during the hearing that "the last decade is the warmest since 1400", implying that the warming had been caused by the greenhouse effect, and replied to Walker's question about whether thermometers had then existed by explaining the use of biological materials as temperature proxies. Michaels showed a version of the graph based on Bradley & Jones 1993 with the instrumental temperature record curve removed, and argued on the basis of the "raw data" that the large rise in temperatures occurred in the 1920s, "before the greenhouse effect had changed very much", and "there’s actually been a decline since then". He said the Science Committee had been told of this, and of comments by one IPCC reviewer that this was misleading and the text should say "Composite indicators of summer temperature show that a rapid rise occurred around 1920, this rise was prior to the major greenhouse emissions. Since then, composite temperatures have dropped slightly on a decadal scale." Michaels said that the IPCC had not noted this in the agreed text, "instead leaving most readers with the impression that the trees of the Northern Hemisphere had changed their growth rates in response to man’s impact on the atmosphere." He questioned the consensus, as only one reviewer out of 2,500 scientists had noticed this problem.[16][17]

2. Climate Field Reconstruction (CFR) Methods; MBH 1998 and 1999

Variations on the "Composite Plus Scale" (CPS) method continued to be used to produce hemispheric or global mean temperature reconstructions. From 1998, this was complemented by Climate Field Reconstruction (CFR) methods, which could show how climate patterns had developed over large spatial areas, making the reconstruction useful for investigating natural variability and long-term oscillations as well as for comparisons with patterns produced by climate models. The CFR method made more use of climate information embedded in remote proxies, but was more dependent than CPS on assumptions that relationships between proxy indicators and large-scale climate patterns remained stable over time.[18] Related rigorous statistical methods had already been developed for tree ring data to produce regional reconstructions of temperatures, and other aspects such as rainfall.[19]
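The composite-and-scale idea can be illustrated with a minimal sketch. It assumes a handful of made-up proxy series and a short instrumental overlap; the function names and toy numbers are hypothetical and are not taken from any published reconstruction, which would also involve proxy screening, weighting and uncertainty estimates.

```python
from statistics import mean, pstdev

def standardize(series, idx):
    """Center and scale a series using statistics from the calibration indices."""
    ref = [series[i] for i in idx]
    mu, sigma = mean(ref), pstdev(ref)
    return [(x - mu) / sigma for x in series]

def cps_reconstruct(proxies, instrumental, calib_idx):
    """Toy composite-plus-scale reconstruction.

    proxies: equal-length proxy series covering the full period.
    instrumental: temperatures, one per entry of calib_idx.
    calib_idx: time steps where proxies and instrumental data overlap.
    """
    # 1. Standardize each proxy over the calibration period.
    z = [standardize(p, calib_idx) for p in proxies]
    # 2. Composite: unweighted average across proxies at each time step.
    composite = [mean(col) for col in zip(*z)]
    # 3. Scale: match the composite's calibration mean and spread
    #    to those of the instrumental record.
    comp_cal = [composite[i] for i in calib_idx]
    c_mu, c_sd = mean(comp_cal), pstdev(comp_cal)
    t_mu, t_sd = mean(instrumental), pstdev(instrumental)
    return [t_mu + (c - c_mu) * t_sd / c_sd for c in composite]

# Hypothetical toy data: two proxies, six time steps, last three calibrated.
proxies = [
    [1.0, 1.2, 0.9, 1.1, 1.5, 1.8],
    [10.0, 11.0, 9.5, 10.5, 13.0, 15.0],
]
calib_idx = [3, 4, 5]
instrumental = [-0.1, 0.2, 0.5]
recon = cps_reconstruct(proxies, instrumental, calib_idx)
```

By construction, the reconstruction matches the instrumental mean and standard deviation over the calibration steps; values outside that window are extrapolated from the proxies alone, which is where the stability assumption noted above matters.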

As part of his doctoral research, Michael E. Mann worked with seismologist Jeffrey Park on the development of statistical techniques for finding long-term oscillations of natural variability in the instrumental temperature record of global surface temperatures over the last 140 years; Mann & Park 1993 showed patterns relating to the El Niño–Southern Oscillation, and Mann & Park 1994 found what was later termed the Atlantic multidecadal oscillation. They then teamed up with Raymond S. Bradley to use these techniques on the dataset from his Bradley & Jones 1993 study with the aim of finding long-term oscillations of natural variability in global climate. The resulting reconstruction went back to 1400, and was published in November as Mann, Park & Bradley 1995. They were able to detect that the multiple proxies were varying in a coherent oscillatory way, indicating both the multidecadal pattern in the North Atlantic and a longer-term oscillation of roughly 250 years in the surrounding region. Their study did not calibrate these proxy patterns against a quantitative temperature scale, and a new statistical approach was needed to find how they related to surface temperatures to reconstruct past temperature patterns.[20][21]

2.1. Mann, Bradley and Hughes 1998

For his postdoctoral research, Mann joined Bradley and tree ring specialist Malcolm K. Hughes to develop a new statistical approach to reconstruct underlying spatial patterns of temperature variation combining diverse datasets of proxy information covering different periods across the globe, including a rich resource of tree ring networks for some areas and sparser proxies such as lake sediments, ice cores and corals, as well as some historical records.[22] Their global reconstruction was a major breakthrough in evaluation of past climate dynamics, and the first eigenvector-based climate field reconstruction (CFR) incorporating multiple climate proxy data sets of different types and lengths into a high-resolution global reconstruction.[23] To relate this data to measured temperatures, they used principal component analysis (PCA) to find the leading patterns, or principal components, of instrumental temperature records during the calibration period from 1902 to 1980. Their method was based on separate multiple regressions between each proxy record (or summary) and all of the leading principal components of the instrumental record. The least squares simultaneous solution of these multiple regressions used covariance between the proxy records. The results were then used to reconstruct large-scale patterns over time in the spatial field of interest (defined as the empirical orthogonal functions, or EOFs) using both local relationships of the proxies to climate and distant climate teleconnections.[10] Temperature records for almost 50 years prior to 1902 were analysed using PCA for the important step of validation calculations, which showed that the reconstructions were statistically meaningful, or skillful.[24]
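The calibrate-then-validate logic can be sketched in a heavily simplified form. The example below assumes a single proxy regressed on a single temperature series, rather than the multivariate regression against principal components described above, and scores the withheld period with a reduction-of-error (RE) statistic, a skill score commonly used in paleoclimate verification. All data and names are hypothetical.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least squares fit y ~ a + b*x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b

def reduction_of_error(observed, predicted, calib_mean):
    """RE = 1 - SSE(prediction) / SSE(calibration-mean baseline).

    RE > 0 means the prediction beats simply guessing the calibration mean.
    """
    sse = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    sse_ref = sum((o - calib_mean) ** 2 for o in observed)
    return 1.0 - sse / sse_ref

# Hypothetical toy data: calibrate on a later period...
calib_proxy = [2.0, 3.0, 4.0, 5.0]
calib_temp = [0.1, 0.4, 1.1, 1.4]
# ...and validate against earlier instrumental data withheld from the fit.
valid_proxy = [0.0, 1.0]
valid_temp = [-0.9, -0.6]

a, b = fit_line(calib_proxy, calib_temp)
predicted = [a + b * p for p in valid_proxy]
re_score = reduction_of_error(valid_temp, predicted, mean(calib_temp))
```

A positive `re_score` indicates skill relative to the calibration-period mean; a value at or below zero would suggest the proxy relationship does not hold up outside the period it was fitted on.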

A balance was required over the whole globe, but most of the proxy data came from tree rings in the northern mid-latitudes, largely in dense proxy networks. Since using all of the large numbers of tree ring records would have overwhelmed the sparse proxies from the polar regions and the tropics, they used principal component analysis (PCA) to produce PC summaries representing these large datasets, and then treated each summary as a proxy record in their CFR analysis. Networks represented in this way included the North American tree ring network (NOAMER) and Eurasia.[25]
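As a rough illustration of summarising a dense network with a principal component, the sketch below extracts the leading PC of a few made-up series by power iteration. The standardization (full-period mean and standard deviation), the iteration count and the data are assumptions made for the example and are not claimed to match the authors' conventions.

```python
from statistics import mean, pstdev

def standardize(series):
    """Center and scale a series over its full length."""
    mu, sigma = mean(series), pstdev(series)
    return [(x - mu) / sigma for x in series]

def leading_pc(series_list, iterations=200):
    """Return (weights, pc_series) for the leading principal component.

    Power iteration on the covariance matrix of the standardized series.
    Starting from equal weights works for this toy case; a start vector
    orthogonal to the leading eigenvector would not converge to it.
    """
    z = [standardize(s) for s in series_list]
    n, t = len(z), len(z[0])
    cov = [[sum(z[i][k] * z[j][k] for k in range(t)) / t for j in range(n)]
           for i in range(n)]
    w = [1.0 / n ** 0.5] * n
    for _ in range(iterations):
        w = [sum(cov[i][j] * w[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        w = [x / norm for x in w]
    # Project the standardized series onto the leading pattern.
    pc = [sum(w[i] * z[i][k] for i in range(n)) for k in range(t)]
    return w, pc

# Hypothetical toy network: three sites sharing a common signal.
network = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    [2.0, 4.0, 5.0, 8.0, 11.0, 12.0],
    [0.5, 0.4, 0.9, 1.1, 1.6, 1.5],
]
weights, pc1 = leading_pc(network)
```

The single series `pc1` can then stand in for the whole network, so that a region with many sites carries the weight of one summary record rather than many individual ones.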

The primary aim of CFR methods was to provide the spatially resolved reconstructions essential for coherent geophysical understanding of how parts of the climate system varied and responded to radiative forcing, so hemispheric averages were a secondary product.[26] The CFR method could also be used to reconstruct Northern Hemisphere mean temperatures, and the results closely resembled the earlier CPS reconstructions including Bradley & Jones 1993.[23] Mann describes this as the least scientifically interesting thing they could do with the rich spatial patterns, but also the aspect that got the most attention. Their original draft ended in 1980 as most reconstructions only went that far, but an anonymous peer reviewer of the paper suggested that the curve of instrumental temperature records should be shown up to the present to include the considerable warming that had taken place between 1980 and 1998.[27]

The Mann, Bradley & Hughes 1998 (MBH98) multiproxy study on "Global-scale temperature patterns and climate forcing over the past six centuries" was submitted to the journal Nature on 9 May 1997, accepted on 27 February 1998 and published on 23 April 1998. The paper announced a new statistical approach to find patterns of climate change in both time and global distribution, building on previous multiproxy reconstructions. The authors concluded that "Northern Hemisphere mean annual temperatures for three of the past eight years are warmer than any other year since (at least) AD1400", and estimated empirically that greenhouse gases had become the dominant climate forcing during the 20th century.[28] In a review in the same issue, Gabriele C. Hegerl described their method as "quite original and promising", which could help to verify model estimates of natural climate fluctuations and was "an important step towards reconstructing space–time records of historical temperature patterns".[29]

Publicity on publication of MBH98

Release of the paper on 22 April 1998 was given exceptional media coverage, possibly due to the chance that this happened to be Earth Day and it was the warmest year on record. There was an immediate media response to the press release, and the story featured in major newspapers including the New York Times, USA Today and the Boston Globe. Later reports appeared in Time, U.S. News & World Report and Rolling Stone. On one afternoon, Mann was interviewed by CNN, CBS and NBC. In the CBS interview, John Roberts repeatedly asked him if the study proved that humans were responsible for global warming, to which he would go no further than that it was "highly suggestive" of that inference.[30]

The New York Times highlighted their finding that the 20th century had been the warmest century in 600 years, quoting Mann saying that "Our conclusion was that the warming of the past few decades appears to be closely tied to emission of greenhouse gases by humans and not any of the natural factors". Most proxy data are inherently imprecise, and Mann said: "We do have error bars. They are somewhat sizable as one gets farther back in time, and there is reasonable uncertainty in any given year. There is quite a bit of work to be done in reducing these uncertainties." Climatologist Tom Wigley had high regard for the progress the study made, but doubted if proxy data could ever be wholly convincing in detecting the human contribution to changing climate.[31]

Phil Jones of the UEA Climatic Research Unit told the New York Times he was doubtful about adding the 150-year thermometer record to extend the proxy reconstruction, and compared this with putting together apples and oranges; Mann et al. said they used a comparison with the thermometer record to check that recent proxy data were valid. Jones thought the study would provide important comparisons with the findings of climate modeling, which showed a "pretty reasonable" fit to proxy evidence.[31] A commentary on MBH98 by Jones was published in Science on 24 April 1998. He noted that it used almost all the available long-term proxy climate series, "and if the new multivariate method of relating these series to the instrumental data is as good as the paper claims, it should be statistically reliable." He discussed some of the difficulties, and emphasised that "Each paleoclimatic discipline has to come to terms with its own limitations and must unreservedly admit to problems, warts and all."[32]

Controversy over MBH 1998

On 11 May, contrarian Pat Michaels objected to press headlines such as "Proof of Greenhouse Warming" and "1990s Warmest Since 1400" in a feature he titled "Science Pundits Miss Big Picture Again" published on his Western Fuels Association funded World Climate Report website. He asserted that "almost all of the warming described in the article took place before 1935–long before major changes to the greenhouse effect–and the scientific methodology guarantees that the early 1990s should appear as the warmest years", citing the papers by Jones to support his view that all of the warming took place between 1920 and 1935: "In other words, if this is the human signal, it ended 63 years ago." He referred to his earlier pieces making the same complaint about the Bradley & Jones 1993 study featured in the Second Assessment Report, and about Overpeck et al. 1997.[33]

The George C. Marshall Institute alleged in June 1998 that MBH98 was deceptive in only going back to 1400: "Go back just a few hundred years more to the period 1000–1200 AD and you find that the climate was considerably warmer than now. This era is known as the Medieval Warm Period." It said that "by 1300 it began to cool, and by 1400 we were well into the Little Ice Age. It is no surprise that temperatures in 1997 were warmer than they were in the Little Ice Age", and so if "1997 had been compared with the years around 1000 AD, 1997 would have looked like a rather cool year" rather than being the warmest on record. It said that the Medieval Warm Period predated industrial greenhouse gas emissions, and had a natural origin.[34]

In August, criticisms by Willie Soon and Sallie Baliunas were published by Michaels on his World Climate Report website. They took issue with tree ring reconstructions by Briffa et al. 1998 and Jones 1998 as well as with MBH98, discussing the tree ring divergence problem as well as arguing that warming had ended early in the 20th century before CO2 increases and so was natural. They reiterated the suggestion that MBH98 only went back to 1400 to avoid showing the Medieval Warm Period, and implied that it too suffered from the tree ring divergence problem which actually affected a different reconstruction and not MBH98. Michaels subsequently gave Mann the opportunity to post a reply on the website in September 1998.[35]

In October 1998, the borehole reconstruction published by Pollack, Huang and Shen gave independent support to the conclusion that 20th-century warmth was exceptional for the past 500 years.[36]

2.2. Jones et al. 1998

Jones, Keith Briffa, Tim P. Barnett and Simon Tett had independently produced a "Composite Plus Scale" (CPS) reconstruction extending back for a thousand years, comparing tree ring, coral layer, and glacial proxy records, but not specifically estimating uncertainties. Jones et al. 1998 was submitted to The Holocene on 16 October 1997; their revised manuscript was accepted on 3 February and published in May 1998. As Bradley recalls, Mann's initial view was that there was too little information and too much uncertainty to go back so far, but Bradley said "Why don't we try to use the same approach we used in Nature, and see if we could push it back a bit further?" Within a few weeks, Mann responded that to his surprise, "There is a certain amount of skill. We can actually say something, although there are large uncertainties."[37][38]

2.3. Mann, Bradley and Hughes 1999

In considering the 1998 Jones et al. reconstruction which went back a thousand years, Mann, Bradley and Hughes reviewed their own research and reexamined 24 proxy records which extended back before 1400. Mann carried out a series of statistical sensitivity tests, removing each proxy in turn to see the effect its removal had on the result. He found that certain proxies were critical to the reliability of the reconstruction, particularly one tree ring dataset collected by Gordon Jacoby and Rosanne D'Arrigo in a part of North America that Bradley's earlier research had identified as a key region.[39] This dataset only extended back to 1400, and though another proxy dataset from the same region (in the International Tree-Ring Data Bank) went further back and should have given reliable proxies for earlier periods, validation tests only supported their reconstruction after 1400. To find out why, Mann compared the two datasets and found that they tracked each other closely from 1400 to 1800, then diverged until around 1900 when they again tracked each other. He found a likely reason in the CO2 "fertilisation effect" affecting tree rings as identified by Graybill and Idso, with the effect ending once CO2 levels had increased to the point where warmth again became the key factor controlling tree growth at high altitude. Mann used comparisons with other tree ring data from the region to produce a corrected version of this dataset. Their reconstruction using this corrected dataset passed the validation tests for the extended period, but they were cautious about the increased uncertainties.[40]
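The idea of a leave-one-out sensitivity test can be sketched as follows. This is an illustration only: it uses a plain unweighted composite in place of the authors' reconstruction and validation procedure, and the site names and values are hypothetical.

```python
from statistics import mean

def composite(series_list):
    """Unweighted average across series at each time step."""
    return [mean(col) for col in zip(*series_list)]

def leave_one_out(proxies):
    """Map each proxy name to the RMS change in the composite when it is removed."""
    full = composite(list(proxies.values()))
    impact = {}
    for name in proxies:
        rest = [s for other, s in proxies.items() if other != name]
        reduced = composite(rest)
        sq = sum((f - r) ** 2 for f, r in zip(full, reduced))
        impact[name] = (sq / len(full)) ** 0.5
    return impact

# Hypothetical toy data: site_c varies far more than the other two sites.
proxies = {
    "site_a": [0.0, 0.1, 0.2, 0.1],
    "site_b": [0.1, 0.0, 0.2, 0.2],
    "site_c": [1.0, -1.0, 1.5, -1.2],
}
impact = leave_one_out(proxies)
most_influential = max(impact, key=impact.get)
```

A proxy whose removal shifts the result much more than the others is a candidate for closer scrutiny, which is the kind of follow-up comparison described in the paragraph above.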

The Mann, Bradley and Hughes reconstruction covering 1,000 years (MBH99) was submitted in October 1998 to Geophysical Research Letters which published it in March 1999 with the cautious title Northern Hemisphere temperatures during the past millennium: inferences, uncertainties, and limitations to emphasise the increasing uncertainty involved in reconstructions of the period before 1400 when fewer proxies were available.[37][41] A University of Massachusetts Amherst news release dated 3 March 1999 announced publication in the 15 March issue of Geophysical Research Letters, "strongly suggesting that the 1990s were the warmest decade of the millennium, with 1998 the warmest year so far." Bradley was quoted as saying "Temperatures in the latter half of the 20th century were unprecedented", while Mann said "As you go back farther in time, the data becomes sketchier. One can’t quite pin things down as well, but, our results do reveal that significant changes have occurred, and temperatures in the latter 20th century have been exceptionally warm compared to the preceding 900 years. Though substantial uncertainties exist in the estimates, these are nonetheless startling revelations." While the reconstruction supported theories of a relatively warm medieval period, Hughes said "even the warmer intervals in the reconstruction pale in comparison with mid-to-late 20th-century temperatures."[42] The New York Times report had a colored version of the graph, distinguishing the instrumental record from the proxy evidence and emphasising the increasing range of possible error in earlier times, which MBH said would "preclude, as yet, any definitive conclusions" about climate before 1400.[43]

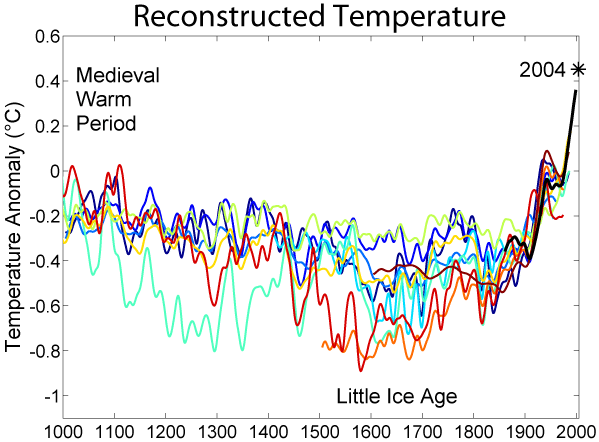

The reconstruction found significant variability around a long-term cooling trend of −0.02 °C per century, as expected from orbital forcing, interrupted in the 20th century by rapid warming which stood out from the whole period, with the 1990s "the warmest decade, and 1998 the warmest year, at moderately high levels of confidence". The time series line graph Figure 2(a) showed their reconstruction from AD 1000 to 1980 as a thin line, wavering around a thicker dark 40-year smoothed line. This curve followed a downward trend (shown as a thin dot-dashed line) from a Medieval Warm Period (about as warm as the 1950s) down to a cooler Little Ice Age before rising sharply in the 20th century. Thermometer data shown with a dotted line overlapped the reconstruction for a calibration period from 1902 to 1980, then continued sharply up to 1998. A shaded area showed uncertainties to two standard error limits, in medieval times rising almost as high as recent temperatures.[41][44][45] When Mann gave a talk about the study to the National Oceanic and Atmospheric Administration's Geophysical Fluid Dynamics Laboratory, Jerry Mahlman nicknamed the graph the "hockey stick",[37] with the slow cooling trend the "stick", and the anomalous 20th century warming the "blade".[9]

Critique and independent reconstructions

Briffa and Tim Osborn critically examined MBH99 in a May 1999 detailed study of the uncertainties of various proxies. They raised questions later adopted by critics of Mann's work, including the point that bristlecone pines from the Western U.S. could have been affected by pollution such as rising CO2 levels as well as temperature. The temperature curve was supported by other studies, but most of these shared the limited well-dated proxy evidence then available, and so few were truly independent. The uncertainties in earlier times rose as high as those in the reconstruction at 1980, but did not reach the temperatures of later thermometer data. They concluded that although the 20th century was almost certainly the warmest of the millennium, the amount of anthropogenic warming remains uncertain.[46][47]

With work progressing on the next IPCC report, Chris Folland told researchers on 22 September 1999 that a figure showing temperature changes over the millennium "is a clear favourite for the policy makers' summary". Two graphs competed: Jones et al. (1998) and MBH99. In November, Jones produced a simplified figure for the cover of the short annual World Meteorological Organization report, which lacks the status of the more important IPCC reports. Two fifty-year smoothed curves going back to 1000 were shown, from MBH99 and Jones et al. (1998), with a third curve to 1400 from Briffa's new paper, combined with modern temperature data bringing the lines up to 1999. In 2010 the lack of clarity about this change of data was criticised as misleading.[48]

Briffa's paper as published in the January 2000 issue of Quaternary Science Reviews showed the unusual warmth of the last century, but cautioned that the impact of human activities on tree growth made it subtly difficult to isolate a clear climate message.[49] In February 2000 Thomas J. Crowley and Thomas S. Lowery's reconstruction incorporated data not used previously. It reached the conclusion that peak Medieval warmth only occurred during two or three short periods of 20 to 30 years, with temperatures around 1950s levels, refuting claims that 20th century warming was not unusual.[50]

Reviewing twenty years of progress in palaeoclimatology, Jones noted the reconstructions by Jones et al. (1998), MBH99, Briffa (2000) and Crowley & Lowery (2000) showing good agreement using different methods, but cautioned that use of many of the same proxy series meant that they were not independent, and more work was needed.[51]

Controversy over draft of IPCC Third Assessment Report

In May 2000, while drafts of the IPCC Third Assessment Report were in the review process, Fred Singer's contrarian Science and Environmental Policy Project held a press event on Capitol Hill, Washington, D.C. and alleged that the Summary for Policymakers was a "political document put together by a few scientific bureaucrats". Regarding the draft conclusion that the 20th Century was the warmest in the last 1000 years, he said "We don't accept this. We challenge this". Wibjörn Karlén said the IPCC report "relies extensively on an attempt to reconstruct the global climate during the last 1000 years". In what has become a recurring contrarian theme, he said "This reconstruction shows neither a Medieval Warm Period nor a Little Ice Age. But extensive evidence shows that both these events occurred on a global scale and that climates fluctuated significantly." Mann notes that MBH99 shows both, but the Medieval Warm Period is shown as reaching only mid-20th century levels of warmth and not more recent levels.[52]

A hearing of the United States Senate Committee on Commerce, Science and Transportation, chaired by Senator John McCain, was held on 18 July 2000 to discuss the U.S. National Assessment on Climate Change (NACC) report on the Potential Consequences of Climate Variability and Change which had been released in June for a 60-day public comment period.[53] Witnesses included Fred Singer, whose statement cited the Oregon Petition against the Kyoto Protocol to claim that his skeptic views on human causes of climate change were not fringe. He said there had probably been no global warming since the 1940s, and "Satellite data show no appreciable warming of the global atmosphere since 1979. In fact, if one ignores the unusual El Nino year of 1998, one sees a cooling trend." From this, he concluded that "The post-1980 global warming trend from surface thermometers is not credible. The absence of such warming would do away with the widely touted 'hockey stick' graph (with its 'unusual' temperature rise in the past 100 years)".[54] The NACC report as published stated that "New studies indicate that temperatures in recent decades are higher than at any time in at least the past 1,000 years" and featured graphs showing "1000 Years of Global CO2 and Temperature Change". Its list of sources said that the temperature data was from MBH99, but the graph did not show error bars or show which part was reconstruction and which was the instrumental record.[55]

Contrarian John Lawrence Daly commented on the TAR draft and the NACC report in a blog posting which alleged that the IPCC 1995 report which reported "a discernible human influence on global climate" had also "presented this graph (Fig 1.) of temperature change since 900 AD", showing a graph which resembled the IPCC 1990 Figure 7.1.c schematic but with changed wording. He wrote that this graph "asserts that temperatures during the Medieval Warm Period were higher than those of today", and described climate changes as due to solar variation. He said that the MBH99 hockey stick graph swiftly "altered the whole landscape of how past climate history was to be interpreted by the greenhouse sciences" and "in one scientific coup overturned the whole of climate history", so that soon afterwards "Overturning its own previous view in the 1995 report, the IPCC presented the `Hockey Stick' as the new orthodoxy with hardly an apology or explanation for the abrupt U-turn since its 1995 report." Around two years later he revised his post to refer to IPCC 1990 rather than the 1995 report.[56]

2.4. IPCC Third Assessment Report, 2001

The Working Group 1 (WG1) part of the IPCC Third Assessment Report (TAR) included a subsection on multi-proxy synthesis of recent temperature change. This noted five earlier large-scale palaeoclimate reconstructions, then discussed the Mann, Bradley & Hughes 1998 reconstruction going back to 1400 AD and its extension back to 1000 AD in Mann, Bradley & Hughes 1999 (MBH99), while emphasising the substantial uncertainties in the earlier period. The MBH99 conclusion that the 1990s were likely to have been the warmest decade, and 1998 the warmest year, of the past millennium in the Northern Hemisphere, with "likely" defined as "66–90% chance", was supported by reconstructions by Crowley & Lowery 2000 and by Jones et al. 1998 using different data and methods. The Pollack, Huang & Shen 1998 reconstruction covering the past 500 years gave independent support for this conclusion, which was compared against the independent (extra-tropical, warm-season) tree-ring density NH temperature reconstruction of Briffa 2000.[57]

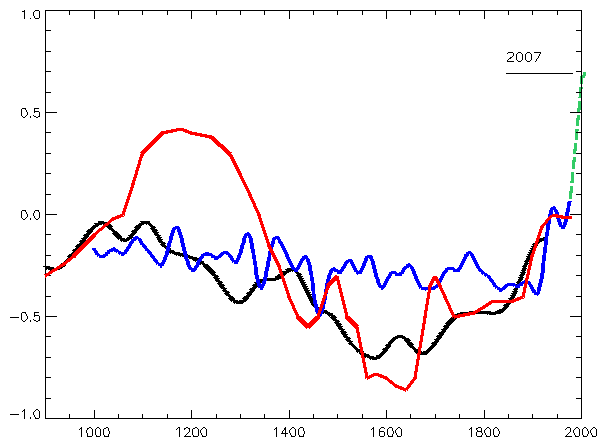

Its Figure 2.21 showed smoothed curves from the MBH99, Jones et al. and Briffa reconstructions, together with modern thermometer data as a red line and the grey shaded 95% confidence range from MBH99. Above it, figure 2.20 was adapted from MBH99.[57] Figure 5 in WG1 Technical Summary B (as shown to the right) repeated this figure without the linear trend line declining from AD 1000 to 1850.[58]

This iconic graph adapted from MBH99 was featured prominently in the WG1 Summary for Policymakers under a graph of the instrumental temperature record for the past 140 years. The text stated that it was "likely that, in the Northern Hemisphere, the 1990s was the warmest decade and 1998 the warmest year" in the past 1,000 years.[59] Versions of these graphs also featured less prominently in the short Synthesis Report Summary for Policymakers, which included a sentence stating that "The increase in surface temperature over the 20th century for the Northern Hemisphere is likely to have been greater than that for any other century in the last thousand years", and the Synthesis Report - Questions.[60]

The Working Group 1 scientific basis report was agreed unanimously by all member government representatives in January 2001 at a meeting held in Shanghai, China. A large poster of the IPCC illustration based on the MBH99 graph formed the backdrop when Sir John T. Houghton, as Co-Chair of the working group, presented the report in an announcement shown on television, leading to wide publicity.[37][61]

The original MBH98 and MBH99 papers avoided undue representation of large numbers of tree ring proxies by using a principal component analysis step to summarise these proxy networks, but from 2001 Mann stopped using this method and introduced a multivariate Climate Field Reconstruction (CFR) technique based on the regularized expectation–maximization (RegEM) method which did not require this PCA step. A paper he published jointly with Scott Rutherford examined the accuracy of this method, and discussed the issue that regression methods of reconstruction tended to underestimate the amplitude of variation.[62]

3. Controversy after IPCC Third Assessment Report

3.1. IPCC Graph Enters Political Controversy

Rather than displaying all of the long-term temperature reconstructions, the opening figure of the Working Group 1 Summary for Policymakers in the IPCC Third Assessment Report (TAR) highlighted an IPCC illustration based only on the MBH99 paper,[59] and a poster of the hockey stick graph was the backdrop when the report was presented in January 2001,[61] in a televised announcement. The graph was seen by mass media and the public as central to the IPCC case for global warming, which had actually been based on other unrelated evidence. Jerry Mahlman, who had coined the "hockey stick" nickname, described this emphasis on the graph as "a colossal mistake, just as it was a mistake for the climate-science-writing press to amplify it". He added that it was "not the smoking gun. That's the data we've had for the past 150 years, which is quite consistent with the expectation that the climate is continuing to warm."[37] From an expert viewpoint the graph was, like all newly published science, preliminary and uncertain, but it was widely used to publicise the issue of global warming.[44] The 1999 study had been a pioneering work in progress, and had emphasised the uncertainties, but publicity often played this down. Mann later said "The label was always a caricature and it became a stick to beat us with".[63]

Controversy over the graph extended outside the scientific community, with accusations from political opponents of climate science.[63] Science historian Spencer Weart states that "The dedicated minority who denied that there was any global warming problem promptly attacked the calculations."[44] The graph was targeted by those opposing ratification of the Kyoto Protocol on global warming. As Mann said, "Advocates on both sides of the climate-change debate at various times have misrepresented the results for their own purposes". Environmental groups presented the graph flatteringly, and the caution about uncertainty in the original graph tended to be understated or removed: a "hockey stick" graph without error bars featured in the U.S. National Assessment on Climate Change report. Similar graphs were used by those disputing the findings with the claim that the graph was inaccurate. When a later Wall Street Journal editorial used a graph without error bars in this way, Gerald North described this as "very misleading, in fact downright dishonest". Funding was provided by the American Petroleum Institute for research critical of the graph.[37]

A paper by Chris de Freitas published by the Canadian Society of Petroleum Geologists in June 2002 argued against the IPCC findings and the Kyoto Protocol, claiming that global warming posed no danger and CO2 was innocuous. A section disputing the "hockey stick" curve concluded it was merely a mathematical construct promoted by the IPCC to support the "notion" that recent temperatures were unprecedented.[64] Towards the end of 2002, the book Taken By Storm: The Troubled Science, Policy and Politics of Global Warming by Christopher Essex and Ross McKitrick, published with assistance from the Fraser Institute,[65] included a chapter about the graph titled "T-Rex Plays Hockey".[66][67]

Iconic use of the IPCC graph came to symbolise conflict in which mainstream climate scientists were criticised, with some sceptics focussing on the hockey stick graph in the hope that they could damage the credence given to climate scientists.[68]

3.2. Soon & Baliunas and Inhofe's Hoax Accusation

An early attempt to refute the hockey stick graph appeared in a joint paper by Willie Soon, who had already argued that climate change was primarily due to solar variation, and Sallie Baliunas who had contested whether ozone depletion was due to man-made chemicals.[69][70] The Soon and Baliunas literature review used data from previous papers to argue that the Medieval Warm Period had been warmer than the 20th century, and that recent warming was not unusual. They sent their paper to the editor Chris de Freitas, an opponent of action to curb carbon dioxide emissions who has been characterized by Fred Pearce as a "climate contrarian". Chris de Freitas approved the paper for publication in the relatively obscure journal Climate Research, where it appeared on 31 January 2003. In March Soon and Baliunas published an extended paper in Energy & Environment along with others including David Legates. Two scientists cited in the papers later said that their work was misrepresented.[71][72][73]

The Bush administration's Council on Environmental Quality chief of staff Philip Cooney, a lawyer who had formerly been a lobbyist for the American Petroleum Institute, edited the draft first Environmental Protection Agency Report on the Environment to remove all references to reconstructions showing world temperatures rising over the last 1,000 years, and inserted a reference to Soon & Baliunas. He wrote in a 21 April 2003 memo that "The recent paper of Soon-Baliunas contradicts a dogmatic view held by many in the climate science community".[74]

The Climate Research paper was criticised by many other scientists, including several of the journal's editors.[75] In a BBC interview, while Legates said their paper showed the MBH data and methods were invalid, Phil Jones said "This isn't a scientific paper, it's absolutely awful."[76] On 8 July Eos featured a detailed rebuttal of both papers by 13 scientists including Mann and Jones, presenting strong evidence that Soon and Baliunas had used improper statistical methods. Responding to the controversy, the publisher of Climate Research upgraded Hans von Storch from editor to editor in chief as of 1 August 2003. After seeing a preprint of the Eos rebuttal, von Storch decided that the Soon and Baliunas paper was seriously flawed and should not have been published as it was. He proposed a new editorial system, and an editorial saying that the review process had failed.[71][77]

When the McCain-Lieberman bill proposing restrictions on greenhouse gases was being debated in the Senate on 28 July 2003, Senator James M. Inhofe made a two-hour speech opposing the bill. He cited the Soon and Baliunas paper to support his conclusion: "Wake up, America. With all the hysteria, all the fear, all the phony science, could it be that manmade global warming is the greatest hoax ever perpetrated on the American people? I believe it is."[75][78] Inhofe convened a hearing of the United States Senate Committee on Environment and Public Works held on 29 July 2003, examining work by the small group of researchers saying there was no evidence of significant human-caused global warming. Three scientists were invited, Mann giving testimony supporting the consensus position, opposed by long-term skeptics Willie Soon and David Legates.[75][79] The Soon and Baliunas paper was discussed. Senator Jeffords read out an email in which von Storch stated his view "that the review of the Soon et al. paper failed to detect significant methodological flaws in the paper. The critique published in the Eos journal by Mann et al. is valid." In reply, Mann testified "I believe it is the mainstream view of just about every scientist in my field that I have talked to that there is little that is valid in that paper. They got just about everything wrong."[71][79] He later recalled that he "left that meeting having demonstrated what the mainstream views on climate science are".[80]

The publisher of Climate Research agreed that the flawed Soon and Baliunas paper should not have been published uncorrected, but von Storch's proposals to improve the editorial process were rejected, and von Storch resigned along with three other board members. News of his resignation was discussed at the Senate committee hearing.[71][79]

3.3. McIntyre and McKitrick 2003

Ratification of the Kyoto Protocol became a major political issue in Canada, and the government issued pamphlets which said that the "20th century was the warmest globally in the past 1,000 years". Toronto businessman Stephen McIntyre saw this as based on the hockey stick graph, and in 2003 he became interested in the IPCC process which had featured the graph prominently. With a background in mineral exploration, including the oil and gas exploration company CGX Energy, he felt that he had the mathematical expertise and experience to independently audit the graph.[81][82] McIntyre downloaded datasets for MBH99 from an FTP server, but could not locate the FTP site for MBH98 datasets and on 8 April wrote to Mann to request this information. Following email exchanges, Mann's assistant sent the information as text files around 23 April 2003.[83]

McIntyre then made a series of comments about the data on the internet discussion group climateskeptics, and Sonja Boehmer-Christiansen suggested that he should write and submit an article on the topic. She edited the little-known social science journal Energy & Environment which avoided standard peer review and had recently published the extended Soon and Baliunas paper. McIntyre agreed, and made contact with University of Guelph economics professor Ross McKitrick, a senior fellow of the Fraser Institute which opposed the Kyoto treaty, and co-author of Taken By Storm: The Troubled Science, Policy and Politics of Global Warming.[82][84]

McIntyre drafted an article before they first met on 19 September 2003, and they then worked together intensively on an extensive re-write. McKitrick suggested submitting the paper to Nature, but after drafting a short version to fit the word limit they submitted the full paper to Energy & Environment on 2 October. After review, resubmission on 14 October and further corrections, the paper was published on the web on 27 October 2003, only three and a half weeks after its first submission.[85][86] Boehmer-Christiansen later said that she had published the paper quickly "for policy impact reasons, eg publication well before COP9", the United Nations Framework Convention on Climate Change negotiations in December 2003.[87]

At the same time that the McIntyre and McKitrick (MM03) paper "Corrections to the Mann et al. (1998) Proxy Data Base and Northern Hemisphere Average Temperature Series" was published on the web, McIntyre set up climate2003 as a web site for the paper.[88][89]

Disputed data

McIntyre and McKitrick said that they had not been able to replicate the Mann, Bradley and Hughes results due to problems with the data: although the sparse data for the earlier periods was difficult to analyse, their criticism was comprehensively refuted by Wahl & Ammann 2007.[69]

The McIntyre & McKitrick 2003 paper (MM03) said that Mann, Bradley & Hughes 1998 (MBH98) "hockey stick" shape was "primarily an artefact of poor data handling and use of obsolete proxy records". It listed data issues, and stated that when they applied the MBH98 method to corrected data, "The major finding is that the values in the early 15th century exceed any values in the 20th century." They disputed infilled data and had computed principal components analysis using standard algorithms, but said that these "algorithms fail in the presence of missing data". When they used this method on revised data they got different results, and concluded that the "hockey stick" shape "is primarily an artefact of poor data handling, obsolete data and incorrect calculation of principal components".[88]

A draft response by Mann, Bradley and Hughes was put on the University of Virginia website on 1 November 2003, and amended two days later. They said that MM03 appeared seriously flawed as it "used neither the data nor the procedures of MBH98", having deleted key proxy information. In particular, MM03 had misunderstood the stepwise reconstruction technique MBH98 applied. This made use of all the progressively richer and more widespread networks available for later periods by calculating separately principal components for each period of overlapping datasets, for example 1400–1980, 1450–1980, and so on. In treating these as one series with missing information, MM03 had eliminated whole datasets covering the 15th century. The data which MM03 reported difficulty in finding had been available since May 2000 on the public File Transfer Protocol site for the MBH98 paper. Mann, Bradley and Hughes commented that "The standard protocol for scientific journals receiving critical comments on a published paper is to provide the authors being criticized with an opportunity to review the criticism prior to publication, and offer them the chance to respond. Mann and colleagues were given no such opportunity."[90] In 2007 the IPCC AR4 noted the MM03 claim that MBH98 could not be replicated, and reported that "Wahl and Ammann (2007) showed that this was a consequence of differences in the way McIntyre and McKitrick (2003) had implemented the method of Mann et al. (1998) and that the original reconstruction could be closely duplicated using the original proxy data."[91]

Publicity and Washington briefing

On the day of publication, the ExxonMobil-funded lobbying website Tech Central Station issued a press release headed "TCS Newsflash: Important Global Warming Study Audited – Numerous Errors Found; New Research Reveals the UN IPCC 'Hockey Stick' Theory of Climate Change is Flawed" announcing that "Canadian business executive Stephen McIntyre and economist Ross McKitrick have presented more evidence that the 20th century wasn't the warmest on record".[81][92] In a Senate debate on 29 October, a day before the McCain-Lieberman bill vote, Senator Jim Inhofe misrepresented the claims made in the MM03 paper and said that it demonstrated high Medieval Warm Period temperatures in the 15th century (during the Little Ice Age). In attacking the MBH papers, he erroneously described Mann as being in charge of the IPCC. In the same debate, Senator Olympia Snowe used the hockey stick graph to demonstrate the reality of climate change. On the morning of the vote, USA Today carried an op-ed by Tech Central Station editor Nick Schulz saying that MM03 "upsets a key scientific claim about climate change", and that "Mann never made his data available online". The vote was 55–43 against the McCain-Lieberman bill. After Mann and associates showed the newspaper that the data was available online, as it had been for years,[93][94] USA Today issued a correction on 13 November.[95]

McIntyre was flown to Washington, D.C. to brief business leaders and the staff of Senator Jim Inhofe.[96] On 18 November McIntyre and McKitrick traveled there to present a briefing, sponsored by the George C. Marshall Institute and the Competitive Enterprise Institute,[97] to United States Congress staffers and others.[98] At an event at the George C. Marshall Institute, co-hosted by Myron Ebell of the Cooler Heads Coalition, McIntyre said that he had contacted Mann for the data set but found problems in replicating the curves of the graph because of missing or wrong data. He first met McKitrick for "lunch at the exact hour that Hurricane Isabel hit Toronto" (19 September 2003). They prepared their corrections in a proxy data set using 1999 data, and using publicly disclosed methods produced a reconstruction which differed from MBH98 in showing high peaks of temperature in the 15th century. They were not saying that these temperatures had occurred, but that Mann's results were incorrect. When they published their paper, it attracted attention, with David Appell being the first reporter to take an interest. They said that after Appell's article was published with comments from Mann, they had followed links to Mann's FTP site and on 29 October copied data files which were subsequently deleted from the site.[99]

In an immediate response to these briefings, Mann, Bradley and Hughes said that the analysis by McIntyre and McKitrick was botched and showed numerous statistical errors including selective removal of records to invent 15th century warming. Climatologist Tom Wigley of the National Center for Atmospheric Research described McIntyre and McKitrick's arguments as "seriously flawed" and "silly", noting that as well as the MBH99 paper around a dozen independent studies had suggested higher temperatures in the late 20th century. George Shambaugh of Georgetown University said he leant in favor of Mann on a statistical basis, and geoscientist Michael Oppenheimer compared those arguing over the existence of human-caused global warming with tobacco industry scientists who tried to obscure the link with lung cancer. Against these points, William O'Keefe of the Marshall Institute (previously with the American Petroleum Institute) said there was value in the skeptics keeping the debate going: "We have to encourage healthy debate".[98]

3.4. von Storch and Zorita 2004

The statistical methods used in the MBH reconstruction were questioned in a 2004 paper by Hans von Storch with a team including Eduardo Zorita,[100] which said that the methodology used to average the data and the wide uncertainties might have hidden abrupt climate changes, possibly as large as the 20th century spike in measured temperatures.[45] They used the pseudoproxy method which Mann and Rutherford had developed in 2002, and like them found that regression methods of reconstruction tended to underestimate the amplitude of variation, a problem covered by the wide error bars in MBH99. It was a reasonable critique of nearly all the reconstructions at that time, but MBH were singled out.[62] Tim Osborn and Keith Briffa responded, highlighting this conclusion of von Storch et al.[101]
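The amplitude loss described above can be illustrated with a toy pseudoproxy experiment. The following is a minimal sketch under stated assumptions, not the procedure of any of the cited papers: the "true" temperature is an arbitrary AR(1) series, the single pseudoproxy is that series plus white noise, and the names `ar1` and `calibrate_and_reconstruct` are hypothetical. A straight-line regression is calibrated on the last 100 values and then applied to the whole record.

```python
import numpy as np


def ar1(n, phi, rng):
    """AR(1) series with unit marginal variance (an illustrative 'temperature')."""
    x = np.empty(n)
    x[0] = rng.standard_normal()
    innov_sd = np.sqrt(1.0 - phi**2)
    for t in range(1, n):
        x[t] = phi * x[t - 1] + innov_sd * rng.standard_normal()
    return x


def calibrate_and_reconstruct(proxy, temp, calib):
    """Regress temperature on the proxy over `calib`, then predict for all years."""
    slope, intercept = np.polyfit(proxy[calib], temp[calib], 1)
    return intercept + slope * proxy


rng = np.random.default_rng(0)
n_years = 1000                                      # assumed record length
temp = ar1(n_years, phi=0.5, rng=rng)               # hypothetical "true" temperature
proxy = temp + 1.5 * rng.standard_normal(n_years)   # pseudoproxy = signal + white noise
calib = slice(n_years - 100, n_years)               # years with "thermometer" data

recon = calibrate_and_reconstruct(proxy, temp, calib)
ratio = recon.std() / temp.std()
print(f"reconstructed / true standard deviation: {ratio:.2f}")
```

Because the fitted slope shrinks as proxy noise grows, the reconstructed series varies less than the series it estimates. How large the loss is in a real multi-proxy reconstruction depends on the method and data, which this sketch does not attempt to represent.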

Zorita and von Storch later claimed their paper was a breakthrough in moving the question from "the reality of the blade of the hockey stick" to focus on "the real problems, namely the 'wobbliness' of the shaft of the hockey-stick, and the suppressing of valid scientific questions by gate keeping".[102] In their initial press release, von Storch called the hockey stick "quatsch" ("nonsense" or "garbage") but other researchers subsequently found that the von Storch paper had an undisclosed additional step which had overstated the wobbliness. By detrending data before estimating statistical relationships it had removed the main pattern of variation.[103] The von Storch et al. view that the graph was defective overall was refuted by Wahl, Ritson and Ammann (2006),[69] who pointed to this incorrect implementation of the reconstruction procedure.[104] Stefan Rahmstorf added that the paper had shown only results supporting its conclusions, but its supplementary online material included contradictory results which supported MBH.[105]
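The effect of detrending before calibration can be sketched with synthetic numbers. This is only an illustration under assumed values, not a reproduction of either the von Storch et al. or the MBH procedure: the calibration-period temperature is a linear trend plus small variability, the proxy is that temperature plus noise, and the helper names `slope` and `detrend` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 100                                   # assumed calibration period length
years = np.arange(n)
temp = 0.01 * years + 0.1 * rng.standard_normal(n)   # trend plus small variability
proxy = temp + 0.3 * rng.standard_normal(n)          # proxy = temperature + noise


def slope(x, y):
    """Least-squares slope of y regressed on x."""
    return np.polyfit(x, y, 1)[0]


def detrend(y):
    """Remove the least-squares linear trend in time from y."""
    return y - np.polyval(np.polyfit(years, y, 1), years)


raw_slope = slope(proxy, temp)
detrended_slope = slope(detrend(proxy), detrend(temp))
print(f"slope from raw data:       {raw_slope:.2f}")
print(f"slope from detrended data: {detrended_slope:.2f}")
```

In this toy setting the trend carries most of the variation that proxy and temperature share, so removing it leaves mainly noise for the regression to fit and the estimated relationship becomes much weaker.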

3.5. Reconstructions, Inhofe and State of Fear

Borehole climate reconstructions in a paper by Pollack and Smerdon, published in June 2004, supported estimates of a surface warming of around 1 °C (1.8 °F) over the period from 1500 to 2000.[106]

In a study published in November 2004 Edward R. Cook, Jan Esper and Rosanne D'Arrigo re-examined their 2002 paper, and now supported MBH. They concluded that "annual temperatures up to AD 2000 over extra-tropical NH land areas have probably exceeded by about 0.3 °C the warmest previous interval over the past 1162 years".[107]

In a Senate speech on 4 January 2005, Inhofe repeated his assertion that "the threat of catastrophic global warming" was the "greatest hoax ever perpetrated on the American people". He singled out the hockey stick graph and Mann for criticism, accusing Mann of having "effectively erased the well-known phenomena of the Medieval Warming Period-when, by the way, it was warmer than it is today-and the Little Ice Age". He quoted von Storch as criticising the graph.[108] In a CBS News opinion piece, Chris Mooney said that Inhofe had extensively cited Michael Crichton's fictional thriller, State of Fear, mistakenly describing Crichton as a "scientist", and had misrepresented three scientists as disputing the "hockey stick" when they had been challenging a completely different paper which Mann had co-authored.[109]

3.6. McIntyre and McKitrick 2005

In 2004 Stephen McIntyre blogged on his website climate2003.com about his efforts with Ross McKitrick to get an extended analysis of the hockey stick into the journal Nature.[110][111] At this stage Nature contacted Mann, Bradley and Hughes about minor errors in the online supplement to MBH98. In a corrigendum published on 1 July 2004 they acknowledged that McIntyre and McKitrick had pointed out errors in proxy data that had been included as supplementary information, and supplied a full corrected listing of the data. They included a documented archive of all the data used in MBH98, and expanded details of their methods. They stated, "None of these errors affect our previously published results."[112]

Following the review process, Nature rejected the comment from McIntyre and McKitrick, who then put the record of their submitted paper and the referees' reports up on their web site. This caught the attention of Richard A. Muller, who had previously supported criticism of the hockey stick paper. On 15 October Muller published his view that the graph was "an artifact of poor mathematics", summarising the as yet unpublished comment including its claim that the principal components procedure produced hockey stick shapes from random data. He said that the "discovery hit me like a bombshell".[113]

On 14 October the McIntyre and McKitrick comment was submitted to Geophysical Research Letters, a publication of the American Geophysical Union,[114] and the AGU consented to it being shown at their 13–17 December conference. McIntyre attended the conference, and on 17 December presented a poster session showing his principal components argument about the MBH papers. He found that "People who were not mathematically inclined were intrigued by a graphic showing 8 hockeysticks - 7 simulated and 1 MBH (the same sort of graphic as the one put up here a while ago, but just showing 1 simulation.) Quantity seems to matter in the demonstration. No one could tell the difference without being told."[115] On 17 January 2005 the M&M comment was accepted by GRL for publication as a paper.[114]

Climate blogs

McIntyre commented on climate2003.com on 26 October 2004, "Maybe I'll start blogging some odds and ends that I'm working on. I'm going to post up some more observations on some of the blog criticisms."[115]

On 10 December Mann and nine other scientists launched the RealClimate website as "a resource where the public can go to see what actual scientists working in the field have to say about the latest issues".[80]

On 2 February 2005 McIntyre set up his Climate Audit blog, having found difficulties with posting comments on the climate2003.com layout.[116]

Principal components analysis methodology

In their renewed criticism, McIntyre & McKitrick 2005 (MM05) found a minor technical statistical error in the Mann, Bradley & Hughes 1998 (MBH98) method, and said it would produce hockey stick shapes from random data. This claim was given widespread publicity and political spin, enabling the George W. Bush administration to assert that the 1997 Kyoto Protocol was discredited. Scientists found that the issues raised by McIntyre and McKitrick were minor and did not affect the main conclusions of MBH98 or Mann, Bradley & Hughes 1999.[69][110] Reconstructions using different statistical methods had produced similar "hockey stick" graphs. Mann himself had already stopped using the criticised statistical method in 2001, when he changed over to the RegEM climate field reconstruction method.[117]

The available climate proxy records were not spaced evenly over the northern hemisphere: some areas were covered by closely spaced networks with numerous tree-ring proxies, but in many areas only sparse proxy temperature records were available, such as lake sediments, ice cores or corals. To achieve balance, the MBH 1998 (and 1999) studies represented each dense network by using principal component analysis (PCA) to find the leading patterns of variation (PC1, PC2, PC3 etc.) ranked by the percentage of variation they explained. To establish how many significant principal components should be kept so that the patterns put together characterized the original dataset, they used an objective selection rule procedure which involved creating randomised surrogate datasets with the same characteristics and treating them with exactly the same conventions as the original data.[118] The temperature records were of various lengths, the shortest being the instrumental record from 1902 to 1980, and their convention centered data over this modern calibration period.[119] The selection rule found two significant patterns for the North American tree ring network (NOAMER): PC1 emphasised high altitude tree ring data from the Western U.S. showing a cooler period followed by 20th century warming, while PC2 emphasised lower elevation tree ring series showing less of a 20th-century trend. Centering over the calibration period is an acceptable convention: the more conventional method of centering data over the whole period of the study (from 1400 to 1980) produces a very similar outcome, but changes the order of PCs and requires more PCs to produce a valid result. Mann did not use the PCA step after 2001; his subsequent reconstructions used the RegEM Climate Field Reconstruction technique incorporating all available individual proxy records instead of replacing groups of records with principal components,[120] and tests have shown that the results are nearly identical.[121]
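The general idea of a surrogate-based selection rule can be sketched as follows. This is a simplified illustration and not the MBH98 procedure: the surrogates here are made by shuffling each series in time, which preserves each series' values but not its autocorrelation, the 95% threshold is an assumed choice, and the function names and the `center_rows` parameter are hypothetical. The one feature taken from the description above is that surrogates are centered with the same convention as the data.

```python
import numpy as np


def variance_fractions(data, center_rows=slice(None)):
    """Fraction of variance carried by each PC.

    `data` has shape (n_years, n_series). Each column is centered on its mean
    over `center_rows` (the whole record by default) before the SVD.
    """
    centered = data - data[center_rows].mean(axis=0)
    sing = np.linalg.svd(centered, compute_uv=False)
    return sing**2 / np.sum(sing**2)


def count_significant_pcs(data, n_surrogates=200, center_rows=slice(None),
                          quantile=0.95, rng=None):
    """Count leading PCs whose variance fraction exceeds that of surrogates."""
    rng = np.random.default_rng() if rng is None else rng
    observed = variance_fractions(data, center_rows)
    surrogate = np.empty((n_surrogates, observed.size))
    for i in range(n_surrogates):
        # Shuffle every series in time independently, destroying any pattern
        # shared between series, then apply the same centering convention.
        shuffled = rng.permuted(data, axis=0)
        surrogate[i] = variance_fractions(shuffled, center_rows)
    threshold = np.quantile(surrogate, quantile, axis=0)
    passed = observed > threshold
    # Keep PCs up to (not including) the first one that fails the test.
    return observed.size if passed.all() else int(np.argmin(passed))


# Synthetic network: two shared patterns plus independent noise.
rng = np.random.default_rng(42)
n_years, n_series = 200, 20
t = np.arange(n_years)
pattern1 = 2.0 * np.sin(2 * np.pi * t / 50)
pattern2 = np.linspace(-2.0, 2.0, n_years)
load1 = np.ones(n_series)
load2 = np.tile([1.0, -1.0], n_series // 2)
data = (np.outer(pattern1, load1) + np.outer(pattern2, load2)
        + rng.standard_normal((n_years, n_series)))

n_kept = count_significant_pcs(data, rng=rng)
print("significant PCs:", n_kept)
```

Passing a different `center_rows` (for example a slice covering only a calibration period) changes the variance fractions of both the data and the surrogates, which is why the rule has to be recomputed whenever the centering convention changes.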

The McIntyre and McKitrick analysis called the PCA centering over the 1902–1980 modern period "an unusual data transformation which strongly affects the resulting PCs". When they centered NOAMER data over the whole 1400–1980 period, this changed the order of principal components so that the warming pattern of high altitude tree ring data was demoted from PC1 to PC4.[114] Instead of recalculating the objective selection rule which increased the number of significant PCs from two to five, they only kept PC1 and PC2. This removed the significant 20th century warming pattern of PC4, discarding data that produced the "hockey stick" shape.[122][123] They said that "In the controversial 15th century period, the MBH98 method effectively selects only one species (bristlecone pine) into the critical North American PC1",[114] but subsequent investigation showed that the "hockey stick" shape remained with the correct selection rule, even when bristlecone pine proxies were removed.[123]
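Recalculating a selection rule after a change of centering can be sketched as follows. The source does not spell out the rule, so this example assumes a simple Monte Carlo comparison: each PC's share of the total sum of squares is compared with the 95th percentile obtained from surrogate datasets processed with the same centering, and leading PCs are kept until the first one fails. White-noise surrogates, the 95% threshold, the synthetic data and the function names are all illustrative assumptions, not the published procedure.

```python
import numpy as np

def variance_shares(data, center_rows):
    """Share of the total sum of squares carried by each PC."""
    centered = data - data[center_rows].mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    return s**2 / np.sum(s**2)

def count_significant(data, center_rows, n_surrogates=200, seed=0):
    """Count leading PCs whose share exceeds the surrogate 95th percentile.

    Surrogates here are white noise scaled to each column's standard
    deviation (an assumption) and are centred exactly like the data.
    """
    rng = np.random.default_rng(seed)
    observed = variance_shares(data, center_rows)
    scale = data.std(axis=0)
    null = np.empty((n_surrogates, observed.size))
    for i in range(n_surrogates):
        surrogate = rng.standard_normal(data.shape) * scale
        null[i] = variance_shares(surrogate, center_rows)
    threshold = np.percentile(null, 95, axis=0)
    passes = observed > threshold
    # Keep leading PCs up to (not including) the first one that fails.
    return int(passes.size) if passes.all() else int(np.argmin(passes))

# Synthetic network: half the series share a hypothetical post-1900 rise.
rng = np.random.default_rng(1)
years = np.arange(1400, 1981)
data = rng.standard_normal((years.size, 12))
ramp = np.clip((years - 1900) / 80.0, 0.0, None)
data[:, :6] += 4.0 * ramp[:, None]

calibration = years >= 1902
whole = np.ones(years.size, dtype=bool)

n_short = count_significant(data, calibration)
n_full = count_significant(data, whole)
```

The design point is that the count is an output of the procedure for a given convention, not a constant carried over from a different convention.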

Their MM05 paper said that the MBH98 (1902–1980 centering) "method, when tested on persistent red noise, nearly always produces a hockey stick shaped first principal component (PC1)", by picking out "series that randomly 'trend' up or down during the ending sub-segment of the series".[114] Though modern centering produces a small bias in this way, the MM05 methods exaggerated the effect.[119] Tests of the MBH98 methodology on pseudoproxies formed with noise varying from red noise to white noise found that this effect caused only very small differences which were within the uncertainty range and had no significance for the final reconstruction.[124] Red noise for surrogate datasets should have the characteristics of natural variation, but the statistical method used by McIntyre and McKitrick produced "persistent red noise" based on 20th century warming trends which showed inflated long-term swings, and overstated the tendency of the MBH98 method to produce hockey stick shapes. Their use of this persistent red noise invalidated their claim that "the MBH98 15th century reconstruction lacks statistical significance", and there was also a data handling error in the MM05 method. Studies using appropriate red noise found that MBH98 passed the threshold for statistical skill, but the MM05 reconstructions failed verification tests.[119][123][125]
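A test of this kind can be set up along the following lines. The sketch generates first-order autoregressive (AR(1)) noise, which ranges from white noise at phi = 0 to strongly persistent red noise as phi approaches 1, and extracts PC1 under each centering convention. The "hockey stick index" is assumed here to be the closing-segment mean minus the whole-series mean, in units of the whole-series standard deviation. The value of phi, the number of series and the function names are illustrative assumptions; this is not the code used in MM05 or in the later replies, and it makes no claim about the size of the effect.

```python
import numpy as np

def ar1_noise(n_years, n_series, phi, rng):
    """Stationary AR(1) series with unit variance; phi = 0 is white noise."""
    x = np.empty((n_years, n_series))
    x[0] = rng.standard_normal(n_series)
    innovation_sd = np.sqrt(1.0 - phi**2)
    for t in range(1, n_years):
        x[t] = phi * x[t - 1] + innovation_sd * rng.standard_normal(n_series)
    return x

def first_pc(data, center_rows):
    """PC1 time series after centring columns on `center_rows`."""
    centered = data - data[center_rows].mean(axis=0)
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, 0] * s[0]

def hockey_stick_index(series, closing_rows):
    """Closing-segment mean minus overall mean, in overall std units."""
    return (series[closing_rows].mean() - series.mean()) / series.std()

rng = np.random.default_rng(1)
years = np.arange(1400, 1981)
calibration = years >= 1902
whole = np.ones(years.size, dtype=bool)

noise = ar1_noise(years.size, 50, phi=0.9, rng=rng)
pc1_short = first_pc(noise, calibration)
pc1_full = first_pc(noise, whole)

# The sign of a principal component is arbitrary, so compare magnitudes.
hsi_short = abs(hockey_stick_index(pc1_short, calibration))
hsi_full = abs(hockey_stick_index(pc1_full, calibration))
```

Varying phi between 0 and values close to 1 shows how strongly any result depends on the persistence assumed for the noise, which is the point on which the MM05 surrogates were criticised.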

To demonstrate that some simulations using their persistent red noise "bore a quite remarkable similarity to the actual MBH98 temperature reconstruction", McIntyre and McKitrick produced illustrations for comparison.[114] Figure 4.4 of the Wegman Report showed 12 of these pre-selected simulations. It called this "One of the most compelling illustrations that McIntyre and McKitrick have produced", and said that the "MBH98 algorithm found ‘hockey stick’ trend in each of the independent replications".[126] McIntyre and McKitrick's code selected 100 simulations with the highest "hockey stick index" from the 10,000 simulations they had carried out, and their illustrations were taken from this pre-selected 1%.[127]
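The effect of such pre-selection can be shown with a short sketch. The index values below are placeholder random numbers, not output from the McIntyre and McKitrick code; the only figures taken from the text are the 10,000 simulations and the 100 retained.

```python
import numpy as np

rng = np.random.default_rng(2)

# Placeholder index values for 10,000 simulations (assumption: any
# distribution would do for illustrating the selection step).
index_values = rng.standard_normal(10_000)

# Keep the 100 simulations with the highest index, i.e. the top 1%.
order = np.argsort(index_values)
selected = index_values[order[-100:]]

mean_all = index_values.mean()
mean_selected = selected.mean()
```

By construction mean_selected is well above mean_all, so examples drawn only from the selected subset are not representative of the full set of simulations.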

Publicity

In a public relations campaign two weeks before the McIntyre and McKitrick paper was published, the Canadian National Post for 27 January carried a front-page article alleging that "A pivotal global warming study central to the Kyoto Protocol contains serious flaws caused by a computer programming glitch and other faulty methodology, according to new Canadian research." It reprinted in two parts a long article by Marcel Crok which had appeared in Natuurwetenschap & Techniek under the heading "Proof that mankind causes climate change is refuted, Kyoto protocol based on flawed statistics" and the assertion that the MBH99 finding of exceptional late 20th century warmth was "the central pillar of the Kyoto Protocol", despite the protocol having been adopted in December 1997, before either of the MBH papers had been published. The Bush administration had already decided to disregard the Kyoto Protocol which was to come into effect later that month, and this enabled them to say that the protocol was discredited.[128]

McIntyre & McKitrick 2005 (MM05) was published in Geophysical Research Letters on 12 February 2005. They also published an extended critique in Energy & Environment responding to criticism of their 2003 paper and alleging that other reconstructions were not independent as there was "much overlapping" of authors.[129] Two days later, a lead article in the Wall Street Journal said that McIntyre's new paper was "circulating inside energy companies and government agencies. Canada's environment ministry has ordered a review", and though McIntyre did not take a strong position on whether or not fossil-fuel use was causing global warming, "He just says he has found a flaw in a main leg supporting the global-warming consensus, the consensus that led to an international initiative taking effect this week: Kyoto."[130]

Technical issues were discussed in RealClimate on 18 February in a blog entry by Gavin Schmidt and Caspar Ammann,[131] and in a BBC News interview Schmidt said that by using a different convention but not altering subsequent steps in the analysis accordingly, McIntyre and McKitrick had removed significant data which would have given the same result as the MBH papers.[132]

On 4 April 2005, McKitrick gave a presentation to the Asia-Pacific Economic Cooperation Study Centre outlining the arguments of MM05, alleging that the TAR had given undue prominence to the hockey stick graph, and discussing publicity given to the TAR conclusions in Canada. He said, "In the 1995 Second Assessment Report of the IPCC, there was no hockey stick. Instead the millennial climate history contained a MWP and a subsequent Little Ice Age, as shown in Figure 3." The figure, headed "World Climate History according to IPCC in 1995", resembled the schematic 7.1(c) from IPCC 1990, and corrections to refer to IPCC 1990 were made on 22 July 2005.[133]

At the end of April, Science published a reconstruction by J. Oerlemans based on glacier length records from different parts of the world, which found consistent independent evidence for the period from 1600 to 1990 supporting other reconstructions regarding magnitude and timing of global warming.[134] In May the University Corporation for Atmospheric Research advised media about a detailed analysis by Eugene Wahl and Caspar Ammann, first presented at the American Geophysical Union's December 2004 meeting in San Francisco, which used their own code to replicate the MBH results, and found the MBH method to be robust even with modifications. Their work contradicted the claims by McIntyre and McKitrick about high 15th century global temperatures and allegations of methodological bias towards a hockey stick outcome, and they concluded that the criticisms of the hockey stick graph were groundless.[135]

4. Congressional Investigations

The increasing politicisation of the issue was demonstrated when,[136] on 23 June 2005, Rep. Joe Barton, chairman of the House Committee on Energy and Commerce, wrote joint letters with Ed Whitfield, Chairman of the Subcommittee on Oversight and Investigations, referring to issues raised by the 14 February 2005 article in the Wall Street Journal and demanding full records on climate research. The letters were sent to the IPCC Chairman Rajendra Pachauri, National Science Foundation Director Arden Bement, and to the three scientists Mann, Bradley and Hughes.[137] The letters told the scientists to provide not just data and methods, but also personal information about their finances and careers, information about grants provided to the institutions they had worked for, and the exact computer codes used to generate their results.[138]

Sherwood Boehlert, chairman of the House Science Committee, told his fellow Republican Joe Barton it was a "misguided and illegitimate investigation" into something that should properly be under the jurisdiction of the Science Committee, and wrote "My primary concern about your investigation is that its purpose seems to be to intimidate scientists rather than to learn from them, and to substitute congressional political review for scientific review." Barton's committee spokesman sent a sarcastic response to this, and to Democrat Henry A. Waxman's letter asking Barton to withdraw the letters and saying he had "failed to hold a single hearing on the subject of global warming" during eleven years as chairman, and had "vociferously opposed all legislative efforts in the Committee to address global warming ... These letters do not appear to be a serious attempt to understand the science of global warming. Some might interpret them as a transparent effort to bully and harass climate change experts who have reached conclusions with which you disagree." The U.S. National Academy of Sciences (NAS) president Ralph J. Cicerone wrote to Barton that "A congressional investigation, based on the authority of the House Commerce Committee, is probably not the best way to resolve a scientific issue, and a focus on individual scientists can be intimidating", and proposed that the NAS should appoint an independent panel to investigate. Barton dismissed this offer.[139][140]

Mann, Bradley and Hughes sent formal letters giving their detailed responses to Barton and Whitfield. On 15 July, Mann wrote emphasising that the full data and necessary methods information was already publicly available in full accordance with National Science Foundation (NSF) requirements, so that other scientists had been able to reproduce their work. NSF policy was that computer codes "are considered the intellectual property of researchers and are not subject to disclosure", as the NSF had advised McIntyre and McKitrick in 2003, but notwithstanding these property rights, the program used to generate the original MBH98 temperature reconstructions had been made available at the Mann et al. public ftp site.[141]

Many scientists protested against Barton's investigation, with 20 prominent climatologists questioning his approach.[142] Alan I. Leshner wrote to him on behalf of the American Association for the Advancement of Science expressing deep concern about the letters, which gave "the impression of a search for some basis on which to discredit these particular scientists and findings, rather than a search for understanding".[143] He stated that MBH had given out their full data and descriptions of methods, and were not the only evidence in the IPCC TAR that recent temperatures were likely the warmest in 1,000 years; "a variety of independent lines of evidence, summarized in a number of peer-reviewed publications, were cited in support". Thomas Crowley argued that the aim was intimidation of climate researchers in general, and Bradley thought the letters were intended to damage confidence in the IPCC during preparation of its next report.[144] A Washington Post editorial on 23 July which described the investigation as harassment quoted Bradley as saying it was "intrusive, far-reaching and intimidating", and Alan I. Leshner of the AAAS describing it as unprecedented in the 22 years he had been a government scientist; he thought it could "have a chilling effect on the willingness of people to work in areas that are politically relevant".[138] Benjamin D. Santer told the New Scientist "There are people who believe that if they bring down Mike Mann, they can bring down the IPCC."[145]

Congressman Boehlert said the investigation was "at best foolhardy" with the tone of the letters showing the committee's "inexperience" in relation to science. Barton was given support by global warming sceptic Myron Ebell of the Competitive Enterprise Institute, who said "We've always wanted to get the science on trial ... we would like to figure out a way to get this into a court of law", and "this could work".[144] In his Junk Science column on Fox News, Steven Milloy said Barton's inquiry was reasonable.[146]

4.1. Reconstruction Methodology

The July 2005 issue of the Journal of Climate published a paper it had accepted for publication the previous September, co-authored by Scott Rutherford, Mann, Osborn, Briffa, Jones, Bradley and Hughes, which examined the sensitivity of proxy based reconstruction to method and found that a wide range of alternative statistical approaches gave nearly indistinguishable results. In particular, omitting principal component analysis made no significant difference.[121]

In comments on MM05 made in October, Peter Huybers showed that McIntyre and McKitrick had omitted a critical step in calculating significance levels, and MBH98 had shown it correctly.[147] Though the disputed principal components analysis method would in theory have some effect, its influence on the amplitude of the final reconstruction was very small.[148] In their comment, Hans von Storch and Eduardo Zorita examined McIntyre and McKitrick's claim that normalising data prior to principal component analysis by centering in relation to the calibration period of 1902–1980, instead of the whole period, would nearly always produce hockey stick shaped leading principal components. They found that it caused only very minor deviations which would not have a significant impact on the result.[124] McIntyre and McKitrick contributed replies to these comments.[148]

In November 2005, Science Committee chair Sherwood Boehlert requested the National Academy of Sciences to arrange a review of climate reconstructions including the hockey stick studies, and its National Research Council set up a special committee to investigate and report.[149]

4.2. Inconvenient Truth

On 28 February 2006 Wahl & Ammann 2007 was accepted for publication, and an "in press" copy was made available on the internet. Two more reconstructions were published, using different methodologies and supporting the main conclusions of MBH. Rosanne D'Arrigo, Rob Wilson and Gordon Jacoby suggested that medieval temperatures had been almost 0.7 °C cooler than the late 20th century but less homogeneous,[150] while Osborn and Briffa found the spatial extent of recent warmth more significant than that during the medieval warm period.[151][152] They were followed in April by a third reconstruction led by Gabriele C. Hegerl.[153]