
Please note this is an old version of this entry, which may differ significantly from the current revision.

Subjects:

Environmental Sciences

We are currently living in the era of big data. The volume of collected or archived geospatial data for land use and land cover (LULC) mapping, including remotely sensed satellite imagery and auxiliary geospatial datasets, is increasing. Innovative machine learning and deep learning algorithms and cutting-edge cloud computing have also recently been developed. While these geospatial big data and advanced computer technologies provide new opportunities for LULC mapping, using them also raises new challenges.

- land use and land cover mapping

- remote sensing

- machine learning

- deep learning

- geospatial big data

1. Introduction

Accurate and timely land use and land cover (LULC) maps are important for a variety of applications such as urban and regional planning, disasters and hazards monitoring, natural resources and environmental management, and food security [1,2,3]. LULC mapping may help tackle many significant large-scale challenges, such as global warming, the accelerating loss of species habitat, unprecedented population migration, increasing urbanization, and growing inequalities within and between nations [4,5]. Therefore, it is important to produce accurate LULC maps.

The land use concept and the land cover concept, though related, are distinctly different [6]. Land cover mainly refers to direct observations of terrestrial ecosystems, natural resources, and habitats on the Earth’s surface, while land use generally describes a certain land type produced, changed or maintained by the arrangements, activities, and inputs of people. Land use relates to the purpose for which land is utilized by people, but land cover specifies landscape patterns and characteristics. Examples of land use may include multi-family residential homes, state parks, reservoirs, and shopping centers. In contrast, examples of land cover may include forests, wetlands, built areas, water, and grasslands. However, land use and land cover are often used as interchangeable terms in existing research literature.

Remotely sensed satellite imagery is a valuable source for LULC mapping [7,8,9]. Many studies have attempted to extract LULC information from remotely sensed imagery [2,10]. Advances in remote sensing technologies have resulted in improvements in spectral, spatial, and temporal resolutions of satellite imagery, all of which benefit LULC mapping. LULC mapping is currently experiencing a transformation from the coarse and moderate scales to much finer scales in order to provide more precise land knowledge. Although remotely sensed imagery has been used in LULC mapping since the launch of Landsat 1 in 1972 [11], it is still difficult to capture complex and diverse LULC information and patterns by using remotely sensed imagery alone [12]. Ancillary data are typically needed as a supplement to remotely sensed imagery in order to accurately identify LULC information, especially the land use information related to socioeconomic aspects [13].

With the development of GPS and data acquisition techniques, the merging of big data with spatial location information—such as social media data, mobile phone tracking data, public transport smart card data, Wi-Fi access point data, wireless sensor networks, and other sensing information generated by Internet of Things devices—may provide useful ancillary data for LULC mapping [14]. Compared to traditional geospatial data acquisition, these geospatial big data are normally obtained at a lower cost and have different coverages and better spatio-temporal resolutions. They contain abundant human activity information and may thus be used to compensate for the lack of socioeconomic attributes of the remotely sensed imagery data for accurate LULC mapping [15]. In fact, the aforementioned geospatial big data were integrated with remotely sensed imagery and other source data for accurate LULC mapping in many studies [16,17].

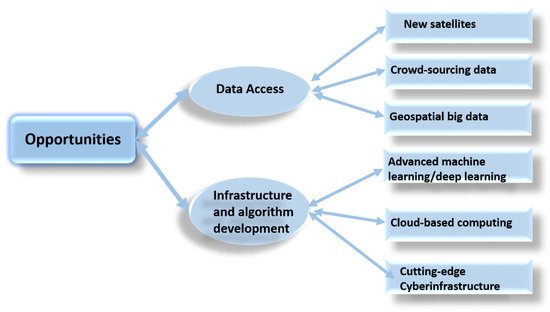

We are currently living in the era of big data. The volume of collected or archived geospatial data, including remotely sensed data, is increasing from terabytes to petabytes and even to exabytes [18]. For example, the European Space Agency (ESA), the National Aeronautics and Space Administration (NASA), the United States Geological Survey (USGS), and the National Oceanic and Atmospheric Administration (NOAA) provide a huge amount of freely available remotely sensed data and other Geographic Information System (GIS) data for LULC mapping. Social media sites, such as Facebook, Twitter, and Instagram, are generating an enormous volume of data with geospatial location information that can be used for LULC mapping nowadays [19]. Progress in data access and algorithm development in the era of big data provides opportunities for developing improved LULC maps [20]. Figure 1 illustrates the major opportunities of LULC mapping in the era of big data. Databases that offer free access to LULC maps at the global scale have emerged. For example, as a free search engine, “Collect Earth” developed by the Food and Agriculture Organization (FAO) can help derive past and present LULC change information [21].

Figure 1. Major opportunities of LULC mapping in the era of big data.

While these geospatial big data provide new opportunities, challenges remain in storing, managing, analyzing, and visualizing these data for LULC mapping [22]. Geospatial big data not only have various forms but are also often associated with unstructured data that are difficult to manage [23]. It is extremely difficult to integrate, analyze, and transform these heterogeneous geospatial big data from different sources into useful values for LULC mapping. Traditional LULC classification or mapping solutions and software face excessive challenges in dealing with these large and complex geospatial big data. New approaches are needed to efficiently process and analyze these data to reveal patterns, trends, and associations related to LULC mapping [24].

Lately, advanced machine learning techniques, especially deep learning (DL), have been developed for large-scale LULC mapping based on multispectral and hyperspectral satellite images or the integration of satellite imagery with other geospatial big data [25]. Deep learning has demonstrated better performance compared to traditional methods, such as random forest (RF) and support vector machine (SVM), e.g., [26,27,28]. Nevertheless, there are still many issues in applying advanced machine learning or deep learning for accurate LULC mapping using geospatial big data.

2. LULC Mapping from Remotely Sensed Imagery Data

As mentioned previously, remote sensing has become one of the most important methods for LULC mapping [48,49]. Many existing LULC maps were made by the classification of remotely sensed satellite imagery data [50]. Remotely sensed data have multi-source, multi-scale, high-dimension, and non-linear characteristics [51]. Since the advent of remote sensing technology, many satellites have been launched. Every day, a large set of spaceborne and airborne sensors provide a massive amount of remotely sensed data. At present, there are more than 200 on-orbit satellite sensors capturing a large amount of multi-temporal and multi-scale remotely sensed data. For example, in 2013 NASA’s Earth Observing System Data and Information System (EOSDIS) managed more than 7.5 petabytes of archived remotely sensed data, and its archive grew by four terabytes per day [52]. Many satellite imagery data providers release timely remotely sensed data to the public at no cost. USGS, NASA, NOAA, IPUMS Terra, NEO, and the Copernicus open access hubs are among the most popular open access remotely sensed data providers.

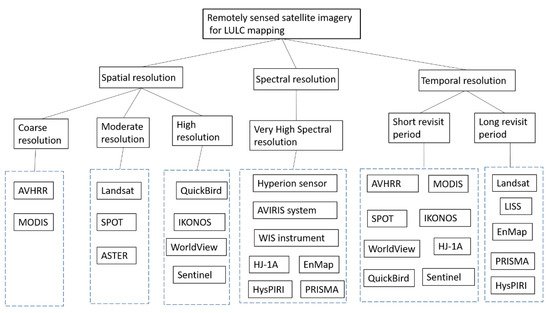

In the past, many LULC maps were made from coarse spatial resolution satellite imagery data such as advanced very-high-resolution radiometer (AVHRR) and moderate-resolution imaging spectroradiometer (MODIS) [53]. Advances in remote sensing technology and the launch of sensors with moderate spatial resolutions, such as Landsat, Satellite Pour l’Observation de la Terre (SPOT), and Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER), have contributed to enhanced LULC mapping, e.g., [54,55]. Lately, detailed LULC maps have been produced from high-resolution imagery data such as QuickBird, IKONOS, and WorldView, which can provide more detailed spatial and spectral information for LULC mapping, e.g., [56,57,58]. With these high-resolution remotely sensed data, it is possible to identify the detailed geometries, textures, sizes, locations, and adjacent information of ground objects at a much finer scale for LULC mapping [59].

In addition to the different spatial resolutions, the remotely sensed data for LULC mapping also have different spectral and temporal resolutions. Many satellite sensors produce imagery data with very high spectral resolutions [32]. For example, the Hyperion sensor records 220 spectral bands, the AVIRIS system provides 224 spectral bands, the WIS instrument has 812 bands, and the hyperspectral imager on board HJ-1A has 128 bands. Furthermore, remotely sensed data may come from different types of satellites. Some satellites, such as SPOT, Landsat, and IKONOS, carry optical sensors; some, such as TerraSAR, Envisat, and RADARSAT, carry microwave synthetic aperture radar (SAR) sensors; while others carry multi-mode sensors such as MODIS. While optical satellite imagery data face challenges in producing LULC maps under cloudy weather conditions, microwave SAR data allow LULC mapping under all weather conditions, including persistently cloudy conditions [60,61,62]. From a temporal resolution perspective, these satellites also have different capabilities to revisit an observation area. Some satellites have a short revisit period of one day (e.g., MODIS and WorldView), while other satellites have a long revisit period of 16 days (e.g., Landsat). Figure 2 shows different types of remotely sensed satellite imagery for LULC mapping. Teeuw et al. [63], Navin and Agilandeeswari [64], and Pandey et al. [40] provided detailed tables for the characteristics of different types of remotely sensed data.

Figure 2. Different types of remotely sensed satellite imagery for LULC mapping.

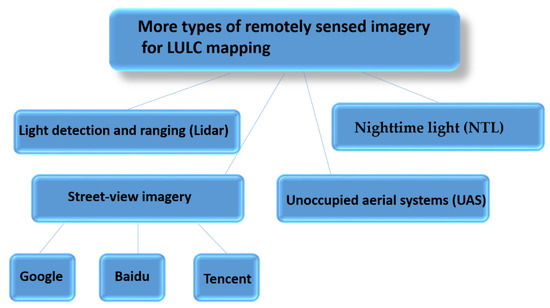

As illustrated in Figure 3, more types of remotely sensed data have emerged to provide additional observations for LULC mapping [51]. These remotely sensed data provide observations to differentiate LULC types with complex structures, which were difficult to differentiate in the past. For example, as a unique measure of human activities and socio-economic attributes, remote sensing-based nighttime lights (NTL) imagery is especially useful for urban LULC mapping at different spatial and temporal scales, e.g., [65,66]. Light detection and ranging (Lidar) is another type of remotely sensed data for detailed LULC mapping, e.g., [67]. Unlike optical data, airborne Lidar data can capture highly accurate structural information to differentiate LULC types with different structures, components, and compositions [68]. In addition, street-view imagery from Google, Baidu, and Tencent also functions as an additional type of remotely sensed data for LULC mapping, e.g., [69,70]. In contrast to the overhead view captured by most other remote sensing methods, street-view imagery data provide street-level or eye-level observations along the road networks. By providing information about what people typically see at street level on the ground, street-view imagery data provide crucial information on the functions of objects conventionally hidden from the view above, e.g., [71]. For example, street-view imagery data have been used for level II or III land use classification (e.g., differentiation of commercial buildings and residential buildings by using text information on buildings from street-view imagery data) [72]. Street-view imagery can also be used for ground truth purposes. Recently, unoccupied aerial system (UAS) platforms with small-sized and high-detection-precision sensors have also started producing massive numbers of high-resolution images and have been extensively used for high-resolution LULC mapping, e.g., [73,74]. The amount of data collected by UAS is poised to grow explosively.

Figure 3. More types of remotely sensed imagery for LULC mapping.

Because of the diversity and high dimensionality of remotely sensed data, LULC mapping from remotely sensed big data becomes complex. It is challenging to identify the right datasets and combine them to make detailed LULC maps at large scales. Although the multi-source optical and microwave remotely sensed data allow us to obtain LULC information from multiple viewpoints, they sometimes cause confusion in deciding which type is the most appropriate for a particular LULC mapping task. In addition, because of the data representation challenge, it is difficult to integrate the various remotely sensed data with different features (e.g., spectral signatures in optical imagery and electromagnetic radiation in microwave imagery) from various sources. Traditional pixel-level, feature-level, and decision-level fusion cannot be used to integrate remotely sensed imagery with different scales and/or formats [18]. New approaches need to be developed to fuse remotely sensed imagery with other geospatial big data, such as photos from social networks and crowdsourced spatial data, for LULC mapping.

3. LULC Mapping from Integration of Geospatial Big Data and Remotely Sensed Imagery Data

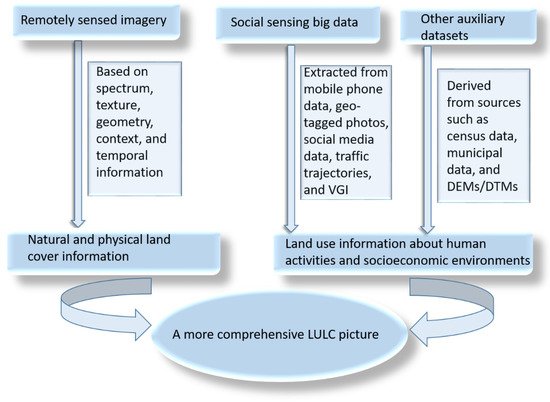

Although remotely sensed data have become one of the most important data sources for LULC mapping, they have limitations [42,75]. Remotely sensed data are valuable for extracting natural and physical land cover information based on spectrum, texture, geometry, context, and temporal information, but they have limitations in capturing the patterns of human activities and socioeconomic environments and describing indirect anthropogenic differences among different land use classes [13]. For example, while the spectral information of remotely sensed imagery data is effective for extracting land cover information such as water area, forest land, and built-up area, it is almost impossible to distinguish some land use classes, such as industrial land, residential land, and commercial land, using the spectral information of remotely sensed data alone [72].

With the development of mobile positioning techniques, wireless communication, and the Internet of Things, newly emerging types of social sensing big data are providing complementary information to differentiate some land use classes shaped by human activities and socioeconomic environments [9]. Examples of these emerging social sensing big data include mobile phone data, geo-tagged photos, social media data, traffic trajectories, and volunteered geographic information (VGI) data [76]. These emerging social sensing big data are able to capture human activities and dynamic socioeconomic environments more effectively, and are regarded as complements to remotely sensed imagery data for effective LULC mapping [77,78]. For example, Geo-Wiki is a crowdsourcing platform for LULC mapping and other tasks, which was used to derive global LULC reference data via four campaigns [79]. Flickr offers online services for sharing digital photos with geographic locations based on social networks, and has been used to identify socioeconomic and human activities in LULC mapping [19]. OpenStreetMap (OSM), a VGI database, allows users to add, edit, and update basic geographic map information based on their own experience and knowledge, and has also been used to uncover some land use types and patterns, e.g., [80,81]. Points of interest (POIs), one of the most common categories of crowdsourced data, have been explored for land use classification by many scholars, e.g., [82,83,84]. In addition, a large amount of GPS traffic trajectory data has further enriched remotely sensed data by revealing human activities at a fine scale for accurate LULC mapping [85].

These emerging social sensing big data improved the existing LULC maps by providing more detailed socioeconomic information and finer spatio-temporal resolutions [86]. Many studies have been conducted to integrate the social sensing big data with remotely sensed data for LULC mapping at different scales and locations, e.g., [87,88,89]. For example, Hu et al. [90] developed a protocol to identify urban land use functions over large areas using satellite images and open social data. Yin et al. [91] employed both the decision-level integration and feature-level integration of remotely sensed data with social sensing big data for urban land use mapping. Integrating data from these social sensing big data with remotely sensed data may provide a more comprehensive picture of LULC patterns, as shown in Figure 4.

Figure 4. LULC mapping from the integration of geospatial big data and remotely sensed data.
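The difference between the feature-level and decision-level integration mentioned above can be sketched in a few lines. This is only a minimal illustration and not the method of Yin et al. [91]: the nearest-centroid classifier, the toy feature values, the class names, and all function names are assumptions chosen to keep the example free of external dependencies.

```python
import math

# Hypothetical training parcels: (remote sensing features, social sensing features, label).
# Remote sensing features stand in for two band reflectances; social sensing features
# stand in for normalized daytime and nighttime activity densities.
train = [
    ((0.30, 0.25), (0.80, 0.10), "commercial"),
    ((0.32, 0.27), (0.75, 0.15), "commercial"),
    ((0.31, 0.26), (0.20, 0.70), "residential"),
    ((0.29, 0.24), (0.25, 0.65), "residential"),
]

def centroids(samples):
    """Mean feature vector per class; samples is a list of (features, label)."""
    sums, counts = {}, {}
    for feats, label in samples:
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, value in enumerate(feats):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {lab: tuple(v / counts[lab] for v in acc) for lab, acc in sums.items()}

def class_scores(feats, cents):
    """Pseudo-probabilities: softmax over negative distances to the class centroids."""
    weights = {lab: math.exp(-math.dist(feats, c)) for lab, c in cents.items()}
    total = sum(weights.values())
    return {lab: w / total for lab, w in weights.items()}

def predict_feature_level(rs, ss):
    """Feature-level fusion: concatenate both feature vectors and use one classifier."""
    cents = centroids([(r + s, lab) for r, s, lab in train])
    scores = class_scores(rs + ss, cents)
    return max(scores, key=scores.get)

def predict_decision_level(rs, ss, w_rs=0.5):
    """Decision-level fusion: one classifier per source, then a weighted vote."""
    p_rs = class_scores(rs, centroids([(r, lab) for r, _, lab in train]))
    p_ss = class_scores(ss, centroids([(s, lab) for _, s, lab in train]))
    fused = {lab: w_rs * p_rs[lab] + (1 - w_rs) * p_ss[lab] for lab in p_rs}
    return max(fused, key=fused.get)

# A parcel that looks spectrally ambiguous but has a strong daytime activity signal.
print(predict_feature_level((0.30, 0.26), (0.78, 0.12)))
print(predict_decision_level((0.30, 0.26), (0.78, 0.12)))
```

In this toy setting the two land use classes are nearly identical spectrally, so the social sensing features carry most of the discriminating power in both fusion schemes. The weight `w_rs` is a hypothetical tuning parameter for how much the remote sensing classifier is trusted.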

In addition to the integration of remotely sensed data and social sensing big data for LULC mapping, other auxiliary datasets may also be used for LULC mapping [92]. For example, census data including demography, employment, education, housing, and income information may provide valuable information to reveal spatial differences in socioeconomic status across different land use types, e.g., [24]. Municipal data such as water consumption data may offer important information to identify the socioeconomic functions of land uses and help classify mixed patterns of land uses [93]. In addition, topographic information such as elevation, slope, and aspect extracted from digital elevation or digital terrain models (DEMs/DTMs) may also be combined with remotely sensed data to increase the accuracy of urban land use classification, e.g., [94].
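As a rough illustration of how slope and aspect can be derived from a DEM before being stacked with image features, the sketch below uses simple central differences on a small grid. The grid orientation (row 0 is the northern edge), the function name, and the aspect convention (compass bearing of the downslope direction) are assumptions of this example; GIS packages offer several alternative formulations.

```python
import math

def slope_aspect(dem, cell_size):
    """Return (slope_deg, aspect_deg) grids for the interior cells of a DEM.

    dem is a list of rows (row 0 = northern edge, column 0 = western edge).
    Border cells are left as None; flat cells get a slope of 0 and an aspect of None.
    """
    rows, cols = len(dem), len(dem[0])
    slope = [[None] * cols for _ in range(rows)]
    aspect = [[None] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Central differences: elevation change per unit of horizontal distance.
            dz_dx = (dem[r][c + 1] - dem[r][c - 1]) / (2 * cell_size)  # toward east
            dz_dy = (dem[r - 1][c] - dem[r + 1][c]) / (2 * cell_size)  # toward north
            gradient = math.hypot(dz_dx, dz_dy)
            slope[r][c] = math.degrees(math.atan(gradient))
            if gradient > 0:
                # Bearing of the downslope direction: 0 = north, 90 = east, 270 = west.
                aspect[r][c] = math.degrees(math.atan2(-dz_dx, -dz_dy)) % 360
    return slope, aspect

# Toy DEM (elevations in metres, 10 m cells) rising steadily toward the east.
dem = [
    [0, 10, 20],
    [0, 10, 20],
    [0, 10, 20],
]
slope, aspect = slope_aspect(dem, cell_size=10)
print(slope[1][1], aspect[1][1])
```

For this plane the centre cell rises 10 m per 10 m toward the east, so the slope is 45 degrees and the terrain faces west.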

Ubiquitous sensor networks can constantly obtain spatio-temporal data at intervals of days, hours, minutes, seconds, or even milliseconds. These spatio-temporal data allow people to acquire multi-dimensional dynamic information about various land entities and human activities, which may be used for making or updating LULC maps. With the development of the Internet of Things (IoT) and volunteered geographic information (VGI), LULC mapping is expanding from professional practice to public participation, as evidenced by Geo-Wiki and the My Maps feature in Google Maps. However, because of the non-professional nature of IoT and VGI, the data obtained from them often contain uncertainties such as data loss, noise, inconsistency, and ambiguity [95]. Therefore, it is important to develop quality assurance procedures such as data cleaning and quality inspection for high-quality LULC mapping.
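A basic data cleaning step of the kind mentioned above might look like the following sketch. The record layout (dictionaries with `lon`, `lat`, and `tag` keys), the rejection reasons, and the function name are hypothetical; real quality assurance for VGI data usually involves many more checks, such as cross-validation against reference data.

```python
def clean_vgi_points(records):
    """Filter crowdsourced point records; return (kept, rejected_with_reason)."""
    kept, rejected = [], []
    seen = set()
    for rec in records:
        lon, lat, tag = rec.get("lon"), rec.get("lat"), rec.get("tag")
        if lon is None or lat is None or not tag:
            rejected.append((rec, "missing field"))       # data loss
        elif not (-180 <= lon <= 180 and -90 <= lat <= 90):
            rejected.append((rec, "invalid coordinate"))  # noise
        else:
            # Normalize the tag and round coordinates so near-identical entries collapse.
            key = (round(lon, 5), round(lat, 5), tag.strip().lower())
            if key in seen:
                rejected.append((rec, "duplicate"))       # redundant entry
            else:
                seen.add(key)
                kept.append({"lon": lon, "lat": lat, "tag": key[2]})
    return kept, rejected

records = [
    {"lon": 10.0, "lat": 50.0, "tag": "Park"},
    {"lon": 10.0, "lat": 50.0, "tag": " park "},   # same place, inconsistent spelling
    {"lon": 200.0, "lat": 50.0, "tag": "shop"},    # longitude outside the valid range
    {"lon": None, "lat": 50.0, "tag": "school"},   # missing coordinate
]
kept, rejected = clean_vgi_points(records)
print(len(kept), [reason for _, reason in rejected])
```

Returning the rejected records together with a reason, rather than silently dropping them, keeps the cleaning step auditable during quality inspection.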

It is still challenging to integrate multi-source remotely sensed data, social sensing big data, and other auxiliary datasets for LULC mapping because of the intensive computing required and the heterogeneity in spatial data structures, formats, resolutions, scales, and data quality. Novel machine learning approaches, including deep learning, and cloud computing approaches are urgently needed for LULC mapping.

4. Machine Learning and Cloud Computing for LULC Mapping

Machine learning is a data analysis method and a subset of artificial intelligence based on the idea that computer systems can learn from data to identify patterns and make decisions with minimal human intervention. There are many different machine learning approaches for LULC mapping [96,97], such as support vector machine (SVM), random forest (RF), and k-nearest neighbors (KNN). The strengths of machine learning include the capacity to handle data of high dimensionality and to map LULC classes with very complex characteristics. With growing volumes and varieties of the aforementioned remotely sensed imagery and geospatial big data, cheaper and more powerful computational processing tools, and affordable data storage, machine learning has become more popular than ever for analyzing bigger and more complex data and delivering more accurate LULC mapping results at larger scales [10]. Machine learning provides the foundation for autonomously solving data-based LULC mapping problems [98].

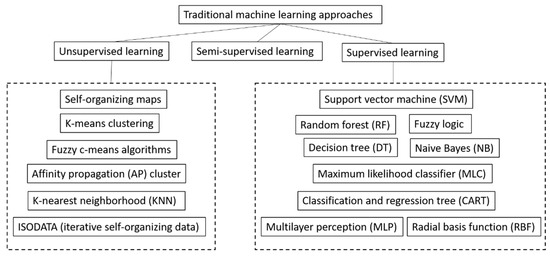

Supervised learning, unsupervised learning, and semi-supervised learning are the three main types of machine learning methods for LULC mapping, as shown in Figure 5. Supervised learning algorithms are trained using labeled LULC examples and apply what has been learned from the labeled examples to predict the labels of new LULC data. Through inference methods such as regression and gradient boosting, supervised learning uses the learned patterns to predict the labels of unlabeled LULC data [99]. Popular supervised learning methods include support vector machine (SVM), random forest (RF), classification and regression tree (CART), radial basis function (RBF), decision tree (DT), multilayer perceptron (MLP), naive Bayes (NB), maximum likelihood classifier (MLC), and fuzzy logic. Unsupervised learning algorithms are used with data that have no historical LULC labels; the computer infers a function to describe the hidden structure of the unlabeled LULC data. Unsupervised learning methods are used when it is unclear what the LULC mapping results will look like, so the computer must search the LULC data for hidden structure and cluster the data based on the similarities or differences of LULC classes. Popular unsupervised learning methods include self-organizing maps, k-means clustering, nearest-neighbor mapping, the affinity propagation (AP) clustering algorithm, ISODATA (iterative self-organizing data analysis), and fuzzy c-means algorithms. Semi-supervised learning is similar to supervised learning. However, it uses both labeled and unlabeled data for training, usually a small amount of labeled data with a large amount of unlabeled data.

Figure 5. Types of traditional machine learning approaches for LULC mapping.
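To make the unsupervised case more concrete, the following sketch runs k-means clustering on a handful of toy pixels described by two hypothetical band reflectances (red and near-infrared). The pixel values, the deterministic initialization, and the function name are assumptions made for illustration; operational workflows would normally rely on an established library implementation.

```python
import math

def kmeans(pixels, k, iterations=20):
    """Cluster pixel feature vectors into k groups; returns (labels, centers).

    Assumes 1 <= k <= len(pixels).
    """
    # Deterministic initialization (an assumption for reproducibility):
    # pick k pixels evenly spaced through the input list.
    step = len(pixels) // k
    centers = [pixels[i * step] for i in range(k)]
    labels = [0] * len(pixels)
    for _ in range(iterations):
        # Assignment step: attach every pixel to its nearest center.
        labels = [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in pixels]
        # Update step: move each center to the mean of its assigned pixels.
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:
                new_centers.append(tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                new_centers.append(centers[j])  # keep the center of an empty cluster
        if new_centers == centers:
            break  # converged: assignments can no longer change
        centers = new_centers
    return labels, centers

# Toy (red, near-infrared) reflectances: three water-like and three vegetation-like pixels.
pixels = [
    (0.05, 0.03), (0.06, 0.02), (0.04, 0.03),
    (0.05, 0.50), (0.06, 0.55), (0.04, 0.45),
]
labels, centers = kmeans(pixels, k=2)
print(labels)
```

The algorithm only returns anonymous cluster indices. Deciding that one cluster corresponds to water and the other to vegetation remains an interpretation step for the analyst, which is the main practical difference from supervised classification.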

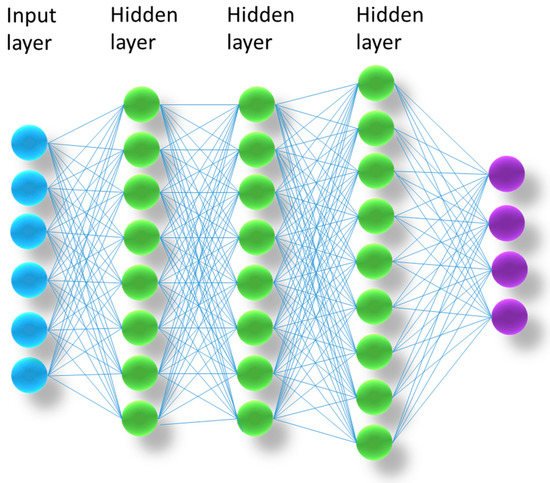

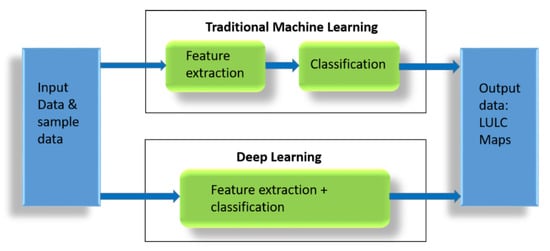

Recent advances in machine learning for LULC mapping have been accomplished via deep learning approaches [100,101]. As illustrated in Figure 6, deep learning is a subfield of machine learning. All deep learning is machine learning, but not all machine learning is deep learning. Deep learning emerged because shallow machine learning cannot successfully analyze big data for LULC mapping. While basic machine learning models do become progressively better at performing their specific functions as they take in new data, they still need some human intervention. Deep learning algorithms arrange computations in layers to build an “artificial neural network” (Figure 7) that is able to learn and make intelligent classification decisions on its own [102]. Figure 8 illustrates the differences between traditional machine learning and deep learning. For traditional machine learning, feature extraction and classification are separate processes and humans are needed to perform feature extraction. With a deep learning model, feature extraction is integrated with classification, and a classification algorithm can determine whether a class prediction is accurate through its own neural network, beyond the training data and without requiring human help. Deep learning algorithms can be considered a sophisticated and mathematically complex evolution of machine learning algorithms [103]. Deep learning algorithms analyze data with a logic structure similar to how a human would draw LULC mapping conclusions. When fed training data, deep learning algorithms eventually learn from their own errors whether an LULC class prediction is good or needs to be adjusted.

Figure 6. Deep learning is a subfield of machine learning.

Figure 7. A simple artificial neural network.

Figure 8. Differences between traditional machine learning and deep learning.

In recent studies, deep learning outperformed other machine learning algorithms in some LULC mapping problems, particularly in detecting fine-scale types such as small artificial objects [29,104,105]. Deep learning algorithms have been used to automatically extract spatial features from very-high-resolution satellite images such as IKONOS, WorldView-3, and SPOT-5, e.g., [101].

There are several different types of deep learning algorithms for LULC mapping, among which the most popular include convolutional neural networks (CNNs) and recurrent neural networks (RNNs). CNNs are among the most popular neural network architectures because they can extract low-level features with a high-frequency spectrum, such as the edges, angles, and outlines of LULC objects, regardless of the shape, size, or color of the objects. Therefore, CNNs are well suited for LULC mapping [106]. Some popular CNN architectures used in the literature are the LeNet5, AlexNet, VGGNet, CaffeNet, GoogLeNet, and ResNet models. RNNs have built-in feedback loops that allow the algorithms to “remember” past data points. RNNs can use this memory of past events to inform their understanding of current events or even predict the future. RNNs are mainly designed to process time series data and are suitable for detecting LULC changes [29].
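The edge-extracting behavior of a convolutional layer can be illustrated with a single hand-set filter. The sketch below is not a CNN: it applies one fixed Sobel kernel to a toy single-band image, whereas a trained network learns many such kernels from data. The image values and the function name are assumptions of this example.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the 'convolution' operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hand-set vertical-edge kernel (Sobel); a trained CNN would learn its own weights.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Toy 4x5 single-band image: dark field on the left, bright built-up area on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

feature_map = convolve2d(image, SOBEL_X)
print(feature_map)  # [[0, 36, 36], [0, 36, 36]]
```

The response is zero over the uniform dark area and large wherever the 3x3 window straddles the boundary between the two surfaces, which is the kind of low-level edge feature described above.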

By performing complex abstractions over data through a hierarchical learning process, deep learning algorithms have shown great potential for analyzing big datasets for LULC mapping [16]. The hidden layers in deep learning approaches can discover class structures and patterns in big data and extract valuable class knowledge. Deep learning is also able to handle nonlinear and highly complex big data more effectively than conventional machine learning methods [43,100]. However, compared to traditional machine learning approaches, deep learning requires a vast amount of training data and substantial computing power [27]. A deep learning algorithm requires much more data than a traditional machine learning algorithm to properly conduct LULC mapping. Due to its complex multi-layer structure, a deep learning system needs a large training dataset to eliminate fluctuations and make high-quality class interpretations [43]. Without a large set of training data, deep learning may show a similar or worse performance than classical machine learning techniques such as SVM [107].

The emergence of cloud computing infrastructure and high-performance GPUs (graphics processing units, used for faster calculations) helped to solve the expensive computational problem faced by deep learning [108]. The storage and processing requirements of big data for LULC mapping are greater than those available in traditional computer systems and technologies [109]. Existing cluster-based high-performance computing (HPC) systems with plenty of computational capacity can be used for storing large remotely sensed data and other big data for LULC mapping. However, it is still challenging to process these big remotely sensed data and other big data for large-scale LULC mapping because the system architectures and tools of existing cluster-based HPC have not been optimized to process such data. Cluster systems and peta-scale supercomputers are not good at loading, transferring, and processing extremely big remotely sensed data and other data for LULC mapping. A potential solution to this problem is cloud computing. Cloud computing satisfies the two main requirements of LULC mapping using big data analytics solutions: (1) scalable storage that can accommodate growing data; and (2) a high processing capability that can run complex LULC mapping tasks in a timely manner. Cloud computing makes deep learning more accessible, making it easier to manage large datasets, train algorithms on distributed hardware, and deploy them efficiently [110]. It provides access to special hardware configurations, including GPUs, field-programmable gate arrays (FPGAs), tensor processing units (TPUs), and massively parallel HPC systems.

Cloud computing has been used for storing big remotely sensed data and other data for LULC mapping with good scalability [111]. Three main types of cloud computing services have been used [112]: (1) infrastructure-as-a-service (IaaS), which allows users to rent IT infrastructure: servers, virtual machines with storage, networks, and operating systems are provided and managed entirely by a cloud provider, and users pay only for what they use; (2) platform-as-a-service (PaaS), an on-demand service through which users obtain a complete development environment for software applications; and (3) software-as-a-service (SaaS), which delivers software applications over the Internet, typically on an ‘on demand’ or ‘subscription’ basis.

Google App Engine, Microsoft Azure, and Amazon EC2 are the most popular cloud providers and offer pay-as-you-go cloud computing for storing, processing, and visualizing big remotely sensed data and other data for LULC mapping. Google developed a geospatial data analysis platform, Google Earth Engine (GEE), capable of storing and analyzing vast amounts of remotely sensed data for rapid LULC mapping at large scales [113,114]. GEE provides users with free access to numerous remotely sensed datasets including Landsat, Sentinel, and MODIS images. GEE has already proven its capacities for LULC classification and change detection, e.g., [115,116,117,118,119,120,121,122,123,124]. Microsoft Azure Cloud Services and Amazon Web Services (AWS) have also been used to improve LULC mapping and monitoring [125]. Microsoft Azure Cloud Services has established the artificial intelligence (AI) for Earth initiative to address environmental challenges. However, Azure only offers Landsat and Sentinel-2 products for North America and MODIS imagery. Amazon Web Services offers open data from more satellites, such as Sentinel-1, Sentinel-2, Landsat-8, and the China–Brazil Earth Resources Satellite (CBERS-4), as well as NOAA image datasets and global model outputs.

In addition to machine learning and cloud computing approaches, other advanced cyberinfrastructure techniques, such as novel scalable parallel file systems capable of storing and managing massive data, and NoSQL (Not Only SQL) databases for managing big unstructured or non-relational data have also been developed for LULC mapping with complex characteristics [126].

This entry is adapted from the peer-reviewed paper 10.3390/land11101692
